Shanghai Jiao Tong University alumnus wins Best Paper as CoRL 2022, the top robotics conference, announces its awards

Since it was first held in 2017, CoRL has become one of the world's top academic conferences at the intersection of robotics and machine learning. CoRL is a single-track conference for robot learning research, covering multiple topics such as robotics, machine learning and control, including theory and applications.

CoRL 2022 is being held in Auckland, New Zealand, from December 14 to 18.

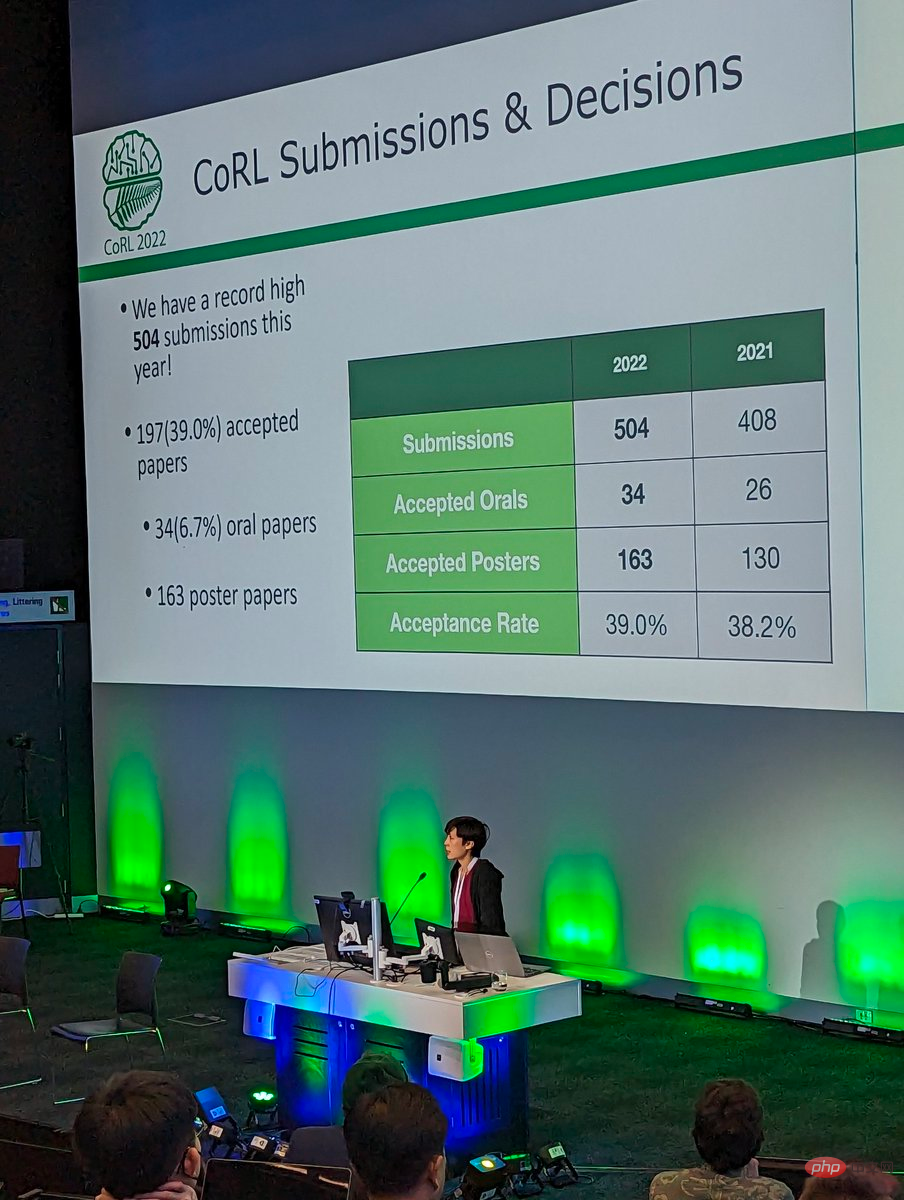

The conference received 504 submissions and accepted 34 oral papers and 163 poster papers, for an overall acceptance rate of about 39%.
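The 39% figure follows directly from the counts reported above. As a quick check, the arithmetic can be sketched as follows (the variable names are illustrative only):

```python
# Counts reported in the article for CoRL 2022.
submissions = 504
oral_papers = 34
poster_papers = 163

# Accepted papers are the oral and poster papers combined.
accepted = oral_papers + poster_papers
acceptance_rate = accepted / submissions

print(f"accepted papers: {accepted}")
print(f"acceptance rate: {acceptance_rate:.1%}")
```

The oral papers alone make up a much smaller share of the submissions than the overall rate suggests.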

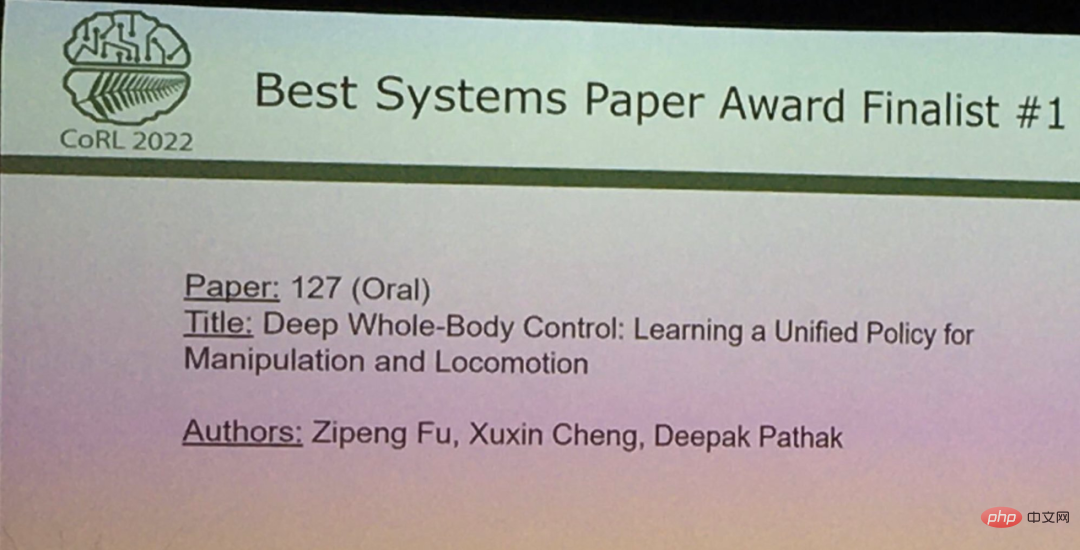

CoRL 2022 has now announced its Best Paper Award, Best System Paper Award, Special Innovation Award, and other awards. Kun Huang, a master's student at the GRASP Laboratory of the University of Pennsylvania and an alumnus of Shanghai Jiao Tong University, won the Best Paper Award at the conference.

Best Paper Award

The winner of the Best Paper Award at this conference is a study from the University of Pennsylvania.

- Paper title: Training Robots to Evaluate Robots: Example-Based Interactive Reward Functions for Policy Learning

- Authors: Kun Huang, Edward Hu, Dinesh Jayaraman

- Paper link: https://openreview.net/pdf?id=sK2aWU7X9b8

Abstract: Physical interaction often helps reveal less obvious information. For example, we might pull on a table leg to assess whether it is stable, or turn a water bottle upside down to check whether it leaks. The study suggests that this kind of interactive behavior can be acquired automatically by training a robot to evaluate the outcomes of a robot's attempts to perform a skill. These evaluations, in turn, serve as interactive reward functions (IRFs) that are used to train reinforcement learning policies to perform the target skill, such as tightening a table leg. Furthermore, IRFs can serve as a verification mechanism that improves online task execution even after full training is complete. For any given task, IRF training is very convenient and requires no further specification.

Evaluation results show that IRFs can achieve significant performance improvements and even surpass baselines that have access to demonstrations or carefully designed rewards. For example, in the door-locking task below, the robot must first close the door and then rotate the symmetrical door handle to lock it completely.

Door locking evaluation example demonstration

Stacking evaluation example demonstration

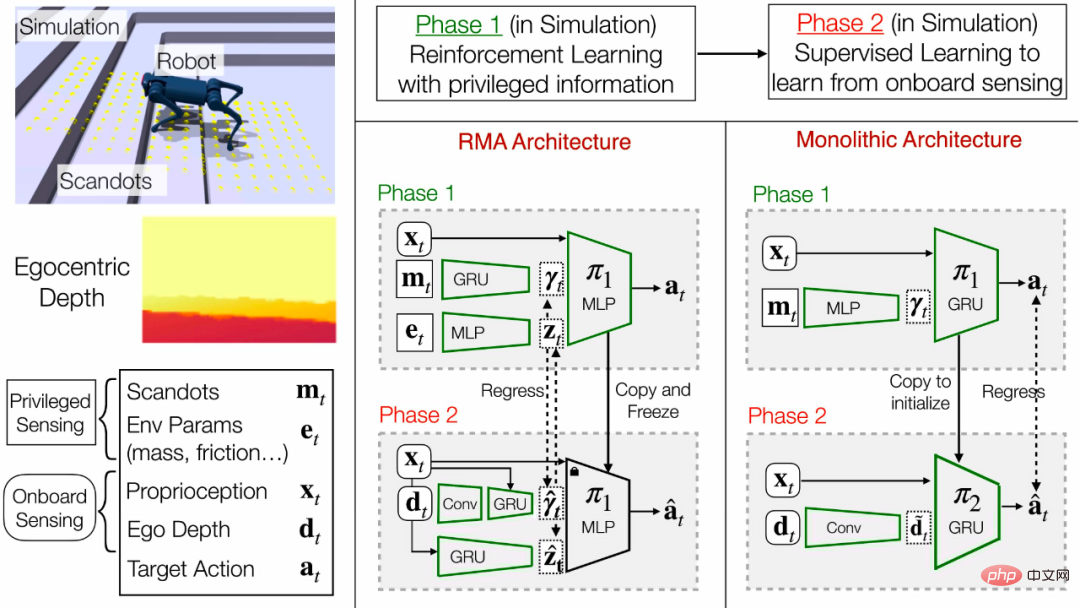

Introduction to the author There are three authors who won the CoRL 2022 Best Paper Award this time, namely Kun Huang, Edward Hu, and Dinesh Jayaraman. Dinesh Jayaraman is an assistant professor at the GRASP Laboratory at the University of Pennsylvania. He leads the Perception, Action, and Learning (PAL) research group, which is dedicated to the intersection of computer vision, machine learning, and robotics. Problem research. Kun Huang is a master of the GRASP Laboratory at the University of Pennsylvania and studies reinforcement learning under the guidance of Professor Dinesh Jayaraman. He received his BS in Computer Science from the University of Michigan, where he worked on robot perception with Professor Dmitry Berenson. Kun Huang graduated from Shanghai Jiao Tong University with a bachelor's degree. His research interests include robotics and real-world applications. Kun Huang interned at Waymo during his master's degree and will join Cruise as a machine learning engineer after graduation. LinkedIn homepage: https://www.linkedin.com/in/kun-huang-620034171/ Edward S. Hu I am a doctoral student in the GRASP Laboratory of the University of Pennsylvania, studying under Professor Dinesh Jayaraman. His main research interests include model-based reinforcement learning. Edward received his master's and bachelor's degrees in computer science from the University of Southern California, where he worked on reinforcement and imitation learning in robots with Professor Joseph J. Lim. A total of 3 papers were shortlisted for the Best Paper Award at this conference. In addition to the final winning paper, the other 2 papers are: ## ## Animals are able to use vision to make precise and agile movements, and replicating this ability has been a long-standing goal of robotics. The traditional approach is to break the problem down into an elevation mapping and foothold planning phase. 
However, elevation mapping is susceptible to glitches and large-area noise, requires specialized hardware and is biologically infeasible. In this paper, researchers propose the first end-to-end locomotion system capable of traversing stairs, curbs, stepping stones, and voids in a medium-sized building using a single facade. The results are demonstrated on a quadruped robot with a depth camera. Due to the small size of the robot, there is a need to discover specialized gait patterns not found elsewhere. The camera needs to master the strategy of remembering past information to estimate the terrain behind and below it. The researchers trained the robot’s strategy in a simulated environment. Training is divided into two stages: first using reinforcement learning to train a policy on deep image variants with low computational cost, and then refining it to a final policy on depth using supervised learning. The resulting strategy is transferable to the real world and can be run in real time on the limited computing power of the robot. It can traverse a wide range of terrains while being robust to disturbances such as slippery surfaces and rocky terrain. Stepping Stones and Gaps The robot is able to step over bar stools in various configurations and adjust the step length to Excessive gap. Since there are no cameras near the back feet, the robot has to remember the position of the bar stool and place its back feet accordingly. Stairs and curbs The robot is able to climb up to 24 cm High, 30 cm wide stairs. Strategies apply to different stairways and curbs under various lighting conditions. On unevenly spaced stairs, the robot will initially get stuck, but will eventually be able to use climbing behavior to cross these obstacles. Unstructured terrain Robots can traverse areas that are not part of their training One of the categories, unstructured terrain, shows the generalization ability of the system. 
Movement in the Dark The depth camera uses infrared light to project patterns , accurately estimating depth even in almost no ambient light. Robustness Strategy to high forces (throwing 5 kg weight from height) and slipperiness The surface (water poured on plastic sheet) is robust. Author introduction This item The study has four authors. Jitendra Malik is currently the Arthur J. Chick Professor in the Department of Electrical Engineering and Computer Science at UC Berkeley. His research areas include computer vision, computational modeling of human vision, computer graphics and biology. Image analysis, etc. Ashish Kumar, one of the authors of this award-winning study, is his doctoral student. Deepak Pathak is currently an assistant professor at Carnegie Mellon University. He received his PhD from the University of California, Berkeley, and his research topics include machine learning, robotics and computer vision. Ananye Agarwal, one of the authors of this award-winning study, is his doctoral student. In addition, Deepak Pathak has another study on the shortlist for the Best System Paper Award at this conference. This time The conference also selected a special innovation award. This research was jointly completed by many researchers from Google. Paper abstract: Large language models can encode a large amount of semantic knowledge about the world, and such knowledge is very useful for robots. However, language models have the disadvantage of lacking experience with the real world, which makes it difficult to leverage semantics to make decisions on a given task. The researchers used this principle when combining a large language model (LLM) with the physical tasks of the robot: in addition to letting the LLM simply interpret an instruction, you can also use It evaluates the probability that a single action will help complete the entire high-level instruction. 
Simply put, each action can have a language description, and we can use the prompt language model to let it score these actions. Furthermore, if each action has a corresponding affordance function, it is possible to quantify its likelihood of success from the current state (e.g., a learned value function). The product of two probability values is the probability that the robot can successfully complete an action that is helpful to the instruction. Sort a series of actions according to this probability and select the one with the highest probability.

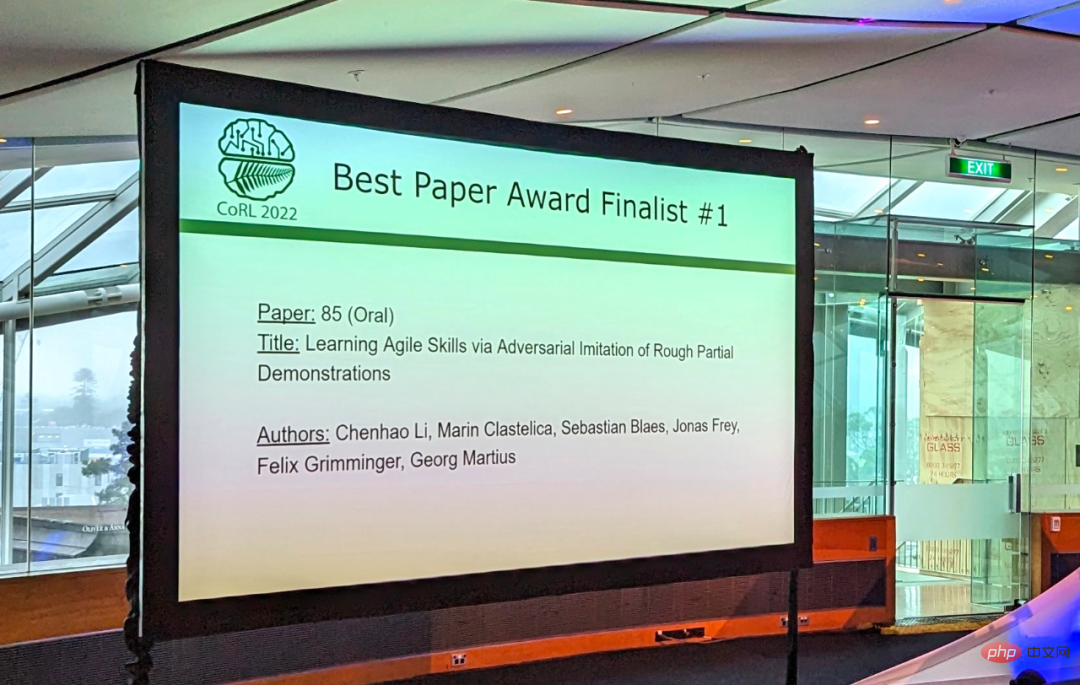

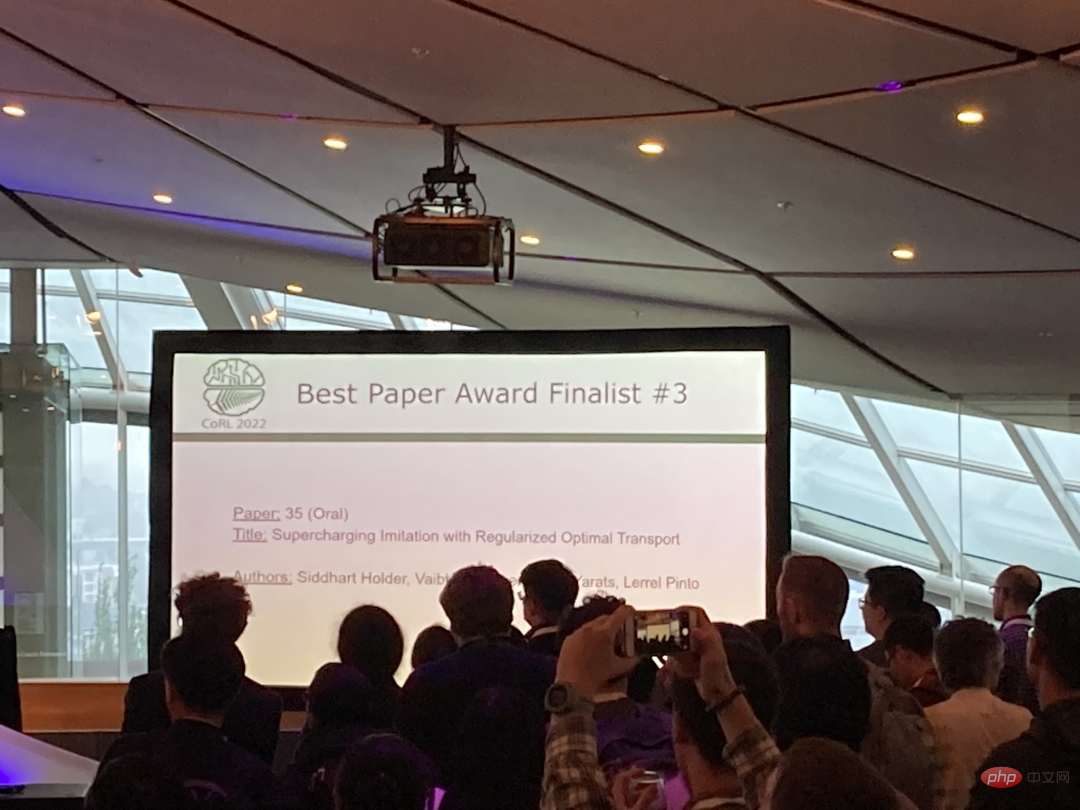

Best Paper Shortlist

##

##

Paper title: Supercharging Imitation with Regularized Optimal Transport

The winner of the Best System Paper Award at this conference is a study from CMU and UC Berkeley.

Abstract:

Special Innovation Award

##Paper title: Do As I Can, Not As I Say: Grounding Language in Robotic Affordances
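The scoring rule described for this paper (multiply the language model's score for an action by its affordance value, then pick the highest product) can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `select_action`, `toy_llm_score`, `toy_affordance`, the action strings, and all of the numbers are hypothetical stand-ins for a prompted language model and a learned value function.

```python
from typing import Callable, Dict, List, Tuple


def select_action(
    instruction: str,
    state: dict,
    actions: List[str],
    llm_score: Callable[[str, str], float],
    affordance: Callable[[dict, str], float],
) -> Tuple[str, Dict[str, float]]:
    """Rank actions by (LLM usefulness score) x (affordance from the current state)."""
    combined = {
        action: llm_score(instruction, action) * affordance(state, action)
        for action in actions
    }
    # Choose the action with the highest combined probability.
    best = max(combined, key=lambda action: combined[action])
    return best, combined


# Hypothetical stand-in for a prompted language model:
# how useful is each action for the instruction?
def toy_llm_score(instruction: str, action: str) -> float:
    scores = {"pick up the sponge": 0.6, "find a sponge": 0.3, "go to the table": 0.1}
    return scores[action]


# Hypothetical stand-in for a learned value function:
# how likely is the action to succeed from the current state?
def toy_affordance(state: dict, action: str) -> float:
    if state["sponge_visible"]:
        values = {"pick up the sponge": 0.9, "find a sponge": 0.2, "go to the table": 0.8}
    else:
        values = {"pick up the sponge": 0.1, "find a sponge": 0.9, "go to the table": 0.8}
    return values[action]


actions = ["pick up the sponge", "find a sponge", "go to the table"]
best, combined = select_action(
    "bring me a sponge", {"sponge_visible": False}, actions, toy_llm_score, toy_affordance
)
print(best)
```

In this toy setup the language model prefers picking up the sponge, but the low affordance of that action when no sponge is visible pushes the choice to finding one first, which is the grounding effect the abstract describes.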
