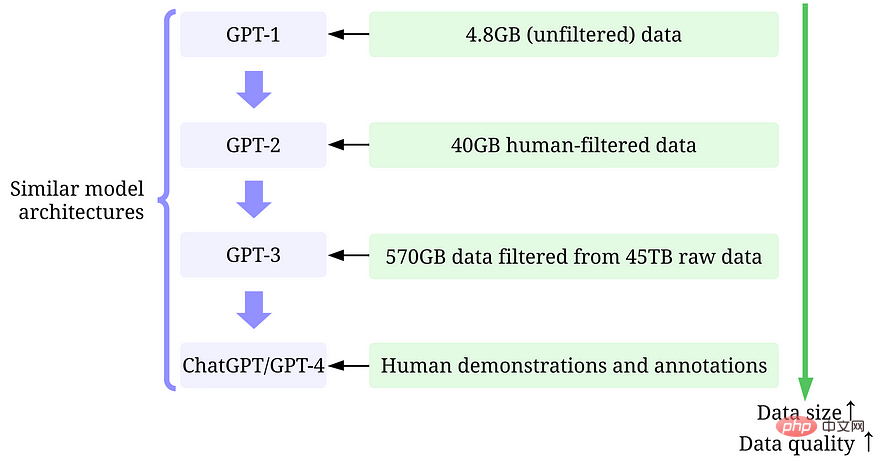

The data-centric AI behind GPT models

Artificial intelligence (AI) is making huge strides in changing the way we live, work, and interact with technology. One area of significant recent progress is the development of large language models (LLMs) such as GPT-3, ChatGPT, and GPT-4. These models can accurately perform tasks such as language translation, text summarization, and question answering.

While it is difficult to ignore the ever-increasing model sizes of LLMs, it is equally important to recognize that their success is largely due to the large amounts of high-quality data used to train them.

In this article, we provide an overview of recent advances in LLMs from the perspective of data-centric AI, a growing concept in the data science community. We reveal the data-centric AI concepts behind GPT models by discussing three data-centric AI goals: training data development, inference data development, and data maintenance.

Large Language Model (LLM) and GPT Model

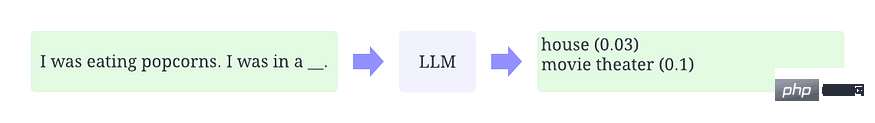

An LLM is a natural language processing model trained to infer words in context. For example, the most basic function of an LLM is to predict missing tokens given the surrounding context. To do this, LLMs are trained on massive amounts of data to predict the probability of each candidate token. The figure below is an illustrative example of using an LLM to predict the probability of a missing token from its context.

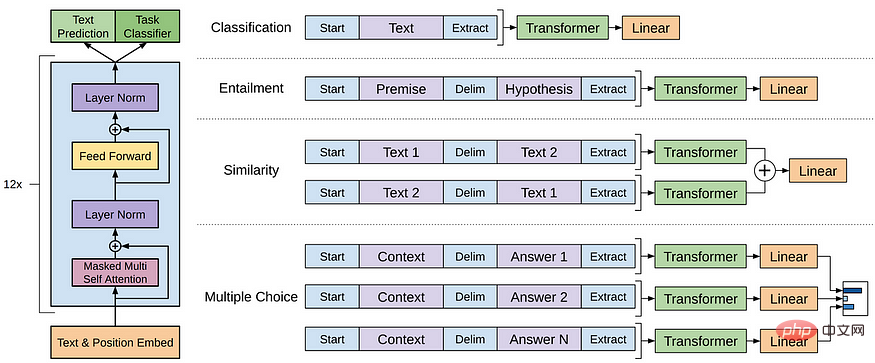
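To make the idea of "predicting the probability of each candidate token" concrete, here is a minimal sketch. It assumes a model has already produced a raw score (logit) for each candidate; the sentence, the candidate words, and the scores are invented for illustration and do not come from any real model.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

# Hypothetical scores for the blank in "The cat sat on the ___".
candidate_logits = {"mat": 3.0, "sofa": 2.0, "moon": -1.0}

probs = softmax(candidate_logits)
best = max(probs, key=probs.get)
print(best, round(probs[best], 3))
```

A real LLM does the same thing over a vocabulary of tens of thousands of tokens, with the scores produced by the network rather than written by hand.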

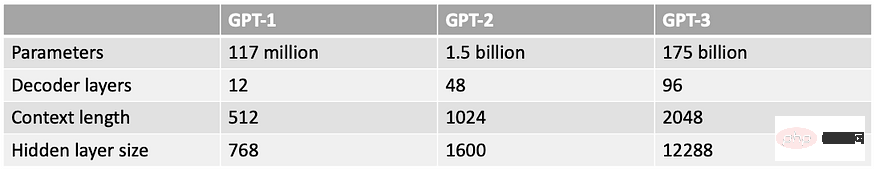

GPT models are a series of LLMs created by OpenAI, such as GPT-1, GPT-2, GPT-3, InstructGPT, and ChatGPT/GPT-4. Like other LLMs, GPT models are based mainly on the Transformer architecture, which takes token and positional embeddings as inputs and uses attention layers to model the relationships between tokens.

GPT-1 model architecture

Later GPT models use an architecture similar to GPT-1, but with more parameters, more layers, a longer context length, a larger hidden layer size, and so on.
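The role of the embeddings and the attention layer described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the GPT implementation: it uses a single head, skips the learned query/key/value projections that real Transformers apply, and all embedding values are invented.

```python
import math

def softmax(xs):
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x):
    """Single-head attention where queries, keys and values all equal x.

    Position i may only attend to positions 0..i (causal mask), which is
    what lets a GPT-style model be trained to predict the next token.
    """
    dim = len(x[0])
    out = []
    for i, query in enumerate(x):
        scores = [
            sum(q * k for q, k in zip(query, x[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        out.append([sum(w * x[j][d] for j, w in enumerate(weights)) for d in range(dim)])
    return out

# Toy inputs: token embedding + positional embedding for three tokens.
token_emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pos_emb = [[0.0, 0.1], [0.1, 0.0], [0.1, 0.1]]
inputs = [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(token_emb, pos_emb)]

outputs = causal_self_attention(inputs)
print(outputs)
```

Each output row is a weighted mix of the current and earlier input rows, which is how attention lets a token's representation depend on its context.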

What is Data-Centric Artificial Intelligence

Data-centric AI is an emerging way of thinking about how to build AI systems. It is the discipline of systematically engineering the data used to build those systems.

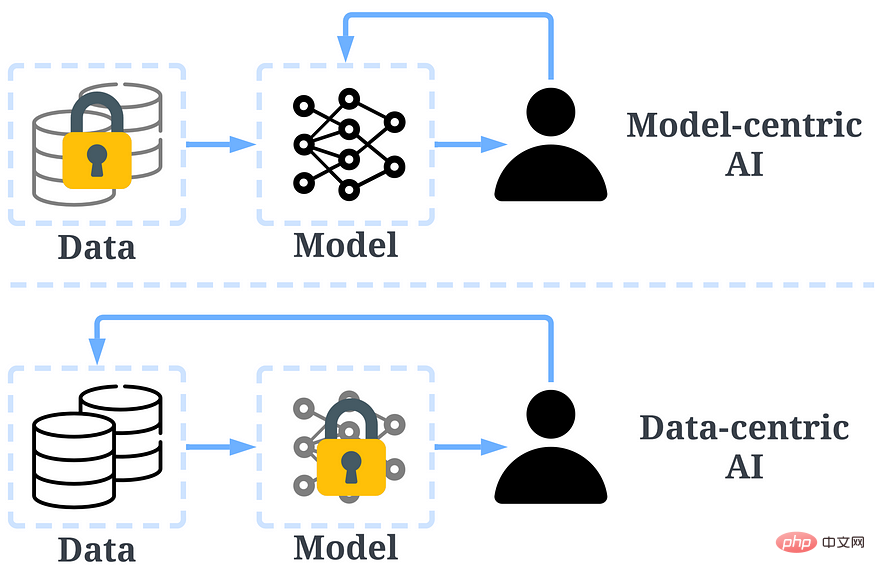

In the past, we have mainly focused on creating better models (model-centric AI) when the data is basically unchanged. However, this approach can cause problems in the real world because it does not take into account different issues that can arise in the data, such as label inaccuracies, duplications, and biases. Therefore, "overfitting" a data set does not necessarily lead to better model behavior.
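As a small illustration of the data issues mentioned above, the following sketch audits a toy labeled dataset for duplicated rows and for texts that carry conflicting labels. The function name, the normalization rule (lowercasing and trimming), and the sample rows are assumptions made for this example.

```python
from collections import defaultdict

def audit(rows):
    """Return (number of duplicate rows, texts that carry conflicting labels)."""
    seen = set()
    duplicates = 0
    labels_by_text = defaultdict(set)
    for text, label in rows:
        key = (text.strip().lower(), label)  # normalize before comparing
        if key in seen:
            duplicates += 1
        seen.add(key)
        labels_by_text[key[0]].add(label)
    conflicts = sorted(t for t, labels in labels_by_text.items() if len(labels) > 1)
    return duplicates, conflicts

rows = [
    ("great movie", "pos"),
    ("Great movie", "pos"),    # duplicate after normalization
    ("terrible plot", "neg"),
    ("terrible plot", "pos"),  # same text, conflicting label
]
duplicates, conflicts = audit(rows)
print(duplicates, conflicts)
```

A model tuned to fit such a dataset perfectly would also fit its duplicates and label noise, which is why a better score on fixed data does not guarantee better behavior.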

In contrast, data-centric AI focuses on improving the quality and quantity of data used to build AI systems. This means that the attention is on the data itself and the model is relatively more fixed. Using a data-centric approach to develop AI systems has greater potential in real-world scenarios, as the data used for training ultimately determines the model's maximum capabilities.

It should be noted that there is a fundamental difference between "data-centric" and "data-driven". The latter only emphasizes using data to guide AI development, and usually still focuses on developing models rather than data.

Comparison between data-centric artificial intelligence and model-centric artificial intelligence

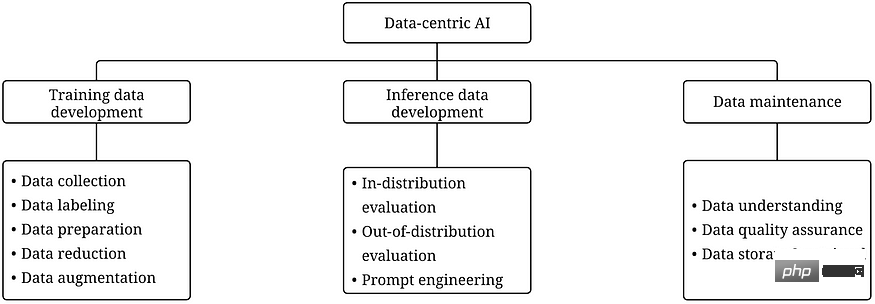

The data-centric AI framework has three goals:

- Training data development: collecting and producing rich, high-quality data to support the training of machine learning models.

- Inference data development: creating new evaluation sets that provide more fine-grained insight into the model, or triggering specific capabilities of the model through engineered data inputs.

- Data maintenance: ensuring the quality and reliability of data in a dynamic environment. Data maintenance is critical because real-world data is not created once but requires ongoing upkeep.

Data-centric AI framework

Why data-centric AI makes the GPT model successful

A few months ago, Yann LeCun tweeted that ChatGPT was nothing new. Indeed, none of the techniques used in ChatGPT and GPT-4 (Transformers, reinforcement learning from human feedback, etc.) are new. However, these models did achieve results that were not possible with previous models. So, what is the reason for their success?

Training data development. The quantity and quality of the data used to train GPT models have improved significantly through better data collection, data labeling, and data preparation strategies.

- GPT-1: The BooksCorpus dataset is used for training. It contains 4629.00 MB of raw text covering various genres of books such as adventure, fantasy, and romance.
  - Data-centric AI strategy: None.
  - Result: Pre-training GPT-1 on this dataset improves performance on downstream tasks after fine-tuning.

- GPT-2: WebText is used for training. This is an internal OpenAI dataset created by scraping outbound links from Reddit.
  - Data-centric AI strategy: (1) Curate/filter the data by keeping only outbound links from Reddit that received at least 3 karma. (2) Use the tools Dragnet and Newspaper to extract clean content. (3) Apply deduplication and some other heuristic-based cleaning.
  - Result: 40 GB of text after filtering. GPT-2 achieves strong zero-shot results without fine-tuning.

- GPT-3: Training is based mainly on Common Crawl.
  - Data-centric AI strategy: (1) Train a classifier to filter out low-quality documents based on each document's similarity to WebText (a proxy for high-quality documents). (2) Use Spark's MinHashLSH to fuzzily deduplicate documents. (3) Augment the data with WebText, book corpora, and Wikipedia.
  - Result: Filtering 45 TB of plaintext yields 570 GB of text (only 1.27% of the data passes the quality filter). GPT-3 significantly outperforms GPT-2 in the zero-shot setting.

- InstructGPT: Human feedback is used to tune GPT-3 so that its answers better match human expectations. The authors designed a screening test for annotators, and only those who passed were eligible to annotate. They also designed a survey to ensure that annotators were fully engaged in the annotation process.
  - Data-centric AI strategy: (1) Tune the model with supervised training on human-written answers to prompts. (2) Collect comparison data to train a reward model, then use this reward model to tune GPT-3 with reinforcement learning from human feedback (RLHF).
  - Result: InstructGPT shows better truthfulness and less bias, i.e. better alignment.

- ChatGPT/GPT-4: OpenAI did not disclose the details. However, ChatGPT/GPT-4 is known to largely follow the design of the previous GPT models and still uses RLHF to tune the model (possibly with more and higher-quality data/labels). It is commonly believed that GPT-4 was trained on an even larger dataset, since the number of model weights has increased.
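The filtering and deduplication steps listed for GPT-2 and GPT-3 can be sketched as follows. This is a toy illustration under stated assumptions, not OpenAI's pipeline: the record fields (`text`, `karma`), the similarity threshold, and the sample documents are invented, and near-duplicates are detected with exact Jaccard similarity over word shingles. MinHashLSH estimates this same similarity efficiently at scale; the exact version is used here only to keep the example short and deterministic.

```python
def shingles(text, k=3):
    """Set of k-word shingles for a document."""
    words = text.lower().split()
    return {" ".join(words[i:i + k]) for i in range(max(1, len(words) - k + 1))}

def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    return len(a & b) / len(a | b) if a | b else 1.0

def filter_and_dedup(docs, min_karma=3, threshold=0.5):
    """Drop low-karma documents, then drop near-duplicates of kept documents."""
    kept, kept_shingles = [], []
    for doc in docs:
        if doc["karma"] < min_karma:
            continue  # quality heuristic: too few upvotes
        sh = shingles(doc["text"])
        if any(jaccard(sh, other) >= threshold for other in kept_shingles):
            continue  # fuzzy duplicate of a document we already kept
        kept.append(doc)
        kept_shingles.append(sh)
    return kept

docs = [
    {"text": "the quick brown fox jumps over the lazy dog", "karma": 10},
    {"text": "the quick brown fox jumps over the lazy cat", "karma": 5},
    {"text": "click here to win a free prize now", "karma": 1},
    {"text": "transformers model relationships between tokens using attention", "karma": 7},
]
kept = filter_and_dedup(docs)
print([d["text"] for d in kept])
```

The pairwise comparison against every kept document is quadratic, which is exactly the cost that locality-sensitive hashing is designed to avoid on web-scale corpora.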

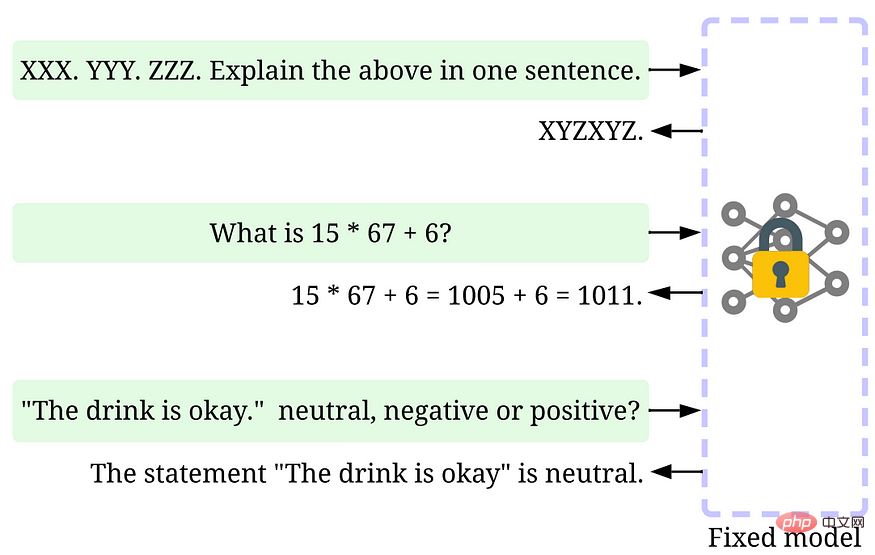
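The "comparison data" used to train a reward model in the InstructGPT entry above consists of pairs of answers where annotators preferred one over the other. A pairwise ranking loss of the following form is commonly used for this purpose; the sketch below assumes the reward model has already produced a scalar score for each answer, and the example scores are invented.

```python
import math

def pairwise_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), written as log(1 + exp(-diff)).

    The loss is small when the preferred answer scores higher than the
    rejected one, and large when the ranking is reversed.
    """
    diff = reward_chosen - reward_rejected
    return math.log1p(math.exp(-diff))

# Hypothetical reward scores for (preferred, rejected) answer pairs.
good_ranking = pairwise_loss(2.0, 0.0)
bad_ranking = pairwise_loss(0.0, 2.0)
print(round(good_ranking, 4), round(bad_ranking, 4))
```

Minimizing this loss over many labeled pairs pushes the reward model to agree with the annotators, so label quality directly bounds how good the reward signal can be.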

Inference data development. Since recent GPT models are powerful enough, we can achieve various goals by tuning the prompts (that is, the inference data) while keeping the model fixed. For example, we can perform text summarization by providing the text to be summarized together with an instruction such as "summarize it" or "TL;DR" to guide the inference process.

Prompt tuning
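A minimal sketch of this idea is shown below: the model stays fixed and only the input text is engineered. The helper name `build_prompt`, the prompt layout, and the sample texts are assumptions for illustration; sending the prompt to an actual model is left out.

```python
def build_prompt(text, instruction="TL;DR", examples=()):
    """Assemble a prompt from optional worked examples, the input text and an instruction."""
    parts = []
    for source, summary in examples:
        parts.append(f"{source}\n{instruction}: {summary}")
    parts.append(f"{text.strip()}\n{instruction}:")
    return "\n\n".join(parts)

article = "Data-centric AI focuses on improving the data used to build AI systems."

# Zero-shot: just the text and the instruction.
zero_shot = build_prompt(article)

# Few-shot: prepend a worked example so the model can imitate the format.
few_shot = build_prompt(
    article,
    examples=[("The cat sat on the mat all day.", "A cat rested.")],
)
print(few_shot)
```

Changing the instruction or the examples changes the model's behavior without touching its weights, which is what makes prompts a form of inference data.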

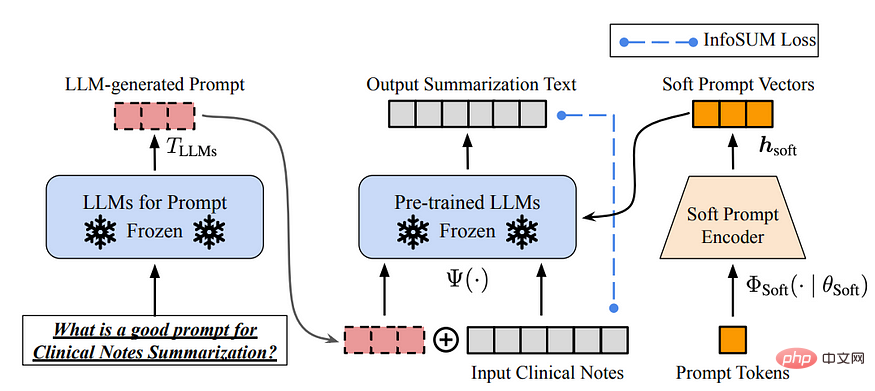

Designing the right inference prompts is a challenging task that relies heavily on heuristics. A good survey summarizes the different prompting methods. Sometimes even semantically similar prompts produce very different outputs. In such cases, soft prompt-based calibration may be needed to reduce the variance.

Research on LLM inference data development is still in its early stages. In the near future, more inference data development techniques that have been used for other tasks may be applied to LLMs.
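One simple way to quantify the prompt sensitivity described above is to send several paraphrases of the same question to a model and measure how often the answers agree. In the sketch below, `toy_model` is a deliberately brittle stand-in for a real LLM call, and the prompts and metric name are assumptions for illustration. This only measures the variance; it does not implement soft prompt-based calibration.

```python
from collections import Counter

def agreement_rate(model, prompts):
    """Fraction of prompts whose output matches the most common output."""
    outputs = [model(p) for p in prompts]
    top_count = Counter(outputs).most_common(1)[0][1]
    return top_count / len(outputs)

def toy_model(prompt):
    # Stand-in for an LLM call: answers only when it sees a keyword.
    return "positive" if "sentiment" in prompt.lower() else "unknown"

prompts = [
    "What is the sentiment of: 'I loved it'?",
    "Sentiment of 'I loved it':",
    "Is 'I loved it' a happy or unhappy review?",
]
rate = agreement_rate(toy_model, prompts)
print(round(rate, 2))
```

A low agreement rate across paraphrases signals that the prompt wording, rather than the underlying question, is driving the output.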

Data maintenance. As a commercial product, ChatGPT/GPT-4 is not trained just once but is continuously updated and maintained. Outside of OpenAI, we have no way of knowing exactly how data maintenance is done. Therefore, we discuss some general data-centric AI strategies that have been or will likely be used for GPT models:

- Continuous data collection: When we use ChatGPT/GPT-4, our prompts and feedback may in turn be used by OpenAI to further improve their models. Quality metrics and assurance strategies may have been designed and implemented to collect high-quality data in the process.

- Data Understanding Tools: Various tools can be developed to visualize and understand user data, promoting a better understanding of user needs and guiding the direction of future improvements.

- Efficient data processing: With the rapid growth of the number of ChatGPT/GPT-4 users, an efficient data management system is needed to achieve rapid data collection.

The picture above is an example of ChatGPT/GPT-4 collecting user feedback through "likes" and "dislikes".
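A feedback signal of this kind can be stored and summarized with very little code. The sketch below is a hypothetical data structure, not a description of how OpenAI logs feedback: the `Feedback` fields, the helper names, and the sample records are all invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Feedback:
    prompt: str
    response: str
    liked: bool  # True for a "like", False for a "dislike"

def like_rate(records):
    """Share of responses that received a like; 0.0 for an empty log."""
    return sum(r.liked for r in records) / len(records) if records else 0.0

def needs_review(records):
    """Disliked responses, which are candidates for relabeling or new training data."""
    return [r for r in records if not r.liked]

log = [
    Feedback("Summarize this email", "Short summary of the email", True),
    Feedback("Translate 'hello' to French", "Bonjour", True),
    Feedback("What is 17 * 3?", "41", False),
    Feedback("Explain RLHF", "RLHF tunes a model with human feedback", True),
]
print(like_rate(log), len(needs_review(log)))
```

Tracking a metric such as the like rate over time is one simple quality check for continuously collected data, and the disliked examples point to where new labels are most valuable.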

What the data science community can learn from this wave of LLMs

The success of LLMs has revolutionized AI. Going forward, LLMs could further revolutionize the data science lifecycle. We make two predictions:

- Data-centric AI becomes more important. After years of research, model design has become very mature, especially since the Transformer. Data becomes a key way to improve AI systems in the future. Also, once models are powerful enough, we no longer need to train them in our daily work. Instead, we only need to design appropriate inference data to probe the knowledge in the model. Therefore, research and development of data-centric AI will drive future progress.

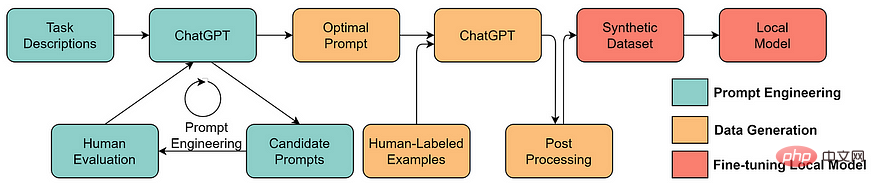

- LLMs will enable better data-centric AI solutions. Many tedious data science tasks can be carried out more efficiently with the help of LLMs. For example, ChatGPT/GPT-4 already makes it possible to write working code to process and clean data. Additionally, LLMs can even be used to create training data. For example, using LLMs to generate synthetic data can improve model performance in text mining.
