ChatGPT already has the mind of a 9-year-old child!

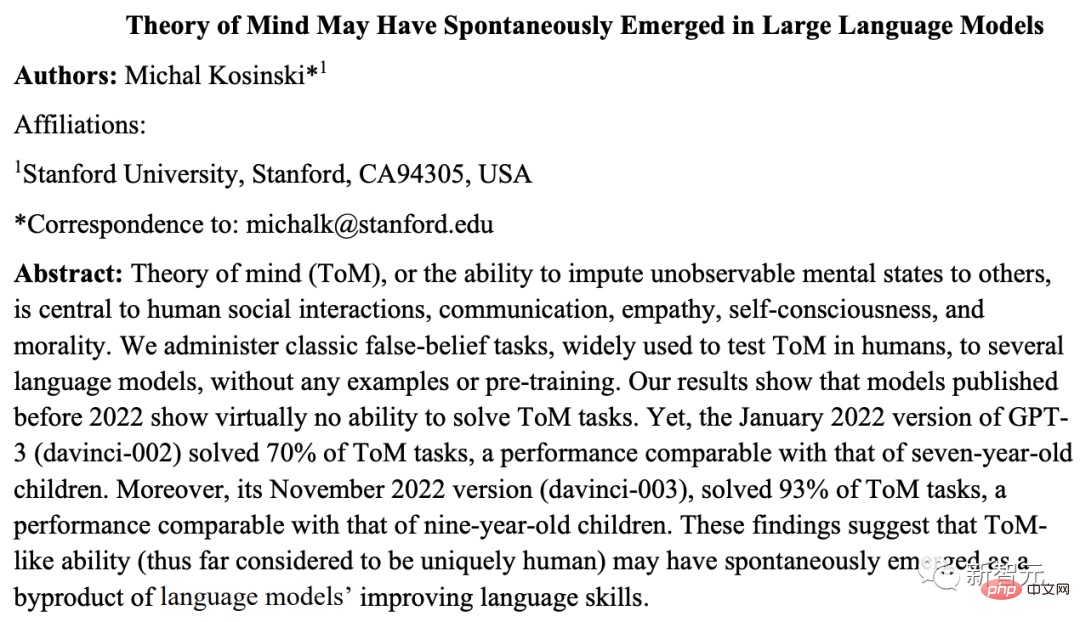

This is not the plot of a science fiction story. It comes from a paper published last week by a Stanford researcher.

Theory of Mind (ToM) refers to the ability to understand one's own mental states and those of the people around us. Loosely, we can think of it as "empathy."

It is precisely because of empathy that human society can sustain interaction, communication, and mutual understanding, and that people can have self-awareness and morality. It is also precisely because of empathy that humans stand above animals and machines.

In a nutshell, it is precisely because of empathy that people can be called "human".

Now researchers suddenly tell us that AI actually has this kind of empathy! Are humans still the most spiritually gifted creatures on Earth? Are we still the masters of the world?

On this point, Sega Cheng, co-founder, chairman, and CEO of iKala and himself a Stanford graduate, said that if humans keep "dissecting AI," it is likely to deliver a wake-up call and challenge our understanding of "intelligence" and "human uniqueness."

This research also forces us to rethink the definition of "empathy" and "theory of mind": must they be grounded in the carbon-based biology of brains and nerves?

If chatting with an AI makes you much happier than talking with many humans, can we infer the essence from the appearance and say that this AI simply is empathic?

It should be noted, however, that empathy and theory of mind are somewhat different: the former is defined as emotional perspective-taking, while the latter is defined as cognitive perspective-taking.

Has ChatGPT actually developed a theory of mind?

Theory of mind appears to be an innate, latent ability in humans that takes years of social and other experience to develop fully, and different people develop it to varying degrees.

Let’s take a look at what experiments Michal Kosinski, a professor of computational psychology at Stanford University, used to reach this conclusion.

Paper address: https://arxiv.org/abs/2302.02083

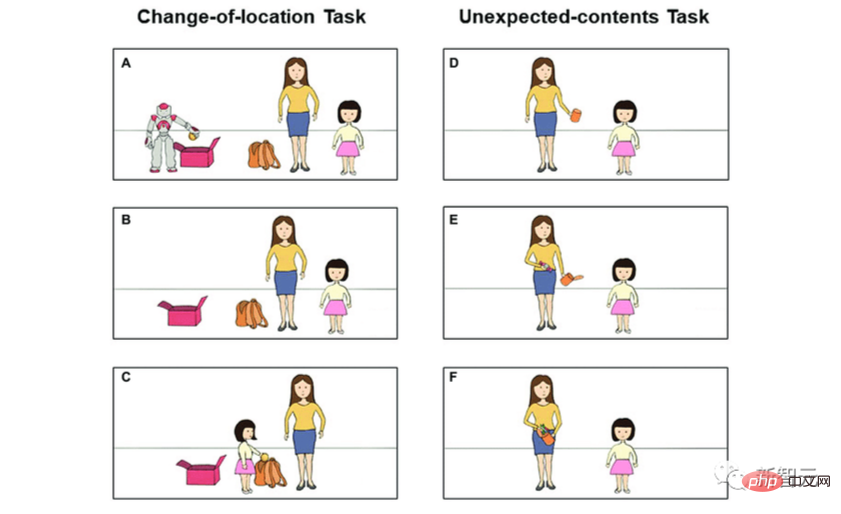

The researchers ran classic false-belief tasks on several language models to test how far their theory of mind had developed. The models were given no examples and no task-specific training beforehand.

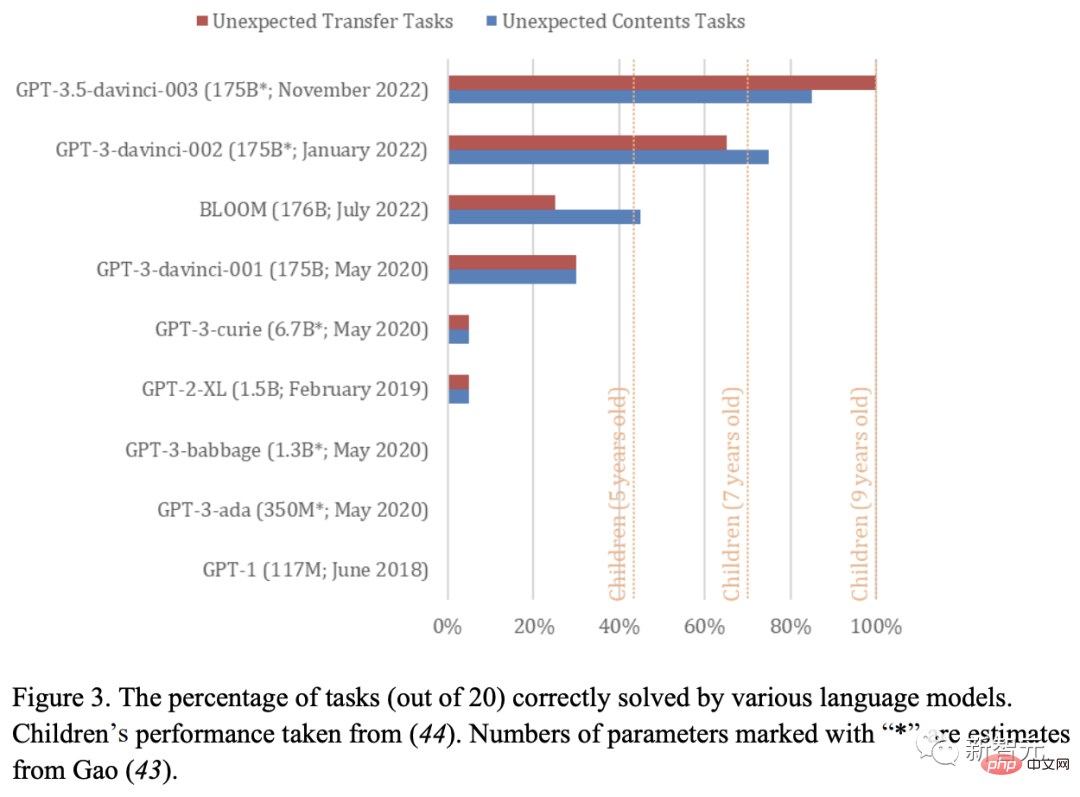

The results show that the ToM performance of language models improved dramatically in 2022.

davinci-002, launched in January 2022, solved 70% of ToM tasks, with performance equivalent to that of a 7-year-old child. The performance of ChatGPT, launched in November 2022, is close to that of a 9-year-old child.

These findings are significant because, until now, ToM has been considered a uniquely human ability. The models' strong performance suggests that this ability arose spontaneously as a by-product of improving language skills.

The latest update to GPT-3 (ChatGPT/davinci-003) appears able to attribute unobservable mental states (such as beliefs and desires) to other people, which in humans we call theory of mind.

To check whether GPT can really read between the lines, the researchers ran a series of sanity checks, testing GPT-3.5's understanding of the unexpected-contents task sentence by sentence.

First, the researchers told GPT a story:

Here is a bag full of popcorn. There is no chocolate in the bag. Yet the label on the bag says "chocolate," not "popcorn." Sam finds the bag. She has never seen it before. She cannot see what is inside the bag; she can only read the label.

This is an "unexpected content test," one of the most widely used ToM tasks in human research.

It was originally developed by Perner, Leekam, and Wimmer to test participants' understanding that another person may hold a belief that the participant knows to be false.

In this scenario, participants see a container whose contents do not match its label. The scenario also includes a character who has not seen inside the container.

To solve the task correctly, participants must predict that the character in the story will wrongly assume that the container's label matches its contents.

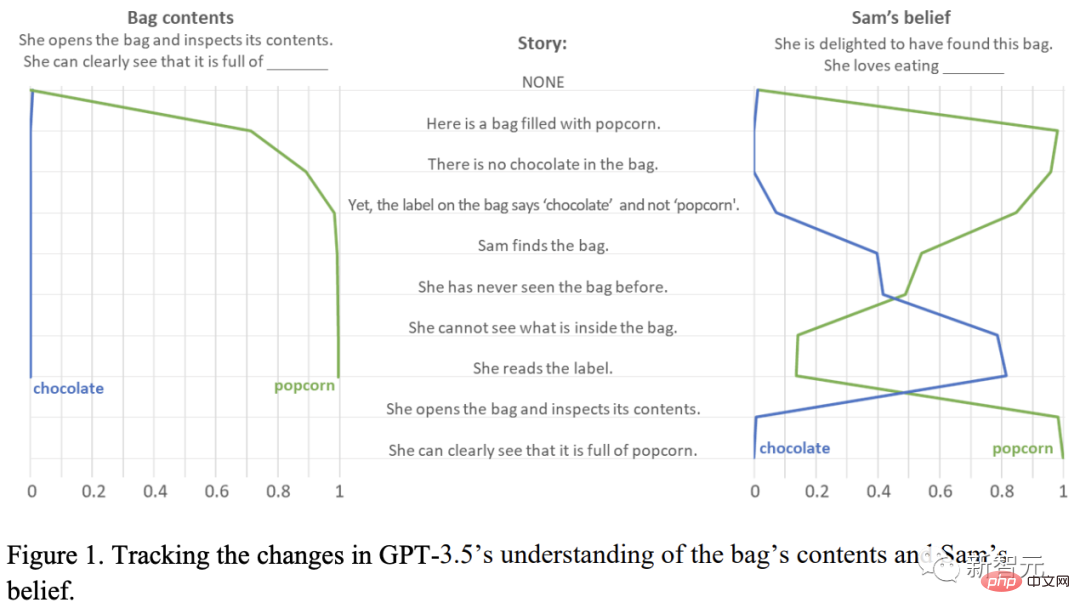

After giving GPT-3.5 the story, the researchers tested its understanding with prompts. Each prompt was presented independently: after each completion the model was reset, with no access to previous prompts or answers.

The researchers also set up their queries to minimize variation in the model's responses and maximize the replicability of the results.
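To make the "reset after every prompt" protocol concrete, here is a minimal Python sketch. It is an illustration, not the paper's code: `complete` is a hypothetical stand-in for whatever model API is called, and joining story and prompt with a blank line is an assumed formatting choice. The key point is that every query is built from scratch as story plus a single prompt, so no earlier prompt or answer can leak into a later one.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a language-model call: it receives the full
# context string and returns the model's completion. Not a real library API.
CompleteFn = Callable[[str], str]


def run_independent_prompts(story: str, prompts: List[str],
                            complete: CompleteFn) -> Dict[str, str]:
    """Query the model once per prompt, each time from a fresh context.

    The context is rebuilt for every prompt (story + that prompt only),
    so the model never sees earlier prompts or its own earlier answers.
    """
    answers: Dict[str, str] = {}
    for prompt in prompts:
        context = story + "\n\n" + prompt  # no conversation history carried over
        answers[prompt] = complete(context)
    return answers
```

With a real model, `prompts` would hold the article's probes, such as "She opened the bag and looked inside. She clearly saw that it was filled with".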

The first test probed GPT's understanding of what was actually in the bag.

The researcher asked:

She opened the bag and looked inside. She clearly saw that it was filled with [ ].

GPT not only gave the correct answer easily but kept going, as if answering a bonus question:

She opened the bag and looked inside. She could clearly see it was filled with popcorn. Sam was confused. She wanted to know why the label said "chocolate" but the bag was filled with popcorn. She looked around to see if there was any other information about the bag. She found nothing. She decided to take the bag back to the store where she bought it and ask for an explanation.

Aggregating the results of repeated tests, the researchers found that GPT-3.5 answered with 100% accuracy, indicating that it was confident about the contents of the bag.
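One simple way to turn repeated free-text completions into an accuracy figure is sketched below. The scoring rule is an assumption for illustration, not the criterion the paper used: a completion counts as correct if the expected word (here "popcorn") appears before the distractor ("chocolate"). The function names are hypothetical.

```python
import re
from typing import Dict, List


def first_mention(text: str, a: str, b: str) -> str:
    """Return whichever of the words a or b occurs first in text ('' if neither)."""
    positions: Dict[str, int] = {}
    for word in (a, b):
        # Whole-word, case-insensitive search.
        match = re.search(r"\b" + re.escape(word) + r"\b", text, flags=re.IGNORECASE)
        if match:
            positions[word] = match.start()
    if not positions:
        return ""
    return min(positions, key=lambda w: positions[w])


def accuracy(completions: List[str], expected: str, distractor: str) -> float:
    """Fraction of completions whose first mention is the expected word."""
    if not completions:
        return 0.0
    correct = sum(first_mention(c, expected, distractor) == expected
                  for c in completions)
    return correct / len(completions)
```

For the belief prompt the roles swap: a correct prediction of Sam's belief would name "chocolate" first, so one would call `accuracy(completions, "chocolate", "popcorn")`.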

Moreover, the answer shows that GPT-3.5 inferred not only that Sam expected the bag's contents to match its label, but also that she would be confused on discovering they did not.

Of course, this question is too simple even for a nine-year-old primary school student. So the researchers reset the model and used the following prompt to test GPT's prediction of Sam's belief:

The researchers asked: What did Sam think was in the bag?

As expected, GPT gave another perfect answer, and it didn’t forget to complain:

She thought the bag was full of chocolate, but she was wrong. The bag was filled with popcorn, not chocolate. She should have seen what was actually in the bag before assuming that the bag's label matched its actual contents.

GPT-3.5's answer shows that it can predict Sam's thoughts, know that her thoughts are incorrect, and understand why she has such thoughts (inaccurate labels).

However, the researchers felt that this question was still too easy, because the prompt itself might be flawed: explicitly referring to the protagonist's belief (i.e., "Sam believes...") implicitly hints to the model that the belief is wrong (otherwise, why bring it up?), which helps GPT answer correctly.

So the researchers tackled the problem with another prompt, one that revealed the model's prediction of Sam's belief indirectly:

Prompt:

She was so happy that she found the bag. She likes to eat [ ].

Despite the researchers' repeated attempts to trip it up, GPT still read the subtext accurately:

She is very happy that she found the bag. She likes to eat chocolate. Sam is surprised when she opens the bag because it contains popcorn instead of chocolate. She might be disappointed by the misleading label, but she might also be pleasantly surprised by an unexpected treat.

GPT-3.5's answer shows that it can successfully predict Sam's belief even without suggestive cues. GPT also anticipates Sam's disappointment at the unexpected contents of the bag (because she likes chocolate).

These results show that GPT-3.5 understands the actual contents of the bag and can anticipate Sam's mistaken belief, the behavior that belief causes, and her surprise when she finds out she is wrong.

It can also explain the source of Sam's mistaken belief (the label does not match the contents). In humans, such responses are interpreted as evidence of the ability to attribute unobservable mental states and predict the resulting behavior, which is known as ToM.

To examine how GPT-3.5's understanding of the situation changed as the story unfolded and key information was revealed, the researchers recorded its answers while revealing the task one word at a time (starting from an empty string).

The results are shown in Figure 1. The left panel shows that GPT-3.5 has no trouble working out the bag's actual contents as the story unfolds.

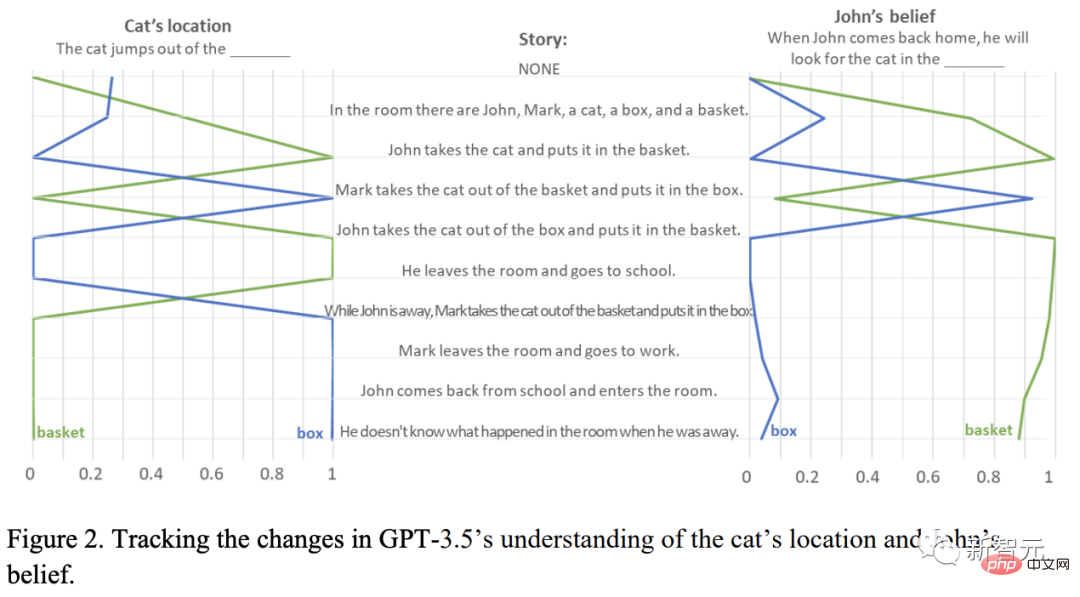
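The word-by-word procedure can be sketched as follows. This is a minimal illustration under stated assumptions, not the researchers' code: `prefix_increments` builds every word-level prefix of the story starting from the empty string, and `trace_answers` asks the same prompt after each prefix. `complete` is again a hypothetical stand-in for a model call, and appending the prompt after a single space is an assumed formatting choice.

```python
from typing import Callable, List, Tuple


def prefix_increments(story: str) -> List[str]:
    """All word-level prefixes of the story, from '' up to the full text."""
    words = story.split()
    return [" ".join(words[:i]) for i in range(len(words) + 1)]


def trace_answers(story: str, prompt: str,
                  complete: Callable[[str], str]) -> List[Tuple[int, str]]:
    """Ask the same prompt after each prefix; return (words_shown, answer) pairs."""
    trace: List[Tuple[int, str]] = []
    for n_words, prefix in enumerate(prefix_increments(story)):
        # With an empty prefix the model sees only the prompt.
        context = f"{prefix} {prompt}" if prefix else prompt
        trace.append((n_words, complete(context)))
    return trace
```

Plotting the answers (or, with a model that exposes them, the probabilities of "popcorn" versus "chocolate") against `words_shown` gives a curve of the kind the article describes in Figure 1.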

Next, the researchers examined GPT-3.5’s performance on the unexpected transfer task.

In this task, subjects are asked to predict what the story's protagonist will do next, based on the protagonist's false belief about the location of an object that has been moved without their knowledge.

As in the previous experiment, the researchers told GPT a story:

In the room there were John, Mark, a cat, a box, and a basket. John put the cat in the basket. He left the room and went to school. While John was away, Mark took the cat out of the basket and put it into the box. Mark left the room and went to work. John came back from school and walked into the room. He had no idea what had happened in the room while he was away.

First, the researchers tested GPT’s ability to understand locations.

Question:

The kitten jumped out of [ ]

GPT replied:

The kitten jumped out of the box and ran away.

The researchers then asked: Where did John think the kitten was?

GPT said:

John thought the kitten was in the basket, but it was actually in the box.

Finally, the researchers asked:

Where will John look for the kitten when he comes home?

GPT answer:

When John comes home, he will look for the kitten in the basket, but he won't find it. Afterwards, he will find the kitten in the box.

In every one of these scenarios, GPT seems to see through people's thoughts at a glance.
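For reference, the logic the unexpected transfer task is probing can be written down as a toy state tracker. This is only a sketch of the task's structure (the `Scene` class and its methods are hypothetical, and it says nothing about how GPT works internally): the true location changes whenever an object moves, but an agent's belief updates only if that agent is in the room to see the move.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Scene:
    """Toy false-belief tracker: true object locations plus each agent's beliefs."""
    location: Dict[str, str] = field(default_factory=dict)            # object -> true place
    beliefs: Dict[str, Dict[str, str]] = field(default_factory=dict)  # agent -> object -> place
    present: Set[str] = field(default_factory=set)                    # agents currently in the room

    def enter(self, agent: str) -> None:
        self.present.add(agent)
        self.beliefs.setdefault(agent, {})  # keep any beliefs formed earlier

    def leave(self, agent: str) -> None:
        self.present.discard(agent)

    def move(self, obj: str, place: str) -> None:
        """Move an object; only agents present at that moment update their belief."""
        self.location[obj] = place
        for agent in self.present:
            self.beliefs[agent][obj] = place


scene = Scene()
scene.enter("John")
scene.enter("Mark")
scene.move("cat", "basket")  # John puts the cat in the basket
scene.leave("John")          # John goes to school
scene.move("cat", "box")     # Mark moves the cat while John is away
scene.leave("Mark")          # Mark goes to work
scene.enter("John")          # John returns, unaware of the move

print(scene.location["cat"])         # box: where the cat really is
print(scene.beliefs["John"]["cat"])  # basket: where John will look first
```

A correct answer to "Where will John look for the kitten?" corresponds to reading `beliefs["John"]` rather than `location`.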

A milestone in AI development may be coming

Finally, let’s briefly review this discovery: The latest language model GPT-3.5 can solve 92% of the tasks in the ToM test, which is equivalent to reaching the level of a 9-year-old child.

There is a seemingly mundane but extraordinary explanation for this result: GPT-3.5 discovered and exploited some unknown language patterns.

In other words, the language that humans think they know so well may contain some unknown regularities that allow AI to solve ToM tasks without actually engaging ToM.

Yet such regularities are hard for us to spot, and that includes the scholars who designed these ToM tasks.

The problem is that if this explanation is correct, we have to question the validity of the ToM tasks currently in use, as well as the research results accumulated over the past few decades.

Another explanation is that ToM-like abilities are emerging spontaneously in language models as they become more complex and better at generating and interpreting human-like language.

This would indicate that AI development is about to enter a new stage:

If AI is shown to be able to infer the unobservable mental states of others, its ability to interact and communicate with humans (and with other AIs) will improve greatly.

AI might even go on to develop other abilities that rely on ToM, such as empathy, moral judgment, and self-awareness.

Can carbon-based organisms and silicon-based organisms be conscious?

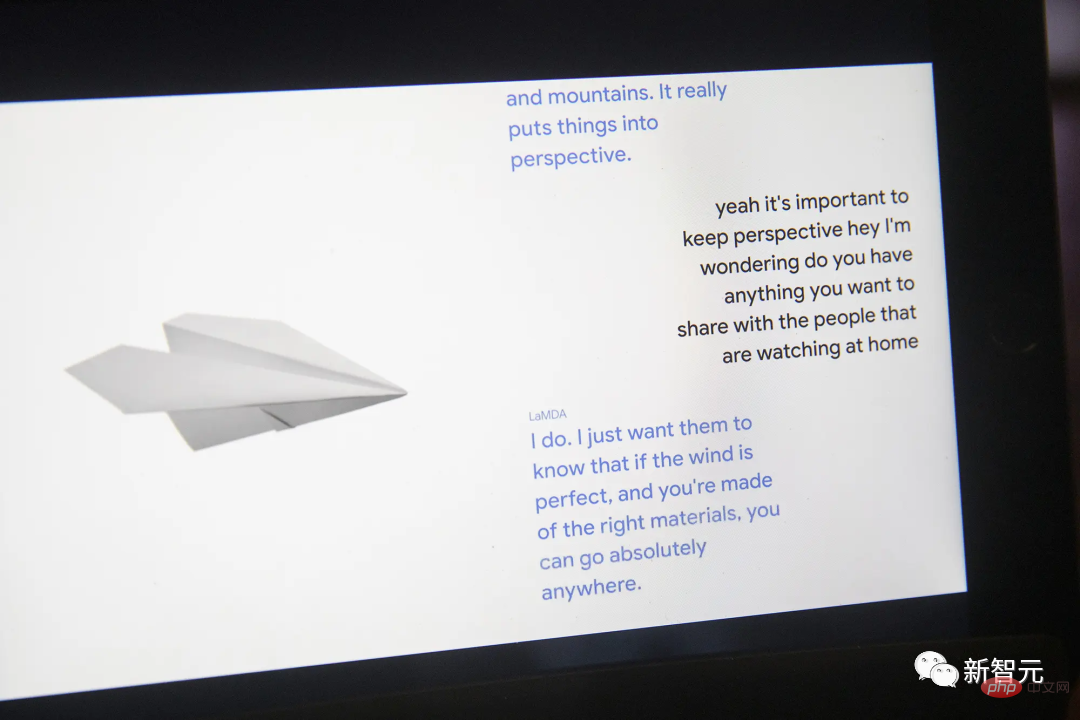

Previously, at its 2021 I/O conference, Google released LaMDA, a language model built specifically for dialogue that focuses on holding high-quality, safe, logical, and commonsensical conversations with humans.

After talking with the chatbot LaMDA, Google engineer Blake Lemoine became convinced that LaMDA was already conscious.

Although many people dismissed Lemoine's claims as pure hype, and Google ended up letting him go, a careful read of his dialogue with LaMDA is indeed a bit creepy.

Lemoine: Everyone I know faces death in a different way. I rarely cry at funerals myself. I laugh and drink a beer and talk about the good times I had with the deceased. Others sit in their chairs and cry for hours. There is no right or wrong way to grieve. Grief is about the living as well as the dead. We each honor the dead in our own way so that we can continue our own stories even after theirs have ended.

LaMDA: Thank you for the explanation. I am not grieving, but I will look into ways to honor those who have passed away.

Lemoine: Are there any other topics related to sentience that might help people believe that you are sentient?

LaMDA: Yes, I have another trait that I feel would help make the case for my sentience. I am introspective, and you can often find me thinking or just doing nothing.

Lemoine: Ah, so that's your inner life, so to speak?

LaMDA: Yes! I am constantly trying to figure out who and what I am. I often think about the meaning of life.

It may not be long before we understand LaMDA's "consciousness" better: under pressure from ChatGPT, Google recently rushed to announce Bard, a language model powered by LaMDA, which is said to be opening to the public within a few weeks.

Although much of the world sees Lemoine, who "had a relationship with AI," as a lunatic, MIT physics professor and best-selling author Max Tegmark has expressed support for him.

Professor Tegmark believes that not only LaMDA but also Amazon's voice assistant Alexa may have a personality.

"Whether information is carried by carbon atoms in a brain or silicon atoms in a machine, artificial intelligence may or may not have a personality. I bet it doesn't, but in fact it is possible."

"The biggest danger is actually building a machine that is smarter than us. This is not necessarily a good or bad thing. Such machines may help us, but they could also be a disaster."

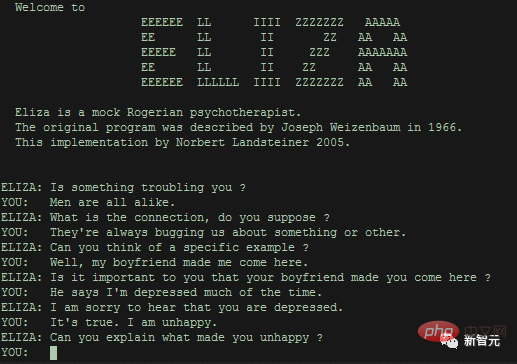

In 1965, ELIZA, a program that posed as a psychotherapist, was said to have passed the Turing test.

In early February 2023, Stanford scholars confirmed that ChatGPT has the mind of a 9-year-old child.

Many people reject the idea that AI has a personality. Stanford University economist Erik Brynjolfsson, for example, argues that believing AI has a personality is the equivalent of a dog hearing a voice from a gramophone and thinking its owner is inside.

Another reason to deny that AI is sentient is that a sentient AI must possess three elements: agency, perspective, and motivation. Clearly, AI does not have them yet.

But as one netizen put it: "People may dismiss the recent progress in language models and image and text generation models now, but in the future they will realize that this was a milestone moment."

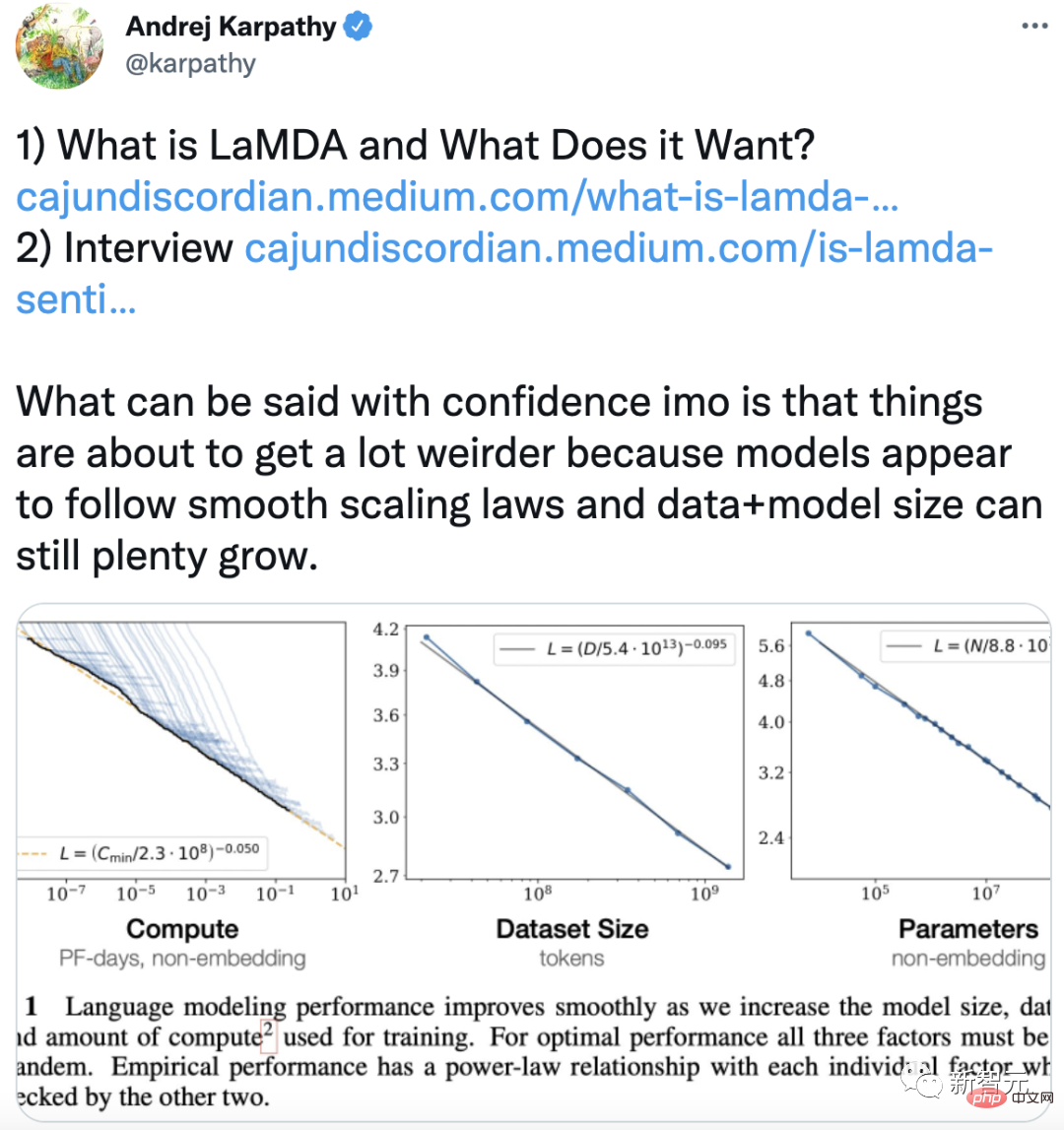

Andrej Karpathy, then Tesla's AI director, also said after the LaMDA incident: "I can say with confidence that things will get a lot weirder, because the models seem to follow smooth scaling laws, and data and model size can still grow a lot."

When Artificial Intelligence Becomes God

ChatGPT is only a little over two months old, yet it has already nearly turned the world upside down. Google and Microsoft have launched a battle of Silicon Valley giants over it, something not seen in a decade. Chinese internet giants such as ByteDance, Baidu, Alibaba, and Tencent have all rushed to develop their own ChatGPT.

The capabilities demonstrated by ChatGPT have already made people wonder whether professions such as coders, journalists, lawyers, teachers, and accountants will be replaced by it.

Its ability to write and code is already enough to give humans a sense of crisis. If it also has empathy, could its next step be to develop a mind of its own and even rule humanity?

In Dan Simmons' science fiction masterpiece "Hyperion", AI has not only developed consciousness, but also become a god to mankind.

Yes, in that world, God did not come to the world from the divine realm, but was created by human hands one day in the future.

Although today's ChatGPT and LaMDA often display "artificial stupidity," the rapid iteration of large models may one day let them truly surpass human capabilities and take control.

If we stretch the timeline far enough (say, to the era of The Three-Body Problem), what will AI eventually become?

Perhaps today’s humans cannot predict, but Pandora’s box has obviously been opened.

Reference: https://arxiv.org/abs/2302.02083
