Recently, Professor Ma Yi and Turing Award winner Yann LeCun jointly published a paper at ICLR 2023 describing a minimalist, interpretable unsupervised learning method that achieves performance close to SOTA self-supervised learning (SSL) methods without resorting to data augmentation, hyperparameter tuning, or other engineering designs.

Paper link: https://arxiv.org/abs/2209.15261

The method uses the sparse manifold transform, which combines sparse coding, manifold learning, and slow feature analysis.

With a single-layer deterministic sparse manifold transform, it achieves 99.3% KNN top-1 accuracy on MNIST, 81.1% on CIFAR-10, and 53.2% on CIFAR-100.
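KNN top-1 accuracy scores a representation by classifying each test sample from the labels of its nearest training samples in feature space and counting how often the predicted label is correct. The sketch below is a minimal version under stated assumptions: cosine similarity and a plain majority vote. The paper's exact `k`, distance, and vote weighting are not given in this article, and `knn_top1_accuracy` is a hypothetical helper name.

```python
import numpy as np


def knn_top1_accuracy(train_feats, train_labels, test_feats, test_labels, k=1):
    """Fraction of test points whose k-NN majority-vote label is correct.

    Assumption: cosine similarity and an unweighted majority vote."""
    # L2-normalize so that a dot product equals cosine similarity.
    train = train_feats / np.linalg.norm(train_feats, axis=1, keepdims=True)
    test = test_feats / np.linalg.norm(test_feats, axis=1, keepdims=True)
    sims = test @ train.T                        # (n_test, n_train)
    nn_idx = np.argsort(-sims, axis=1)[:, :k]    # k most similar training points
    nn_labels = train_labels[nn_idx]             # (n_test, k) integer labels
    # Majority vote; ties go to the smallest label.
    preds = np.array([np.bincount(row).argmax() for row in nn_labels])
    return float(np.mean(preds == test_labels))


# Toy usage: two well-separated 2-D clusters standing in for learned features.
rng = np.random.default_rng(0)
train_x = np.vstack([rng.normal([5.0, 0.0], 0.5, (20, 2)),
                     rng.normal([0.0, 5.0], 0.5, (20, 2))])
train_y = np.array([0] * 20 + [1] * 20)
test_x = np.array([[4.0, 0.5], [0.5, 4.0]])
test_y = np.array([0, 1])
print(knn_top1_accuracy(train_x, train_y, test_x, test_y, k=5))  # 1.0
```

Because KNN has no trainable parameters, this kind of evaluation measures the geometry of the representation itself rather than the capacity of a classifier trained on top of it.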

With simple grayscale augmentation, the model's KNN top-1 accuracy on CIFAR-10 and CIFAR-100 rises to 83.2% and 57%, respectively. These results significantly narrow the gap between simple "white-box" methods and SOTA methods.
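A grayscale augmentation replaces an RGB image with a single luminance channel, removing color information. The sketch below shows one common way to implement it, assuming ITU-R BT.601 luma weights and a random "apply with probability p" rule; how the paper actually uses the grayscale views is not described here, and `to_grayscale` and `random_grayscale` are hypothetical names.

```python
import numpy as np

# ITU-R BT.601 luma weights, a common choice for RGB-to-grayscale conversion.
LUMA = np.array([0.299, 0.587, 0.114])


def to_grayscale(img):
    """img: float array (H, W, 3). Returns (H, W, 3) with three equal channels."""
    gray = img @ LUMA                            # (H, W) weighted channel sum
    return np.repeat(gray[..., None], 3, axis=-1)


def random_grayscale(img, p, rng):
    """With probability p return the grayscale copy, else the image unchanged."""
    return to_grayscale(img) if rng.random() < p else img


rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))                    # stand-in for a CIFAR-sized image
aug = random_grayscale(img, p=1.0, rng=rng)
print(aug.shape, np.allclose(aug[..., 0], aug[..., 2]))  # (32, 32, 3) True
```

Keeping three identical channels preserves the input shape, so the same downstream pipeline can consume both original and grayscale images.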

In addition, the paper provides visualizations that explain how an unsupervised representation transform is formed. The method is closely related to latent-embedding self-supervised methods and can be regarded as the simplest form of VICReg.
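For reference, VICReg combines three terms: an invariance term that pulls the embeddings of two views together, a variance term that keeps each embedding dimension from collapsing, and a covariance term that decorrelates dimensions. The NumPy sketch below follows the loss as it is usually written for VICReg (default weights 25, 25, 1); it illustrates the objective family the paper relates to, not the paper's own sparse manifold transform, and `vicreg_loss` is a hypothetical name.

```python
import numpy as np


def vicreg_loss(z_a, z_b, lam=25.0, mu=25.0, nu=1.0, gamma=1.0, eps=1e-4):
    """VICReg-style loss for two batches of embeddings of shape (n, d)."""
    n, d = z_a.shape
    # Invariance: embeddings of two views of the same input should match.
    invariance = np.mean(np.sum((z_a - z_b) ** 2, axis=1))

    def variance(z):
        # Hinge on each dimension's std: penalizes dimensions shrinking to a constant.
        std = np.sqrt(z.var(axis=0, ddof=1) + eps)
        return np.mean(np.maximum(0.0, gamma - std))

    def covariance(z):
        # Off-diagonal covariance penalty: decorrelates the embedding dimensions.
        zc = z - z.mean(axis=0)
        cov = zc.T @ zc / (n - 1)
        off_diag = cov - np.diag(np.diag(cov))
        return np.sum(off_diag ** 2) / d

    return (lam * invariance
            + mu * (variance(z_a) + variance(z_b))
            + nu * (covariance(z_a) + covariance(z_b)))


rng = np.random.default_rng(0)
collapsed = np.zeros((256, 8))                   # every input mapped to one point
spread = rng.normal(scale=2.0, size=(256, 8))    # decorrelated, high-variance
print(vicreg_loss(collapsed, collapsed))         # large: the variance term fires
print(vicreg_loss(spread, spread))               # small
```

The collapsed embedding satisfies invariance perfectly yet is heavily penalized, which is exactly the "close together but not trivial" requirement discussed later in the article.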

Although a small performance gap remains between this simple constructive model and SOTA approaches, the evidence suggests that this is a promising direction toward a principled, white-box approach to unsupervised learning.

The first author, Yubei Chen, is a postdoctoral researcher at the Center for Data Science (CDS) at New York University and at Meta Fundamental AI Research (FAIR), supervised by Professor Yann LeCun. He received his Ph.D. from the Redwood Center for Theoretical Neuroscience and Berkeley Artificial Intelligence Research (BAIR) at the University of California, Berkeley, and his bachelor's degree from Tsinghua University.

His research lies at the intersection of computational neuroscience and deep unsupervised (self-supervised) learning. His work has advanced understanding of the computational principles of unsupervised representation learning in brains and machines, and has reshaped understanding of natural signal statistics.

Professor Ma Yi received dual bachelor's degrees in automation and applied mathematics from Tsinghua University in 1995, a master's degree in EECS from the University of California, Berkeley in 1997, and a master's degree in mathematics and a Ph.D. in EECS from Berkeley in 2000. He is currently a professor in the Department of Electrical Engineering and Computer Sciences at UC Berkeley and is an IEEE Fellow, ACM Fellow, and SIAM Fellow.

Yann LeCun is best known for applying convolutional neural networks (CNNs) to optical character recognition and computer vision, and is known as the father of convolutional networks. In 2019, he shared the Turing Award, the highest honor in computer science, with Yoshua Bengio and Geoffrey Hinton.

Over the past few years, unsupervised representation learning has made great progress and promises to bring powerful scalability to data-driven machine learning.

However, what a learned representation is, and exactly how it forms in an unsupervised manner, are still unclear; furthermore, whether a common set of principles underlies all these unsupervised representations remains an open question.

Many researchers have recognized the importance of understanding these models and have taken pioneering steps: simplifying SOTA methods, connecting them to classic methods, unifying different methods, visualizing representations, and analyzing these methods theoretically. The hope is to develop a different kind of computational theory, one that lets us build simple, fully interpretable "white-box" models from data based on first principles. Such a theory could also guide our understanding of the principles of unsupervised learning in the human brain.

In this work, the researchers take another small step toward this goal, trying to build the simplest "white-box" unsupervised learning model, one that requires no deep networks, projection heads, data augmentation, or other engineering designs.

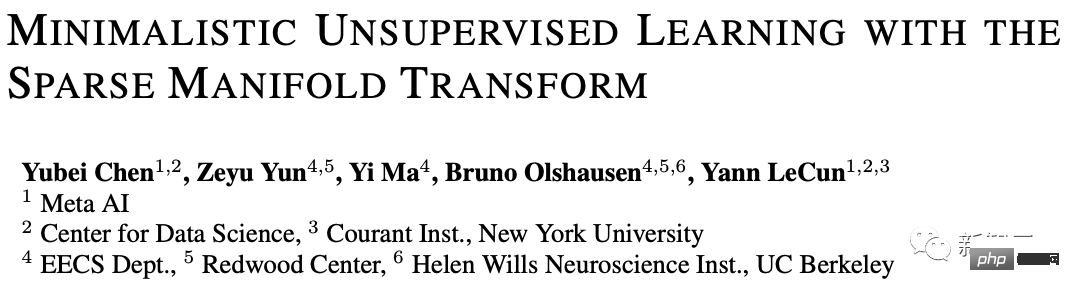

Using two classic unsupervised learning principles, sparsity and spectral embedding, the researchers built a two-layer model that achieves non-trivial benchmark results on several standard datasets.

Experimental results show that the two-layer model based on the sparse manifold transform has the same objective as latent-embedding self-supervised methods and, without any data augmentation, achieves 99.3% KNN top-1 accuracy on MNIST, 81.1% on CIFAR-10, and 53.2% on CIFAR-100.

With simple grayscale augmentation, the researchers further achieved 83.2% KNN top-1 accuracy on CIFAR-10 and 57% on CIFAR-100.

These results are an important step toward closing the gap between "white-box" models and SOTA self-supervised learning (SSL) models. Although the gap is still noticeable, the researchers believe that closing it further could yield a deeper understanding of how unsupervised representations are learned, making this a promising line of research toward putting the theory into practice.

What is unsupervised (self-supervised) re-presentation?

Essentially, any non-identity transformation of the original signal can be called a representation (re-presentation), but the research community is mainly interested in the useful ones.

A broad goal of unsupervised re-presentation learning is to find a function that maps the original data into a new space in which "similar" things are placed closer together. At the same time, the new space should not collapse into something trivial; that is, the geometric or stochastic structure of the data must be preserved.

If this goal is achieved, "dissimilar" content will naturally end up far apart in the representation space.

Where does similarity come from?

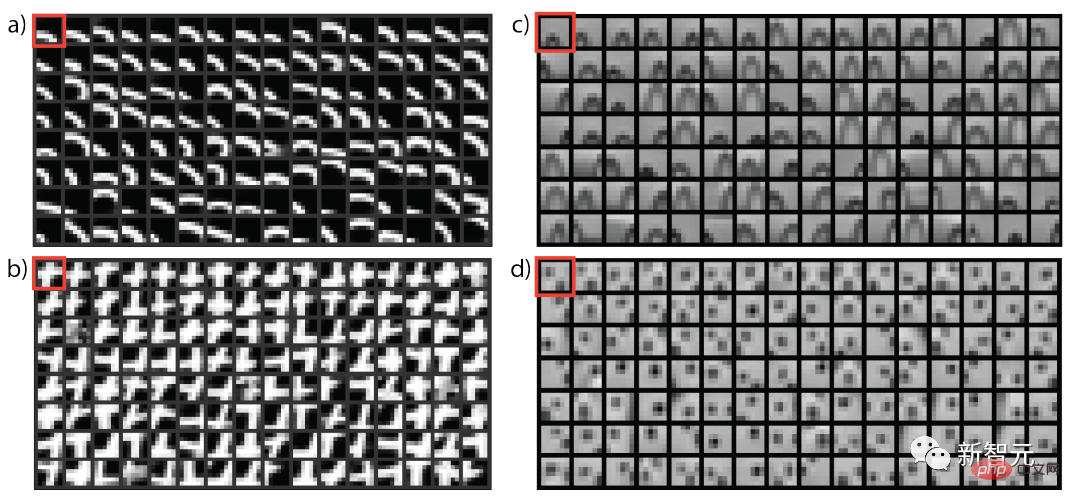

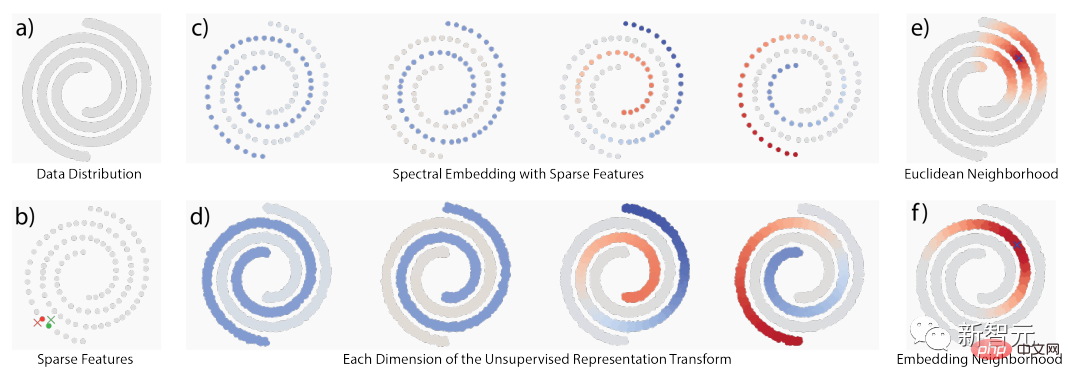

Similarity comes mainly from three classic ideas: 1) temporal co-occurrence, 2) spatial co-occurrence, and 3) local neighborhoods in the original signal space.

When the underlying structure is geometric, these ideas overlap considerably; when the structure is stochastic, however, they are conceptually distinct. The difference between manifold structure and stochastic co-occurrence structure is illustrated in the accompanying figure.
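Each of the three sources of similarity can be encoded as an affinity graph over samples. The sketch below is a minimal illustration under the assumption of binary, symmetric graphs: `cooccurrence_graph` links samples that are close in time (1-D positions) or in space (2-D coordinates), and `knn_graph` links samples that are close in the raw signal space. Both names, and the choices of `radius` and `k`, are hypothetical.

```python
import numpy as np


def cooccurrence_graph(positions, radius):
    """Temporal (1-D times) or spatial (2-D coordinates) co-occurrence:
    link samples whose positions are within `radius` of each other."""
    pos = np.asarray(positions, dtype=float).reshape(len(positions), -1)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    W = (dist <= radius).astype(float)
    np.fill_diagonal(W, 0.0)                     # no self-loops
    return W


def knn_graph(X, k):
    """Local neighborhoods in signal space: link each sample to its k nearest
    neighbors (Euclidean distance), then symmetrize the graph."""
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)               # a point is not its own neighbor
    nn = np.argsort(dist, axis=1)[:, :k]
    W = np.zeros(dist.shape)
    W[np.repeat(np.arange(len(X)), k), nn.ravel()] = 1.0
    return np.maximum(W, W.T)


frame_times = [0, 1, 2, 10, 11]                  # e.g. frame indices in a video
print(cooccurrence_graph(frame_times, radius=1))
X = np.array([[0.0], [0.1], [5.0], [5.1]])       # raw signals, one per row
print(knn_graph(X, k=1))
```

On a smooth manifold the two graphs tend to agree, since nearby frames look alike; for stochastic structure they can differ sharply, which is the distinction drawn above.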

Exploiting locality, related work has proposed two families of unsupervised learning methods: manifold learning and co-occurrence statistical modeling. Many of these ideas lead to a spectral decomposition formulation or the closely related matrix factorization formulation.

The idea of manifold learning is that only local neighborhoods in the original signal space can be trusted; by considering all local neighborhoods together, the global geometry emerges. In other words: "think globally, fit locally."
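Laplacian eigenmaps is one classic instance of this spectral-decomposition formulation: the affinity matrix `W` only encodes trusted local links, and global coordinates come out of a single eigendecomposition. The sketch below is a generic textbook version, assuming a connected, symmetric `W`; it is shown for intuition and is not taken from the paper.

```python
import numpy as np


def laplacian_eigenmaps(W, n_components):
    """Spectral embedding of a connected, symmetric affinity matrix W.

    Solves L y = lambda * D y with L = D - W via the symmetric normalized
    Laplacian and returns the eigenvectors with the smallest non-zero
    eigenvalues, one row per sample."""
    deg = W.sum(axis=1)                          # assumes no isolated nodes
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    L_sym = np.eye(len(W)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, U = np.linalg.eigh(L_sym)                 # eigenvalues in ascending order
    U = U[:, 1:n_components + 1]                 # drop the trivial eigenvalue-0 vector
    return d_inv_sqrt[:, None] * U               # map back: y = D^{-1/2} u


# Only local links are given: a chain of 10 nodes, each tied to its neighbors.
n = 10
W = np.zeros((n, n))
idx = np.arange(n - 1)
W[idx, idx + 1] = W[idx + 1, idx] = 1.0
y = laplacian_eigenmaps(W, n_components=1)[:, 0]
print(np.round(y, 3))                            # ordered along the chain; sign is arbitrary
```

No single edge knows where a node sits in the chain, yet the one-dimensional embedding orders all ten nodes: the local fits combine into a global coordinate.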

Co-occurrence statistical modeling, by contrast, follows a probabilistic philosophy. Because some structures cannot be modeled by continuous manifolds, it complements the manifold philosophy.

One of the clearest examples comes from natural language, where the raw data largely does not come from a smooth geometry. In word embeddings, for instance, the embeddings of "Seattle" and "Dallas" may be similar even though the two words rarely co-occur; the underlying reason is that they appear in similar contexts.
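The "Seattle"/"Dallas" effect can be reproduced with a tiny word-by-context count matrix and a low-rank factorization. The counts below are made up purely for illustration (they are not corpus statistics), and truncated SVD stands in for whichever matrix factorization a real word-embedding method uses.

```python
import numpy as np

# Hypothetical toy counts: how often each word appears near each context word.
contexts = ["city", "flight", "weather", "ripe", "peel", "Seattle", "Dallas"]
words = ["Seattle", "Dallas", "banana"]
C = np.array([
    [8, 5, 6, 0, 0, 0, 0],   # Seattle: never appears next to "Dallas"
    [7, 6, 4, 0, 0, 0, 0],   # Dallas: never appears next to "Seattle"
    [0, 0, 0, 9, 7, 0, 0],   # banana
], dtype=float)


def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


# Rank-2 embedding from a truncated SVD; it keeps the two dominant context
# patterns in this toy matrix (city-like and fruit-like contexts).
U, S, Vt = np.linalg.svd(C, full_matrices=False)
emb = U[:, :2] * S[:2]                           # one 2-D vector per word

print(C[0, contexts.index("Dallas")])            # direct co-occurrence count: 0.0
print(cosine(emb[0], emb[1]))                    # Seattle vs Dallas: close to 1
print(cosine(emb[0], emb[2]))                    # Seattle vs banana: close to 0
```

Similarity here comes entirely from shared context patterns, not from the two words ever appearing together.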

The probabilistic and manifold perspectives complement each other in understanding "similarity". Once similarity is defined, a transformation can be constructed that brings similar concepts closer together.

How is the representation transform built in this work? Basic principles: sparsity and low rank

In general, sparsity is used to handle locality and decomposition in the data space and to establish a support; low-frequency functions are then used to construct a representation transform that assigns similar values to similar points on that support.
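The sparsity step maps each signal to a sparse code over a dictionary, so that each signal activates only a few atoms. As a stand-in, the sketch below uses ISTA (iterative shrinkage-thresholding) to solve the standard L1-regularized sparse coding problem with a fixed dictionary; the paper's actual sparse coding procedure and dictionary learning may differ, and `ista` and `soft_threshold` are hypothetical names.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1: shrink every entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista(X, Phi, lam, n_iter=200):
    """Sparse codes A minimizing 0.5 * ||X - Phi @ A||_F^2 + lam * ||A||_1.

    X: (dim, n_samples) signals; Phi: (dim, n_atoms) dictionary.
    Returns A with shape (n_atoms, n_samples)."""
    step = 1.0 / np.linalg.norm(Phi, 2) ** 2     # 1 / Lipschitz constant of the gradient
    A = np.zeros((Phi.shape[1], X.shape[1]))
    for _ in range(n_iter):
        grad = Phi.T @ (Phi @ A - X)             # gradient of the reconstruction term
        A = soft_threshold(A - step * grad, step * lam)
    return A


rng = np.random.default_rng(0)
Phi = rng.normal(size=(16, 64))
Phi /= np.linalg.norm(Phi, axis=0)               # unit-norm dictionary atoms
X = rng.normal(size=(16, 5))                     # stand-in signals (e.g. image patches)
A = ista(X, Phi, lam=0.5)
print((A != 0).mean())                           # fraction of active coefficients
```

With an overcomplete dictionary (64 atoms for 16-dimensional signals), the L1 penalty keeps most coefficients at exactly zero, which is what gives the codes their local, decomposed support.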

This whole process is called the sparse manifold transform.
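The low-rank, low-frequency step can be sketched as a generalized eigenproblem on sparse codes: find a linear map `P` that makes the codes of similar samples (here, temporally adjacent ones) close, while a whitening constraint `P V P^T = I` rules out the collapsed solution. This is an assumption-laden sketch of the general idea, not the paper's code: the choice of pairs, the uncentered `V`, the ridge `eps`, and the name `smt_embedding` are all illustrative, and the random sparse matrix stands in for real sparse codes.

```python
import numpy as np


def smt_embedding(A, pairs, k, eps=1e-6):
    """Sketch of a spectral embedding applied to sparse codes.

    A: (n_atoms, n_samples) sparse codes; pairs: (i, j) sample indices treated
    as similar. Returns P (k, n_atoms) minimizing sum ||P (a_i - a_j)||^2
    subject to P V P^T = I, where V = A A^T / n_samples (+ eps * I)."""
    n_atoms, n_samples = A.shape
    i, j = np.array(pairs).T
    diffs = A[:, i] - A[:, j]                    # (n_atoms, n_pairs)
    M = diffs @ diffs.T                          # variation across similar pairs
    V = A @ A.T / n_samples + eps * np.eye(n_atoms)  # ridge keeps V invertible
    # Whiten with respect to V, then keep the directions of least variation.
    evals, E = np.linalg.eigh(V)
    Wh = E / np.sqrt(evals)                      # Wh.T @ V @ Wh = I
    _, Q = np.linalg.eigh(Wh.T @ M @ Wh)         # eigenvalues in ascending order
    return (Wh @ Q[:, :k]).T


rng = np.random.default_rng(0)
A = rng.random((8, 100)) * (rng.random((8, 100)) < 0.3)   # stand-in sparse codes
pairs = [(t, t + 1) for t in range(99)]                     # temporal neighbors
P = smt_embedding(A, pairs, k=3)
beta = P @ A                                                # (3, 100) representation
print(beta.shape)
```

The whitening constraint plays the same anti-collapse role as the variance and covariance terms in VICReg, while minimizing variation across neighbor pairs plays the role of the invariance term.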

