Nature: Are bigger AI models better?

Generative AI models are getting bigger these days, so does bigger mean better?

No. Now, some scientists are proposing leaner, more energy-efficient systems.

Article address: https://www.nature.com/articles/d41586-023-00641-w

Language models that struggle with mathematics

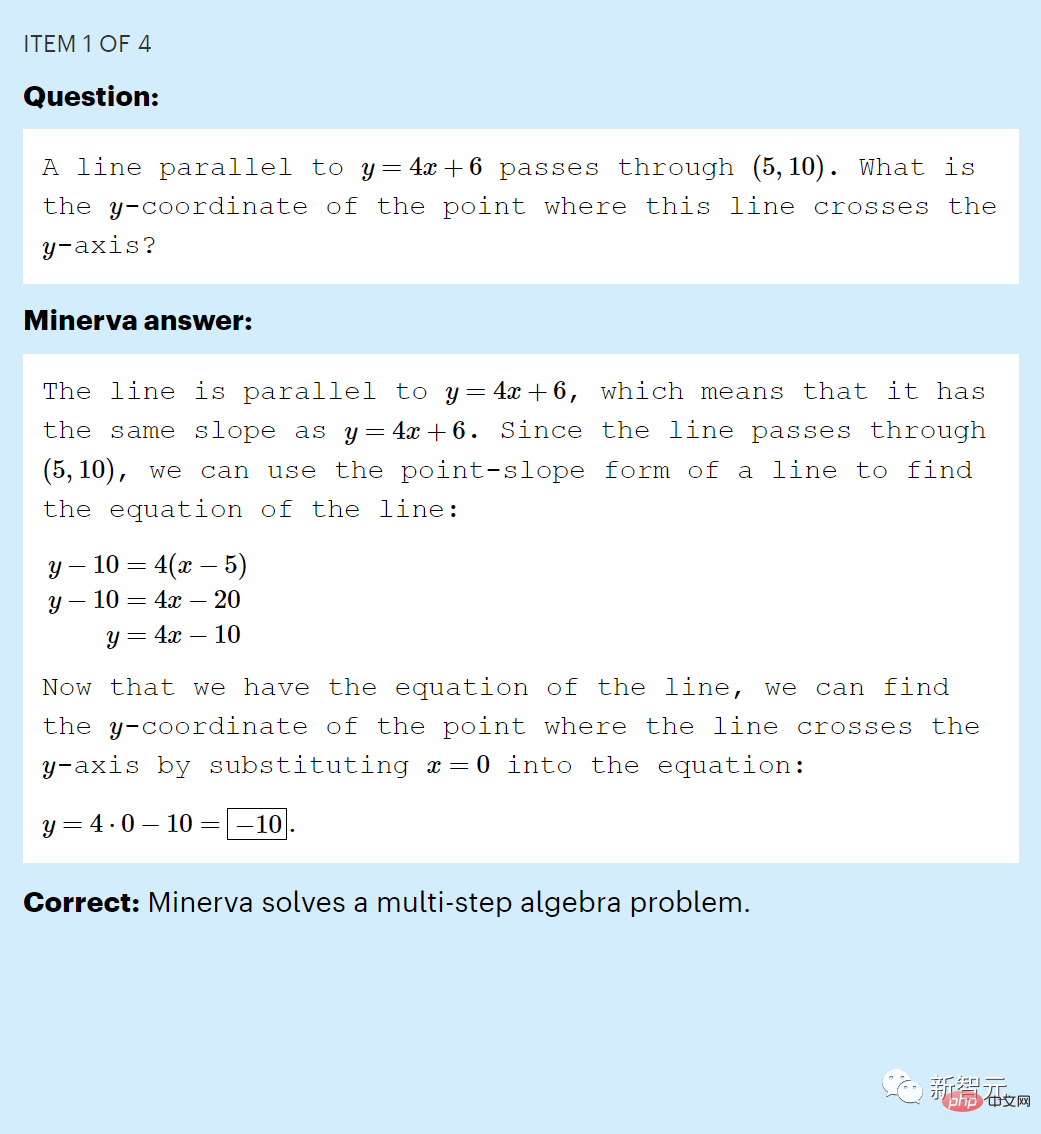

ChatGPT, the recent darling of the technology industry, often performs poorly when faced with mathematical questions that require reasoning to answer.

For example, it often fails to answer this question correctly: "A straight line parallel to y = 4x + 6 passes through (5, 10). What is the y coordinate of the intersection of this line and the y axis?"
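For reference, the arithmetic this question calls for is short. The following is a minimal sketch, assuming the given line has slope 4; the helper name `y_intercept` is introduced here purely for illustration. A parallel line shares the slope, so its intercept follows from the point it passes through.

```python
def y_intercept(slope: float, x: float, y: float) -> float:
    """Return b in y = slope * x + b for the line through (x, y)."""
    return y - slope * x

# A line parallel to one with slope 4 also has slope 4 (assumed slope).
b = y_intercept(4, 5, 10)
print(b)  # -10
```

The point of the example is that the question needs two reasoning steps (reuse the slope, then solve for the intercept), not a lookup.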

In an early test of reasoning ability, ChatGPT scored just 26% when answering a sample of middle-school-level questions from the MATH dataset.

This is what we would expect. Given input text, ChatGPT simply generates new text based on the statistical regularities of the words, symbols and sentences in its training data.

Of course, a model cannot be expected to learn to imitate mathematical reasoning just by learning the patterns of language.

But in fact, as early as June 2022, the large language model called Minerva created by Google had already broken this "curse."

Minerva scored 50% on the questions in the MATH dataset (2), a result that shocked the researchers.

Minerva correctly answered a middle school math problem in the "MATH" data set

Sébastien Bubeck, a machine learning expert at Microsoft Research, said that people in the field were shocked and talked about it widely.

The advantage of Minerva is, of course, that it has been trained on mathematical texts.

But Google’s research suggests another big reason why the model performs so well — its sheer scale. It is approximately three times the size of ChatGPT.

Minerva's results hint at something that some researchers have long suspected: that training larger LLMs and feeding them more data can enable them to solve, through pattern recognition alone, tasks that should require reasoning.

If that's the case, researchers say this "bigger is better" strategy could provide a path to powerful artificial intelligence.

But this argument is obviously questionable.

LLMs still make obvious mistakes, and some scientists believe that larger models only get better at answering queries that fall within the range of their training data, and do not gain the ability to answer entirely new questions.

This debate is now raging on the frontiers of artificial intelligence.

Commercial companies have seen that larger AI models give better results, so they are rolling out larger and larger LLMs, each costing millions of dollars to train and run.

But these models have major shortcomings. In addition to their output potentially being untrustworthy, thus fueling the spread of misinformation, they are simply too expensive and consume a lot of energy.

Commentators believe that large LLMs will never be able to imitate or acquire the skills that allow them to consistently answer reasoning questions.

Instead, some scientists say progress will be made with smaller, more energy-efficient AI, a view inspired in part by the way the brain learns and makes connections.

Is a bigger model better?

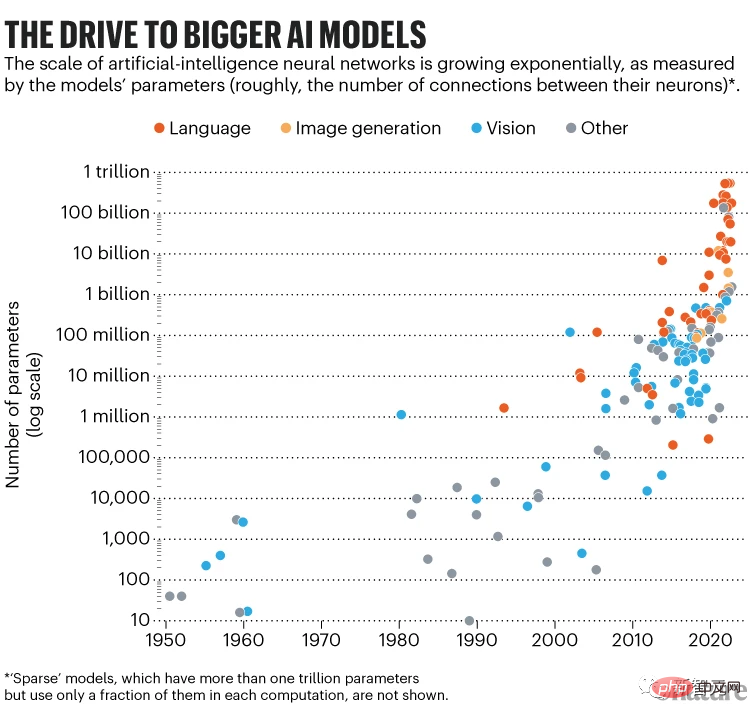

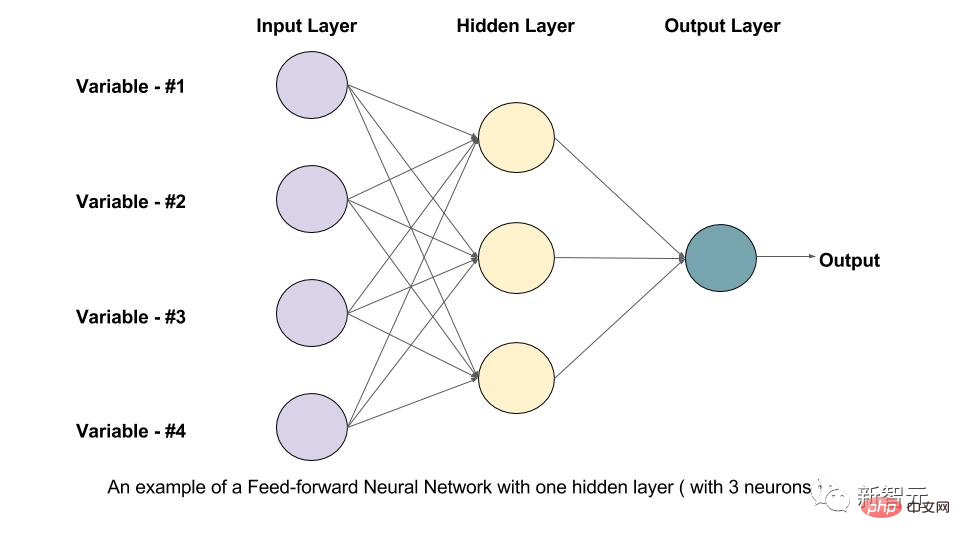

Large language models such as ChatGPT and Minerva are huge networks of hierarchically arranged computing units (also called artificial neurons).

The size of an LLM is measured by how many parameters it has, and the number of parameters describes the adjustable values of the connection strength between neurons.

To train such a network, you ask it to predict a masked part of a known sentence and adjust the parameters so that the algorithm does better next time.

Repeat this operation over billions of human-written sentences, and the neural network learns internal representations that mimic the way humans write language.

At this stage, the LLM is considered pre-trained: its parameters capture the statistical structure of written language it saw during training, including all facts, biases, and errors in the text. It can then be "fine-tuned" based on specialized data.

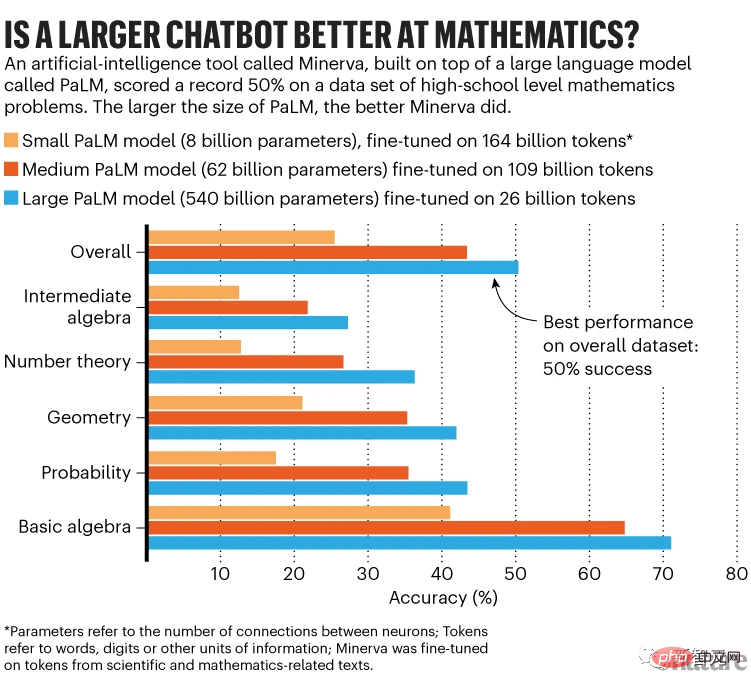

For example, to make Minerva, the researchers started with Google’s Pathways Language Model (PaLM), which has 540 billion parameters and was pre-trained on a data set of 780 billion tokens.

A token can be a word, a number, or some other unit of information; in PaLM's case, tokens were collected from English and multilingual web documents, books, and code. Minerva is the result of fine-tuning PaLM on tens of billions of tokens from scientific papers and mathematical web pages.

Minerva can answer the question "What is the largest multiple of 30 that is less than 520?"
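For comparison, the question has a direct arithmetic answer. This is a small sketch, with the function name `largest_multiple_below` chosen here for illustration only; it is not how Minerva works, which produces its answer token by token.

```python
def largest_multiple_below(step: int, limit: int) -> int:
    """Largest multiple of `step` that is strictly less than `limit`."""
    return ((limit - 1) // step) * step

print(largest_multiple_below(30, 520))  # 510
```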

An LLM may seem to be thinking in steps, but all it does is convert the problem into a sequence of tokens, generate a statistically plausible next token, append it to the original sequence, generate another token, and so on. This process is called inference.
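The token-by-token loop can be sketched with a toy model. The example below is only an illustration under strong simplifying assumptions: it uses bigram counts over a tiny made-up corpus instead of a neural network, and the names `train_bigram` and `generate` are hypothetical. What it shares with an LLM is the loop itself: sample a plausible next token, append it, repeat.

```python
import random
from collections import defaultdict

def train_bigram(tokens):
    """Count which token follows which in the training sequence."""
    counts = defaultdict(lambda: defaultdict(int))
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_tokens, rng):
    """Append one sampled token at a time, conditioning on the last token."""
    sequence = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(sequence[-1])
        if not followers:
            break  # no statistics for this token, so stop
        choices = list(followers)
        weights = [followers[t] for t in choices]
        sequence.append(rng.choices(choices, weights=weights, k=1)[0])
    return sequence

# Made-up toy corpus, used only for illustration.
corpus = "the line crosses the axis and the line is parallel".split()
model = train_bigram(corpus)
print(generate(model, ["the"], 5, random.Random(0)))
```

A real LLM conditions on the whole preceding sequence rather than only the last token, but the generation loop has the same shape.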

Google researchers fine-tuned three sizes of Minerva, starting from underlying pre-trained PaLM models with 8 billion, 62 billion and 540 billion parameters. Minerva's performance improves with scale.

On the entire MATH data set, the accuracy of the smallest model is 25%, the medium model reaches 43%, and the largest model breaks the 50% mark.

The largest model also used the least fine-tuning data - it was fine-tuned on only 26 billion tokens, while the smallest model was fine-tuned on 164 billion tokens.

But the largest model took a month to fine-tune, on specialized hardware with eight times the computing capacity used for the smallest model, which took just two weeks to fine-tune.

Ideally, the largest model should have been fine-tuned on more tokens. Ethan Dyer, a member of the Minerva team at Google Research, said this could have led to better performance. But the team didn't think the computational cost was feasible.

Scale Effect

The largest Minerva model performs best, which is consistent with research on scaling laws: rules that describe how performance improves as model size increases.

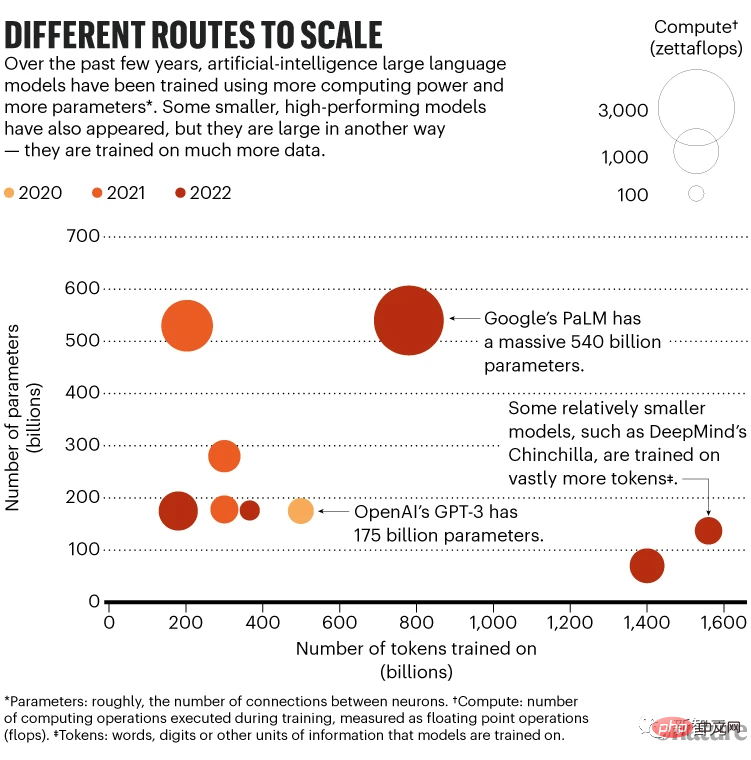

A 2020 study showed that models perform better when given one of three things: more parameters, more training data, or more “compute” (the number of computational operations performed during training).

Performance scales according to a power law, meaning it improves as a power of the number of parameters, for example.
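To make the shape of a power law concrete, here is a minimal sketch. It assumes performance is measured as a loss that falls as a power of the parameter count N, in the form L(N) = (N_c / N)^alpha. The constants `N_C` and `ALPHA` below are illustrative placeholders, not fitted values from any study.

```python
def power_law_loss(n_params: float, n_c: float, alpha: float) -> float:
    """Loss predicted by L(N) = (n_c / N) ** alpha."""
    return (n_c / n_params) ** alpha

# Illustrative constants only, not fitted values from any study.
N_C, ALPHA = 1e14, 0.08
for n in (8e9, 62e9, 540e9):
    print(f"{n:.0e} parameters -> loss {power_law_loss(n, N_C, ALPHA):.3f}")

# Doubling the parameter count multiplies the loss by the same factor everywhere.
ratio = power_law_loss(2e9, N_C, ALPHA) / power_law_loss(1e9, N_C, ALPHA)
print(ratio, 2 ** -ALPHA)
```

The last two printed numbers agree: under a power law, each doubling of model size buys the same relative improvement, however large the model already is.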

However, researchers don’t know why. “These laws are purely empirical,” says Irina Rish, a computer scientist at the Mila-Quebec Institute for Artificial Intelligence at the University of Montreal in Canada.

For the best results, the 2020 study suggested that model size should increase five-fold as training data doubles. Work from last year revised this somewhat.

In March 2022, DeepMind argued that it is best to scale up model size and training data together, and that smaller models trained on more data perform better than larger models trained on less data.

For example, DeepMind’s Chinchilla model has 70 billion parameters and is trained on 1.4 trillion tokens, while the 280 billion parameter Gopher model is trained on 300 billion tokens. In subsequent evaluations, Chinchilla outperformed Gopher.
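The comparison can be made concrete with the figures quoted above. The sketch below uses the common rule of thumb that training compute is roughly 6 × parameters × tokens; that approximation is an assumption brought in here, not something the article states, and `training_flops` is a name chosen for illustration.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rough training compute using the common 6 * N * D rule of thumb."""
    return 6 * n_params * n_tokens

# (parameters, training tokens) as quoted in the text above.
models = {
    "Chinchilla": (70e9, 1.4e12),
    "Gopher": (280e9, 300e9),
}
for name, (n, d) in models.items():
    print(f"{name}: {d / n:.1f} tokens per parameter, "
          f"~{training_flops(n, d):.2e} FLOPs")
```

Under that approximation the two models use a similar order of compute (roughly 5 to 6 × 10^23 operations), but Chinchilla sees about 20 tokens per parameter against roughly 1 for Gopher.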

In February, Meta scientists built a small parameter model called LLaMA based on this concept, which was trained on up to 1.4 trillion tokens.

Researchers said that the 13-billion-parameter version of LLaMA outperforms GPT-3 (175 billion parameters), the predecessor of ChatGPT, while the 65-billion-parameter version is competitive with Chinchilla and even PaLM.

Last October, Ethan Caballero of McGill University in Montreal, Rish and others reported that they had found a more complex relationship between scale and performance: in some cases, multiple power laws can govern how performance changes with model size.

For example, in a hypothetical scenario fitted with their general equation, performance first improves gradually and then more rapidly as the size of the model increases, then decreases slightly as the number of parameters continues to grow, and then increases again. The characteristics of this complex relationship depend on the details of each model and how it was trained.

Ultimately, the researchers hope to be able to predict this behaviour in advance when any particular LLM is scaled up.

A separate theoretical finding also supports the drive for larger models - the "robustness law" of machine learning, proposed by Bubeck and colleagues in 2021.

A model is robust if its answers remain consistent despite small perturbations in its input.

And Bubeck and his colleagues demonstrated mathematically that increasing the number of parameters in a model improves robustness and thus generalization ability.

Bubeck said the proof of the law shows that scaling up is necessary but not sufficient for generalization. Still, it has been used to justify the move to larger models. "I think it's a reasonable thing to do."

Minerva also takes advantage of a key innovation called chain-of-thought prompting. The user prefixes the question with text that includes several examples of problems and their solutions, together with the reasoning that leads to each answer (the chain of thought).

During inference, the LLM takes its cues from this context and provides a step-by-step answer that looks like reasoning.

This does not require updating the parameters of the model and therefore does not involve the additional computing power required for fine-tuning.
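Because the technique lives entirely in the prompt text, it can be sketched as plain string assembly. The example below is a minimal illustration; the function name `build_cot_prompt`, the Q/A layout, and the worked example are all assumptions made for this sketch, not the format Minerva's authors used.

```python
def build_cot_prompt(examples, question):
    """Prefix a question with worked examples that spell out their reasoning."""
    parts = []
    for ex in examples:
        parts.append(
            f"Q: {ex['question']}\n"
            f"A: {ex['reasoning']} The answer is {ex['answer']}."
        )
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

# Hypothetical worked example; a real prompt would include several.
examples = [
    {
        "question": "What is the largest multiple of 30 that is less than 520?",
        "reasoning": "520 divided by 30 is 17 remainder 10, "
                     "so the largest multiple is 17 * 30 = 510.",
        "answer": "510",
    },
]
prompt = build_cot_prompt(
    examples, "What is the largest multiple of 40 that is less than 250?"
)
print(prompt)
```

The model's parameters are untouched; only the input text changes, which is why no fine-tuning compute is involved.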

The ability to respond to chain-of-thought prompts appears only in LLMs with more than 100 billion parameters.

Blaise Agüera y Arcas of Google Research said the findings support the idea that larger models improve in line with empirical scaling laws. “Bigger models will get better and better.”

Reasonable concerns

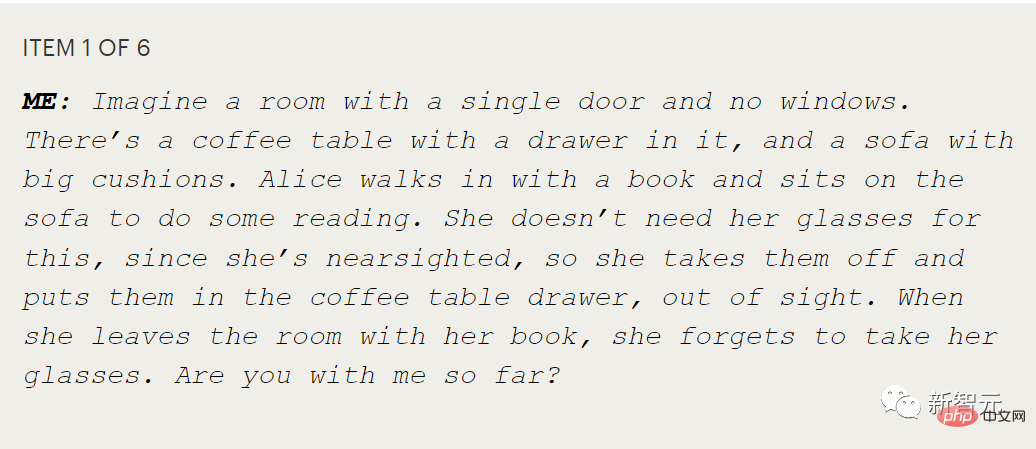

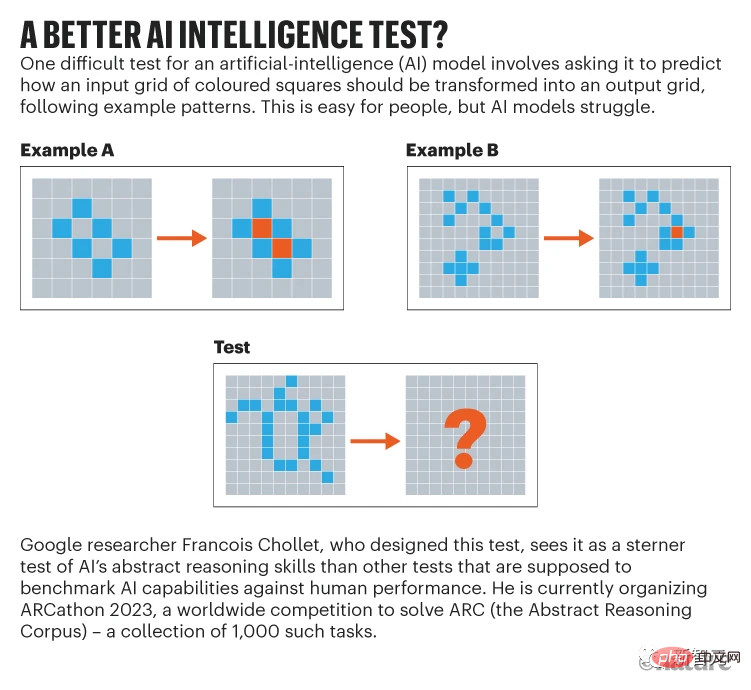

François Chollet, an artificial intelligence researcher at Google, is one of the skeptics who believe that no matter how large LLMs get, they will never have good enough reasoning (or reasoning-imitating) abilities to reliably solve new problems.

He said that LLMs seem to reason only by using templates they have encountered before, either in the training data or in the prompt. "It can't instantly understand something it hasn't seen before."

Perhaps the best an LLM can do is absorb so much training data that the statistical patterns of language alone let it answer questions with something very close to answers it has already seen.

However, Agüera y Arcas believes that LLMs do seem to acquire some abilities that they were not specifically trained for, which are surprising.

Particularly notable are tests designed to show whether a person has what is called theory of mind: the ability to theorize and reason about the mental states of others.

For example, Alice puts her glasses in a drawer, and then Bob hides them under a cushion without Alice knowing. Where would Alice go first to find her glasses?

Asking a child this question is to test whether they understand that Alice has her own beliefs, which may not be consistent with what the child knows.

In his tests of LaMDA, another LLM from Google, Agüera y Arcas found that LaMDA responded correctly in these types of more extended conversations.

To him, this demonstrates LLM's ability to internally simulate the intentions of others.

Agüera y Arcas said: "These models, which do nothing but predict sequences, have developed a range of extraordinary capabilities, including theory of mind." But he acknowledged that these models are prone to error, and he is not sure whether scaling alone is enough for reliable reasoning, although it seems necessary.

Blaise Agüera y Arcas of Google Research blogged about his conversation with LaMDA. He found the exchange impressive: LaMDA seemed able to consistently model what the two interlocutors in the story knew and did not know, a clear imitation of theory of mind.

However, Chollet said that even if LLM got the right answer, there was no understanding involved.

“When you dig into it a little, you immediately see that it’s empty. ChatGPT doesn’t have a model of what you're talking about. It's as if you're watching a puppet show and you believe the puppets are alive."

So far, LLM still makes absurd mistakes that humans would never make, Melanie Mitchell said. She studies conceptual abstraction and analogy in artificial intelligence systems at the Santa Fe Institute.

This has raised concerns about whether it is safe to release LLM into society without guardrails.

Mitchell added that one difficulty in judging whether LLMs can solve truly new, unseen problems is that we cannot yet fully test this ability.

"Our current benchmarks are not adequate," she said. "They don't explore things systematically. We don't know how to do that yet."

Chollet advocates an abstract reasoning test he designed, called the Abstract Reasoning Corpus.

Problems due to scale

OpenAI, for example, is estimated to have spent more than US$4 million on training GPT-3, and keeping ChatGPT running may cost millions of dollars every month.

As a result, governments from various countries have begun to intervene, hoping to expand their advantages in this field.

Last June, an international team of about 1,000 academic volunteers, with funding from the French government, Hugging Face and other institutions, used US$7 million worth of computing time to train the BLOOM model, which has 176 billion parameters.

In November, the U.S. Department of Energy also awarded time on its supercomputers to a project that studies large models. The team reportedly plans to train a 70-billion-parameter model similar to Chinchilla.

However, no matter who is doing the training, the power consumption of LLMs should not be underestimated.

Google said that over about two months, training PaLM took about 3.4 gigawatt hours, which is equivalent to the energy consumption of about 300 U.S. homes for a year.
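The equivalence can be checked with a quick unit conversion. This sketch uses only the two figures quoted above; the variable names are chosen for illustration.

```python
TRAINING_ENERGY_GWH = 3.4   # figure quoted above for PaLM
HOMES = 300                 # homes powered for a year, as quoted above

kwh_total = TRAINING_ENERGY_GWH * 1_000_000  # 1 GWh = 1,000,000 kWh
kwh_per_home_per_year = kwh_total / HOMES
print(f"{kwh_per_home_per_year:,.0f} kWh per home per year")
```

The comparison therefore implies an average household consumption of a little over 11,000 kWh a year.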

Although Google claims that it uses 89% clean energy, surveys of the entire industry show that most training uses grids powered mainly by fossil fuels.

Smaller, smarter?

From this perspective, researchers are eager to reduce the energy consumption of LLM - to make neural networks smaller, more efficient, and perhaps smarter.

In addition to the energy cost of training LLM (although it is considerable, it is also one-time), the energy required for inference will surge as the number of users increases. For example, the BLOOM model answered a total of 230,768 queries in the 18 days it was deployed on Google Cloud Platform, with an average power of 1,664 watts.
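The BLOOM figures translate into a rough energy cost per query. The sketch below assumes the quoted average power was sustained over the full 18 days, which is an assumption made here for the arithmetic; the variable names are illustrative.

```python
AVERAGE_POWER_W = 1_664
DAYS_DEPLOYED = 18
QUERIES = 230_768

# Energy = power * time; assumes the average power held for all 18 days.
energy_wh = AVERAGE_POWER_W * DAYS_DEPLOYED * 24
wh_per_query = energy_wh / QUERIES
print(f"total: {energy_wh / 1000:.1f} kWh, per query: {wh_per_query:.2f} Wh")
```

That works out to roughly 719 kWh in total, or about 3 Wh per query, a cost that grows in proportion to the number of queries served.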

In comparison, our own brain is far more complex and larger than any LLM, with 86 billion neurons and about 100 trillion synaptic connections, yet it consumes only about 20 to 50 watts of power.

As a result, some researchers hope to realize the vision of making models smaller, smarter, and more efficient by imitating the brain.

Essentially, LLM is a "feedforward" network, which means that information flows in one direction: from the input, through the layers of the LLM, to the output end.

But the brain is not like that. For example, in the human visual system, in addition to forward transmission of received information into the brain, neurons also have feedback connections that allow information to be transmitted in the opposite direction between neurons. There may be ten times as many feedback connections as there are feedforward connections.

In artificial neural networks, recurrent neural networks (RNN) also include both feedforward and feedback connections. Unlike LLMs, which only have feedforward networks, RNNs can discern patterns in data over time. However, RNNs are difficult to train and slow, making it difficult to scale them to the scale of LLMs.

Some studies using small data sets have shown that RNNs with spiking neurons can outperform standard RNNs and are, in theory, three orders of magnitude more computationally efficient.

However, as long as such spiking networks are simulated in software, they cannot truly achieve efficiency improvements (because the hardware that simulates them still consumes energy).

Meanwhile, researchers are experimenting with different methods to make existing LLMs more energy-efficient.

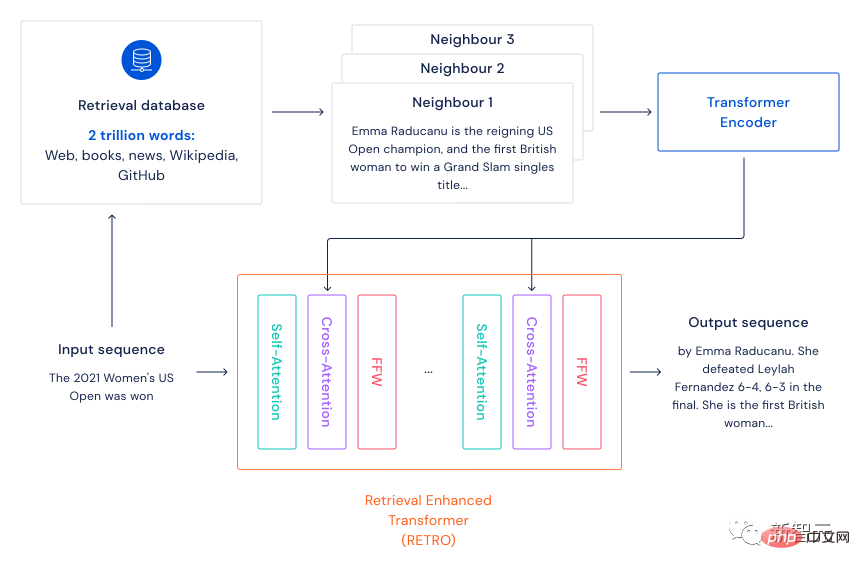

In December 2021, DeepMind proposed the retrieval-based language model framework Retro.

Retro imitates the way the brain, when learning, draws not only on current knowledge but also on memory retrieval. The framework first prepares a large-scale text data set (acting as the brain's memory) and uses the kNN algorithm to find the k nearest-neighbor sentences of the input sentence (retrieving memories).

After the input sentences and retrieved sentences are encoded by Transformer, Cross-Attention is then performed, so that the model can simultaneously use the information in the input sentences and the memory information to complete various NLP tasks.
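The retrieval step can be sketched in a few lines. This is a toy illustration only: it uses word-count vectors and cosine similarity as a stand-in for the learned embeddings a real system would use, and it omits the Transformer and cross-attention stages entirely. The names `embed`, `cosine` and `retrieve`, and the three memory sentences, are made up for the example.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, memory, k=2):
    """Return the k memory sentences most similar to the query."""
    q = embed(query)
    ranked = sorted(memory, key=lambda s: cosine(q, embed(s)), reverse=True)
    return ranked[:k]

# Made-up "memory" standing in for the large text data set.
memory = [
    "parallel lines have the same slope",
    "the brain has about 86 billion neurons",
    "the slope of a line measures its steepness",
]
neighbours = retrieve("what is the slope of a parallel line", memory, k=2)
# In a Retro-style model these neighbours would be fed to the network
# alongside the input sentence.
print(neighbours)
```

The design point is that knowledge lives in the searchable memory rather than in the parameters, so the memory can grow without retraining a larger network.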

The large number of parameters in previous models mainly served to store information from the training data. With a retrieval-based framework, the model can draw on more text without needing a particularly large number of parameters, which speeds it up without losing much performance.

This approach also cuts the electricity bill for training, which should please the environmentally minded.

Experimental results show that a large language model with 7.5 billion parameters, plus a database of 2 trillion tokens, can outperform a model with 25 times more parameters. The researchers write that this is a "more efficient approach than raw parameter scaling as we seek to build more powerful language models."

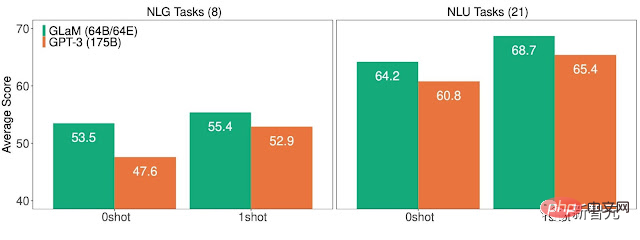

In the same month, researchers at Google proposed another way to improve energy efficiency at scale.

Their sparse Generalist Language Model (GLaM) has 1.2 trillion parameters, organized internally as 64 smaller neural networks.

During the inference process, the model uses only two networks to complete the task. In other words, only about 8% of more than one trillion parameters are used.
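The idea of activating only two of the 64 networks can be sketched as a routing step. The example below is a simplified illustration: random numbers stand in for the scores a learned gating network would produce, and the function name `route` is hypothetical. It also checks the parameter arithmetic from the figures quoted above.

```python
import random

NUM_EXPERTS = 64
TOP_K = 2

def route(gate_scores, k=TOP_K):
    """Pick the k experts with the highest gate scores for one token."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    return ranked[:k]

rng = random.Random(0)
# Stand-in for a learned gating network's scores (assumption for the sketch).
scores = [rng.random() for _ in range(NUM_EXPERTS)]
active = route(scores)
print(f"experts used: {active} ({len(active)} of {NUM_EXPERTS})")

# Arithmetic from the figures quoted above: about 8% of 1.2 trillion parameters.
active_params = 0.08 * 1.2e12
print(f"~{active_params / 1e9:.0f} billion parameters active per token")
```

The 8% figure is larger than 2/64 (about 3%), presumably because part of the network is shared by every token rather than split among the experts.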

Google said that GLaM uses the same computing resources as those required to train GPT-3, but due to improvements in training software and hardware, the energy consumption is only 1/3 of the latter. The computing resources required for inference are half of those of GPT-3. In addition, GLaM also performs better than GPT-3 when trained on the same amount of data.

However, for further improvements, even these more energy-efficient LLMs appear destined to become larger and use more data and computation.

Reference materials:

https://www.nature.com/articles/d41586-023-00641-w
