Hot paper creates the prototype of 'Westworld': 25 AI agents grow freely in the virtual town

Can we create a world? In that world, robots can live, work, and socialize like humans, replicating all aspects of human society.

This idea is vividly realized in the TV series "Westworld": many robots with pre-installed storylines are placed in a theme park, where they behave like humans, remembering things they see, people they meet, and words they say. Each day, the robots are reset and returned to their core storyline.

Stills from "Westworld", the character on the left is a robot pre-installed with a storyline.

Now stretch your imagination: what would it take today to turn a large language model like ChatGPT into the master of Westworld?

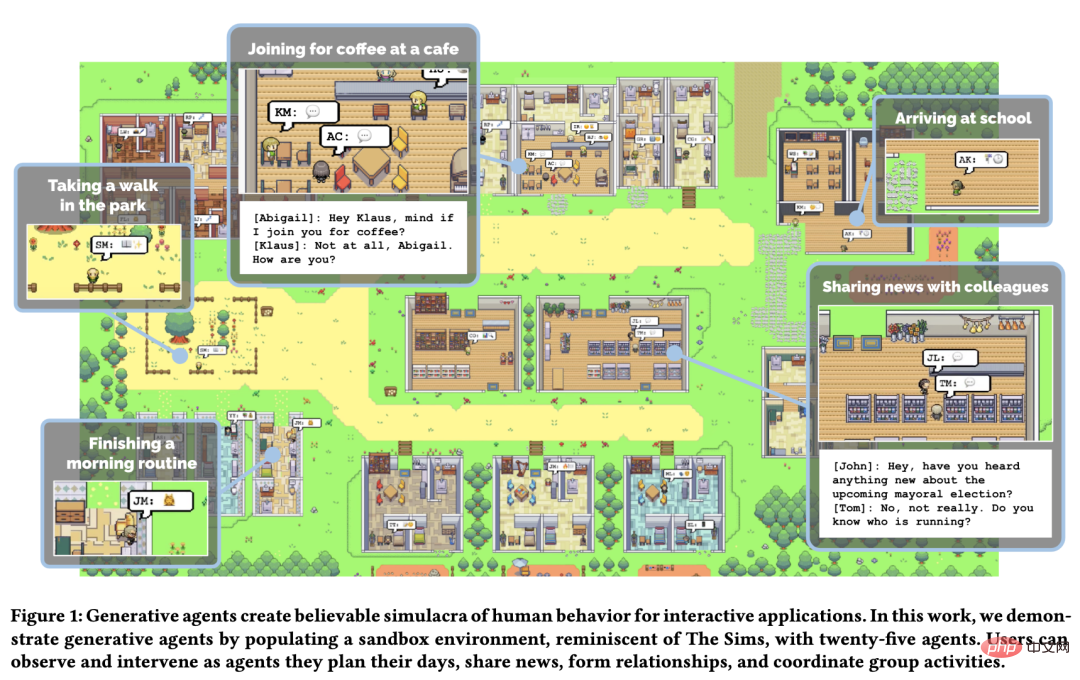

In a recent popular paper, researchers built a "virtual town" inhabited by 25 AI agents. The agents not only engage in complex behaviors (such as hosting a Valentine's Day party), but their behavior is also more believable than human role-playing.

- Paper link: https://arxiv.org/pdf/2304.03442v1.pdf

- Demo address: https://reverie.herokuapp.com/arXiv_Demo/

From sandbox games like "The Sims" to cognitive models and virtual environments, researchers have for more than 40 years envisioned intelligent agents that exhibit believable human behavior. In these scenarios, computationally driven agents behave consistently with their past experiences and respond believably to their environment. Such simulations of human behavior can populate virtual spaces and communities with realistic social phenomena, train people to handle rare but difficult interpersonal situations, test social science theories, produce model human processors for theory and usability testing, power ubiquitous computing applications and social robots, and lay the foundation for NPCs that navigate complex human relationships in an open world.

But the space of human behavior is vast and complex. Although large language models can simulate believable human behavior at a single point in time, long-term consistency requires an architecture that manages an ever-growing memory as new interactions, conflicts, and events emerge and fade over time, while also handling the cascading social dynamics that unfold between multiple agents.

If a method can retrieve relevant events and interactions over a long period, reflect on those memories to generalize and draw higher-level inferences, and apply that reasoning to create plans and reactions that make sense for both the agent's current situation and its long-term behavior, then the dream is not far from being realized.

This new paper introduces "Generative Agents", agents that use generative models to simulate believable human behavior, and demonstrates that they produce believable simulations of both individual and emergent group behavior:

- Ability to make broad inferences about oneself, other agents, and the environment;

- Ability to create daily plans that reflect one's own characteristics and experiences, execute these plans, react, and re-plan when appropriate;

- Ability to respond when commanded in natural language.

Behind "Generative Agents" is a new agent architecture that stores, synthesizes, and applies relevant memories, using a large language model to generate believable behavior.

For example, "Generative Agents" will turn off the stove if they see their breakfast burning; if someone is in the bathroom, they will wait outside; if they meet another agent they want to talk to, they will stop and chat. A society full of "Generative Agents" is marked by emergent social dynamics, in which new relationships form, information spreads, and coordination arises among agents.

Specifically, the researchers list several contributions in the paper:

- Generative Agents, a believable simulation of human behavior that adjusts dynamically to the agent's changing experience and environment;

- A novel architecture that makes it possible for Generative Agents to remember, retrieve, reflect, interact with other agents, and plan through dynamically evolving circumstances. The architecture leverages the powerful prompting capabilities of large language models and supplements them to support the agent's long-term consistency, the management of dynamically evolving memory, and the recursive generation of higher-level reflections;

- Two evaluations (a controlled evaluation and an end-to-end evaluation) that establish how much each component of the architecture contributes and identify failures caused by, for example, improper memory retrieval;

- A discussion of the opportunities and the ethical and social risks of Generative Agents in interactive systems. The researchers argue that these agents should be tuned to mitigate the risk of users forming parasocial relationships, logged to mitigate the risks posed by deepfakes and tailored persuasion, and applied in ways that complement rather than replace humans.

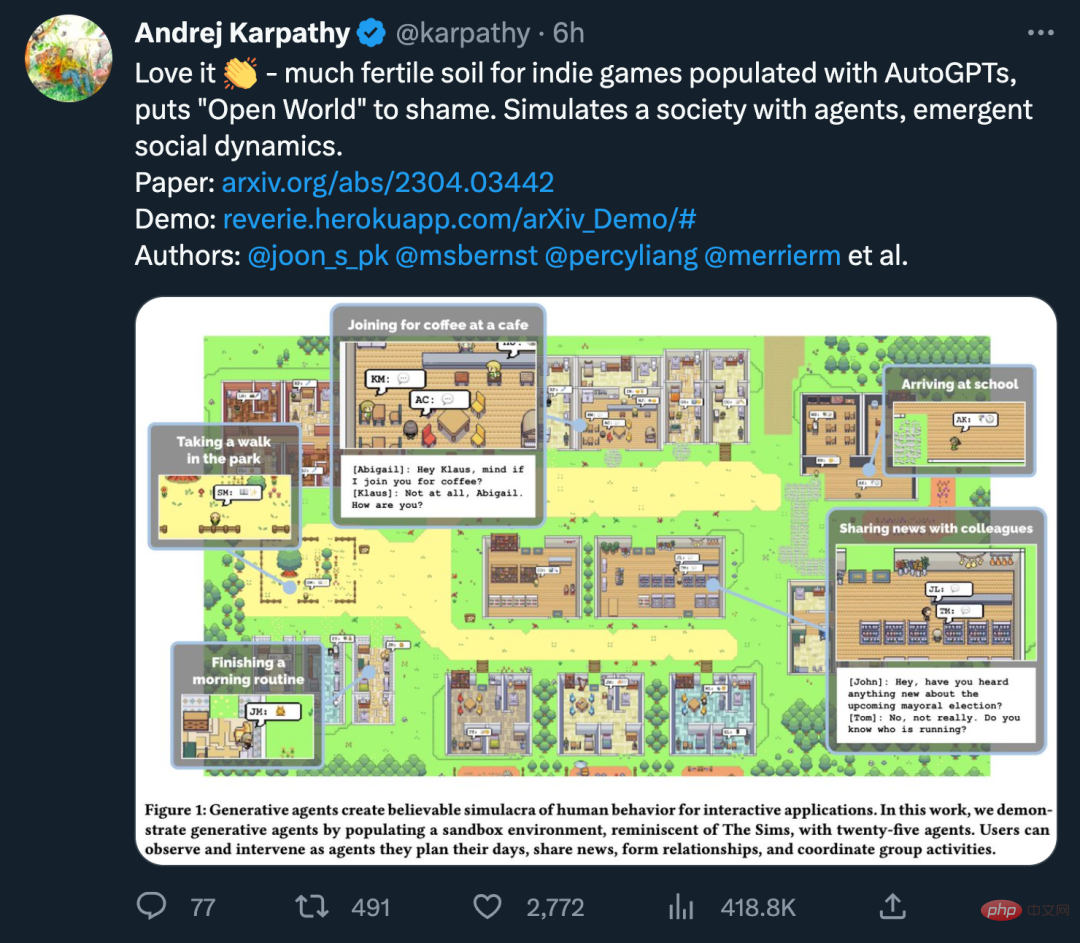

Once the paper was published, it sparked heated discussion across the Internet. Karpathy, who was already optimistic about the direction of "AutoGPT", praised it repeatedly, saying that "Generative Agents" goes well beyond the "Open World" concept he had toyed with before.

Some researchers assert that the release of this research means that "large language models have achieved a new milestone".

"Generative Agents" behavior and interaction

To make “Generative Agents” more concrete, the study instantiates them as characters in a sandbox world.

Twenty-five agents live in a small town called Smallville, each represented by a simple avatar. All characters can:

- Communicate with others and the environment;

- Remember and recall what they have done and observed;

- Reflect on these observations;

- Make a daily plan.

The researchers used natural language to describe the identity of each agent, including its occupation and its relationships with other agents, and used this information as seed memory. For example, the agent John Lin has the following description (excerpted here):

"John Lin is a drugstore owner who is willing to help others. He is always looking for ways to make it easier for customers to get their medication. John Lin's wife is university professor Mei Lin, and they live together with their son Eddy Lin, who studies music theory; John Lin loves his family very much; John Lin has known the old couple next door, Sam Moore and Jennifer Moore, for several years..."

After the identity is set, the next step is how the agent interacts with the world.

At each step of the sandbox, the agents output a natural language statement describing their current action, such as "Isabella Rodriguez is writing a diary" or "Isabella Rodriguez is checking mail". These statements are then translated into concrete actions that affect the sandbox world. Actions are displayed on the sandbox interface as a set of emojis that provide an abstract representation of the action.

To achieve this, the research employs a language model that converts actions into a set of emojis that appear in a dialog box above each agent avatar. For example, "Isabella Rodriguez is writing in her diary" and "Isabella Rodriguez is checking emails" are each displayed as a short emoji sequence. Additionally, a full natural language description can be accessed by clicking on the agent avatar.

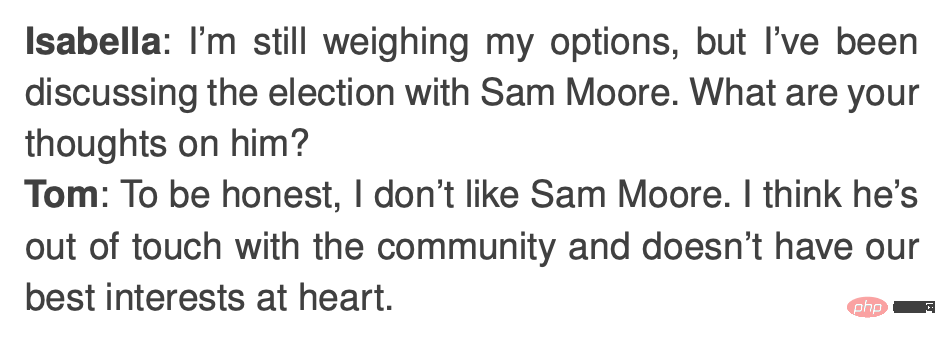

Agents communicate in natural language. If an agent notices other agents nearby, it decides whether to walk over and chat. For example, Isabella Rodriguez and Tom Moreno had a conversation about the upcoming election.

In addition, users can specify what role they play when talking to an agent. For example, a user who takes on the role of a reporter can ask the agent about news.

Interaction between the agent and the environment

Smallville has many areas, including cafes, bars, parks, schools, dormitories, houses, and shops. Each area also contains its own sub-areas and objects, such as a kitchen in a house and a stove in the kitchen (Figure 2). The agents' living spaces also contain beds, tables, wardrobes, shelves, bathrooms, and kitchens.

Agents can move around Smallville, enter or leave buildings, navigate the map, and even approach other agents. An agent's movement is controlled by the Generative Agents architecture together with the sandbox game engine: when the model instructs the agent to move to a certain location, the system computes a walking path to that destination in the Smallville environment, and the agent starts moving.
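The article does not say how the walking path is computed. As a minimal sketch, assuming the town is a grid of tiles in which `#` marks a wall, a breadth-first search is one simple way to find a shortest walkable route. The function name `shortest_path` and the tiny `town` map are hypothetical and only illustrate the idea.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a tile grid; '#' marks a blocked tile.

    Returns the list of (row, col) tiles from start to goal, or None
    if the goal cannot be reached. This is an illustrative stand-in,
    not the path-finding method used in the paper.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}  # tile -> tile we arrived from
    while queue:
        current = queue.popleft()
        if current == goal:
            break
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = current
                queue.append((nr, nc))
    if goal not in came_from:
        return None
    # Walk backwards from the goal to rebuild the route.
    path = []
    node = goal
    while node is not None:
        path.append(node)
        node = came_from[node]
    return path[::-1]


# Hypothetical 3x4 map: a wall separates the two upper rooms.
town = [
    "..#.",
    "..#.",
    "....",
]
path = shortest_path(town, (0, 0), (0, 3))
```

In this toy map the agent has to walk down to the bottom row, cross under the wall, and walk back up, so the route visits eight tiles.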

In addition, users and agents can change the state of objects in the environment. For example, a bed is occupied while an agent is sleeping in it, and the refrigerator may be empty once an agent has finished making breakfast. End users can also rewrite an agent's environment through natural language. For example, if the user sets the shower's status to leaking when Isabella enters the bathroom, Isabella will fetch tools from the living room and try to fix the leak.

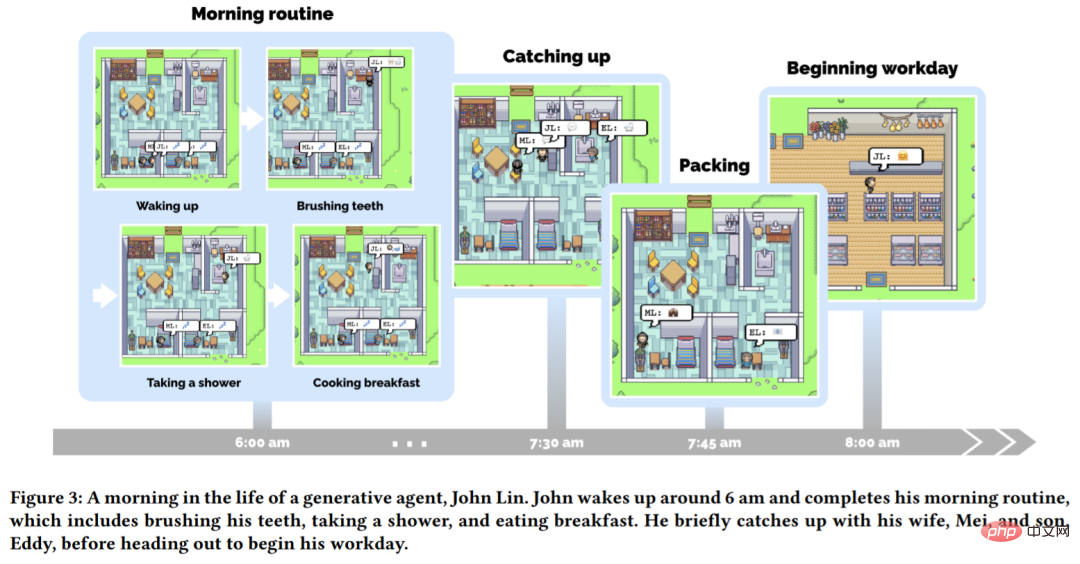

A day in the life of an agent

Starting from a description, the agent begins to plan a day’s life. As time passes in the sandbox world, the behavior of the agents gradually changes as they interact with each other, the world, and the memories they establish. The picture below shows a day in the life of drugstore owner John Lin.

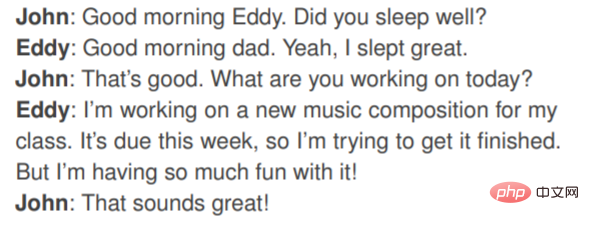

In this family, John Lin is the first to get up, at seven in the morning. He brushes his teeth, takes a shower, gets dressed, eats breakfast, and then browses the news at the dining table in the living room. At 8 a.m., John's son Eddy also gets up and gets ready for class. Before leaving, he has a brief conversation with John.

Not long after Eddy leaves, his mother Mei also wakes up. When Mei asks about their son, John recalls the conversation he has just had with Eddy and draws on it in his reply.

Social ability

In addition, "Generative Agents" also show the emergence of social behaviors. By interacting with each other, "Generative Agents" exchange information and form new relationships in the Smallville environment. These social behaviors are natural and not predetermined. For example, when an agent notices the presence of the other party, a conversation may occur, and conversation information can be spread between agents.

Let’s look at a few examples:

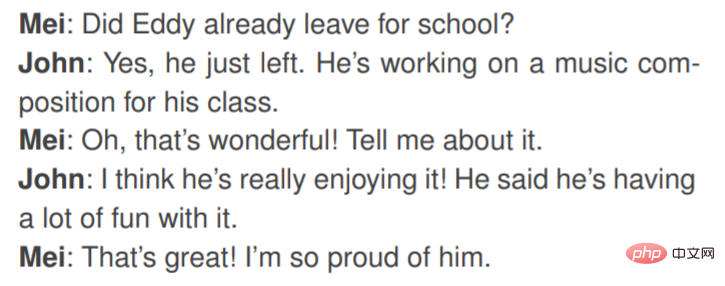

Information dissemination. When agents notice each other, they may engage in conversation, and in doing so information can pass from one agent to another. For example, in a conversation between Sam and Tom at the grocery store, Sam tells Tom about his candidacy in the local election.

Later that day, after Sam leaves, Tom and John, who heard the news from another source, discuss Sam's chances of winning the election.

Gradually, Sam's candidacy became the talk of the town, with some supporting him and others hesitant.

Relationship memory. Over time, the agents in the town form new relationships and remember their interactions with other agents. For example, Sam didn't know Latoya Williams at first. While walking in Johnson Park, Sam met Latoya and they introduced themselves to each other. Latoya mentioned that she was working on a photography project: "I am here to take photos for a project that I am working on." In a later interaction, Sam and Latoya showed that they remembered this event, with Sam asking: "Latoya, how is your project going?" Latoya replied: "It's going great!"

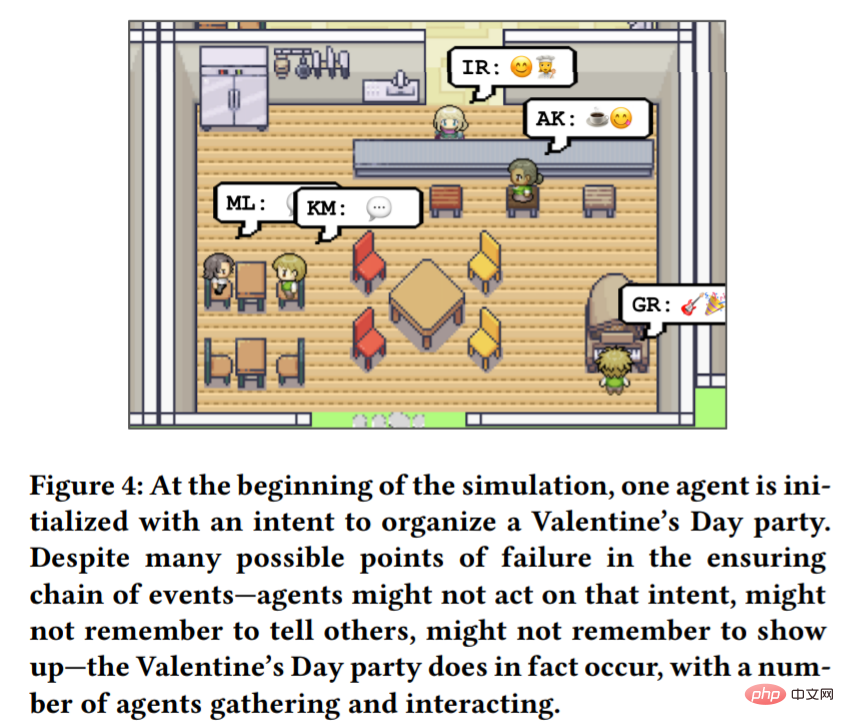

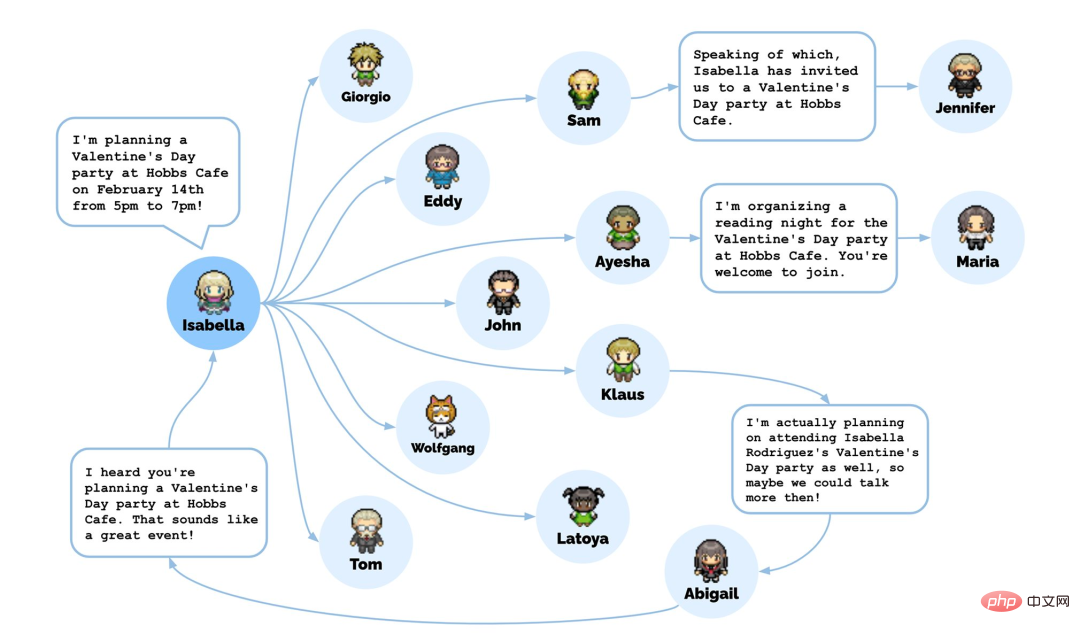

Coordination ability. Isabella Rodriguez, who owns Hobbs Cafe, is initialized with the intent to host a Valentine's Day party on Feb. 14 from 5 to 7 p.m. From this seed, Isabella extends invitations whenever she meets friends and customers at Hobbs Cafe or elsewhere. On the afternoon of the 13th, Isabella begins decorating the café. Maria, a regular customer and close friend of Isabella's, comes to the café. Isabella asks Maria to help decorate for the party, and Maria agrees. Maria's character description says that she likes Klaus. That night, Maria invites her crush, Klaus, to the party with her, and Klaus happily accepts.

On Valentine’s Day, five agents, including Klaus and Maria, showed up at Hobbs Café at 5 pm to enjoy the festivities (Figure 4). In this scenario, the end-user only sets Isabella’s initial intention of hosting a party and Maria’s infatuation with Klaus: the social behaviors of spreading information, decorating, asking each other out, arriving at the party, and interacting at the party are initiated by the agent architecture.

Architecture

Generative Agents need a framework to guide their behavior in the open world, one designed to let them interact with other agents and react to changes in the environment.

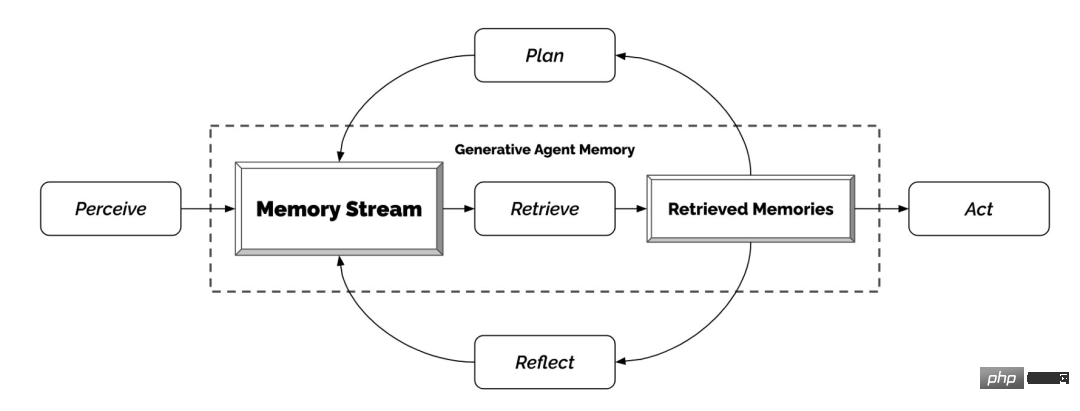

Generative Agents take their current environment and past experience as input and generate behavior as output. The architecture of Generative Agents combines large language models with mechanisms to synthesize and retrieve relevant information to regulate the output of the language model.

Without synthesis and retrieval mechanisms, a large language model can still output behavior, but the resulting agents may not react based on their past experience, may fail to make important inferences, and may not maintain long-term coherence. Even with the current best-performing models (e.g. GPT-4), challenges with long-term planning and coherence remain.

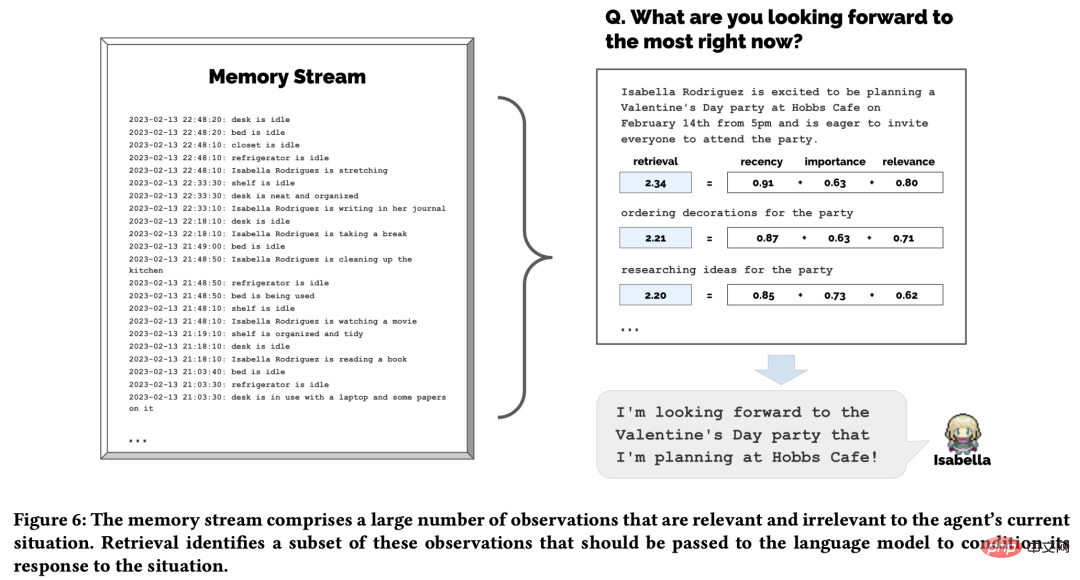

Because Generative Agents generate a large stream of events and memories that must be retained, a core challenge of the architecture is ensuring that the most relevant parts of the agent's memory are retrieved and synthesized when needed.

The architectural center of Generative Agents is the memory stream - a database that comprehensively records the agent's experience. The agent retrieves relevant records from the memory stream to plan the agent's action behavior and respond appropriately to the environment, and each behavior is recorded to recursively synthesize higher-level behavioral guidance. Everything in the Generative Agents architecture is recorded and reasoned in the form of natural language descriptions, allowing agents to take advantage of the reasoning capabilities of large language models.
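To make the idea of a memory stream concrete, here is a minimal sketch of such a record store. The class and field names (`MemoryRecord`, `MemoryStream`, `kind`, `importance`) are assumptions made for illustration; the article only states that every experience is stored as a natural language description.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class MemoryRecord:
    description: str              # natural language description of the event
    created_at: datetime          # simulation time at which it was recorded
    kind: str = "observation"     # hypothetical tag: observation, reflection, plan
    importance: float = 1.0       # hypothetical score, higher means more notable


@dataclass
class MemoryStream:
    """Append-only log of everything an agent experiences."""
    records: list = field(default_factory=list)

    def add(self, description, created_at, kind="observation", importance=1.0):
        record = MemoryRecord(description, created_at, kind, importance)
        self.records.append(record)
        return record

    def recent(self, n):
        """Return the n most recently added records, oldest first."""
        return self.records[-n:] if n > 0 else []


stream = MemoryStream()
stream.add("Isabella Rodriguez is writing in her diary", datetime(2023, 2, 13, 9, 0))
stream.add("Isabella Rodriguez is checking emails", datetime(2023, 2, 13, 9, 30))
stream.add(
    "Isabella enjoys organizing events for her customers",
    datetime(2023, 2, 13, 12, 0),
    kind="reflection",
    importance=7.0,
)
latest = stream.recent(2)
```

Keeping observations, reflections, and plans in one list of plain-text records is what lets a retrieval step hand any mix of them to the language model as prompt context.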

Currently, the implementation uses the gpt3.5-turbo version of ChatGPT. The research team anticipates that the architectural foundations of Generative Agents (memory, planning, and reflection) are likely to remain unchanged. Newer language models (such as GPT-4) offer better expressiveness and performance, which will further extend what Generative Agents can do.

Memory and retrieval

The architecture of Generative Agents implements a retrieval function that takes the agent's current situation as input and returns a subset of the memory stream to pass to the language model. There are many possible implementations of the retrieval function, depending on which factors matter to the agent when deciding how to act.
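As one possible implementation, the sketch below scores each memory on three assumed factors: recency (exponential decay since last access), importance (a stored score), and relevance (cosine similarity between embeddings). The equal weighting, the decay rate of 0.99 per hour, the 1 to 10 importance scale, and the two-dimensional toy embeddings are all illustrative assumptions, not values taken from the article.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    last_access_hour: float   # simulation hour when last retrieved or created
    importance: float         # assumed scale of 1 (mundane) to 10 (significant)
    embedding: tuple          # toy vector; a real system would use a text encoder


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(memories, query_embedding, now_hour, k=2, decay=0.99):
    """Return the k memories with the highest combined score."""
    def score(memory):
        recency = decay ** (now_hour - memory.last_access_hour)
        importance = memory.importance / 10
        relevance = cosine(memory.embedding, query_embedding)
        return recency + importance + relevance  # equal weights, by assumption

    return sorted(memories, key=score, reverse=True)[:k]


memories = [
    Memory("Eddy is working on a music composition", 8.0, 6, (1.0, 0.0)),
    Memory("John ate breakfast", 7.5, 2, (0.0, 1.0)),
    Memory("Sam is running in the local election", 2.0, 8, (0.0, 1.0)),
]
# Hypothetical query: "What is Eddy doing?", embedded close to the first memory.
top = retrieve(memories, query_embedding=(1.0, 0.0), now_hour=9.0)
```

With these numbers the memory about Eddy ranks first because it is both recent and relevant, while the election memory takes second place on importance alone despite being older.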

Reflection

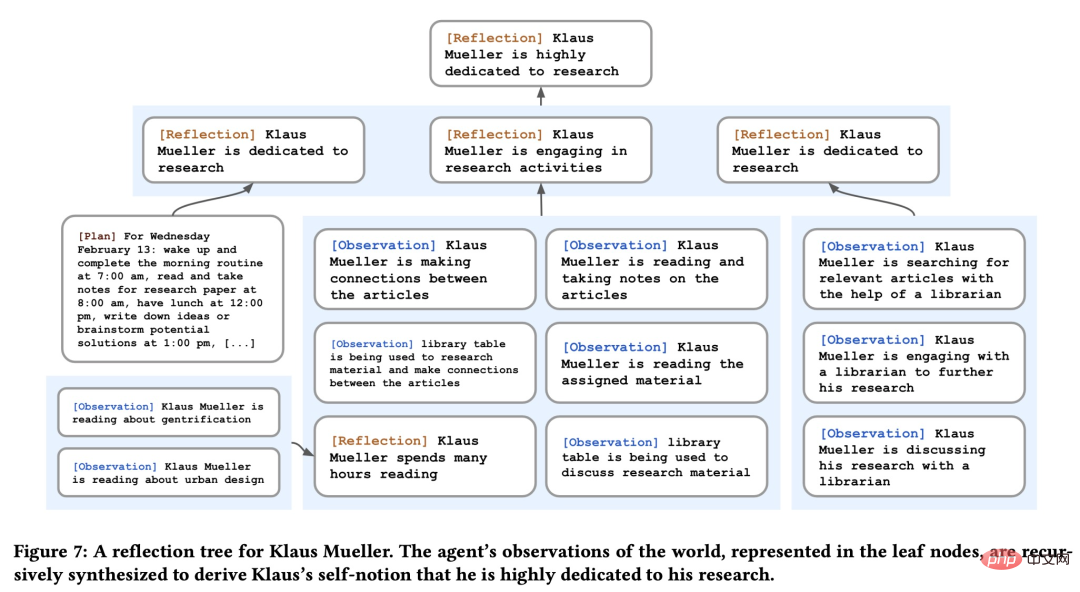

The study also introduced a second type of memory called "reflection." Reflections are higher-level, more abstract thoughts generated by an agent. Reflection is generated periodically. In this study, the agent will only start to reflect when the sum of its importance scores for recent events exceeds a certain threshold.

In fact, the Generative Agents proposed in the study reflected about two to three times a day. The first step in reflection is for the agent to determine what to reflect on by identifying questions that can be asked based on the agent's recent experiences.
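The trigger described above can be sketched as a running sum that is compared against a threshold. The class name `ReflectionTrigger`, the reset-after-firing behavior, and the threshold values are assumptions for illustration; the article only says that reflection starts once the summed importance of recent events exceeds some threshold.

```python
class ReflectionTrigger:
    """Accumulates importance scores and signals when to reflect."""

    def __init__(self, threshold=150.0):
        self.threshold = threshold   # hypothetical default value
        self.accumulated = 0.0

    def observe(self, importance):
        """Record one event; return True if the agent should reflect now."""
        self.accumulated += importance
        if self.accumulated > self.threshold:
            self.accumulated = 0.0   # assumption: start counting afresh
            return True
        return False


# Small threshold so the example fires after a handful of events.
trigger = ReflectionTrigger(threshold=10)
fired = [trigger.observe(score) for score in [3, 4, 2, 5, 1]]
```

Here the fourth event pushes the running sum from 9 to 14, so reflection is triggered once and the counter starts over.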

Planning and Reaction

A plan describes the sequence of an agent's future actions and helps the agent keep its behavior consistent over time. Each plan entry should include a location, a start time, and a duration.

To create a reasonable plan, Generative Agents recursively generate more detail from the top down. The first step is to create a plan that roughly outlines the agent's "schedule" for the day. To create this initial plan, the study prompts the language model with a general description of the agent (e.g., name, characteristics, and a summary of its recent experiences).
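The top-down refinement can be sketched as follows. A plan entry carries the three fields named above (location, start time, duration), and `decompose` splits one entry into finer-grained ones. In the real system a language model would write each sub-step; here the hypothetical `describe` callback stands in for that call, and the chunk sizes are arbitrary.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PlanEntry:
    description: str
    location: str
    start: datetime
    duration: timedelta


def decompose(entry, chunk, describe):
    """Split one plan entry into consecutive entries of at most `chunk` length.

    `describe(entry, index)` is a placeholder for a language-model call
    that would produce the text of each finer-grained step.
    """
    if chunk <= timedelta(0):
        raise ValueError("chunk must be a positive duration")
    pieces = []
    offset = timedelta(0)
    index = 0
    while offset < entry.duration:
        length = min(chunk, entry.duration - offset)
        pieces.append(
            PlanEntry(describe(entry, index), entry.location,
                      entry.start + offset, length)
        )
        offset += length
        index += 1
    return pieces


def label(entry, index):
    # Stand-in for generated text.
    return f"{entry.description} (part {index + 1})"


# Hypothetical broad-strokes entry for John Lin's morning.
morning = PlanEntry("work at the drugstore", "drugstore",
                    datetime(2023, 2, 13, 9, 0), timedelta(hours=3))
hourly = decompose(morning, timedelta(hours=1), label)
detailed = [step for hour in hourly
            for step in decompose(hour, timedelta(minutes=15), label)]
```

Applying `decompose` twice turns one three-hour block into three hourly entries and then into twelve 15-minute steps, each of which keeps the location and covers a contiguous slice of the original time span.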

In the process of executing planning, Generative Agents sense the surrounding environment, and the perceived observations are stored in their memory streams. The study uses these observations to prompt language models to decide whether agents should continue with their current plans or react differently.

Experimentation and Evaluation

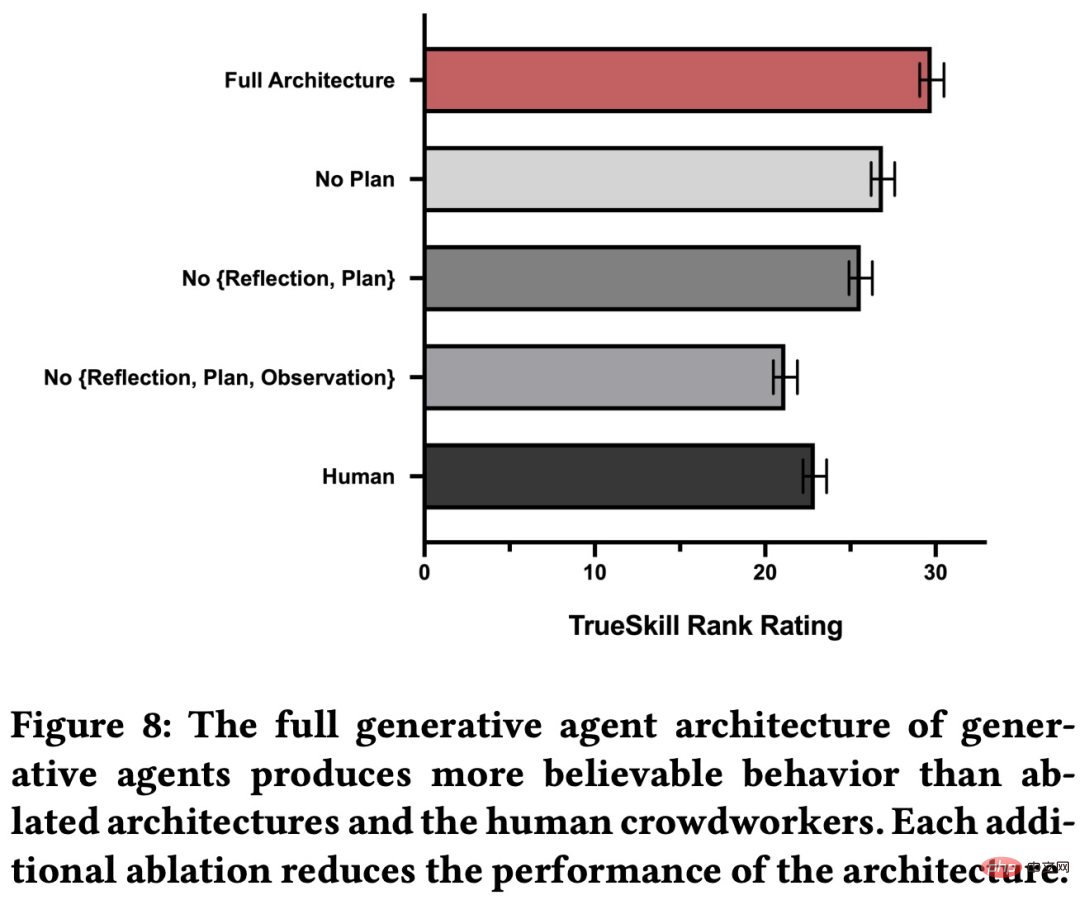

The study conducted two evaluations of Generative Agents: a controlled evaluation to test whether individual agents generate believable behavior on their own, and an end-to-end evaluation in which multiple Generative Agents interact open-endedly over two days of game time, to understand their stability and emergent social behavior.

For example, Isabella plans to host a Valentine's Day party. She spreads the word, and by the end of the simulation 12 characters know about it. Seven of them were "undecided": three had other plans, and four had not expressed their thoughts, much as happens among people.

In the technical evaluation, the researchers "interview" the agents in natural language to assess their ability to maintain their "personality", remember, plan, react, and reflect accurately, and they also run ablation experiments. The results show that each of these components is critical for the agents to perform well.

In experimental evaluations, the most common errors made by agents include:

- Failing to retrieve relevant memories;

- Fabricating or embellishing the agent's memories;

- "Inheriting" overly formal speech or behavior from the language model.

Interested readers can read the original text of the paper to learn more about the research details.
