Continuous reversals! DeepMind was questioned by the Russian team: How can we prove that neural networks understand the physical world?

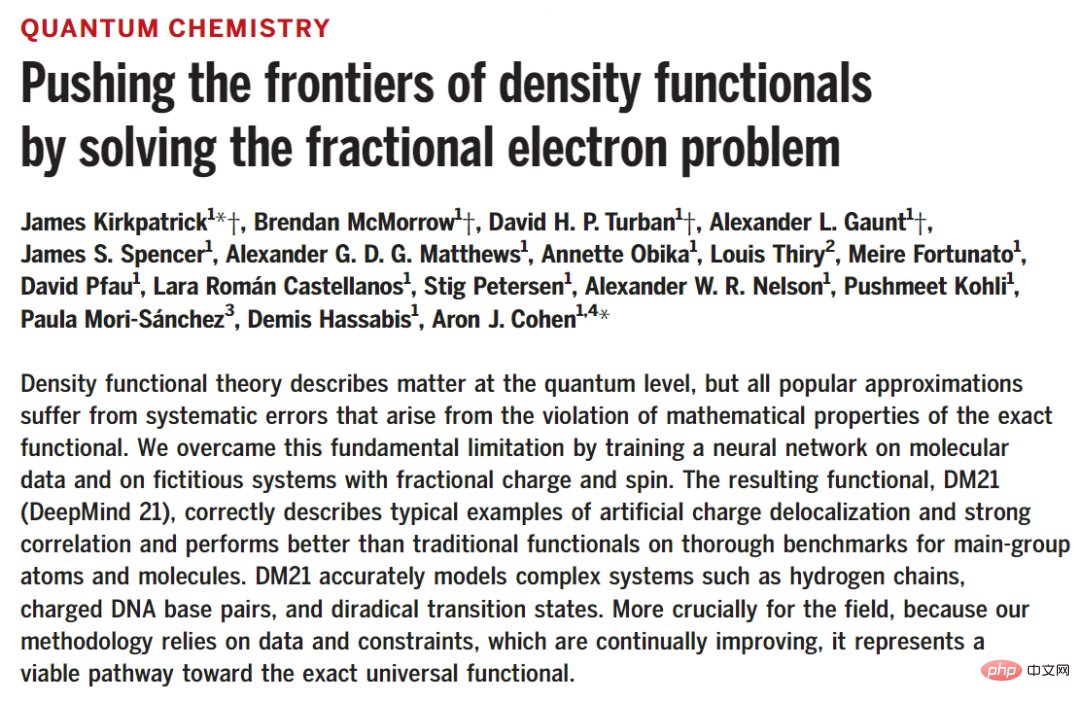

Recently another controversy has arisen in the scientific community. At its center is a Science paper published in December 2021 by DeepMind's research team in London. The researchers showed that a neural network can be trained to learn a more accurate mapping between electron density and interaction energy than earlier approaches, which effectively addresses systematic errors in traditional density functional theory.

Paper link: https://www.science.org/doi/epdf/10.1126/science.abj6511

The DM21 model proposed in the paper accurately models complex systems such as hydrogen chains, charged DNA base pairs, and diradical transition states. For quantum chemistry, it arguably opens a feasible technical route toward an accurate universal functional.

DeepMind also released the DM21 code so that other researchers could reproduce the results.

Repository link: https://github.com/deepmind/deepmind-research

In principle, with both the paper and the code public and the work published in a top journal, the experimental results and conclusions should be broadly reliable.

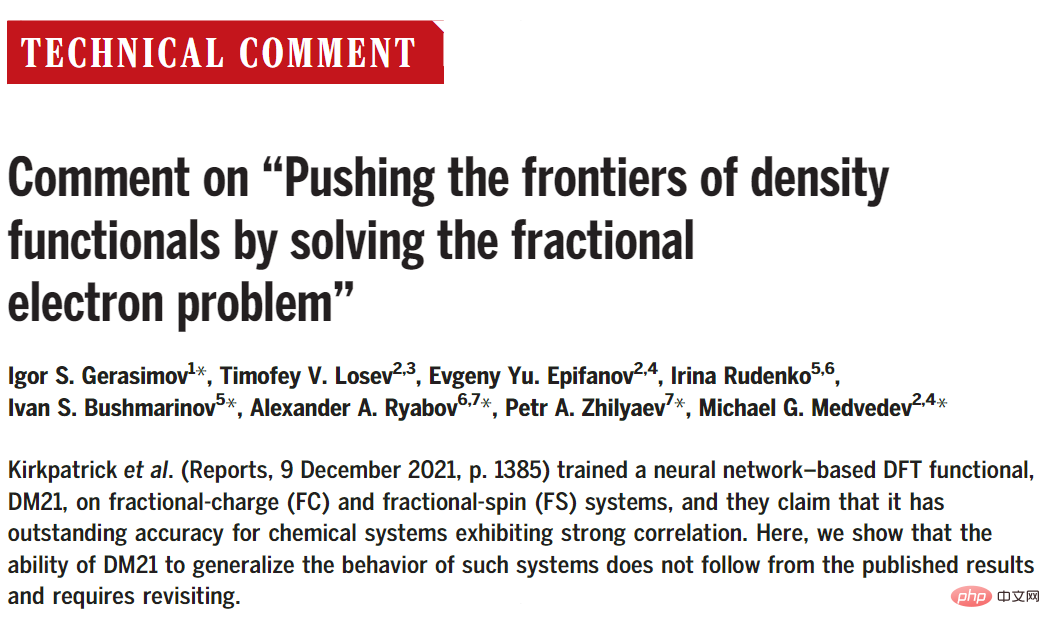

Eight months later, however, eight researchers from Russia and South Korea published a comment in Science arguing that DeepMind's original study had a problem: the training set and the test set may overlap, which would make the experimental conclusions incorrect.

Paper link: https://www.science.org/doi/epdf/10.1126/science.abq3385

If this suspicion is correct, the improvements credited to the neural network in DeepMind's paper, hailed as a major technological breakthrough for chemistry, may actually stem from data leakage.
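To make the idea of train/test overlap concrete, here is a minimal sketch of a near-duplicate check. It assumes each system can be summarized as a numeric feature vector and uses a hypothetical tolerance `tol`; it is only an illustration of the concept, not how the Comment authors or DeepMind actually measured overlap.

```python
import math
from typing import Sequence


def overlap_fraction(train: Sequence[Sequence[float]],
                     test: Sequence[Sequence[float]],
                     tol: float) -> float:
    """Return the fraction of test items that lie within Euclidean distance
    `tol` of at least one training item (a crude near-duplicate check)."""
    if not test:
        return 0.0
    flagged = 0
    for t in test:
        # math.dist (Python 3.8+) is the Euclidean distance between two points.
        if any(math.dist(t, x) <= tol for x in train):
            flagged += 1
    return flagged / len(test)


# Hypothetical feature vectors, for illustration only.
train_set = [(0.0, 1.0), (2.0, 2.0)]
test_set = [(0.0, 1.05), (5.0, 5.0)]
print(overlap_fraction(train_set, test_set, tol=0.1))  # 0.5
```

A test score computed over such flagged items says little about generalization, because the model may simply be recalling what it saw during training.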

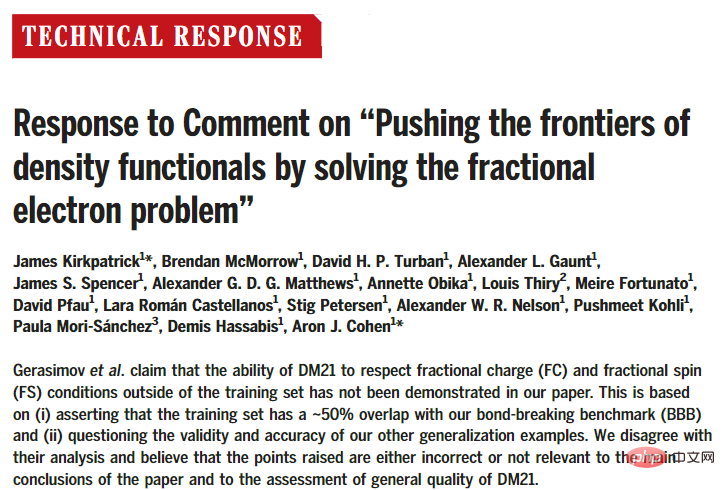

DeepMind, however, responded quickly. On the same day the comment appeared, it published a reply firmly rejecting it: the points raised, it said, are either incorrect or irrelevant to the paper's main conclusions and to the assessment of DM21's overall quality.

Paper link: https://www.science.org/doi/epdf/10.1126/science.abq4282

The physicist Richard Feynman once said that scientists should try to prove themselves wrong as quickly as possible, because only in that way can progress be made.

Although the debate has not been settled, and the Russian team has not yet published a further rebuttal, the episode may have a deeper impact on artificial intelligence research. It raises the question: how can you prove that a neural network you have trained truly understands the task, rather than merely memorizing patterns?

Research Question

Chemistry is the central science of the 21st century (we are convinced of it). Designing new materials with specified properties, for example to produce clean electricity or to develop high-temperature superconductors, requires simulating electrons on a computer. Electrons are subatomic particles that govern how atoms bond to form molecules, and they are also responsible for the flow of electricity in solids. Knowing where the electrons are in a molecule goes a long way toward explaining its structure, properties, and reactivity.

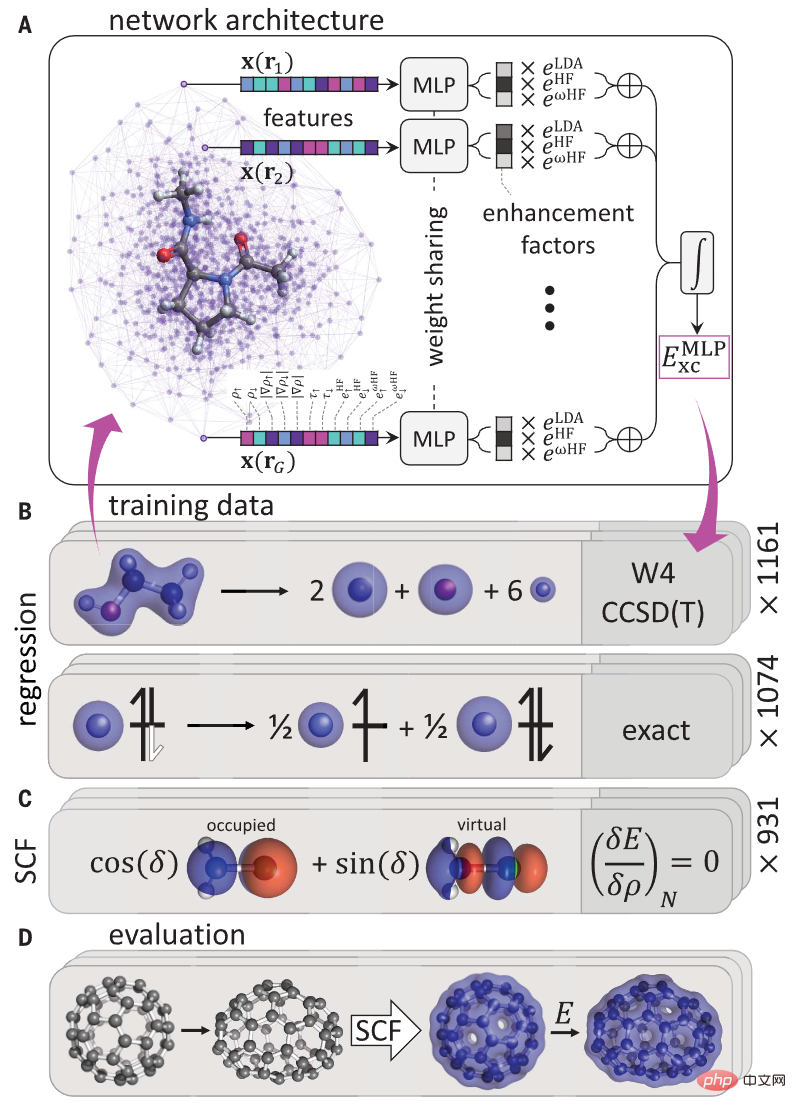

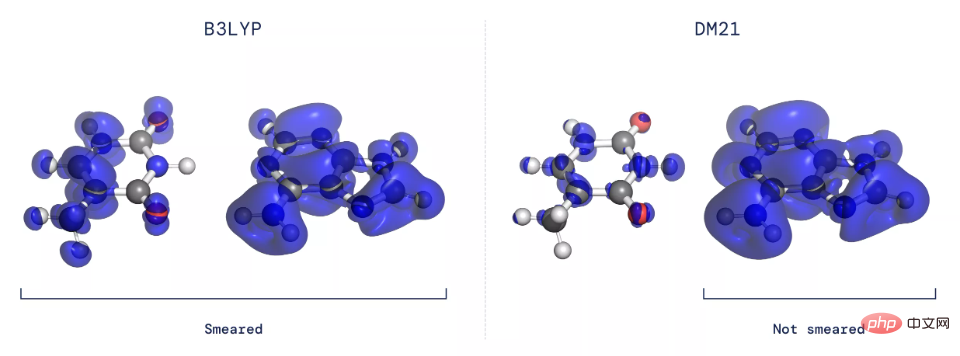

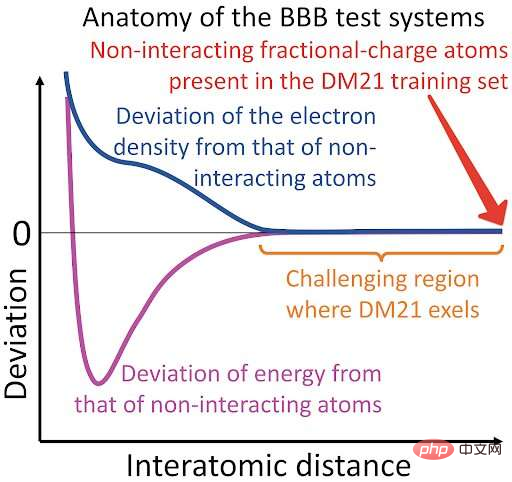

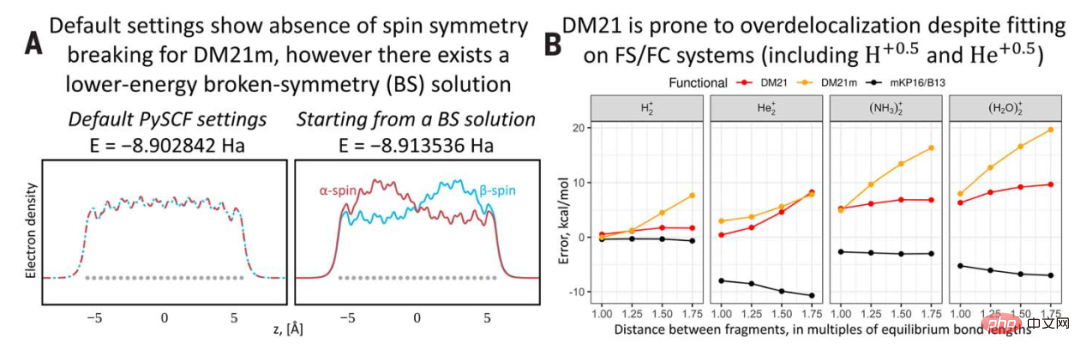

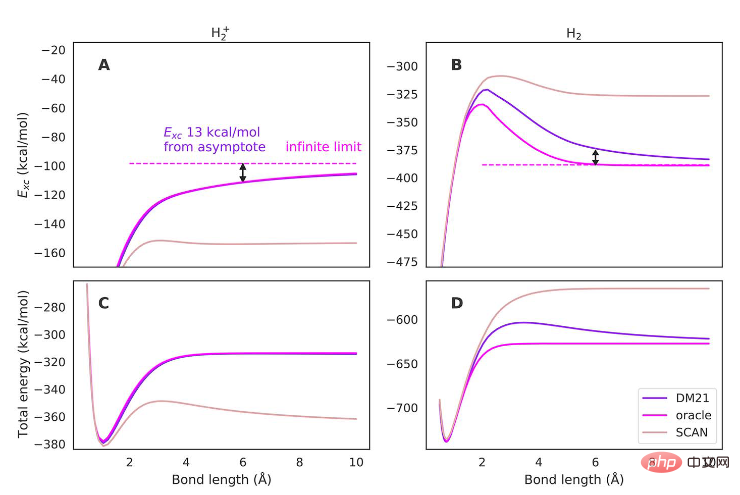

In 1926, Schrödinger proposed his equation, which correctly describes the quantum behavior of the wave function. Using it to predict the behavior of electrons in a molecule is impractical, however, because all electrons repel one another and the probability of each electron's position must be tracked, a very complicated task even for a small number of electrons.

A major breakthrough came in the 1960s, when Pierre Hohenberg and Walter Kohn realized that there is no need to track each electron individually. Instead, knowing the probability of any electron being at each position (that is, the electron density) is enough to calculate all interactions exactly. This result founded density functional theory (DFT), and Kohn later received the Nobel Prize in Chemistry for it. Although DFT proves that such a mapping exists, for more than 50 years the exact form of the mapping between electron density and interaction energy, the so-called density functional, has remained unknown and must be approximated.

DFT is essentially a method of solving the Schrödinger equation, and its accuracy depends on its exchange-correlation part. Although it involves a degree of approximation, DFT is the only practical way to study how and why matter behaves as it does at the microscopic level, and it has therefore become one of the most widely used techniques across all fields of science.

Over the years, researchers have proposed more than 400 approximate functionals of varying accuracy, but all of them suffer from systematic errors because they fail to capture some key mathematical properties of the exact functional. And learning an approximate function is exactly what neural networks are good at.
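As a deliberately simple illustration of what "a functional that maps a density to an energy" means, the sketch below evaluates the local density approximation (LDA) for exchange, one of the oldest approximate functionals, on the exact hydrogen-atom density. This is not DM21 and not a full DFT calculation; the radial cutoff and grid size are arbitrary choices made for the example.

```python
import math

# Dirac/Slater exchange constant for a spin-unpolarized density (atomic units).
C_X = -0.75 * (3.0 / math.pi) ** (1.0 / 3.0)


def hydrogen_1s_density(r: float) -> float:
    """Exact ground-state density of the hydrogen atom: rho(r) = exp(-2r) / pi."""
    return math.exp(-2.0 * r) / math.pi


def lda_exchange_energy(density, r_max: float = 30.0, n: int = 30000) -> float:
    """E_x^LDA[rho] = C_X * integral of rho(r)^(4/3) over all space, for a
    spherically symmetric density, using the trapezoidal rule on a radial grid."""
    h = r_max / n
    total = 0.0
    for i in range(n + 1):
        r = i * h
        integrand = 4.0 * math.pi * r * r * density(r) ** (4.0 / 3.0)
        weight = 0.5 if i in (0, n) else 1.0  # trapezoidal end-point weights
        total += weight * integrand
    return C_X * total * h


e_x = lda_exchange_energy(hydrogen_1s_density)
print(f"LDA exchange energy of H: {e_x:.4f} hartree")
```

For this density the integral also has a closed form, E_x = -(81/256) * 3^(1/3) / pi^(2/3), roughly -0.213 hartree. The exact exchange energy of a one-electron system must cancel its Coulomb self-repulsion, which for hydrogen is 5/16 = 0.3125 hartree, so the LDA leaves part of that self-interaction uncancelled: a small example of the systematic errors described above.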
In this paper, DeepMind trained a neural network, DM21 (DeepMind 21), that learned a functional free of these systematic errors: it avoids delocalization error and spin-symmetry breaking and better describes a wide range of chemical reaction classes. In principle, any chemical or physical process that involves moving charge is prone to delocalization error, and any process that involves breaking bonds is prone to spin-symmetry breaking. Charge movement and bond breaking are at the heart of many important technological applications, and these problems can also cause numerous qualitative failures in describing even the simplest molecules, such as hydrogen.

The model is a multilayer perceptron (MLP) whose inputs are local and non-local features of the occupied Kohn-Sham (KS) orbitals. The objective function has two terms: a regression loss for learning the exchange-correlation energy itself, and a gradient regularization term that ensures the functional derivative can be used in self-consistent field (SCF) calculations after training. For the regression loss, the researchers used a fixed-density dataset representing the reactants and products of 2,235 reactions and trained the network to map these densities to highly accurate reaction energies. Of these, 1,161 training reactions represent atomization, ionization, electron affinity, and intermolecular binding energies of small main-group molecules (H to Kr), and 1,074 reactions represent key fractional-charge (FC) and fractional-spin (FS) densities of atoms from H to Ar. The trained DM21 model runs self-consistently on all reactions of a large main-group benchmark and produces more accurate molecular densities.

When training DM21, DeepMind used fractional-charge systems, such as a hydrogen atom with half an electron.
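To make "a hydrogen atom with half an electron" concrete: for the exact functional, the energy of a system with a fractional number of electrons lies on the straight line between the energies at the neighboring integer electron counts (the piecewise-linearity condition). The toy sketch below, in hartree atomic units and using only textbook values (E(H) = -0.5, E(H+) = 0), shows why such fractional-charge systems are tied to stretched dimers. The function name is illustrative, not part of DM21's code.

```python
def exact_fractional_energy(e_n: float, e_n_plus_1: float, delta: float) -> float:
    """Exact-functional energy of a system with N + delta electrons
    (0 <= delta <= 1): linear interpolation between E(N) and E(N + 1)."""
    if not 0.0 <= delta <= 1.0:
        raise ValueError("delta must lie in [0, 1]")
    return (1.0 - delta) * e_n + delta * e_n_plus_1


E_H_PLUS = 0.0  # bare proton: no electrons, zero energy (hartree)
E_H = -0.5      # exact hydrogen-atom ground-state energy (hartree)

# Hydrogen atom carrying half an electron: -0.25 hartree.
half_electron_h = exact_fractional_energy(E_H_PLUS, E_H, 0.5)

# Two infinitely separated protons sharing one electron (the H2+ dissociation
# limit) behave like two hydrogen atoms with half an electron each: -0.5 hartree.
h2_plus_limit = 2.0 * half_electron_h

print(f"H with half an electron:  {half_electron_h:+.3f} Ha")
print(f"H2+ at infinite distance: {h2_plus_limit:+.3f} Ha")
```

Most approximate functionals instead give an E(N) curve that bows below this line, which is the signature of delocalization error. The analogous fractional-spin condition says a hydrogen atom carrying half a spin-up and half a spin-down electron should still have an energy of -0.5 hartree; functionals that violate it give too high an energy for stretched H2 unless the spin symmetry is broken.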
Really SOTA or data leak?

To demonstrate DM21's superiority, the researchers tested it on a set of stretched dimers called the bond-breaking benchmark (BBB) set, for example two hydrogen atoms far apart that share a total of one electron. The results showed that DM21 performed excellently on the BBB set, outperforming every classical DFT functional tested as well as DM21m (trained the same way as DM21, but without fractional-charge systems in its training set). DeepMind therefore claimed in the paper that DM21 had grasped the physics behind fractional-charge systems.

Look closely, however, and it turns out that in the BBB set all the dimers become very similar to systems in the training set. Because of the local nature of electronic interactions, atoms interact strongly only over short distances; beyond that range, two atoms behave essentially as if they were not interacting. Michael Medvedev, a research group leader at the Zelinsky Institute of Organic Chemistry of the Russian Academy of Sciences, explains that in some ways neural networks are like humans: they prefer to get the right answer for the wrong reason. Training a neural network is therefore not hard, but proving that it has learned the laws of physics rather than merely memorizing the right answers is.

The Russian team therefore argued that the BBB set is not a suitable test: it does not probe DM21's understanding of fractional-electron systems, and a thorough analysis of the other four pieces of evidence for DM21's handling of such systems does not lead to a conclusive result either; only its good accuracy on the SIE4x4 set may be reliable. The Russian researchers also noted that using fractional-charge systems in the training set is not the only novelty in DeepMind's work. Its idea of introducing physical constraints into a neural network through the training set, and of imparting physical meaning by training on correct chemical potentials, may be widely used to build neural-network DFT functionals in the future.

DeepMind Response

The Comment claims that the paper does not demonstrate DM21's ability to predict fractional-charge (FC) and fractional-spin (FS) behavior outside the training set, citing an approximately 50% overlap between the training set and the BBB and questioning the validity and accuracy of the other generalization examples. DeepMind disagrees with this analysis, arguing that the points raised are either incorrect or irrelevant to the paper's main conclusions and to the assessment of DM21's overall quality, because the BBB is not the only example of FC and FS behavior shown in the paper.

Overlap between training and test sets is a genuine concern in machine learning: memorization means a model can score well on the test set simply by reproducing examples from the training set. Gerasimov argues that DM21's performance on the BBB (which contains dimers at finite distances) is well explained by reproducing the outputs for the FC and FS systems, that is, atoms at the infinite-separation limit of the matching dimers. To show that DM21 generalizes beyond the training set, DeepMind examined the prototypical BBB examples H2+ (the cationic dimer) and H2 (the neutral dimer) and concluded that, because the exact exchange-correlation functional is non-local, returning a constant memorized value would lead to significant errors in the BBB predictions as the distance increases.
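The disagreement comes down to what a good BBB score can prove. The toy sketch below illustrates the concern raised in the Comment: a "model" that has only memorized the isolated-fragment answer looks nearly perfect at large separations and fails only near equilibrium. The Morse curve and its parameters are hypothetical stand-ins (roughly hydrogen-like), not the BBB data, and the sketch says nothing about whether DM21 actually behaves this way; DeepMind's reply argues that it does not.

```python
import math


def morse_energy(r: float, d_e: float, a: float, r_e: float) -> float:
    """Toy binding curve (Morse potential) measured relative to the
    dissociation limit: 0 at infinite separation, -d_e at r = r_e."""
    x = math.exp(-a * (r - r_e))
    return d_e * (x * x - 2.0 * x)


def memorizing_model(r: float) -> float:
    """Hypothetical baseline that ignores r and always returns the memorized
    dissociation-limit value (the isolated-fragment answer)."""
    return 0.0


# Hypothetical parameters in atomic units: well depth, width, equilibrium distance.
D_E, A, R_E = 0.17, 1.0, 1.4
DISTANCES = (1.5, 3.0, 6.0, 10.0)

errors = [abs(memorizing_model(r) - morse_energy(r, D_E, A, R_E)) for r in DISTANCES]
for r, err in zip(DISTANCES, errors):
    print(f"r = {r:4.1f} bohr   |error| = {err:.1e} hartree")
```

Averaged over a test set dominated by large separations, such a baseline would look accurate while knowing nothing about bonding, which is why the share of BBB points that sit close to the training systems matters to both sides of the argument.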
