Generating smooth videos with GANs, with impressive results: no texture sticking and reduced jitter

In recent years, research on image generation based on Generative Adversarial Networks (GANs) has made significant progress. Beyond generating high-resolution, photorealistic images, it has enabled many innovative applications such as personalized image editing and image animation. However, using GANs for video generation remains a challenging problem.

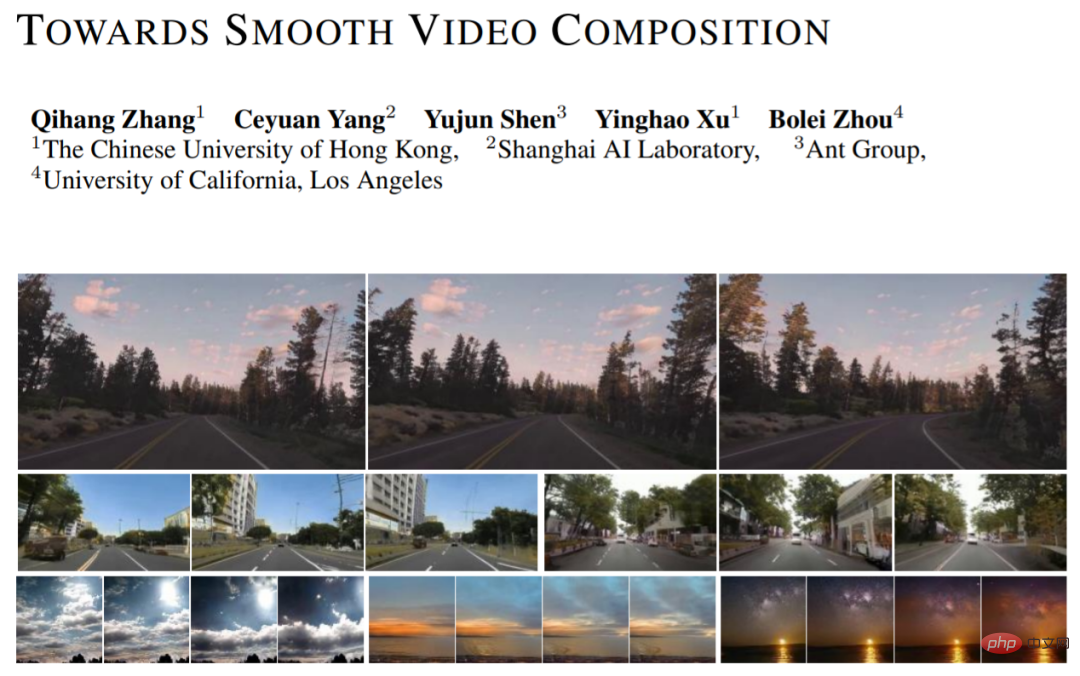

Besides modeling individual frames, video generation also requires learning complex temporal relationships. Recently, researchers from the Chinese University of Hong Kong, Shanghai Artificial Intelligence Laboratory, Ant Technology Research Institute and the University of California, Los Angeles proposed a new video generation method in the paper "Towards Smooth Video Composition". The work models and improves temporal relationships at three time scales (short-range, medium-range and long-range) and achieves significant improvements over previous work on multiple datasets. It provides a simple and effective new baseline for GAN-based video generation.

- Paper address: https://arxiv.org/pdf/2212.07413.pdf

- Project code link: https://github.com/genforce/StyleSV

Model architecture

A GAN-based image generation network can be written as I = G(z), where z is a random variable, G is the generator network, and I is the generated image. This framework extends naturally to video generation: I_i = G(z_i), i = 1, ..., N, where N random variables z_i are sampled at once and each z_i generates the corresponding frame I_i. Stacking the generated frames along the time dimension yields the video.
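The naive per-frame formulation can be sketched in a few lines. This is a toy under stated assumptions: `toy_generator` and `generate_video_independent` are hypothetical names, the generator is a trivial stand-in for a deep network, and frames are tiny nested lists rather than image tensors.

```python
import random

def toy_generator(z, height=2, width=3):
    """Hypothetical stand-in for G: maps a latent vector z to a tiny
    height x width 'image' (nested lists). A real G is a deep network."""
    s = sum(z)
    return [[s * (r + 1) + c for c in range(width)] for r in range(height)]

def generate_video_independent(num_frames, latent_dim=4, seed=0):
    """I_i = G(z_i): draw N independent latents, one per frame, and
    stack the generated frames along a new time axis (axis 0)."""
    rng = random.Random(seed)
    latents = [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
               for _ in range(num_frames)]
    return [toy_generator(z) for z in latents]  # shape: (N, height, width)

video = generate_video_independent(num_frames=5)
print(len(video), len(video[0]), len(video[0][0]))  # 5 2 3
```

Because each z_i is sampled independently, nothing in this naive version links frame i to frame i + 1, which is why modeling temporal relationships becomes the central difficulty.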

MoCoGAN, StyleGAN-V and other works build on this with a decoupled formulation: I_i = G(u, v_i), i = 1, ..., N, where u is a random variable that controls content and v_i is a random variable that controls motion. This formulation assumes that all frames share the same content while each frame has its own motion. With this decoupling, the model can better generate videos whose content and style stay consistent while the motion remains varied and realistic. The new work adopts the StyleGAN-V design as its baseline.
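A matching sketch of the decoupled formulation, again with hypothetical names and a toy generator rather than the StyleGAN-V network: one content code `u` is shared by all frames and one motion code `v_i` is drawn per frame.

```python
import random

def toy_generator(u, v, width=4):
    """Hypothetical stand-in for G(u, v_i): the content code u sets a base
    value and the motion code v_i sets a per-pixel offset. Not StyleGAN-V."""
    base = sum(u)
    offset = sum(v)
    return [base + offset * x for x in range(width)]

def generate_video_decoupled(num_frames, content_dim=4, motion_dim=2, seed=0):
    """I_i = G(u, v_i): one shared content code, one motion code per frame."""
    rng = random.Random(seed)
    u = [rng.gauss(0.0, 1.0) for _ in range(content_dim)]   # shared by all frames
    motions = [[rng.gauss(0.0, 1.0) for _ in range(motion_dim)]
               for _ in range(num_frames)]                   # one v_i per frame
    return [toy_generator(u, v) for v in motions]

frames = generate_video_decoupled(num_frames=4)
print(len(frames))  # 4
```

In this toy, the first pixel of every frame depends only on `u`, so it is identical across frames, while the remaining pixels vary with `v_i`. Drawing the `v_i` independently is a simplification for brevity; the discussion of motion embeddings below explains why their smoothness over time matters.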

The difficulty in video generation: how to model temporal relationships effectively?

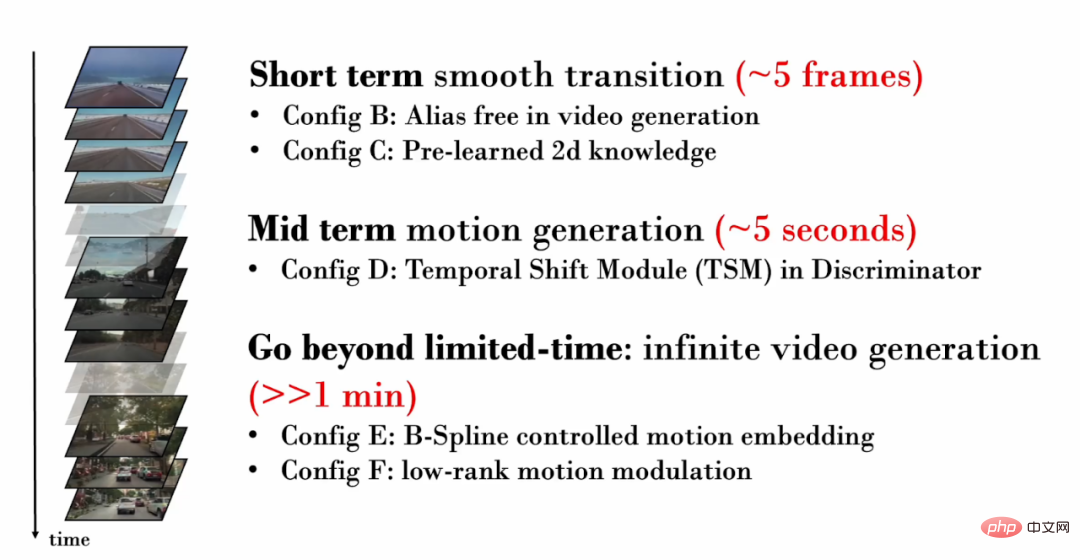

The new work models and improves temporal relationships at three time scales (short-range, medium-range and long-range) separately:

1. Short-range (~5 frames) temporal relationships

Let us first consider a video with only a few frames. Such short clips usually contain very similar content and show only subtle movements, so realistically generating these subtle frame-to-frame movements is crucial. However, the videos generated by StyleGAN-V suffer from severe texture sticking.

Texture sticking (also called texture adhesion) means that part of the generated content depends on absolute pixel coordinates, so it appears "stuck" to a fixed region of the frame instead of moving with the scene. In image generation, StyleGAN3 alleviates texture sticking through careful signal processing, an enlarged padding range and related operations. This work verifies that the same techniques remain effective for video generation.

In the visualization, pixels at the same location are tracked across every frame of the video. In the StyleGAN-V video, some content clearly stays "stuck" at fixed coordinates for a long time instead of moving over time, producing a "brush-stroke" pattern in the visualization. In videos generated by the new work, all pixels move naturally.
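The pixel-tracking visualization amounts to a spacetime slice: take the same row from every frame and stack the rows over time. A minimal sketch, assuming a video stored as nested lists `video[t][row][x]`; `spacetime_slice` and the `stuck_columns` diagnostic are hypothetical helpers for illustration, not part of the StyleSV code.

```python
def spacetime_slice(video, row):
    """Stack row `row` of every frame: result[t][x] = video[t][row][x].
    Moving content traces slanted lines; content stuck to fixed
    coordinates traces streaks that stay constant along t."""
    return [frame[row] for frame in video]

def stuck_columns(video, row, tol=1e-6):
    """Hypothetical diagnostic: x positions in the chosen row whose
    value never changes over time."""
    slc = spacetime_slice(video, row)
    width = len(slc[0])
    return [x for x in range(width)
            if all(abs(slc[t][x] - slc[0][x]) <= tol for t in range(len(slc)))]

# Toy 1-row video: column 0 never changes (stuck), the others drift over time.
video = [[[7, t, t + 1, t + 2]] for t in range(4)]
print(stuck_columns(video, row=0))  # [0]
```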

However, the researchers found that adopting the StyleGAN3 backbone lowers image generation quality. To alleviate this, they introduced image-level pre-training. In the pre-training stage, the network only has to consider the quality of individual frames and does not need to model temporal relationships, which makes it easier to learn the image distribution.
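The difference between the two training stages can be illustrated by what each stage samples from a training video. This is a sketch under assumptions: `sample_training_clip`, the stage names and the consecutive-frame sampling in the video stage are hypothetical choices, and the real training pipeline may sample frames differently.

```python
import random

def sample_training_clip(video, stage, clip_len=3, rng=random):
    """Hypothetical sampling for the two training stages.

    stage == "image": a single random frame, so the networks only have to
    learn per-frame quality (image-level pre-training).
    stage == "video": clip_len consecutive frames, so temporal
    relationships can be learned (an assumed sampling scheme).
    """
    if stage == "image":
        return [rng.choice(video)]
    if stage == "video":
        start = rng.randrange(len(video) - clip_len + 1)
        return video[start:start + clip_len]
    raise ValueError(f"unknown stage: {stage!r}")

rng = random.Random(0)
frames = list(range(10))  # stand-ins for the frames of one training video
print(sample_training_clip(frames, "image", rng=rng))
print(sample_training_clip(frames, "video", rng=rng))
```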

2. Medium-range (~5 seconds) temporal relationships

As the generated video spans more frames, it can depict more concrete actions, so it becomes important that the motion is realistic. For example, when generating a first-person driving video, the ground and street scenery should recede gradually, and approaching cars should follow natural driving trajectories.

In adversarial training, the discriminator is crucial for giving the generator sufficient training signal. In video generation, therefore, for the generator to produce realistic motion, the discriminator must model temporal relationships across multiple frames and catch unrealistic motion. In previous work, however, the discriminator performed temporal modeling only through a simple concatenation: y = cat(y_i), where y_i denotes the feature of a single frame and y denotes the temporally fused feature.
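Concatenation-based fusion, y = cat(y_i), is easy to state in code. A minimal sketch with per-frame features as plain lists of floats; `concat_temporal_fusion` is a hypothetical name. The fused vector simply places frames side by side, so any temporal reasoning has to be learned by the layers that consume `y`.

```python
def concat_temporal_fusion(frame_features):
    """y = cat(y_1, ..., y_T): join per-frame feature vectors end to end.

    Nothing here compares frames with each other; any temporal reasoning
    must be learned by the layers that consume the fused vector y.
    """
    fused = []
    for y_i in frame_features:
        fused.extend(y_i)
    return fused

print(concat_temporal_fusion([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]))
# [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
```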

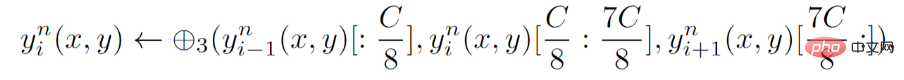

For the discriminator, the new work proposes explicit temporal modeling: a Temporal Shift Module (TSM) is inserted at every layer of the discriminator. TSM comes from the field of action recognition and exchanges information across time with a simple shift operation:

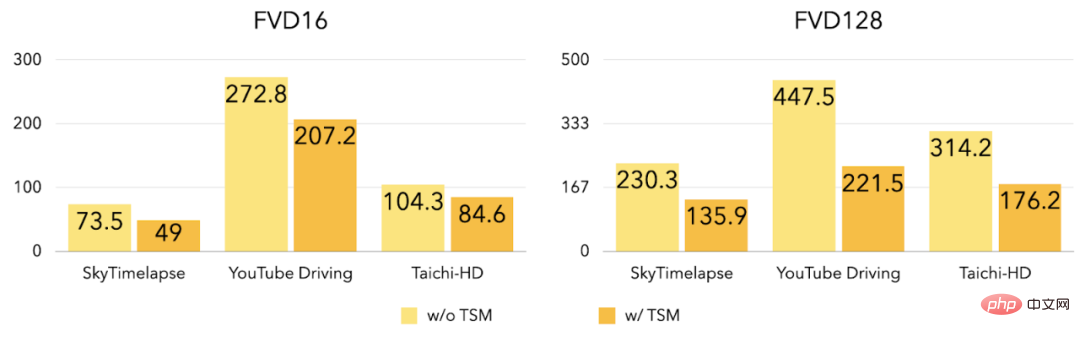

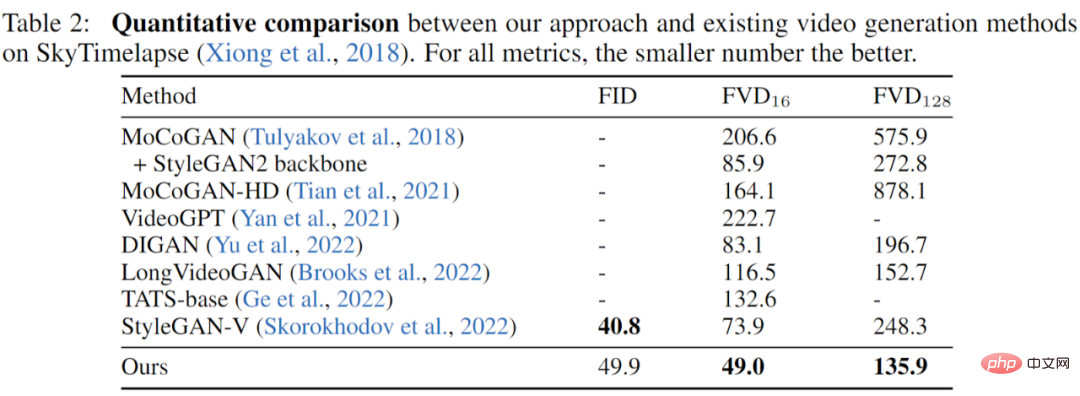

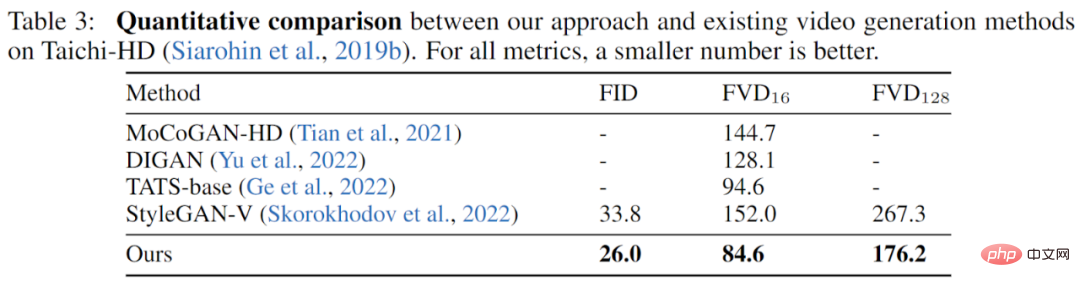
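The shift operation itself can be sketched without any deep-learning framework. This follows the zero-parameter shift from the original TSM work, applied to a feature map `features[t][c]` with one scalar per channel for readability (real feature maps also have spatial dimensions, which are shifted the same way). `temporal_shift` is a hypothetical name, and `fold_div=8` mirrors TSM's common default of shifting one eighth of the channels in each direction; whether the StyleSV discriminator uses the same proportion is an assumption.

```python
def temporal_shift(features, fold_div=8):
    """Temporal Shift Module on features[t][c] (no learnable parameters).

    The first C // fold_div channels take their values from frame t + 1,
    the next C // fold_div channels from frame t - 1, and the remaining
    channels are left unchanged. Missing neighbors at the clip boundaries
    are zero-filled.
    """
    num_frames = len(features)
    num_channels = len(features[0])
    fold = num_channels // fold_div
    out = [[0.0] * num_channels for _ in range(num_frames)]
    for t in range(num_frames):
        for c in range(num_channels):
            if c < fold:                  # pull from the next frame
                if t + 1 < num_frames:
                    out[t][c] = features[t + 1][c]
            elif c < 2 * fold:            # pull from the previous frame
                if t > 0:
                    out[t][c] = features[t - 1][c]
            else:                         # unshifted channels
                out[t][c] = features[t][c]
    return out

x = [[float(10 * t + c) for c in range(4)] for t in range(3)]
print(temporal_shift(x, fold_div=4))
# [[10.0, 0.0, 2.0, 3.0], [20.0, 1.0, 12.0, 13.0], [0.0, 11.0, 22.0, 23.0]]
```

In a discriminator, such a shift would typically sit before each layer's 2D convolution, so every layer sees information from neighboring frames at no extra parameter cost.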

Experiments show that introducing TSM substantially reduces FVD16 and FVD128 (FVD computed on 16-frame and 128-frame clips; lower is better) on all three datasets.

3. Infinite-length video generation

The improvements above focus on short and medium-length video generation. The new work further explores how to generate high-quality videos of arbitrary (including infinite) length. Previous work (StyleGAN-V) can generate infinitely long videos, but they contain very noticeable periodic jitter:

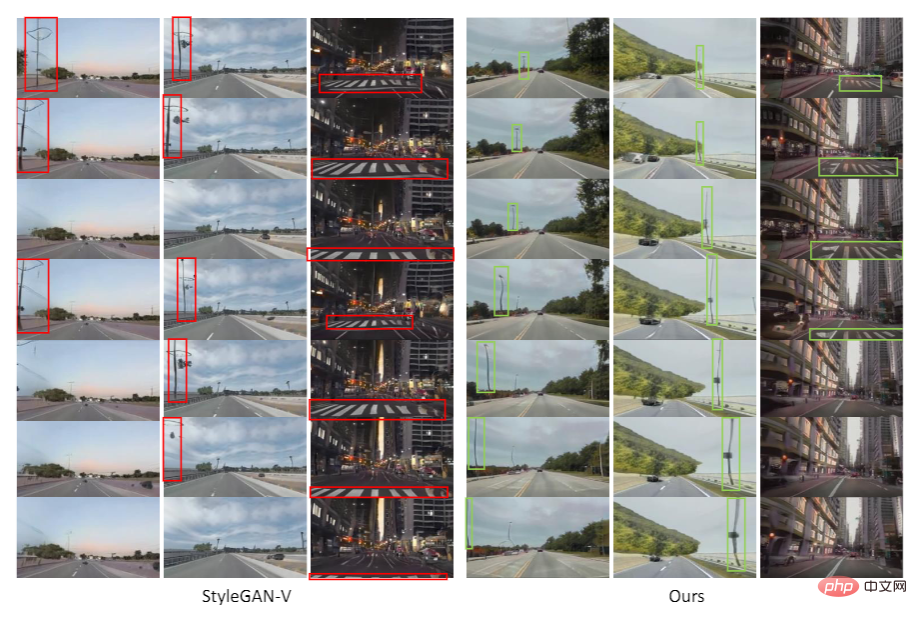

As shown in the figure, in the StyleGAN-V video the zebra crossing first recedes normally as the vehicle moves forward, then suddenly starts moving forward instead. This work found that discontinuities in the motion embeddings cause this jitter.

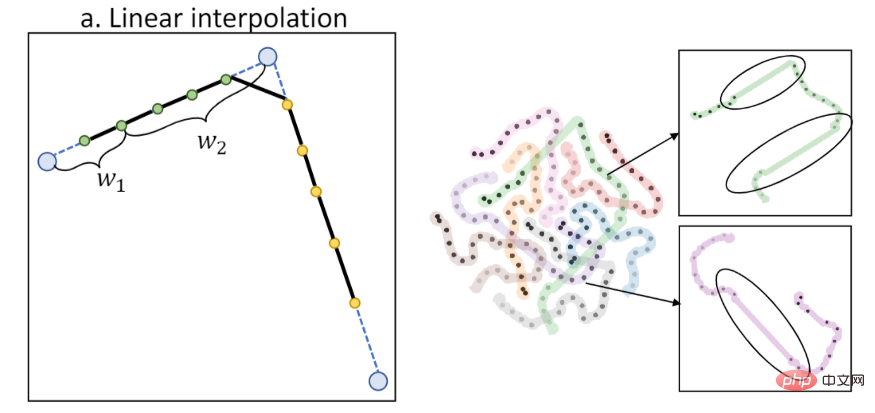

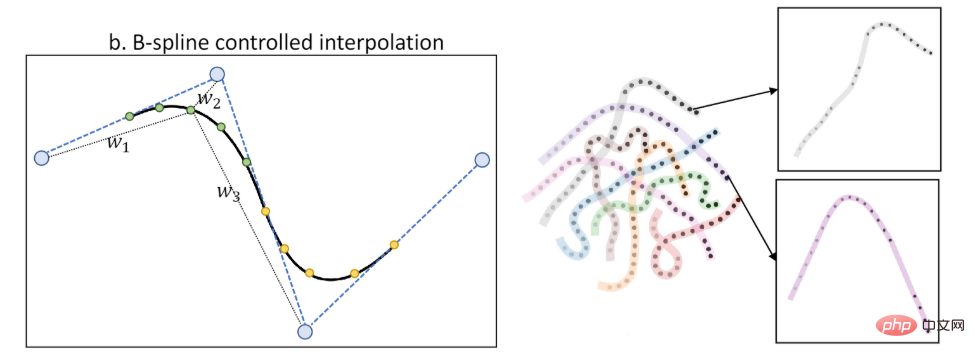

Previous work computes motion embeddings by linear interpolation. However, linear interpolation leads to first-order discontinuities: the embedding itself is continuous, but its rate of change jumps at every interpolation anchor, as shown in the figure (left: interpolation diagram; right: t-SNE visualization of the features).

This work proposes B-spline based motion embeddings. Interpolating with B-splines yields motion embeddings that vary more smoothly over time, as shown in the figure (left: interpolation diagram; right: t-SNE visualization of the features).

With B-spline based motion embeddings, the new work alleviates the jitter.

As shown in the figure, in the StyleGAN-V video the street lights and the ground suddenly reverse their direction of motion, whereas in videos generated by the new work the direction of motion stays consistent and natural.
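The contrast between linear and B-spline interpolation of motion embeddings can be checked numerically. This sketch assumes scalar anchor values placed at integer times (each embedding dimension would be handled independently) and a uniform cubic B-spline; the article does not state the exact spline degree or knot layout used in the paper, so those are assumptions, and `linear_interp`, `cubic_bspline` and `slope_jump` are hypothetical names.

```python
def linear_interp(anchors, t):
    """Piecewise-linear interpolation of anchors placed at times 0..K-1.
    Continuous in value, but the slope jumps at every anchor."""
    i = min(int(t), len(anchors) - 2)
    u = t - i
    return (1.0 - u) * anchors[i] + u * anchors[i + 1]

def cubic_bspline(ctrl, t):
    """Uniform cubic B-spline over control values, for 0 <= t <= len(ctrl) - 3.
    Each segment blends four neighboring control values; the value and its
    first and second derivatives are continuous across segments."""
    i = min(int(t), len(ctrl) - 4)
    u = t - i
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u ** 3 - 6 * u ** 2 + 4) / 6
    b2 = (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6
    b3 = u ** 3 / 6
    return b0 * ctrl[i] + b1 * ctrl[i + 1] + b2 * ctrl[i + 2] + b3 * ctrl[i + 3]

def slope_jump(f, values, t, h=1e-5):
    """|right slope - left slope| at time t, from one-sided finite differences."""
    left = (f(values, t) - f(values, t - h)) / h
    right = (f(values, t + h) - f(values, t)) / h
    return abs(right - left)

anchors = [0.0, 3.0, -2.0, 5.0, 1.0, 4.0]
print(slope_jump(linear_interp, anchors, 1.0))  # close to 8: slope goes from 3 to -5
print(slope_jump(cubic_bspline, anchors, 1.0))  # close to 0 (of order h)
```

Unlike linear interpolation, the uniform B-spline does not pass exactly through its control values; it trades exact interpolation for smoothness, which is the property that matters for jitter-free motion.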

In addition, the new work imposes a low-rank constraint on the motion embeddings to further reduce periodically repeating content.

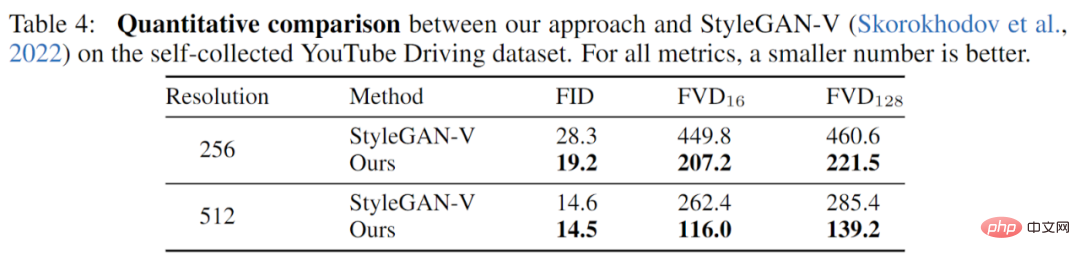

Experiments

The work was evaluated extensively on three datasets (YouTube Driving, SkyTimelapse, Taichi-HD) and compared thoroughly with previous work. The results show substantial improvements in both image quality (FID) and video quality (FVD).
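For reference, both metrics are Fréchet distances between Gaussians fitted to features of real (r) and generated (g) samples, with means μ and covariances Σ. FID uses Inception-v3 features of individual frames, while FVD uses I3D features of whole clips (16 or 128 frames for FVD16 and FVD128). Lower is better for both:

```latex
d^2\big((\mu_r, \Sigma_r), (\mu_g, \Sigma_g)\big)
  = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
```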

SkyTimelapse experimental results:

Taichi-HD experimental results:
