IEEE Fellow Li Xuelong: Multimodal cognitive computing is the key to realizing general artificial intelligence

In today's data-driven artificial intelligence research, the information provided by single-modal data can no longer meet the needs of improving machine cognitive capabilities. Just as humans use multiple senses such as vision, hearing, smell, and touch to perceive the world, machines also need to simulate human synesthesia to raise their cognitive level.

At the same time, with the explosion of multi-modal spatio-temporal data and the improvement of computing power, researchers have proposed a large number of methods to cope with increasingly diverse needs. However, current multi-modal cognitive computing is still limited to imitating apparent human abilities and lacks a theoretical basis at the cognitive level. In the face of more complex intelligent tasks, the intersection of cognitive science and computing science has become inevitable.

Recently, Professor Li Xuelong of Northwestern Polytechnical University published the article "Multimodal Cognitive Computing" in the journal "Science China: Information Science". Taking "Information Capacity" as its basis, the article establishes an information transfer model of the cognitive process, puts forward the view that "multimodal cognitive computing can improve the information extraction capability of the machine", and theoretically unifies multimodal cognitive computing tasks.

Li Xuelong believes that multimodal cognitive computing is one of the keys to realizing general artificial intelligence and has broad application prospects in fields such as "Vicinagearth Security". The article explores a unified cognitive model of humans and machines and aims to inspire research on multi-modal cognitive computing.

Li Xuelong is a professor at Northwestern Polytechnical University. He focuses on the relationships among the intelligent acquisition, processing, and management of high-dimensional data, and his work plays a role in application systems such as "Vicinagearth Security". He was elected an IEEE Fellow in 2011 and is the first mainland scholar elected to the executive committee of the Association for the Advancement of Artificial Intelligence (AAAI).

AI Technology Review summarized the key points of the article "Multimodal Cognitive Computing" and conducted an in-depth dialogue with Professor Li Xuelong along this direction.

1

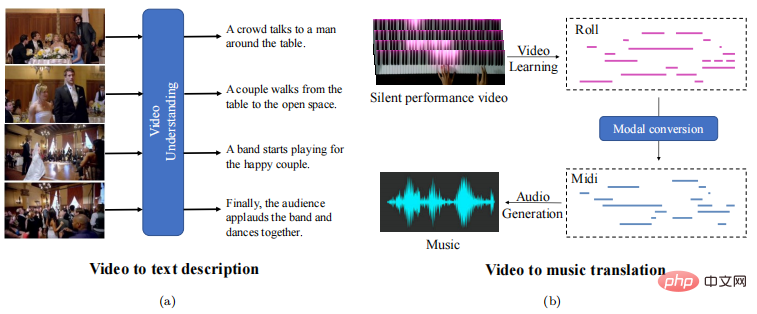

Machine cognitive ability lies in information utilizationBased on information theory, Li Xuelong proposed: Multimodal cognitive computing can improve machine information Capabilities are extracted and this perspective is modeled theoretically (below).

First, we need to understand how humans extract event information.

In 1948, Shannon, the founder of information theory, proposed the concept of "information entropy" to express the degree of uncertainty of a random variable. The smaller the probability of an event, the greater the amount of information provided by its occurrence. That is, in a given cognitive task T, the amount of information brought by the occurrence of event x is inversely related to the probability of the event p(x):
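For reference, Shannon's standard self-information expresses this relationship. The base-2 logarithm and the omission of an explicit dependence on the task T are assumptions made here and may differ from the paper's own notation:

$$
I(x) = -\log_2 p(x) = \log_2 \frac{1}{p(x)}
$$

Under this form, an event with probability 1/8 carries 3 bits of information, while a certain event (p(x) = 1) carries none.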

Information is transmitted with various modalities as its carriers. For a given event space, the amount of information obtained from that space can be defined as:

Humans have limited attention within a given range of time and space (assumed to be 1). When space-time events change from single-modal to multi-modal, humans therefore do not need to keep readjusting their attention; they can focus on unknown event information and obtain the maximum amount of information:

It can be seen from this that the more modalities a time-space event contains, the greater the amount of information an individual can acquire, and the higher his or her cognitive level.

So for a machine, does obtaining a greater amount of information bring it closer to the human cognitive level?

Not necessarily. To measure a machine's cognitive ability, Li Xuelong drew on the "Information Capacity" theory and expressed the process by which a machine extracts information from the event space as follows, where D is the amount of data in the event space x.

Thus, the cognitive ability of a machine can be defined as the ability to obtain the maximum amount of information from a unit of data. In this way, the cognitive learning of humans and machines is unified as a process of improving information utilization.
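The idea of "information per unit of data" can be illustrated with a minimal sketch. It assumes standard Shannon self-information and entropy, and treats the article's measure as a simple ratio of information to data amount D. The function names, the toy probabilities, and the data amounts are hypothetical and are not taken from the paper.

```python
import math
from typing import Sequence


def self_information(p: float) -> float:
    """Shannon self-information, in bits, of an event with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    return -math.log2(p)


def expected_information(probs: Sequence[float]) -> float:
    """Expected self-information (entropy), in bits, over an event space."""
    return sum(p * self_information(p) for p in probs if p > 0.0)


def information_per_unit_data(info_bits: float, data_amount: float) -> float:
    """Assumed stand-in for the article's measure: information per unit of data D."""
    if data_amount <= 0:
        raise ValueError("data_amount must be positive")
    return info_bits / data_amount


# Toy event space with four outcomes (probabilities are made up for illustration).
probs = [0.5, 0.25, 0.125, 0.125]
info = expected_information(probs)

# The same information carried by a bulky versus a compact representation.
bulky = information_per_unit_data(info, data_amount=1000.0)
compact = information_per_unit_data(info, data_amount=100.0)

print(f"information: {info} bits")
print(f"bulky: {bulky:.5f} bits/unit, compact: {compact:.5f} bits/unit")
```

In this toy setting the compact representation scores higher even though the information content is identical, which mirrors the claim that cognitive ability depends on how much information is obtained from each unit of data rather than on the raw amount of data.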

So, how can we improve the machine's utilization of multi-modal data and thereby improve multi-modal cognitive computing capabilities?

Just as the improvement of human cognition is inseparable from association, reasoning, induction, and deduction about the real world, improving the cognitive ability of machines also needs to start from three corresponding aspects: association, generation, and collaboration. These are also the three basic tasks of today's multi-modal analysis.

2 Three main lines of multimodal cognitive computing

The three main lines, multimodal association, cross-modal generation, and multimodal collaboration, differ in how they process multi-modal data, but their core is the same: to maximize the amount of information using as little data as possible.

Multi-modal association

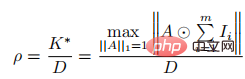

How does content originating from different modalities correspond at the spatial, temporal, and semantic levels? Answering this question is the goal of multi-modal association tasks and the prerequisite for improving information utilization.

The alignment of multi-modal information at the spatial, temporal, and semantic levels is the basis of cross-modal perception, and multi-modal retrieval is the application of perception in real life. For example, relying on multimedia search technology, we can enter words or phrases to retrieve video clips.

Inspired by the human cross-sensory perception mechanism, AI researchers have used computable models for cross-modal perception tasks such as lip reading and missing modality generation.

These models also further assist cross-modal perception for people with disabilities. In the future, the main application scenarios of cross-modal perception will no longer be limited to sensory substitution for people with disabilities, but will be more closely integrated with human cross-sensory perception to raise the level of human multi-sensory perception.

Nowadays, digital modal content is growing rapidly, and the application requirements for cross-modal retrieval are becoming more abundant. This undoubtedly presents new opportunities and challenges for multi-modal association learning.
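To make the retrieval idea concrete, here is a minimal sketch of text-to-video retrieval by cosine similarity. It assumes that text and video clips have already been mapped into a shared embedding space by encoders that are not shown; the vectors, file names, and function names below are hand-made and hypothetical, and this is not the method described in the paper.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec: Sequence[float],
             clip_vecs: Dict[str, Sequence[float]],
             top_k: int = 2) -> List[str]:
    """Return the names of the top_k clips most similar to the query embedding."""
    ranked = sorted(
        clip_vecs,
        key=lambda name: cosine_similarity(query_vec, clip_vecs[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical embeddings; a real system would obtain these from trained
# text and video encoders aligned in a shared space.
clip_embeddings = {
    "dog_running.mp4": [0.9, 0.1, 0.0],
    "piano_recital.mp4": [0.0, 0.2, 0.9],
    "cat_sleeping.mp4": [0.6, 0.7, 0.1],
}
text_query = [1.0, 0.2, 0.0]  # stand-in embedding for a phrase such as "a dog running"

results = retrieve(text_query, clip_embeddings, top_k=2)
print(results)
```

The ranking only works if the two modalities are aligned in the same space, which is why alignment is described above as the basis for perception and retrieval.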

Cross-modal generation

When we read the plot of a novel, the corresponding picture naturally appears in our mind, which is a manifestation of human cross-modal reasoning and generation capabilities.

Similarly, in multimodal cognitive computing, the goal of the cross-modal generation task is to give the machine the ability to generate unknown modal entities. From the perspective of information theory, the essence of this task is to improve the cognitive ability of the machine within the multi-modal information channel. There are two ways: one is to increase the amount of information, that is, cross-modal synthesis, ##The second is

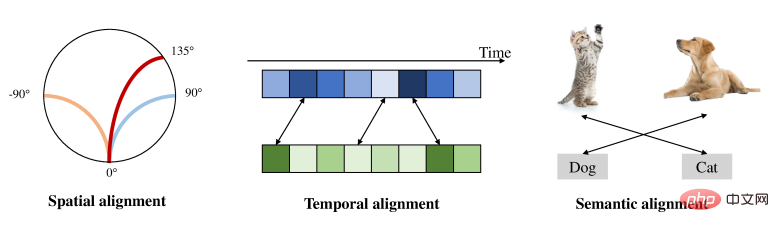

Reduce the amount of data, that is, cross-modal conversion.The cross-modal synthesis task is to enrich existing information when generating new modal entities, thereby increasing the amount of information. Taking image generation based on text as an example, in the early days, entity association was mainly used, which often relied heavily on retrieval libraries. Today, image generation technology is mainly based on generative adversarial networks, which can generate realistic and high-quality images. However, facial image generation is still very challenging, because from an information level, even small expression changes may convey a very large amount of information. At the same time, converting complex modalities into simple modalities and finding more concise expressions can reduce the amount of data and improve information acquisition capabilities. Caption: Common cross-modal conversion tasks As An example of the combination of computer vision and natural language processing technologies, cross-modal conversion can greatly improve online retrieval efficiency. For example, give a brief natural language description of a lengthy video, or generate audio signals related to a piece of video information. The two current mainstream generative models, VAE (variational autoencoder) and GAN (generative adversarial network), each have their own strengths and weaknesses. Li Xuelong believes that VAE relies on assumptions, while GAN can The explanation is poor, and the two need to be combined reasonably. A particularly important point is that the challenge of multi-modal generation tasks lies not only in the quality of generation, but also in the semantic and representation gaps between different modalities. How to perform knowledge reasoning under the premise of semantic gaps needs to be solved in the future. difficulty. Multimodal collaboration In the human cognitive mechanism, induction and deduction play an important role. 
We can summarize, fuse, and jointly reason over multimodal perceptions such as what we see, hear, smell, and touch, and use them as a basis for decision-making.

Similarly, multi-modal cognitive computing requires two or more modalities of data to coordinate and cooperate with each other to complete more complex multi-modal tasks and to improve accuracy and generalization. From the perspective of information theory, its essence is the mutual fusion of multi-modal information to achieve information complementarity, and it is an optimization of attention.

First, modal fusion addresses the differences among multi-modal data caused by data format, spatio-temporal alignment, noise interference, and so on. At present, rule-based fusion methods include serial fusion, parallel fusion, and weighted fusion, while learning-based fusion methods include attention mechanism models, transfer learning, and knowledge distillation.

Second, after the fusion of multi-modal information is completed, joint learning of modal information is required to help the model mine the relationships between modalities and establish auxiliary or complementary connections between them.

Through joint learning, on the one hand, the performance of individual modalities can be improved, as in vision-guided audio, audio-guided vision, and depth-guided vision. On the other hand, tasks that were previously difficult to achieve with a single modality can be solved. Complex affective computing, audio-to-face modeling, and audio-visual guided music generation are all future development directions of multi-modal cognitive computing.

3 Opportunities and Challenges

In recent years, deep learning has greatly promoted the development of multi-modal cognitive computing in both theory and engineering. But application requirements are now becoming more diverse and data iteration is accelerating, which poses new challenges and brings many opportunities for multi-modal cognitive computing.

We can look at four levels for improving machine cognitive capabilities:

At the data level, traditional multi-modal research separates the collection and computation of data into two independent processes, which has drawbacks. The human world is composed of continuous analog signals, while machines process discrete digital signals, and the conversion inevitably causes information distortion and loss. In this regard, Li Xuelong believes that intelligent optoelectronics, represented by optical neural networks, can offer solutions. If the sensing and computation of multi-modal data can be integrated, the machine's information processing efficiency and level of intelligence will be greatly improved.

At the information level, the key to cognitive computing is the processing of high-level semantics in information, such as positional relationships in vision, the style of images, and the emotion of music. Currently, multimodal tasks are limited to simple targets and interactions within scenes, and cannot understand deep logical semantics or subjective semantics. For example, a machine can generate an image of a flower blooming in a meadow, but it cannot understand the common sense that flowers wither in winter. Therefore, building a bridge between complex logic and sensory semantic information across modalities, and establishing a measurement system specific to machines, is a major future trend in multi-modal cognitive computing.

At the fusion mechanism level, how to optimize multi-modal models composed of heterogeneous components with high quality is currently a difficulty. Most current multi-modal cognitive computing optimizes the model under a single unified learning objective. This strategy lacks targeted adjustment of the heterogeneous components within the model, so existing multi-modal models suffer from substantial under-optimization. The problem needs to be approached from multiple angles, such as multi-modal machine learning and optimization theory.

At the task level, the way a machine learns varies with the task. We need to design learning strategies driven by task feedback to improve the ability to solve a variety of related tasks. In addition, given the shortcomings of the current "spectator-style" approach, in which machine learning understands the world from images, text, and other data, we can draw on research results from cognitive science. Embodied AI, for example, is a potential solution: intelligent agents need multi-modal interaction with the environment in order to continuously evolve and form the ability to solve complex tasks.

4 Conversation with Li Xuelong

AI Technology Review: Why should we pay attention to multimodal data and multimodal cognitive computing in artificial intelligence research? What benefits and obstacles does the growth of multimodal data bring to model performance?

Li Xuelong: Thank you for your question. We pay attention to and study multi-modal data because, on the one hand, artificial intelligence is essentially data-dependent. The information that single-modal data can provide is always very limited, while multi-modal data can provide multi-level, multi-perspective information. On the other hand, the objective physical world is multi-modal, and research on many practical problems cannot be separated from multi-modal data, such as searching for pictures by text or identifying objects by sound.

We analyze multimodal issues from the perspective of cognitive computing, starting from the nature of artificial intelligence. By building a multimodal analysis system that can simulate human cognitive patterns, we hope that machines can perceive their surroundings as intelligently as humans do.

Complex and interleaved multi-modal information also brings a lot of noise and redundancy, which increases the learning burden on the model and in some cases makes performance on multi-modal data worse than on a single modality. This poses greater challenges for model design and optimization.

AI Technology Review: From the perspective of information theory, what are the similarities between human cognitive learning and machine cognitive learning? What guiding significance does research on human cognitive mechanisms have for multimodal cognitive computing? What difficulties will multimodal cognitive computing face without an understanding of human cognition?

Li Xuelong: Aristotle believed that people's understanding of things begins with sensation, while Plato believed that what comes through sensation cannot be called knowledge.

Human beings receive a large amount of external information from birth and gradually build their own cognitive system through perception, memory, reasoning, and so on. A machine's learning ability, by contrast, is achieved through training on large amounts of data, which mainly amounts to finding correspondences between perception and human knowledge. According to Plato, what machines learn is not yet knowledge. In the article we cited the theory of "Information Capacity" and tried to establish a cognitive connection between humans and machines, starting from the ability to extract information.

Humans transmit multimodal information to the brain through multiple sensory channels such as sight, hearing, smell, taste, and touch, producing joint stimulation of the cerebral cortex. Psychological research has found that the combined action of multiple senses gives rise to cognitive learning patterns such as "multisensory integration", "synesthesia", "perceptual reorganization", and "perceptual memory". These human cognitive mechanisms have brought significant inspiration to multimodal cognitive computing. They gave rise to typical multimodal analysis tasks such as multimodal collaboration, multimodal association, and cross-modal generation, as well as typical machine analysis mechanisms such as local sharing, long short-term memory, and attention.

At present, the human cognitive mechanism is still not well understood. Without guidance from research on human cognition, multi-modal cognitive computing will fall into the trap of data fitting, and we cannot judge whether a model has learned the knowledge that people need. This is also a point of controversy in artificial intelligence today.

AI Technology Review: From the perspective of information theory, you put forward the view that "multimodal cognitive computing can improve the machine's information extraction capabilities". What evidence supports this in specific multimodal cognitive computing tasks?

Li Xuelong: This question can be answered from two aspects. First, multimodal information can improve the performance of a single modality in different tasks. A large body of work has verified that adding sound information significantly improves the performance of computer vision algorithms in tasks such as target recognition and scene understanding. We have also built an environmental camera and found that fusing multi-modal information from sensors such as temperature and humidity sensors can improve the camera's imaging quality.

Second, joint modeling of multi-modal information makes more complex intelligent tasks possible. For example, in our work "Listen to the Image", visual information is encoded into sound, allowing blind people to "see" the scene in front of them, which also shows that multi-modal cognitive computing helps machines extract more information.

AI Technology Review: How are alignment, perception, and retrieval interconnected in multi-modal association tasks?

Li Xuelong: The relationship among the three is inherently rather complicated, and in the article I only give some preliminary views of my own. The premise for associating information from different modalities is that they jointly describe the same or a similar objective reality. However, this association is difficult to determine when external information is complicated or subject to interference, so the information from different modalities must first be aligned to determine the correspondence. Then, on the basis of alignment, perception from one modality to another is achieved.

This is like seeing only a person's lip movements and seeming to hear what they said, a phenomenon that also rests on the association and alignment of visemes and phonemes. In real life, we have further applied this cross-modal perception to retrieval, for example retrieving pictures or videos of products through text, realizing computable multi-modal association applications.

AI Technology Review: The recently popular DALL-E and similar models are examples of cross-modal generation. They perform well in text-to-image tasks, but the semantic relevance and interpretability of the generated images are still very limited. How do you think this problem should be solved? Where does the difficulty lie?

Li Xuelong: Generating images from text is an "imagination" task. A person sees or hears a sentence, understands its semantic information, and then relies on memory to imagine the most fitting scene, creating a "sense of a picture". Currently, DALL-E is still at the stage of using statistical learning to fit data, summarizing and generalizing over large-scale datasets, which is what deep learning is currently best at.

However, to truly learn human "imagination", one also needs to consider the human cognitive model in order to achieve "high-level" intelligence. This requires the cross-integration of neuroscience, psychology, and information science, which is both a challenge and an opportunity. In recent years, many teams have done first-rate work in this area. Exploring a computable theory of human cognitive models through the integration of multiple disciplines is also one of the directions our team is working on, and we believe it will bring new breakthroughs in "high-level" intelligence.

AI Technology Review: How do you draw inspiration from cognitive science in your research? What research in cognitive science are you particularly interested in?

Li Xuelong: "How does the pond stay so clear? Because living water keeps flowing in from its source." I often observe and think about interesting phenomena in daily life. Twenty years ago, I was browsing a webpage with pictures of Jiangnan landscapes, and when I clicked to play the music on the page, I suddenly felt as if I were there. That was when I began to think about the relationship between hearing and vision from a cognitive perspective. While studying cognitive science, I learned about the phenomenon of "synaesthesia". Combining it with my own research direction, I completed an article titled "Visual Music and Musical Vision", which was also the first time "synesthesia" was introduced into the information field.

Later, I opened the first cognitive computing course in the information field and founded the IEEE SMC Cognitive Computing Technical Committee, trying to break down the boundary between cognitive science and computing science. I also gave a definition of cognitive computing at that time, which is the description currently on the technical committee's homepage. In 2002, I proposed the ability to provide information per unit amount of data, which is the concept of "Information Capacity", and tried to use it to measure the cognitive ability of machines. I was honored to receive the Tencent Scientific Exploration Award in 2020 under the title "Multimodal Cognitive Computing".

To this day, I continue to follow the latest developments in synesthesia and perception. In nature, there are many modalities beyond the five human senses, and there may even be potential modalities that are not yet understood. For example, quantum entanglement may indicate that the three-dimensional space we live in is just a projection of a higher-dimensional space. If that is indeed the case, then our means of detection are also limited. Perhaps these potential modalities can be exploited to allow machines to approach or even surpass human perception.

AI Technology Review: On the question of how to better combine human cognition and artificial intelligence, you proposed building a modal interaction network with "meta-modality" (Meta-Modal) at its core. Could you introduce this view? What is its theoretical basis?

Li Xuelong: Meta-modality is itself a concept from cognitive neuroscience. It refers to the brain having an organization that, when performing a certain function or representational operation, makes no specific assumption about the sensory category of the input information yet still achieves good performance.

Meta-modality is not a whimsical concept. It is essentially a hypothesis and conjecture that cognitive scientists arrived at by integrating phenomena and mechanisms such as cross-modal perception and neuronal plasticity. It also inspires us to construct efficient learning architectures and methods across different modalities to achieve more general modal representation capabilities.

AI Technology Review: What are the main real-world applications of multi-modal cognitive computing? Could you give some examples?

Li Xuelong: Multimodal cognitive computing is research that is very close to practical application. Our team previously did work on cross-modal perception that encodes visual information into sound signals to stimulate the primary visual cortex. It has been applied in assisting people with disabilities, helping blind people see the outside world. In daily life, we also often use multi-modal cognitive computing technology. For example, short-video platforms combine voice, image, and text tags to recommend videos that users may be interested in.

More broadly, multi-modal cognitive computing is also widely used in the Vicinagearth Security mentioned in the article. In intelligent search and rescue, for example, drones and ground robots collect various data such as sound, images, temperature, and humidity. These data need to be integrated and analyzed from a cognitive perspective so that different search and rescue strategies can be carried out according to the situation on site. There are many similar applications, such as intelligent inspection and cross-domain remote sensing.

AI Technology Review: You mention in the article that current multi-modal tasks are limited to simple targets and interactions within scenes, and that deep logical semantics or subjective semantics are difficult to handle once more complex tasks are involved. Is this an opportunity for a renaissance of symbolic artificial intelligence? What other feasible approaches are there to improve machines' ability to process high-level semantic information?

Li Xuelong: Russell believed that most of the value of knowledge lies in its uncertainty. Learning knowledge requires warmth and the ability to interact with and receive feedback from the outside world. Most of the research we see today is single-modal, passive, and oriented to given data, which can meet the research needs of some simple goals and scenarios. However, for deeper logical or subjective semantics, we need to fully explore settings that span multiple dimensions of time and space, are supported by more modalities, and allow active interaction.

To achieve this goal, research methods may draw more on cognitive science. For example, some researchers have introduced the "embodied experience" hypothesis from cognitive science into artificial intelligence, exploring new learning problems and tasks that arise when machines actively interact with the outside world and take in information from multiple modalities, and have obtained some encouraging results. This also demonstrates the role and positive significance of multimodal cognitive computing in connecting artificial intelligence and cognitive science.

AI Technology Review: Intelligent optoelectronics is also one of your research directions. You mention in the article that intelligent optoelectronics can offer exploratory solutions for the digitization of information. What can intelligent optoelectronics do for the sensing and computation of multi-modal data?

Li Xuelong: Optical and electrical signals are the main ways people come to understand the world. Most of the information humans receive every day comes from vision, and visual information in turn comes mainly from light. The five human senses of sight, hearing, smell, taste, and touch likewise convert different stimuli such as light, sound waves, pressure, and odor into electrical signals for high-level cognition. Optoelectronic signals are therefore the main source of information through which humans perceive the world. In recent years, with the help of various advanced optoelectronic devices, we have been able to sense much more than visible light and audible sound.

It can be said that optoelectronic devices are at the forefront of human perception of the world. The intelligent optoelectronics research we are engaged in explores the integration of optoelectronic sensing hardware with intelligent algorithms: introducing physical priors into algorithm design, using algorithmic results to guide hardware design, forming mutual feedback between "sensing" and "computing", expanding the boundaries of perception, and ultimately imitating or even surpassing human multi-modal perception.

AI Technology Review: What research are you currently doing in multi-modal cognitive computing? What are your future research goals?

Li Xuelong: Thank you for the question. My current focus is on multimodal cognitive computing in Vicinagearth Security. Security in the traditional sense usually refers to urban security. Today, the space of human activity has expanded to low altitude, the ground, and underwater, and we need to establish a three-dimensional security and defense system in the near-ground space to carry out a series of practical tasks such as cross-domain detection and autonomous unmanned systems.

A major problem facing Vicinagearth Security is how to intelligently process the large amount of multi-modal data generated by different sensors, for example enabling machines to understand, from a human perspective, targets observed simultaneously by unmanned aerial vehicles and ground monitoring equipment. This involves multi-modal cognitive computing and its combination with intelligent optoelectronics.

In the future, I will continue to study the application of multi-modal cognitive computing in Vicinagearth Security, hoping to bridge data acquisition and processing and to make rational use of "positive-incentive noise" (Pi-Noise) to establish a Vicinagearth Security system supported by multi-modal cognitive computing and intelligent optoelectronics.

The above is the detailed content of IEEE Fellow Li Xuelong: Multimodal cognitive computing is the key to realizing general artificial intelligence. For more information, please follow other related articles on the PHP Chinese website!


This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

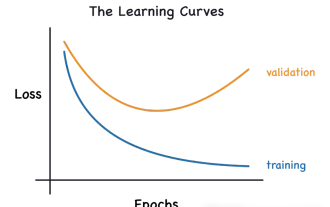

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Facing lag, slow mobile data connection on iPhone? Typically, the strength of cellular internet on your phone depends on several factors such as region, cellular network type, roaming type, etc. There are some things you can do to get a faster, more reliable cellular Internet connection. Fix 1 – Force Restart iPhone Sometimes, force restarting your device just resets a lot of things, including the cellular connection. Step 1 – Just press the volume up key once and release. Next, press the Volume Down key and release it again. Step 2 – The next part of the process is to hold the button on the right side. Let the iPhone finish restarting. Enable cellular data and check network speed. Check again Fix 2 – Change data mode While 5G offers better network speeds, it works better when the signal is weaker

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

I cry to death. The world is madly building big models. The data on the Internet is not enough. It is not enough at all. The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these data voracious eaters. This problem is particularly prominent in multi-modal tasks. At a time when nothing could be done, a start-up team from the Department of Renmin University of China used its own new model to become the first in China to make "model-generated data feed itself" a reality. Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself. What is a model? Awaker 1.0, a large multi-modal model that just appeared on the Zhongguancun Forum. Who is the team? Sophon engine. Founded by Gao Yizhao, a doctoral student at Renmin University’s Hillhouse School of Artificial Intelligence.

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

The latest video of Tesla's robot Optimus is released, and it can already work in the factory. At normal speed, it sorts batteries (Tesla's 4680 batteries) like this: The official also released what it looks like at 20x speed - on a small "workstation", picking and picking and picking: This time it is released One of the highlights of the video is that Optimus completes this work in the factory, completely autonomously, without human intervention throughout the process. And from the perspective of Optimus, it can also pick up and place the crooked battery, focusing on automatic error correction: Regarding Optimus's hand, NVIDIA scientist Jim Fan gave a high evaluation: Optimus's hand is the world's five-fingered robot. One of the most dexterous. Its hands are not only tactile

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

Recently, the military circle has been overwhelmed by the news: US military fighter jets can now complete fully automatic air combat using AI. Yes, just recently, the US military’s AI fighter jet was made public for the first time and the mystery was unveiled. The full name of this fighter is the Variable Stability Simulator Test Aircraft (VISTA). It was personally flown by the Secretary of the US Air Force to simulate a one-on-one air battle. On May 2, U.S. Air Force Secretary Frank Kendall took off in an X-62AVISTA at Edwards Air Force Base. Note that during the one-hour flight, all flight actions were completed autonomously by AI! Kendall said - "For the past few decades, we have been thinking about the unlimited potential of autonomous air-to-air combat, but it has always seemed out of reach." However now,

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing