Meta releases NPM, the first 'non-parametric' masked language model: beating GPT-3, which has 500 times as many parameters

Although the performance of large language models in NLP is impressive, it comes with serious costs: these models are expensive to train, difficult to update, and struggle with long-tail knowledge.

In addition, language models usually predict with a softmax layer over a limited vocabulary. Such a layer rarely outputs rare words or phrases, which greatly limits the expressiveness of the model.

To address the long-tail problem, researchers from the University of Washington, Meta AI and the Allen Institute for Artificial Intelligence recently proposed the first NonParametric Masked language model (NPM), which replaces the softmax output with a non-parametric distribution over every phrase in a reference corpus.

Paper link: https://arxiv.org/abs/2212.01349

Code link: https://github.com/facebookresearch/NPM

NPM can be trained efficiently with a contrastive objective and an in-batch approximation of retrieval over the complete corpus.

The researchers ran a zero-shot evaluation on nine closed-set tasks and seven open-set tasks, including temporal shift and word-level translation tasks that emphasize the need to predict new facts or rare phrases.

The results show that NPM significantly outperforms much larger parametric models, whether or not those models use a retrieve-and-generate approach. For example, it beats GPT-3, which has 500 times more parameters, and OPT 13B, which has 37 times more parameters. NPM is particularly good at handling rare patterns (word senses or facts) and at predicting rare or barely seen words (such as non-Latin scripts).

The first non-parametric language model

Although existing retrieve-and-generate approaches can alleviate the long-tail problem, the final prediction step of these models still needs a softmax layer to predict tokens, so the problem is not fundamentally solved.

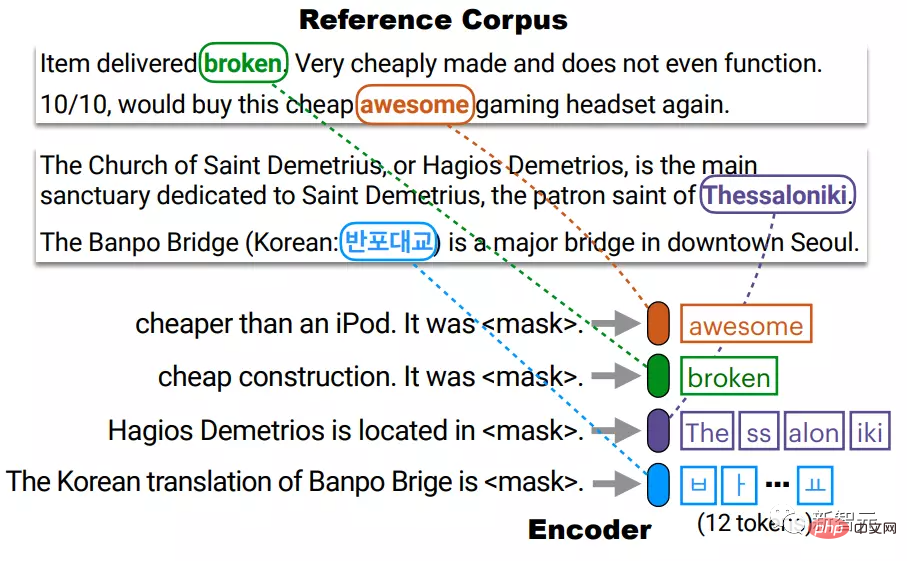

NPM consists of an encoder and a reference corpus. The encoder maps the text into a fixed-size vector, and NPM then retrieves a phrase from the corpus to fill in [MASK].

In other words, NPM outputs a non-parametric distribution over phrases instead of a softmax over a fixed output vocabulary.

But training a non-parametric model raises two key problems:

1. Retrieving over the complete corpus during training is very time-consuming and compute-intensive. The researchers address this with an in-batch approximation of full-corpus retrieval.

2. Learning to predict phrases of arbitrary length without a decoder is difficult. The researchers address this by extending span masking and using a phrase-level contrastive objective.

In short, NPM completely removes the softmax over the output vocabulary and achieves an effectively unbounded output space by predicting n-grams of any length.

The resulting model can predict "extremely rare" or even "completely unseen" words (such as Korean words) and can effectively support an unlimited vocabulary size, which existing models cannot do.

NPM Method

The key idea of NPM is to use an encoder to map all phrases in the corpus into a dense vector space. At inference time, given a query containing [MASK], the encoder is used to find the nearest phrase in the corpus, which then fills in [MASK].

Encoder-only models are very competitive representation models, but existing encoder-only models cannot make predictions with an unknown number of tokens, which restricts their use without fine-tuning.

NPM solves this problem by retrieving a phrase to fill any number of tokens in [MASK].

Inference

The encoder maps each distinct phrase in the reference corpus C into a dense vector space.

At test time, the encoder maps the masked query into the same vector space and fills [MASK] with phrases retrieved from C.

Here, C does not have to be the same as the training corpus and can be replaced or extended at test time without retraining the encoder.
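The retrieval step described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `toy_encode` is a sparse bag-of-words stand-in for the trained dense encoder, and the corpus sentences, `build_index` and `fill_mask` are hypothetical names, not part of the NPM code base. The point is only that extending C changes the index while the encoder stays untouched.

```python
import math
from collections import Counter

def toy_encode(text):
    """Hypothetical stand-in for the trained encoder: an L2-normalised bag of words."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()}

def similarity(u, v):
    """Inner product of two sparse vectors stored as dicts."""
    return sum(weight * v.get(word, 0.0) for word, weight in u.items())

def build_index(corpus):
    """corpus: list of (phrase, context in which the phrase is replaced by [MASK])."""
    return [(phrase, toy_encode(context)) for phrase, context in corpus]

def fill_mask(query, index):
    """Return the phrase whose context vector is nearest to the masked query."""
    q = toy_encode(query)
    best_phrase, _ = max(index, key=lambda entry: similarity(q, entry[1]))
    return best_phrase

# Illustrative reference corpus C (made-up sentences).
corpus = [
    ("the Seattle Seahawks", "in 2010 [MASK] made the playoffs with a losing record"),
    ("Thessaloniki", "[MASK] is the second largest city in Greece"),
]
index = build_index(corpus)
answer_1 = fill_mask("the second largest city in Greece is [MASK]", index)

# Extend C at test time: only the index is rebuilt, the encoder is unchanged.
corpus.append(("Athens", "[MASK] is the capital of Greece"))
index = build_index(corpus)
answer_2 = fill_mask("the capital of Greece is [MASK]", index)
print(answer_1, "|", answer_2)
```

A real system would replace `toy_encode` with the trained encoder and the linear scan with an approximate nearest-neighbor index.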

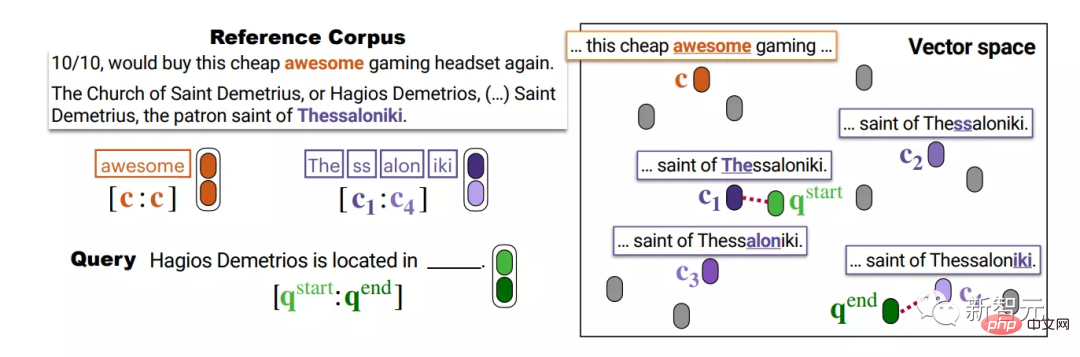

In practice, there are a large number of phrases in the corpus, and indexing all of them is expensive.

For example, if we consider phrases of at most l tokens (l≈20), we need to index roughly l×|C| vectors, which is costly in both time and storage.

The researchers instead index each distinct token in C, reducing the size of the index from l×|C| to |C|. At test time, the non-parametric distribution over all phrases is approximated by running separate k-nearest-neighbor searches for the start and the end of the phrase.
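To make the size difference concrete, here is a small arithmetic sketch. It assumes, for simplicity, that the corpus is one sequence of `num_tokens` tokens; the function names are hypothetical.

```python
def phrase_index_size(num_tokens, max_len):
    """Number of spans of length 1..max_len in one sequence of num_tokens tokens."""
    return sum(num_tokens - k + 1 for k in range(1, min(max_len, num_tokens) + 1))

def token_index_size(num_tokens):
    """One vector per token position."""
    return num_tokens

num_tokens, max_len = 1000, 20
n_phrase = phrase_index_size(num_tokens, max_len)   # close to max_len * num_tokens
n_token = token_index_size(num_tokens)
print(n_phrase, n_token, n_phrase / n_token)
```

For 1,000 tokens and l = 20, the phrase-level index holds 19,810 vectors, just under l×|C| = 20,000, while the token-level index holds 1,000.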

For example, the phrase Thessaloniki, which consists of 4 BPE tokens, is represented by the concatenation of c1 and c4, which correspond to the start (The) and the end (iki) of the phrase respectively.

A query is likewise represented by two vectors, q_start and q_end, in the same vector space. Each vector is used to retrieve the starts and ends of plausible phrases, and the results are then aggregated.

This works only if the start and end representations are good enough, that is, q_start is close enough to c1 and q_end is close enough to c4. Training is designed to ensure this.
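The start/end retrieval can be sketched as follows. This is a toy under stated assumptions, not the authors' implementation: the token vectors are hand-written, the middle BPE pieces ("ssal", "on") are a hypothetical split, and summing exponentiated start and end scores per phrase is one plausible way to aggregate.

```python
import math
from collections import defaultdict

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def top_k(query, token_vectors, k):
    """Indices of the k token vectors with the largest inner product with query."""
    order = sorted(range(len(token_vectors)),
                   key=lambda i: dot(query, token_vectors[i]), reverse=True)
    return order[:k]

def retrieve_phrase(q_start, q_end, tokens, token_vectors, k=2, max_len=4):
    """Combine separate kNN searches for the start and end into phrase scores."""
    starts = top_k(q_start, token_vectors, k)
    ends = top_k(q_end, token_vectors, k)
    scores = defaultdict(float)
    for i in starts:
        for j in ends:
            if i <= j < i + max_len:  # valid span of at most max_len tokens
                phrase = "".join(tokens[i:j + 1])  # BPE pieces are simply concatenated
                scores[phrase] += math.exp(dot(q_start, token_vectors[i])
                                           + dot(q_end, token_vectors[j]))
    return max(scores, key=scores.get) if scores else None

# Token-level index: one hand-written vector per token position (illustrative values).
tokens = ["visit", "The", "ssal", "on", "iki", "today"]
token_vectors = [
    [0.0, 0.0, 1.0],  # visit
    [1.0, 0.0, 0.0],  # The  (c1, start of the phrase)
    [0.3, 0.3, 0.0],  # ssal
    [0.2, 0.4, 0.0],  # on
    [0.0, 1.0, 0.0],  # iki  (c4, end of the phrase)
    [0.0, 0.0, 1.0],  # today
]
q_start = [0.9, 0.1, 0.0]  # close to c1
q_end = [0.1, 0.9, 0.0]    # close to c4
best = retrieve_phrase(q_start, q_end, tokens, token_vectors)
print(best)
```

Only spans whose start is among the top-k starts and whose end is among the top-k ends are ever scored, which is what keeps the index at one vector per token.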

Training

NPM is trained on unlabeled text data to ensure that the encoder maps the text into a good dense vector space.

There are two main problems in training NPM: 1) retrieval over the complete corpus would make training very time-consuming; 2) [MASK] must be filled with phrases of arbitrary length rather than single tokens.

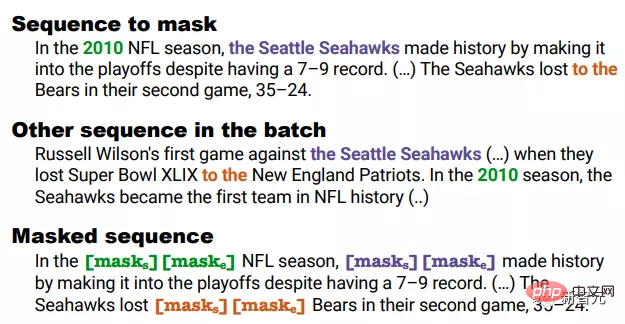

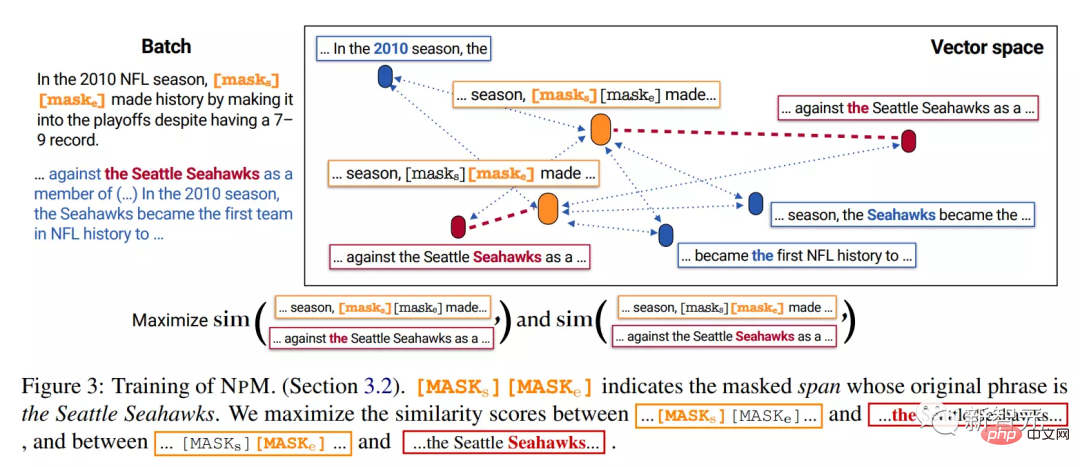

1. Masking

Span masking masks contiguous spans of tokens whose lengths are sampled from a geometric distribution.

The researchers expanded on this:

1) A span is masked only if it co-occurs in another sequence in the batch, which guarantees in-batch positives during training.

For example, the masked spans 2010, the Seattle Seahawks, and to the all co-occur in another sequence.

The bigram "game," however, cannot be masked as a unit. Although both of its tokens appear in the two sequences, they do not co-occur as a bigram.

2) Instead of replacing each token in the span with [MASK], the entire span is replaced with two special tokens, [MASKs][MASKe].

In the example above, every masked span is replaced with [MASKs][MASKe] regardless of its length, so that a start vector and an end vector can be obtained for each span, which makes inference more convenient.
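The two extensions to span masking can be sketched in a few lines. The sentences, the parameter values (`p`, `max_len`) and the helper names are assumptions chosen for illustration; they are not taken from the paper or its code.

```python
import random

def sample_span_length(rng, p=0.5, max_len=10):
    """Draw a span length from a geometric distribution, truncated at max_len."""
    length = 1
    while length < max_len and rng.random() > p:
        length += 1
    return length

def occurs_in(span, tokens):
    """True if span appears as a contiguous sub-sequence of tokens."""
    n = len(span)
    return any(tokens[i:i + n] == span for i in range(len(tokens) - n + 1))

def mask_span(tokens, start, length):
    """Replace tokens[start:start+length] with two special tokens, whatever the length."""
    return tokens[:start] + ["[MASKs]", "[MASKe]"] + tokens[start + length:]

# Two made-up sequences from the same batch.
seq_a = ["in", "2010", "the", "Seattle", "Seahawks", "won", "the", "game"]
seq_b = ["fans", "of", "the", "Seattle", "Seahawks", "watched", "every", "game", "in", "2010"]

span = seq_a[2:5]                       # the Seattle Seahawks
can_mask = occurs_in(span, seq_b)       # co-occurs in seq_b, so it has an in-batch positive
masked = mask_span(seq_a, 2, 3) if can_mask else seq_a

bigram = seq_a[6:8]                     # "the game": both words occur in seq_b, but not together
bigram_ok = occurs_in(bigram, seq_b)

length = sample_span_length(random.Random(0))
print(masked, bigram_ok, length)
```

Here the three-token span collapses to the two tokens [MASKs][MASKe], and the bigram is rejected because it never appears contiguously in the other sequence.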

2. Training objective

Suppose the masked span is the Seattle Seahawks. At test time, the model should retrieve the phrase the Seattle Seahawks from other sequences in the reference corpus.

In the inference stage, the model obtains vectors from [MASKs] and [MASKe] and uses them to retrieve the beginning and end of the phrase from the corpus respectively.

Therefore, the training objective should encourage the vector of [MASKs] to be closer to the "the" in the Seattle Seahawks and farther from other tokens. It should not match the "the" in just any phrase, such as the one in "become the first".

This is done by training the model with the other sequences in the batch as an approximation of the full corpus. Specifically, the model is trained to retrieve the start and end points of the Seattle Seahawks span from other sequences in the same batch.

It should be noted that this mask strategy ensures that each masked span has a co-occurring segment in a batch.
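As a rough illustration of such an objective, the sketch below computes a generic in-batch contrastive loss for the [MASKs] vector. It is an InfoNCE-style formulation chosen as an assumption; the paper's exact loss, temperature and normalisation may differ, and the vectors are made up.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def in_batch_contrastive_loss(query, candidates, positive_ids, temperature=1.0):
    """Negative log of the probability mass assigned to the positive tokens.

    candidates are the vectors of all tokens in the other sequences of the batch;
    positive_ids mark the tokens that start (or end) the masked span.
    """
    logits = [dot(query, c) / temperature for c in candidates]
    shift = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    positive_mass = sum(exps[i] for i in positive_ids)
    return -math.log(positive_mass / sum(exps))

# In-batch candidates (illustrative 2-d vectors).
candidates = [
    [1.0, 0.0],   # "the" in "the Seattle Seahawks"  (positive for [MASKs])
    [0.0, 1.0],   # "the" in "become the first"      (negative)
    [-1.0, 0.0],  # an unrelated token               (negative)
]
loss_good = in_batch_contrastive_loss([2.0, 0.0], candidates, positive_ids=[0])
loss_bad = in_batch_contrastive_loss([0.0, 2.0], candidates, positive_ids=[0])
print(loss_good < loss_bad)
```

The loss is lower when the [MASKs] vector points toward the "the" that starts the masked span than when it points toward the "the" in an unrelated phrase, which is the behaviour the objective is meant to encourage. The same computation would be repeated for [MASKe] with the end tokens as positives.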

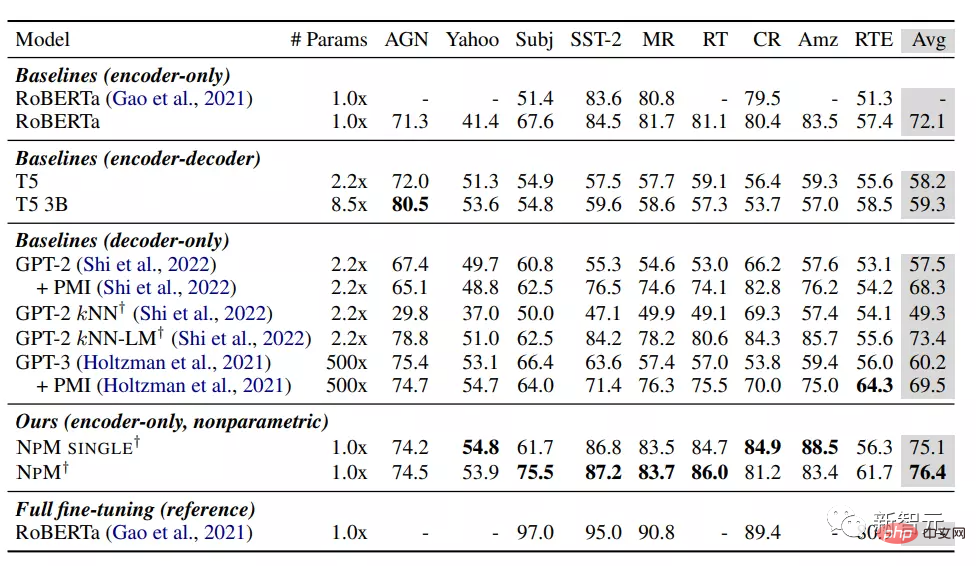

Experiments

From the results, NPM performs better than other baseline models under the zero-shot setting.

Among the parametric models, RoBERTa achieved the best performance, unexpectedly surpassing models including GPT-3. This is probably because the bidirectionality of the encoder-only model plays a crucial role, which also suggests that causal language models may not be a suitable choice for classification.

The kNN-LM method, which adds a non-parametric component to a parametric model, performs better than all other baselines. Nonetheless, relying solely on retrieval (kNN) performs poorly with GPT-2, which shows the limits of using kNN only at inference time.

NPM SINGLE and NPM both significantly outperformed all baselines, achieving consistently superior performance on all datasets. This shows that non-parametric models are very competitive even for tasks that do not explicitly require external knowledge.

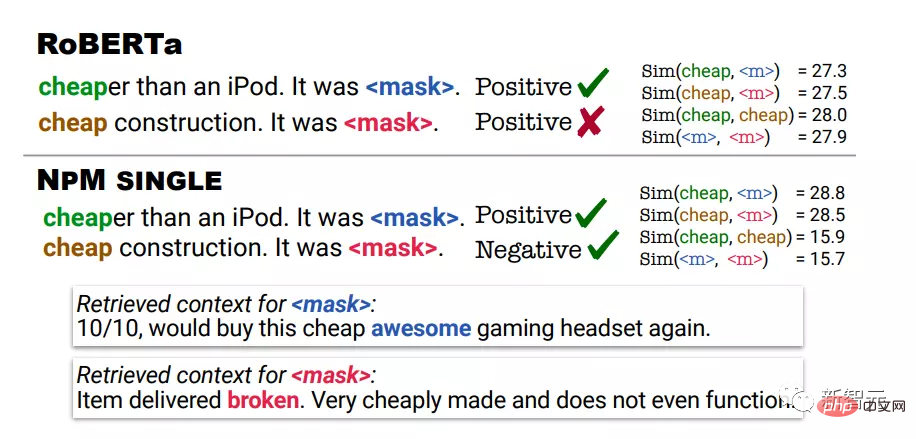

The qualitative analysis compares the predictions of RoBERTa and NPM on a sentiment analysis task. In the first example, cheap means inexpensive; in the second example, cheap means poor quality.

RoBERTa predicted positive for both examples, while NPM made the correct prediction by retrieving contexts in which cheap is used in the same sense as in the input.

The representations output by NPM also give better word sense disambiguation. For example, RoBERTa assigns a high similarity score between cheap (inexpensive) and cheap (poor quality).

NPM, on the other hand, assigns a low similarity score between the two senses of cheap. This shows that non-parametric training with a contrastive objective is effective and improves representation learning, something that a training-free approach such as kNN inference cannot achieve.

Reference: https://arxiv.org/abs/2212.01349

The above is the detailed content of Meta releases the first 'non-parametric' mask language model NPM: beating GPT-3 with 500 times the number of parameters. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Understand Tokenization in one article!

Apr 12, 2024 pm 02:31 PM

Understand Tokenization in one article!

Apr 12, 2024 pm 02:31 PM

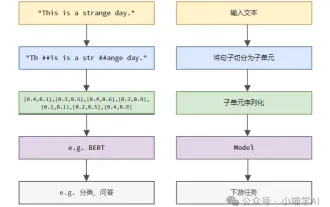

Language models reason about text, which is usually in the form of strings, but the input to the model can only be numbers, so the text needs to be converted into numerical form. Tokenization is a basic task of natural language processing. It can divide a continuous text sequence (such as sentences, paragraphs, etc.) into a character sequence (such as words, phrases, characters, punctuation, etc.) according to specific needs. The units in it Called a token or word. According to the specific process shown in the figure below, the text sentences are first divided into units, then the single elements are digitized (mapped into vectors), then these vectors are input to the model for encoding, and finally output to downstream tasks to further obtain the final result. Text segmentation can be divided into Toke according to the granularity of text segmentation.

What to do if npm react installation error occurs

Dec 27, 2022 am 11:25 AM

What to do if npm react installation error occurs

Dec 27, 2022 am 11:25 AM

Solution to npm react installation error: 1. Open the "package.json" file in the project and find the dependencies object; 2. Move "react.json" to "devDependencies"; 3. Run "npm audit in the terminal --production" to fix the warning.

JavaScript package managers compared: Npm vs Yarn vs Pnpm

Aug 09, 2022 pm 04:22 PM

JavaScript package managers compared: Npm vs Yarn vs Pnpm

Aug 09, 2022 pm 04:22 PM

This article will take you through the three JavaScript package managers (npm, yarn, pnpm), compare these three package managers, and talk about the differences and relationships between npm, yarn, and pnpm. I hope it will be helpful to everyone. Please help, if you have any questions please point them out!

An article analyzing package.json and package-lock.json

Sep 01, 2022 pm 08:02 PM

An article analyzing package.json and package-lock.json

Sep 01, 2022 pm 08:02 PM

This article will give you a detailed explanation of the package.json and package-lock.json files. I hope it will be helpful to you!

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

Three secrets for deploying large models in the cloud

Apr 24, 2024 pm 03:00 PM

Three secrets for deploying large models in the cloud

Apr 24, 2024 pm 03:00 PM

Compilation|Produced by Xingxuan|51CTO Technology Stack (WeChat ID: blog51cto) In the past two years, I have been more involved in generative AI projects using large language models (LLMs) rather than traditional systems. I'm starting to miss serverless cloud computing. Their applications range from enhancing conversational AI to providing complex analytics solutions for various industries, and many other capabilities. Many enterprises deploy these models on cloud platforms because public cloud providers already provide a ready-made ecosystem and it is the path of least resistance. However, it doesn't come cheap. The cloud also offers other benefits such as scalability, efficiency and advanced computing capabilities (GPUs available on demand). There are some little-known aspects of deploying LLM on public cloud platforms

What should I do if node cannot use npm command?

Feb 08, 2023 am 10:09 AM

What should I do if node cannot use npm command?

Feb 08, 2023 am 10:09 AM

The reason why node cannot use the npm command is because the environment variables are not configured correctly. The solution is: 1. Open "System Properties"; 2. Find "Environment Variables" -> "System Variables", and then edit the environment variables; 3. Find the location of nodejs folder; 4. Click "OK".

Conveniently trained the biggest ViT in history? Google upgrades visual language model PaLI: supports 100+ languages

Apr 12, 2023 am 09:31 AM

Conveniently trained the biggest ViT in history? Google upgrades visual language model PaLI: supports 100+ languages

Apr 12, 2023 am 09:31 AM

The progress of natural language processing in recent years has largely come from large-scale language models. Each new model released pushes the amount of parameters and training data to new highs, and at the same time, the existing benchmark rankings will be slaughtered. ! For example, in April this year, Google released the 540 billion-parameter language model PaLM (Pathways Language Model), which successfully surpassed humans in a series of language and reasoning tests, especially its excellent performance in few-shot small sample learning scenarios. PaLM is considered to be the development direction of the next generation language model. In the same way, visual language models actually work wonders, and performance can be improved by increasing the scale of the model. Of course, if it is just a multi-tasking visual language model