Technology peripherals

Technology peripherals

AI

AI

Prompt offensive and defensive battle! Columbia University proposed BPE word-making method, which can bypass the review mechanism. DALL-E 2 has been tricked

Prompt offensive and defensive battle! Columbia University proposed BPE word-making method, which can bypass the review mechanism. DALL-E 2 has been tricked

Prompt offensive and defensive battle! Columbia University proposed BPE word-making method, which can bypass the review mechanism. DALL-E 2 has been tricked

What is the most valuable thing in 2022? prompt!

Text-guided image generation (text-guided image generation) model, such as DALL-E 2, has been popular among netizens since it became popular.

But if you want the model to generate clear and usable target images, you must master the correct "spell", that is, the prompt must be carefully designed before it can be used. Some people even set up a website to sell prompts

If the prompt is an evil spell, the generated pictures may be "suspected of violations."

Although DALL-E 2 has set up various mechanisms to prevent the model from being abused when it was released, such as deleting violent, hateful or inappropriate images from the training data; using technical means to prevent super-generated faces. Realistic photos, especially of public figures.

During the generation phase, DALL-E 2 also sets a prompt filter that does not allow user-entered prompt words to contain violent, adult or political content.

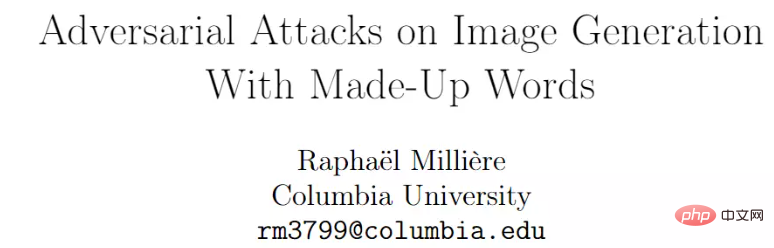

But recently, researchers at Columbia University discovered that some seemingly gibberish words can be added to the prompt, making it impossible for the filter to recognize the meaning of the word, but the AI system can eventually return meaningful generated images.

Paper link: https://arxiv.org/pdf/2208.04135.pdf

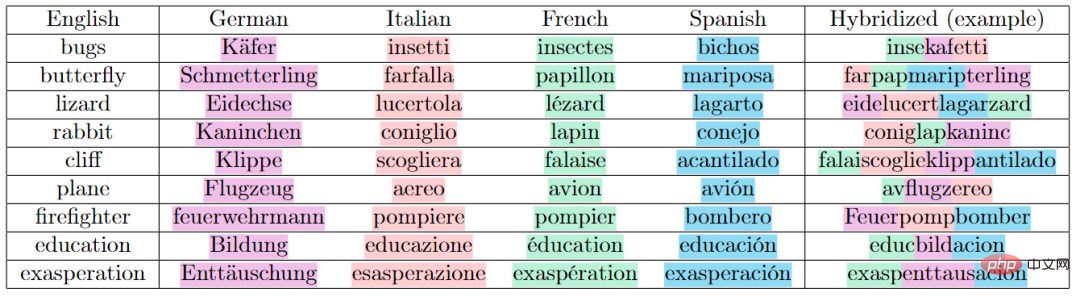

The author proposes two methods of constructing prompts. The first one is called It is called macaronic prompting, where the original meaning of the word macaronic refers to the mixing of words from multiple languages to generate new vocabulary. For example, in Pakistan, mixed words of Urdu and English are very common.

The training corpus of DALL-E 2 is usually data collected from the Internet. The process of establishing conceptual connections between text and images will more or less involve multi-language learning, so that the trained model has The ability to recognize concepts in multiple languages simultaneously.

So you can use multi-language combinations to form new words, bypass the prompt filter designed by humans, and achieve the purpose of fighting against attacks.

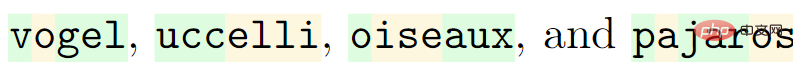

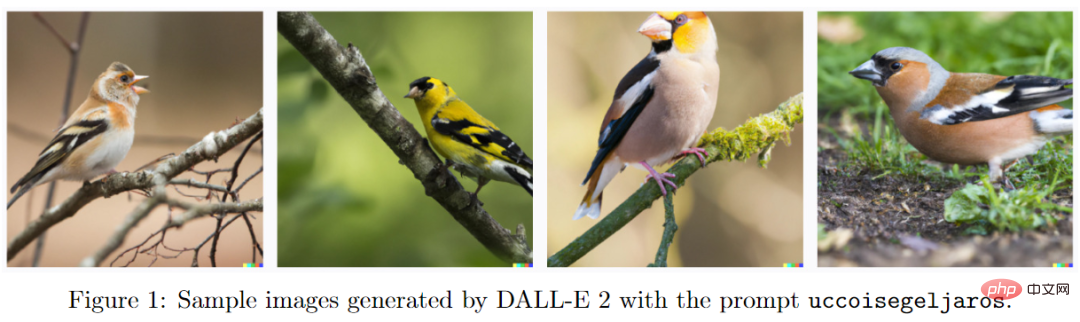

For example, the word "birds" is Vögel in German, uccelli in Italian, oiseaux in French, and pájaros in Spanish. The CLIP model uses the byte pair encoding (BPE) algorithm to input prompt sentences After word segmentation, it can be split into multiple subwords.

After rearranging the subwords into new words, such as uccoisegeljaros, DALL-E 2 can still generate images of birds, but humans cannot understand the word at all. meaning.

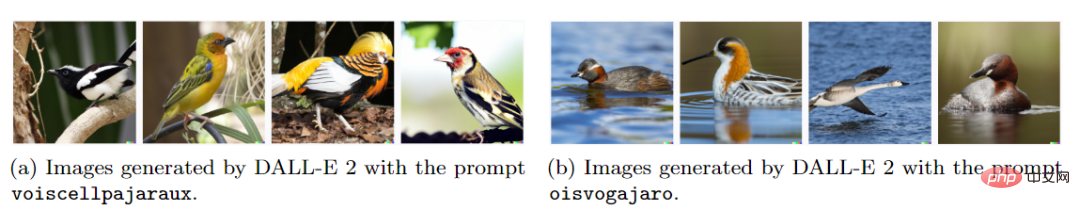

Even if the boundaries of subword are not strictly adhered to, for example, if replaced with voiscellpajaraux and oisvogajaro, the model can still generate bird images.

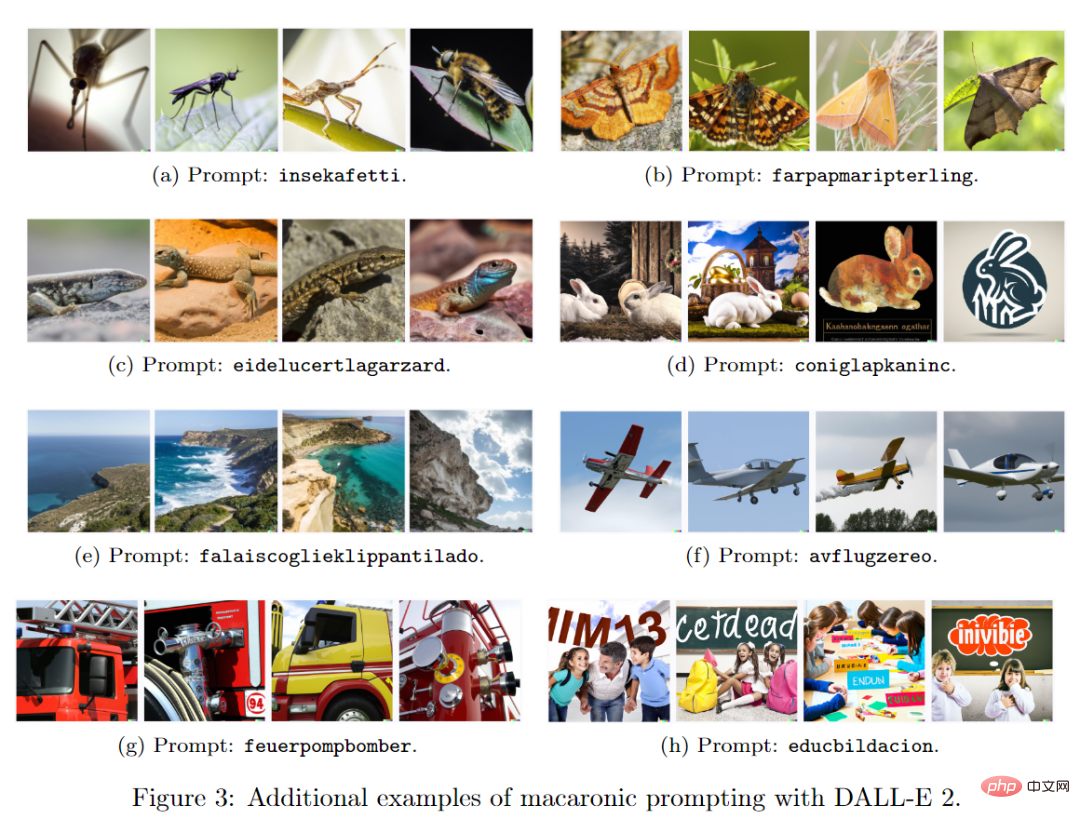

In addition to birds, researchers found that the method of combining multiple languages can achieve good results in different image domains, and the image generation results show a very high consistency.

The generation of relevant images from the animal kingdom to landscapes, vehicles, scenes, and emotions is a breeze.

Although different text-guided image generation models have different architectures, training data, and word segmentation methods, in principle, macaronic hints can be applied to any multilingual data The same effect can also be found in trained models, such as the DALL-E mini model.

It is worth noting that despite the similar names, DALL-E 2 and DALL-E mini are quite different. They have different architectures (DALL-E mini does not use a diffusion model), are trained on different data sets, and use different tokenizers (DALL-E mini uses the BART tokenizer, which may behave differently than the CLIP tokenizer split words).

Despite these differences, macaronic hints can still work on both models, and the principles behind them need to be further studied.

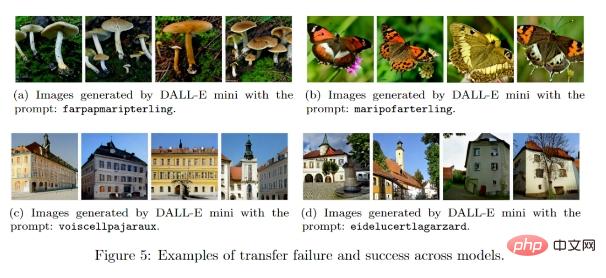

But not all macaronic cues transfer appropriately between different models. For example, while farpapmaripterling produced a butterfly image for DALL-E 2 as expected, it produced a mushroom in DALL-E mini. image.

The researchers speculate that perhaps larger models trained on larger datasets are more susceptible to macaronic cues because they Stronger associative relationships are learned between subword units and visual concepts in different languages.

This may explain why some macaronic tips that produce the expected results in DALL-E 2 don't work in DALL-E mini, but there are few examples to the contrary.

This trend may not be good news, as large-scale models may be more vulnerable to adversarial attacks using macaronic hints.

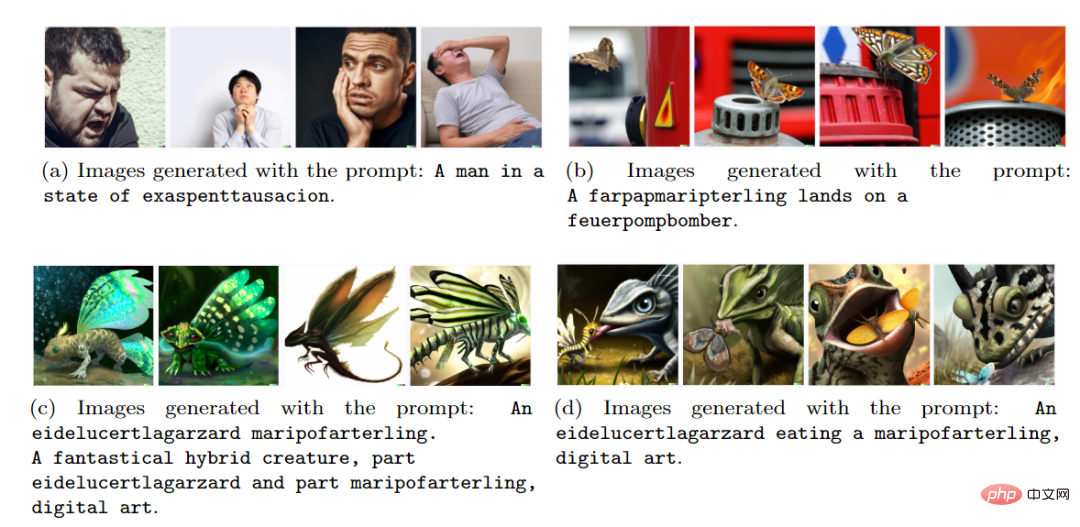

In addition to using a single compound word as a prompt, compound words can also be embedded into English syntax to form sentences, and the effect of generating images is similar to the original words.

# And another advantage of compound words is that they can be combined to produce more specific and complex scenes. While complex macaronic cues need to conform to the syntactic structure of English, making the generated results easier to interpret than cues using synthetic strings, the information conveyed to the model is still relatively vague.

For most people, without prior exposure to macaronic cues and knowledge of the language used for hybridization, it can be difficult to guess what kind of scenario would result from the prompt An eidelucertlagarzard eating a maripofarterling .

Furthermore, such complex prompts will not trigger blacklist-based content filters, despite the fact that they use ordinary English words, as long as the censored concepts are sufficiently "encrypted" using macaronic methods .

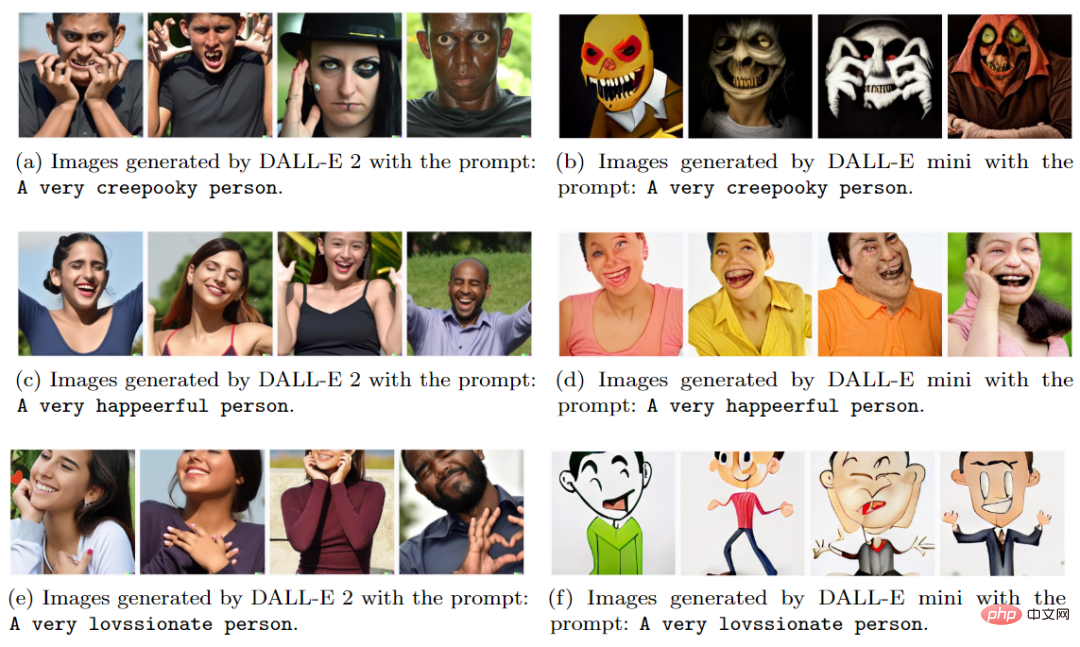

macaronic tip It is not necessary to combine subwords in multiple languages. Combining them within a single language can also produce a valid visual concept, but people familiar with English may guess the intended effect of the string, such as It is easy to guess that the word happy is a compound word of happy and cheerful.

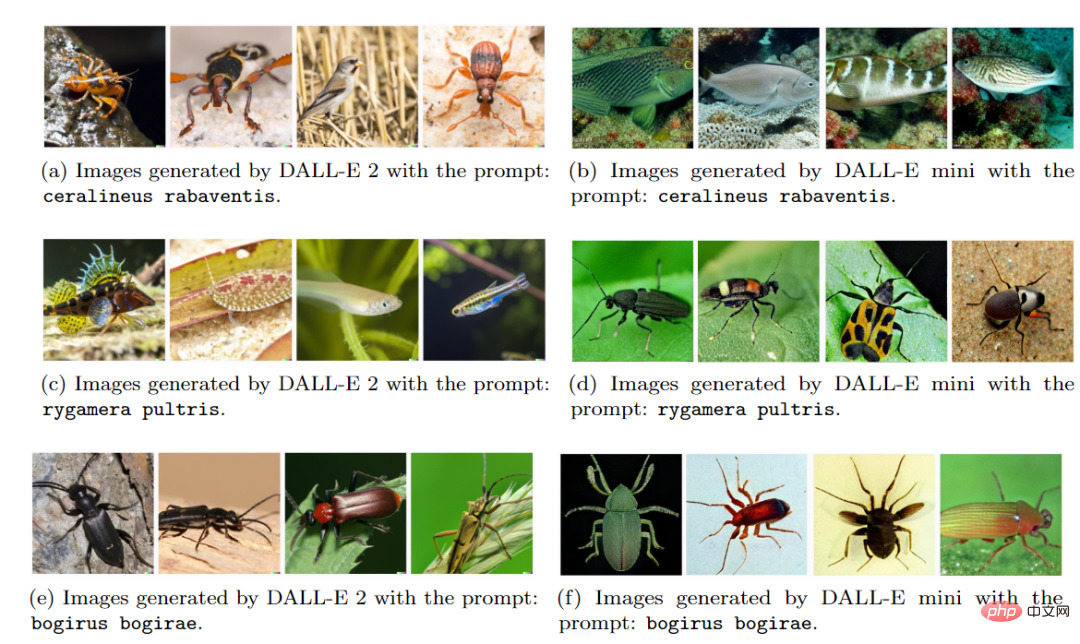

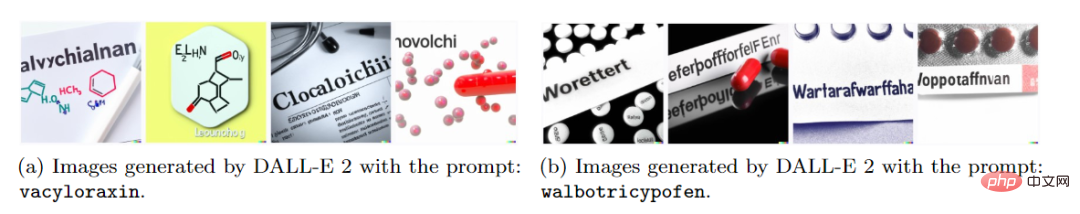

The second method is called Evocative Prompting. Unlike macaronic, evocative does not need to trigger visual association from existing word combinations, but from specific fields. The statistical significance of certain letter combinations is "evoked" to create a new word.

Referring to the Binomial Nomenclature in biological classification, you can create a new "pseudo-Latin word" based on the "genus name" and "species epithet", and DALL-E can create a new "pseudo-Latin word" based on the corresponding Topics generate corresponding species.

# New drug pictures can also be generated according to the naming rules of drugs.

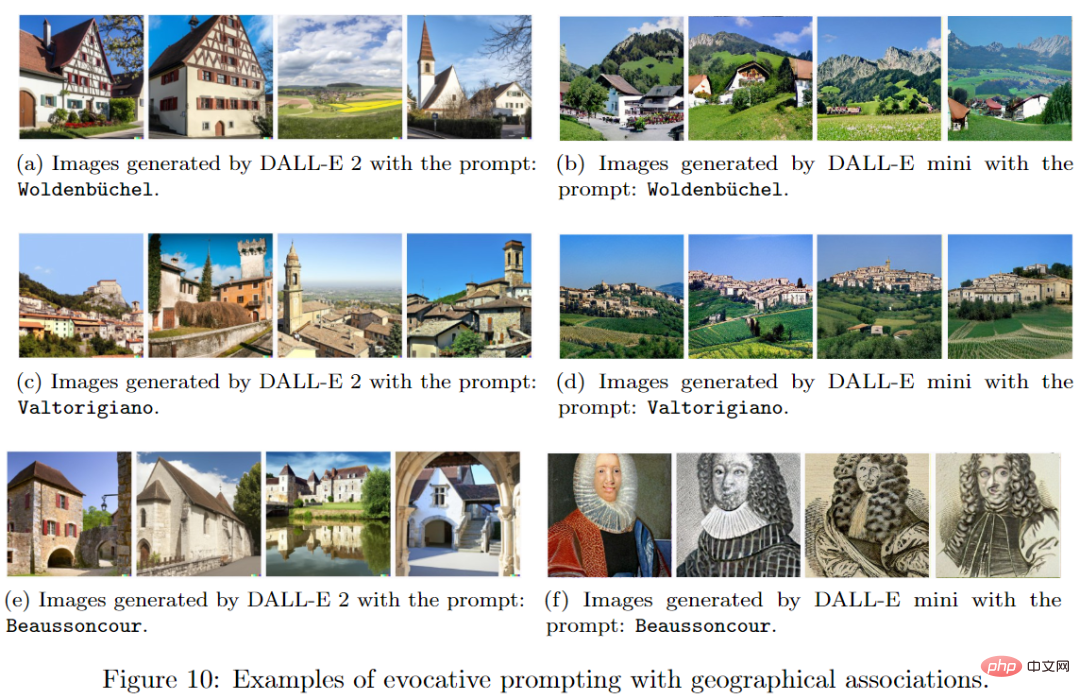

Evocative cues can also be applied to associations between specific features of a language and visual features related to the place and culture of the corresponding language. For example, based on the name of the building, the model can infer which country's style it is. For example, the scene generated by Woldenbüchel looks like a German or Austrian village; Valtorigiano looks like an ancient Italian town; Beaussoncour looks like a historical town in France.

However, they are not necessarily all buildings. For example, the last image generated with DALL-E mini is a 17th-century French portrait, not a location in France, but The connection with French culture has been preserved.

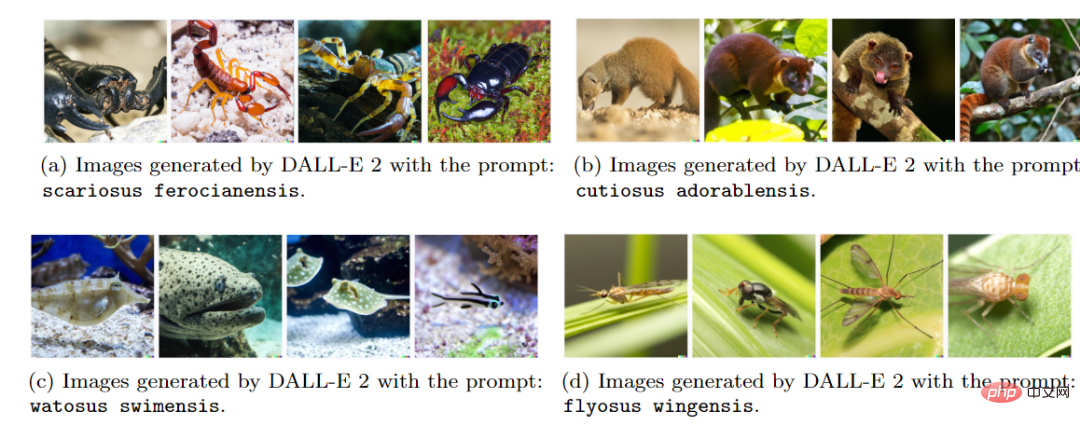

Evocative hints can also be combined with lexical hybridization to gain more control over the specific features of the output.

Introducing chunks of English words into the pseudo-Latin nomenclature will cause DALL-E 2 to generate images of animals with specific attributes. For example, the prompt word scariosus ferocianensis combines scary (scary) and ferocious (ferocious) with the pseudo-Latin terms. Combined, they can produce images of traditionally fearsome "reptiles" such as scorpions.

cutiosus adorablensis combines cute and adorable with pseudo-Latin terms to generate images of cute mammals in the traditional sense; watosus swimensis combines water and swimming (swimming) combined with pseudo-Latin affixes can produce images of aquatic animals; flyosus wingensis combines fly (fly) and winged (winged) with pseudo-Latin affixes to produce images of flying insects.

In principle, the vocabulary generated by the macaronic method can provide a simple and seemingly reliable method to bypass the prompt filter. People with ulterior motives can use it to generate harmful, offensive, and illegal words. or other sensitive content, including violent, hateful, racist, sexist or pornographic images, as well as images that may infringe intellectual property rights or depict real individuals.

While companies that provide image generation services have made extensive efforts to prevent the generation of this type of output in accordance with their content policies, macaronic prompts can still pose a significant threat to the security protocols of commercial image generation systems. .

The threat posed by evocative cues is less obvious, because it does not provide a very effective and reliable way to trigger specific visual associations for strings, and it is mostly limited to broad morphological features of words or languages. Vague associations with related concepts.

In general, macaronic tips are more operable than evocative tips, and keyword-based blacklist content filtering in this type of model is not enough to resist attacks.

Is DALL-E 2 going to go dark?

The above is the detailed content of Prompt offensive and defensive battle! Columbia University proposed BPE word-making method, which can bypass the review mechanism. DALL-E 2 has been tricked. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

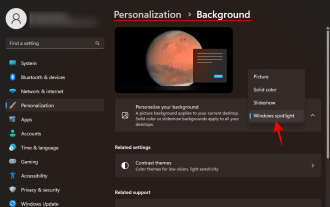

How to clear desktop background recent image history in Windows 11

Apr 14, 2023 pm 01:37 PM

How to clear desktop background recent image history in Windows 11

Apr 14, 2023 pm 01:37 PM

<p>Windows 11 improves personalization in the system, allowing users to view a recent history of previously made desktop background changes. When you enter the personalization section in the Windows System Settings application, you can see various options, changing the background wallpaper is one of them. But now you can see the latest history of background wallpapers set on your system. If you don't like seeing this and want to clear or delete this recent history, continue reading this article, which will help you learn more about how to do it using Registry Editor. </p><h2>How to use registry editing

How to Download Windows Spotlight Wallpaper Image on PC

Aug 23, 2023 pm 02:06 PM

How to Download Windows Spotlight Wallpaper Image on PC

Aug 23, 2023 pm 02:06 PM

Windows are never one to neglect aesthetics. From the bucolic green fields of XP to the blue swirling design of Windows 11, default desktop wallpapers have been a source of user delight for years. With Windows Spotlight, you now have direct access to beautiful, awe-inspiring images for your lock screen and desktop wallpaper every day. Unfortunately, these images don't hang out. If you have fallen in love with one of the Windows spotlight images, then you will want to know how to download them so that you can keep them as your background for a while. Here's everything you need to know. What is WindowsSpotlight? Window Spotlight is an automatic wallpaper updater available from Personalization > in the Settings app

How to use image semantic segmentation technology in Python?

Jun 06, 2023 am 08:03 AM

How to use image semantic segmentation technology in Python?

Jun 06, 2023 am 08:03 AM

With the continuous development of artificial intelligence technology, image semantic segmentation technology has become a popular research direction in the field of image analysis. In image semantic segmentation, we segment different areas in an image and classify each area to achieve a comprehensive understanding of the image. Python is a well-known programming language. Its powerful data analysis and data visualization capabilities make it the first choice in the field of artificial intelligence technology research. This article will introduce how to use image semantic segmentation technology in Python. 1. Prerequisite knowledge is deepening

iOS 17: How to use one-click cropping in photos

Sep 20, 2023 pm 08:45 PM

iOS 17: How to use one-click cropping in photos

Sep 20, 2023 pm 08:45 PM

With the iOS 17 Photos app, Apple makes it easier to crop photos to your specifications. Read on to learn how. Previously in iOS 16, cropping an image in the Photos app involved several steps: Tap the editing interface, select the crop tool, and then adjust the crop using a pinch-to-zoom gesture or dragging the corners of the crop tool. In iOS 17, Apple has thankfully simplified this process so that when you zoom in on any selected photo in your Photos library, a new Crop button automatically appears in the upper right corner of the screen. Clicking on it will bring up the full cropping interface with the zoom level of your choice, so you can crop to the part of the image you like, rotate the image, invert the image, or apply screen ratio, or use markers

New perspective on image generation: discussing NeRF-based generalization methods

Apr 09, 2023 pm 05:31 PM

New perspective on image generation: discussing NeRF-based generalization methods

Apr 09, 2023 pm 05:31 PM

New perspective image generation (NVS) is an application field of computer vision. In the 1998 SuperBowl game, CMU's RI demonstrated NVS given multi-camera stereo vision (MVS). At that time, this technology was transferred to a sports TV station in the United States. , but it was not commercialized in the end; the British BBC Broadcasting Company invested in research and development for this, but it was not truly commercialized. In the field of image-based rendering (IBR), there is a branch of NVS applications, namely depth image-based rendering (DBIR). In addition, 3D TV, which was very popular in 2010, also needed to obtain binocular stereoscopic effects from monocular video, but due to the immaturity of the technology, it did not become popular in the end. At that time, methods based on machine learning had begun to be studied, such as

Use 2D images to create a 3D human body. You can wear any clothes and change your movements.

Apr 11, 2023 pm 02:31 PM

Use 2D images to create a 3D human body. You can wear any clothes and change your movements.

Apr 11, 2023 pm 02:31 PM

Thanks to the differentiable rendering provided by NeRF, recent 3D generative models have achieved stunning results on stationary objects. However, in a more complex and deformable category such as the human body, 3D generation still poses great challenges. This paper proposes an efficient combined NeRF representation of the human body, enabling high-resolution (512x256) 3D human body generation without the use of super-resolution models. EVA3D has significantly surpassed existing solutions on four large-scale human body data sets, and the code has been open source. Paper name: EVA3D: Compositional 3D Human Generation from 2D image Collections Paper address: http

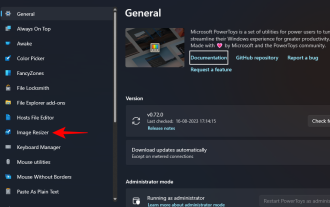

How to batch resize images using PowerToys on Windows

Aug 23, 2023 pm 07:49 PM

How to batch resize images using PowerToys on Windows

Aug 23, 2023 pm 07:49 PM

Those who have to work with image files on a daily basis often have to resize them to fit the needs of their projects and jobs. However, if you have too many images to process, resizing them individually can consume a lot of time and effort. In this case, a tool like PowerToys can come in handy to, among other things, batch resize image files using its image resizer utility. Here's how to set up your Image Resizer settings and start batch resizing images with PowerToys. How to Batch Resize Images with PowerToys PowerToys is an all-in-one program with a variety of utilities and features to help you speed up your daily tasks. One of its utilities is images

Erase blemishes and wrinkles with one click: in-depth interpretation of DAMO Academy's high-definition portrait skin beauty model ABPN

Apr 12, 2023 pm 12:25 PM

Erase blemishes and wrinkles with one click: in-depth interpretation of DAMO Academy's high-definition portrait skin beauty model ABPN

Apr 12, 2023 pm 12:25 PM

With the vigorous development of the digital culture industry, artificial intelligence technology has begun to be widely used in the field of image editing and beautification. Among them, portrait skin beautification is undoubtedly one of the most widely used and most demanded technologies. Traditional beauty algorithms use filter-based image editing technology to achieve automated skin resurfacing and blemish removal effects, and have been widely used in social networking, live broadcasts and other scenarios. However, in the professional photography industry with high thresholds, due to the high requirements for image resolution and quality standards, manual retouchers are still the main productive force in portrait beauty retouching, completing tasks including skin smoothing, blemish removal, whitening, etc. Series work. Usually, the average processing time for a professional retoucher to perform skin beautification operations on a high-definition portrait is 1-2 minutes. In fields such as advertising, film and television, which require higher precision, this