Understand Gunicorn and Python GIL in one article

What is the Python GIL, how it works, and how it affects gunicorn.

Which Gunicorn worker type should I choose for a production environment?

Python has a global interpreter lock (GIL) that allows only one thread at a time to run (i.e. interpret bytecode) within a process. In my opinion, understanding how Python handles concurrency is essential if you want to optimize your Python services.

Python and gunicorn give you different ways to handle concurrency, and since there is no magic bullet that covers all use cases, it's a good idea to understand the options, tradeoffs, and advantages of each option.

Gunicorn worker types

Gunicorn exposes these different options under the concept of "worker types". Each type is suitable for a specific set of use cases.

- sync - Fork the process into N processes that run in parallel to handle requests.

- gthread - Spawn N threads to serve requests concurrently.

- eventlet/gevent - Spawn green threads to serve concurrent requests.
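As a rough sketch, these worker types map to a handful of settings in a Gunicorn configuration file. `workers`, `worker_class`, `threads`, and `worker_connections` are Gunicorn settings; the numeric values below are placeholder assumptions, not recommendations from this article, and should be tuned with your own benchmarks.

```python
# gunicorn.conf.py - a minimal sketch; all numeric values are assumptions.
import multiprocessing

# Number of forked worker processes (applies to every worker type).
workers = multiprocessing.cpu_count()

# One of "sync", "gthread", "eventlet", or "gevent".
worker_class = "gthread"

# Threads per worker process; only meaningful for the gthread worker.
threads = 4

# Maximum simultaneous clients per worker; used by the eventlet/gevent workers.
worker_connections = 1000
```

Assuming your WSGI application is importable as `myapp:app` (a hypothetical name), you could start it with `gunicorn -c gunicorn.conf.py myapp:app`.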

Gunicorn sync worker

This is the simplest worker type: the only concurrency option is to fork N processes that serve requests in parallel.

They can work well, but they incur a lot of overhead (such as memory and CPU context switching), and if most of your request time is spent waiting for I/O, they scale poorly.

Gunicorn gthread worker

The gthread worker improves on this by allowing you to create N threads per process. This improves I/O performance because more instances of your code can run concurrently. Of the four worker types, this is the only one affected by the GIL.

Gunicorn eventlet and gevent workers

eventlet/gevent workers attempt to further improve the gthread model by running lightweight user threads (aka green threads, greenlets, etc.).

This allows you to have thousands of these greenlets at very little cost compared to system threads. Another difference is that they follow a cooperative model rather than a preemptive one: each greenlet runs uninterrupted until it blocks.

We will first analyze how the gthread worker behaves when processing requests and how it is affected by the GIL.

Unlike sync, where each request is served directly by one process, with gthread each process has N threads, so it scales better without the expense of spawning multiple processes. Since you are running multiple threads in the same process, the GIL prevents them from running in parallel.

The GIL is not a process or a special thread. It is just a boolean variable whose access is protected by a mutex, which ensures that only one thread runs at a time within each process. The accompanying picture shows how it works. In this example we have 2 system threads running concurrently, each handling 1 request. The process goes like this:

- Thread A holds GIL and starts serving the request.

- After a while, thread B tries to serve a request but cannot acquire the GIL.

- B sets a timeout, after which it will force the GIL to be released if that has not happened already.

- A does not release the GIL before the timeout is reached.

- B sets the gil_drop_request flag to force A to release the GIL immediately.

- A releases the GIL and waits until another thread grabs it, to avoid a situation where A keeps releasing and re-grabbing the GIL without other threads being able to grab it.

- B starts running.

- B releases the GIL while blocked on I/O.

- A starts running.

- B tries to run again but is suspended.

- A completes before the timeout is reached.

- B runs to completion.

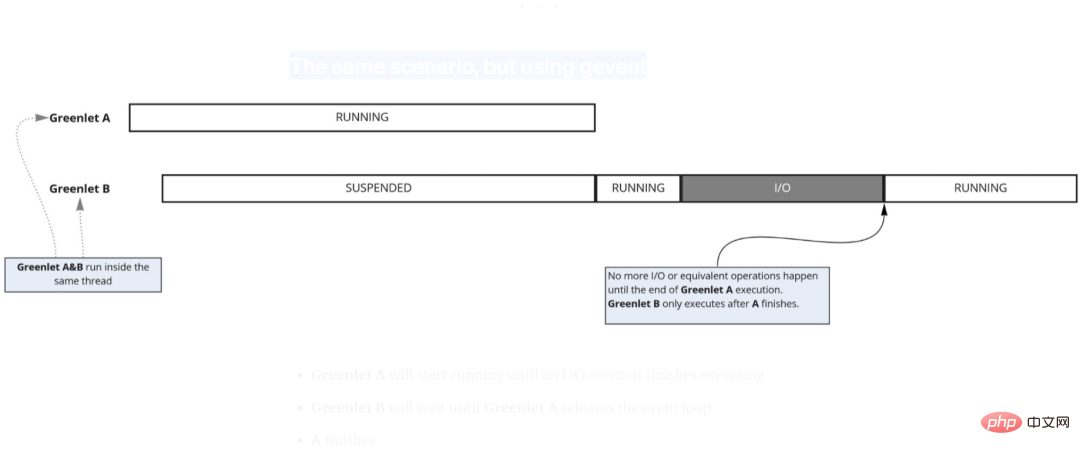
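The hand-off described in the steps above can be observed with a small experiment. This is only a sketch: `io_bound`, `cpu_bound`, and `run_in_threads` are hypothetical helpers, and `time.sleep` stands in for real blocking I/O. Because a blocking call releases the GIL, the I/O-bound threads overlap; on a standard GIL-enabled CPython build, the CPU-bound threads typically take about as long as running the same work one after another.

```python
import threading
import time


def io_bound(delay):
    # A blocking call releases the GIL, so other threads can run meanwhile.
    time.sleep(delay)


def cpu_bound(n):
    # Pure bytecode execution: the thread needs the GIL for the whole loop.
    total = 0
    for i in range(n):
        total += i * i
    return total


def run_in_threads(target, args, num_threads):
    """Run `target` in `num_threads` threads and return the elapsed seconds."""
    threads = [threading.Thread(target=target, args=args) for _ in range(num_threads)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


io_elapsed = run_in_threads(io_bound, (0.2,), 4)
cpu_elapsed = run_in_threads(cpu_bound, (200_000,), 4)
print(f"4 I/O-bound threads: {io_elapsed:.2f}s (4 sequential sleeps would take 0.80s)")
print(f"4 CPU-bound threads: {cpu_elapsed:.2f}s")
```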

Same scenario but using gevent

Another option to increase concurrency without using processes is to use greenlets. This worker spawns "user threads" instead of "system threads" to increase concurrency.

While this means that they are not affected by the GIL, it also means that you still cannot increase parallelism, because greenlets cannot be scheduled in parallel by the CPU.

- Greenlet A will start running until an I/O event occurs or execution is completed.

- Greenlet B will wait until Greenlet A releases the event loop.

- A finishes.

- B starts.

- B releases the event loop to wait for I/O.

- B completes.

For this case, it is obvious that a greenlet-type worker is not ideal. The second request ends up waiting until the first request completes, and then sits idle waiting for I/O again.
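The ordering in the steps above can be reproduced with a small cooperative-scheduling sketch. To avoid a third-party dependency, it uses the standard library's `asyncio` as a stand-in for gevent; the API is different, but the scheduling is cooperative in the same sense: a task keeps running until it yields. `request_a` and `request_b` are hypothetical handlers.

```python
import asyncio

events = []


async def request_a():
    events.append("A start")
    sum(i * i for i in range(100_000))  # CPU work: never yields to the loop
    events.append("A done")


async def request_b():
    events.append("B start")
    await asyncio.sleep(0.01)  # simulated I/O: yields to the event loop
    events.append("B done")


async def main():
    # Both requests are scheduled together, but A runs to completion
    # before B gets a chance to start.
    await asyncio.gather(request_a(), request_b())


asyncio.run(main())
print(events)  # ['A start', 'A done', 'B start', 'B done']
```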

In these scenarios, the greenlet cooperative model really shines because you don't waste time on context switches and you avoid the overhead of running multiple system threads.

We will see this in the benchmarks at the end of this article. Now, this raises the following questions:

- Will changing the thread context switch timeout affect service latency and throughput?

- How do you choose between gevent/eventlet and gthread when you mix I/O and CPU work?

- How do you choose the number of threads for the gthread worker?

- Should I just use sync workers and increase the number of forked processes to avoid the GIL?

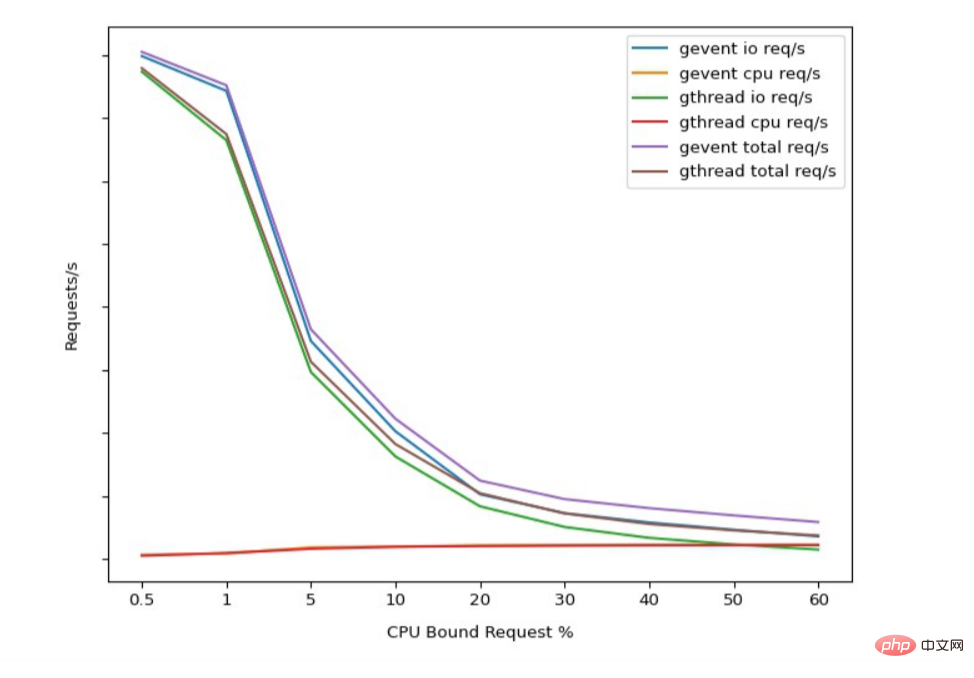

To answer these questions, you need monitoring in place to collect the necessary metrics, and then you need to run tailored benchmarks against those same metrics. There is no use in running synthetic benchmarks that have zero correlation with your actual usage patterns. The graph below shows latency and throughput metrics for different scenarios to give you an idea of how it all works together.

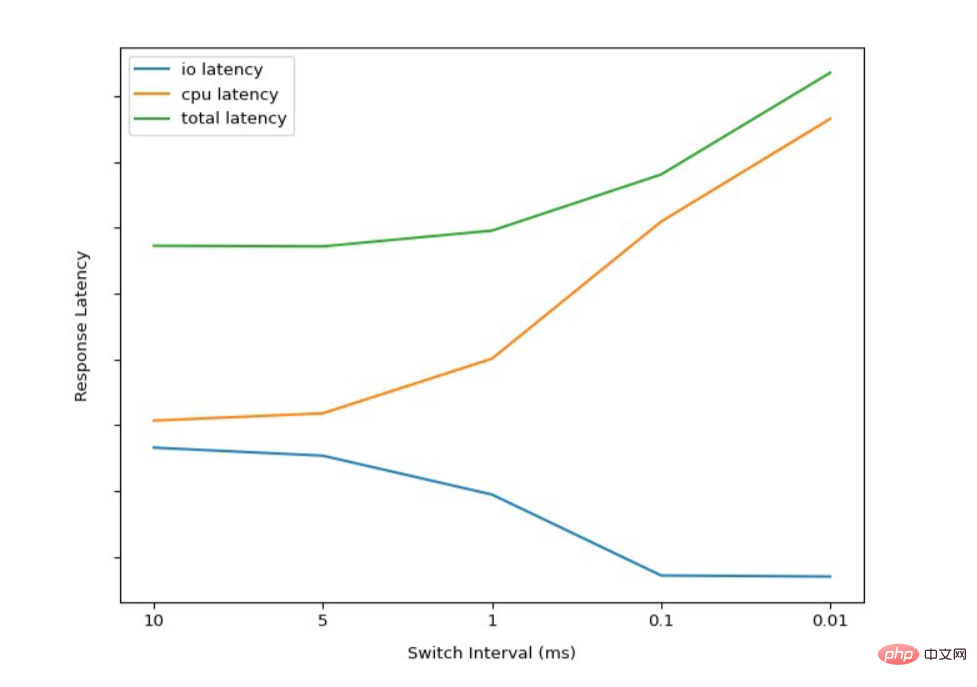

Benchmarking the GIL switching interval

Here we can see how changing the GIL thread switching interval/timeout affects request latency. As expected, IO latency gets better as the switching interval decreases. This happens because CPU-bound threads are forced to release the GIL more frequently and allow other threads to complete their work.

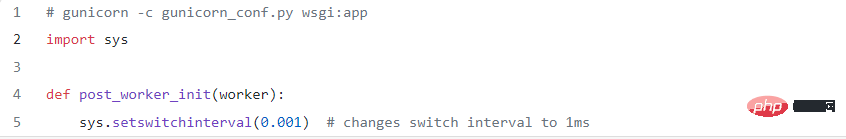

But this is not a panacea. Reducing the switch interval makes CPU-bound threads take longer to complete. We can also see overall latency increase as the timeout decreases, due to the added overhead of constant thread switching. If you want to try it yourself, you can change the switching interval using the following code:

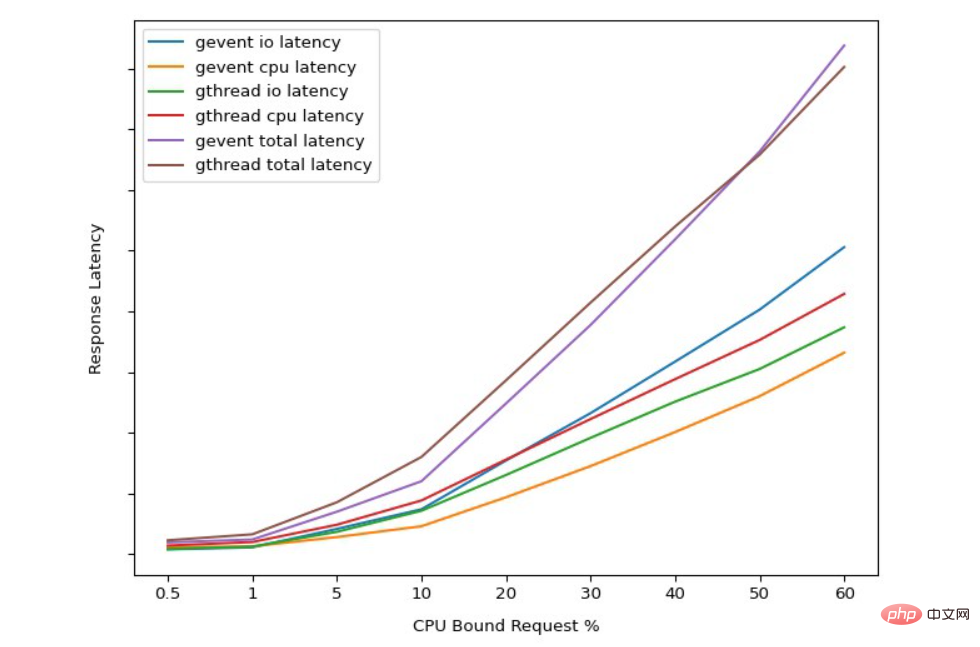
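The original snippet survives only as an image, so here is a minimal sketch of what such code could look like, using the standard library's `sys.setswitchinterval`. The value of 1 ms is an arbitrary assumption for illustration; CPython's default is typically 5 ms.

```python
import sys

# Current GIL switch interval in seconds (typically 0.005 by default).
default_interval = sys.getswitchinterval()

# Ask CPython to request a GIL hand-off more often (assumed value: 1 ms).
sys.setswitchinterval(0.001)
new_interval = sys.getswitchinterval()

print(f"switch interval: {default_interval} -> {new_interval}")
```

The setting applies per process, so it has to run inside each worker. One option, depending on your setup, is to call it from Gunicorn's `post_fork` server hook or at import time of your application module.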

Benchmarking gthread vs. gevent latency using CPU bound requests

Overall, we can see that the benchmarks reflect our intuition from our previous analysis of how GIL-bound threads and greenlets work.

gthread has better average latency for IO-bound requests because the switching interval forces long-running threads to release the GIL.

With gevent, CPU-bound requests have better latency than with gthread because they are not interrupted to service other requests.

Benchmarking gthread vs. gevent throughput using CPU bound requests

The results here also reflect our earlier intuition that gevent has better throughput than gthread. These benchmarks are highly dependent on the type of work being done and may not necessarily translate directly to your use case.

The main goal of these benchmarks is to give you some guidance on what to test and measure in order to maximize each CPU core that will serve requests.

All gunicorn workers allow you to specify the number of processes that will run; what changes is how each process handles concurrent connections. Therefore, make sure to use the same number of workers to make the test fair. Let's now try to answer the previous questions using the data collected from our benchmarks.

Will changing the thread context switch timeout affect service latency and throughput?

Yes, it does. However, for the vast majority of workloads, it's not a game changer.

How to choose between gevent/eventlet and gthread when you are mixing I/O and CPU work?

As we can see, gthread tends to allow better concurrency when you have more CPU-intensive work.

How to choose the number of threads for gthread worker?

As long as your benchmarks can simulate production-like behavior, you will clearly see performance peak and then start to degrade as you add too many threads.
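To illustrate the kind of sweep this implies, here is a small in-process sketch. It is not a Gunicorn benchmark: `fake_request` is a hypothetical handler mixing a little CPU work with simulated I/O, and a `ThreadPoolExecutor` stands in for the gthread worker's thread pool. For real numbers you would drive your actual service with a load-testing tool and production-like traffic.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(_):
    """Hypothetical handler: a little CPU work plus simulated I/O."""
    sum(i * i for i in range(20_000))  # CPU-bound part (holds the GIL)
    time.sleep(0.01)                   # I/O-bound part (releases the GIL)


def measure_throughput(num_threads, num_requests=40):
    """Return requests per second when serving with `num_threads` threads."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        list(pool.map(fake_request, range(num_requests)))
    return num_requests / (time.perf_counter() - start)


results = {n: measure_throughput(n) for n in (1, 2, 4, 8, 16)}
for n, rps in results.items():
    print(f"{n:>2} threads: {rps:8.1f} requests/s")
```

Where the curve flattens or dips depends entirely on the ratio of CPU work to I/O in the handler, which is why the sweep needs to use a workload that resembles production.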

Should I just use sync workers and increase the number of forked processes to avoid the GIL?

Unless your I/O is almost zero, scaling with just processes is not the best option.

Conclusion

Coroutines/Greenlets can improve CPU efficiency because they avoid interrupts and context switches between threads. Coroutines trade latency for throughput.

Coroutines can cause more unpredictable latencies if you mix IO-bound and CPU-bound endpoints, because CPU-bound endpoints are not interrupted to service other incoming requests.

If you take the time to configure gunicorn correctly, the GIL is not a problem.
