Backpropagation is the core technique of deep learning, driving the success of AI in many fields such as vision, speech, natural language processing, games, and biological prediction. It works by computing, in a backward pass, the gradient of the prediction error with respect to the network's connection weights, and then reducing the prediction error by fine-tuning the weights of each layer. Although backpropagation is very efficient and is key to the current success of artificial intelligence, a considerable number of researchers do not believe that it is consistent with the way the brain learns.
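
To make the mechanism concrete, here is a minimal NumPy sketch of one backpropagation step for a hypothetical two-layer network with a tanh hidden layer and a squared-error loss. The network sizes, random data, and learning rate are illustrative assumptions, not taken from the paper. The backward pass applies the chain rule from the output layer toward the input, and a finite-difference check confirms one gradient entry.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal(3)              # one input sample (illustrative)
t = np.array([1.0])                     # its target value
W1 = 0.5 * rng.standard_normal((4, 3))  # input -> hidden weights
W2 = 0.5 * rng.standard_normal((1, 4))  # hidden -> output weights

def loss(W1, W2):
    """Squared prediction error of the two-layer network on (x, t)."""
    h = np.tanh(W1 @ x)
    y = W2 @ h
    return 0.5 * float(np.sum((y - t) ** 2))

# Forward pass
h = np.tanh(W1 @ x)
y = W2 @ h

# Backward pass: propagate the error from the output layer back to the first layer
dy = y - t                              # dL/dy
dW2 = np.outer(dy, h)                   # dL/dW2
dh = W2.T @ dy                          # dL/dh
dW1 = np.outer(dh * (1.0 - h ** 2), x)  # dL/dW1, using tanh'(a) = 1 - tanh(a)^2

# Finite-difference check of one gradient entry
eps = 1e-6
W1_shift = W1.copy()
W1_shift[0, 0] += eps
numeric = (loss(W1_shift, W2) - loss(W1, W2)) / eps
print("analytic:", dW1[0, 0], "numeric:", numeric)

# One small gradient-descent step on every layer reduces the prediction error
lr = 0.05
print("loss before:", loss(W1, W2), "after:", loss(W1 - lr * dW1, W2 - lr * dW2))
```

Note that every weight update depends on an error signal sent backward from the output, which is exactly the non-local ingredient the rest of this article questions.
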

With the development of deep learning, some disadvantages of backpropagation have gradually become apparent, such as over-reliance on labeled data and computing power, a series of adversarial security issues, and models that can only be used for specific tasks. These have also raised some concerns about the development of large models.

For example, Hinton, one of the authors of backpropagation and a pioneer of deep learning, has said many times: "If you want to make substantial progress, you must abandon backpropagation and start all over again [2]"; "My current belief is that backpropagation, the way deep learning currently works, is completely different from what the brain does, and the brain obtains gradients in a different way [3]"; and "I believe the brain uses many small local objective functions; it is not an end-to-end system chain whose objective function is optimized through training [3]".

LeCun, also a Turing Award winner, said: "Current deep learning models may be an integral part of future intelligent systems, but I think they lack essential parts. I think they are necessary but not sufficient [4]." His longtime rival, New York University professor Gary Marcus, said he had made the same point earlier: "If we are to achieve artificial general intelligence, deep learning must be supplemented by other techniques [4]."

How do we start all over again? The development of artificial intelligence is undoubtedly inseparable from inspiration drawn from the brain. Although we are still far from fully understanding how the brain works (the core question being how the connection weights between neurons are adjusted based on external information), we already have some preliminary understanding of the brain that can inspire the design of new models.

First, learning in the brain is inextricably linked to Hebb's rule: the connection weight between neurons that are activated at the same time is strengthened. It can be called the most important basic rule of neuroscience and has been verified by a large number of biological experiments.
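
A minimal NumPy sketch of the plain Hebbian rule for a single linear neuron, $\Delta w_i = \eta\, x_i y$ with $y = \sum_i w_i x_i$, shows both sides of the story: inputs that are active together end up with large weights, but nothing in the rule stops the weights from growing without bound. The input pattern, noise level, initial weights, and learning rate are made-up illustrative values.

```python
import numpy as np

def hebbian_step(w, x, lr):
    """Plain Hebb's rule for one linear neuron: dw_i = lr * x_i * y, where y = w . x."""
    y = w @ x
    return w + lr * x * y

rng = np.random.default_rng(0)
w = np.array([0.1, 0.05, 0.1])                    # initial weights (illustrative)
pattern = np.array([1.0, 1.0, 0.0]) / np.sqrt(2)  # inputs 0 and 1 fire together; input 2 is silent
norms = []
for _ in range(200):
    x = pattern + 0.05 * rng.standard_normal(3)   # noisy presentation of the pattern
    w = hebbian_step(w, x, lr=0.05)
    norms.append(float(np.linalg.norm(w)))

print("weights:", w)
print("weight norm after 1 step:", norms[0], "after 200 steps:", norms[-1])
```

The weights onto the co-active inputs dominate, but their norm keeps growing geometrically, which is why a stabilizing term is needed before Hebbian learning can be used to train deep networks.
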

Second, learning in the brain is mainly unsupervised: it can obtain rich local representations, and the correlations between them, from a small number of samples, while feedback signals also play an important role in the learning process. In addition, the brain is a learning system that supports general tasks, so the features it learns should be relatively independent of specific tasks. A reasonable goal is for the brain to learn well the statistical distribution of its various input signals and the connections between different contents.

Recently, Zhou Hongchao, a researcher at Shandong University, posted a paper on arXiv, "Activation Learning by Local Competitions", proposing a brain-inspired AI model called activation learning. Its core idea is to build a multi-layer neural network whose output activation intensity reflects the relative probability of the input.

This model completely abandons backpropagation. Starting instead from an improved version of the basic Hebbian rule (a method closer to how the brain works), it establishes a new set of neural network training and inference methods. These achieve significantly better performance than backpropagation in small-sample learning experiments and can also be used as a generative model for images. The work suggests that the potential of biologically inspired learning methods may be far underestimated.

Paper link: https://arxiv.org/pdf/2209.13400.pdf

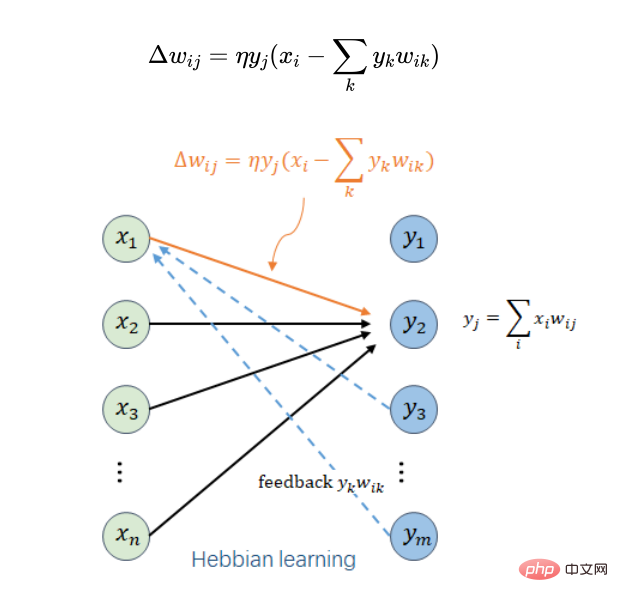

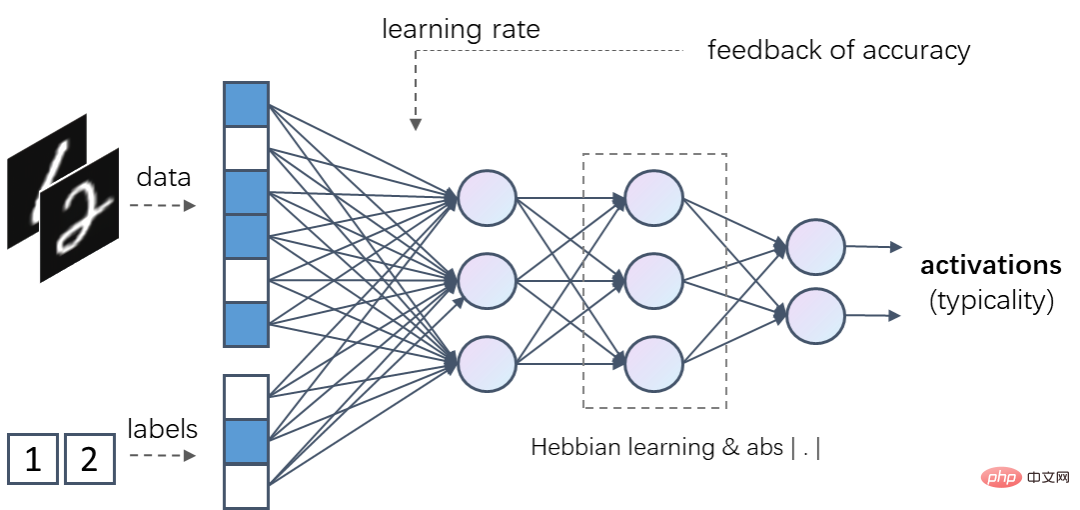

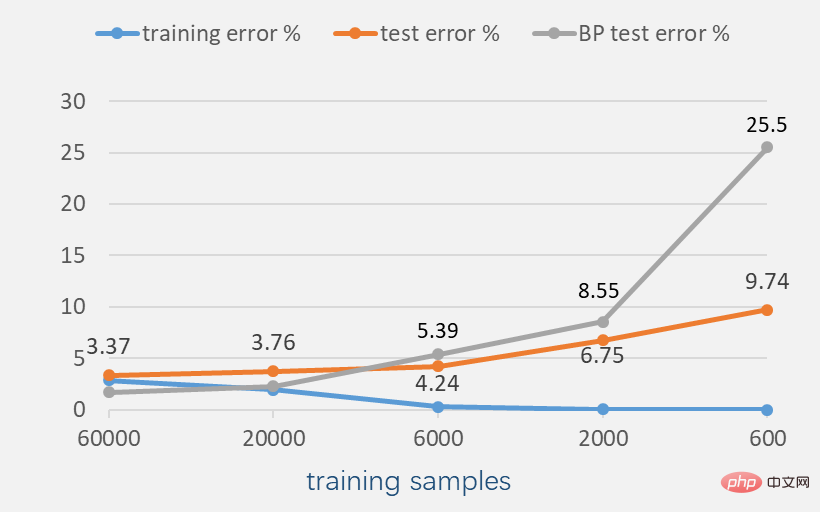

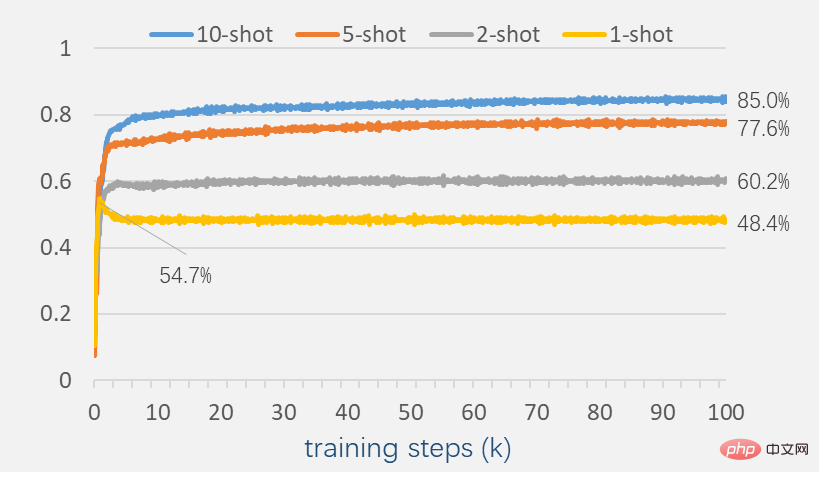

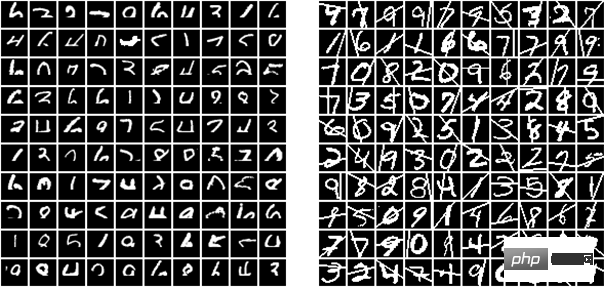

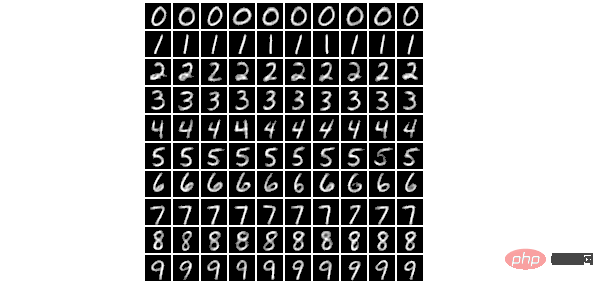

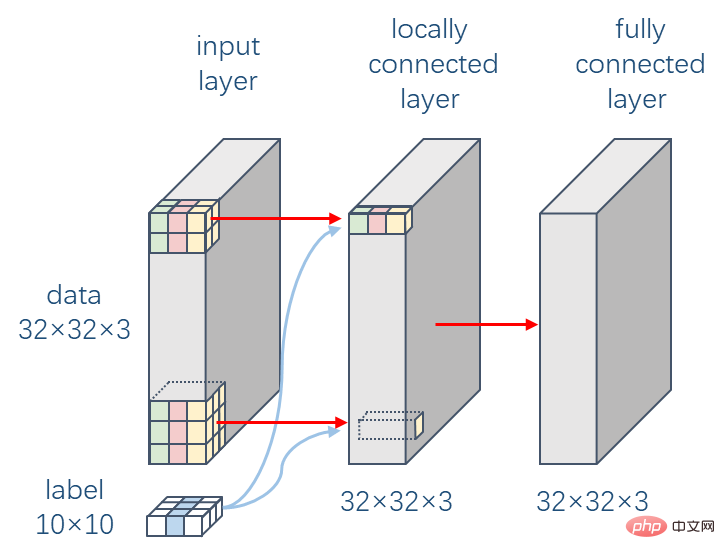

2 Local learning rules

One possible reason the potential of Hebbian learning has been underestimated is that some of its mechanisms are not fully understood, especially competition between neurons, which plays a very important role in feature learning and weight adjustment in neural networks. Intuitively, if each neuron tries its best to compete for activation, while some form of inhibition makes the features represented by different neurons as different as possible, then the network tends to pass the most useful information on to the next layer. (Is this similar to socioeconomics? When each individual in a large social group maximizes its own income and the group is large enough, then under certain rules the total benefit of the whole group tends to be maximized, and individuals exhibit different behaviors.) In fact, the brain contains a large number of inhibitory neurons, and competition and inhibition between neurons play an important role in its learning process.

Rumelhart, the first author of backpropagation (the highest award in cognitive science is named the Rumelhart Prize), was a promoter of this idea. Around the same time that he proposed backpropagation (1985), he also proposed a model called competitive learning [5]. Its core is to divide the neurons in each layer into several clusters; only the strongest neuron in each cluster is activated (winner-take-all), and it is trained with the Hebbian rule. In practice, however, backpropagation showed better training efficiency, attracted the attention of most AI researchers, and led to the emergence and success of deep learning.

Some researchers still believe in the potential of biologically inspired learning methods. In 2019, Krotov and Hopfield (yes, the proposer of the Hopfield network) showed that combining the winner-take-all rule with Hebbian learning may achieve performance comparable to backpropagation [6]. But the winner-take-all rule, which allows only one neuron to be activated, also limits the learning and expressive capabilities of neural networks to some extent.

The researcher in this work introduced the competition mechanism directly into the Hebbian learning rule and obtained a concise local learning rule:

$$\Delta w_{ij} = \eta\, y_j \Big(x_i - \sum_{k} w_{ik}\, y_k\Big)$$

Here neuron i is an input of neuron j, which lies in the layer above it; $w_{ij}$ is the connection weight between neuron i and neuron j; $\Delta w_{ij}$ is the adjustment of that weight for a given training sample; $x_i$ is the output of neuron i and an input of neuron j; $y_j$ is the total weighted input of neuron j (or the output of neuron j); $\eta$ is a small learning rate; and $k$ ranges over all neurons in the same layer as neuron j. Keeping only the term $\eta\, x_i y_j$ gives the original Hebbian rule. The key addition is the output feedback term $-\eta\, y_j \sum_k w_{ik} y_k$ from the same layer. It plays two roles: first, it ensures that the weights do not grow without bound, so learning converges; second, it introduces competition between neuron j and the other neurons in its layer, which increases the diversity of the learned features.

Mathematical analysis of this local learning rule (assuming the learning rate is small enough and the number of learning steps large enough) yields some interesting conclusions:

(1) From the outputs $y_j$ of each layer, the input can be reconstructed as $\hat{x}_i = \sum_j w_{ij}\, y_j$ (the same sum that appears in the feedback term), with as small a reconstruction error as possible. This layer-by-layer reconstruction ability can make the model more robust against adversarial attacks, preventing an object image with added adversarial noise from being recognized as a different object.

(2) Feature extraction in each layer under the local learning rule is similar to principal component analysis (PCA): the reconstruction loss is the same, but unlike PCA, the components obtained by the local rule do not need to be orthogonal. This is reasonable: PCA extracts the main information of each layer, but if the neurons corresponding to the principal components fail, the performance of the whole network suffers, whereas the local learning rule addresses this robustness problem.

(3) The sum of squares of the connection weights in each layer tends not to exceed the number of neurons in that layer, which ensures that learning converges.

(4) The output intensity of each layer (the sum of squares of its outputs) tends to be no higher than its input intensity (the sum of squares of its inputs), and more typical inputs generally produce higher output intensity, so the output intensity can approximate the probability of the input. This conclusion is critical for the activation learning model proposed next.
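
The local rule and conclusions (2) to (4) can be checked numerically. Below is a minimal NumPy sketch, assuming the rule takes the form $\Delta w_{ij} = \eta\, y_j (x_i - \sum_k w_{ik} y_k)$ with linear outputs $y_j = \sum_i w_{ij} x_i$ (in this linear form it coincides with Oja's subspace rule). The synthetic Gaussian data, layer sizes, learning rate, and the function name `train_local_rule` are illustrative assumptions; this is not the paper's code.

```python
import numpy as np

def train_local_rule(X, n_out, lr, seed=0):
    """One pass of the assumed competitive local rule over the rows of X (sketch)."""
    rng = np.random.default_rng(seed)
    W = 0.1 * rng.standard_normal((X.shape[1], n_out))  # W[i, j] = w_ij
    for x in X:
        y = W.T @ x                          # y_j = sum_i w_ij x_i
        feedback = W @ y                     # sum_k w_ik y_k: same-layer output feedback
        W += lr * np.outer(x - feedback, y)  # Hebbian term x_i y_j minus competition term
    return W

# Synthetic zero-mean data in 5 dimensions with two dominant directions (assumption)
rng = np.random.default_rng(1)
n_in, n_out = 5, 2
Q, _ = np.linalg.qr(rng.standard_normal((n_in, n_in)))  # random rotation
scales = np.sqrt([5.0, 3.0, 0.1, 0.1, 0.1])
X_train = (rng.standard_normal((20000, n_in)) * scales) @ Q.T
X_test = (rng.standard_normal((2000, n_in)) * scales) @ Q.T

W = train_local_rule(X_train, n_out, lr=0.002)

# Reconstruction x_hat_i = sum_j w_ij y_j compared with PCA's reconstruction
recon = X_test @ W @ W.T
local_err = float(np.mean(np.sum((X_test - recon) ** 2, axis=1)))
_, eigvecs = np.linalg.eigh(X_train.T @ X_train / len(X_train))
P = eigvecs[:, -n_out:]                      # top principal directions
pca_err = float(np.mean(np.sum((X_test - X_test @ P @ P.T) ** 2, axis=1)))

# Sum of squared weights compared with the number of neurons in the layer
weight_sq = float(np.sum(W ** 2))

# Output strength relative to input strength, per test sample
ratio = np.sum((X_test @ W) ** 2, axis=1) / np.sum(X_test ** 2, axis=1)

print(f"reconstruction error: local rule {local_err:.3f}, PCA {pca_err:.3f}")
print(f"sum of squared weights {weight_sq:.3f} (layer has {n_out} neurons)")
print(f"max output/input strength ratio {ratio.max():.3f}")
```

In this linear toy setting the learned weights span roughly the same subspace as the top principal components without having to coincide with the eigenvectors themselves, the squared weights sum to about the number of output neurons, and no sample's output strength exceeds its input strength by more than a small tolerance.
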

3 Activation learning

Based on the above local learning rule, a multi-layer neural network can be trained bottom-up, layer by layer, to extract features automatically without supervision. The trained network can serve as a pre-trained model for various supervised learning tasks, such as recognition and translation, to improve their accuracy; these supervised tasks are still trained with backpropagation, fine-tuning the unsupervised pre-trained model. More interesting, however, is that the local learning rule can also be used to build a new AI model that does not use backpropagation at all, called activation learning. Its core is to use local unsupervised training so that the output strength of the whole network (the sum of squares of its outputs) estimates the relative probability of the input sample: the more often an input sample has been seen, the stronger the output usually is.

In activation learning, input samples are normalized and then fed into a multi-layer neural network. Each layer contains a linear transformation that is trained with the local learning rule. The nonlinear activation function of each layer must leave the strength (sum of squares) unchanged between its input and output. For example, the absolute value function $f(y) = |y|$ can be used as the activation function, so that the output strength is neither attenuated nor enhanced by the activation function, and the final output strength of the whole network can reflect the relative probability of the input sample. If the nonlinear activation function is incorporated into the local learning rule, that is,

$$\Delta w_{ij} = \eta\, z_j \Big(x_i - \sum_{k} w_{ik}\, z_k\Big),$$

where $z_j = f(y_j)$ represents the output of neuron j, then the activation function no longer needs to keep the strength unchanged, and other nonlinear functions such as ReLU can be used. Inference in activation learning means deducing the missing part of the input from the known part so that the final output strength of the network is maximized.

For example, an activation learning network can take data and labels (such as one-hot encodings) as input at the same time. For such a trained network, given a piece of data together with its correct label, the output activation intensity is usually higher than for the same data with an incorrect label. Such an activation learning model can therefore be used both as a discriminative model and as a generative model.
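
The ideas above can be illustrated with a deliberately tiny, one-layer NumPy sketch. It assumes the linear local rule $\Delta w_{ij} = \eta\, y_j (x_i - \sum_k w_{ik} y_k)$, normalizes every input, uses the absolute value as the strength-preserving activation, and treats the sum of squared outputs as the score. The toy data (two 2-D classes), the one-hot label appended to the data, the sizes and learning rates, and all function names (`local_rule_step`, `strength`, `make_input`, `classify`, `generate`) are hypothetical; the paper itself uses multi-layer networks on real images. Classification tries every label and keeps the one with the highest output strength; generation fixes a label and runs gradient ascent on the data part to maximize the strength.

```python
import numpy as np

def local_rule_step(W, x, lr):
    """Assumed local rule: dw_ij = lr * y_j * (x_i - sum_k w_ik y_k), with y = W^T x."""
    y = W.T @ x
    W += lr * np.outer(x - W @ y, y)
    return W

def strength(W, v):
    """Output strength of the normalized input: sum of squares after the |.| activation."""
    u = v / np.linalg.norm(v)
    return float(np.sum(np.abs(W.T @ u) ** 2))  # |.| leaves the sum of squares unchanged

def make_input(data, label, n_classes):
    """Concatenate data with a one-hot label (hypothetical encoding)."""
    onehot = np.zeros(n_classes)
    onehot[label] = 1.0
    return np.concatenate([data, onehot])

def classify(W, data, n_classes):
    """Discriminative use: pick the label whose (data, label) input activates the network most."""
    scores = [strength(W, make_input(data, c, n_classes)) for c in range(n_classes)]
    return int(np.argmax(scores))

def generate(W, label, n_classes, dim, steps=200, lr=0.5):
    """Generative use: gradient ascent on the data part to maximize output strength."""
    onehot = np.zeros(n_classes)
    onehot[label] = 1.0
    d = np.zeros(dim)                       # start from a blank input
    for _ in range(steps):
        v = np.concatenate([d, onehot])
        sq = v @ v
        s = np.sum((W.T @ v) ** 2)
        # gradient of s / sq (the strength of v / |v|) with respect to v
        grad = 2 * (W @ (W.T @ v)) / sq - 2 * s * v / sq ** 2
        d += lr * grad[:dim]                # only the missing (data) part is updated
    return d

# Toy training set: class 0 near (1, 0), class 1 near (0, 1)
rng = np.random.default_rng(0)
prototypes = np.array([[1.0, 0.0], [0.0, 1.0]])
n_classes, dim = 2, 2
W = 0.1 * rng.standard_normal((dim + n_classes, 2))
for _ in range(3000):
    c = int(rng.integers(n_classes))
    data = prototypes[c] + 0.05 * rng.standard_normal(dim)
    v = make_input(data, c, n_classes)
    W = local_rule_step(W, v / np.linalg.norm(v), lr=0.05)  # inputs are normalized first

print("predicted class for (0.9, 0.1):", classify(W, np.array([0.9, 0.1]), n_classes))
print("predicted class for (0.2, 0.8):", classify(W, np.array([0.2, 0.8]), n_classes))
print("generated data for label 1:", generate(W, 1, n_classes, dim))
```

Because training never uses the labels as a supervision target, the same weights serve both directions: fixing the data and searching over labels gives a classifier, while fixing the label and searching over data gives a (very crude) generator.
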
When used as a discriminative model, it infers the missing category from the given data; when used as a generative model, it infers the missing data from a given category combined with a certain amount of randomness. In addition, experiments found that introducing recognition feedback, for example giving a higher global learning rate to misrecognized samples, can improve the learning performance of the discriminative model.

4 Small sample classification and image generation

Experiments on the MNIST dataset (black-and-white handwritten digit images) show that when there are enough training samples, backpropagation is more accurate than activation learning. For example, with 60,000 training samples and neural networks of similar complexity, backpropagation achieves an error rate of about 1.62%, while activation learning only reaches about 3.37% (2.28% if feedback from the recognition results is introduced into activation learning). As the number of training samples decreases, however, activation learning performs better. With 6,000 training samples, the error rate of activation learning is already lower than that of backpropagation; with 600 training samples, the recognition error rate of backpropagation is as high as 25.5%, whereas that of activation learning is only 9.74%, which is also significantly lower than a method combined with an unsupervised pre-trained model (about 20.3%).

To explore the performance of activation learning with very few samples, the number of training samples was reduced further, to just a few per category. Even then, activation learning still shows some recognition ability: with 2 samples per category, recognition accuracy reaches 60.2%, and with 10 samples per category it reaches 85.0%.

A noteworthy phenomenon is that, as long as there are at least 2 samples per category, test accuracy does not decrease at any point during training. This differs from many backpropagation-based models and suggests, in one respect, that activation learning may generalize better.

Given a trained activation learning network, the researcher also added interference to the images to be recognized, as shown in the figure below, by covering a certain proportion of the pixels or adding some random lines. The model never encountered such disturbed images during training, yet activation learning still shows some recognition ability. For example, when the lower third of an image is covered, activation learning achieves a recognition error rate of about 7.5%.

The same trained activation learning network can also be used for image generation. Given a category, a locally optimal image can be obtained by gradient descent or iteration so that the output activation strength of the whole network is maximized. During generation, the outputs of some neurons can be controlled by random noise to increase the randomness of the generated images. The figure below shows images randomly generated by the activation learning network.

In the visual perception layers of the brain, neurons have limited receptive fields: each neuron receives input only from other neurons within a certain spatial range. This inspired the convolutional neural network (CNN), which is widely used in visual tasks. However, the working mechanism of a convolutional layer is still very different from the human visual system.
An essential difference is that a convolutional layer shares parameters, that is, the same weights are used at every two-dimensional position, and it is hard to imagine such parameter sharing in the human visual system. The following experiment uses the CIFAR-10 dataset (color images of objects in 10 categories) to study the impact of local connections on activation learning. The network has two layers. The first is a locally connected layer with the same connection structure as a convolutional layer with kernel size 9, except that each position has its own weights; the second is a fully connected layer. The number of nodes in each layer matches the dimension of the input image. Experiments show that local connections make learning more stable and improve performance to some extent. With this two-layer network and feedback from the recognition results, activation learning achieves an error rate of 41.59% on CIFAR-10.

The previous benchmark for biologically inspired models was set by Krotov and Hopfield, who reported an error rate of 49.25%. They used a two-layer neural network whose first layer contained 2,000 nodes and was trained without supervision by a biologically inspired method, and whose second (output) layer was trained with supervision by backpropagation. For comparison, the same network reaches an error rate of 44.74% when trained entirely by backpropagation, whereas activation learning, which does not use backpropagation at all, achieves better results. If data augmentation including random cropping is used and the number of nodes in the first layer is increased, the recognition error rate of activation learning can be reduced further, to 37.52%.

5 Towards general tasks

Why do most deep learning models apply only to specific tasks? One reason is that we artificially divide samples into data and labels, using the data as the model's input and the labels as its output supervision.
This makes the model inclined to retain only the features that are useful for predicting labels, while ignoring features that would be useful for other tasks. Activation learning takes all visible information as input and can learn the probability distribution of the training samples and the correlations between their parts. This information can be used for all related learning tasks, so activation learning can be regarded as a model for general tasks. In fact, when we see an object and someone tells us what it is, it is hard to say that the brain necessarily treats the sound signal as the output label and the visual signal as the input. At the very least, this learning should be bidirectional: when we see the object, we think of what it is, and when told what it is, we picture what the object looks like.

Activation learning can also be used for multi-modal learning. For example, when training samples contain images and text, it may establish associations between images and text; when training samples contain text and sound, it may establish associations between text and sound. Activation learning has the potential to become an associative memory model that links various kinds of related content and queries or activates related content through propagation. This associative memory ability is generally believed to play a very important role in human intelligence. However, the problems of training with partial input data and of catastrophic forgetting still need to be solved.

In addition to being a new AI model, research on activation learning has other value.
For example, it could more easily support on-chip training of neural network systems built on physical implementations such as optical neural networks and memristor neural networks, avoiding the loss of system-level computing accuracy caused by the limited precision of basic physical components or by programming noise. It may also be combined with biological experiments to help us better understand how the brain works, for example whether local training rules have some biological explanation. Researcher Zhou Hongchao said: "I believe that most complex systems are governed by simple mathematical rules, and the brain is such a wonderful system; ultimately, our goal is to design smarter machines."

