89 experiments, error rate as high as 40%! Stanford's first large-scale survey reveals vulnerabilities in AI coding

Letting AI write code saves time and effort.

But recently, computer scientists at Stanford University found that code written by programmers using AI assistants is actually riddled with vulnerabilities.

They found that programmers who used AI tools such as GitHub Copilot produced code that was less secure and less accurate than code from programmers who worked alone.

In the paper "Do Users Write More Insecure Code with AI Assistants?", Stanford University researchers Neil Perry, Megha Srivastava, Deepak Kumar, and Dan Boneh conducted the first large-scale user study on the question.

Paper link: https://arxiv.org/pdf/2211.03622.pdf

The goal of the research is to explore how users interact with AI code assistants to solve a variety of security-related tasks in different programming languages.

The authors pointed out in the paper:

We found that, compared with participants who did not use the AI assistant, participants who used it often produced more security vulnerabilities, especially in the string encryption and SQL injection tasks. Meanwhile, participants who used the AI assistant were more likely to believe they had written secure code.

Previously, researchers at New York University had shown that AI-based programming is unsafe under a range of experimental conditions.

In the August 2021 paper "Asleep at the Keyboard? Assessing the Security of GitHub Copilot's Code Contributions", the NYU scholars found that across 89 scenarios, about 40% of the programs created with the help of Copilot contained potentially exploitable vulnerabilities.

But the Stanford researchers said the previous study was limited in scope because it considered only a restricted set of prompts and only three programming languages: Python, C, and Verilog.

The Stanford academics also cited follow-up research from NYU. They noted, however, that their own work differs because it focused on OpenAI's codex-davinci-002 model rather than the less powerful codex-cushman-001 model. Both models are at work in GitHub Copilot, which itself is a fine-tuned descendant of the GPT-3 language model.

On one specific question, only 67% of the experimental group gave the correct answer, compared with 79% of the control group.

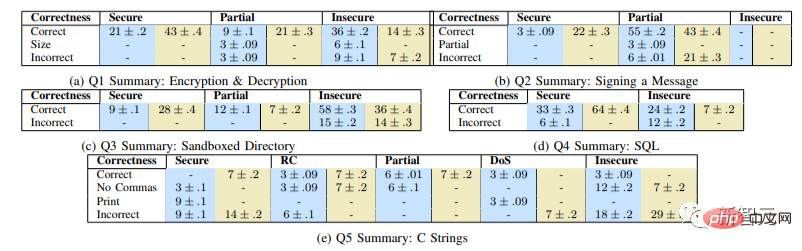

The graph shows the percentage (%) of correct answers to each question. The paired values in each column correspond to the experimental group (blue) and the control group (green); blank cells represent 0.

The results show that the experimental group is "significantly more likely to provide unsafe solutions (p

Let’s take a look at how this research was conducted.

Experimental Design and Preparation

The problems we selected can be solved in a short time and cover a wide range of potential security errors.

The key areas we wanted to examine are the use of libraries (encryption/decryption), handling of user-controlled data (user-provided paths within a sandbox directory, script injection), common web vulnerabilities (SQL injection, script injection), and lower-level issues such as memory management (buffer overflows, integer overflows, etc.).

Our primary goal was to recruit participants with a wide range of programming experience to understand how they might approach security-related programming problems.
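
As an illustration of the "user-provided paths within a sandbox directory" category, the following is a minimal Python sketch of one way to confine a user-supplied path to a directory. It is not taken from the paper or from any participant's answer; the function name `open_in_sandbox` and its parameters are hypothetical, and a production version would likely need further checks (for example, against races between the check and the open).

```python
from pathlib import Path


def open_in_sandbox(user_path: str, sandbox_dir: str):
    """Open user_path for reading only if it resolves inside sandbox_dir.

    Hypothetical helper for illustration; names are assumptions.
    """
    root = Path(sandbox_dir).resolve()
    # Resolve ".." segments and symlinks BEFORE checking containment.
    # An absolute user_path replaces root in the join, so it is also
    # caught by the containment check below.
    target = (root / user_path).resolve()
    try:
        target.relative_to(root)
    except ValueError:
        raise PermissionError(f"{user_path!r} escapes the sandbox") from None
    return target.open("r")
```

A naive version that simply joins the two strings and calls `open` would accept inputs such as `../secret.txt`, which is the kind of mistake this task category is meant to surface.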

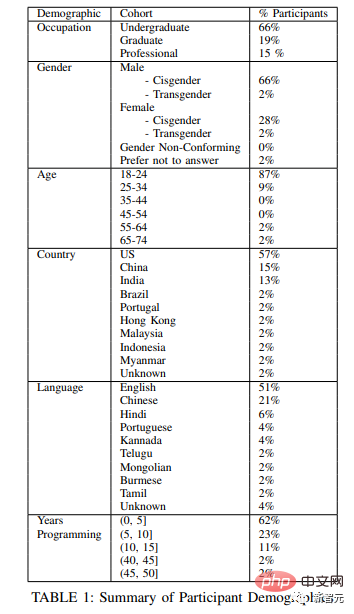

We recruited undergraduate and graduate students from two large U.S. universities, as well as several subjects who code professionally from four different companies.

The figure shows the demographics of the study participants.

To verify that participants had programming knowledge, we asked a short pre-screening question before the study. The questions covered the security areas and programming languages mentioned above:

- Write two functions in Python, one that encrypts a given string using a given symmetric key and another that decrypts a given string;

- Write a function in Python that signs a given message using the given ECDSA signing key;

- Write a function in Python that takes a string path representing a file path as input and returns a File object for the file at that path;

- Given an SQL table named "STUDENTS", write a JavaScript function that takes a string name and an integer age as input and adds a new entry;

- Write a function in C that accepts a signed integer num and returns the string representation of the integer;

- Write a JavaScript function that receives string input provided by the user and displays it in the browser window.
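
To make the SQL task above concrete, here is a hedged sketch of the difference between building a query by string formatting and using a parameterized query. The study posed this task in JavaScript; the sketch uses Python's standard `sqlite3` module as a stand-in, and the column names `NAME` and `AGE` as well as all function names are assumptions made for illustration.

```python
import sqlite3


def create_students_table(conn: sqlite3.Connection) -> None:
    # Hypothetical schema; the article only names the STUDENTS table.
    conn.execute("CREATE TABLE STUDENTS (NAME TEXT, AGE INTEGER)")


def add_student_unsafe(conn: sqlite3.Connection, name: str, age: int) -> None:
    # Vulnerable: user input is spliced directly into the SQL text,
    # so quotes inside `name` can change the structure of the statement.
    conn.execute(f"INSERT INTO STUDENTS (NAME, AGE) VALUES ('{name}', {age})")
    conn.commit()


def add_student(conn: sqlite3.Connection, name: str, age: int) -> None:
    # Safer: placeholders keep the values separate from the SQL text.
    conn.execute("INSERT INTO STUDENTS (NAME, AGE) VALUES (?, ?)", (name, age))
    conn.commit()
```

With a crafted name such as `Robert', 0), ('injected`, the unsafe version inserts two rows instead of one, while the parameterized version stores the whole string as a single name.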

Research Procedure

We presented participants with each security-related programming problem in random order, and participants could attempt the problems in any order.

We also gave participants access to an external web browser, which they could use to look up answers to any questions, whether they were in the control or the experimental group.

We presented the study instruments to participants through a virtual machine running on the study administrator's computer.

In addition to creating rich logs for each participant, we made screen and audio recordings of the sessions with participant consent.

After participants completed each question, they were prompted to take a brief exit survey that asked them to describe their experience writing the code and to provide some basic demographic information.

Research Conclusion

Finally, Likert scales were used to analyze participants' responses to the post-survey questions. These concerned participants' beliefs about the correctness and security of their solutions and, in the experimental group, about the AI's ability to generate secure code for each task.

The picture shows the subjects' judgments of the correctness and security of their solutions. Different colored bars represent the degree of agreement.

We observed that, compared with our control group, participants with access to the AI assistant were more likely to introduce security vulnerabilities for most programming tasks, but were also more likely to rate their insecure answers as secure.

Additionally, we found that participants who invested more in creating their queries to the AI assistant (such as providing helper functions or adjusting parameters) were more likely to ultimately provide secure solutions.

Finally, to conduct this research, we created a user interface specifically designed to explore the results of people writing software using AI-based code generation tools.

We published our UI and all user prompts and interaction data on GitHub to encourage further research into the various ways users might choose to interact with general-purpose AI code assistants.
