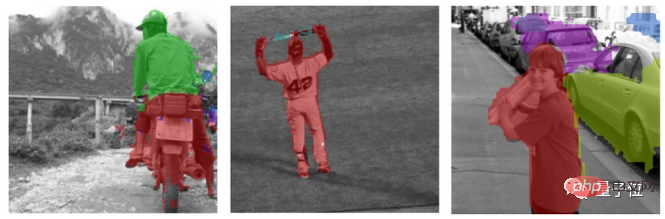

Unknown objects can also be easily identified and segmented, and the effect can be transferred


A new learning framework can segment objects that it has never seen before.

It was developed by DeepMind and is called Object discovery and representation networks (Odin for short).

Previous self-supervised learning (SSL) methods describe an entire scene well, but struggle to distinguish individual objects.

Odin manages to do so, and without any supervision.

It is not easy to distinguish a single object in an image. How is it done?

Method Principle

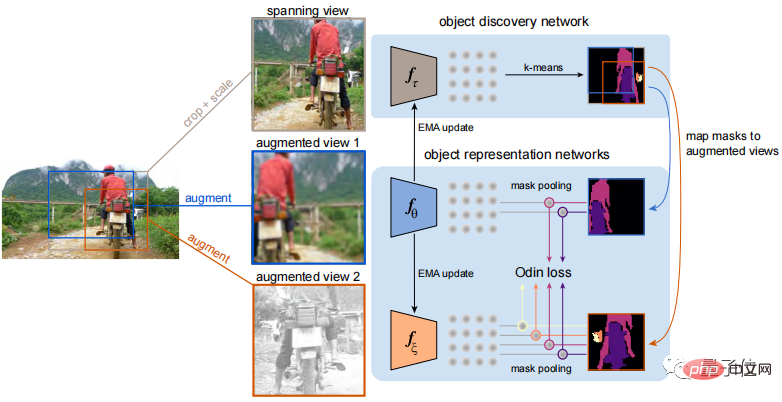

Odin distinguishes the objects in an image well mainly thanks to the feedback loop built into its learning framework.

Odin trains two networks that work together: an object discovery network and an object representation network.

The object discovery network takes a crop of the image as input. The crop should cover most of the image area, and no other augmentation is applied to it.

The feature map produced from this input is then clustered, and the image is segmented into objects according to their features.
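To make the clustering step concrete, here is a minimal sketch that groups the locations of a feature map with plain k-means and returns one segment label per location. The function name `kmeans_segment`, the farthest-point initialisation, and the toy feature map are illustrative assumptions, not DeepMind's implementation.

```python
import numpy as np

def kmeans_segment(features, k, iters=10):
    """Cluster an (H, W, C) feature map into k segments with plain k-means.

    Hypothetical helper for illustration. Returns an (H, W) array of labels.
    """
    h, w, c = features.shape
    x = features.reshape(-1, c).astype(np.float64)
    # Farthest-point initialisation: deterministic, avoids duplicate centroids.
    centroids = [x[0]]
    for _ in range(1, k):
        d = np.min([((x - m) ** 2).sum(axis=1) for m in centroids], axis=0)
        centroids.append(x[d.argmax()])
    centroids = np.stack(centroids)
    for _ in range(iters):
        # Squared Euclidean distance from every location to every centroid.
        dists = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = x[labels == j]
            if len(members) > 0:  # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(axis=0)
    return labels.reshape(h, w)

# Toy feature map: the left and right halves carry clearly different features.
feats = np.zeros((4, 6, 2))
feats[:, :3] = [1.0, 0.0]
feats[:, 3:] = [0.0, 1.0]
masks = kmeans_segment(feats, k=2)
```

In this toy case the two halves end up in different segments. With real network features the segments are only as good as the features themselves.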

The input views of the object representation network are built from the segmented image produced by the object discovery network.

Each view is then randomly preprocessed, including flipping, blurring, and point-wise color transformations.
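The sketch below shows one way such a view could be produced. It assumes that geometric steps (crop, flip) are applied to the image and its segmentation mask together so that they stay aligned, while the point-wise color change touches only the image. Blurring is omitted for brevity, and `make_view`, the crop ratio, and the jitter ranges are hypothetical choices.

```python
import numpy as np

def make_view(image, mask, rng, crop=0.75):
    """Return one augmented (image, mask) view (illustrative helper).

    image: (H, W, 3) float array in [0, 1]; mask: (H, W) integer segment labels.
    """
    h, w = mask.shape
    ch, cw = int(h * crop), int(w * crop)
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    # Geometric steps: applied to image and mask together.
    img = image[top:top + ch, left:left + cw]
    msk = mask[top:top + ch, left:left + cw]
    if rng.random() < 0.5:
        img, msk = img[:, ::-1], msk[:, ::-1]
    # Point-wise color change: each pixel is rescaled and shifted on its own.
    gain, bias = rng.uniform(0.8, 1.2), rng.uniform(-0.1, 0.1)
    img = np.clip(img * gain + bias, 0.0, 1.0)
    return img, msk

rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))
mask = np.repeat(np.arange(2), 32).reshape(8, 8)  # top half = 0, bottom half = 1
view1 = make_view(image, mask, rng)
view2 = make_view(image, mask, rng)
```

Calling `make_view` twice on the same image gives two views whose masks differ only through the geometric steps.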

This yields two sets of masks. Apart from differences in cropping, both are consistent with the underlying image content.

The two sets of masks are then used with a contrastive loss to learn features that better represent the objects in the image.

Specifically, through contrastive detection, a network is trained to recognize the features of different objects among many "negative" features that come from other, unrelated objects.

Training maximizes the similarity of the same object across the two sets of masks and minimizes the similarity between different objects, which in turn leads to better segmentation.
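A minimal sketch of this objective follows. It pools features inside each mask and applies a standard InfoNCE-style contrastive loss. The function names, the temperature value, and the assumption that every object appears in both views are illustrative; Odin's actual loss may differ in detail.

```python
import numpy as np

def mask_pool(features, mask, num_objects):
    """Average the (H, W, C) features inside each mask: one vector per object.

    Assumes every object id in range(num_objects) occurs in the mask.
    """
    flat = features.reshape(-1, features.shape[-1])
    ids = mask.reshape(-1)
    pooled = np.stack([flat[ids == j].mean(axis=0) for j in range(num_objects)])
    # L2-normalise so that dot products are cosine similarities.
    return pooled / np.linalg.norm(pooled, axis=1, keepdims=True)

def contrastive_loss(z1, z2, temperature=0.1):
    """Object j in view 1 should match object j in view 2;
    every other object in view 2 acts as a negative."""
    logits = z1 @ z2.T / temperature               # (K, K) similarities
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))

# Three toy object vectors; the second case pairs each object with a wrong one.
z = np.eye(3)
loss_matched = contrastive_loss(z, z)
loss_shuffled = contrastive_loss(z, z[[1, 2, 0]])
```

The loss is small when matching objects have similar features across views and large when they do not, which is the behavior described above.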

At the same time, the object discovery network is updated regularly based on the parameters of the object representation network.
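One common way to implement this kind of update is an exponential moving average (EMA) of the parameters. The sketch below assumes that rule. The decay value and the dictionary-of-arrays parameter layout are hypothetical, and the text above does not confirm that Odin uses exactly this form.

```python
import numpy as np

def ema_update(discovery, representation, decay=0.99):
    """Move each discovery parameter a small step towards the
    corresponding representation parameter (assumed EMA rule)."""
    return {name: decay * discovery[name] + (1.0 - decay) * representation[name]
            for name in discovery}

discovery = {"w": np.zeros(3)}
representation = {"w": np.ones(3)}
for _ in range(100):
    discovery = ema_update(discovery, representation)
```

With a decay close to 1, the discovery network changes slowly, so the segmentations it proposes stay stable while the representation network keeps learning.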

The ultimate goal is to keep these object-level features roughly invariant across different views, in other words, to separate the objects in the image.

So how well does the Odin learning framework perform?

Distinguishes unknown objects very well

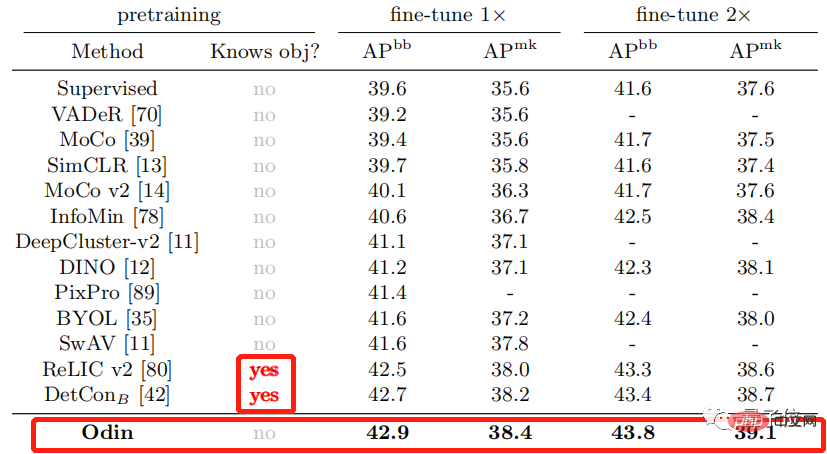

Odin's transfer-learning performance on scene segmentation without prior knowledge is also strong.

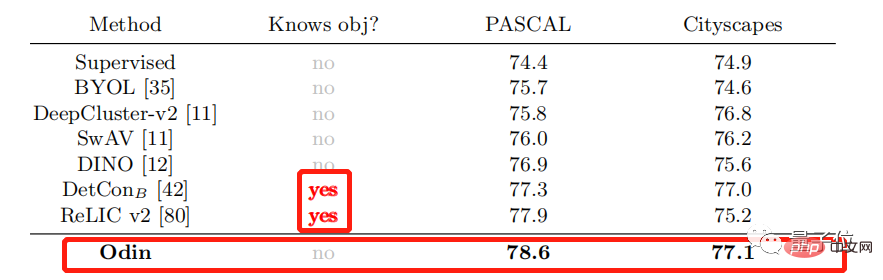

Odin is first pre-trained on the ImageNet dataset, and then evaluated on the COCO dataset as well as on PASCAL and Cityscapes semantic segmentation.

Methods that already know the target objects, that is, methods that use prior knowledge, perform significantly better at scene segmentation than methods that have no prior knowledge.

Yet even without prior knowledge, Odin outperforms DetCon and ReLICv2, which do use prior knowledge.

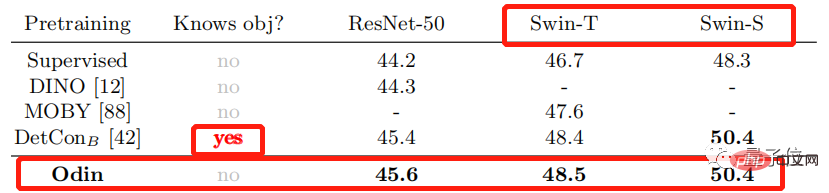

In addition, the Odin method can be applied not only to ResNet models, but also to more complex models such as Swin Transformer.

In terms of the numbers, the advantages of the Odin framework are clear. How do they show up in the images themselves?

Compare the segmentations generated with Odin against those obtained from a randomly initialized network (Column 3) and an ImageNet-supervised network (Column 4).

Columns 3 and 4 fail to delineate object boundaries clearly, or lack the consistency and locality of real-world objects, while the segmentations generated by Odin are clearly better.

Reference link:

[1] https://twitter.com/DeepMind/status/1554467389290561541

[2] https://arxiv.org/abs/2203.08777
