Exploration and practice of Meituan search rough ranking optimization

Authors: Xiao Jiang, Suo Gui, Li Xiang, et al.

Coarse ranking (rough ranking) is an important module of industrial search, recommendation, and advertising systems. In exploring and practicing coarse ranking optimization, the Meituan search ranking team improved coarse ranking in two ways, grounded in actual business scenarios: linkage with fine ranking, and joint optimization of effectiveness and performance.

1. Foreword

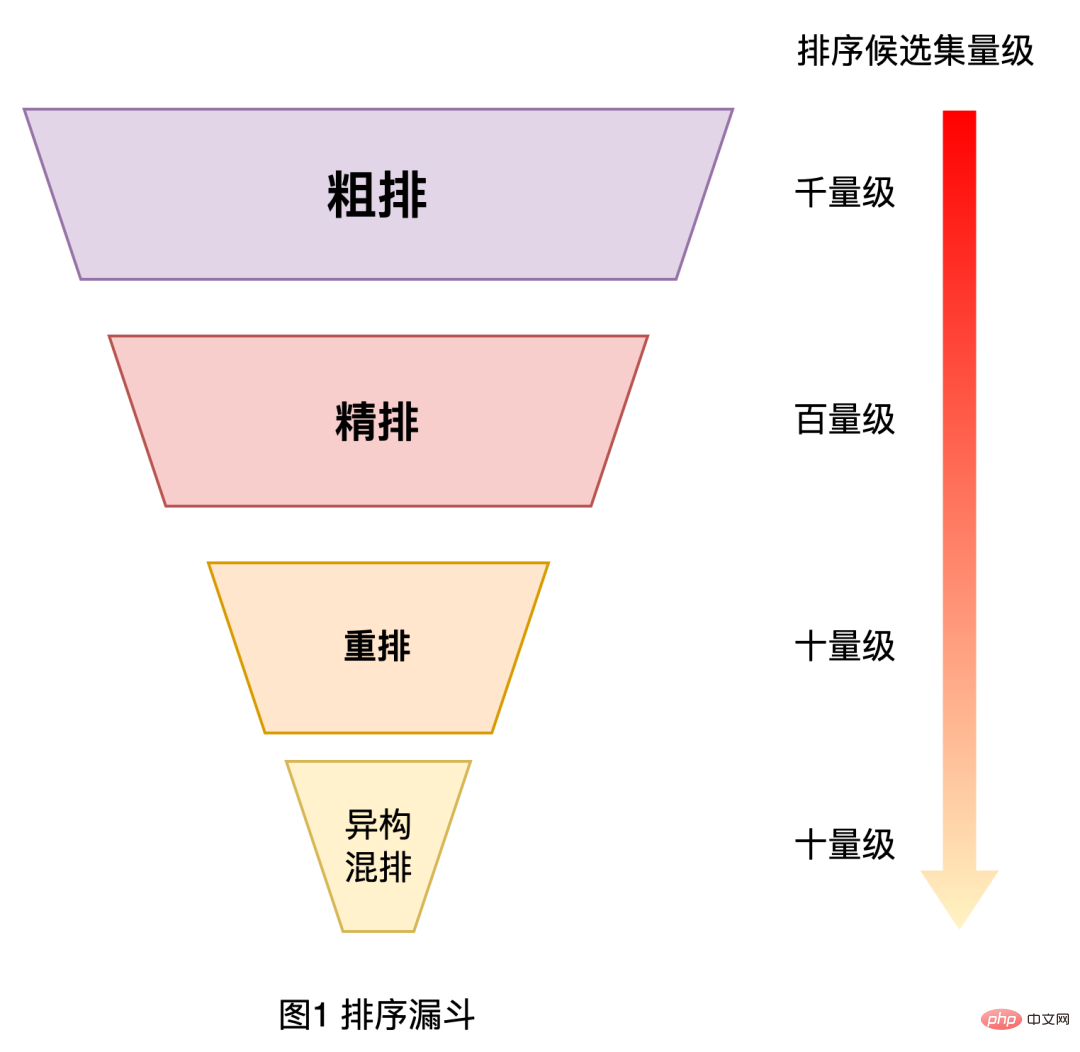

In large-scale industrial applications such as search, recommendation, and advertising, ranking systems commonly adopt a cascade architecture [1,2] to balance performance and effectiveness, as shown in Figure 1 below. Taking the Meituan search ranking system as an example, the whole ranking pipeline is divided into coarse ranking, fine ranking, re-ranking, and mixed ranking layers. Coarse ranking sits between recall and fine ranking: it must select an item set on the order of hundreds from a candidate set on the order of thousands and pass it to the fine ranking layer.

Figure 1 Ranking funnel
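The funnel can be illustrated with a minimal Python sketch. The function names, the toy scoring functions, and the cut-off sizes are all hypothetical and only mirror the thousand-to-hundred reduction described above; this is not Meituan's implementation.

```python
from typing import Callable, List


def cascade_rank(candidates: List[str],
                 coarse_score: Callable[[str], float],
                 fine_score: Callable[[str], float],
                 coarse_keep: int,
                 final_keep: int) -> List[str]:
    """Two-stage funnel: a cheap scorer prunes, an expensive scorer orders."""
    # Coarse ranking: score every candidate with the cheap model, keep the top slice.
    shortlist = sorted(candidates, key=coarse_score, reverse=True)[:coarse_keep]
    # Fine ranking: only the shortlist pays for the expensive model.
    return sorted(shortlist, key=fine_score, reverse=True)[:final_keep]


def cheap(item: str) -> float:
    # Hypothetical stand-in for a lightweight coarse ranking model.
    return int(item.split("_")[1]) % 97


def costly(item: str) -> float:
    # Hypothetical stand-in for a heavier fine ranking model.
    return -abs(int(item.split("_")[1]) - 500)


# Toy usage: 1000 recalled candidates, 100 kept by coarse ranking, 10 returned.
items = [f"poi_{i}" for i in range(1000)]
top = cascade_rank(items, cheap, costly, coarse_keep=100, final_keep=10)
```

The expensive scorer runs on 100 items instead of 1000, which is the performance motivation for the cascade; the price is that anything the cheap scorer drops can never be recovered downstream.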

Looking at the coarse ranking module from the perspective of the full Meituan search ranking pipeline, coarse ranking layer optimization currently faces several challenges:

- Sample selection bias: In a cascade ranking system, coarse ranking is far from the final displayed results. The sample space used for offline training of the coarse ranking model therefore differs greatly from the sample space it must predict on, which causes serious sample selection bias.

- Coarse and fine ranking linkage: Coarse ranking sits between recall and fine ranking, and it needs to obtain and use more information from the downstream stages to improve its effectiveness.

- Performance constraints: The candidate set for online coarse ranking prediction is much larger than that of the fine ranking model, yet the search system as a whole has strict performance requirements, so coarse ranking must pay close attention to prediction performance.

This article shares Meituan Search's exploration and practice in coarse ranking layer optimization around the challenges above. We address the sample selection bias problem together with the fine ranking linkage problem. The article has three parts. The first part briefly introduces the evolution of the coarse ranking layer in Meituan search ranking. The second part covers the exploration and practice of coarse ranking optimization: the first piece of work uses knowledge distillation and contrastive learning to link fine ranking with coarse ranking and improve coarse ranking effectiveness, and the second is a joint trade-off optimization of coarse ranking performance and effectiveness. All of this work has been fully launched online with significant gains. The last part is a summary and outlook. We hope this content is helpful and inspiring.

2. Rough ranking evolution route

The evolution of Meituan Search’s rough ranking technology is divided into the following stages:

- 2016: Linear weighting of signals such as relevance, quality, and conversion rate. This method is simple, but its ability to express features is weak, the weights are set manually, and there is considerable room to improve the ranking results.

- 2017: Pointwise ranking with a simple machine-learned LR model.

- 2018: A two-tower model based on the vector inner product. Query, user, and context features are fed into one side and merchant features into the other. After deep network computation, a user & query vector and a merchant vector are produced, and their inner product gives the estimated score used for ranking. Merchant vectors can be computed and stored in advance, so online prediction is fast, but the ability to cross information between the two sides is limited.

- 2019: To address the two-tower model's inability to model cross features well, the output of the two-tower model is used as a feature and fused with other cross features through a GBDT tree model.

- 2020 to present: With improved computing power, we began exploring an end-to-end NN coarse ranking model and have continued to iterate on it.
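The two-tower stage (2018) in the list above can be sketched as follows. This is a minimal illustration, assuming a single tanh layer per tower and made-up weights and features; the real towers are deep networks whose structure the article does not describe.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def tower(features: Vector, weights: Matrix) -> Vector:
    """One dense layer with tanh; a stand-in for a deep tower."""
    return [math.tanh(sum(w * x for w, x in zip(row, features))) for row in weights]


def inner_product(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


# Hypothetical toy weights: 3-dim inputs mapped to 2-dim vectors.
QUERY_TOWER: Matrix = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
MERCHANT_TOWER: Matrix = [[0.4, 0.1, -0.3], [-0.6, 0.2, 0.7]]

# Offline: merchant vectors are computed once and cached.
merchant_features: Dict[str, Vector] = {"m1": [1.0, 0.0, 0.5], "m2": [0.2, 0.9, 0.1]}
merchant_cache = {mid: tower(f, MERCHANT_TOWER) for mid, f in merchant_features.items()}


def score_request(query_features: Vector) -> Dict[str, float]:
    """Online: run the query-side tower once, then one dot product per merchant."""
    q_vec = tower(query_features, QUERY_TOWER)
    return {mid: inner_product(q_vec, m_vec) for mid, m_vec in merchant_cache.items()}


scores = score_request([0.7, 0.1, 0.3])
ranking = sorted(scores, key=scores.get, reverse=True)
```

The two sides only meet in the final inner product, which is why prediction is fast and also why cross features between query and merchant cannot be modeled directly.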

At present, the coarse ranking models commonly used in industry are two-tower models, as at Tencent [3] and iQiyi [4], and interactive NN models, as at Alibaba [1,2]. The following mainly introduces the optimization work done by Meituan Search while upgrading coarse ranking to an NN model, in two parts: coarse ranking effectiveness optimization, and joint optimization of effectiveness and performance.

3. Coarse ranking optimization practice

As a large amount of effectiveness optimization work [5,6] landed in the Meituan Search fine ranking NN model, we also began exploring optimization of the coarse ranking NN model. Because coarse ranking has strict performance constraints, the fine ranking optimization work cannot be reused directly. The following introduces the fine ranking linkage work, which transfers the ranking ability of fine ranking to coarse ranking, and the effectiveness and performance trade-off optimization based on automatic neural network architecture search.

3.1 Effectiveness optimization through fine ranking linkage

Constrained by scoring performance, the coarse ranking model has a simpler structure and far fewer features than the fine ranking model, so its ranking quality is worse. To compensate for the loss caused by the simple structure and limited features, we tried knowledge distillation [7] to link fine ranking with coarse ranking and optimize the latter.

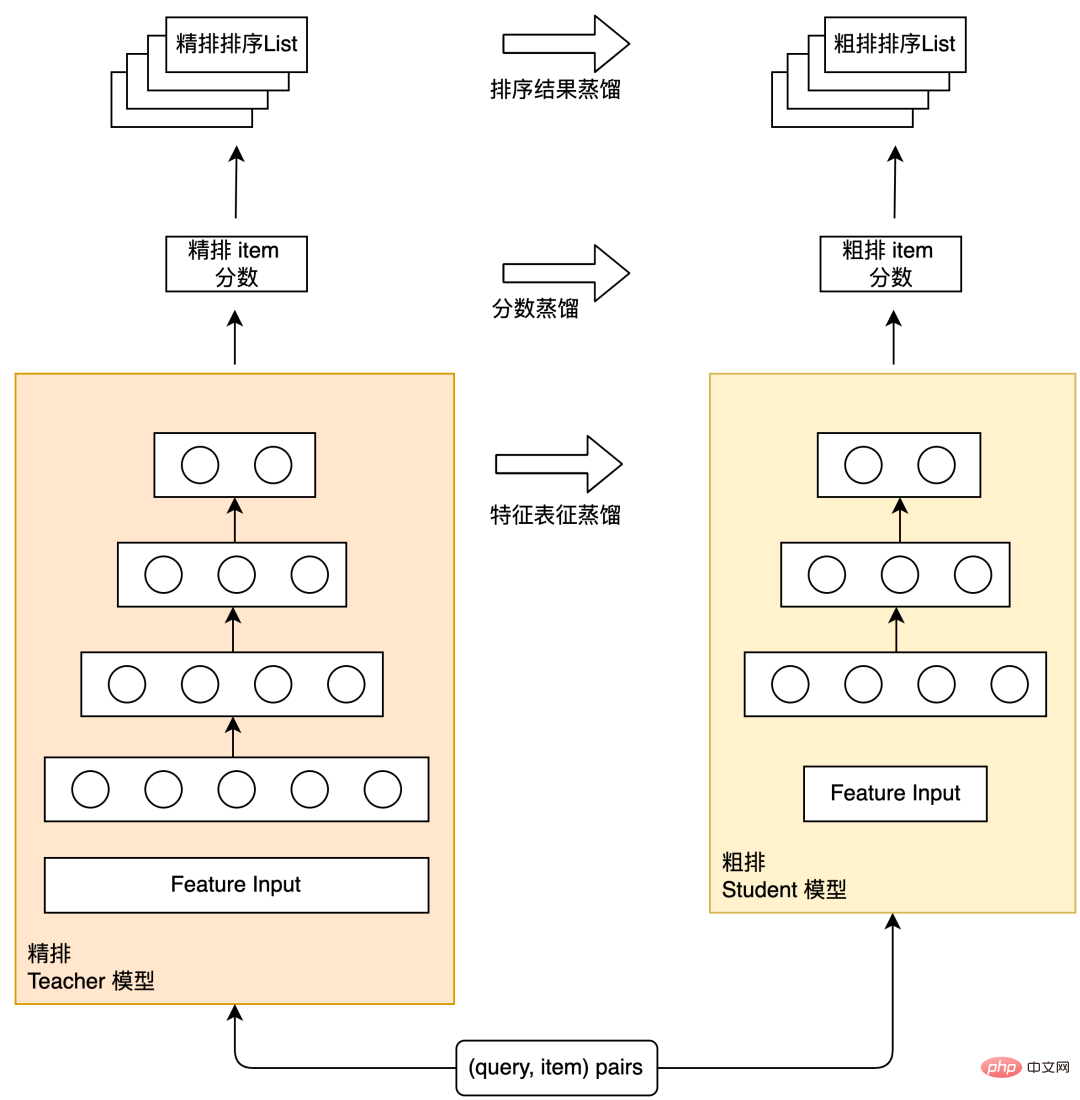

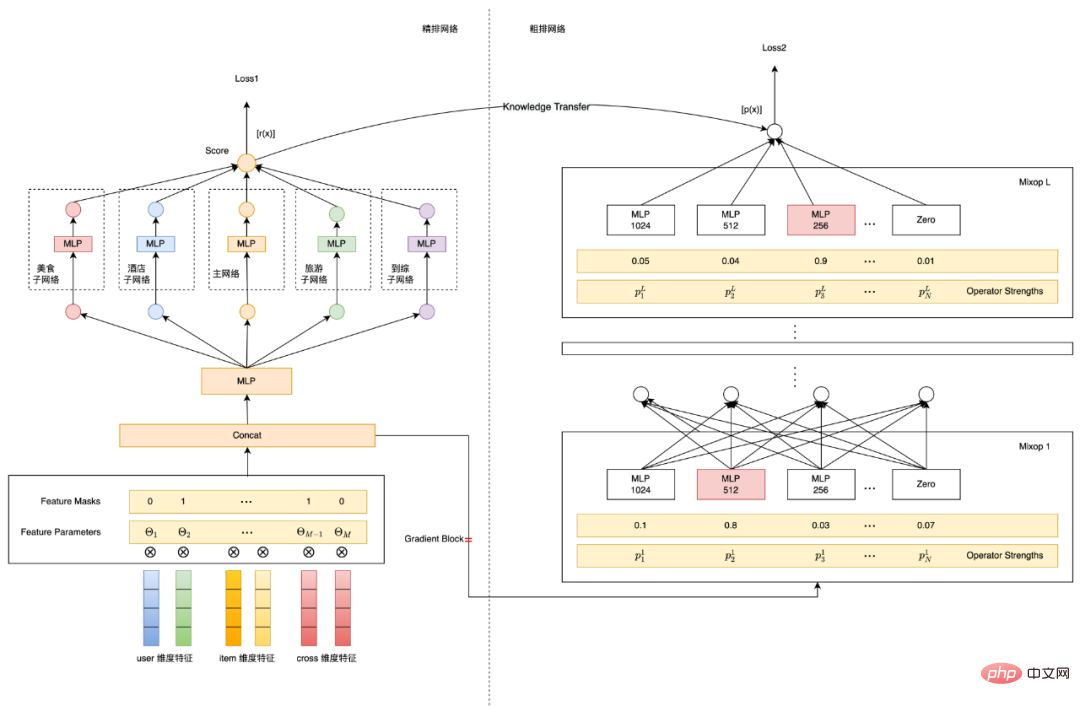

Knowledge distillation is a common industry method for simplifying the model structure while minimizing the loss in effectiveness. It follows a Teacher-Student paradigm: a model with a complex structure and strong learning ability serves as the Teacher, and a model with a simpler structure serves as the Student. The Teacher assists the training of the Student, transferring its "knowledge" to the Student and thereby improving the Student's effectiveness. A schematic of distilling fine ranking into coarse ranking is shown in Figure 2 below. The distillation schemes fall into three types: fine ranking result distillation, fine ranking prediction score distillation, and feature representation distillation. The practical experience with each scheme in Meituan search coarse ranking is described below.

Figure 2 Schematic of distilling fine ranking into coarse ranking

3.1.1 Fine ranking result list distillation

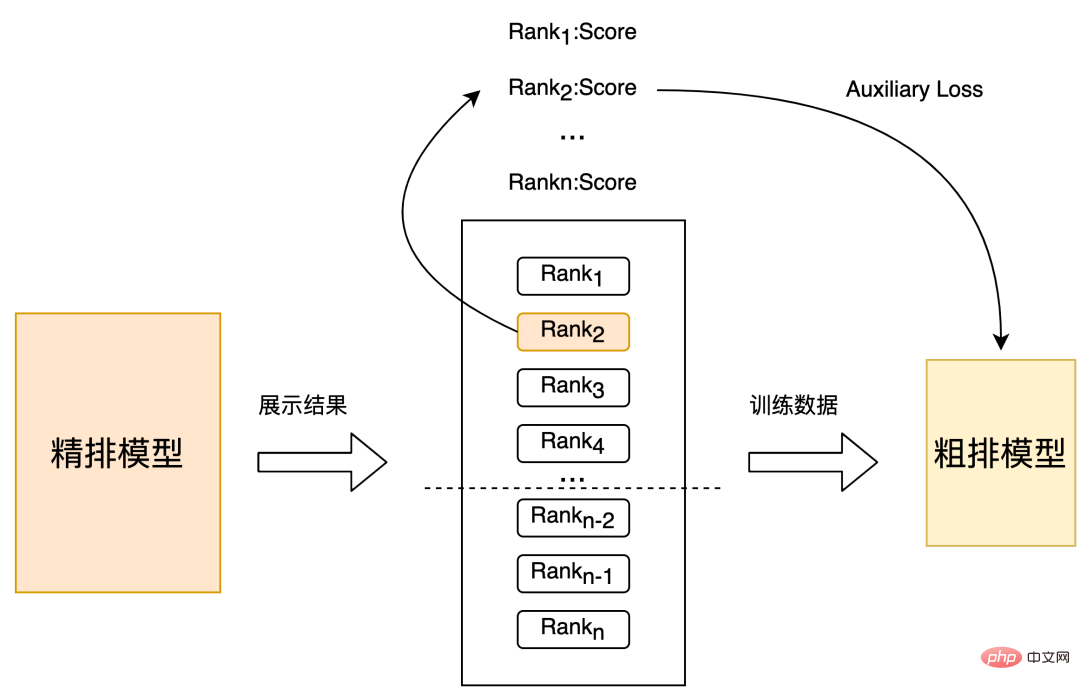

Coarse ranking is the stage immediately before fine ranking. Its goal is to pre-select a set of higher-quality candidates to pass on to fine ranking. From the perspective of training sample selection, besides using regular user behaviors (clicks, orders, payments) as positive samples and exposed items with no such behavior as negative samples, we can also introduce positive and negative samples constructed from the ranking results of the fine ranking model. This alleviates the sample selection bias of the coarse ranking model to some extent and also transfers the ranking ability of fine ranking to coarse ranking. The following describes our practical experience of distilling the coarse ranking model from fine ranking results in the Meituan search scenario.

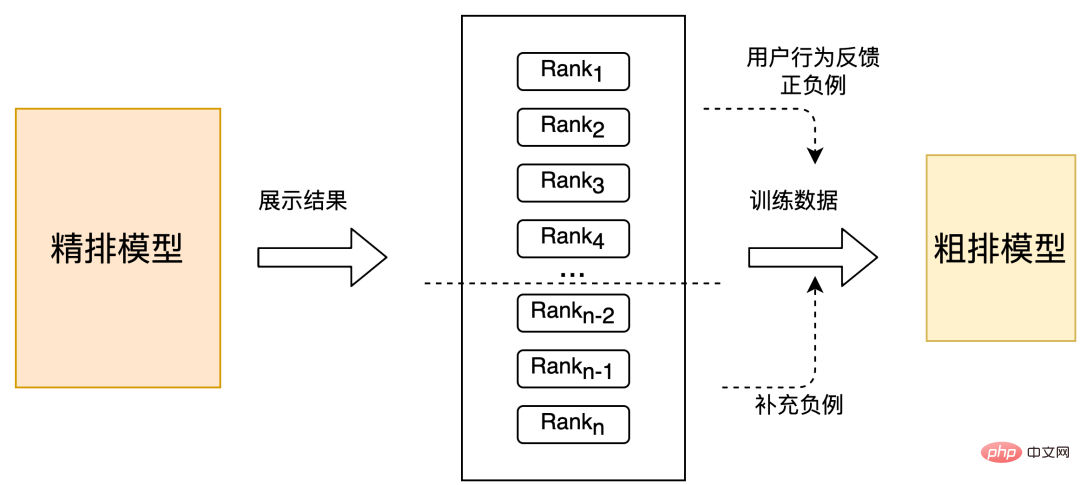

Strategy 1: On top of the positive and negative samples from user feedback, randomly sample a small number of unexposed items from the bottom of the fine ranking results to supplement the negative samples for coarse ranking, as shown in Figure 3. This change improves offline Recall@150 (see the appendix for an explanation of the metric) by 5 PP and online CTR by 0.1%.

Figure 3 Supplementing negative examples from the fine ranking results
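A minimal sketch of Strategy 1 follows, under stated assumptions: the function name, the `tail_size` and `num_extra_negatives` parameters, and the toy data are hypothetical, since the article does not say how large the "bottom" slice is or how many negatives are sampled.

```python
import random
from typing import List, Set, Tuple


def build_samples(fine_ranked: List[str],
                  exposed: Set[str],
                  acted: Set[str],
                  tail_size: int,
                  num_extra_negatives: int,
                  seed: int = 0) -> List[Tuple[str, int]]:
    """Return (item, label) pairs: user feedback plus sampled tail negatives."""
    # Regular feedback samples: exposed items, positive if the user acted on them.
    samples = [(item, 1 if item in acted else 0)
               for item in fine_ranked if item in exposed]
    # Unexposed items at the bottom of the fine-ranked list.
    tail_start = max(0, len(fine_ranked) - tail_size)
    tail = [item for item in fine_ranked[tail_start:] if item not in exposed]
    # Randomly pick a few of them as supplementary negatives.
    rng = random.Random(seed)
    extra = rng.sample(tail, min(num_extra_negatives, len(tail)))
    samples.extend((item, 0) for item in extra)
    return samples


# Toy usage: 20 fine-ranked items, the top 5 were exposed, one was clicked.
fine_ranked = [f"poi_{i}" for i in range(20)]
exposed = set(fine_ranked[:5])
samples = build_samples(fine_ranked, exposed, acted={"poi_1"},
                        tail_size=5, num_extra_negatives=2)
```

The supplementary negatives come from a region the user never saw, which is what lets them narrow the gap between the training sample space and the prediction sample space.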

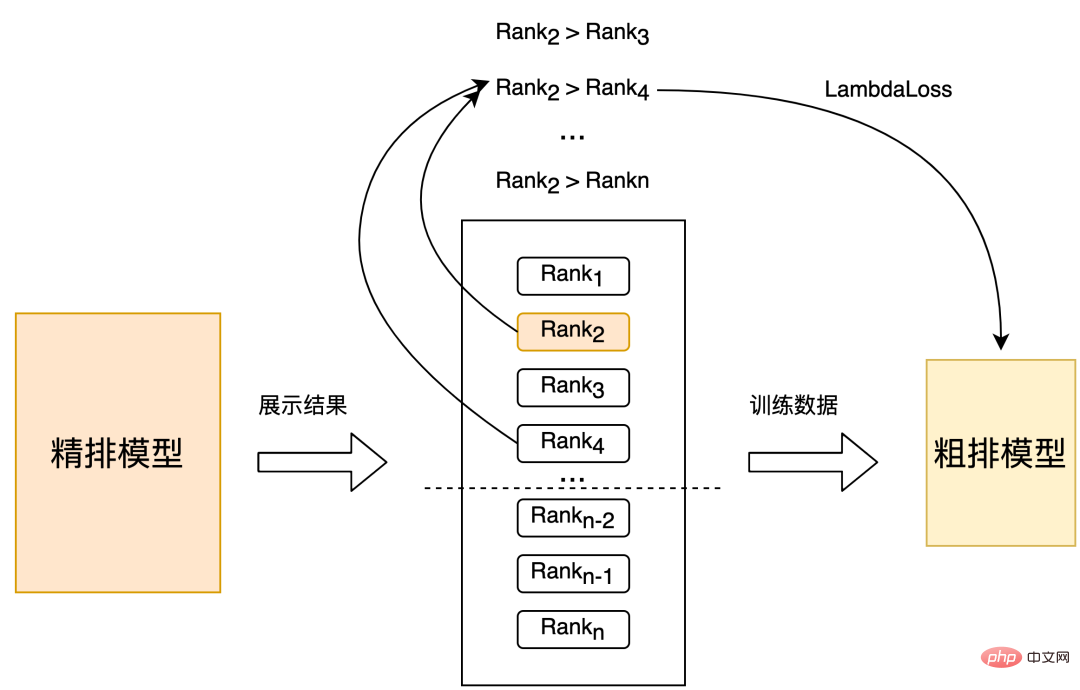

Strategy 2: Randomly sample directly from the fine-ranked set to obtain training samples, and use the fine ranking positions as labels to construct pairs for training, as shown in Figure 4 below. Compared with Strategy 1, offline Recall@150 improves by 2 PP and online CTR by 0.06%.

Figure 4 Constructing pair samples from earlier and later ranking positions

Strategy 3: Using the same sample set selection as Strategy 2, construct labels by binning the fine ranking positions, and then construct pairs according to the binned labels for training. Compared with Strategy 2, offline Recall@150 improves by 3 PP and online CTR by 0.1%.
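Strategies 2 and 3 differ only in how the fine ranking position becomes a label, which the following sketch tries to make concrete. The fixed-width binning, the `bin_size` parameter, and the decision to emit no pair for items in the same bin are assumptions for illustration; the article does not specify them.

```python
from itertools import combinations
from typing import List, Tuple


def position_to_bin(position: int, bin_size: int) -> int:
    """Map a 0-based fine ranking position to a bin id (smaller is better)."""
    return position // bin_size


def build_pairs(sampled: List[Tuple[str, int]], bin_size: int) -> List[Tuple[str, str]]:
    """Return (better, worse) pairs for items that fall in different bins.

    `sampled` holds (item, fine_rank_position) tuples. With bin_size=1 every
    position is its own bin, so raw positions act as labels (Strategy 2 style).
    """
    pairs = []
    for (item_a, pos_a), (item_b, pos_b) in combinations(sampled, 2):
        bin_a = position_to_bin(pos_a, bin_size)
        bin_b = position_to_bin(pos_b, bin_size)
        if bin_a < bin_b:
            pairs.append((item_a, item_b))
        elif bin_b < bin_a:
            pairs.append((item_b, item_a))
        # Same bin: no preference is asserted, so no pair is emitted.
    return pairs


sampled = [("a", 0), ("b", 3), ("c", 12), ("d", 14)]
pairs_binned = build_pairs(sampled, bin_size=10)  # Strategy 3 style
pairs_raw = build_pairs(sampled, bin_size=1)      # Strategy 2 style
```

Binning drops pairs such as ("a", "b") whose positions are close together, so the coarse model is not asked to reproduce fine-grained orderings that it may lack the capacity or features to learn.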

3.1.2 Fine ranking prediction score distillation

Ranking result distillation, described above, is a relatively coarse way of using fine ranking information. On top of it we further add prediction score distillation [8], with the aim of aligning the scores output by the coarse ranking model with the score distribution output by the fine ranking model as closely as possible, as shown in Figure 5 below:

Figure 5 Auxiliary loss constructed from fine ranking prediction scores

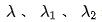

For the implementation, we use a two-stage distillation paradigm and distill the coarse ranking model from a pre-trained fine ranking model. The distillation loss is the mean squared error between the coarse ranking model output and the fine ranking model output, and a parameter Lambda controls the influence of the distillation loss on the final loss, as shown in formula (1). Fine ranking score distillation improves offline Recall@150 by 5 PP and online CTR by 0.05%.
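The structure of such a loss can be sketched in plain Python. The squared-error distillation term and the Lambda weight follow the description above; using binary cross-entropy as the task loss is an assumption, as are the function names and toy numbers.

```python
import math
from typing import List


def binary_cross_entropy(labels: List[float], preds: List[float]) -> float:
    """Assumed task loss; the article does not name the one actually used."""
    eps = 1e-7
    total = 0.0
    for y, p in zip(labels, preds):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(labels)


def mean_squared_error(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def coarse_loss(labels: List[float],
                coarse_preds: List[float],
                fine_preds: List[float],
                lam: float) -> float:
    """Task loss plus a Lambda-weighted MSE to the frozen fine ranking scores."""
    task = binary_cross_entropy(labels, coarse_preds)
    distill = mean_squared_error(coarse_preds, fine_preds)
    return task + lam * distill


labels = [1.0, 0.0]
coarse_preds = [0.8, 0.3]
fine_preds = [0.9, 0.1]  # scores from the pre-trained fine ranking model
loss_no_distill = coarse_loss(labels, coarse_preds, fine_preds, lam=0.0)
loss_with_distill = coarse_loss(labels, coarse_preds, fine_preds, lam=2.0)
```

In the two-stage paradigm the fine ranking scores are fixed inputs, so only the coarse ranking model would receive gradients from both terms.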

3.1.3 Feature Representation Distillation

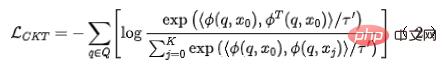

Using knowledge distillation to let fine ranking guide the representation modeling of coarse ranking has been verified in industry as an effective way to improve model performance [7]. However, distilling representations directly with traditional methods has two shortcomings. First, it cannot distill the order relationship between coarse ranking and fine ranking, whereas, as described above, ranking result distillation improved both offline and online results in our scenario. Second, traditional knowledge distillation schemes use KL divergence as the representation metric, which treats each dimension of the representation independently and cannot effectively distill highly correlated, structured information [9]. In the Meituan search scenario the data is highly structured, so traditional representation distillation may fail to capture this structured knowledge well.

We apply contrastive learning technology to coarse ranking model, so that the coarse ranking model can also distill the order relationship when distilling the representation of the fine ranking model. We use  to represent the rough model and

to represent the rough model and  to represent the fine model. Suppose q is a request in the data set

to represent the fine model. Suppose q is a request in the data set  is a positive example under the request, and

is a positive example under the request, and  is the corresponding k negative examples under the request.

is the corresponding k negative examples under the request.

We input  into the coarse and fine ranking networks respectively, and obtain their corresponding representations

into the coarse and fine ranking networks respectively, and obtain their corresponding representations  , and At the same time, we input

, and At the same time, we input  into the coarse ranking network and obtain the representation

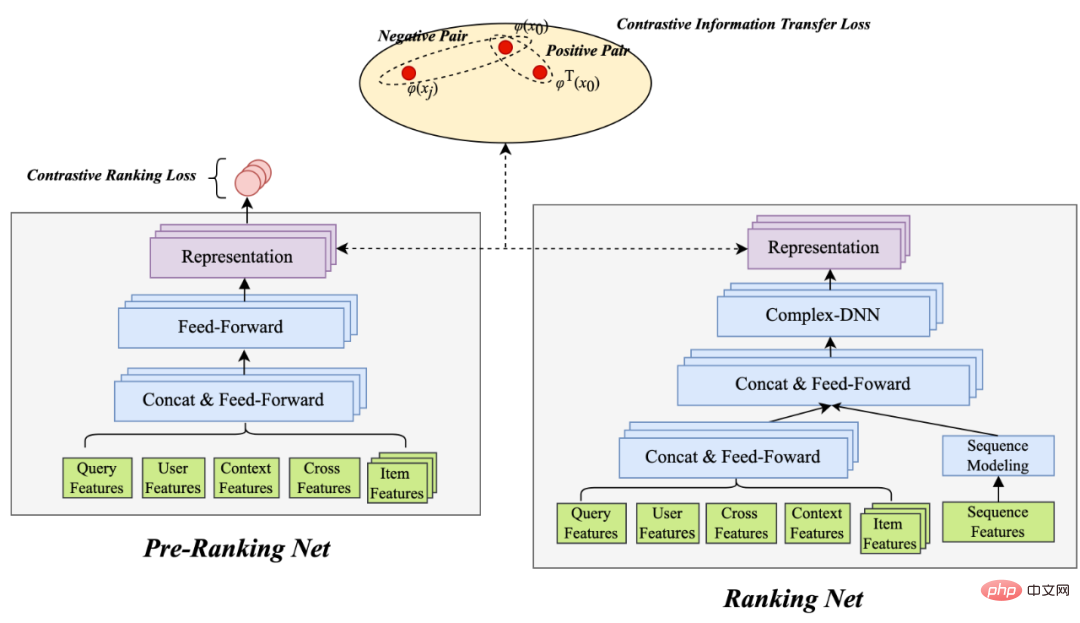

into the coarse ranking network and obtain the representation  encoded by the coarse ranking model. For the selection of negative example pairs for contrastive learning, we adopt the solution in Strategy 3 to divide the order of fine sorting into bins. The representation pairs of fine sorting and rough sorting in the same bin are regarded as positive examples, and the rough and fine sorting between different bins are regarded as positive examples. The representation pair is regarded as a negative example, and then InfoNCE Loss is used to optimize this goal:

encoded by the coarse ranking model. For the selection of negative example pairs for contrastive learning, we adopt the solution in Strategy 3 to divide the order of fine sorting into bins. The representation pairs of fine sorting and rough sorting in the same bin are regarded as positive examples, and the rough and fine sorting between different bins are regarded as positive examples. The representation pair is regarded as a negative example, and then InfoNCE Loss is used to optimize this goal:

In the loss, the similarity between two representations is their dot product, scaled by a temperature coefficient. From the properties of InfoNCE loss, minimizing it is equivalent to maximizing a lower bound on the mutual information between the coarse representation and the fine representation. This method therefore maximizes the consistency between the fine and coarse representations at the mutual-information level and can distill structured knowledge more effectively.
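As an illustration, a standard form of InfoNCE with dot-product similarity and a temperature can be written as below. Treating a fine ranking representation as the anchor, a same-bin coarse representation as the positive, and other-bin coarse representations as negatives is an assumption about how the pairs are arranged; the vectors and the temperature value are made up.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def info_nce(anchor: Vector,
             positive: Vector,
             negatives: List[Vector],
             temperature: float = 0.1) -> float:
    """Negative log of the softmax probability assigned to the positive pair."""
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with the max subtracted for numerical stability.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(v - max_logit) for v in logits))
    return log_sum_exp - logits[0]


fine_repr = [1.0, 0.0]                          # anchor: fine ranking representation
coarse_same_bin = [1.0, 0.0]                    # positive: coarse representation, same bin
coarse_other_bins = [[0.0, 1.0], [-1.0, 0.0]]   # negatives: coarse representations, other bins

aligned = info_nce(fine_repr, coarse_same_bin, coarse_other_bins, temperature=0.5)
misaligned = info_nce(fine_repr, [0.0, 1.0], [[1.0, 0.0]], temperature=0.5)
```

The loss is small when the positive pair has the largest dot product and grows when a negative is closer to the anchor than the positive, which is how the bin ordering enters the representation.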

Figure 6 Comparative learning of fine-ranking information transfer

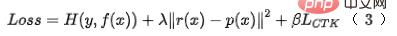

Based on the above formula (1) On top of this, supplementary contrastive learning representation distillation loss, offline effect Recall@150 14PP, online CTR 0.15%. For details of related work, please refer to our paper [10] (under submission).

3.2 Joint optimization of effect and performance

As mentioned earlier, the candidate set for online coarse ranking prediction is relatively large, and given the performance constraints of the full system pipeline, coarse ranking must take prediction efficiency into account. The work described above is all based on the paradigm of a simple DNN plus distillation, which has two problems:

- Limited by online performance, the model currently uses only simple features and does not introduce richer cross features, leaving room for further improvement.

- Distilling into a fixed coarse ranking model structure loses some of the distillation effect and leads to a suboptimal solution [11].

In our practical experience, directly introducing cross features into the coarse ranking layer cannot meet the online latency requirements. To solve these problems, we explored and deployed a coarse ranking modeling solution based on neural network architecture search. It optimizes the effectiveness and performance of the coarse ranking model simultaneously and selects the best feature combination and model structure that satisfy the coarse ranking latency requirement. The overall architecture is shown in Figure 7 below:

Figure 7 Features and model structure based on NAS

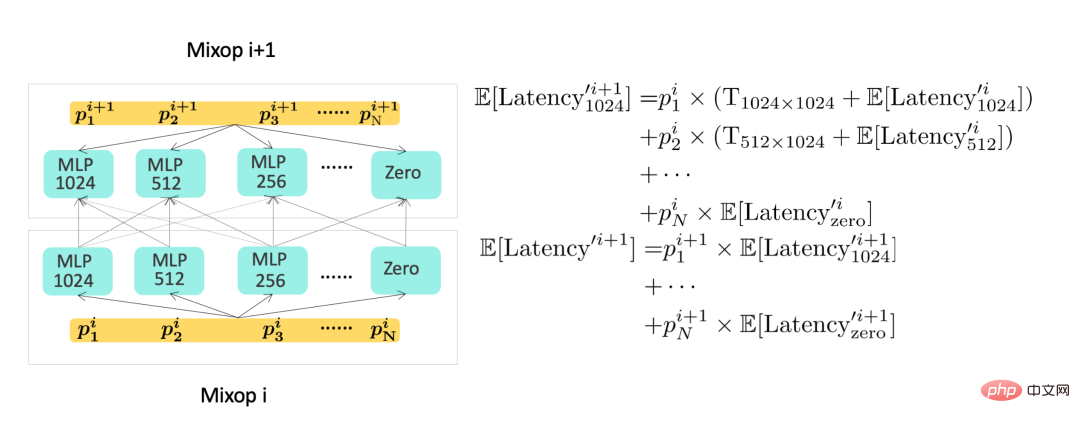

Select Below we briefly introduce the two key technical points of neural network architecture search (NAS) and the introduction of efficiency modeling:

- Neural network architecture search: As shown in Figure 7 above, we adopt a modeling approach based on ProxylessNAS [12]. In addition to the network parameters, model training adds feature mask parameters and network architecture parameters. These parameters are differentiable and are learned together with the model objective. For feature selection, we attach to each feature a mask parameter based on a Bernoulli distribution (see formula (4)); the θ parameter of the Bernoulli distribution is updated through backpropagation, which finally yields the importance of each feature. For structure selection, we use an L-layer Mixop representation, where each Mixop contains N candidate network structural units. In our experiments the units are multi-layer perceptrons with different numbers of hidden units, chosen from {1024, 512, 256, 128, 64}, plus a structural unit with 0 hidden units, which allows the search to select networks with different numbers of layers.

- Efficiency modeling: To include an efficiency metric in the model objective, we need a differentiable learning objective that represents the model's time consumption. The time consumption of the coarse ranking model consists mainly of feature time consumption and model structure time consumption.

For feature time consumption, the expected latency of each feature f_i can be modeled as shown in formula (5), using the latency of each feature recorded by the server.
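One way to read this, offered as a sketch rather than the article's formula: if a feature's latency is only paid when its Bernoulli mask is 1, the expected latency is θ times the recorded latency, which is differentiable in θ. The function names and the numbers below are hypothetical.

```python
import random


def expected_feature_latency(theta: float, latency_ms: float) -> float:
    """E[mask * latency] when mask ~ Bernoulli(theta)."""
    return theta * latency_ms


def simulated_feature_latency(theta: float,
                              latency_ms: float,
                              trials: int = 100_000,
                              seed: int = 0) -> float:
    """Monte Carlo check: average latency over sampled masks."""
    rng = random.Random(seed)
    kept = sum(1 for _ in range(trials) if rng.random() < theta)
    return kept * latency_ms / trials


# Hypothetical feature: kept with probability 0.3, costs 2.0 ms when fetched.
analytic = expected_feature_latency(0.3, 2.0)
simulated = simulated_feature_latency(0.3, 2.0)
```

Because the analytic form is linear in θ, a latency penalty in the loss would push θ down for slow features unless they contribute enough to effectiveness.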

In practice, features fall into two categories. One category is passed through from upstream, and its latency comes mainly from the upstream transmission delay. The other category is obtained locally (by reading KV storage or by computation). The latency of each feature combination can then be modeled in terms of the sizes of the two feature sets and two parameters that capture the system's feature-fetching concurrency.

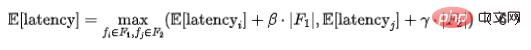

For latency modeling of the model structure, see the right part of Figure 7 above. Because the Mixops are executed sequentially, the model structure latency can be computed recursively, and the time consumption of the whole model part can be expressed by the last Mixop layer. A schematic diagram is shown in Figure 8 below:

Figure 8 Model latency calculation diagram

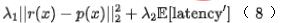

The left side of Figure 8 shows a coarse ranking network equipped with network architecture selection, in which each candidate neural unit in each layer carries a weight. The right side shows a schematic of the network latency calculation. The time consumption of the whole model prediction part can therefore be expressed by the last layer of the model, as shown in formula (7).
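A simplified sketch of accumulating expected latency over sequential Mixops is given below. It assumes that the weights of the candidate units in a layer come from a softmax over architecture parameters, as in ProxylessNAS, and that each unit's latency does not depend on which unit was chosen in the previous layer; the article's formula (7) may differ on both points. All names and latency numbers are hypothetical.

```python
import math
from typing import List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def expected_model_latency(arch_params: List[List[float]],
                           unit_latency_ms: List[List[float]]) -> float:
    """Accumulate expected latency layer by layer over sequential Mixops."""
    expected = 0.0
    for layer_params, layer_latency in zip(arch_params, unit_latency_ms):
        weights = softmax(layer_params)  # weight of each candidate unit
        # Expected latency of this Mixop, added to everything before it.
        expected += sum(w * lat for w, lat in zip(weights, layer_latency))
    return expected


# Two Mixop layers, two candidate units each (for example a wide and a narrow MLP).
latencies = [[1.0, 3.0], [2.0, 4.0]]
uniform = expected_model_latency([[0.0, 0.0], [0.0, 0.0]], latencies)
prefers_first = expected_model_latency([[50.0, 0.0], [50.0, 0.0]], latencies)
```

The running total after the last layer is the expected latency of the whole structure, and it is differentiable with respect to the architecture parameters, so it can be added to the training loss.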

Finally, we introduce the efficiency metric into the model. The final training loss is shown in formula (8), where f denotes the fine ranking network; the formula also contains a balance factor and the scoring outputs of coarse ranking and fine ranking, respectively.

Jointly optimizing the effectiveness and prediction performance of the coarse ranking model through neural network architecture search improves offline Recall@150 by 11 PP and, with no increase in online latency, online CTR by 0.12%. Details can be found in [13], which has been accepted by KDD 2022.

4. Summary

Starting in 2020, extensive engineering performance optimization allowed us to deploy an MLP model in the coarse ranking layer. In 2021 we continued to iterate on the coarse ranking model on top of this MLP model to improve its effectiveness.

First, we drew on distillation schemes commonly used in industry to link fine ranking with coarse ranking, and ran extensive experiments at three levels: fine ranking result distillation, fine ranking prediction score distillation, and feature representation distillation. These improved the coarse ranking model without increasing online latency. Second, because traditional distillation methods cannot handle structured feature information well in ranking scenarios, we developed our own contrastive-learning-based scheme for transferring fine ranking information to coarse ranking.

Finally, recognizing that coarse ranking optimization is essentially a trade-off between effectiveness and performance, we adopted multi-objective modeling to optimize both at once and used automatic neural network architecture search to solve the problem, letting the model automatically select the feature set and model structure with the best efficiency and effectiveness. In the future, we will continue to iterate on coarse ranking layer technology in the following directions:

- Multi-objective modeling for coarse ranking: The current coarse ranking model is essentially single-objective. We are trying to apply the multi-objective modeling of the fine ranking layer to coarse ranking.

- System-wide dynamic computing power allocation linked with coarse ranking: Coarse ranking can control the computing power spent on recall and on fine ranking. The computing power a model needs differs across scenarios, so dynamic allocation can reduce system computing power consumption without hurting online results. We have already achieved some online gains in this area.

5. Appendix

Traditional offline ranking metrics are mostly based on NDCG, MAP, and AUC. Coarse ranking, however, is essentially closer to a recall task whose goal is set selection, so traditional ranking metrics are not well suited to measuring the iteration of a coarse ranking model. We follow the Recall metric in [6] as the offline measure for coarse ranking: taking the fine ranking results as the ground truth, it measures how well the top K results of coarse ranking align with those of fine ranking.

In physical terms, the metric measures the overlap between the top K of coarse ranking and the top K of fine ranking, which better matches the set-selection nature of coarse ranking.
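The overlap described above can be computed as in the following sketch. Normalizing the overlap by K is an assumption, since the exact definition is not reproduced here, and the item ids are made up.

```python
from typing import List


def recall_at_k(coarse_ranked: List[str], fine_ranked: List[str], k: int) -> float:
    """Share of the fine ranking top K that also appears in the coarse ranking top K."""
    top_coarse = set(coarse_ranked[:k])
    top_fine = set(fine_ranked[:k])
    return len(top_coarse & top_fine) / k


coarse_order = ["a", "b", "c", "d"]
fine_order = ["c", "d", "a", "b"]

recall_2 = recall_at_k(coarse_order, fine_order, k=2)
recall_3 = recall_at_k(coarse_order, fine_order, k=3)
```

The order inside each top K set is ignored, which fits a stage whose job is to hand the right set to fine ranking rather than to produce the final order.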

6. Author introduction

Xiao Jiang, Suo Gui, Li Xiang, Cao Yue, Pei Hao, Xiao Yao, Dayao, Chen Sheng, Yun Sen, Li Qian, and others, all from the Meituan Platform / Search Recommendation Algorithm Department.

The above is the detailed content of Exploration and practice of Meituan search rough ranking optimization. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

Paper address: https://arxiv.org/abs/2307.09283 Code address: https://github.com/THU-MIG/RepViTRepViT performs well in the mobile ViT architecture and shows significant advantages. Next, we explore the contributions of this study. It is mentioned in the article that lightweight ViTs generally perform better than lightweight CNNs on visual tasks, mainly due to their multi-head self-attention module (MSHA) that allows the model to learn global representations. However, the architectural differences between lightweight ViTs and lightweight CNNs have not been fully studied. In this study, the authors integrated lightweight ViTs into the effective

In-depth interpretation: Why is Laravel as slow as a snail?

Mar 07, 2024 am 09:54 AM

In-depth interpretation: Why is Laravel as slow as a snail?

Mar 07, 2024 am 09:54 AM

Laravel is a popular PHP development framework, but it is sometimes criticized for being as slow as a snail. What exactly causes Laravel's unsatisfactory speed? This article will provide an in-depth explanation of the reasons why Laravel is as slow as a snail from multiple aspects, and combine it with specific code examples to help readers gain a deeper understanding of this problem. 1. ORM query performance issues In Laravel, ORM (Object Relational Mapping) is a very powerful feature that allows

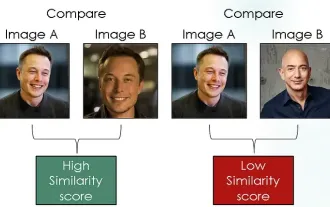

Exploring Siamese networks using contrastive loss for image similarity comparison

Apr 02, 2024 am 11:37 AM

Exploring Siamese networks using contrastive loss for image similarity comparison

Apr 02, 2024 am 11:37 AM

Introduction In the field of computer vision, accurately measuring image similarity is a critical task with a wide range of practical applications. From image search engines to facial recognition systems and content-based recommendation systems, the ability to effectively compare and find similar images is important. The Siamese network combined with contrastive loss provides a powerful framework for learning image similarity in a data-driven manner. In this blog post, we will dive into the details of Siamese networks, explore the concept of contrastive loss, and explore how these two components work together to create an effective image similarity model. First, the Siamese network consists of two identical subnetworks that share the same weights and parameters. Each sub-network encodes the input image into a feature vector, which

Discussion on Golang's gc optimization strategy

Mar 06, 2024 pm 02:39 PM

Discussion on Golang's gc optimization strategy

Mar 06, 2024 pm 02:39 PM

Golang's garbage collection (GC) has always been a hot topic among developers. As a fast programming language, Golang's built-in garbage collector can manage memory very well, but as the size of the program increases, some performance problems sometimes occur. This article will explore Golang’s GC optimization strategies and provide some specific code examples. Garbage collection in Golang Golang's garbage collector is based on concurrent mark-sweep (concurrentmark-s

C++ program optimization: time complexity reduction techniques

Jun 01, 2024 am 11:19 AM

C++ program optimization: time complexity reduction techniques

Jun 01, 2024 am 11:19 AM

Time complexity measures the execution time of an algorithm relative to the size of the input. Tips for reducing the time complexity of C++ programs include: choosing appropriate containers (such as vector, list) to optimize data storage and management. Utilize efficient algorithms such as quick sort to reduce computation time. Eliminate multiple operations to reduce double counting. Use conditional branches to avoid unnecessary calculations. Optimize linear search by using faster algorithms such as binary search.

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed!

Mar 06, 2024 pm 02:33 PM

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed!

Mar 06, 2024 pm 02:33 PM

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed! Laravel, as a popular PHP framework, provides developers with rich functions and a convenient development experience. However, as the size of the project increases and the number of visits increases, we may face the challenge of performance bottlenecks. This article will delve into Laravel performance optimization techniques to help developers discover and solve potential performance problems. 1. Database query optimization using Eloquent delayed loading When using Eloquent to query the database, avoid

Laravel performance bottleneck revealed: optimization solution revealed!

Mar 07, 2024 pm 01:30 PM

Laravel performance bottleneck revealed: optimization solution revealed!

Mar 07, 2024 pm 01:30 PM

Laravel performance bottleneck revealed: optimization solution revealed! With the development of Internet technology, the performance optimization of websites and applications has become increasingly important. As a popular PHP framework, Laravel may face performance bottlenecks during the development process. This article will explore the performance problems that Laravel applications may encounter, and provide some optimization solutions and specific code examples so that developers can better solve these problems. 1. Database query optimization Database query is one of the common performance bottlenecks in Web applications. exist

You can understand the principles of convolutional neural networks even with zero foundation! Super detailed!

Jun 04, 2024 pm 08:19 PM

You can understand the principles of convolutional neural networks even with zero foundation! Super detailed!

Jun 04, 2024 pm 08:19 PM

I believe that friends who love technology and have a strong interest in AI like the author must be familiar with convolutional neural networks, and must have been confused by such an "advanced" name for a long time. The author will enter the world of convolutional neural networks from scratch today ~ share it with everyone! Before we dive into convolutional neural networks, let’s take a look at how images work. Image Principle Images are represented in computers by numbers (0-255), and each number represents the brightness or color information of a pixel in the image. Among them: Black and white image: Each pixel has only one value, and this value varies between 0 (black) and 255 (white). Color image: Each pixel contains three values, the most common is the RGB (Red-Green-Blue) model, which is red, green and blue