Edge computing technology in autonomous driving systems

Edge computing is a computing model that performs computation at the edge of the network. It processes data flowing in two directions: downlink data from cloud services and uplink data from Internet of Everything services. "Edge" is a relative concept, referring to any computing, storage, and network resources on the path from the data source to the cloud computing center. Depending on the needs of the application and the actual scenario, the edge can be one or more resource nodes along this path. In business terms, edge computing is the extension and evolution of cloud computing's aggregation nodes beyond the data center. It mainly takes three implementation forms: edge cloud, edge network, and edge gateway.

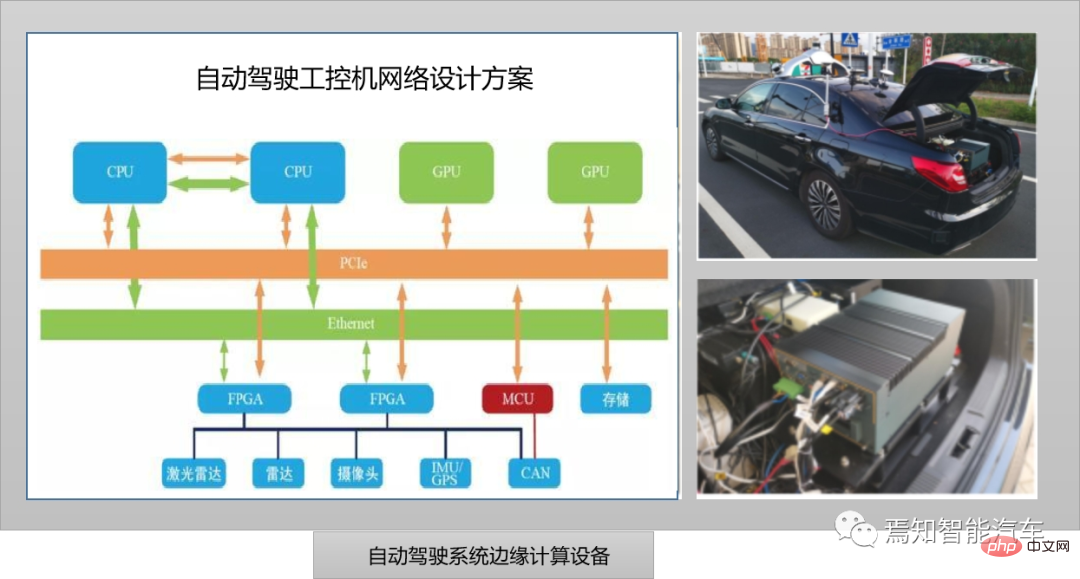

The picture above shows an industrial personal computer (IPC) currently used in autonomous driving. It is essentially a ruggedized, enhanced personal computer that can operate reliably in an industrial environment as an industrial controller. It uses an all-steel industrial chassis that complies with EIA standards to improve its resistance to electromagnetic interference, and it uses a bus structure and modular design to prevent single points of failure. The network design of this autonomous driving IPC takes the requirements of ISO 26262 fully into account: the CPU, GPU, FPGA, and bus are all designed with redundancy. If the IPC system as a whole fails, a redundant MCU can still ensure safety by sending instructions directly to the vehicle CAN bus to bring the vehicle to a stop. This centralized architecture suits the next generation of centralized autonomous driving solutions. The IPC acts as a next-generation centralized domain controller that consolidates all computing work in one place, so algorithm iteration does not need to pay much attention to overall hardware upgrades or automotive-grade requirements.
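The fallback behavior described above can be illustrated with a small sketch. It assumes, purely for illustration, that the redundant MCU watches a periodic heartbeat from the IPC and takes over when the heartbeat is late. The class name, the timeout value, and the command dictionaries are hypothetical and do not come from any real controller or CAN stack.

```python
from dataclasses import dataclass


@dataclass
class HeartbeatWatchdog:
    """Hypothetical MCU-side monitor for the main IPC (illustrative only)."""
    timeout_s: float        # assumed heartbeat deadline in seconds
    last_beat: float = 0.0  # time of the most recent heartbeat

    def beat(self, now: float) -> None:
        # Called whenever the IPC reports that it is alive.
        self.last_beat = now

    def ipc_alive(self, now: float) -> bool:
        # The IPC counts as healthy while the last heartbeat is recent enough.
        return (now - self.last_beat) <= self.timeout_s


def select_command(watchdog: HeartbeatWatchdog, now: float, ipc_command: dict) -> dict:
    """Forward the IPC command while it is healthy, else request a safe stop."""
    if watchdog.ipc_alive(now):
        return ipc_command
    # Assumed fallback: the MCU issues its own stop request to the vehicle bus.
    return {"source": "mcu", "action": "safe_stop"}


wd = HeartbeatWatchdog(timeout_s=0.1)
wd.beat(now=1.0)
normal = select_command(wd, now=1.05, ipc_command={"source": "ipc", "action": "cruise"})
fallback = select_command(wd, now=1.5, ipc_command={"source": "ipc", "action": "cruise"})
```

In a real vehicle the stop request would be encoded as CAN frames and the timing would be derived from the safety analysis; the sketch only shows the decision logic.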

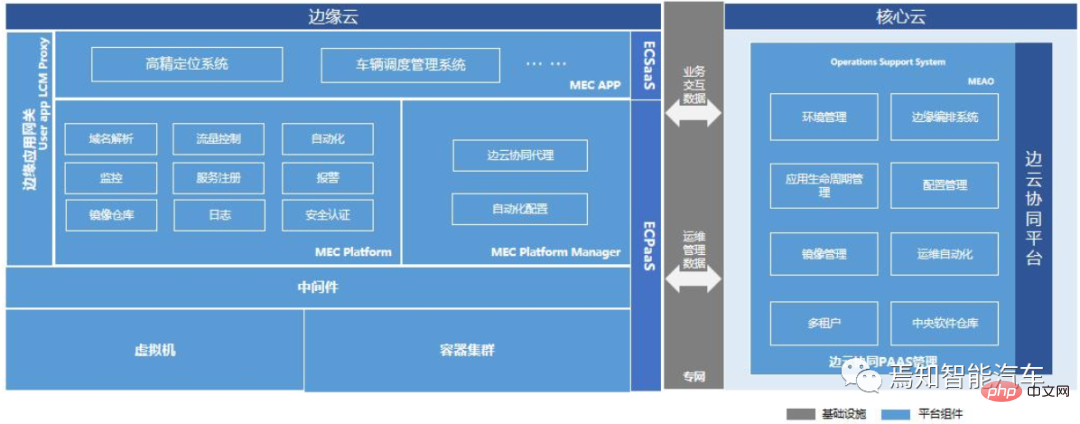

Edge Computing and Edge Cloud

In current autonomous driving, large-scale artificial intelligence models and large-scale centralized data analysis run in the cloud, because the cloud has abundant computing resources and can complete data processing in a very short time. However, relying solely on the cloud to serve autonomous vehicles is not feasible in many cases. Autonomous vehicles generate a large amount of data during driving that must be processed in real time. If this data is sent through the core network to a remote cloud for processing, the transmission alone introduces a large delay and cannot meet the real-time requirements of data processing. The bandwidth of the core network also struggles to support a large number of autonomous vehicles sending large amounts of data to the cloud at the same time. Moreover, once the core network becomes congested and data transmission becomes unstable, the driving safety of autonomous vehicles cannot be guaranteed.

Edge computing focuses on local services with high real-time requirements and heavy network load, and its computation is oriented toward localization. It is better suited to local, small-scale intelligent analysis and preprocessing based on integrated algorithm models. Applying edge computing to autonomous driving helps solve the problems autonomous vehicles face in acquiring and processing environmental data.

As two important computing approaches for the digital transformation of industry, edge computing and cloud computing coexist, complement each other, and reinforce each other to jointly solve the computing problems of the big data era.

Edge computing refers to a computing model that performs computation at the edge of the network. It operates on downlink data from cloud services and uplink data from Internet of Everything services. The "edge" in edge computing refers to any computing and network resources on the path from the data source to the cloud computing center. In short, edge computing deploys servers at edge nodes near users (such as wireless access points) so that services are provided at the edge of the network, which avoids long-distance data transmission and gives users faster responses. Task offloading technology offloads the computing tasks of autonomous vehicles to other edge nodes for execution, solving the problem of insufficient on-board computing resources.
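The offloading trade-off can be made concrete with a simple delay comparison. The sketch below is a common textbook-style simplification and not a method taken from this article: a task is offloaded only when upload time plus edge execution time plus round-trip delay is shorter than local execution time. All names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float      # CPU cycles the task needs (assumed known)
    input_bits: float  # amount of data that must be uploaded


def local_time(task: Task, local_hz: float) -> float:
    # Time to run the task on the vehicle's own processor.
    return task.cycles / local_hz


def offload_time(task: Task, uplink_bps: float, edge_hz: float, rtt_s: float = 0.0) -> float:
    # Upload time + execution time on the edge node + network round trip.
    return task.input_bits / uplink_bps + task.cycles / edge_hz + rtt_s


def should_offload(task: Task, local_hz: float, uplink_bps: float,
                   edge_hz: float, rtt_s: float = 0.0) -> bool:
    # Offload only if it finishes sooner than running locally.
    return offload_time(task, uplink_bps, edge_hz, rtt_s) < local_time(task, local_hz)


# Hypothetical numbers: 2e9 cycles, 8 Mbit of input data.
task = Task(cycles=2e9, input_bits=8e6)
fast_link = should_offload(task, local_hz=1e9, uplink_bps=100e6, edge_hz=10e9, rtt_s=0.01)
slow_link = should_offload(task, local_hz=1e9, uplink_bps=1e6, edge_hz=10e9, rtt_s=0.01)
```

With the fast link the offloaded path takes about 0.29 s against 2 s locally, so offloading wins; with the slow link the upload alone takes 8 s, so local execution wins. A production scheduler would also weigh energy, queueing at the edge node, and link reliability.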

Edge computing has the characteristics of proximity, low latency, locality and location awareness. Among them, proximity means that edge computing is close to the information source. It is suitable for capturing and analyzing key information in big data through data optimization. It can directly access the device, serve edge intelligence more efficiently, and easily derive specific application scenarios. Low latency means that edge computing services are close to the terminal devices that generate data. Compared with cloud computing, latency is greatly reduced, especially in smart driving application scenarios, making the feedback process faster. Locality means that edge computing can run in isolation from the rest of the network to achieve localized, relatively independent computing. On the one hand, it ensures local data security, and on the other hand, it reduces the dependence of computing on network quality. Location awareness means that when the edge network is part of a wireless network, edge computing-style local services can use relatively little information to determine the location of all connected devices. These services can be applied to location-based service application scenarios.

At the same time, edge computing is gradually evolving toward heterogeneous computing, edge intelligence, edge-cloud collaboration, and 5G edge computing. Heterogeneous computing combines computing units with different instruction sets and architectures into a single computing system to meet the diverse computing needs of edge services. It can not only support the infrastructure of a new generation of "connected computing" but also meet the needs of fragmented industries and differentiated applications, improve the utilization of computing resources, and support flexible deployment and scheduling of computing power.

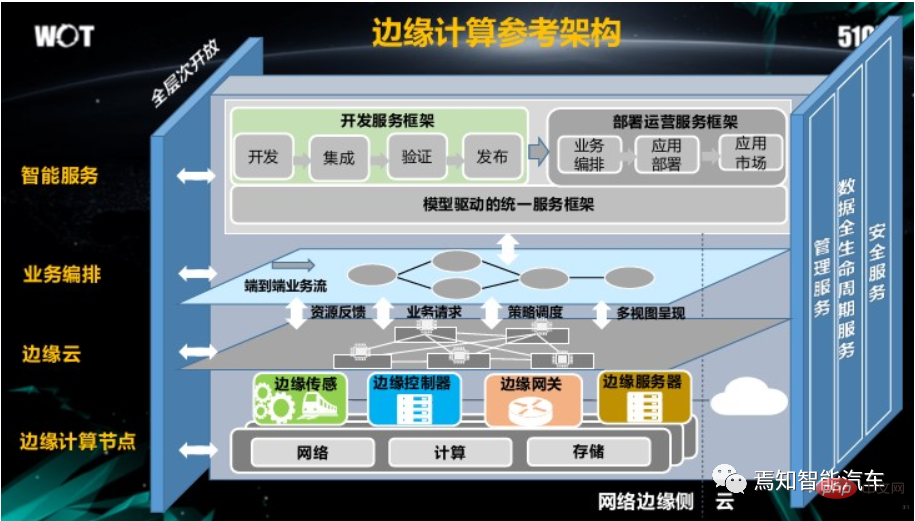

Edge Computing Reference Architecture

Each layer of the edge computing reference architecture provides a model-based open interface, making the architecture open at every level. Vertical management services, full data life cycle services, and security services deliver intelligent services across the entire business process and life cycle.

As shown in the figure above, the edge computing reference architecture mainly includes the following contents:

The entire system is divided into four layers: intelligent services, business orchestration, edge cloud, and edge computing nodes. Edge computing is located between the cloud and field devices. The edge layer supports the access of various field devices below it and connects to the cloud above it. The edge layer includes two main parts: the edge node and the edge manager. The edge node is a hardware entity and is the core carrier of edge computing services. The edge manager is primarily software, and its main function is to manage edge nodes in a unified way. Edge computing nodes generally have computing, network, and storage resources. The edge computing system uses these resources in two ways. First, it directly encapsulates computing, network, and storage resources and provides a calling interface; the edge manager then uses edge node resources through code download, network policy configuration, and database operations. Second, edge node resources are further encapsulated into functional modules according to functional area, and the edge manager combines and calls these modules through model-driven business orchestration to achieve integrated development and agile deployment of edge computing services.
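The second usage pattern, where the edge manager combines functional modules into a service, can be sketched as follows. This is only an illustration of the idea under simple assumptions; the class names `EdgeNode` and `EdgeManager`, the module names, and the node name are hypothetical and do not correspond to any particular edge platform.

```python
from typing import Any, Callable, Dict, List


class EdgeNode:
    """Hypothetical edge node that exposes its resources as named modules."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.modules: Dict[str, Callable[[Any], Any]] = {}

    def register(self, module_name: str, fn: Callable[[Any], Any]) -> None:
        # Encapsulate a capability of this node as a callable functional module.
        self.modules[module_name] = fn


class EdgeManager:
    """Hypothetical manager that orchestrates modules on the nodes it manages."""

    def __init__(self) -> None:
        self.nodes: Dict[str, EdgeNode] = {}

    def add_node(self, node: EdgeNode) -> None:
        self.nodes[node.name] = node

    def run_pipeline(self, node_name: str, steps: List[str], data: Any) -> Any:
        # The "model" here is just an ordered list of module names.
        node = self.nodes[node_name]
        for step in steps:
            data = node.modules[step](data)
        return data


node = EdgeNode("rsu-1")
node.register("filter", lambda xs: [x for x in xs if x > 0])
node.register("scale", lambda xs: [2 * x for x in xs])

manager = EdgeManager()
manager.add_node(node)
result = manager.run_pipeline("rsu-1", ["filter", "scale"], [-1, 2, 3])
```

Here the service is described as data (the list of step names) rather than hard-coded calls, which is the property that makes redeployment and recombination quick.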

Hardware infrastructure of edge computing

1. Edge server

The edge server is the main computing carrier for edge computing and edge data centers and can be deployed in an operator's machine room. Because edge computing environments vary greatly, and edge services have individual requirements for latency, bandwidth, GPU, and AI, the edge server should minimize on-site work by engineers and provide strong management and operations capabilities, including status collection, operation control, and management interfaces, so that it can be managed remotely and automatically.

In autonomous driving systems, an intelligent edge all-in-one machine is usually used. It integrates computing, storage, networking, virtualization, environmental power, and other components into a single industrial computer to support the normal operation of the autonomous driving system.

2. Edge access network

The edge computing access network refers to the network infrastructure that traffic passes through between the user system and the edge computing system, including but not limited to campus networks, access networks, and edge gateways. It features convergence, low latency, high bandwidth, massive connectivity, and high security.

3. Edge internal network

The edge computing internal network refers to the network infrastructure inside the edge computing system, such as the network equipment connected to the servers, the network equipment that interconnects with external networks, and the networks built from this equipment. The internal network of edge computing features a simplified architecture, complete functionality, and greatly reduced performance loss; at the same time, it can support edge-cloud collaboration and centralized management and control.

Edge computing systems are naturally distributed: each one is small, but there are many of them. A single-point management mode can hardly meet operational needs, and it also occupies industrial computer resources and reduces efficiency. In addition, edge computing services place more emphasis on end-to-end latency, bandwidth, and security, so collaboration between the edge cloud and the edge is also very important. Generally, an intelligent cross-domain management and orchestration system needs to be introduced into the cloud computing system to manage and control the network infrastructure of all edge computing systems within a given scope in a unified way, and to ensure automated, efficient configuration of network and computing resources through a centralized management model based on edge-cloud collaboration.

4. Edge computing interconnection network

The edge computing interconnection network covers the network infrastructure that traffic passes through between an edge computing system and cloud computing systems (such as public clouds, private clouds, communication clouds, and user-built clouds), other edge computing systems, and various data centers. It features diversified connections and low cross-domain latency.

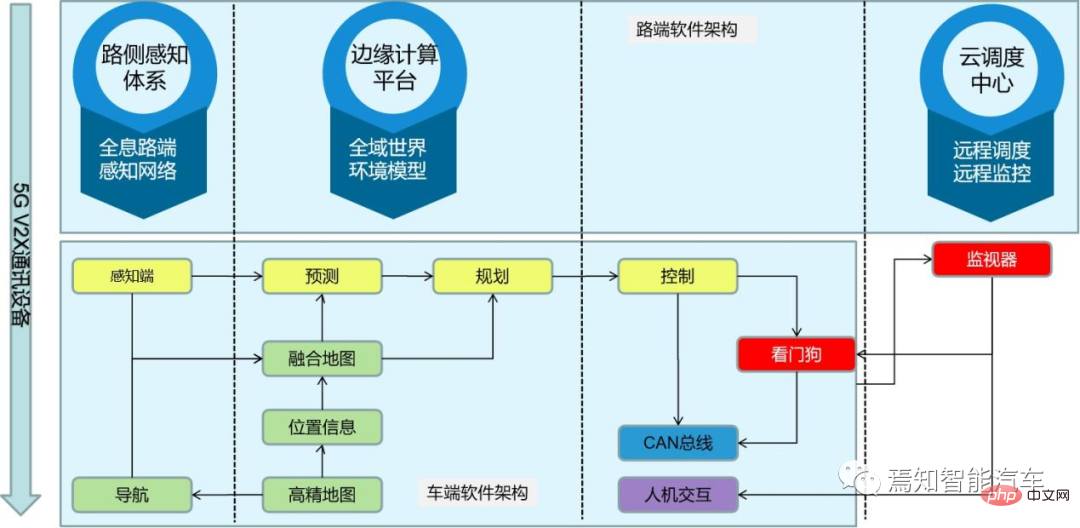

How to combine edge computing and autonomous driving systems

In the next stage, in order to achieve higher-order autonomous driving system tasks, relying solely on single-vehicle intelligence is completely insufficient.

Cooperative sensing and task offloading are the main applications of edge computing in autonomous driving, and these two technologies make high-level autonomous driving possible. Cooperative sensing allows cars to obtain sensor information from other edge nodes, expanding the sensing range of autonomous vehicles and making the environmental data more complete. An autonomous car integrates sensors such as lidar and cameras. At the same time, it needs comprehensive perception of vehicles, roads, and traffic data through vehicle networking such as V2X, so that it obtains more information than a single vehicle's internal and external sensors can provide, perceives the environment beyond visual range, and shares its position in real time through high-definition 3D dynamic maps. The collected data is exchanged with roadside edge nodes and surrounding vehicles to extend perception and achieve vehicle-to-vehicle and vehicle-to-road collaboration. The cloud computing center collects data from widely distributed edge nodes, senses the operating status of the transportation system, and uses big data and artificial intelligence algorithms to issue reasonable dispatching instructions to edge nodes, traffic signal systems, and vehicles, thereby improving the operating efficiency of the system. For example, in bad weather such as rain, snow, or heavy fog, or in scenes such as intersections and turns, radars and cameras cannot clearly identify obstacles ahead. Using V2X to obtain real-time road and driving data makes intelligent prediction of road conditions possible and helps avoid accidents.
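As a minimal sketch of cooperative sensing, the function below merges the vehicle's own detections with detections shared by a roadside node. It assumes all positions are already expressed in a common 2D map frame in meters and uses a simple distance threshold to drop duplicates; both the function name and the threshold are hypothetical, and real systems use far more elaborate association and fusion.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in a shared map frame, meters (assumed)


def merge_detections(own: List[Point], shared: List[Point],
                     radius_m: float = 1.0) -> List[Point]:
    """Add shared detections that are not already seen by the ego vehicle."""
    merged = list(own)
    for candidate in shared:
        # Keep the candidate only if no existing detection lies within radius_m.
        if all(math.dist(candidate, kept) > radius_m for kept in merged):
            merged.append(candidate)
    return merged


own_view = [(0.0, 10.0), (5.0, 20.0)]
rsu_view = [(0.2, 10.1), (40.0, 3.0)]  # first point duplicates an own detection
combined = merge_detections(own_view, rsu_view)
# combined == [(0.0, 10.0), (5.0, 20.0), (40.0, 3.0)]
```

The object at (40.0, 3.0) is outside what the ego vehicle reported, so it is the kind of beyond-visual-range information that the roadside node contributes.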

With the improvement of autonomous driving levels and the increase in the number of smart sensors equipped, autonomous vehicles generate a large amount of raw data every day. These raw data require local real-time processing, fusion, and feature extraction, including target detection and tracking based on deep learning. At the same time, V2X needs to be used to improve the perception of the environment, roads and other vehicles, and use 3D high-definition maps for real-time modeling and positioning, path planning and selection, and driving strategy adjustment to safely control the vehicle. Since these tasks require real-time processing and response in the vehicle at all times, a powerful and reliable edge computing platform is required to perform them. Considering the diversity of computing tasks, in order to improve execution efficiency and reduce power consumption and cost, it is generally necessary to support heterogeneous computing platforms.

The edge computing architecture of autonomous driving relies on edge-cloud collaboration and on the communication infrastructure and services provided by LTE/5G. The edge side mainly refers to vehicle-mounted units, roadside units (RSUs), and mobile edge computing (MEC) servers. The vehicle-mounted unit is the main body of environmental perception, decision-making and planning, and vehicle control, but it relies on the cooperation of RSUs or MEC servers. For example, an RSU provides the vehicle-mounted unit with more information about roads and pedestrians. Some functions, however, are better suited to the cloud or can only run there, for example vehicle remote control, vehicle simulation and verification, node management, and data persistence and management.

For autonomous driving systems, edge computing offers advantages such as load integration, heterogeneous computing, real-time processing, connectivity and interoperability, and security optimization.

1. "Load integration"

Load integration uses virtualization to run workloads with different attributes, such as ADAS, IVI, digital instruments, head-up display, and rear-seat entertainment, on the same hardware platform. At the same time, load integration based on virtualization and a hardware abstraction layer makes it easier to implement cloud business orchestration, deep learning model updates, and software and firmware upgrades for the entire vehicle driving system.

2. "Heterogeneous computing"

Heterogeneous computing means that the computing tasks with different attributes integrated on the edge platform of the autonomous driving system are computed in different ways, according to how their performance and energy efficiency differ across hardware platforms. Such tasks include geolocation and path planning, deep-learning-based target recognition and detection, image preprocessing and feature extraction, and sensor fusion and target tracking. GPUs are good at the convolution computations used for target recognition and tracking. CPUs deliver better performance and lower energy consumption for logic-heavy computation. Digital signal processors (DSPs) have the advantage in feature extraction algorithms such as those used for positioning. This heterogeneous approach greatly improves the performance-to-power ratio of the computing platform and reduces computing latency. Heterogeneous computing selects an appropriate hardware implementation for each computing task, makes full use of the strengths of different hardware platforms, and hides hardware diversity behind a unified upper-layer software interface.
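The idea of a unified interface that hides the hardware choice can be sketched as a small dispatcher. The task-to-device mapping follows the preferences described above (GPU for convolution-heavy recognition, CPU for logic, DSP for feature extraction); the task names, the `assign` function, and the rule that the CPU is always available as a fallback are assumptions made for this example.

```python
from typing import Dict, Iterable, Set

# Preferred device per task type, following the description above.
PREFERRED_DEVICE: Dict[str, str] = {
    "object_detection": "GPU",    # convolution-heavy deep learning
    "path_planning": "CPU",       # logic-heavy computation
    "feature_extraction": "DSP",  # signal-processing style algorithms
}


def assign(tasks: Iterable[str], available: Set[str]) -> Dict[str, str]:
    """Map each task to its preferred device, falling back to the CPU."""
    plan: Dict[str, str] = {}
    for task in tasks:
        device = PREFERRED_DEVICE.get(task, "CPU")
        # Assumption: a CPU is always present, so it serves as the fallback.
        plan[task] = device if device in available else "CPU"
    return plan


plan = assign(
    ["object_detection", "path_planning", "feature_extraction"],
    available={"CPU", "GPU"},
)
# plan == {"object_detection": "GPU", "path_planning": "CPU",
#          "feature_extraction": "CPU"}
```

Callers only name the task; which accelerator actually runs it is decided in one place, so the upper-layer software does not change when the hardware does.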

3. "Real-time processing"

As we all know, the automatic driving system has extremely high real-time requirements, because in a dangerous situation the system may have only a few seconds to brake and avoid a collision. Moreover, the braking reaction time covers the response time of the entire driving system, including cloud computing processing, vehicle-to-vehicle negotiation, on-board system computation, and the braking action itself. When the autonomous driving response is broken down into real-time requirements for each functional module of the edge computing platform, it must be refined into perception and detection time, fusion and analysis time, and behavior and path planning time. Network latency must also be considered, which is why the low-latency, high-reliability application scenarios brought by 5G are critical: they can enable self-driving cars to achieve end-to-end latency of less than 1 ms and reliability close to 100%. In addition, 5G can flexibly allocate network processing capacity according to priority, ensuring a faster response for vehicle control signal transmission.
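To see why each stage's latency matters, it helps to add the stages up and convert the total into distance traveled before the vehicle even begins to react. The stage names follow the breakdown above, but every number in this sketch is a hypothetical placeholder rather than a measured or required value.

```python
from typing import Dict


def total_latency_ms(stages: Dict[str, float]) -> float:
    # End-to-end response time is the sum of the sequential stage latencies.
    return sum(stages.values())


def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    # Distance covered at constant speed during the response time.
    speed_ms = speed_kmh / 3.6          # km/h -> m/s
    return speed_ms * latency_ms / 1000.0


# Hypothetical budget in milliseconds (placeholders, not real requirements).
budget = {
    "perception_detection": 30.0,
    "fusion_analysis": 20.0,
    "behavior_path_planning": 30.0,
    "network": 20.0,
}

latency = total_latency_ms(budget)              # 100 ms in this example
blind_distance = distance_travelled_m(72.0, latency)
```

At 72 km/h (20 m/s), a 100 ms response means the car covers roughly 2 m before any braking command takes effect, which is why shaving milliseconds from the network and from each processing stage is worthwhile.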

4. "Connectivity and Interoperability"

Edge computing for self-driving cars is inseparable from vehicle wireless communication technology (V2X, vehicle-to-everything), which gives self-driving cars a means of communicating with the other elements of intelligent transportation systems and is the basis for cooperation between autonomous vehicles and edge nodes.

Currently, V2X is mainly based on dedicated short range communication (DSRC) and cellular networks [5]. DSRC is a communication standard designed for vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication. Its advantages include a high data transmission rate, low latency, and support for point-to-point and point-to-multipoint communication. Cellular networks, represented by 5G, offer large network capacity and wide coverage, and are suitable for V2I communication and communication between edge servers.

5. "Security Optimization"

Edge computing security is an important guarantee for edge computing. Its design combines the defense-in-depth security systems of cloud computing and edge computing to strengthen the ability of edge infrastructure, networks, applications, and data to identify and resist various security threats, building a safe and trusted environment for the development of edge computing. In the 5G core network used by next-generation autonomous driving systems, the control plane and data plane are separated, and NFV makes network deployment more flexible, which supports the successful deployment of distributed edge computing. Edge computing moves more data computing and storage from the central unit to the edge, with computing power deployed close to the data source. Some data no longer has to travel across the network to the cloud for processing, which reduces latency and network load and improves data security and privacy. In the future, Internet of Vehicles edge computing may be deployed on mobile communication equipment close to vehicles, such as base stations and roadside units, where it can handle local data processing, encryption, and decision-making and provide real-time, highly reliable communication.

Summary

Edge computing has important applications in the environmental perception and data processing of autonomous driving. Self-driving cars can expand their perception range by obtaining environmental information from edge nodes, and can also offload computing tasks to edge nodes to solve the problem of insufficient computing resources. Compared with cloud computing, edge computing avoids the high delays caused by long-distance data transmission, can respond to autonomous vehicles faster, and reduces the load on the backbone network. Using edge computing at the current stage of autonomous driving research and development is therefore an important option for continued optimization and development.
