An article talking about the traffic sign recognition system in autonomous driving

What is a traffic sign recognition system?

Traffic Sign Recognition (TSR) is a vehicle safety feature that uses a front-facing camera combined with pattern recognition to identify common traffic signs (speed limit, stop, U-turn, and so on). It alerts the driver to traffic signs ahead so that they can obey them. By reducing the likelihood that drivers unintentionally violate traffic rules, for example by running a stop sign or making an illegal left turn, TSR improves safety. These systems require flexible software platforms so that detection algorithms can be improved and adapted to the traffic signs used in different regions.

Traffic Sign Recognition Principle

Traffic sign recognition (TSR) refers to collecting and identifying the road traffic sign information that appears while the vehicle is driving, and then giving the driver prompts or warnings, or directly controlling the vehicle, to keep traffic flowing smoothly and prevent accidents. In a vehicle equipped with safety-assisted driving, an efficient TSR system can provide the driver with reliable, timely road sign information, effectively improving driving safety and comfort.

The following introduces a typical road traffic sign recognition method.

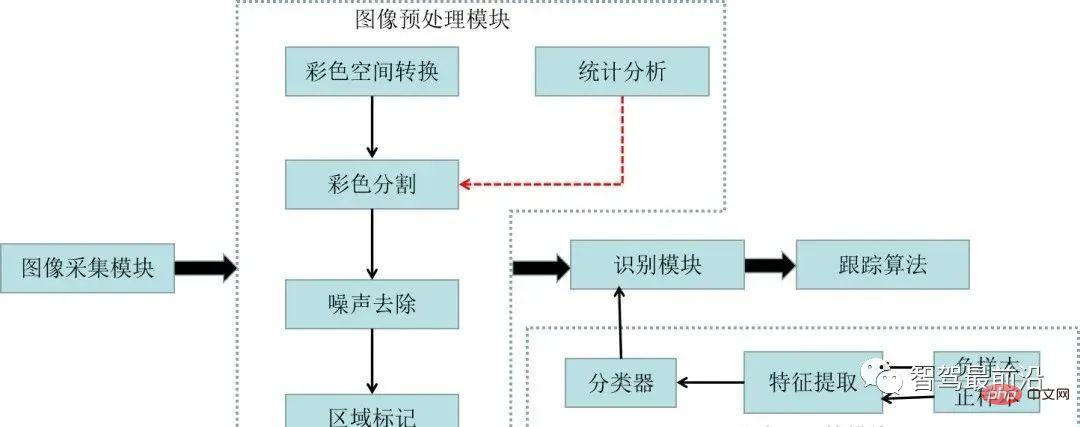

TSR mimics the way the human visual system recognizes objects: it uses the rich color information and fixed shapes of road signs as recognition features. The recognition process can be divided into two steps, "separation" and "recognition". Separation means finding candidate targets in the captured image and preprocessing them. Recognition then performs feature extraction and classification on each candidate and finally confirms whether the target is a genuine traffic sign.

1. Traffic sign separation

Traffic sign separation means quickly extracting regions of interest that may contain traffic signs from complex scene images. A pattern recognition method is then used to examine each region of interest further and locate the sign precisely. Because traffic signs serve to indicate, remind, and warn, they are designed to be eye-catching: bright in color, simple in graphics, and clear in meaning. Regions of interest are therefore usually located using color and shape.

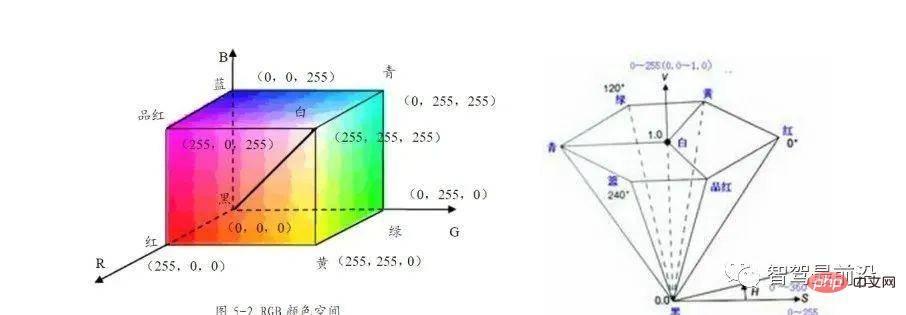

The color spaces commonly used in traffic sign recognition include RGB, HSI, and CIE. RGB, built on the three primary colors red, green, and blue, is the basic color space in image processing; the other color representations can be obtained from RGB by conversion.

Traffic signs use a small set of fixed colors. Red signs generally indicate prohibitions, blue signs generally indicate instructions, and yellow signs generally indicate warnings. The RGB values of these three sign colors, red, yellow, and blue, can therefore be used to identify and match candidate regions.
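A minimal sketch of color-based separation for red signs, assuming the image is a list of rows of (R, G, B) tuples. It uses normalized RGB (each channel divided by R + G + B) so the test depends less on overall brightness. The 0.5 red-share ratio and the minimum-brightness cutoff are illustrative assumptions, not values from this article, and `is_sign_red`, `red_mask`, and `bounding_box` are hypothetical helper names.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]


def is_sign_red(p: Pixel, ratio: float = 0.5, min_sum: int = 60) -> bool:
    """Hypothetical rule: red dominates the normalized RGB and the pixel is not too dark."""
    r, g, b = p
    s = r + g + b
    if s < min_sum:  # very dark pixels have unreliable chromaticity, so reject them
        return False
    return r / s > ratio


def red_mask(img: Image) -> List[List[bool]]:
    """Binary mask of candidate red-sign pixels."""
    return [[is_sign_red(p) for p in row] for row in img]


def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) enclosing all True pixels, or None if empty."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for row in mask for x, v in enumerate(row) if v]
    return min(rows), min(cols), max(rows), max(cols)
```

Blue and yellow signs can be handled the same way with a different channel test (for example, a dominant blue share, or high red and green shares with a low blue share). In practice the mask would also be split into connected regions and filtered by shape before recognition.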

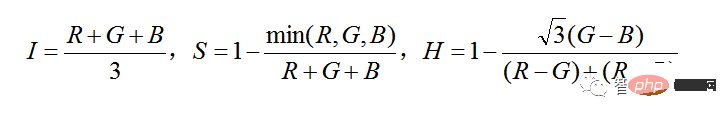

RGB mixes color and brightness information, so to separate the two more cleanly, researchers often process traffic signs in the HSI model, which better matches human visual perception. H (hue) represents the color, S (saturation) represents the depth of the color, and I (intensity) represents its lightness or darkness. The main advantage of HSI is that H, S, and I are only weakly correlated, so a given sign color keeps a relatively consistent hue H across a color image even when brightness varies.
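The standard RGB-to-HSI conversion can be written as follows. This sketch assumes R, G, and B are already scaled to [0, 1] and returns H in degrees; assigning H = 0 to gray and black pixels, where hue is undefined, is a convention chosen here rather than part of the model.

```python
import math
from typing import Tuple


def rgb_to_hsi(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Convert RGB in [0, 1] to (H in degrees, S in [0, 1], I in [0, 1])."""
    total = r + g + b
    i = total / 3.0
    if total == 0:
        return 0.0, 0.0, 0.0  # black: hue and saturation undefined, use 0
    s = 1.0 - 3.0 * min(r, g, b) / total
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        return 0.0, s, i  # gray pixel (r == g == b): hue undefined, use 0
    # Clamp so rounding error cannot push the argument outside acos's domain.
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    h = theta if b <= g else 360.0 - theta
    return h, s, i
```

With this convention, pure red maps to H = 0°, yellow to 60°, and blue to 240°, so red, yellow, and blue sign pixels fall roughly into separate hue bands that shift less with brightness than raw RGB values do.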

2. Traffic sign recognition

Once regions of interest that may contain traffic signs have been segmented from the driving scene, an algorithm must classify each region to determine which specific traffic sign it is. Common classification methods include template matching, cluster analysis, shape analysis, neural networks, and support vector machines.
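Template matching, the first of these methods, compares each candidate region with stored reference images of known signs and picks the best match. The sketch below uses zero-mean normalized cross-correlation, which is one common similarity measure; the article does not say which measure it intends. It assumes grayscale patches and a candidate already resized to the template size; `ncc` and `classify_by_template` are hypothetical names.

```python
import math
from typing import Dict, List

Patch = List[List[float]]  # grayscale patch; candidate and templates share one size


def ncc(a: Patch, b: Patch) -> float:
    """Zero-mean normalized cross-correlation of two equally sized patches, in [-1, 1]."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = math.sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))
    return num / den if den > 0 else 0.0  # flat patches carry no pattern to match


def classify_by_template(candidate: Patch, templates: Dict[str, Patch]) -> str:
    """Return the label of the stored template that correlates best with the candidate."""
    return max(templates, key=lambda label: ncc(candidate, templates[label]))
```

Subtracting the mean and normalizing makes the score insensitive to uniform brightness and contrast changes. A practical system would also reject candidates whose best score falls below a threshold instead of always returning a label.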

(1) Template matching methods

(2) Cluster analysis methods

(3) Neural network methods

(4) Support vector machine methods

A support vector machine (SVM) is a classic learning method for pattern classification and nonlinear problems; it is sometimes classed as a type of feedforward network. Its main idea is to construct an optimal decision hyperplane that maximizes the margin, that is, the distance from the hyperplane to the nearest samples of each of the two classes, which gives the classifier better generalization. For complex classification problems that are not linearly separable, the patterns are first mapped nonlinearly into a high-dimensional feature space. As long as the mapping is nonlinear and the feature space has a high enough dimension, patterns that are not linearly separable in the original space become linearly separable in the new space with high probability. In practice this mapping is not computed explicitly: a kernel function evaluates inner products in the high-dimensional space directly from the original vectors. Typical kernel functions are as follows:

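Gaussian (radial basis function) kernel: $K(x, x_i) = \exp\left(-\frac{\|x - x_i\|^2}{2\sigma^2}\right)$; used for radial basis function classifiers;

Polynomial (inner-product) kernel: $K(x, x_i) = (x \cdot x_i + 1)^q$; used for high-order polynomial classifiers;

Sigmoid kernel: $K(x, x_i) = \tanh\left(v\,(x \cdot x_i) + c\right)$; used to implement a single-hidden-layer perceptron network.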
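The standard forms of the Gaussian, polynomial, and sigmoid kernels, together with the kernel SVM decision rule f(x) = sign(Σ αᵢ yᵢ K(xᵢ, x) + b), can be sketched as below. The sketch assumes the support vectors xᵢ, labels yᵢ ∈ {+1, −1}, multipliers αᵢ, and bias b come from an already trained model (training is not shown), and the default parameter values are arbitrary. The sigmoid kernel is a valid kernel only for some choices of v and c.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def dot(x: Vector, y: Vector) -> float:
    return sum(a * b for a, b in zip(x, y))


def gaussian_kernel(x: Vector, y: Vector, sigma: float = 1.0) -> float:
    """K(x, y) = exp(-||x - y||^2 / (2 sigma^2)), used by RBF classifiers."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def polynomial_kernel(x: Vector, y: Vector, q: int = 2) -> float:
    """K(x, y) = (x . y + 1)^q, used by polynomial classifiers."""
    return (dot(x, y) + 1.0) ** q


def sigmoid_kernel(x: Vector, y: Vector, v: float = 0.5, c: float = -1.0) -> float:
    """K(x, y) = tanh(v (x . y) + c), mimicking a single-hidden-layer perceptron."""
    return math.tanh(v * dot(x, y) + c)


def svm_decision(
    x: Vector,
    support_vectors: Sequence[Vector],
    labels: Sequence[int],      # +1 or -1 for each support vector
    alphas: Sequence[float],    # Lagrange multipliers from training
    bias: float,
    kernel: Kernel = gaussian_kernel,
) -> int:
    """Return +1 or -1: sign(sum_i alpha_i * y_i * K(x_i, x) + b)."""
    score = sum(a * y * kernel(sv, x) for sv, y, a in zip(support_vectors, labels, alphas))
    return 1 if score + bias >= 0 else -1
```

An SVM is a binary classifier, so recognizing many sign classes typically combines several of these decisions, for example with a one-versus-rest scheme.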

Some specific application scenarios of TSR

Road conditions are complex: traffic signs may be dirty or faded, their colors and shapes may change, and trees and buildings may occlude them, so they cannot always be recognized in time. In addition, at high speed, vehicle vibration and similar factors can cause errors when matching image frames, so the corresponding signs cannot be recognized stably. For these reasons, traffic sign recognition is not yet widely used in driver assistance. The more mature applications include the following:

Automatic speed limit based on speed limit signs

Automatic speed limiting uses the value shown on a recognized speed limit sign so that the vehicle can adjust its speed in advance. Several speed values are defined for comparison:

VReal is the vehicle's current actual cruising speed, Vtarget its target cruising speed, Vlim the recognized speed limit, and VFront the detected speed of the vehicle ahead.

Depending on the sensed speed of the vehicle itself, the following speed limit strategies are applied:

1) The vehicle is cruising on its own

When it is detected that the vehicle VReal>Vlim and Vtarget

When it detects that the vehicle VReal

2) The vehicle follows the vehicle in front

When VReal > VFront > Vlim is detected, the system automatically decelerates the vehicle while ensuring that it does not collide with the vehicle in front;

When detecting that the vehicle VReal

3) Control logic after passing the speed limit sign

After the vehicle has automatically limited its speed and passes the speed limit sign, it continues to recognize new speed limit signs and must re-control its speed whenever it would exceed the new limit. If the new limit is lower than the current one, the vehicle restricts its speed further according to the logic in 1) and 2). If the new limit is higher than the current one, the acceleration must be re-planned from the vehicle's updated actual speed, the speed of the vehicle in front, and the target cruising speed, so that the speed limit and collision avoidance take priority and are properly controlled.
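The strategies in 1) to 3) can be summarized by a simple arbitration rule, sketched here under an explicit assumption: the commanded speed is the minimum of the target cruising speed Vtarget, the most recently recognized limit Vlim, and, when following, the speed of the vehicle in front VFront. This is a hypothetical simplification, not the article's exact control law; a real system would also regulate the time gap to the lead vehicle and limit acceleration and jerk.

```python
from typing import Optional


def commanded_speed(v_target: float, v_lim: Optional[float], v_front: Optional[float]) -> float:
    """Hypothetical arbitration: the lowest of the target cruising speed, the latest
    recognized speed limit, and (when following) the speed of the vehicle in front."""
    limits = [v_target]
    if v_lim is not None:      # None: no speed limit sign recognized yet
        limits.append(v_lim)
    if v_front is not None:    # None: not following a vehicle
        limits.append(v_front)
    return min(limits)


def speed_error(v_real: float, v_target: float,
                v_lim: Optional[float], v_front: Optional[float]) -> float:
    """Positive: the vehicle may accelerate; negative: it must slow down."""
    return commanded_speed(v_target, v_lim, v_front) - v_real
```

Because a newly recognized sign simply replaces `v_lim`, a lower limit produces a negative speed error (further restriction), while a higher limit is re-evaluated against the updated `v_real`, `v_front`, and `v_target`, so a slower lead vehicle can still cap the speed.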

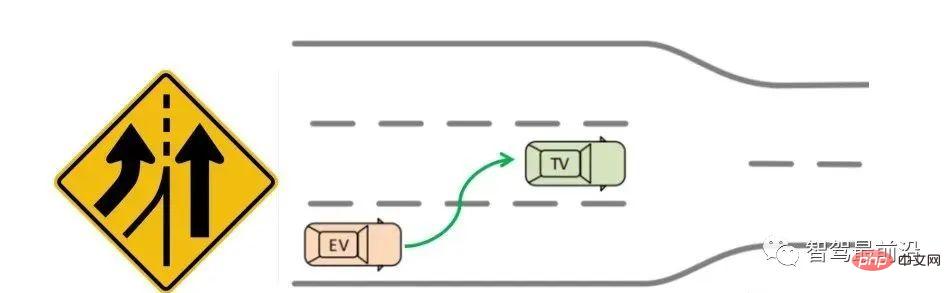

Advance merging based on merging strategy

Vehicles driving on highways need to change lanes early in merge scenarios. There are currently two feasible solutions:

First, when lane merge sign information is detected a certain distance ahead, the system can prompt the driver in advance through voice or instrument cluster graphics to change into the target lane;

Second, if the system receives lane-level merge information from a high-precision map a certain distance in advance, it can directly steer the vehicle into the target lane. Before doing so, it must check whether the target lane marking is a dashed line and whether it is safe to change into the target lane.
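The two merging solutions can be sketched as a small decision function. The input fields and the fallback behavior (prompting the driver whenever an automatic lane change is not allowed) are assumptions for illustration. The sketch also assumes each flag is already evaluated within the trigger distance, which the article leaves unspecified.

```python
from dataclasses import dataclass
from enum import Enum


class MergeAction(Enum):
    NONE = "none"
    PROMPT_DRIVER = "prompt_driver"        # solution 1: voice / instrument-cluster prompt
    AUTO_LANE_CHANGE = "auto_lane_change"  # solution 2: system steers into the target lane


@dataclass
class MergeInputs:
    merge_sign_detected: bool  # camera recognized a lane merge sign ahead
    hd_map_lane_info: bool     # lane-level merge info received from the high-precision map
    target_line_dashed: bool   # target lane marking is dashed, so it may be crossed
    target_lane_clear: bool    # no conflicting traffic in the target lane


def choose_merge_action(inp: MergeInputs) -> MergeAction:
    """Hypothetical policy: change lanes automatically only when map data and both checks allow it."""
    if inp.hd_map_lane_info and inp.target_line_dashed and inp.target_lane_clear:
        return MergeAction.AUTO_LANE_CHANGE
    if inp.merge_sign_detected or inp.hd_map_lane_info:
        return MergeAction.PROMPT_DRIVER  # assumed fallback: leave the maneuver to the driver
    return MergeAction.NONE
```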

Advance braking based on traffic light recognition

A driver assistance system based on traffic light recognition controls vehicle cruising and lane changes in advance according to the recognized traffic lights.

The main control strategies are as follows:

1) When the green light is recognized

If the vehicle is following a slower vehicle in front, it keeps following that vehicle, provided collision safety is ensured, and monitors the light in real time. As soon as the light turns yellow, it immediately exits the following strategy and brakes at a controlled deceleration;

2) When a yellow light is recognized

When a yellow light is recognized, the vehicle must decelerate and stop whether or not it is following another vehicle. During deceleration, comfort can be prioritized by first using engine drag braking and then bringing in the brakes;

3) When a red light is recognized

Once a red light has been recognized, the vehicle is decelerated to a stop based on the stopping state of the vehicle in front, while ensuring collision avoidance, and keeps a gap of more than 1 m to the stopped vehicle ahead;
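The braking in strategies 2) and 3) comes down to the constant deceleration needed to stop within the available distance. From v² = 2ad, that deceleration is a = v² / (2d). A minimal sketch follows; the helper names are hypothetical, and the available distance is assumed to be the distance to the stop line or the gap to a stopped lead vehicle minus the 1 m margin, whichever is smaller.

```python
from typing import Optional


def required_deceleration(speed_mps: float, distance_m: float) -> float:
    """Constant deceleration (m/s^2) that stops the vehicle within distance_m,
    from v^2 = 2 * a * d."""
    if speed_mps <= 0.0:
        return 0.0           # already stopped
    if distance_m <= 0.0:
        return float("inf")  # no room left: no finite deceleration stops in time
    return speed_mps ** 2 / (2.0 * distance_m)


def available_stop_distance(dist_to_stop_line: float,
                            gap_to_lead: Optional[float],
                            margin_m: float = 1.0) -> float:
    """Stop at the stop line, or margin_m short of a stopped lead vehicle, whichever is closer."""
    if gap_to_lead is None:
        return dist_to_stop_line
    return min(dist_to_stop_line, gap_to_lead - margin_m)
```

For example, at 20 m/s with 50 m available, the required deceleration is 400 / 100 = 4.0 m/s². Comparing that value with a comfort limit is one way a system could decide how early to start engine drag braking.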

(Of these, the first function is the easiest to understand. The second is more significant for development because it involves the automatic lane-change logic needed to move from L2 to L3. The third seems smarter: it brakes in advance, similar to V2X logic.)

Development status of China's advanced driving assistance system

Considering the development of the technology itself, China's road traffic environment, and the specific needs of consumers, the development trends of advanced driving assistance system technology in the Chinese market can be summarized as follows:

(1) From the perspective of technological development, as consumers pay ever more attention to vehicle safety, advanced driving assistance systems will keep developing for a long time to come. At the same time, they are shifting from the independent development of single technologies to integrated active safety systems, in which multiple functions share platforms such as sensors and control systems. Once a vehicle is equipped with basic technologies such as ESP and ACC, other driver assistance functions can be added easily and at lower cost, which will further promote the adoption of advanced driving assistance systems in automobiles.

(2) Some relatively low-end but highly practical advanced driving assistance technologies, such as tire pressure monitoring systems and ESP electronic stability systems, have been fully accepted by the market. Driven by strong demand, their penetration rate in the low-end market will rise steadily.

(3) Chinese consumers have shown clear interest in and demand for collision avoidance assistance and visibility enhancement technologies, which will become the main growth areas in this field in the next stage.

(4) Technologies with higher road requirements, such as lane change assist, lane departure warning, and ACC, as well as technologies that do not fit Chinese consumers' driving habits, such as lane keeping systems, driver fatigue detection, and alcohol interlock systems, may develop slowly for a long time.

Some Difficulties of TSR

Current technology cannot recognize every traffic sign, nor can it operate under all conditions. Conditions that limit the performance of a TSR system include the following:

- Dirty or improperly adjusted headlights

- Dirty, fogged, or obstructed windshield

- Warped, twisted, or bent signs

- Abnormal tire or wheel condition

- Vehicle tilt due to heavy objects or modified suspension

TSR and similar vehicle sensing technologies help move toward fully autonomous driving, but we are not there yet. TSR is only a driver assistance system, and drivers cannot rely entirely on any ADAS system to drive for them.

In general, the basic functions of TSR are relatively mature, but advanced functions and a simpler ecosystem still have some way to go.

The above is the detailed content of An article talking about the traffic sign recognition system in autonomous driving. For more information, please follow other related articles on the PHP Chinese website!
