The first compression algorithm for 100-billion-parameter models, SparseGPT, is here, reducing computing costs while maintaining high accuracy

Since the emergence of GPT-3 in 2020, the popularity of ChatGPT has once again brought the GPT family's generative large language models into the spotlight, and these models have shown strong performance across a wide range of tasks.

However, the sheer scale of these models also raises computing costs and makes them harder to deploy.

For example, the GPT-175B model occupies at least 320GB of storage in half-precision (FP16) format, so inference requires at least five A100 GPUs with 80GB of memory each.
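
These figures follow from simple arithmetic: FP16 stores each parameter in 2 bytes. The minimal sketch below counts the weights only (activations, the KV cache and framework overhead are ignored), so it gives a lower bound; the helper names are illustrative, not from the paper.

```python
import math

def fp16_weight_bytes(num_params: int) -> int:
    """Bytes needed to hold the weights alone in FP16 (2 bytes per parameter)."""
    return num_params * 2

def min_gpus_for_weights(num_params: int, gpu_mem_gb: float = 80.0) -> int:
    """Lower bound on the number of GPUs needed just to hold the FP16 weights."""
    total_gb = fp16_weight_bytes(num_params) / 1e9  # decimal gigabytes
    return math.ceil(total_gb / gpu_mem_gb)

if __name__ == "__main__":
    params = 175_000_000_000
    print(fp16_weight_bytes(params) / 1e9)    # 350.0 GB
    print(fp16_weight_bytes(params) / 2**30)  # about 326 GiB
    print(min_gpus_for_weights(params))       # 5 GPUs with 80 GB each
```

350 GB is about 326 GiB, in line with the "at least 320GB" figure, and 350 / 80 = 4.375 rounds up to five 80GB GPUs.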

Model compression is a common way to reduce the computational cost of large models, but so far almost all existing GPT compression methods have focused on quantization, that is, reducing the numerical precision with which each individual weight is represented.
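
To make "reducing the precision of each weight" concrete, here is a minimal sketch of symmetric round-to-nearest quantization to signed k-bit integers with one shared scale. It is the simplest possible baseline, not GPTQ or any other method named in this article, and the function names are illustrative.

```python
def quantize_rtn(weights, bits=4):
    """Symmetric round-to-nearest quantization with one scale for the whole list.

    Returns integer codes in [-(2**(bits-1) - 1), 2**(bits-1) - 1] and the scale.
    """
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit codes
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]

weights = [0.52, -1.0, 0.23, 0.07, -0.41]
codes, scale = quantize_rtn(weights, bits=4)
approx = dequantize(codes, scale)
# Each weight is now a 4-bit code plus one shared scale; because the largest
# weight maps exactly to +/-qmax, the per-weight error is at most half a step.
print(codes, max(abs(a - b) for a, b in zip(weights, approx)))
```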

Another model compression approach is pruning, which removes network elements, ranging from individual weights (unstructured pruning) to coarser-grained components such as entire rows or columns of weight matrices (structured pruning). This approach works well for vision models and smaller language models, but it causes an accuracy loss that requires extensive retraining to recover, and for GPT-scale models that retraining becomes prohibitively expensive. Some one-shot pruning methods can compress a model without retraining, but they are too computationally intensive to apply to models with billions of parameters.
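
The difference between the two granularities can be shown with a small sketch that uses weight magnitude as the selection criterion. That criterion is an assumption for illustration (it is the simple magnitude heuristic, not SparseGPT). Unstructured pruning zeroes individual weights anywhere in the matrix; structured pruning drops whole rows, which are zeroed here rather than physically removed so the two results can be compared side by side.

```python
def prune_unstructured(W, sparsity):
    """Zero the `sparsity` fraction of individual weights with the smallest magnitude."""
    rows, cols = len(W), len(W[0])
    k = int(rows * cols * sparsity)
    order = sorted(((i, j) for i in range(rows) for j in range(cols)),
                   key=lambda ij: abs(W[ij[0]][ij[1]]))
    out = [row[:] for row in W]
    for i, j in order[:k]:
        out[i][j] = 0.0
    return out

def prune_structured_rows(W, sparsity):
    """Zero entire rows (e.g. output neurons) with the smallest L2 norm.
    A real structured pruner would delete these rows and shrink the matrix."""
    k = int(len(W) * sparsity)
    by_norm = sorted(range(len(W)), key=lambda i: sum(w * w for w in W[i]))
    drop = set(by_norm[:k])
    return [[0.0] * len(row) if i in drop else row[:] for i, row in enumerate(W)]

W = [[0.9, -0.1], [0.05, 0.6], [-0.7, 0.3], [0.02, -0.04]]
print(prune_unstructured(W, 0.5))     # keeps 0.6, even though its row is weak
print(prune_structured_rows(W, 0.5))  # drops the row holding 0.6
```

Both calls remove half of the weights, but the structured version has to discard the large weight 0.6 along with its row, which hints at why coarser granularity tends to cost more accuracy.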

So, for a model the size of GPT-3, is there a way to prune it accurately, with minimal accuracy loss, while reducing computational costs?

Recently, two researchers from the Institute of Science and Technology Austria (ISTA), Elias Frantar and Dan Alistarh, proposed SparseGPT, the first accurate one-shot pruning method that targets models at the scale of 10 to 100 billion parameters.

There are already many post-training quantization methods for GPT-scale models, such as ZeroQuant, LLM.int8() and nuQmm, but quantizing activations can be difficult because of outlier features. GPTQ uses approximate second-order information to accurately quantize weights to 2-4 bits, works for the largest models, and, combined with efficient GPU kernels, can deliver 2-5x inference speedups.

Because SparseGPT focuses on sparsification rather than quantization, it complements quantization methods, and the two can be applied in combination.

Beyond unstructured pruning, SparseGPT also supports semi-structured patterns such as the popular n:m sparsity format, which can deliver speedups on NVIDIA Ampere GPUs in the 2:4 configuration.
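
The n:m pattern lets each group of m consecutive weights keep at most n nonzeros, so 2:4 and 4:8 both mean 50% sparsity, but with a fixed layout that hardware can exploit. The sketch below builds such a pattern by keeping the n largest-magnitude weights per group. That selection rule is an assumption for illustration; SparseGPT decides which weights to drop with its own reconstruction-aware criterion.

```python
def prune_n_m(weights, n=2, m=4):
    """Return a copy of `weights` where each consecutive group of m values keeps
    only its n largest-magnitude entries (n:m semi-structured sparsity)."""
    if len(weights) % m != 0:
        raise ValueError("length must be a multiple of m")
    out = []
    for start in range(0, len(weights), m):
        group = weights[start:start + m]
        # Indices of the n entries with the largest magnitude in this group
        keep = set(sorted(range(m), key=lambda i: abs(group[i]), reverse=True)[:n])
        out.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return out

def satisfies_n_m(weights, n=2, m=4):
    """Check that every group of m consecutive values has at most n nonzeros."""
    return all(sum(1 for w in weights[s:s + m] if w != 0.0) <= n
               for s in range(0, len(weights), m))

row = [0.1, -0.8, 0.3, 0.05, 0.6, -0.2, 0.0, 0.9]
sparse_row = prune_n_m(row, n=2, m=4)
print(sparse_row)                 # [0.0, -0.8, 0.3, 0.0, 0.6, 0.0, 0.0, 0.9]
print(satisfies_n_m(sparse_row))  # True
```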

SparseGPT: High sparsity, low accuracy loss

After evaluating how well SparseGPT compresses models, the researchers found that large language models become easier to sparsify as model size grows. Compared with the existing magnitude pruning method, SparseGPT achieves a higher degree of sparsity while keeping the accuracy loss minimal.

The researchers implemented SparseGPT in PyTorch and used HuggingFace's Transformers library to handle models and datasets, running everything on a single NVIDIA A100 GPU with 80GB of memory. Under these conditions, SparseGPT can fully sparsify a 175-billion-parameter model in approximately 4 hours.

The researchers sparsify Transformer layers sequentially, which significantly reduces memory requirements and, compared with processing all layers in parallel, also substantially improves accuracy. All compression experiments were performed in one shot, without any fine-tuning.
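
A toy sketch of a sequential schedule, under stated assumptions: each "layer" is a single linear map followed by ReLU (standing in for a Transformer block), the calibration set is a few vectors, and the pruner is a plain-magnitude placeholder rather than SparseGPT's solver. It also assumes, as is common in this style of one-shot compression, that each layer is compressed against inputs produced by the already-compressed layers before it. The point is the control flow: only the current layer's inputs are held in memory at any time.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def magnitude_prune(W, sparsity, inputs):
    """Placeholder pruner: zero the smallest-magnitude weights. `inputs` is unused
    here, but a reconstruction-based pruner would use it to choose and update weights."""
    cells = sorted(((i, j) for i in range(len(W)) for j in range(len(W[0]))),
                   key=lambda ij: abs(W[ij[0]][ij[1]]))
    out = [row[:] for row in W]
    for i, j in cells[:int(len(cells) * sparsity)]:
        out[i][j] = 0.0
    return out

def compress_sequentially(layers, calib, sparsity, prune_layer=magnitude_prune):
    """Prune layers one at a time; each layer sees inputs from the compressed prefix."""
    compressed = []
    inputs = [list(x) for x in calib]  # activations entering the current layer
    for W in layers:
        W_sparse = prune_layer(W, sparsity, inputs)
        compressed.append(W_sparse)
        # Run calibration data through the *compressed* layer to get the next inputs
        inputs = [relu(matvec(W_sparse, x)) for x in inputs]
    return compressed

layers = [[[1.0, -2.0], [0.5, 0.1]], [[0.3, 0.4], [-1.0, 2.0]]]
calib = [[1.0, 1.0], [0.5, -1.0]]
print(compress_sequentially(layers, calib, sparsity=0.5))
```

Swapping `prune_layer` for a solver that actually uses `inputs` is where a method like SparseGPT would plug in.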

The evaluation focuses mainly on the OPT model family, which spans models from 125 million to 175 billion parameters, making it easy to observe how pruning behaves as model size scales. The researchers also analyzed the 176-billion-parameter variant of BLOOM.

For datasets and metrics, the experiments use perplexity on the raw WikiText2 test set to evaluate the accuracy of SparseGPT-compressed models; to aid interpretability, some zero-shot accuracy metrics are also reported. The evaluation focuses on the accuracy of the sparse models relative to the dense baseline rather than on absolute numbers.
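
Perplexity is the exponential of the average negative log-likelihood per token, so lower is better and a dense-versus-sparse gap measures how much worse the pruned model predicts the same text. The sketch assumes you already have the natural-log probability the model assigned to each target token; how the WikiText2 test set is tokenized and windowed is not shown and may differ from the paper's protocol.

```python
import math

def perplexity(token_log_probs):
    """Perplexity from natural-log probabilities of each actual next token:
    exp of the average negative log-likelihood."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)

# A model that gives every correct token probability 1/4 has perplexity 4.
uniform = [math.log(0.25)] * 10
print(round(perplexity(uniform), 6))  # 4.0

# Comparing a sparse model against its dense baseline on the same tokens
# (toy probabilities, not real results):
dense_lp = [math.log(p) for p in (0.5, 0.25, 0.5, 0.125)]
sparse_lp = [math.log(p) for p in (0.5, 0.25, 0.25, 0.125)]
print(perplexity(sparse_lp) - perplexity(dense_lp))
```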

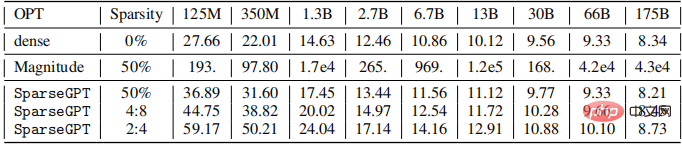

The researchers pruned all linear layers of every model in the OPT family (excluding the standard embeddings and the output head) to 50% unstructured sparsity, full 4:8 semi-structured sparsity, or full 2:4 semi-structured sparsity; the results are shown below.

Models compressed with magnitude pruning show poor accuracy at every size, and the larger the model, the greater the accuracy drop.

Models compressed with SparseGPT follow a different trend. At 2.7 billion parameters, the perplexity loss is

Larger models are easier to sparsify

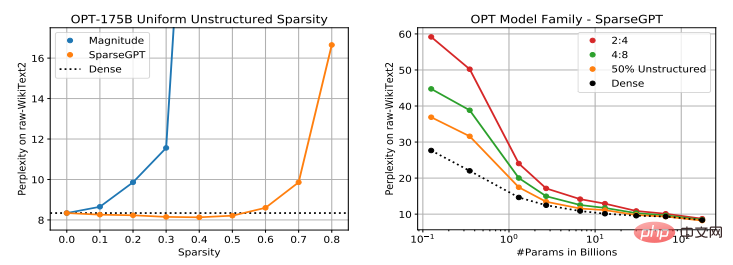

A general trend is that larger models are easier to sparsify: at a fixed sparsity level, the relative accuracy drop of the sparse model compared with the dense model shrinks as model size increases. The authors speculate that this may be because larger models are more heavily overparameterized and more robust to noise overall.

Compared with the dense baseline at the largest scale, compressing the model to 4:8 and 2:4 sparsity with SparseGPT increases perplexity by only 0.11 and 0.39, respectively. Since commercial NVIDIA Ampere GPUs already support 2:4 sparsity, this result means a 2x speedup can be achieved in practice.

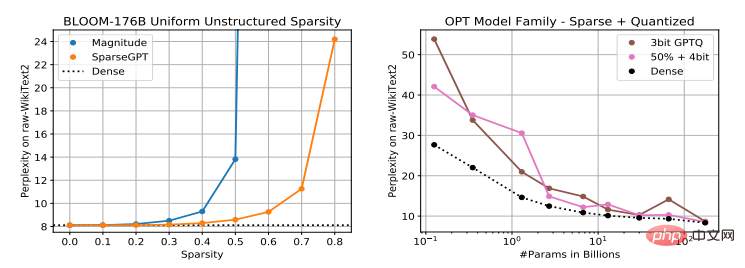

The authors also studied how the performance of the two 100-billion-scale models, OPT-175B and BLOOM-176B, varies with the sparsity level reached using SparseGPT. The results are shown in the figure below.

For BLOOM-176B, magnitude pruning can reach 30% sparsity without significant accuracy loss, whereas SparseGPT reaches 50%, a 1.66x improvement. Moreover, at 80% sparsity the perplexity of the SparseGPT-compressed model still stays at a reasonable level, while magnitude pruning already pushes perplexity above 100 at 40% sparsity on OPT and 60% sparsity on BLOOM.

Additionally, SparseGPT is able to remove approximately 100 billion weights from these models, with limited impact on model accuracy.

Finally, this study shows for the first time that large pre-trained Transformer-based models can be compressed to high sparsity through one-shot weight pruning, without any retraining and with low accuracy loss.

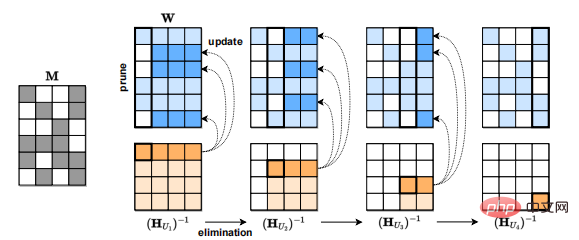

It is worth noting that SparseGPT's approach is local: after each pruning step, it performs weight updates designed to preserve each layer's input-output relationship, and these updates are computed without any global gradient information. The high degree of overparameterization of large GPT models therefore appears to let this approach directly identify accurate sparse models in the "neighborhood" of the dense pre-trained model.
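
What a local, gradient-free update that preserves a layer's input-output relationship looks like can be illustrated with the classic Optimal Brain Surgeon (OBS) step. For one row w of a layer's weight matrix and calibration inputs X (features by samples), the goal is to keep wX close to ŵX; with H = XXᵀ, removing weight p and shifting the others by -(w_p / [H⁻¹]_pp) · H⁻¹[:, p] is the least-squares-optimal compensation. The pure-Python sketch below applies this greedily to a single row. It is a simplified illustration, not SparseGPT's algorithm, which uses its own faster approximate reconstruction to scale to billions of weights; the damping value and all helper names are assumptions.

```python
def gram(X):
    """H = X X^T, where X has one row per input feature and one column per sample."""
    return [[sum(a * b for a, b in zip(xi, xj)) for xj in X] for xi in X]

def inverse(A, damp=1e-6):
    """Gauss-Jordan inverse of a small matrix, with diagonal damping for stability."""
    n = len(A)
    M = [[A[i][j] + (damp if i == j else 0.0) for j in range(n)]
         + [1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(M[r][c]))  # partial pivoting
        M[c], M[pivot] = M[pivot], M[c]
        p = M[c][c]
        M[c] = [v / p for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return [row[n:] for row in M]

def obs_prune_row(w, X, num_prune, damp=1e-6):
    """Greedily zero `num_prune` entries of row w; each step removes the weight whose
    deletion least increases ||w X - w_hat X||^2 and updates the rest to compensate."""
    w = list(w)
    n = len(w)
    Hinv = inverse(gram(X), damp)
    pruned = set()
    for _ in range(num_prune):
        # OBS saliency: removing weight p raises the error by w_p^2 / [H^-1]_pp
        p = min((i for i in range(n) if i not in pruned),
                key=lambda i: w[i] ** 2 / Hinv[i][i])
        col = [Hinv[i][p] for i in range(n)]  # H^-1 is symmetric, so this is also row p
        d = Hinv[p][p]
        # Compensating update: w <- w - (w_p / [H^-1]_pp) * H^-1[:, p]  (drives w_p to 0)
        coef = w[p] / d
        w = [wi - coef * ci for wi, ci in zip(w, col)]
        pruned.add(p)
        for q in pruned:  # clear floating-point residue on pruned weights
            w[q] = 0.0
        # Drop p from the problem: H^-1 <- H^-1 - H^-1[:, p] H^-1[p, :] / [H^-1]_pp
        Hinv = [[Hinv[i][j] - col[i] * col[j] / d for j in range(n)] for i in range(n)]
    return w

def recon_error(w_hat, w, X):
    """Squared reconstruction error ||w X - w_hat X||^2 over the calibration samples."""
    return sum(sum((a - b) * x for a, b, x in zip(w, w_hat, s)) ** 2 for s in zip(*X))

X = [[1.0, 0.0, 1.0, 2.0], [0.5, 1.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
w = [1.0, 0.2, -0.5]
w_obs = obs_prune_row(w, X, num_prune=1)
w_naive = [0.0 if v == 0.0 else wi for v, wi in zip(w_obs, w)]  # same mask, no update
print(recon_error(w_obs, w, X), recon_error(w_naive, w, X))
```

With the same sparsity mask, the compensated row reproduces the layer's outputs more closely than simply zeroing the weight, and only the layer's own inputs are needed to compute the update.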

In addition, because the accuracy metric used in the experiments (perplexity) is very sensitive, the small perplexity increases suggest that the outputs of the sparse models remain closely correlated with those of the dense models.

This research is a meaningful step toward easing the computing-power constraints of large models. One direction for future work is to study fine-tuning mechanisms for large models to further recover accuracy; another is to extend SparseGPT's method to model training, which could reduce the computational cost of training large models.
