Let's talk about AI noise reduction technology in real-time communication

Part 01 Overview

In real-time audio and video communication scenarios, the microphone picks up a large amount of environmental noise along with the user's voice. Traditional noise reduction algorithms work reasonably well on stationary noise (such as fan sound, white noise, and circuit noise floor) but perform poorly on non-stationary transient noise (such as the noise of a busy restaurant, a subway, or a home kitchen), which seriously degrades the call experience. To deal with the hundreds of types of non-stationary noise found in complex scenarios such as homes and offices, the ecological empowerment team of the Department of Integrated Communications Systems independently developed an AI audio noise reduction technology based on a GRU model. Through algorithm and engineering optimization, the model was compressed from 2.4 MB to 82 KB and its runtime memory reduced by about 65%; its computational complexity was cut from about 186 Mflops to 42 Mflops, improving running efficiency by 77%. On the existing test dataset (in an experimental environment), voice and noise can be effectively separated, raising the MOS (Mean Opinion Score) of call audio to 4.25.
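The headline figures can be sanity-checked with simple arithmetic. The sketch below assumes that the "77% improvement in running efficiency" means the relative drop in compute from 186 to 42 Mflops (the text does not define it) and converts 2.4 MB using 1 MB = 1024 KB; both are interpretations, not statements from the article.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100.0

# Figures from the overview; the unit conversion below is an assumption.
flops_before, flops_after = 186.0, 42.0           # Mflops
size_before_kb, size_after_kb = 2.4 * 1024, 82.0  # model size, taking 1 MB = 1024 KB

flops_cut = percent_reduction(flops_before, flops_after)
size_ratio = size_before_kb / size_after_kb

print(f"compute reduced by {flops_cut:.1f}%")    # compute reduced by 77.4%
print(f"model about {size_ratio:.0f}x smaller")  # model about 30x smaller
```

Under that reading, the 77% figure is consistent with the 186 to 42 Mflops reduction, and the model shrank by roughly a factor of 30.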

This article describes how our team performs real-time noise suppression based on deep learning and deploys it on mobile terminals and the Jiaqin APP. It is organized as follows: first, the classification of noise and how to choose algorithms for each type; next, how to design the algorithm and train the AI model with deep learning; finally, the results of the current AI noise reduction and its key application scenarios.

Part 02 Noise classification and noise reduction algorithm selection

In real-time audio and video applications, the device operates in a complex acoustic environment. When the microphone captures the voice signal, it also captures a large amount of noise, which poses a major challenge to real-time audio and video quality. Noise comes in many types; according to its statistical properties, it can be divided into two categories:

Stationary noise: the statistical characteristics of the noise do not change over a relatively long period of time, for example white noise, electric fans, air conditioners, and in-car noise;

Non-stationary noise: the statistical characteristics of the noise change over time, for example noisy restaurants, subway stations, offices, and home kitchens.

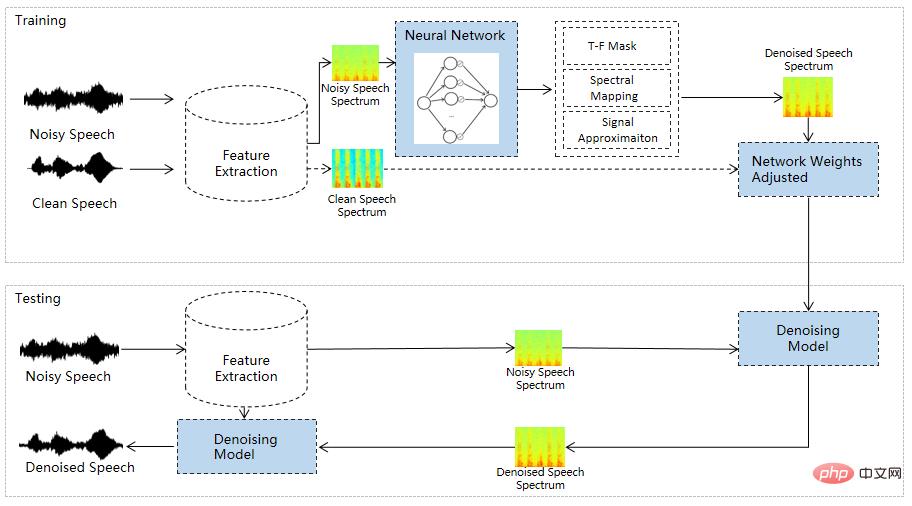

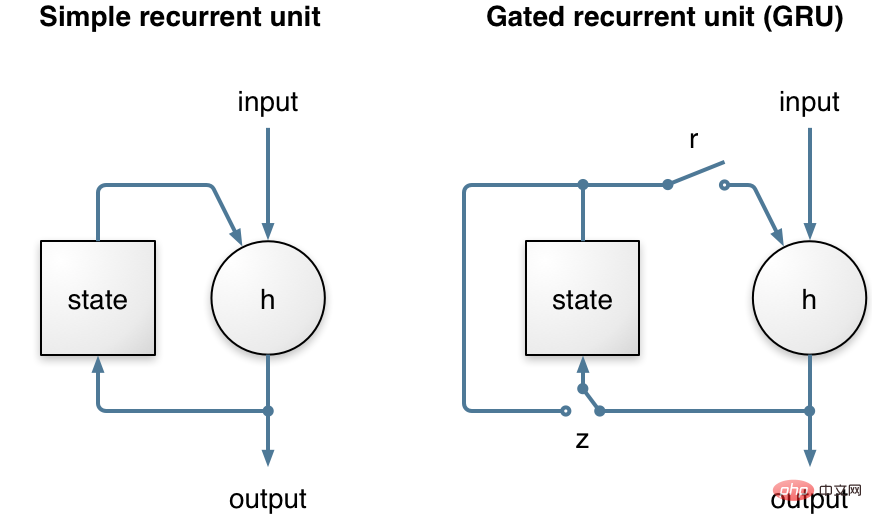

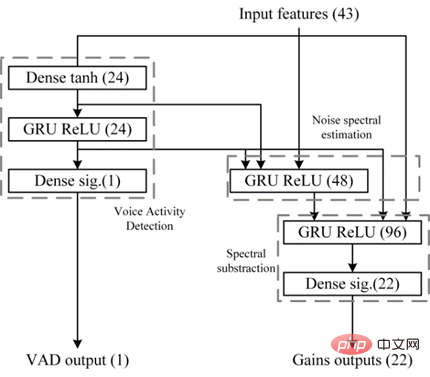

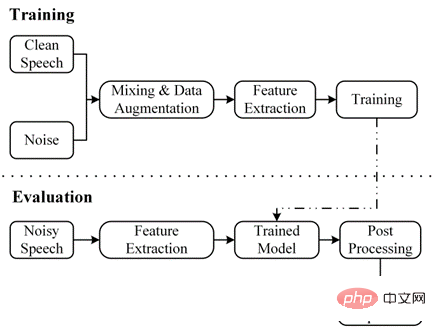

In real-time audio and video applications, calls are susceptible to many kinds of noise interference that degrade the experience, so real-time audio noise reduction has become an important feature. Stationary noise, such as the hum of an air conditioner or the noise floor of recording equipment, does not change significantly over time, so it can be estimated and then removed by simple subtraction; common methods include spectral subtraction, Wiener filtering, and wavelet transforms. Non-stationary noise, such as cars whizzing by on the road, plates clattering in a restaurant, or pots and pans banging in a home kitchen, appears randomly and unexpectedly and cannot be reliably estimated or predicted. Traditional algorithms struggle to estimate and remove such noise, which is why we turned to deep learning.

To improve the noise reduction capability of the audio SDK across noise scenarios and make up for the shortcomings of traditional algorithms, we developed an RNN-based AI noise reduction module that combines traditional noise reduction techniques with deep learning. Because the module focuses on home and office use, a large number of indoor noise types were added to the noise dataset, such as keyboard typing in the office, the friction of desks and office supplies being dragged, chairs being dragged, kitchen noise at home, and thuds on the floor. At the same time, to run real-time speech processing on mobile terminals, the AI audio noise reduction algorithm keeps its computational overhead and library size very low.
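Spectral subtraction, the simplest of the traditional methods named above for stationary noise, can be sketched in a few lines. This is a minimal illustration, not the SDK's code: it works directly on per-frame magnitude spectra and assumes the caller already knows which frames contain only noise (in practice a voice activity detector or a noise tracker would supply that). The FFT, phase handling, and overlap-add are omitted, and the function names are illustrative.

```python
from typing import List

Spectrum = List[float]  # magnitude spectrum of one frame, one value per frequency bin

def estimate_noise(noise_frames: List[Spectrum]) -> Spectrum:
    """Average the magnitude spectra of frames assumed to contain only noise."""
    n = len(noise_frames)
    return [sum(bins) / n for bins in zip(*noise_frames)]

def spectral_subtract(frame: Spectrum, noise: Spectrum, floor: float = 0.02) -> Spectrum:
    """Subtract the noise estimate bin by bin, clamping each result to a small
    fraction of the input magnitude so it never goes negative (a common safeguard
    against 'musical noise')."""
    return [max(x - n, floor * x) for x, n in zip(frame, noise)]

# Toy example: a constant "hum" of 1.0 in every bin, plus speech energy in bin 2.
noise_only = [[1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
noisy = [1.0, 1.0, 6.0, 1.0]
clean = spectral_subtract(noisy, estimate_noise(noise_only))
print(clean)  # [0.02, 0.02, 5.0, 0.02]
```

Because the noise estimate is a fixed average, this works for a steady hum but goes stale as soon as the noise changes, which is exactly the weakness with non-stationary noise described above.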
In terms of computational overhead, taking 48 kHz audio as an example, the RNN processing of each speech frame requires only about 17.5 Mflops, the FFT and IFFT about 7.5 Mflops per frame, and feature extraction about 12 Mflops, for a total of about 42 Mflops, which is roughly the same complexity as a 48 kHz Opus codec. On a mid-range phone model from one brand, measurements show the RNN noise reduction module using about 4% of the CPU. As for library size, compiling with RNN noise reduction enabled increases the audio engine library by only about 108 KB.

The module uses an RNN because, compared with other learning models (such as CNNs), an RNN carries temporal information and can model time-series signals instead of treating each audio input and output frame in isolation. The model uses gated recurrent units (GRUs, shown in Figure 1). Experiments show that GRUs perform slightly better than LSTMs on speech noise reduction, and because a GRU has fewer weight parameters, it also saves computing resources. Compared with a simple recurrent unit, a GRU has two extra gates: the reset gate controls whether the previous state is used in computing the new state, while the update gate controls how much the state changes in response to the new input. The update gate lets the GRU retain temporal information over long periods, which is why GRUs outperform simple recurrent units.

Figure 1. Left: a simple recurrent unit; right: a GRU

The structure of the GRU-based model is shown in Figure 2. The trained model is embedded in the audio and video communication SDK. Audio read from the hardware device is split into frames and sent to the AI noise reduction preprocessing module, which computes the corresponding features and feeds them to the trained model.
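To make the two gates concrete, here is a minimal single-step GRU in plain Python. It follows a common textbook formulation in which the reset gate scales the previous state before the recurrent multiply and the new state is h' = z * h + (1 - z) * candidate. Frameworks and papers differ in details (some apply the reset gate after the recurrent multiply, some swap z and 1 - z), and the article does not publish its exact equations, so the parameter names (`W_z`, `U_z`, `b_z`, and so on) and the toy 1x1 weights are hypothetical.

```python
import math
from typing import Dict, List

Vec = List[float]
Mat = List[List[float]]

def matvec(m: Mat, v: Vec) -> Vec:
    """Matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def gate(p: Dict[str, list], name: str, x: Vec, h: Vec) -> Vec:
    """Pre-activation W_name @ x + U_name @ h + b_name (hypothetical parameter names)."""
    return [a + b + c for a, b, c in zip(matvec(p["W_" + name], x),
                                          matvec(p["U_" + name], h),
                                          p["b_" + name])]

def gru_step(x: Vec, h: Vec, p: Dict[str, list]) -> Vec:
    """One GRU time step: returns the new hidden state."""
    z = [sigmoid(v) for v in gate(p, "z", x, h)]  # update gate
    r = [sigmoid(v) for v in gate(p, "r", x, h)]  # reset gate
    # Reset gate: how much of the previous state feeds the candidate state.
    rh = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(v) for v in gate(p, "n", x, rh)]
    # Update gate: z near 1 keeps the old state, z near 0 adopts the candidate.
    return [zi * hi + (1.0 - zi) * ci for zi, hi, ci in zip(z, h, cand)]

# Toy 1-input, 1-unit cell with all weights and biases set to zero.
params = {f"{kind}_{g}": [[0.0]] for kind in ("W", "U") for g in ("z", "r", "n")}
params.update({f"b_{g}": [0.0] for g in ("z", "r", "n")})

print(gru_step([1.0], [0.8], params))  # [0.4]: z = 0.5, candidate = 0

params["b_z"] = [10.0]  # a large update-gate bias pushes z toward 1
print(round(gru_step([1.0], [0.8], params)[0], 3))  # 0.8: the state is retained
```

The second call shows the property described above: when the update gate saturates toward 1, the unit carries its previous state forward almost unchanged, which is how a GRU holds temporal context across many frames.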
From these features, the model computes the corresponding gain values, which are applied to the signal to achieve noise reduction (as shown in Figure 3).

Figure 2. GRU-based RNN network model
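The frame, transform, gain, inverse-transform loop described above can be illustrated with a deliberately simple sketch. Everything here is an assumption for illustration: the band layout, the frame length, and the gains themselves (which in the real system come from the trained GRU model) are hypothetical, a naive O(N^2) DFT stands in for the FFT, and windowing and overlap-add are left out.

```python
import cmath
from typing import List, Tuple

Band = Tuple[int, int]  # half-open [start, end) range of bin indices (hypothetical layout)

def dft(x: List[float]) -> List[complex]:
    """Naive discrete Fourier transform (stand-in for an FFT)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spec: List[complex]) -> List[complex]:
    """Naive inverse DFT (stand-in for an IFFT)."""
    n = len(spec)
    return [sum(spec[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def gain_for_bin(k: int, bands: List[Band], gains: List[float]) -> float:
    """Return the gain of the band containing bin k (1.0 if no band covers it)."""
    for (start, end), g in zip(bands, gains):
        if start <= k < end:
            return min(max(g, 0.0), 1.0)  # gains act as attenuation factors in [0, 1]
    return 1.0

def denoise_frame(frame: List[float], bands: List[Band], gains: List[float]) -> List[float]:
    """Transform one frame, scale each bin by its band gain, and transform back."""
    n = len(frame)
    spec = dft(frame)
    for k in range(n):
        # Bins k and n - k are complex conjugates for a real input; giving them
        # the same gain keeps the output real (up to rounding).
        spec[k] *= gain_for_bin(min(k, n - k), bands, gains)
    return [v.real for v in idft(spec)]

# Toy frame: one sinusoid that lands in bin 1 of a 4-point transform.
frame = [0.0, 1.0, 0.0, -1.0]
bands = [(0, 1), (1, 2), (2, 3)]  # covers bins 0..n//2 for n = 4
halved = denoise_frame(frame, bands, [1.0, 0.5, 1.0])
print([round(v, 6) for v in halved])
```

Halving the gain of the band that holds the sinusoid halves its amplitude in the output. Real-valued gains scale each bin's magnitude without changing its phase, so gain-based suppression reuses the phase of the noisy input.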

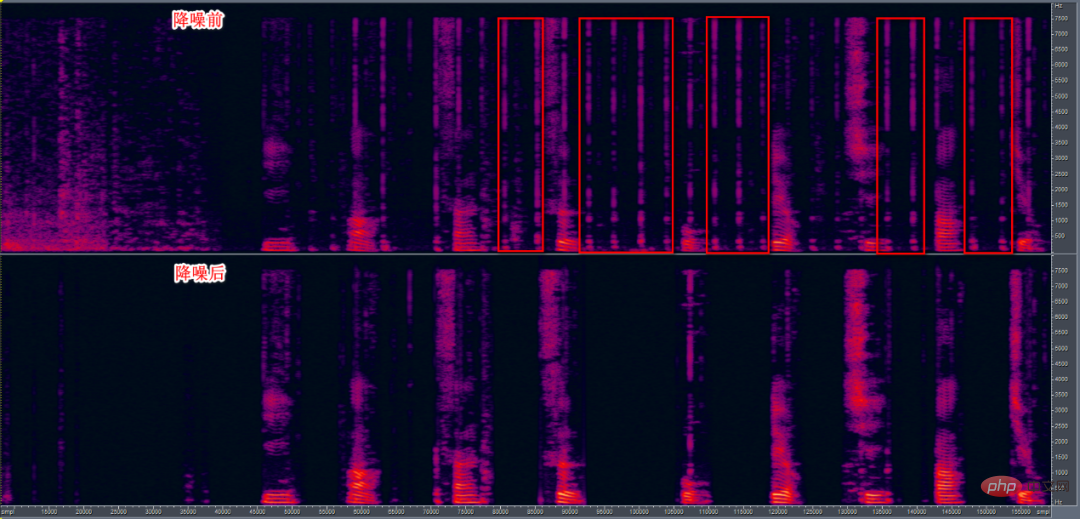

Figure 4 compares the spectrograms of speech with keyboard tapping before and after noise reduction. The upper part shows the noisy speech signal before noise reduction, with the keyboard tapping noise marked by red rectangles; the lower part shows the speech signal after noise reduction. Most of the keyboard tapping is suppressed, while speech distortion is kept low.

Figure 4. Noisy speech (with keyboard tapping) before and after noise reduction

The AI noise reduction model has been deployed on mobile phones and in the Jiaqin APP, improving call noise reduction. It suppresses noise well in more than 100 noise scenarios in homes, offices, and elsewhere while keeping voice distortion low. In the next stage, we will continue to reduce the computational complexity of the model so that it can be used on low-power IoT devices.

Part 03 Deep Learning Noise Reduction Algorithm Design

Part 04 Network model and processing process

Part 05 AI noise reduction processing effect and implementation
