Nanyang Technological University proposes the panoptic scene graph generation (PSG) task: locating objects at the pixel level and predicting 56 relationships


It's already 2022, yet most computer vision tasks still focus only on image perception. Image classification, for example, only requires the model to identify the object categories in an image. Tasks such as object detection and image segmentation additionally require locating the objects, but they are still not enough to show that a model has gained a comprehensive, in-depth understanding of the scene.

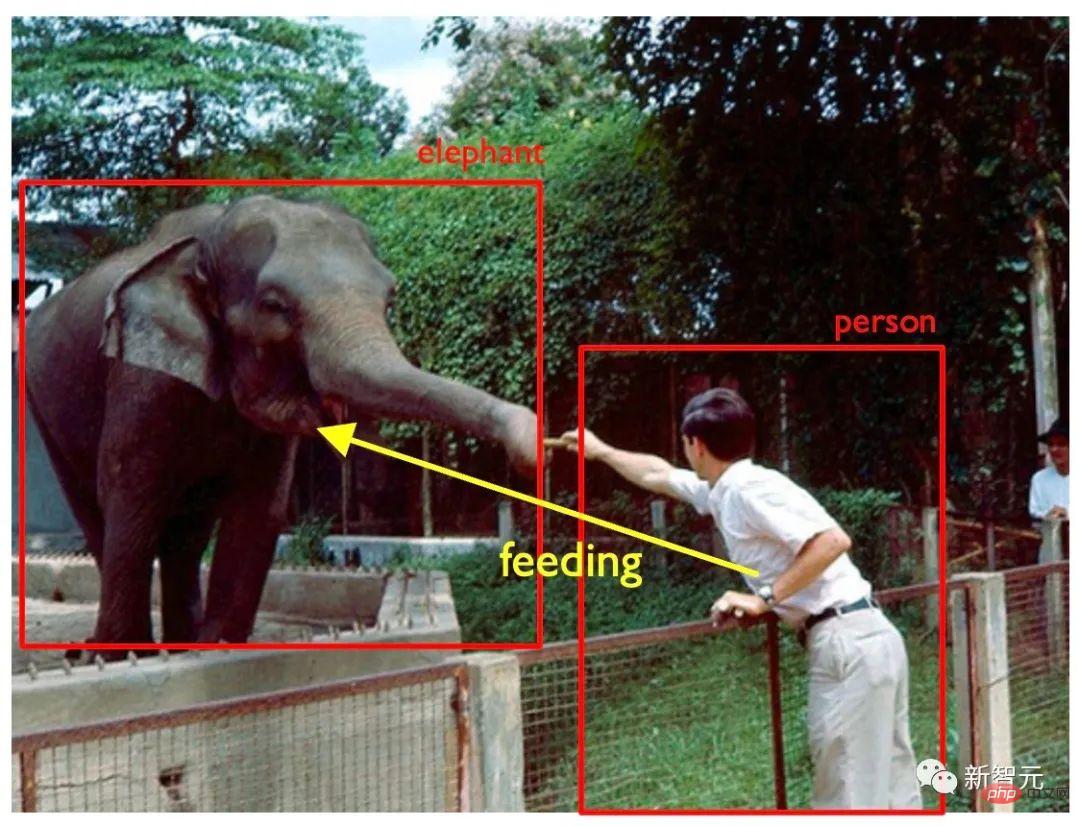

Take Figure 1 as an example. If a computer vision model only detects the people, elephants, fences, trees, and so on in the picture, we would not usually say that it has understood the picture. Such a model also cannot make higher-level decisions based on that understanding, such as issuing a "no feeding" warning.

Figure 1: Original example image

In fact, in many real-world AI scenarios such as smart cities, autonomous driving, and smart manufacturing, we usually expect the model not only to locate the targets in a scene but also to reason about and predict the relationships between the subjects in the image. In autonomous driving, for example, a vehicle needs to determine whether a pedestrian at the roadside is pushing a cart or riding a bicycle, because the appropriate follow-up decision may differ in each case.

In a smart factory, judging whether an operator is working safely and correctly likewise requires the monitoring model to understand the relationships between subjects. Most existing methods rely on manually written, hard-coded rules, so they generalize poorly and are difficult to adapt to other situations.

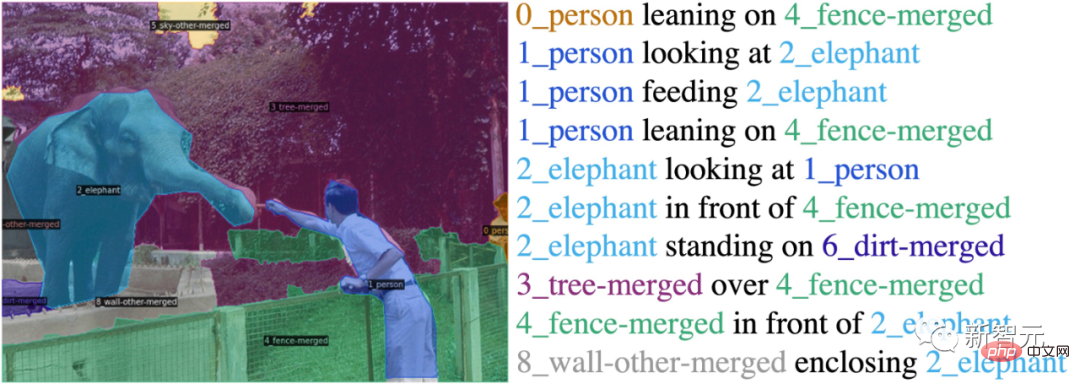

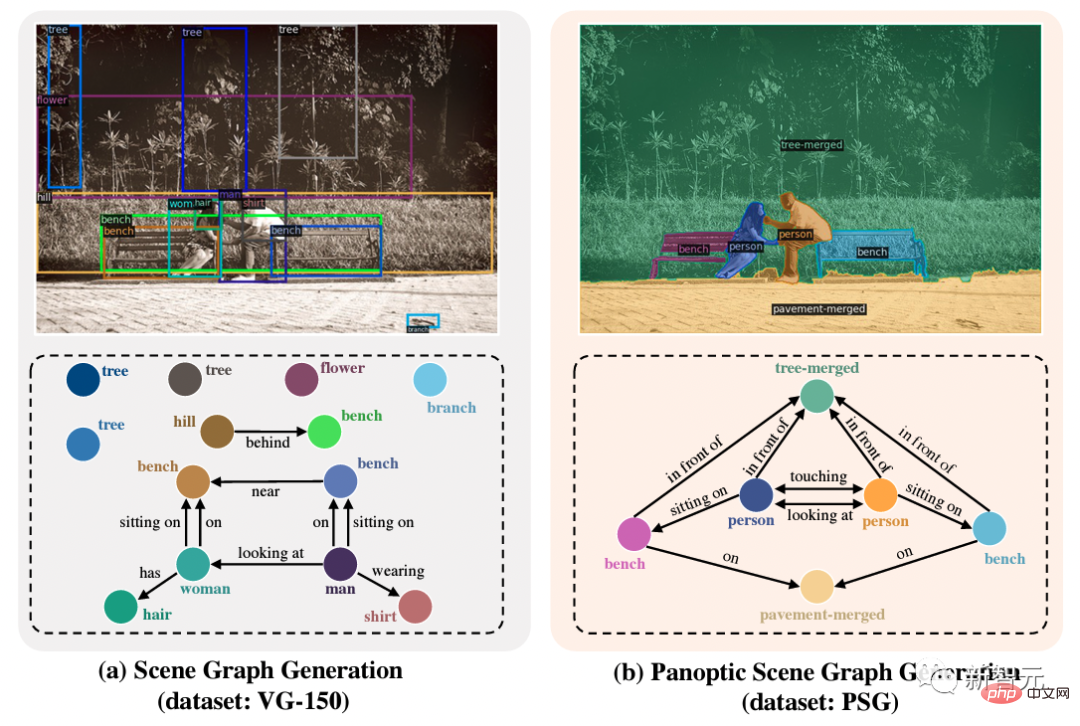

The scene graph generation (SGG) task is designed to address these problems. In addition to classifying and locating objects, SGG requires the model to predict the relationships between objects (see Figure 2).

Figure 2: Scene graph generation

Datasets for the traditional scene graph generation task typically provide bounding-box annotations for objects and relationship annotations between bounding boxes. However, this setting has several inherent flaws:

(1) Bounding boxes cannot locate objects accurately: as shown in Figure 2, a bounding box drawn around a person inevitably also contains the objects around that person;

(2) Background regions cannot be annotated properly: as shown in Figure 2, the bounding box for the trees behind the elephant covers almost the entire image, so relationships involving the background cannot be annotated accurately. This also prevents the scene graph from covering the whole image and achieving comprehensive scene understanding.
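Both flaws can be made concrete with a small sketch: given a binary pixel mask, compute its tight bounding box and the fraction of box pixels that do not belong to the object. The toy masks, and the helper names `bbox_from_mask` and `box_leakage`, are illustrative assumptions rather than anything from the PSG codebase.

```python
import numpy as np

def bbox_from_mask(mask: np.ndarray) -> tuple:
    """Tight (y0, x0, y1, x1) box around all True pixels; y1 and x1 are exclusive."""
    ys, xs = np.nonzero(mask)
    return int(ys.min()), int(xs.min()), int(ys.max()) + 1, int(xs.max()) + 1

def box_leakage(mask: np.ndarray) -> float:
    """Fraction of pixels inside the tight box that do NOT belong to the object."""
    y0, x0, y1, x1 = bbox_from_mask(mask)
    box_area = (y1 - y0) * (x1 - x0)
    return 1.0 - float(mask.sum()) / box_area

# Flaw (1): a thin diagonal object; its box is mostly other content.
thin_object = np.eye(8, dtype=bool)
print(box_leakage(thin_object))   # 0.875: 56 of the 64 box pixels are not the object

# Flaw (2): a background strip along the top and left edges of an 8x8 image.
trees = np.zeros((8, 8), dtype=bool)
trees[0, :] = True
trees[:, 0] = True
print(bbox_from_mask(trees))      # (0, 0, 8, 8): the box spans the whole image
```

A pixel mask has no such leakage by construction, which is the motivation for moving to segmentation-based annotation.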

The authors therefore propose the panoptic scene graph generation (PSG) task, together with a large-scale, finely annotated PSG dataset.

Figure 3: Panoptic scene graph generation

As shown in Figure 3, the task uses panoptic segmentation to locate both objects and background regions comprehensively and accurately. This addresses the inherent shortcomings of the traditional scene graph generation setting and moves the field toward comprehensive, in-depth scene understanding.
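To illustrate what "panoptic" buys, here is a minimal sketch under simplifying assumptions: a toy panoptic map in which every pixel carries exactly one segment ID (objects and background alike), and scene graph edges that reference those IDs. The IDs, categories, layout, and helper names are hypothetical.

```python
import numpy as np

# Toy 4x6 panoptic map: every pixel carries exactly one segment ID.
panoptic = np.array([
    [3, 3, 3, 3, 3, 3],
    [3, 1, 1, 2, 2, 3],
    [4, 1, 1, 2, 2, 4],
    [4, 4, 4, 4, 4, 4],
])
segments = {1: "person", 2: "elephant", 3: "tree", 4: "grass"}  # things and stuff alike

# Edges are (subject_id, predicate, object_id) over segment IDs, so background
# regions such as grass or trees can be a subject or an object.
relations = [(1, "standing on", 4), (2, "standing on", 4), (1, "in front of", 3)]

def covers_every_pixel(pan, segs):
    """True if every pixel's ID belongs to a known segment (no unlabeled pixels)."""
    return set(np.unique(pan).tolist()) <= set(segs)

def relations_are_valid(rels, segs):
    """True if every relation refers to segments that exist in the map."""
    return all(s in segs and o in segs for s, _, o in rels)

def segment_area(pan, seg_id):
    """Number of pixels assigned to one segment."""
    return int((pan == seg_id).sum())

print(covers_every_pixel(panoptic, segments))                  # True
print({sid: segment_area(panoptic, sid) for sid in segments})  # {1: 4, 2: 4, 3: 8, 4: 8}
```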

Paper information

- Paper link: https://arxiv.org/abs/2207.11247
- Project page: https://psgdataset.org/
- OpenPSG codebase: https://github.com/Jingkang50/OpenPSG
- Competition link: https://www.cvmart.net/race/10349/base
- ECCV'22 SenseHuman Workshop link: https://sense-human.github.io/
- HuggingFace demo link: https://huggingface.co/spaces/ECCV2022/PSG

The proposed PSG dataset contains nearly 50,000 COCO images and builds on COCO's existing panoptic segmentation annotations by labeling the relationships between the segments. The authors carefully define 56 relationship types, including positional relationships (over, in front of, etc.), common relationships between objects (hanging from, etc.), common biological actions (walking on, standing on, etc.), human behaviors (cooking, etc.), relationships in traffic scenes (driving, riding, etc.), relationships in sports scenes (kicking, etc.), and relationships between background regions (enclosing, etc.). Annotators were asked to prefer precise verbs over vague ones and to annotate the relationships in each image as completely as possible.
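As a rough illustration of how such annotations can be consumed, the sketch below groups the example predicates named above by category and turns index-based relations into readable triplets. The record layout (`segments`, and `relations` as `[subject_idx, object_idx, predicate_idx]`) is a hypothetical simplification, not the official PSG file format, and the vocabulary is only the subset of predicates mentioned in the text.

```python
# Example predicates named in the text, grouped by the categories described.
# Only a subset: the full PSG vocabulary has 56 predicates.
PREDICATE_GROUPS = {
    "positional": ["over", "in front of"],
    "object-object": ["hanging from"],
    "biological action": ["walking on", "standing on"],
    "human behavior": ["cooking"],
    "traffic": ["driving", "riding"],
    "sports": ["kicking"],
    "background": ["enclosing"],
}
PREDICATES = [p for group in PREDICATE_GROUPS.values() for p in group]

def to_triplets(record):
    """Map [subject_idx, object_idx, predicate_idx] relations to (subject, predicate, object) names."""
    cats = [seg["category"] for seg in record["segments"]]
    return [(cats[s], PREDICATES[p], cats[o]) for s, o, p in record["relations"]]

# Hypothetical annotation record for one image.
record = {
    "segments": [{"category": "person"}, {"category": "bicycle"}, {"category": "tree"}],
    "relations": [
        [0, 1, PREDICATES.index("riding")],
        [1, 2, PREDICATES.index("in front of")],
    ],
}
print(to_triplets(record))
# [('person', 'riding', 'bicycle'), ('bicycle', 'in front of', 'tree')]
```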

PSG model results

Task advantages

The example below further illustrates the advantages of the panoptic scene graph generation (PSG) task:

The left image comes from Visual Genome (VG-150), the traditional dataset for the SGG task. Bounding-box annotations are usually imprecise: the pixels inside a box cannot locate an object accurately, especially for items such as chairs and for background regions such as trees. In addition, box-based relationship annotation tends to record uninformative relationships such as "people have heads" and "people wear clothes".

In contrast, the PSG task, shown on the right, provides a more comprehensive (covering foreground-background interactions), clearer (appropriate object granularity), and more accurate (pixel-level) scene graph representation, advancing the field of scene understanding.

Two major types of PSG models

To support the proposed PSG task, the authors built the open-source code platform OpenPSG, which implements four two-stage methods and two single-stage methods, making it easy for others to develop, use, and analyze PSG models.

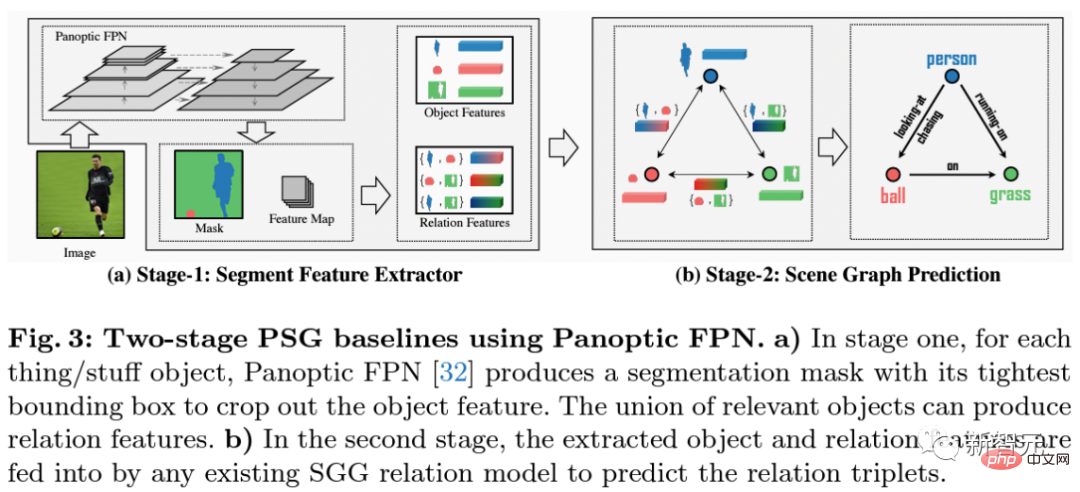

In the first stage, the two-stage methods use Panoptic-FPN to perform panoptic segmentation of the image.

Next, the authors extract a feature for each object obtained from panoptic segmentation, plus a fused relationship feature for each pair of objects, and feed them to the second stage for relationship prediction. The framework integrates and reproduces the classic scene graph generation methods IMP, VCTree, Motifs, and GPSNet.
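The second-stage idea of scoring every ordered object pair can be sketched as below. This is only an assumption-laden illustration: IMP, VCTree, Motifs, and GPSNet each add their own context modeling, whereas here pair features are fused by plain concatenation and classified over 56 predicates with random, untrained weights. The function name `pairwise_relation_logits` is hypothetical.

```python
import numpy as np

NUM_PREDICATES = 56  # size of the PSG predicate vocabulary

def pairwise_relation_logits(obj_feats, weight, bias):
    """Score every ordered (subject, object) pair of segments over all predicates.

    obj_feats: (N, D) per-segment features from the first (segmentation) stage.
    weight:    (2 * D, NUM_PREDICATES) hypothetical linear classifier.
    Pair features are fused by simple concatenation (an assumption).
    """
    n = obj_feats.shape[0]
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    if not pairs:
        return pairs, np.zeros((0, NUM_PREDICATES))
    fused = np.stack([np.concatenate([obj_feats[i], obj_feats[j]]) for i, j in pairs])
    return pairs, fused @ weight + bias

rng = np.random.default_rng(0)
feats = rng.normal(size=(3, 8))                 # 3 segments, 8-dim features
weight = rng.normal(size=(16, NUM_PREDICATES))  # random weights stand in for training
bias = np.zeros(NUM_PREDICATES)

pairs, logits = pairwise_relation_logits(feats, weight, bias)
predicted = logits.argmax(axis=1)               # one predicate index per ordered pair
print(len(pairs), logits.shape)                 # 6 (6, 56)
```

Ordered pairs matter because relationships are directional: (person, riding, horse) is not (horse, riding, person).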

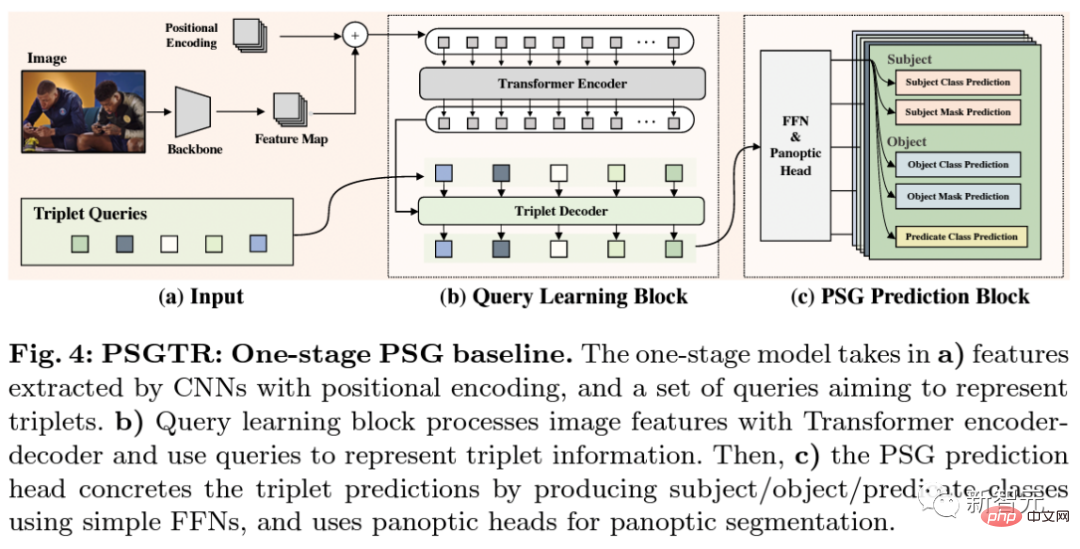

PSGTR is a single-stage method based on DETR. In a), the model first extracts image features with a convolutional neural network backbone and adds positional encodings to form the encoder input. It also initializes a set of queries, each representing a triplet. As in DETR, in b) the model feeds the encoder output (as keys and values), together with the triplet queries, into the decoder for cross-attention. In c), each decoded query is passed to the prediction modules for the subject, predicate, and object of the triplet, yielding the final triplet predictions.
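The triplet-query design (one set of queries, as in PSGTR) can be sketched with a single cross-attention step followed by three classification heads. This is a minimal sketch under stated assumptions: one attention head and one layer, random weights, no mask heads and no "no object" class, and 133 object classes taken to be the COCO panoptic categories.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, memory, wq, wk, wv):
    """Single-head cross-attention: queries attend over encoder memory (keys/values)."""
    q, k, v = queries @ wq, memory @ wk, memory @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (num_queries, num_tokens)
    return attn @ v, attn

rng = np.random.default_rng(0)
d, num_queries, num_tokens = 16, 5, 12
memory = rng.normal(size=(num_tokens, d))            # stands in for flattened encoder output
triplet_queries = rng.normal(size=(num_queries, d))  # one query per candidate triplet
wq, wk, wv = (rng.normal(size=(d, d)) for _ in range(3))

decoded, attn = cross_attention(triplet_queries, memory, wq, wk, wv)

# Separate heads read subject class, predicate, and object class from each decoded query.
NUM_OBJECT_CLASSES = 133   # assumption: COCO panoptic categories (80 things + 53 stuff)
NUM_PREDICATES = 56
w_sub = rng.normal(size=(d, NUM_OBJECT_CLASSES))
w_pred = rng.normal(size=(d, NUM_PREDICATES))
w_obj = rng.normal(size=(d, NUM_OBJECT_CLASSES))
triplets = list(zip(
    (decoded @ w_sub).argmax(axis=1),
    (decoded @ w_pred).argmax(axis=1),
    (decoded @ w_obj).argmax(axis=1),
))
print(len(triplets))  # 5: one (subject, predicate, object) class triple per query
```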

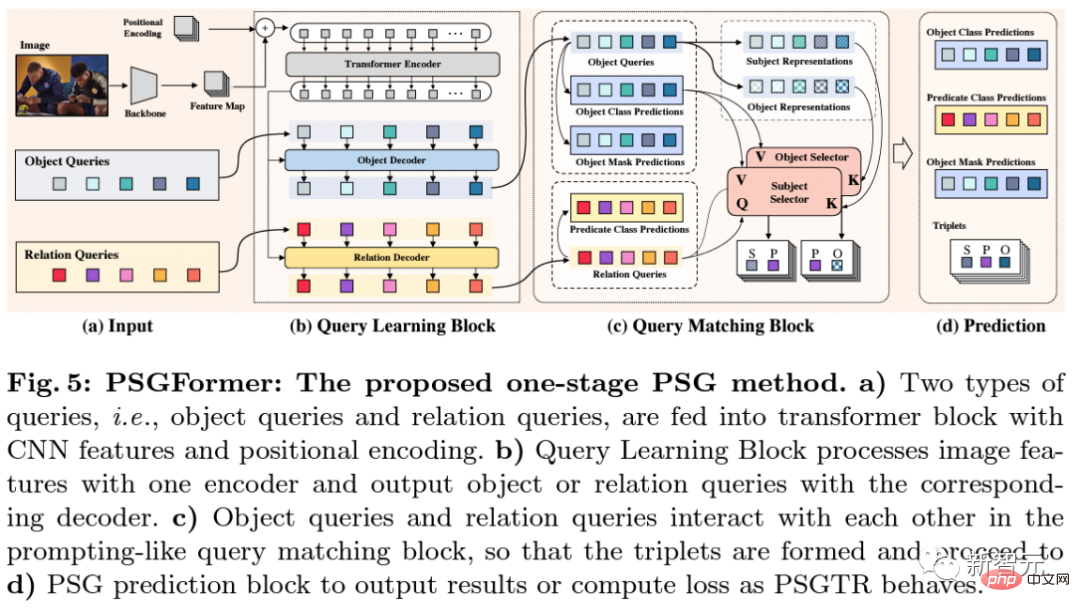

PSGFormer is a single-stage method based on a dual-decoder DETR. In a), the model extracts image features with a CNN, feeds them with positional encodings into the encoder, and initializes two sets of queries that represent objects and relationships, respectively. In b), based on the image information encoded by the encoder, the model learns the object queries and relation queries through cross-attention in the object decoder and the relation decoder, respectively.

Once both types of queries are learned, they are matched in c) to form paired triplet queries. Finally, in d), prediction heads produce predictions for the object queries and relation queries, and the final triplet predictions are assembled from the matching results of c).
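The matching step in c) can be illustrated with a simple stand-in: each relation query is projected into a "subject" embedding and an "object" embedding and paired with the most similar object queries by cosine similarity. This is an assumption for illustration, not necessarily the matching used in the paper; the projections `w_sub` and `w_obj` are hypothetical, and the toy inputs are hand-built so the result is easy to check.

```python
import numpy as np

def l2_normalize(x):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def match_relations_to_objects(obj_queries, rel_queries, w_sub, w_obj):
    """Pair each relation query with a subject and an object query by cosine similarity.

    Returns (subject_idx, relation_idx, object_idx) triples.
    """
    objs = l2_normalize(obj_queries)
    sub_idx = (l2_normalize(rel_queries @ w_sub) @ objs.T).argmax(axis=1)
    obj_idx = (l2_normalize(rel_queries @ w_obj) @ objs.T).argmax(axis=1)
    return [(int(s), r, int(o)) for r, (s, o) in enumerate(zip(sub_idx, obj_idx))]

# Hand-built toy case: 4 object queries, 2 relation queries, 4-dim embeddings.
obj_queries = np.eye(4)
rel_queries = np.eye(4)[[0, 2]]
w_sub = np.eye(4)                      # subject embedding = relation query itself
w_obj = np.roll(np.eye(4), 1, axis=1)  # object embedding = next basis vector
print(match_relations_to_objects(obj_queries, rel_queries, w_sub, w_obj))
# [(0, 0, 1), (2, 1, 3)]
```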

PSGTR and PSGFormer both extend and improve on DETR. The difference is that PSGTR uses one set of queries to model triplets directly, whereas PSGFormer uses two sets of queries to model objects and relationships separately. Each approach has its own pros and cons; see the experimental results in the paper for details.

Key findings

Most methods that work well on the SGG task remain effective on the PSG task. However, methods that rely on strong statistical priors from the dataset, or on priors about predicate direction within subject-predicate-object triplets, may be less effective. This may be because the PSG dataset is less biased than the traditional VG dataset, and its predicates are more clearly defined and learnable. The authors therefore hope that future methods will focus on extracting visual information and understanding the image itself. Statistical priors may help boost benchmark scores, but they are not essential.
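To make "statistical prior" concrete, here is a minimal frequency-style prior: it predicts, for a (subject class, object class) pair, whichever predicate was most common for that ordered pair in training, without looking at any pixels. The toy training triplets and function names are illustrative assumptions. Because the table is keyed on ordered pairs, it also encodes a prior on predicate direction.

```python
from collections import Counter, defaultdict

def build_frequency_prior(train_triplets):
    """Count how often each predicate links an ordered (subject class, object class) pair."""
    counts = defaultdict(Counter)
    for subj, pred, obj in train_triplets:
        counts[(subj, obj)][pred] += 1
    return counts

def predict_from_prior(counts, subj, obj, default=None):
    """Return the most frequent training predicate for this class pair, ignoring the image."""
    pair_counts = counts.get((subj, obj))
    if not pair_counts:
        return default
    return pair_counts.most_common(1)[0][0]

# Toy training triplets (illustrative only).
train = [
    ("person", "riding", "horse"),
    ("person", "riding", "horse"),
    ("person", "in front of", "horse"),
    ("person", "walking on", "road"),
]
prior = build_frequency_prior(train)
print(predict_from_prior(prior, "person", "horse"))  # riding
print(predict_from_prior(prior, "horse", "person"))  # None: direction matters
```

On a heavily biased dataset such a shortcut can score well; on a less biased one with clearer predicates, as the authors suggest PSG is, it helps less.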

Single-stage models currently achieve better results than two-stage models. One possible reason is that in a single-stage model the relationship supervision signal propagates directly to the feature map, so it takes part in more of the model's learning, which helps capture relationships. However, since the paper only proposes several baseline models and optimizes neither the single-stage nor the two-stage models, it cannot conclude that single-stage models are necessarily stronger. The authors hope that competition participants will continue to explore this question.

Unlike the traditional SGG task, the PSG task matches relationships against the panoptic segmentation map and requires identifying the IDs of the subject and object in each relationship. Two-stage models obtain object IDs directly from the predicted panoptic segmentation map, whereas single-stage models must recover them through a series of post-processing steps. For further improving single-stage models, how to determine object IDs more effectively and generate better panoptic segmentation maps remains an open question worth exploring.
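One simple form the single-stage post-processing could take is sketched below: subject and object masks emitted by different triplet queries are merged into one object ID when they share a category and overlap strongly. This is a hypothetical greedy scheme, not the OpenPSG implementation; the IoU threshold of 0.5 and the helper `box` are assumptions for illustration.

```python
import numpy as np

def mask_iou(a, b):
    """Intersection-over-union of two boolean masks."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter) / float(union) if union else 0.0

def assign_object_ids(entities, iou_thresh=0.5):
    """Give each predicted (category, mask) an object ID.

    A mask joins an existing ID if it has the same category and its IoU with that
    ID's representative mask reaches iou_thresh (an assumed value).
    """
    reps = []  # representative (category, mask) for each ID
    ids = []
    for cat, mask in entities:
        for obj_id, (rep_cat, rep_mask) in enumerate(reps):
            if rep_cat == cat and mask_iou(mask, rep_mask) >= iou_thresh:
                ids.append(obj_id)
                break
        else:  # no existing object matched: open a new ID
            reps.append((cat, mask))
            ids.append(len(reps) - 1)
    return ids

def box(y0, x0, y1, x1, shape=(4, 4)):
    """Hypothetical helper: a boolean mask that is True inside a rectangle."""
    m = np.zeros(shape, dtype=bool)
    m[y0:y1, x0:x1] = True
    return m

# Subject/object masks emitted by different triplet queries (toy 4x4 example).
entities = [
    ("person", box(0, 0, 2, 2)),   # subject of triplet 1
    ("horse", box(2, 0, 4, 4)),    # object of triplet 1
    ("person", box(0, 0, 2, 3)),   # subject of triplet 2: same person, slightly different mask
    ("person", box(2, 2, 4, 4)),   # a different person
]
print(assign_object_ids(entities))  # [0, 1, 0, 2]
```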

Finally, you are welcome to try the HuggingFace demo: https://huggingface.co/spaces/ECCV2022/PSG

Perspectives on image generation

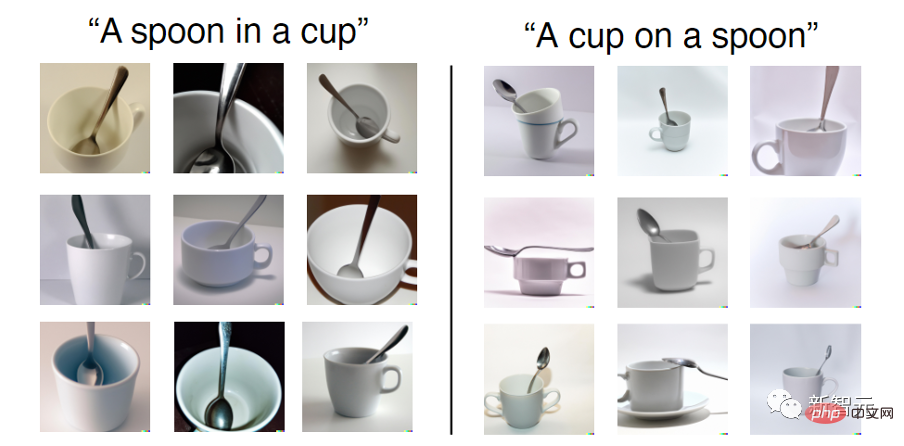

Recently popular text-to-image generative models (such as DALL-E 2) are truly impressive, but some research suggests that they may simply glue together several entities from the text without understanding the spatial relationships it expresses. In the example image, although the input is "cup on spoon", the generated pictures still show a spoon on a cup.

Conveniently, the PSG dataset annotates scene graph relationships on top of masks. Scene graphs and panoptic segmentation masks could therefore serve as training pairs for a text2mask model, and more detailed images could then be generated from the masks. The PSG dataset may thus also offer a potential route toward relationship-aware image generation.
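The text2mask idea is speculative, but assembling one training pair is easy to sketch: serialize the scene graph into a text prompt and stack one target mask per segment. The prompt format, channel ordering, and function names below are assumptions for illustration; no text2mask model is implemented here.

```python
import numpy as np

def scene_graph_to_text(segments, relations):
    """Serialize (subject_id, predicate, object_id) relations into a text prompt."""
    return "; ".join(f"{segments[s]} {p} {segments[o]}" for s, p, o in relations)

def make_text2mask_pair(panoptic, segments, relations):
    """Build one hypothetical (text, target masks) training pair from a PSG-style annotation."""
    text = scene_graph_to_text(segments, relations)
    ids = sorted(segments)                              # fixed channel order by segment ID
    masks = np.stack([panoptic == sid for sid in ids])  # (num_segments, H, W) booleans
    return text, masks

# Toy 3x3 panoptic map: a cup (1) sitting on a spoon (2), with a table (3) behind.
panoptic = np.array([
    [3, 1, 3],
    [3, 1, 3],
    [2, 2, 2],
])
segments = {1: "cup", 2: "spoon", 3: "table"}
text, masks = make_text2mask_pair(panoptic, segments, [(1, "on", 2)])
print(text)          # cup on spoon
print(masks.shape)   # (3, 3, 3)
```

Because the target is a mask rather than a caption, a model trained on such pairs would be supervised on where each entity goes, which is exactly the spatial information the "cup on spoon" example shows current generators can miss.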

P.S. The "PSG Challenge", which aims to encourage the community to jointly explore comprehensive scene understanding, is now in full swing, with a million-level prize pool waiting for you! Competition link: https://www.cvmart.net/race/10349/base

The above is the detailed content of Nanyang Polytechnic proposed the task of generating PSG from a full scene graph, locating objects at the pixel level and predicting 56 relationships.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

This site reported on June 27 that Jianying is a video editing software developed by FaceMeng Technology, a subsidiary of ByteDance. It relies on the Douyin platform and basically produces short video content for users of the platform. It is compatible with iOS, Android, and Windows. , MacOS and other operating systems. Jianying officially announced the upgrade of its membership system and launched a new SVIP, which includes a variety of AI black technologies, such as intelligent translation, intelligent highlighting, intelligent packaging, digital human synthesis, etc. In terms of price, the monthly fee for clipping SVIP is 79 yuan, the annual fee is 599 yuan (note on this site: equivalent to 49.9 yuan per month), the continuous monthly subscription is 59 yuan per month, and the continuous annual subscription is 499 yuan per year (equivalent to 41.6 yuan per month) . In addition, the cut official also stated that in order to improve the user experience, those who have subscribed to the original VIP

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-enhanced generation and semantic memory into AI coding assistants. Translated from EnhancingAICodingAssistantswithContextUsingRAGandSEM-RAG, author JanakiramMSV. While basic AI programming assistants are naturally helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the software language and the most common patterns of writing software. The code generated by these coding assistants is suitable for solving the problems they are responsible for solving, but often does not conform to the coding standards, conventions and styles of the individual teams. This often results in suggestions that need to be modified or refined in order for the code to be accepted into the application

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Large Language Models (LLMs) are trained on huge text databases, where they acquire large amounts of real-world knowledge. This knowledge is embedded into their parameters and can then be used when needed. The knowledge of these models is "reified" at the end of training. At the end of pre-training, the model actually stops learning. Align or fine-tune the model to learn how to leverage this knowledge and respond more naturally to user questions. But sometimes model knowledge is not enough, and although the model can access external content through RAG, it is considered beneficial to adapt the model to new domains through fine-tuning. This fine-tuning is performed using input from human annotators or other LLM creations, where the model encounters additional real-world knowledge and integrates it

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

At the forefront of software technology, UIUC Zhang Lingming's group, together with researchers from the BigCode organization, recently announced the StarCoder2-15B-Instruct large code model. This innovative achievement achieved a significant breakthrough in code generation tasks, successfully surpassing CodeLlama-70B-Instruct and reaching the top of the code generation performance list. The unique feature of StarCoder2-15B-Instruct is its pure self-alignment strategy. The entire training process is open, transparent, and completely autonomous and controllable. The model generates thousands of instructions via StarCoder2-15B in response to fine-tuning the StarCoder-15B base model without relying on expensive manual annotation.

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

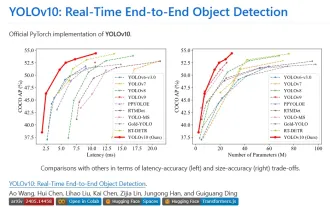

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

1. Introduction Over the past few years, YOLOs have become the dominant paradigm in the field of real-time object detection due to its effective balance between computational cost and detection performance. Researchers have explored YOLO's architectural design, optimization goals, data expansion strategies, etc., and have made significant progress. At the same time, relying on non-maximum suppression (NMS) for post-processing hinders end-to-end deployment of YOLO and adversely affects inference latency. In YOLOs, the design of various components lacks comprehensive and thorough inspection, resulting in significant computational redundancy and limiting the capabilities of the model. It offers suboptimal efficiency, and relatively large potential for performance improvement. In this work, the goal is to further improve the performance efficiency boundary of YOLO from both post-processing and model architecture. to this end

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

SOTA performance, Xiamen multi-modal protein-ligand affinity prediction AI method, combines molecular surface information for the first time

Jul 17, 2024 pm 06:37 PM

Editor | KX In the field of drug research and development, accurately and effectively predicting the binding affinity of proteins and ligands is crucial for drug screening and optimization. However, current studies do not take into account the important role of molecular surface information in protein-ligand interactions. Based on this, researchers from Xiamen University proposed a novel multi-modal feature extraction (MFE) framework, which for the first time combines information on protein surface, 3D structure and sequence, and uses a cross-attention mechanism to compare different modalities. feature alignment. Experimental results demonstrate that this method achieves state-of-the-art performance in predicting protein-ligand binding affinities. Furthermore, ablation studies demonstrate the effectiveness and necessity of protein surface information and multimodal feature alignment within this framework. Related research begins with "S

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

SK Hynix will display new AI-related products on August 6: 12-layer HBM3E, 321-high NAND, etc.

Aug 01, 2024 pm 09:40 PM

According to news from this site on August 1, SK Hynix released a blog post today (August 1), announcing that it will attend the Global Semiconductor Memory Summit FMS2024 to be held in Santa Clara, California, USA from August 6 to 8, showcasing many new technologies. generation product. Introduction to the Future Memory and Storage Summit (FutureMemoryandStorage), formerly the Flash Memory Summit (FlashMemorySummit) mainly for NAND suppliers, in the context of increasing attention to artificial intelligence technology, this year was renamed the Future Memory and Storage Summit (FutureMemoryandStorage) to invite DRAM and storage vendors and many more players. New product SK hynix launched last year