Looking at the past and future of multimodal learning from an audio-visual perspective

Vision and hearing are crucial in human communication and scene understanding. To imitate human perceptual abilities, audio-visual learning, which explores the audio and visual modalities jointly, has become a booming field in recent years. This article interprets a recent audio-visual learning survey, "Learning in Audio-visual Context: A Review, Analysis, and New Perspective".

The review first analyzes the cognitive-science basis of the audio and visual modalities, and then systematically analyzes and summarizes recent audio-visual learning work (nearly three hundred related papers). Finally, to give an overview of the current field, the review revisits the progress of audio-visual learning in recent years from the perspective of audio-visual scene understanding and explores potential development directions for the field.

arXiv link: https://arxiv.org/abs/2208.09579

Project homepage: https://gewu-lab.github.io/audio-visual-learning/

awesome-list link: https://gewu-lab.github.io/awesome-audiovisual-learning/

## 1 Introduction

Visual and auditory information are the main sources through which humans perceive the external world. The human brain forms an overall cognition of the surrounding environment by integrating this heterogeneous multi-modal information. For example, at a cocktail party with multiple speakers, we can use changes in lip shape to enhance the speech we receive from the speaker of interest. Audio-visual learning is therefore indispensable for exploring human-like machine perception. Compared with other modalities, the audio-visual modality is unique in several respects:

1) Cognitive basis. Vision and hearing are the two most widely studied senses, and their integration is found throughout the human nervous system. On the one hand, the importance of these two senses in human perception provides a cognitive basis for machine perception research based on audio-visual data. On the other hand, the interaction and integration of vision and hearing in the nervous system can serve as a basis for advancing audio-visual learning.

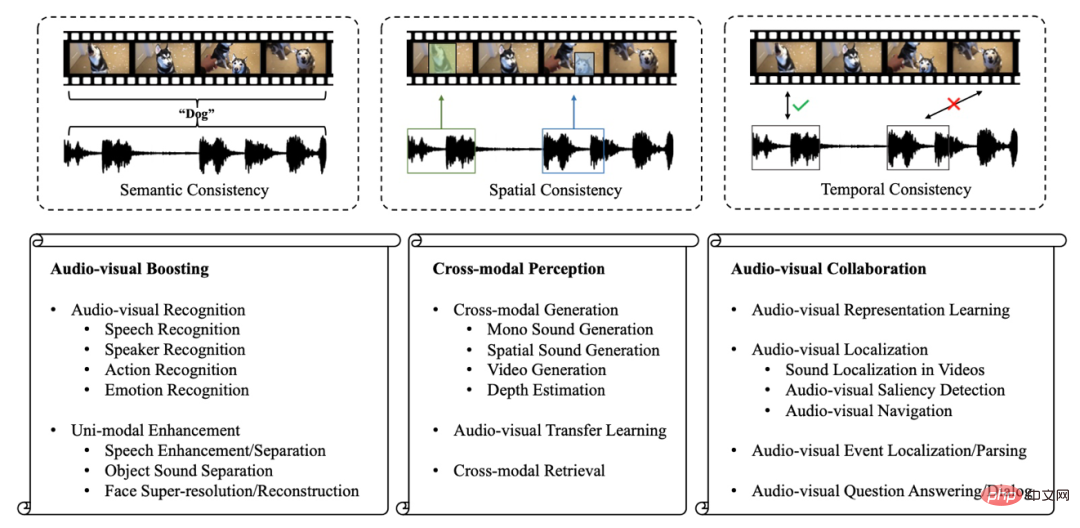

2) Multiple consistency. In daily life, vision and hearing are closely related. As shown in Figure 1, both a dog's bark and its appearance let us associate it with the concept of "dog" (semantic consistency). At the same time, we can determine the dog's exact spatial location from either the sound we hear or what we see (spatial consistency). And when we hear a dog bark, we can usually see the dog at the same moment (temporal consistency). These multiple consistencies between vision and hearing are the foundation of audio-visual learning research.

3) Rich data support. The rapid development of mobile terminals and the Internet has prompted more and more people to share videos on public platforms, which has reduced the cost of collecting videos. These rich public videos ease the barriers to data acquisition and provide data support for audio-visual learning.

These characteristics of the audio-visual modality naturally gave rise to the field of audio-visual learning, which has developed vigorously in recent years. Researchers are no longer content to simply add a modality to an originally single-modal task, and have begun to explore new problems and challenges. However, existing audio-visual learning work is usually task-oriented, focusing on specific audio-visual tasks, and there is still a lack of comprehensive work that systematically reviews and analyzes developments across the field. This article therefore summarizes the current field of audio-visual learning and then looks ahead to its potential development directions.

Because audio-visual learning is closely connected with human perceptual ability, the article first summarizes the cognitive basis of the visual and auditory modalities and then, on this basis, divides existing audio-visual learning research into three categories:

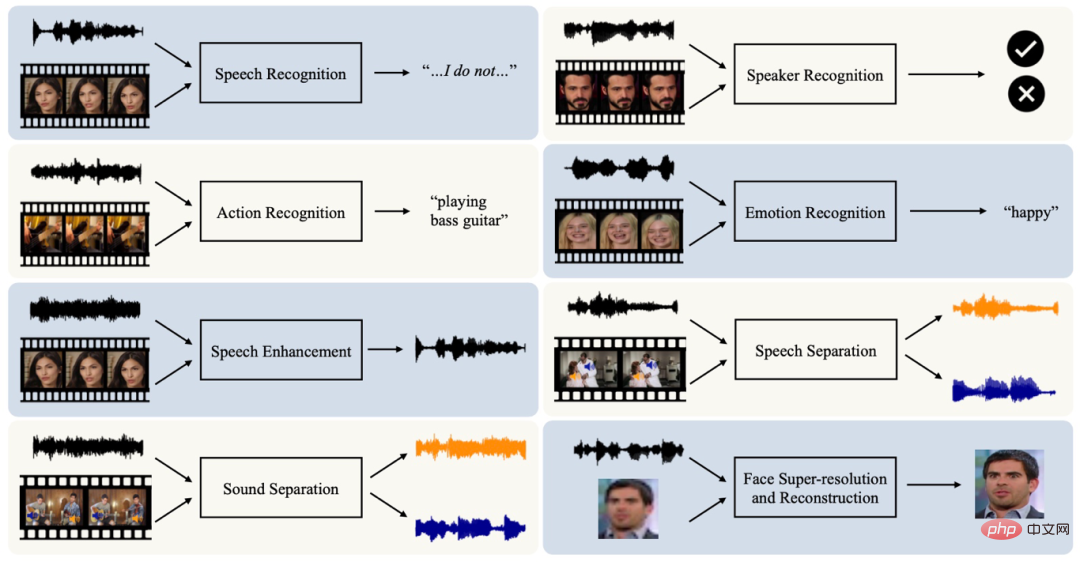

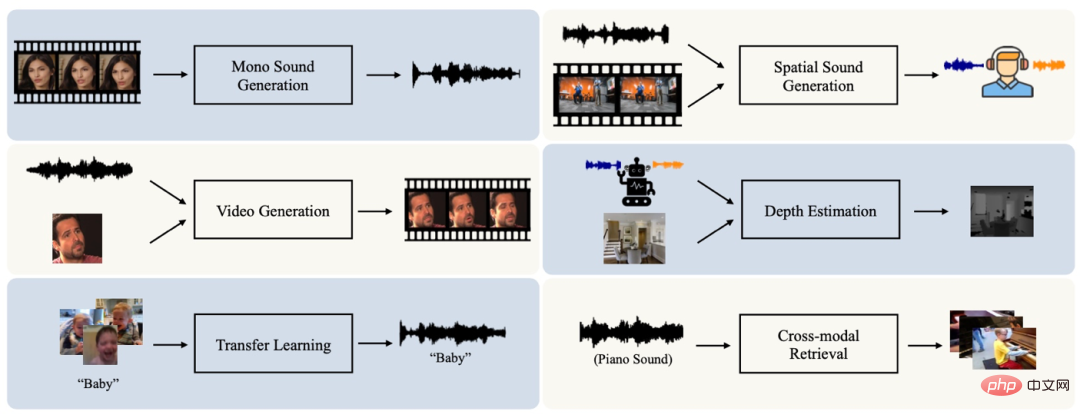

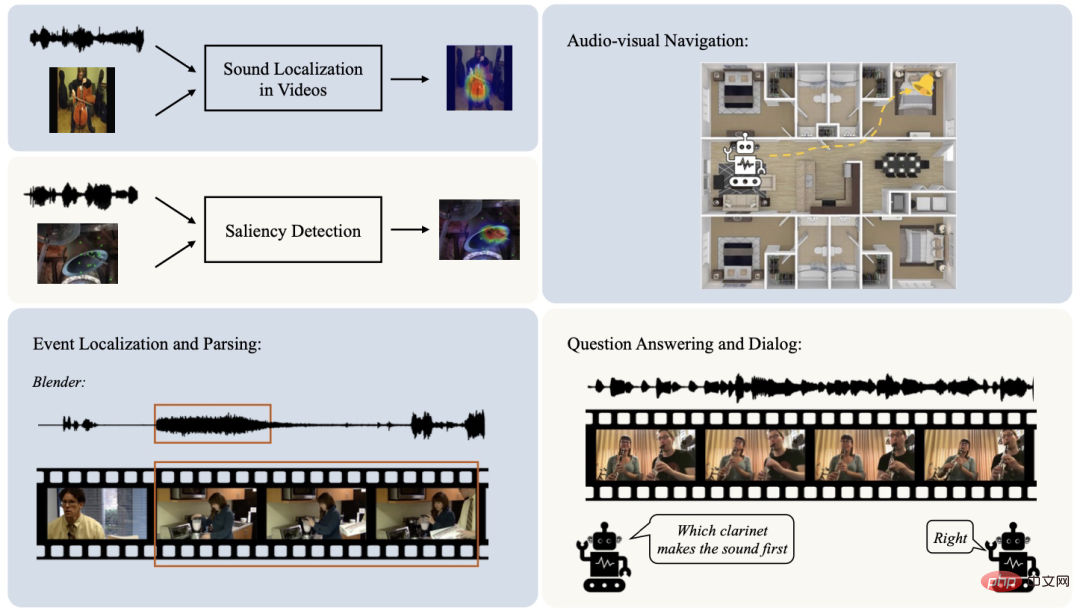

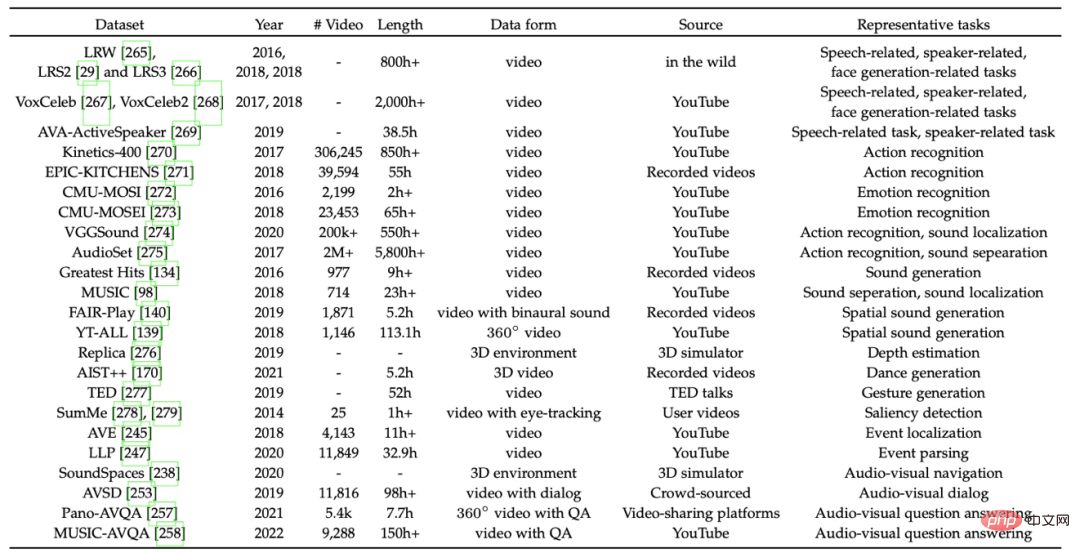

1) Audio-visual Boosting. Visual and audio data each have a long research history and wide applications. Although single-modal methods have achieved quite effective results, they use only partial information about the object of interest, so their performance is limited and they are susceptible to single-modal noise. Researchers therefore introduce an additional modality into these audio or visual tasks, which not only improves model performance by integrating complementary information but also makes models more robust.

2) Cross-modal Perception. Humans can imagine related pictures when hearing sounds, and can think of matching sounds when seeing pictures, because visual and auditory information are consistent. This consistency lets a machine transfer cross-modal knowledge, or generate data in one modality from information in the other. Many studies have therefore explored cross-modal perception and achieved remarkable results.

3) Audio-visual Collaboration. Beyond fusing signals from different modalities, the cortical areas of the human brain support higher-level interactions between modalities that yield deeper scene understanding. Human-like perception therefore requires exploring collaboration between the audio and visual modalities. To this end, many recent studies have proposed more challenging scene-understanding problems, which have received widespread attention.

Figure 1: Overview of audio-visual consistency and the audio-visual learning field

The semantic, spatial, and temporal consistency between the audio and visual modalities is what makes the research above feasible. Therefore, after summarizing recent audio-visual research, the article analyzes these multiple audio-visual consistencies.
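The boosting idea can be illustrated with the simplest possible fusion scheme. The sketch below is a hypothetical late-fusion example, not a method taken from the survey: each modality's class scores are turned into probabilities and averaged with an assumed weight `audio_weight`. The class names and logits are invented to show a case where an ambiguous visual stream alone would pick the wrong class.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(audio_logits, visual_logits, audio_weight=0.5):
    """Fuse per-modality class scores by a weighted average of probabilities.

    audio_weight is a hypothetical hyperparameter; 0.5 weighs both modalities equally.
    """
    if len(audio_logits) != len(visual_logits):
        raise ValueError("both modalities must score the same classes")
    p_audio = softmax(audio_logits)
    p_visual = softmax(visual_logits)
    return [audio_weight * a + (1.0 - audio_weight) * v
            for a, v in zip(p_audio, p_visual)]


classes = ["dog", "cat", "car"]
# Hypothetical scores: the visual stream is ambiguous (e.g. poor lighting) and
# slightly favors "cat", while the audio stream clearly hears barking.
audio_logits = [3.0, 0.5, 0.1]
visual_logits = [1.0, 1.2, 0.2]
fused = late_fusion(audio_logits, visual_logits)
print(classes[fused.index(max(fused))])
```

Late fusion is used here only because it is easy to read; real systems often fuse intermediate features instead, which this sketch does not attempt.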
In addition, the article revisits progress in audio-visual learning from a new perspective: audio-visual scene understanding.

Vision and hearing are the two core senses for human scene understanding. This chapter summarizes the neural pathways of vision and hearing and the integration of the two modalities in cognitive neuroscience, laying the foundation for the subsequent discussion of audio-visual learning research.

Vision is the most widely studied sense, and some even argue that it dominates human perception. Correspondingly, its neural pathways are relatively complex. Light reflected from objects carries visual information and activates numerous photoreceptors (approximately 260 million) in the retina. The output of the photoreceptors is passed to ganglion cells (approximately 2 million), a step that compresses the visual information. After further processing by cells in the lateral geniculate nucleus, the visual information finally reaches the vision-related areas of the cerebral cortex. The visual cortex is a collection of functionally distinct regions whose neurons have different preferences; for example, neurons in V4 and V5 are sensitive to color and motion, respectively.

Besides vision, hearing is another important sense for observing the surrounding environment. It not only warns humans of danger (for example, people take action when they hear the cry of a wild animal) but is also the basis of interpersonal communication. Sound waves set the eardrum vibrating and are converted into neuronal signals in the cochlea. The auditory information is then carried to the cochlear nuclei and the inferior colliculus in the brainstem. After processing in the medial geniculate nucleus of the thalamus, sounds are ultimately encoded in the primary auditory cortex.
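Using the approximate counts quoted above for the retina, the degree of compression between photoreceptors and ganglion cells can be worked out directly (a back-of-the-envelope ratio, not a figure stated in the survey):

$$
\frac{N_{\text{photoreceptors}}}{N_{\text{ganglion cells}}} \approx \frac{2.6 \times 10^{8}}{2 \times 10^{6}} = 130
$$

so on average roughly 130 photoreceptors converge onto each ganglion cell.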
The brain uses acoustic cues in the auditory information, such as frequency and timbre, to determine the identity of a sound source. Meanwhile, the differences in intensity and arrival time between the two ears provide cues to the sound's location; this is known as the binaural effect.

In practice, human perception combines multiple senses, especially hearing and vision; this is called multi-channel perception. Each sense provides unique information about the surrounding environment. Although the senses receive different information, the resulting representation of the environment is a unified experience rather than a set of separate sensations. A representative example is the McGurk effect: when visual and auditory signals carry different semantics, people perceive a single fused percept (for instance, hearing the syllable "ba" while watching lips articulate "ga" is typically perceived as "da"). Such phenomena indicate that in human perception, signals from multiple senses are often integrated. In particular, the intersection of the auditory and visual neural pathways combines information from two important senses and improves the sensitivity and accuracy of perception; for example, visual information related to a sound can make auditory spatial search more efficient.

These perceptual phenomena that combine multiple senses have attracted attention in cognitive neuroscience. A well-studied multisensory region of the human nervous system is the superior colliculus. Many of its neurons have multisensory properties and can be activated by visual, auditory, and even tactile information, and the multisensory response is often stronger than the response to a single sense. The superior temporal sulcus in the cortex is another representative region: studies in monkeys have observed that it connects to multiple senses, including vision, hearing, and somatosensation.
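The timing half of the binaural effect can be quantified. The sketch below uses Woodworth's spherical-head approximation for the interaural time difference (ITD), a standard psychoacoustics model that the survey itself does not discuss; the head radius and speed of sound are assumed typical values, and intensity differences between the ears are not modeled.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees Celsius (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly assumed average adult head radius


def woodworth_itd(azimuth_deg, radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Interaural time difference in seconds for a distant source.

    Woodworth's spherical-head approximation: ITD = (r / c) * (theta + sin(theta)),
    with theta the azimuth in radians (0 = straight ahead, +/-90 = directly to one
    side). The sign only encodes the side: positive for one side, negative for the other.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie in [-90, 90] degrees")
    theta = math.radians(azimuth_deg)
    return (radius / c) * (theta + math.sin(theta))


for azimuth in (0, 30, 60, 90):
    print(f"{azimuth:>3} deg -> {woodworth_itd(azimuth) * 1e6:6.1f} microseconds")
```

With these assumptions the ITD grows from 0 for a source straight ahead to about 0.66 ms for a source directly to one side, which is the range of timing cues the auditory system has to work with.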
Further brain regions, including the parietal lobe, frontal lobe, and hippocampus, exhibit similar multi-channel perception phenomena. Research on these phenomena yields several key findings:

1) Multi-modal enhancement. As mentioned above, many neurons respond to the fused signals of multiple senses, and when the stimulation of a single sense is weak, this enhanced response is more reliable than a single-modality response.

2) Cross-modal plasticity. Deprivation of one sense can affect the development of its corresponding cortical area; for example, the auditory cortex of deaf people may be activated by visual stimulation.

3) Multi-modal collaboration. Signals from different senses are integrated in more complex ways in cortical areas. Researchers have found modules in the cerebral cortex that integrate multisensory information collaboratively to build awareness and cognition.

Inspired by human cognition, researchers have begun to study how to achieve human-like audio-visual perception, and more audio-visual research has gradually emerged in recent years.

Although each modality on its own carries enough information for learning, and many tasks are already built on single-modal data, single-modal data provide only partial information and are more sensitive to single-modal noise (for example, visual information is affected by lighting, viewing angle, and similar factors). Therefore, inspired by multi-modal enhancement in human cognition, some researchers introduce additional visual (or audio) data into originally single-modal tasks to improve performance. The article divides these tasks into two groups: recognition and enhancement.

Single-modal recognition tasks, such as audio-based speech recognition and vision-based action recognition, have been widely studied in the past.
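The multi-modal enhancement finding is often quantified in neurophysiology with a multisensory enhancement index that compares the combined response with the strongest single-sense response. The sketch below computes that index; the spike counts are hypothetical, chosen to show that weak unisensory inputs can yield a much larger relative gain, in line with the observation that enhancement matters most when single-sense stimulation is weak.

```python
def multisensory_enhancement(combined, visual_only, auditory_only):
    """Percent gain of a multisensory response over the best unisensory response.

    ME = (CM - SM_max) / SM_max * 100, where CM is the response to the combined
    audio-visual stimulus and SM_max is the larger of the two single-sense responses.
    """
    best_single = max(visual_only, auditory_only)
    if best_single <= 0:
        raise ValueError("the best unisensory response must be positive")
    return (combined - best_single) / best_single * 100.0


# Hypothetical mean spike counts for one neuron under strong and weak stimuli.
strong = multisensory_enhancement(combined=12.0, visual_only=10.0, auditory_only=8.0)
weak = multisensory_enhancement(combined=6.0, visual_only=2.0, auditory_only=1.5)
print(f"strong stimuli: {strong:.0f}% enhancement, weak stimuli: {weak:.0f}% enhancement")
```

With these invented numbers the strong pair gives a 20% enhancement and the weak pair 200%.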
However, single-modal data observe only part of the information about a thing and are susceptible to single-modal noise. Audio-visual recognition, which integrates multi-modal data to improve model capability and robustness, has therefore attracted attention in recent years and covers speech recognition, speaker recognition, action recognition, emotion recognition, and more.

The consistency of the audio and visual modalities not only underpins multi-modal recognition but also makes it possible to use one modality to enhance the signal of the other. For example, multiple speakers are separated in the visual scene, so visual information about each speaker can be used to aid speech separation. Conversely, audio can supply identity information such as gender and age for reconstructing occluded or missing facial information of a speaker. These observations have inspired researchers to use information from the other modality for denoising or enhancement, as in speech enhancement, sound source separation, and face super-resolution and reconstruction.

Figure 2: Audio-visual boosting tasks

The phenomenon of cross-modal plasticity in cognitive neuroscience and the consistency between the audio and visual modalities have promoted the study of cross-modal perception, which aims to learn and build associations between the audio and visual modalities and has given rise to tasks such as cross-modal generation, transfer, and retrieval.

Humans can predict the information of one modality under the guidance of another, known modality. For example, without hearing any sound, we can roughly infer what a person is saying simply by watching their lip movements. The semantic, spatial, and temporal consistency between audio and vision makes it possible for machines to acquire human-like cross-modal generation abilities.
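One common way to use visual cues for speech separation is to have a network predict a time-frequency mask that is multiplied with the mixture spectrogram. The sketch below contains no audio-visual network; it computes the oracle ideal ratio mask from known sources (a typical training target, assumed here rather than taken from the survey) and applies it, under the simplifying assumption that source magnitudes add in the mixture. The toy spectrogram values are invented.

```python
def ideal_ratio_mask(target_mag, interference_mag, eps=1e-8):
    """Per time-frequency bin mask in [0, 1]: |S| / (|S| + |N|).

    eps avoids division by zero in silent bins.
    """
    return [[s / (s + n + eps) for s, n in zip(s_row, n_row)]
            for s_row, n_row in zip(target_mag, interference_mag)]


def apply_mask(mixture_mag, mask):
    """Element-wise product of a mixture magnitude spectrogram and a mask."""
    return [[m * w for m, w in zip(m_row, w_row)]
            for m_row, w_row in zip(mixture_mag, mask)]


# Toy 2 (frequency) x 3 (time) magnitude spectrograms with hypothetical values.
speaker = [[0.9, 0.0, 0.5], [0.2, 0.8, 0.0]]
other = [[0.1, 0.7, 0.5], [0.0, 0.2, 0.6]]
# Simplifying assumption: mixture magnitudes are the sum of source magnitudes.
mixture = [[s + n for s, n in zip(s_row, n_row)] for s_row, n_row in zip(speaker, other)]

estimate = apply_mask(mixture, ideal_ratio_mask(speaker, other))
print(estimate)
```

In a visually guided separator, features of the target speaker's face or lips would condition the mask prediction, so that the network knows which of the overlapping voices to keep.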
Cross-modal generation tasks currently cover monaural audio generation, stereo generation, video/image generation, depth estimation, and more.

Beyond cross-modal generation, the semantic consistency between audio and vision suggests that learning in one modality can be assisted by semantic information from the other; this is the goal of audio-visual transfer tasks. Semantic consistency also drives the development of cross-modal retrieval tasks.

Figure 3: Cross-modal perception tasks

The human brain integrates the audio-visual information it receives from a scene so that the two modalities cooperate and complement each other, improving scene understanding. It is therefore necessary for machines to pursue human-like perception by exploring audio-visual collaboration, rather than merely fusing or predicting multi-modal information. To this end, researchers have introduced a variety of new challenges in audio-visual learning, including audio-visual component analysis and audio-visual reasoning.

As a starting point for audio-visual collaboration, how to extract representations from the audio and visual modalities effectively without human annotation is an important topic, because high-quality representations benefit a variety of downstream tasks. For audio-visual data, the semantic, spatial, and temporal consistency between the modalities provides a natural supervisory signal for learning audio-visual representations in a self-supervised manner.

Beyond representation learning, collaboration between the audio and visual modalities mainly focuses on scene understanding. Some researchers focus on analyzing and localizing the audio-visual components in a scene, with tasks including sound source localization, audio-visual saliency detection, and audio-visual navigation.
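The natural supervisory signal mentioned above is commonly exploited with a contrastive objective: audio and visual embeddings from the same clip are pulled together, and pairs from different clips are pushed apart. The sketch below is a generic audio-to-visual InfoNCE loss in plain Python, assuming precomputed embeddings; the 2-D vectors and the temperature of 0.1 are illustrative choices, not values from the survey.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce(audio_embs, visual_embs, temperature=0.1):
    """Mean audio-to-visual InfoNCE loss over a batch of paired clips.

    audio_embs[i] and visual_embs[i] come from the same clip (the positive pair);
    every other visual embedding in the batch acts as a negative for audio i.
    """
    n = len(audio_embs)
    total = 0.0
    for i in range(n):
        logits = [cosine(audio_embs[i], visual_embs[j]) / temperature for j in range(n)]
        peak = max(logits)
        log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / n


# Hypothetical 2-D embeddings for a batch of three clips.
audio = [[1.0, 0.1], [0.0, 1.0], [-1.0, 0.2]]
matched = [[0.9, 0.2], [0.1, 1.0], [-1.0, 0.1]]   # visual embeddings in clip order
shuffled = [matched[1], matched[2], matched[0]]    # pairs broken on purpose
print(info_nce(audio, matched) < info_nce(audio, shuffled))
```

The same cosine similarities, ranked instead of fed into a loss, are what a cross-modal retrieval system would use to return the best-matching clips.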
Such tasks establish fine-grained connections between the visual and audio modalities. In addition, many audio-visual tasks assume that the audio and visual content of a whole video is always temporally matched, that is, the picture and the sound are consistent at every moment. In reality this assumption does not always hold. For example, in a "playing basketball" sample, the camera sometimes shows scenes such as the auditorium that have nothing to do with the label "playing basketball." Tasks such as audio-visual event localization and parsing were therefore proposed to further disentangle the audio-visual components of a scene along the time axis.

Humans can also make inferences that go beyond perception in audio-visual scenes. Although the collaboration tasks above have gradually achieved a fine-grained understanding of audio-visual scenes, they do not reason about the audio-visual components. Recently, as audio-visual learning has developed, some researchers have turned their attention to audio-visual reasoning, such as audio-visual question answering and dialogue. These tasks aim to perform cross-modal spatiotemporal reasoning about audio-visual scenes, answering scene-related questions or generating dialogue about the observed scene.

Figure 4: Audio-visual collaboration tasks

This section summarizes and discusses representative datasets in the field of audio-visual learning.

Although the audio and visual modalities have heterogeneous data forms, their inherent consistency spans semantic, spatial, and temporal aspects, laying the foundation for audio-visual research. First, the visual and audio modalities depict the thing of interest from different perspectives, so audio-visual data are considered semantically consistent.
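The basketball example can be made concrete. Under the usual definition of an audio-visual event (a segment counts only if the event is both audible and visible), localization reduces to finding segments where per-segment audio and visual labels agree. The sketch below assumes such labels are already available, which in a real system would be model predictions; the label names and the one-second segmentation are hypothetical.

```python
def audio_visual_event_segments(audio_labels, visual_labels, background="background"):
    """Indices of segments where the same event is both audible and visible.

    A segment counts only when its audio label and visual label agree on a
    class other than the background label.
    """
    return [t for t, (a, v) in enumerate(zip(audio_labels, visual_labels))
            if a == v and a != background]


# Hypothetical one-second segments from a "playing basketball" clip: at t=2 and t=3
# the camera cuts to the auditorium, so the game is heard but not seen.
audio = ["basketball"] * 5
visual = ["basketball", "basketball", "background", "background", "basketball"]
print(audio_visual_event_segments(audio, visual))  # [0, 1, 4]
```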
In audio-visual learning, semantic consistency plays an important role in most tasks. It makes it possible, for example, to combine visual and audio information for better audio-visual recognition and single-modality enhancement. It also plays an important role in cross-modal retrieval and transfer learning.

Second, both vision and audio can help determine the exact spatial location of a sounding object, and this spatial correspondence has a wide range of applications. For example, sound source localization uses it to find the visual location of a sounding object guided by the input audio. In the stereo setting, visual depth can be estimated from binaural audio, or stereo audio can be generated with visual information as an aid.

Finally, visual content and the sounds it produces are usually temporally consistent. This consistency is also widely exploited in audio-visual learning, for example when fusing or predicting multi-modal information in audio-visual recognition or generation tasks.

In practice, these consistencies are not isolated but often appear together in audio-visual scenes, so they are often exploited jointly. The combination of semantic and temporal consistency is the most common case. In simple settings, audio and video clips with the same timestamp are assumed to be consistent in both semantics and timing. This strong assumption can fail, however: the video frames and the background sound at the same timestamp may not be semantically consistent, and such false positives interfere with training. Recently, researchers have begun to address these situations to improve the quality of scene understanding. The combination of semantic and spatial consistency is also common.
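When the same-timestamp assumption is in doubt, one simple check is to search for the lag that best aligns an audio signal with a visual one. The sketch below assumes a hypothetical per-frame audio loudness envelope and a per-frame visual motion-energy signal, and picks the lag that maximizes their mean product over the overlapping frames; it is a plain cross-correlation, not a method proposed in the survey.

```python
def best_offset(audio_env, visual_motion, max_lag):
    """Lag (in frames) that best aligns an audio envelope with visual motion.

    Returns the integer lag L in [-max_lag, max_lag] maximizing the mean of
    audio_env[t] * visual_motion[t - L] over overlapping frames; a positive L
    means the audio lags the video.
    """
    def score(lag):
        pairs = [(audio_env[t], visual_motion[t - lag])
                 for t in range(len(audio_env))
                 if 0 <= t - lag < len(visual_motion)]
        return sum(a * v for a, v in pairs) / len(pairs) if pairs else float("-inf")

    return max(range(-max_lag, max_lag + 1), key=score)


# Hypothetical signals: two visual impacts, and the same pulses in the audio
# arriving two frames later.
visual = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
audio = [0, 0] + visual[:-2]
print(best_offset(audio, visual, max_lag=3))  # 2
```

Averaging over the overlap keeps lags with little overlap from being penalized, but it also makes very large lags noisy, which is why the search is limited to `max_lag` frames.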
For example, successful sound source localization in video relies on semantic consistency to find the visual spatial location corresponding to the input sound. Likewise, in the early stage of audio-visual navigation research, the sounding target emits a steady, repetitive sound; spatial consistency holds, but the semantic content of the visual and audio signals is unrelated. Semantic consistency between the sound and the object at its source location was subsequently introduced to improve the quality of audio-visual navigation.

In general, the semantic, spatial, and temporal consistency of the audio and visual modalities provides solid support for audio-visual learning research. Analyzing and exploiting these consistencies not only improves performance on existing audio-visual tasks but also contributes to a better understanding of audio-visual scenes.

The article summarizes the cognitive basis of the audio-visual modalities and analyzes the phenomenon of human multi-channel perception. On this basis, it divides current audio-visual learning research into three categories: Audio-visual Boosting, Cross-modal Perception, and Audio-visual Collaboration. To review the current development of the field from a more macro perspective, it further proposes a new perspective on audio-visual scene understanding:

1) Basic Scene Understanding. Audio-visual boosting and cross-modal perception tasks usually focus on fusing or predicting consistent audio-visual information. The core of these tasks is either a basic understanding of the audio-visual scene (for example, classifying the action in an input video) or the prediction of cross-modal information (for example, generating audio for a silent video). However, videos of natural scenes often contain diverse audio and visual components that are beyond the scope of these basic scene understanding tasks.
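The localization step described above is often implemented as a similarity map between an audio embedding and the features of each image region. The sketch below assumes both have already been produced by encoders that share an embedding space (the encoders are not shown) and uses cosine similarity; the grid, the feature values, and the "dog" region are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def localize(audio_emb, visual_grid):
    """Similarity map between one audio embedding and a grid of region features.

    visual_grid[r][c] is the feature vector of image region (r, c). Returns the
    map and the (row, col) of the region most similar to the audio.
    """
    sim = [[cosine(audio_emb, feat) for feat in row] for row in visual_grid]
    best = max(((r, c) for r in range(len(sim)) for c in range(len(sim[0]))),
               key=lambda rc: sim[rc[0]][rc[1]])
    return sim, best


# Hypothetical 2x3 grid of 3-D region features; the barking dog sits at (1, 2).
bark = [1.0, 0.0, 0.2]
grid = [[[0.0, 1.0, 0.0], [0.1, 0.9, 0.1], [0.0, 0.2, 1.0]],
        [[0.2, 0.8, 0.0], [0.0, 1.0, 0.3], [0.9, 0.1, 0.3]]]
heatmap, location = localize(bark, grid)
print(location)  # (1, 2)
```

The full `heatmap` is what is typically visualized as a localization map; taking its maximum is the crudest way to turn it into a single location.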
2) Fine-grained Scene Understanding. As mentioned above, audio-visual scenes usually contain rich components in both modalities, so researchers have proposed tasks that disentangle target components. For example, sound source localization aims to mark the region of the image where the target sounding object is located, and audio-visual event localization and parsing determine the target audible or visible events along the time axis. These tasks separate the audio-visual components and decouple the audio-visual scene, yielding a more fine-grained understanding than the previous stage.

3) Causal Interaction Scene Understanding. In audio-visual scenes, humans not only perceive the things of interest around them but also infer the interactions between them. The goal at this stage is closer to human-like perception. Currently only a few tasks have been explored at this stage, with audio-visual question answering and dialogue as representative examples. These tasks explore the associations between the audio and visual components of a video and perform spatiotemporal reasoning.

In general, exploration of these three stages is uneven: from basic scene understanding to causal interaction scene understanding, the diversity and richness of related research gradually decrease. In particular, causal interaction scene understanding is still in its infancy. This points to some potential development directions for audio-visual learning:

1) Task integration. Most research in the audio-visual field is task-oriented, and each individual task models and learns only a specific aspect of the audio-visual scene. However, the understanding and perception of audio-visual scenes do not occur in isolation. For example, sound source localization emphasizes sound-related objects in the visual scene, whereas event localization and parsing identify target events in time.
The two tasks could be integrated to enable a more refined understanding of audio-visual scenes, and integrating multiple audio-visual learning tasks is a direction worth exploring.

2) Deeper understanding of causal interaction scenes. The diversity of research on scene understanding that involves reasoning is still limited. Existing tasks, including audio-visual question answering and dialogue, mostly hold dialogues about events in the video. Deeper kinds of inference, such as predicting the audio or visual events likely to occur next from a previewed scene, deserve further research.

To present its content better, the review is accompanied by a continuously updated project homepage that shows the goals and development of different audio-visual tasks through pictures, videos, and more, so that readers can quickly get to know the field of audio-visual learning.

2 Basics of audio-visual cognition

2.1 Neural pathways of vision and hearing

2.2 Audio-Visual Integration in Cognitive Neuroscience

3 Audio-visual boosting

4 Cross-modal perception

5 Audio-visual collaboration

6 Representative Datasets

7 Trends and New Perspectives

7.1 Semantic, spatial and temporal consistency

7.2 A new perspective on scene understanding
