Using unique 2D materials and machine learning, CV "sees" millions of colors like a human

Human eyes can see millions of colors, and now artificial intelligence can too.

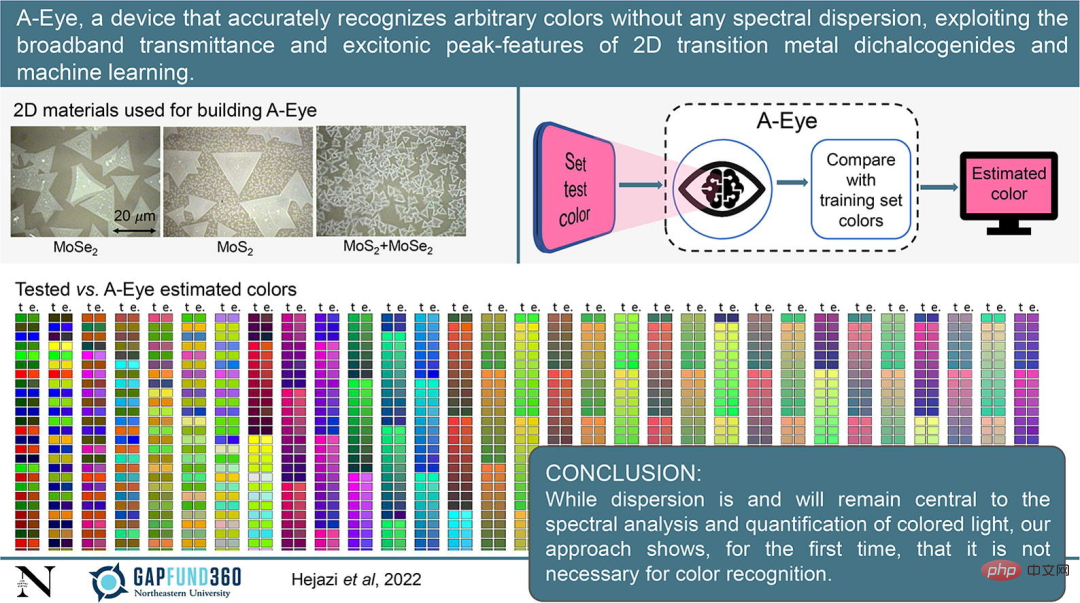

Recently, an interdisciplinary research team at Northeastern University used new artificial intelligence techniques to build a device, A-Eye, that can recognize millions of colors. The work is a significant step forward for machine vision, with potential applications in a range of technologies such as self-driving cars, agricultural sorting, and remote satellite imaging.

The research paper was published in Materials Today.

Paper address: https://www.sciencedirect.com/science/article/abs/pii/S1369702122002255

Swastik Kar, corresponding author of the study and associate professor of physics at Northeastern University, said: "As automation becomes more widespread, the ability of machines to identify the color and shape of objects is becoming more and more important."

The team engineered a 2D material with special quantum properties. When this material is built into the optical window that lets light into the A-Eye machine, it allows the machine to process a rich variety of colors with very high precision.

Furthermore, A-Eye can accurately identify and reproduce the colors it has "seen" with zero deviation from the original spectrum. This is made possible by machine learning algorithms developed by an AI research team led by Sarah Ostadabbas, assistant professor of electrical and computer engineering at Northeastern University.

At the core of the work are the quantum and optical properties of a class of materials called transition metal dichalcogenides (TMDs). These materials have long been considered to hold enormous potential, especially for sensing and energy storage applications.

Research Overview

When identifying colors, machines typically rely on conventional RGB (red, green, and blue) filters to break a color down into its component parts, and then use that information to essentially guess at, and reproduce, the original color. When you point a digital camera at a colored object and take a picture, the light from that object passes through a set of detectors with filters in front of them that separate the light into these raw RGB colors.
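To make the filter description concrete, here is a minimal Python sketch of what an RGB detector reports. It assumes idealised Gaussian transmission curves (centres at 600, 540, and 450 nm, width 40 nm) on a 400 to 700 nm grid; these curves, the grid, and the names `gaussian`, `FILTERS`, and `rgb_response` are hypothetical illustrations, not the response of any real camera. Each detector simply sums the light its filter lets through, so a whole spectrum is reduced to three numbers.

```python
import math

# Assumed wavelength grid: 400-700 nm in 10 nm steps (31 samples).
WAVELENGTHS = list(range(400, 701, 10))

def gaussian(center, width):
    """Hypothetical filter transmission curve: a Gaussian bump, not a real sensor's response."""
    return [math.exp(-((w - center) / width) ** 2 / 2) for w in WAVELENGTHS]

# Illustrative red, green and blue filters; real camera filters have other shapes.
FILTERS = {
    "R": gaussian(600, 40),
    "G": gaussian(540, 40),
    "B": gaussian(450, 40),
}

def rgb_response(spectrum):
    """Each detector sums the light its filter transmits (a discrete integral over wavelength)."""
    return {name: sum(t * s for t, s in zip(curve, spectrum)) for name, curve in FILTERS.items()}

# A reddish spectrum: energy rises linearly toward long wavelengths.
reddish = [0.2 + 0.8 * (w - 400) / 300 for w in WAVELENGTHS]
print(rgb_response(reddish))  # R reading largest, B smallest
```

Whatever the spectrum looks like, only these three sums survive; everything the detector "knows" about the color is in them.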

Kar said, "You can think of color filters as funnels that channel visual information, or data, into separate boxes, which then assign artificial numbers to natural colors." But breaking a color down into only three components (red, green, and blue) has limitations, since physically different spectra can produce the same three readings.
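The limitation can be demonstrated directly: any change to a spectrum that is orthogonal to all three filter curves is invisible to the detector. The minimal Python sketch below, assuming the same hypothetical Gaussian filters as a stand-in for real RGB filters, uses Gram-Schmidt projection to strip a wiggly pattern of everything the filters can see, then adds what is left to a flat spectrum. The result, a so-called metamer, is a clearly different spectrum that yields the same three numbers. All names (`responses`, `invisible_part`, and so on) are illustrative.

```python
import math

WAVELENGTHS = list(range(400, 701, 10))  # assumed 400-700 nm grid, 10 nm steps

def gaussian(center, width):
    # Hypothetical Gaussian transmission curve, not a real camera filter.
    return [math.exp(-((w - center) / width) ** 2 / 2) for w in WAVELENGTHS]

FILTERS = [gaussian(600, 40), gaussian(540, 40), gaussian(450, 40)]  # illustrative R, G, B

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def responses(spectrum):
    """The three numbers an RGB sensor would report for this spectrum."""
    return [dot(f, spectrum) for f in FILTERS]

def orthonormal_filter_basis():
    """Gram-Schmidt: an orthonormal basis spanning the three filter curves."""
    basis = []
    for f in FILTERS:
        v = list(f)
        for b in basis:
            c = dot(v, b)
            v = [x - c * y for x, y in zip(v, b)]
        norm = math.sqrt(dot(v, v))
        basis.append([x / norm for x in v])
    return basis

def invisible_part(seed):
    """Remove every component of `seed` the filters respond to; the rest reads as zero."""
    out = list(seed)
    for b in orthonormal_filter_basis():
        c = dot(out, b)
        out = [x - c * y for x, y in zip(out, b)]
    return out

flat = [1.0] * len(WAVELENGTHS)
wiggle = invisible_part([math.sin(w / 7.0) for w in WAVELENGTHS])
scale = 0.5 / max(abs(x) for x in wiggle)  # keeps every value of the new spectrum in [0.5, 1.5]
metamer = [s + scale * n for s, n in zip(flat, wiggle)]

print(responses(flat))
print(responses(metamer))  # same three numbers up to rounding, yet a different spectrum
```

With 31 spectral samples and only 3 readings, there are many such invisible directions, which is why three numbers cannot pin down a spectrum.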

Instead of color filters, Kar and his team used "transmissive windows" made of a unique 2D material.

Kar says they are getting the machine, known as A-Eye, to recognize colors in a completely different way. When colored light hits the detector, rather than breaking it down into its main red, green, and blue components or looking only for those components, the researchers use the entire spectrum of information.

Crucially, the researchers used techniques to modify and encode these components and store them in different ways. This gives them a set of numbers that lets them identify the original color in a very different way from the conventional approach.
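As a rough illustration of why several windows can carry more of the spectrum than three filters, here is a minimal Python sketch using hypothetical, idealised long-pass windows: each one transmits all light at or above its cutoff wavelength. This is not the transmission behaviour of the A-Eye material, which the article does not specify; the cutoffs, grid, and function names are assumptions. Because neighbouring windows differ by exactly one wavelength band, differences between their readings recover how much light fell in each band.

```python
WAVELENGTHS = list(range(400, 701, 10))  # assumed 400-700 nm grid, 10 nm steps

# Hypothetical, idealised windows: each passes all light at or above its cutoff
# (a long-pass edge) and blocks everything shorter.
CUTOFFS = list(range(400, 701, 50))  # 400, 450, ..., 700 nm -> 7 windows

def window_readings(spectrum):
    """One detector reading per window: the total light that passes through it."""
    return [sum(s for w, s in zip(WAVELENGTHS, spectrum) if w >= c) for c in CUTOFFS]

def band_energies(readings):
    """Band i covers [CUTOFFS[i], CUTOFFS[i+1]); neighbouring readings differ by exactly that band."""
    bands = [a - b for a, b in zip(readings, readings[1:])]
    bands.append(readings[-1])  # light at or above the last cutoff
    return bands

# A narrow green line at 520 nm.
spike = [1.0 if w == 520 else 0.0 for w in WAVELENGTHS]
print(window_readings(spike))                 # → [1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
print(band_energies(window_readings(spike)))  # → [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
```

The seven readings encode where in the spectrum the light sits (here the 500 to 550 nm band), information that three broad RGB sums would blur together.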

In the picture below, the upper left shows the 2D materials used to build A-Eye, the upper right shows A-Eye's workflow, and the bottom compares the test colors with A-Eye's estimates.

Co-author Sarah Ostadabbas said that as light passes through these transmissive windows, A-Eye converts the color into data, and built-in machine learning models look for patterns in that data to better identify the colors A-Eye is analyzing.

A-Eye can also keep improving its color estimates by adding each correct guess to its training data set.
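The two ideas above, a learned mapping from window readings to a color and a training set that grows with confirmed guesses, can be sketched with a simple k-nearest-neighbour estimator. This is a hypothetical stand-in chosen for brevity, not the model the A-Eye team used; the class name `ColorEstimator`, the RGB targets, and the three-value readings in the usage example are all illustrative assumptions.

```python
import math

class ColorEstimator:
    """Hypothetical k-nearest-neighbour estimator mapping window readings to an RGB color.

    Illustrative stand-in only; not the machine learning model used for A-Eye.
    """

    def __init__(self, k=3):
        self.k = k
        self.samples = []  # list of (readings, rgb) pairs

    def add(self, readings, rgb):
        """Add a labelled example, e.g. an estimate that has been confirmed as correct."""
        self.samples.append((list(readings), tuple(rgb)))

    def estimate(self, readings):
        """Average the colors of the (up to) k samples whose readings are closest."""
        if not self.samples:
            raise ValueError("no training data")
        nearest = sorted(self.samples, key=lambda s: math.dist(s[0], readings))[: self.k]
        return tuple(sum(rgb[i] for _, rgb in nearest) / len(nearest) for i in range(3))

est = ColorEstimator(k=1)
est.add([1.0, 0.2, 0.1], (255, 0, 0))
est.add([0.1, 1.0, 0.2], (0, 255, 0))
print(est.estimate([0.9, 0.3, 0.1]))    # → (255.0, 0.0, 0.0)

# Once an estimate is confirmed, feeding it back lets later lookups use it.
est.add([0.5, 0.5, 0.1], (128, 128, 0))
print(est.estimate([0.55, 0.45, 0.1]))  # → (128.0, 128.0, 0.0)
```

A nearest-neighbour model makes "adding correct guesses to the training set" literal: each confirmed sample is stored and immediately influences future estimates, with no separate retraining step.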

Introduction to the first author

The first author of this study, Davoud Hejazi, is currently a senior data scientist at Titan Advanced Energy Solutions, focusing on statistical modeling, machine learning, signal processing, image processing, cloud computing, and data visualization.

In May of this year, he received his Ph.D. in physics from Northeastern University. His doctoral thesis was titled "Dispersion-Free Accurate Color Estimation using Layered Excitonic 2D Materials and Machine Learning."

Thesis address: https://repository.library.northeastern.edu/files/neu:4f171c96c

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

In layman’s terms, a machine learning model is a mathematical function that maps input data to a predicted output. More specifically, a machine learning model is a mathematical function that adjusts model parameters by learning from training data to minimize the error between the predicted output and the true label. There are many models in machine learning, such as logistic regression models, decision tree models, support vector machine models, etc. Each model has its applicable data types and problem types. At the same time, there are many commonalities between different models, or there is a hidden path for model evolution. Taking the connectionist perceptron as an example, by increasing the number of hidden layers of the perceptron, we can transform it into a deep neural network. If a kernel function is added to the perceptron, it can be converted into an SVM. this one

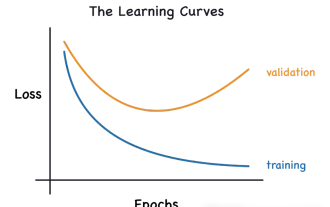

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass