The past ten years have been the "golden decade" of deep learning. It has completely changed the way humans work and play, and has been widely used in industries such as medical care, education, and product design. All of this has been inseparable from advances in computing hardware, especially innovations in GPUs.

The successful implementation of deep learning technology depends on three major elements: The first is the algorithm. Most deep learning algorithms such as deep neural networks, convolutional neural networks, backpropagation algorithms, and stochastic gradient descent were proposed in the 1980s or even earlier.

The second is the data set. The data set used to train a neural network must be large enough for the neural network to outperform other techniques. It was not until the early 21st century that big data sets such as Pascal and ImageNet became available. The third is hardware. Only with mature hardware development can the time required to train large neural networks with large data sets be controlled within a reasonable range. The industry generally believes that a more "reasonable" training time is about two weeks.

At this point, a prairie fire has ignited in the field of deep learning. If algorithms and data sets are regarded as the mixed fuel of deep learning, then GPU is the spark that ignites them. When powerful GPUs can be used to train networks, deep learning technology becomes practical.

Since then, deep learning has replaced other algorithms and has been widely used in fields such as image classification, image detection, speech recognition, natural language processing, time series analysis, and even Go and chess. As deep learning penetrates every aspect of human life, model training and inference place increasingly heavy demands on hardware.

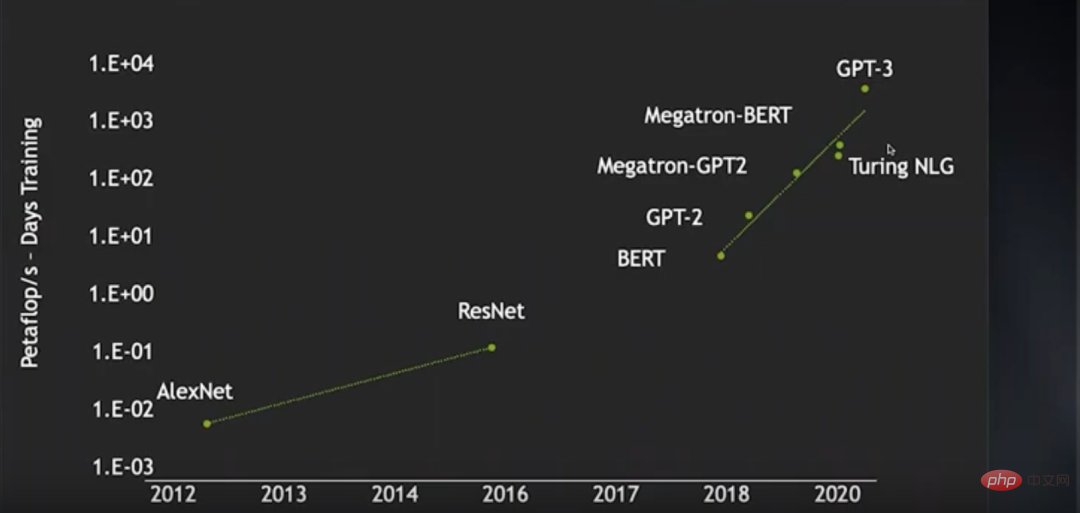

From the emergence of AlexNet in 2012 to the advent of ResNet in 2016, the compute consumed in training image neural networks (in petaflop/s-days) increased by nearly 2 orders of magnitude, while from BERT in 2018 to GPT-3 in recent years, training compute increased by nearly 4 orders of magnitude. During this period, advances in certain techniques significantly improved the training efficiency of neural networks and saved a great deal of compute; otherwise the increase would have been even more dramatic.

Researchers want to train larger language models on larger unsupervised language datasets. Yet even with a 4,000-node GPU cluster, the number of operations that can be processed within a reasonable training time is still very limited. This means that how fast deep learning develops depends on how fast hardware develops.

Nowadays, deep learning models are not only becoming more and more complex but are also being applied more and more widely, so the performance of deep learning needs to keep improving. How, then, can deep learning hardware continue to improve? NVIDIA chief scientist Bill Dally is undoubtedly an authority on this question. Before the release of the H100 GPU, he reviewed the current state of deep learning hardware in a speech and discussed several directions for continuing to scale performance now that Moore's Law is failing. The OneFlow community compiled this article from that speech.

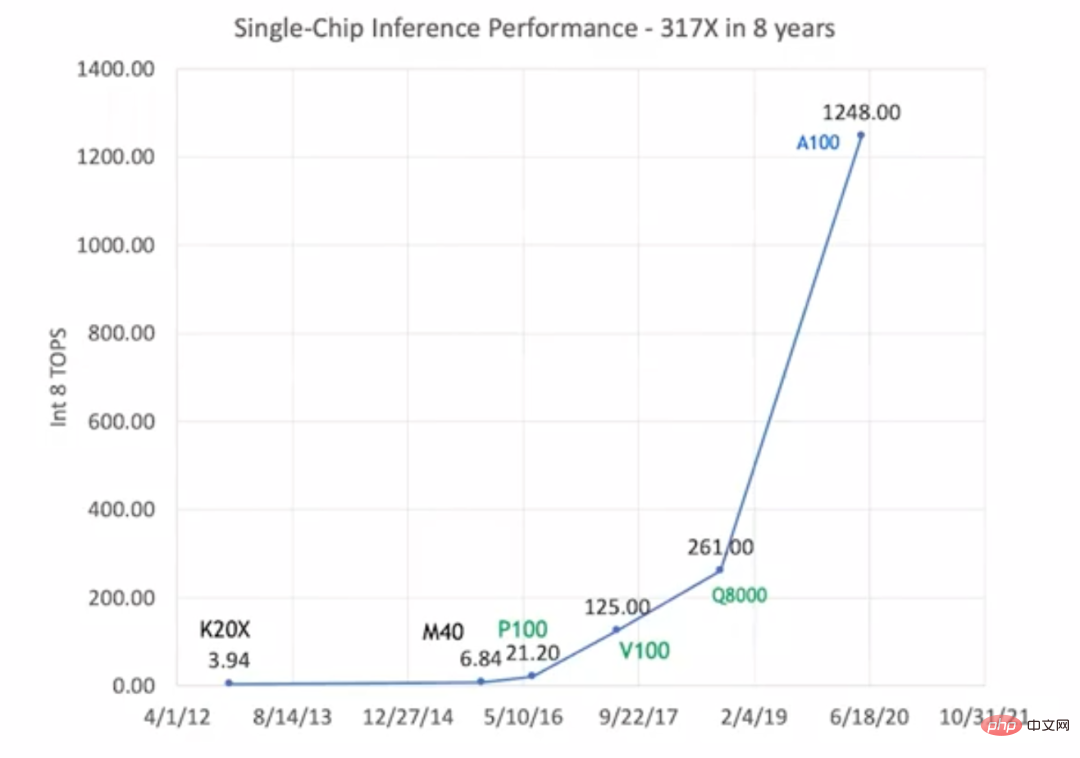

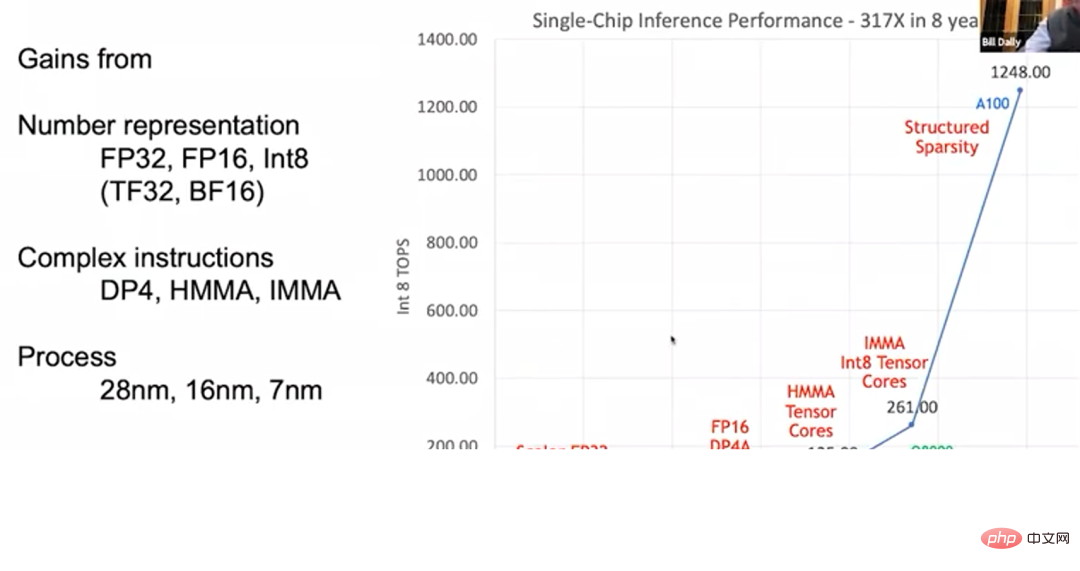

From the K20X in 2012 to the A100 in 2020, GPU inference performance increased to 317 times its original value. This is what we call "Huang's Law", and this rate of development is much faster than "Moore's Law".

But unlike "Moore's Law", under "Huang's Law" the improvement in GPU performance does not depend entirely on progress in process technology. The figure above marks the GPUs in black, green, and blue, indicating three different process technologies. The early K20X and M40 used the 28nm process; the P100, V100 and Q8000 used the 16nm process; and the A100 used the 7nm process. Advances in process technology can only raise GPU performance to 1.5 or 2 times its original level. Most of the overall 317-fold improvement is attributed to better GPU architecture and circuit design.
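As a rough illustration of that split, the short sketch below divides the overall gain by the process gain. It assumes the two contributions multiply, which is a simplification introduced here and not stated in the talk; the function name is hypothetical.

```python
def non_process_gain(total_gain: float, process_gain: float) -> float:
    """Gain left for architecture and circuits, assuming gains multiply.

    The multiplicative split is an assumption made for illustration only.
    """
    return total_gain / process_gain


# 317x is the overall figure quoted above; 1.5x and 2x bound the process gain.
for process_gain in (1.5, 2.0):
    remaining = non_process_gain(317.0, process_gain)
    print(f"process {process_gain}x -> architecture and circuits roughly {remaining:.1f}x")
```

Under this assumption, architecture and circuit design would account for a gain on the order of 150 to 200 times.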

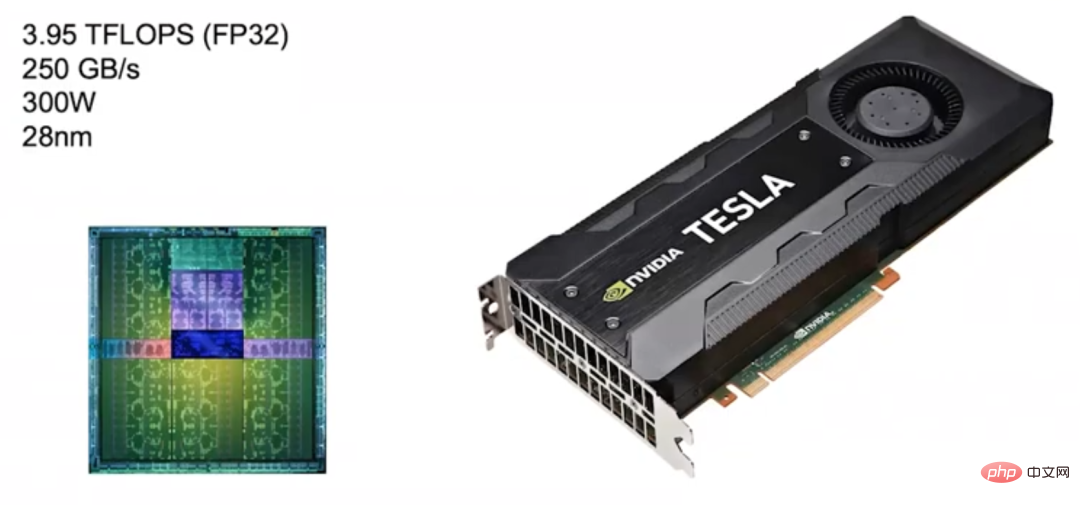

In 2012, NVIDIA launched a Kepler architecture GPU, but it was not designed specifically for deep learning. Nvidia only started to get involved with deep learning in 2010, and at that time it had not yet considered customizing GPU products for deep learning.

## Kepler (2012)

Kepler's target usage scenarios were image processing and high-performance computing, though it was mainly used for image processing. It is therefore characterized by high floating-point throughput, with FP32 (single-precision floating point) speed of nearly 4 TFLOPS and memory bandwidth of 250 GB/s. Given Kepler's excellent performance, NVIDIA also treats it as the baseline for its own products.

## Pascal (2016)

Later, in 2016, NVIDIA launched the Pascal architecture, whose design is better suited to deep learning. After some research, NVIDIA found that many neural networks can be trained with FP16 (half-precision floating point), so most models of the Pascal architecture support FP16 computation.

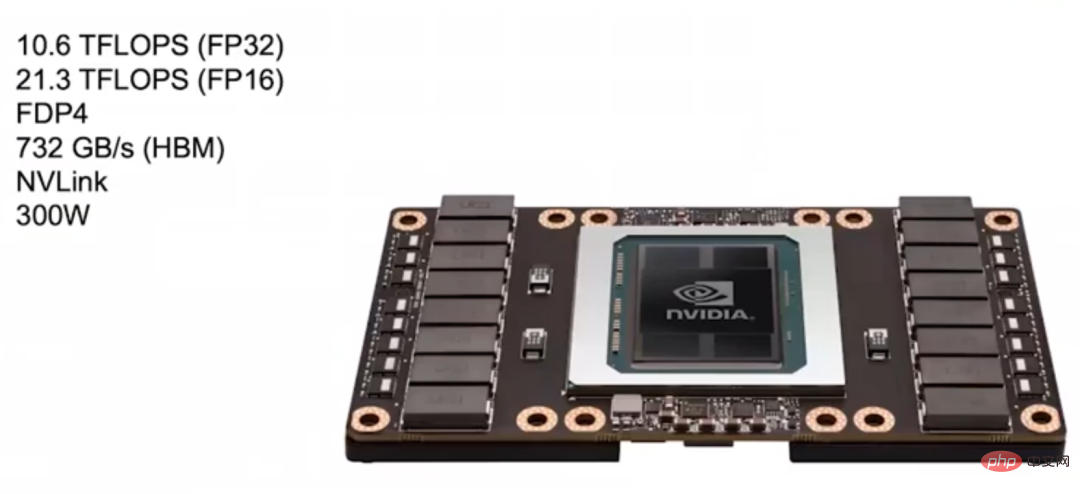

The FP32 speed of the Pascal GPU in the picture below reaches 10.6 TFLOPS, much higher than the earlier Kepler GPU, and its FP16 computation is faster still, at twice the FP32 speed.

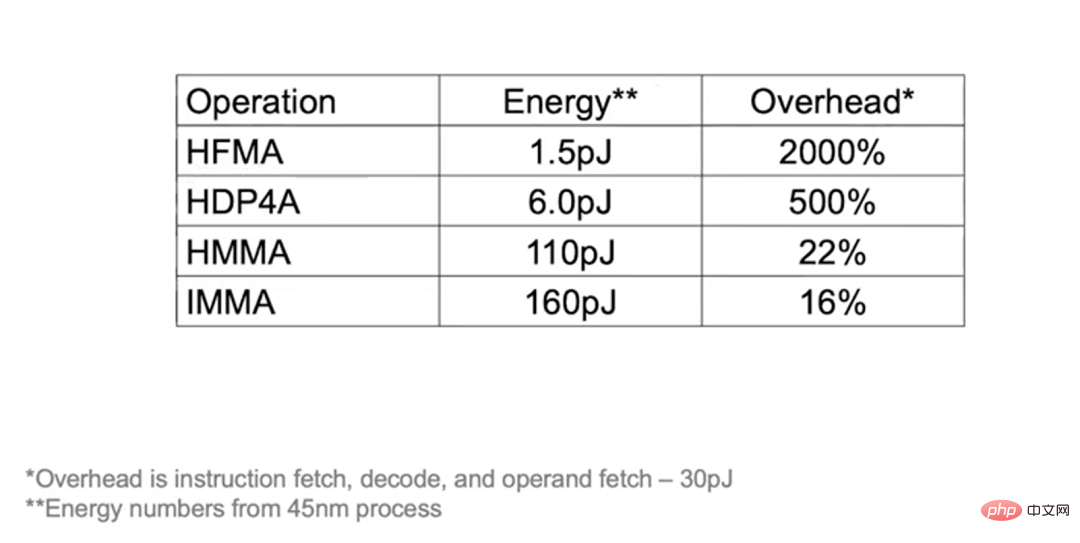

The Pascal architecture also supports more complex instructions, such as FDP4, so that the overhead of fetching the instruction, decoding it, and fetching operands can be amortized over 8 arithmetic operations. The earlier fused multiply-add (FMA) instruction could only amortize that overhead over two arithmetic operations, so Pascal spends less energy on overhead and more on the mathematical operations themselves.

The Pascal architecture also uses HBM video memory, with a bandwidth of 732 GB/s, which is 3 times that of Kepler. The reason for increasing bandwidth is that memory bandwidth is the main bottleneck in improving deep learning performance. In addition, Pascal uses NVLink to connect more machines and GPU clusters to better complete large-scale training.

The DGX-1 system launched by NVIDIA for deep learning uses 8 GPUs based on the Pascal architecture.

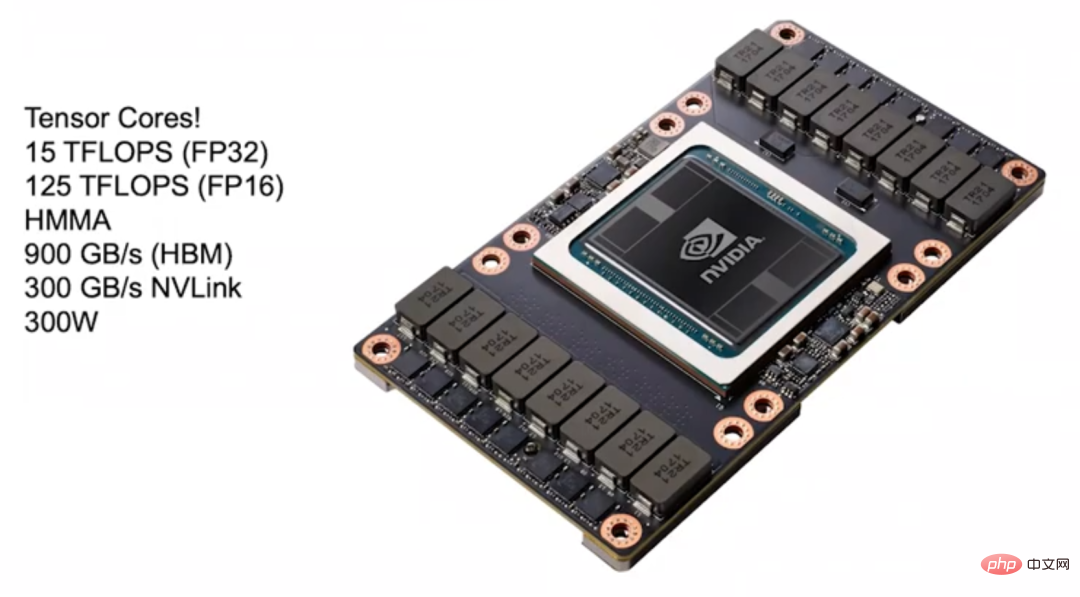

## Volta (2017)

In 2017, NVIDIA launched the Volta architecture, which is designed for deep learning. One of its design focuses is to better amortize instruction overhead. Volta introduces the Tensor Core for deep learning acceleration, exposed to the GPU in the form of instructions. The key instruction is HMMA (Half-Precision Matrix Multiply-Accumulate), which multiplies two 4×4 FP16 matrices and accumulates the result into an FP32 matrix, an operation that is very common in deep learning. With the HMMA instruction, amortization reduces the overhead of fetching and decoding instructions to 10% to 20% of what it was.
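The arithmetic performed by an HMMA-style instruction can be sketched in plain Python. This is only a numerical illustration under stated assumptions, not a model of the hardware: the function names are hypothetical, FP16 and FP32 rounding are emulated with the standard `struct` module, and the accumulation order is an arbitrary choice.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def hmma_4x4(a, b, c):
    """Return D = A x B + C for 4x4 matrices.

    A and B are rounded to FP16 before multiplying; the running sum is
    kept in FP32, mirroring the FP16-multiply / FP32-accumulate split.
    """
    n = 4
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])
            for k in range(n):
                acc = to_fp32(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            d[i][j] = acc
    return d


# One call covers 4 * 4 * 4 = 64 multiplies and 64 adds.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]
print(hmma_4x4(identity, ones, ones)[0])
```

A single instruction doing 128 arithmetic operations is what lets the fixed fetch and decode cost shrink to a small fraction of the total.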

What remains is the payload, that is, the arithmetic itself. To beat the Tensor Core, you would have to work on the payload. In the Volta architecture, a large share of the energy and area is already devoted to deep learning acceleration, so even sacrificing programmability would not bring much additional performance.

Volta also upgraded HBM video memory, with a memory bandwidth of 900 GB/s, and also used a new version of NVLink, which can double the bandwidth when building a cluster. In addition, the Volta architecture also introduces NVSwitch, which can connect multiple GPUs. In theory, NVSwitch can connect up to 1024 GPUs to build a large shared memory machine.

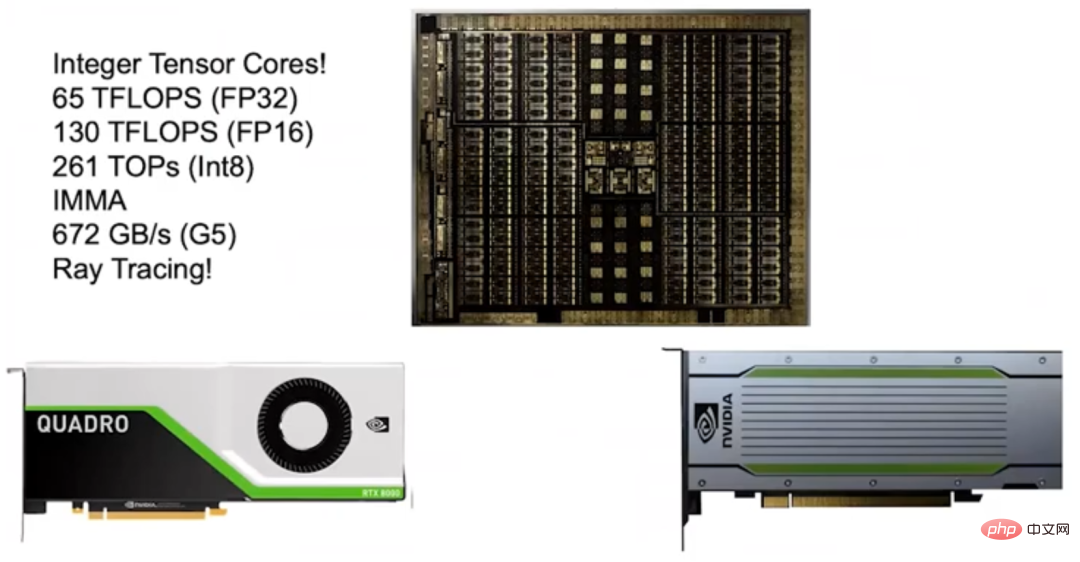

## Turing (2018)

In 2018, NVIDIA launched the Turing architecture. Following the great success of the Tensor Core, NVIDIA introduced the Integer Tensor Core. Most neural networks can be trained with FP16, and inference needs neither very high precision nor a large dynamic range, so Int8 is enough. The Integer Tensor Core introduced in the Turing architecture therefore doubled performance.

The Turing architecture also uses GDDR memory to support NLP models and recommendation systems that have high bandwidth requirements. At the time, some people questioned that the energy efficiency of the Turing architecture was not as good as other accelerators on the market. But if you calculate carefully, you will find that the Turing architecture is actually more energy efficient, because Turing uses G5 video memory, while other accelerators use LPDDR memory. In my opinion, choosing G5 memory is the right decision because it can support models with high bandwidth requirements that similar products cannot support.

What I am most proud of in the Turing architecture is that it is also equipped with RT Cores that support ray tracing. NVIDIA only started researching the RT Core in 2013 and officially launched it just five years later.

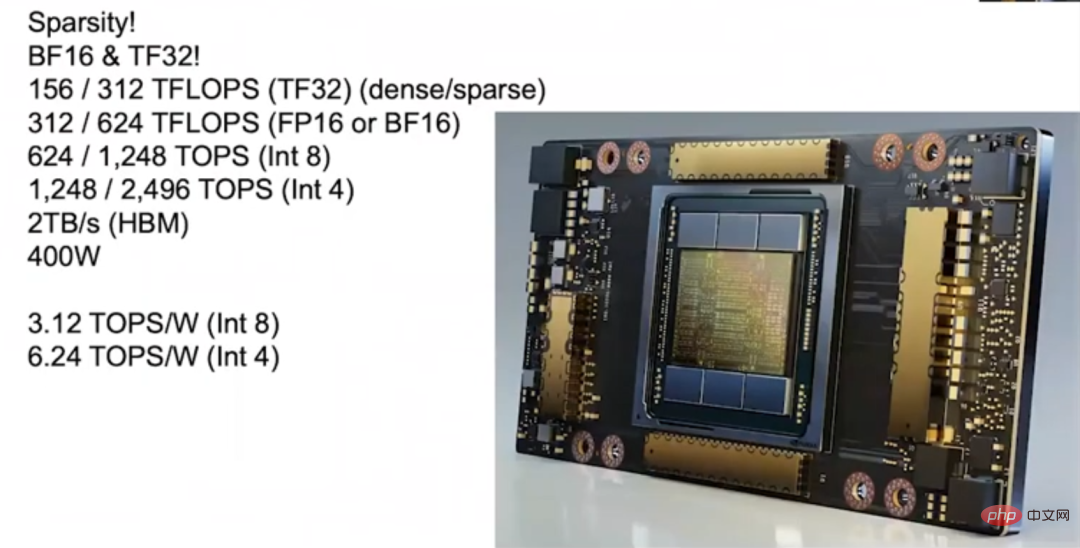

## Ampere (2020)

In 2020, NVIDIA released the Ampere architecture, which gave the A100 released that year a leap in performance, with an inference speed of more than 1,200 teraflops. One of the great advantages of the Ampere architecture is that it supports sparsity. We found that most neural networks can be sparsified, that is, the network can be "pruned" and a large number of weights set to 0 without affecting its accuracy. But different neural networks can be sparsified to different degrees, which is a bit tricky. For example, without losing accuracy, the density of convolutional neural networks can be reduced to 30% to 40%, while that of fully connected neural networks can be reduced to 10% to 20%.

The traditional view is that, because sparse matrix packages carry a large overhead, they are not worth using over dense matrix packages unless the density can be reduced below 10%. We first collaborated with Stanford University to study sparsity, and later built very good machines that run efficiently when the matrix density is 50%. But it remained difficult to make sparse matrices beat dense matrices that use power gating, and this is where we kept trying to break through.

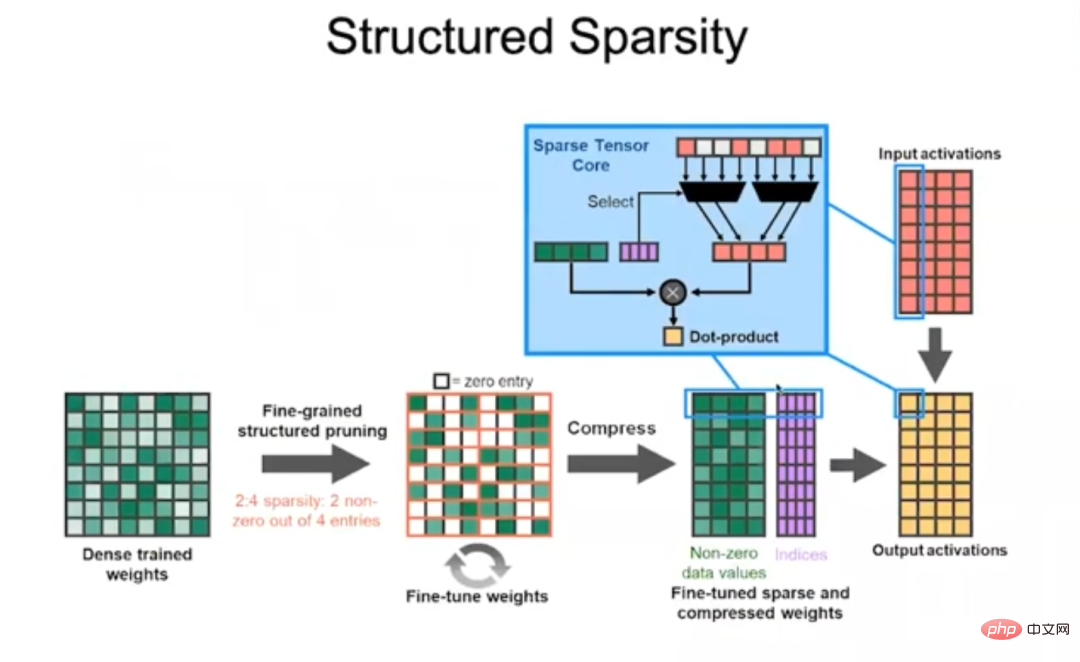

In the end, we overcame the problem and developed Ampere, and the secret is structured sparsity.

## Structured Sparsity

The Ampere architecture requires that, in every 4 values of the matrix, no more than 2 are non-zero; the weights are then compressed by removing the zero values. A code word stored with the compressed weights records which weights were kept, and the same code word selects which input activations those non-zero weights should be multiplied by; the products are then summed to complete the dot product. This approach is very efficient, allowing the Ampere architecture to double performance on most neural networks.
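The 2-out-of-4 scheme can be illustrated with a small pure-Python sketch. It assumes magnitude-based pruning (keep the two largest weights in each group of four) and stores each kept weight's position as a small index that plays the role of the code word; the function names and the pruning criterion are assumptions for illustration, not NVIDIA's implementation.

```python
def prune_2_of_4(weights):
    """Keep the 2 largest-magnitude weights in every group of 4.

    Returns (values, indices): the kept weights and, for each one, its
    position (0..3) inside its group, which acts as the code word.
    """
    assert len(weights) % 4 == 0
    values, indices = [], []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        by_magnitude = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)
        keep = sorted(by_magnitude[:2])
        values.extend(group[i] for i in keep)
        indices.extend(keep)
    return values, indices


def sparse_dot(values, indices, activations):
    """Dot product that touches only the kept weights.

    Each index selects which activation in the group of 4 is multiplied,
    much like a multiplexer in front of the multiplier.
    """
    total = 0.0
    for n, (w, pos) in enumerate(zip(values, indices)):
        group = n // 2  # two kept weights per group of four
        total += w * activations[4 * group + pos]
    return total


weights = [0.1, -0.9, 0.0, 0.5, 0.7, 0.0, 0.0, -0.2]
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
values, indices = prune_2_of_4(weights)
print(values, indices, sparse_dot(values, indices, activations))
```

Only half as many multiplications are issued as in the dense dot product, which is where the doubling of throughput comes from.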

In addition, the Ampere architecture has many other innovations. For example, Ampere has a built-in TF32 (TensorFloat-32) format, which combines the 8-bit exponent of FP32 with the 10-bit mantissa of FP16. Ampere also supports the BFLOAT16 format, which has the same exponent bits as FP32 but fewer mantissa bits, so it can be regarded as a reduced version of FP32. All of these data formats support structured sparsity, so whether you use FP16 and TF32 for training or Int8 and Int4 for inference, you get the high performance that structured sparsity brings.
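The relationship between these formats can be seen by masking FP32 bit patterns. The sketch below keeps the sign and 8 exponent bits and only the top 10 (TF32) or 7 (BFLOAT16) mantissa bits. It truncates rather than rounds, which is a simplification chosen here for brevity; the helper names are hypothetical.

```python
import struct


def fp32_bits(x: float) -> int:
    """Return the 32-bit pattern of x stored as IEEE single precision."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def from_bits(bits: int) -> float:
    """Interpret a 32-bit pattern as an IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def truncate_mantissa(x: float, kept_bits: int) -> float:
    """Keep the sign, the 8 exponent bits, and the top kept_bits mantissa bits.

    Truncation (not rounding) is an assumption made to keep the sketch short.
    """
    drop = 23 - kept_bits  # FP32 has 23 explicit mantissa bits
    mask = (0xFFFFFFFF >> drop) << drop
    return from_bits(fp32_bits(x) & mask)


def to_tf32(x: float) -> float:
    return truncate_mantissa(x, 10)  # 1 sign + 8 exponent + 10 mantissa


def to_bf16(x: float) -> float:
    return truncate_mantissa(x, 7)   # 1 sign + 8 exponent + 7 mantissa


x = 1.0 + 2.0 ** -10
print(to_tf32(x), to_bf16(x))
```

Both formats keep the full FP32 exponent, so they cover the same dynamic range; they differ only in how many mantissa bits survive.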

As Ampere gets better and better at quantization, it can be applied to many neural networks and ensure high performance. Ampere has 6 HBM stacks, and the bandwidth of HBM memory has also been upgraded, reaching 2TB/s. During end-to-end inference, Ampere's computing capabilities can reach 3.12 TOPS/W (Int8) and 6.24 TOPS/W (Int4).

## Three Major Factors for Improving GPU Inference Performance

Summarizing the past development of deep learning hardware, the 317-fold increase in GPU inference performance over 8 years is mainly attributed to three major factors:

First and most important is the development of number representation.

The precision of FP32 is higher than needed, which makes arithmetic operations too expensive. Later, the Turing and Ampere architectures supported Int8, which greatly improved the GPU's performance per watt. When Google published the paper announcing TPU1, it stated that the advantage of TPU1 was that it is specifically tailored for machine learning. In fact, Google was comparing its own TPU1 with NVIDIA's Kepler (as mentioned before, Kepler was not specifically designed for deep learning), so the advantage of TPU1 can be said to be the advantage of Int8 over FP32.

Secondly, the GPU supports complex instructions.

The Pascal architecture added dot product instructions, and then the Volta, Turing and Ampere architectures added matrix multiply instructions so that the overhead can be amortized. Keeping a programmable engine in the GPU brings many benefits, and it can still be as efficient as an accelerator, because each instruction does so much work that the overhead per instruction is almost negligible.

Finally, the progress of process technology.

The development of the chip manufacturing process from 28 nanometers to today's 7 nanometers has made a certain contribution to the improvement of GPU performance. The following example can help you better understand the effect of amortizing overhead: if you perform an HFMA operation, the "multiply" and "add" together take only 1.5 pJ (picojoules), while fetching the instruction, decoding it, and fetching the operands costs 30 pJ of overhead, so the overhead is as high as 2000% of the useful work.

If you perform an HDP4A operation, the overhead is amortized over 8 operations, reducing it to 500%. For an HMMA operation, most of the energy goes to the payload, so the overhead is only 22%, and for IMMA it is even lower, at 16%. So although keeping programmability adds a small amount of overhead, the performance gained from these instruction designs matters far more.
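The percentages above follow from dividing the fixed overhead by the energy of the useful arithmetic. The sketch below reproduces the HFMA and HDP4A figures; the 6 pJ payload for HDP4A is derived here by assuming the arithmetic energy scales linearly with the number of operations (8 instead of 2), which is an assumption and not a number given in the talk.

```python
OVERHEAD_PJ = 30.0        # fetch + decode + operand fetch, as quoted above
HFMA_PAYLOAD_PJ = 1.5     # one multiply and one add (2 operations)


def overhead_percent(payload_pj: float, overhead_pj: float = OVERHEAD_PJ) -> float:
    """Instruction overhead as a percentage of the useful arithmetic energy."""
    return 100.0 * overhead_pj / payload_pj


# Assumption: payload energy grows linearly with the operation count.
hdp4a_payload_pj = HFMA_PAYLOAD_PJ * (8 / 2)

print(f"HFMA : {overhead_percent(HFMA_PAYLOAD_PJ):.0f}% overhead")
print(f"HDP4A: {overhead_percent(hdp4a_payload_pj):.0f}% overhead")
```

The same fixed cost spread over four times as much arithmetic gives a quarter of the relative overhead, which is the whole argument for complex instructions.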

The above talks about the performance of a single GPU, but training a large language model obviously requires multiple GPUs, so the connection between GPUs must also be improved. We introduced NVLink in the Pascal architecture, and later the Volta architecture adopted NVLink 2, and the Ampere architecture adopted NVLink 3. The bandwidth of each generation of architecture doubled. In addition, we launched the first generation of NVSwitch on the Volta architecture and the second generation on the Ampere architecture. Through NVLink and NVSwitch, extremely large GPU clusters can be built. In addition, we also launched the DGX box.

## DGX Box

In 2020, Nvidia acquired Mellanox, so it can now provide a complete set of data center solutions including Switches and Interconnect for building large GPU clusters. In addition, we are equipped with the DGX SuperPOD, which ranks in the top 20 on the AI Performance Record 500 list. In the past, users needed to customize machines, but now they only need to purchase a pre-configured machine that can deploy DGX SuperPOD to get the high performance brought by DGX SuperPOD. Additionally, these machines are well suited for scientific computing.

In the past, it took several months to train a single large language model on a single machine. Building a GPU cluster greatly improves training efficiency, so optimizing the interconnect of a GPU cluster is just as important as improving the performance of a single GPU.

Let's talk about NVIDIA's accelerator research and development work. NVIDIA treats accelerators as vehicles for testing new technologies, and the successful ones eventually make their way into mainstream GPUs. An accelerator can be understood this way: it has a matrix multiply unit fed by a memory hierarchy. The goal is then to have most of the energy go to the matrix multiply computation rather than to data movement.

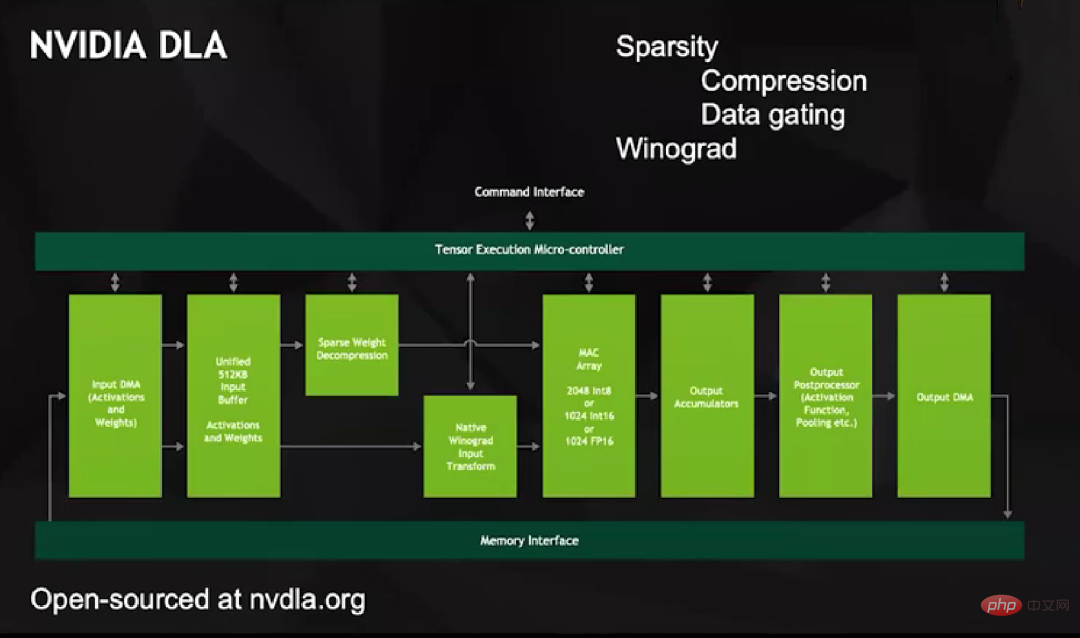

To achieve this goal, we launched the NVIDIA DLA project around 2013. It is an open source product with very complete supporting facilities, no different from other deep learning accelerators. But DLA has a large MAC array that supports 2048 Int8, 1024 Int16 or 1024 FP16 operations.

DLA has two unique features. First, it supports sparsity. We started with the low-hanging fruit: all data transfers, including from DMA to the Unified Buffer and from the Unified Buffer to the MAC array, involve only non-zero values. An encoding records which elements are present, and these elements are then decompressed and fed into the MAC array for computation.

The way DLA decompresses is clever: it does not feed zero values into the MAC array, because that would toggle a whole set of data lines to zero. Instead, it uses a separate line to signal a zero value. When a multiplier sees that line asserted on either input, it holds the data inside the multiplier unchanged and sends out a zero, so no data lines toggle. This data gating is very energy efficient.

The second is support for the Winograd transform at the hardware level. If you want to do a convolution with, say, an n×n convolution kernel, you need n² multiply-adds per output in the spatial domain, but if you do it in the frequency domain, you only need point-by-point multiplication. Therefore, large convolution kernels are more efficient in the frequency domain than in the spatial domain. Depending on the size of the convolution kernel, the Winograd transform can bring a 4x performance improvement to some image networks.
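To make the saving concrete, here is a sketch of the smallest standard case, the one-dimensional Winograd minimal filtering algorithm F(2,3), which computes two outputs of a 3-tap filter with 4 multiplications instead of 6. It is shown only as an illustration of the idea; the talk does not say which tile sizes DLA uses, and the function names are hypothetical.

```python
def direct_f23(d, g):
    """Two outputs of a 3-tap filter the direct way: 6 multiplications."""
    return [
        d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
        d[1] * g[0] + d[2] * g[1] + d[3] * g[2],
    ]


def winograd_f23(d, g):
    """The same two outputs with 4 multiplications between data and filter.

    The filter-side terms depend only on g, so for fixed weights they can
    be precomputed once and reused for every input tile.
    """
    g_sum = (g[0] + g[1] + g[2]) / 2.0
    g_alt = (g[0] - g[1] + g[2]) / 2.0
    m1 = (d[0] - d[2]) * g[0]
    m2 = (d[1] + d[2]) * g_sum
    m3 = (d[2] - d[1]) * g_alt
    m4 = (d[1] - d[3]) * g[2]
    return [m1 + m2 + m3, m2 - m3 - m4]


data = [1.0, 2.0, 3.0, 4.0]
kernel = [0.5, 1.0, -2.0]
print(direct_f23(data, kernel), winograd_f23(data, kernel))
```

Nesting this construction in two dimensions gives larger savings for 3×3 kernels, at the cost of extra additions in the input and output transforms.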

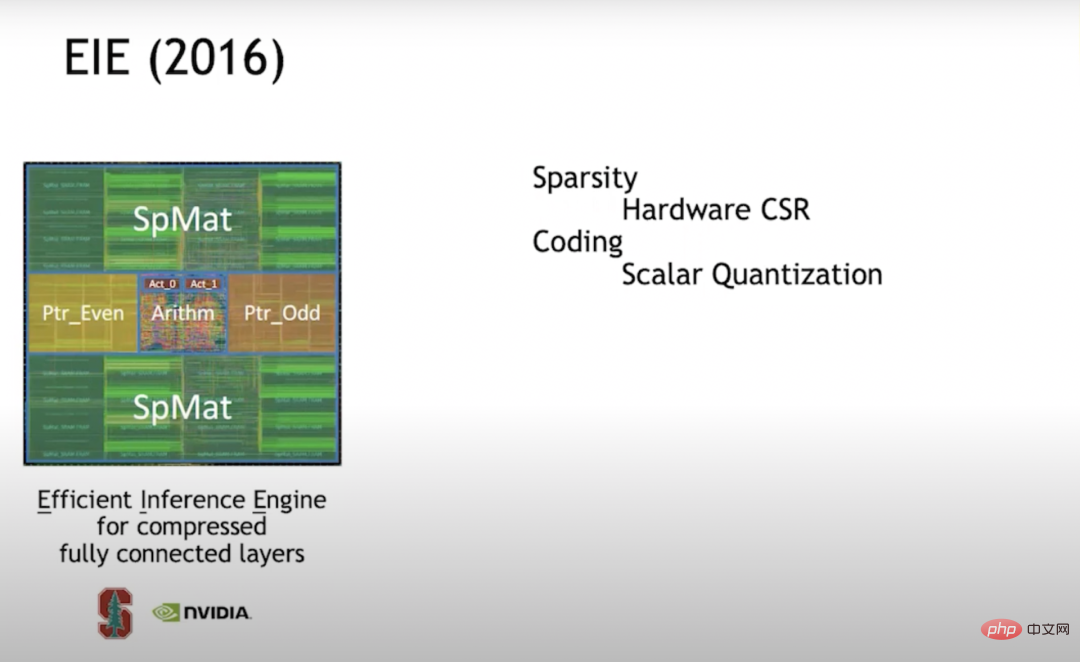

## EIE (2016)

In 2016, at Stanford, my then student Han Song (now an assistant professor at MIT EECS and a former co-founder of Shenjian Technology) and I worked on EIE (Efficient Inference Engine). This was one of the first explorations of sparsity. We supported the CSR (Compressed Sparse Row) matrix representation at the hardware level. This approach is very efficient: at 50% density it is even more energy efficient than full-density computation.
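For readers unfamiliar with CSR, the sketch below builds the three CSR arrays from a dense matrix and uses them for a matrix-vector product that skips zeros. It shows the generic textbook format in software, with hypothetical function names; it is not a description of how EIE lays out data in hardware.

```python
def dense_to_csr(matrix):
    """Convert a dense row-major matrix to CSR (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in matrix:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        # row_ptr[r + 1] marks where row r ends in the values array
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute y = A @ x while visiting only the stored non-zeros."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for p in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[p] * x[col_idx[p]]
        y.append(acc)
    return y


a = [[0.0, 2.0, 0.0],
     [1.0, 0.0, 3.0],
     [0.0, 0.0, 0.0]]
vals, cols, ptrs = dense_to_csr(a)
print(vals, cols, ptrs, csr_matvec(vals, cols, ptrs, [1.0, 2.0, 3.0]))
```

The pointer and index arrays are the bookkeeping cost of sparsity, which is why the break-even density matters so much.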

We later found that, to make the accelerator more efficient, you should build an array of vector units so that each engine does not perform just a single multiply-accumulate; instead, each PE (Processing Element) performs 16×16=256 multiply-adds. But when we started building vector unit arrays, we found it difficult to exploit sparsity efficiently, so we turned to structured sparsity.

When EIE processes with scalar units, it stores the pointer structure in a separate memory, walks the pointer structure through pipeline stages to determine which data can be multiplied, performs the multiplication, and places the result in the appropriate location. This entire process runs very efficiently. We also found that, besides "pruning" to achieve sparsity, quantization is another way to improve the computational efficiency of neural networks. Therefore, we decided to use codebook quantization, which is the most efficient approach in terms of the number of bits needed to represent the data. So we trained the codebook.

It turns out that if you can backpropagate gradients through something, you can train it with gradient descent. So we used backpropagation on the codebook to train the optimal set of codewords for a given precision. If the codebook is indexed with 7 bits, you get 128 codewords, and training finds the optimal 128 codewords for the neural network.

Codebook quantization faces a problem: the overhead of mathematical operations is very high. Because no matter how big the codebook is, what the actual value is, you need to look it up in RAM (random access memory). Actual values must be represented with high precision, and you can't represent these codewords exactly. So we put a lot of effort into high-precision mathematics. From a compression point of view, this works very well, but from a math energy point of view, it is not very cost-effective, so we abandoned this technology in subsequent work.
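The storage side of codebook quantization can be sketched as follows: each weight is stored as a 7-bit index, and the real value is recovered by a table lookup. The codebook here is simply uniformly spaced, which is an assumption made to keep the example short; in the work described above the codewords are trained with backpropagation. The function names are hypothetical.

```python
def make_uniform_codebook(bits: int, lo: float, hi: float):
    """Build 2**bits evenly spaced codewords (a stand-in for trained ones)."""
    n = 2 ** bits
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def encode(weights, codebook):
    """Replace each weight by the index of its nearest codeword."""
    return [
        min(range(len(codebook)), key=lambda i: abs(codebook[i] - w))
        for w in weights
    ]


def decode(indices, codebook):
    """Look up the high-precision value behind each stored index."""
    return [codebook[i] for i in indices]


codebook = make_uniform_codebook(7, -1.0, 1.0)   # 7 bits -> 128 codewords
weights = [-1.0, -0.37, 0.0, 0.3, 1.0]
indices = encode(weights, codebook)
print(len(codebook), indices, decode(indices, codebook))
```

The lookup in `decode` is the step that costs energy in hardware: every index has to be turned back into a high-precision value before any arithmetic can happen.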

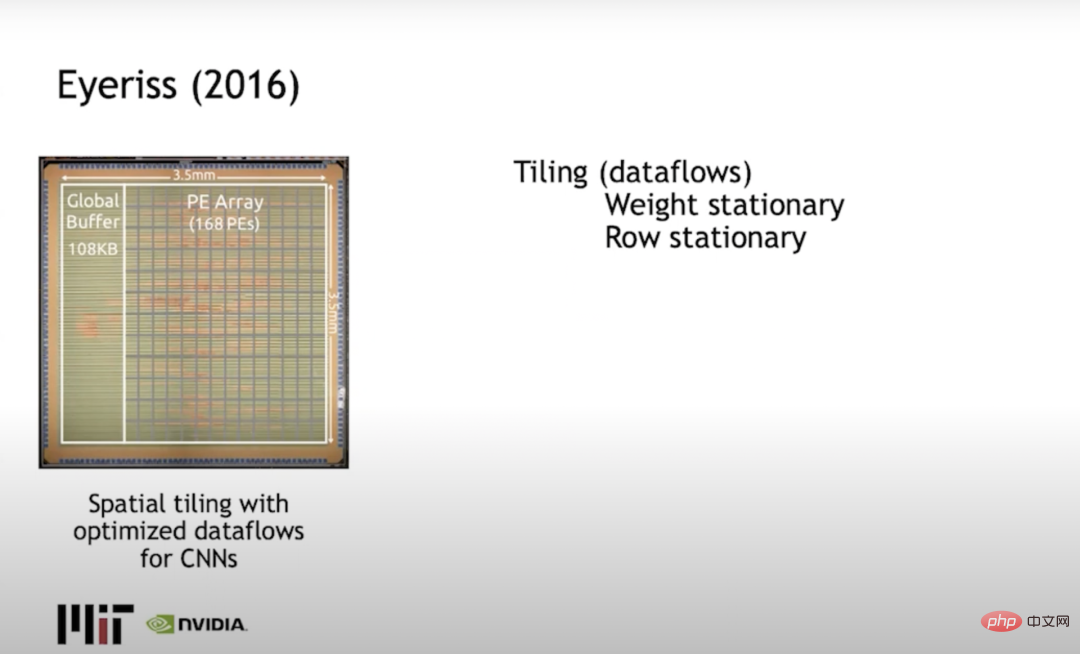

## Eyeriss (2016)

Joel Emer (who works at both NVIDIA and MIT) and Vivienne Sze of MIT built Eyeriss, which mainly addresses the tiling problem: how to arrange the computation so as to minimize data movement. A typical approach is the row-stationary dataflow, which spreads weights across rows and output activations across columns to minimize the energy consumed by data movement.

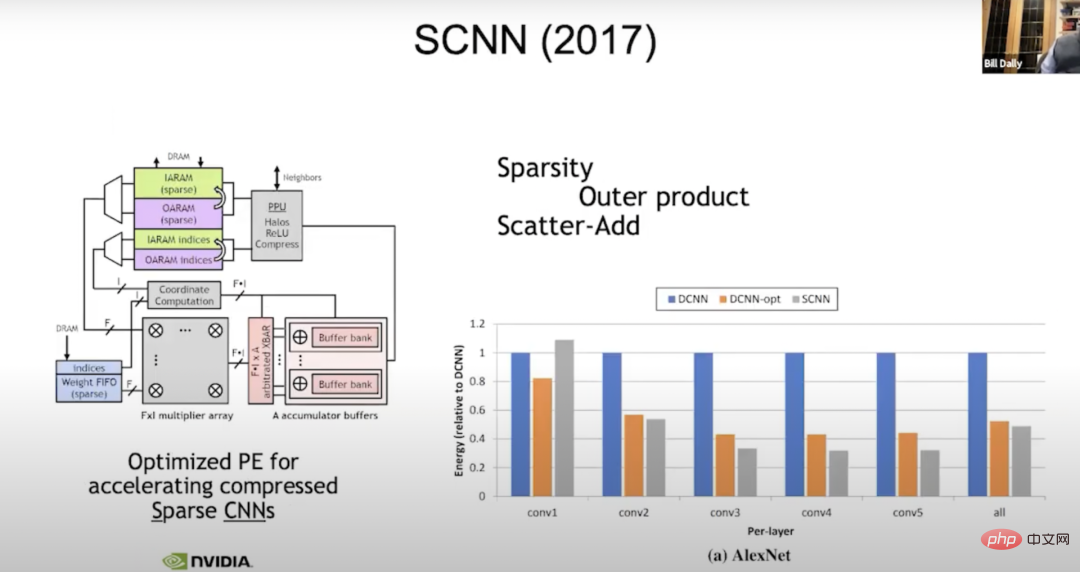

## SCNN (2017)

We are still doing research on sparsity. In 2017, we built a machine called SCNN (Sparse CNN) for sparse convolutional neural networks. What we did was move all the complexity of dealing with sparsity to the output: read all the input activations and figure out where their products need to go. The "F-wide vector" here is a typical vector of input activations.

We read four input activations and four weights at a time, and every weight needs to be multiplied by every input activation. The only question is where to put each result, so we multiply F by F.

In the coordinate computation, we take the indices of the input activation and the weight and compute the position in the output activations where the product must be summed. Then a scatter-add is performed on the accumulator buffers. Up to this point, everything is very efficient. But it turns out that moving the irregularity to the output is not a good idea, because the output is where the precision is widest: when you accumulate products of 8-bit weights and 8-bit activations, the running total is 24 bits. Here we do a lot of data movement with wide accumulators. It is still better than doing the dense computation, but the improvement is not as large as we had imagined, perhaps 50% of the energy of the dense unit.
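The flow described above (all-pairs multiplication, coordinate computation, then scatter-add) can be sketched for a one-dimensional convolution. This is a simplified software analogy under stated assumptions: one dimension, one channel, and hypothetical function names; the real design works on multi-dimensional tiles in hardware.

```python
def to_sparse(dense):
    """List the (index, value) pairs of the non-zero entries."""
    return [(i, v) for i, v in enumerate(dense) if v != 0]


def sparse_conv1d(acts, weights, out_len):
    """Multiply every non-zero activation by every non-zero weight.

    The output coordinate of each product is computed from the two
    indices, and the product is scatter-added into the accumulators.
    """
    out = [0.0] * out_len
    for a_idx, a_val in acts:
        for w_idx, w_val in weights:
            o = a_idx - w_idx            # coordinate computation
            if 0 <= o < out_len:         # drop products that fall outside
                out[o] += a_val * w_val  # scatter-add
    return out


def dense_conv1d(x, w):
    """Reference: out[o] = sum over k of w[k] * x[o + k] (no padding)."""
    out_len = len(x) - len(w) + 1
    return [sum(w[k] * x[o + k] for k in range(len(w))) for o in range(out_len)]


x = [0.0, 3.0, 0.0, 0.0, 2.0, 0.0]
w = [1.0, 0.0, -1.0]
print(sparse_conv1d(to_sparse(x), to_sparse(w), len(x) - len(w) + 1))
print(dense_conv1d(x, w))
```

Every multiplication is useful, but the writes to `out` land at irregular positions, and in hardware those are the wide accumulator accesses that turned out to be expensive.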

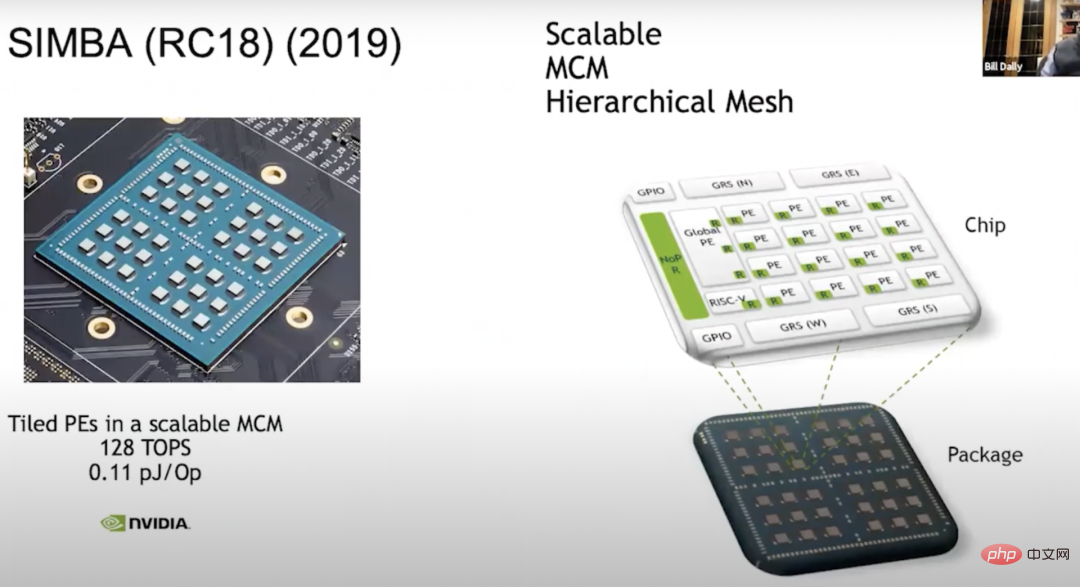

## SIMBA (RC18) (2019)

Another thing we did was use the existing accelerator to build a multi-chip module, SIMBA (RC18). The idea for this research came up in 2018, and the chip also demonstrated many ingenious technologies. It has a good PE architecture, and the chips communicate through a very efficient signaling technology. The architecture now scales to a full 36 chips, each of which has a 4x4 PE matrix, and each PE has 8-wide vector units, so we get 128 TOPS of computing power at 0.1 pJ per op, which is approximately equivalent to 10 TOPS/W.
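The equivalence between energy per operation and TOPS/W is a unit conversion: one watt is one joule per second, so 1 TOPS/W means 10^12 operations per joule, or 1 pJ per operation. A minimal sketch with hypothetical function names:

```python
def pj_per_op_to_tops_per_watt(pj_per_op: float) -> float:
    """Convert energy per operation (pJ) to efficiency (TOPS/W).

    1 TOPS/W = 1e12 ops per joule = 1 op per picojoule, so the two
    quantities are reciprocals of each other.
    """
    return 1.0 / pj_per_op


def tops_per_watt_to_pj_per_op(tops_per_watt: float) -> float:
    """Inverse conversion: efficiency (TOPS/W) to energy per op (pJ)."""
    return 1.0 / tops_per_watt


print(pj_per_op_to_tops_per_watt(0.1))   # the SIMBA figure quoted above
print(tops_per_watt_to_pj_per_op(20.0))  # energy per op at 20 TOPS/W
```

The same conversion applies to any TOPS/W figure in this article.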

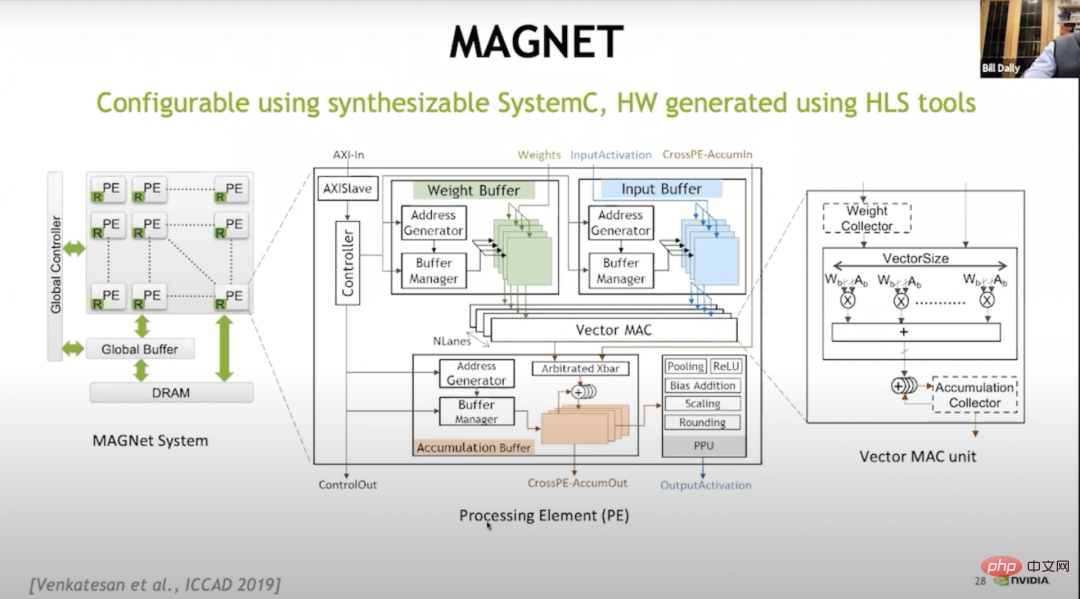

We learned a lot about trade-offs. We realized that building these PE arrays was like building a very large design space, about how to build the memory hierarchy, how to schedule data, etc., so we built a system called MAGNET.

## MAGNET

The picture above shows a design space exploration system published at ICCAD (International Conference on Computer-Aided Design) in 2019. It is mainly used to enumerate the design space, for example: how wide each vector unit should be, how many vector units each PE has, how big the weight buffer is, how big the accumulator buffer is, how big the activation buffer is, and so on. Later, we found that we needed another level of caching, so we added a weight collector and an accumulator collector.

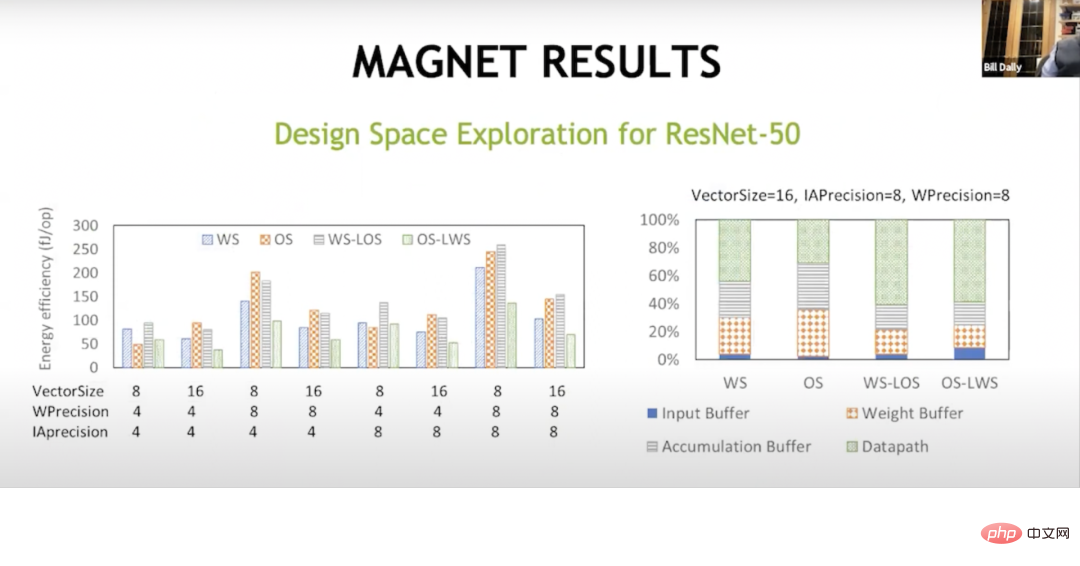

## MAGNET Results

With this additional level of caching, we ultimately succeeded. The figure compares different dataflows. The weight-stationary dataflow was originally done by Sze and Joel; with it, most of the energy goes to things outside the datapath, such as the accumulation buffers, weight buffers, and input buffers. But with the hybrid dataflows (weight-stationary with local output-stationary, and output-stationary with local weight-stationary), almost two-thirds of the energy goes into the math operations, and less energy is spent in these memory arrays because the data is handled at another level of the memory hierarchy. This brings the efficiency to about 20 TOPS/W.

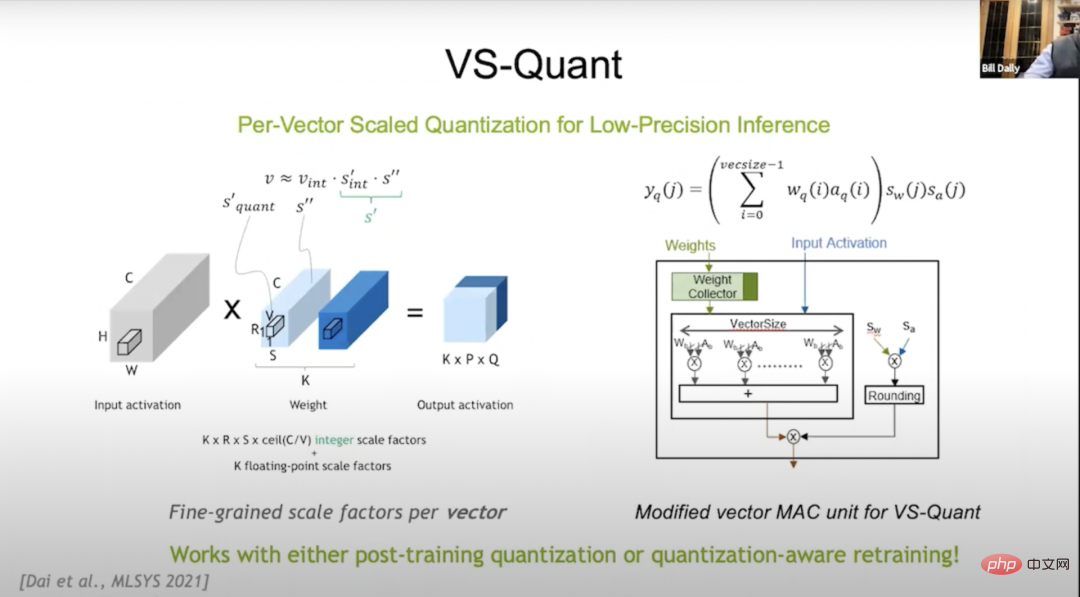

## VS-Quant

In 2021, at MLSys (the Conference on Machine Learning and Systems), we introduced VS-Quant, exploring a quantization method that both compresses the number of bits (something codebook quantization does very well) and is cheap in terms of math overhead. We use an integer representation but scale it so that the dynamic range of the values can be represented.

But it turns out that applying a single scale to the entire neural network does not work very well, because there are many different dynamic ranges across the network. So the key point of VS-Quant is this: we apply a separate scale factor to each relatively small vector, of about 32 weights, over which the dynamic range is much smaller. We can fit the integers to that range, and we can also tune and optimize the scale factors.

Maybe we didn’t represent the outliers accurately, but we represented the rest of the numbers better. In this way, we can trade relatively low-precision weights and activations for higher accuracy. So we now have multiple scale factors: one is the weight factor and one is the activation factor.
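The benefit of per-vector scale factors can be shown with a toy example. The sketch below quantizes the same weights to 4-bit signed integers twice, once with a single scale and once with one scale per vector of 4, and compares the reconstruction error. It is a simplification: the vector length of 4 (rather than about 32), the max-based scale, the floating-point scale factors, and the function names are all assumptions made for illustration.

```python
def quantize(values, bits):
    """Symmetric integer quantization with one scale for the whole list."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return ints, scale


def dequantize(ints, scale):
    return [q * scale for q in ints]


def per_tensor(values, bits):
    """Quantize and reconstruct with a single scale factor."""
    ints, scale = quantize(values, bits)
    return dequantize(ints, scale)


def per_vector(values, bits, vec_len):
    """Quantize and reconstruct with one scale factor per short vector."""
    out = []
    for start in range(0, len(values), vec_len):
        ints, scale = quantize(values[start:start + vec_len], bits)
        out.extend(dequantize(ints, scale))
    return out


def total_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


# The first four weights are small, the last four are large.
weights = [0.01, -0.02, 0.015, 0.005, 1.0, -0.8, 0.9, 0.7]
err_tensor = total_abs_error(weights, per_tensor(weights, 4))
err_vector = total_abs_error(weights, per_vector(weights, 4, 4))
print(err_tensor, err_vector)
```

With a single scale, the small weights all collapse to zero; with a scale per vector, they keep most of their resolution while the large weights are represented exactly as before.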

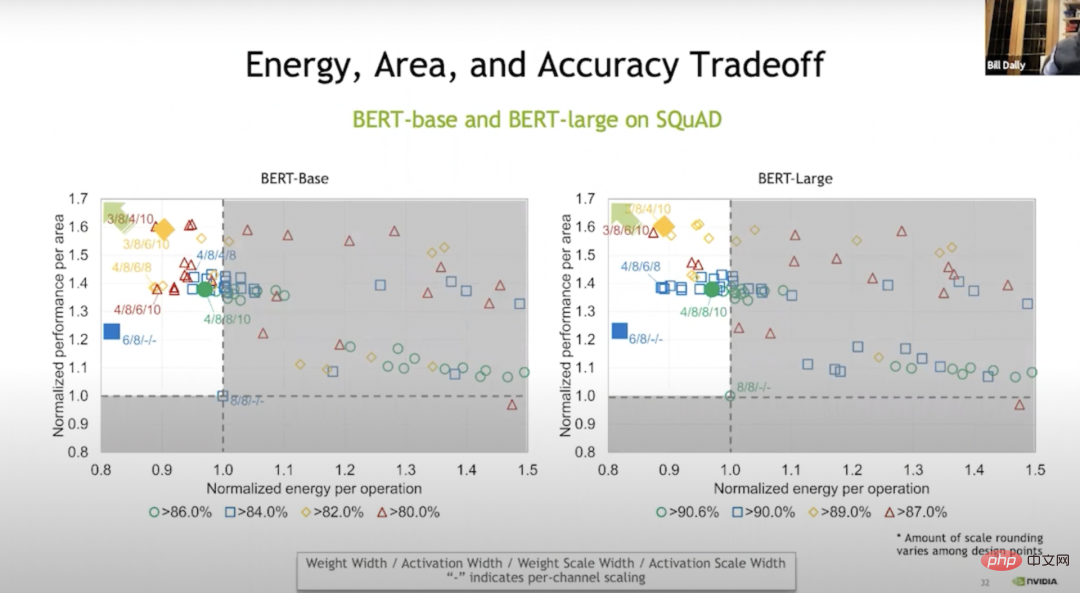

## Energy, Area, and Accuracy Tradeoff

We basically perform these operations at the vector level, and the results are shown for BERT-base. With retraining, we can save 20% of the energy and 70% of the area in some cases. The green color in the figure above indicates that there is basically no loss of accuracy; blue, orange and red indicate greater or lesser accuracy loss. But even at the blue level, the accuracy is quite high.

With VS-Quant and some other tweaks, we did a trial run on these language models. Running on a language model is much harder than running on an image model which is around 120 TOPS/W.

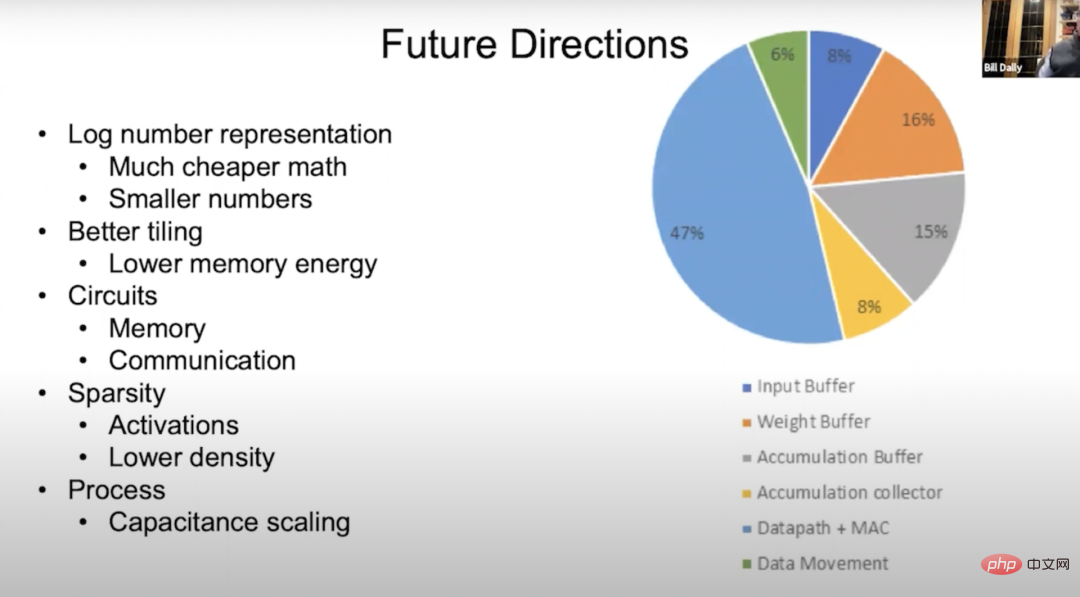

## Accelerators

So for an accelerator, you first need a matrix multiply unit. You need to come up with a tiling method for the seven nested loops of a neural network computation, essentially copying some of these loops to each level of the memory system so as to maximize reuse at each level of the memory hierarchy and minimize data movement.

We also studied sparsity, which is very good for compression. It effectively increases memory bandwidth and communication bandwidth and reduces memory and communication energy. The next level of sparsity is: when you have a zero value, just send a separate line indicating zero, without toggling 8 or 16 bits every cycle.

The Ampere architecture can reuse multipliers through structured sparsity, a very efficient method that needs only the overhead of a few multiplexers (which is basically negligible). We can also reuse the multipliers when doing pointer operations, from which we get 2x the performance.

Number representation is very important. We started with EIE (Translator's note: Efficient Inference Engine, Dr. Han Song's paper at ISCA 2016, which implemented hardware acceleration of compressed sparse neural networks. ESE, a closely related approach, won the best paper award at FPGA 2017.), trying to use a codebook, but this made the scaling mathematically expensive.

Finally, technologies successfully tested in accelerators are eventually applied to GPUs. This is a good way to test them. We believe the GPU is a platform for domain-specific hardware. Its memory system is very good and its network is fluid, allowing deep learning applications to run very fast.

## Future Directions

Let's talk about the future of deep learning hardware. The picture above is a pie chart of where the energy goes. You can see that most of it goes to the datapath. About 50% of it goes to math operations, so we want the math to consume less energy; the rest goes to memory and data movement. The green segments are data transfers, and the rest are the input buffers, weight buffers, accumulation buffers, and accumulation collectors, in different proportions.

We are looking into reducing the energy of the math, and one of the best ways is to move to a logarithmic number system, because there multiplication becomes addition, and addition usually takes much less energy. Another way is to move to smaller numbers, which VS-Quant makes possible. By quantizing more precisely, we can get equivalent accuracy from neural networks with lower-precision numbers.

We hope to do better with tiling, for example by adding more levels to the memory hierarchy in some cases, which lowers memory energy, and we can also make memory circuits and communication circuits more efficient.

On the Ampere architecture, the work we have already done on structured sparsity is a good start, but I think we can do better by lowering the density or by choosing multiple densities for activations and weights.

As research goes on, process technology will also bring some progress in capacitance scaling.

Since the Kepler architecture was released in 2012, GPU inference performance has doubled every year. This is largely due to ever-better number representation. We covered a lot of ground this time: going from the FP32 of the Kepler architecture to FP16, to Int8, and then to Int4; amortizing instruction overhead by using more complex instructions such as dot products; the Pascal architecture, half-precision matrix multiply-accumulate in the Volta architecture, integer matrix multiply-accumulate in the Turing architecture, and the Ampere architecture with structured sparsity. I have said very little about plumbing, but plumbing is very important.
Plumbing is used to lay out the on-chip memory system and network, so that powerful Tensor Cores can be fully utilized. For Tensor Cores to perform a gigabit of operations per second in the Turing architecture and feed the data into executing common benchmarks, arranging branch memory, on-chip memory and interconnection between them and Normal operation is very important. Looking to the future, we are ready to try to apply various new technologies to the accelerator. As mentioned earlier, we have conducted many experiments on sparsity and tiling techniques, and experimented with different tiling techniques, numerical representations, etc. in the MAGNet project. But we still feel a lot of pressure, because the progress of deep learning actually depends on the continuous improvement of hardware performance. Doubling the inference performance of GPU every year is a huge challenge. In fact, the cards in our hands are almost played, which means that we must start to develop new technologies. The following are four directions that I think are worthy of attention: First, study new number representations, such as logarithms (Log number), and more clever quantization schemes than EasyQuant; Secondly, continue to study sparsity in depth; Then, study storage circuits and communication circuits; Finally, improve the existing process technology. Dejan Milojicic: How large a matrix convolution is needed to convert the Winograd algorithm Convert to a more efficient convolution implementation? ##Bill Dally: I think that 3×3 matrix convolution is Very efficient. Of course, the larger the convolution, the higher the efficiency. Dejan Milojicic: How is the memory bandwidth of High Bandwidth Memory (HBM) calculated? Is the memory accessed through all GPU cores at the same time? Bill Dally: Each HBM stack has a separate framebuffer Area, like Ampere architecture has six stacks. Our memory bandwidth is calculated with each memory controller running at full bandwidth. 
There is a cache layer between the GPU cores and the memory, and the bandwidth of our on-chip network is several times the HBM bandwidth, so only a small fraction of the streaming multiprocessors need to be running to saturate the HBM.

Dejan Milojicic: How does distributed computing with NVLink work? Who decides which computation runs where, and what overhead is incurred when doing scatter-gather across multiple GPUs?

Bill Dally: The programmer decides where the data and the threads go; you simply launch threads and place data on the GPUs and determine where they run. A major advantage of systems connected with NVLink is the shared address space, and the overhead of transferring relatively small pieces of data is also quite low, so we use collective communication in the network. Typically, if you do data parallelism in deep learning, each GPU runs the same network on a different part of the same data set and accumulates its own weight gradients; you then share the gradients across the GPUs, sum them all, and add the result to the weights. Collective communication is very good at this kind of work.

Dejan Milojicic: Should we build one universal deep learning accelerator for all applications, or separate dedicated accelerators, such as vision accelerators or natural language processing accelerators?

Bill Dally: As long as efficiency does not suffer, I think the more general the accelerator the better, and Nvidia's GPUs are comparable to dedicated accelerators in how efficiently they accelerate deep learning. What really matters is that the field of machine learning is moving forward at an incredible pace. A few years ago, everyone was still using recurrent neural networks (RNNs) to process language. Then the Transformer appeared and replaced RNNs at lightning speed. In the blink of an eye, everyone was using Transformers for natural language processing.
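The data-parallel scheme described in the NVLink answer above (same network on every GPU, different shard of the data, gradients summed across GPUs and then applied to the weights) can be sketched in plain Python. This is a toy simulation under stated assumptions, not how NVLink or any real communication library is programmed: ordinary list entries stand in for GPUs, the model is a single weight `w` fitting `y = w * x`, and `all_reduce_sum` is a hypothetical helper that mimics the effect of a collective sum.

```python
def local_gradient(w, shard):
    """Gradient of the summed squared error over one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard)


def all_reduce_sum(values):
    """Simulated collective: every worker ends up with the global sum."""
    total = sum(values)
    return [total for _ in values]


# Toy data set generated by y = 2 * x, split across two simulated workers.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
shards = [data[0:2], data[2:4]]

weights = [0.0, 0.0]   # one replica of the weight per worker
learning_rate = 0.01

for _ in range(200):
    # Each worker computes gradients on its own shard only.
    grads = [local_gradient(w, shard) for w, shard in zip(weights, shards)]
    # Gradients are shared and summed, so all workers see the same value.
    reduced = all_reduce_sum(grads)
    # Every replica applies the same averaged update and stays in sync.
    weights = [w - learning_rate * g / len(data)
               for w, g in zip(weights, reduced)]

print(weights)  # both replicas hold the same value, close to 2.0
```

Because every replica applies the identical reduced gradient, the copies of the weight never diverge, which is why only the gradients have to cross the interconnect.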
Similarly, just a few years ago, everyone was using convolutional neural networks (CNNs) to process images. Many people still do, but more and more are starting to use Transformers for images as well. Therefore, I do not support over-specializing a product or building a dedicated accelerator for one particular network, because a product design cycle usually takes several years, and in that time people may well stop using that network. We must keep a sharp eye on changes in the industry, because it is developing at an alarming rate all the time.

Dejan Milojicic: What impact does Moore's Law have on GPU performance and memory usage?

Bill Dally: Moore's Law says that the cost of transistors will fall year after year. Today, the number of transistors that fit on an integrated circuit is indeed increasing, and the manufacturing process has leapt from 16 nanometers to 7 nanometers. Transistor density keeps rising, but the price of a single transistor has not come down. So I think Moore's Law is somewhat outdated. Still, having more transistors on an integrated circuit is a good thing, because it lets us build larger GPUs. Large GPUs also consume more power and cost more, but it is still a good thing, because we are able to build things we could not build before.

Dejan Milojicic: If developers mostly pay attention to frameworks like PyTorch, what should they learn from advances in hardware to make their deep learning models run more efficiently?

Bill Dally: This question is hard to answer. The frameworks do a good job of abstracting the hardware, but there are still factors affecting how fast your model runs that are worth investigating.
What we can do is, when we come up with a better technique, such as a better number representation, try combining the different techniques with the framework and see which is more effective. That is an indispensable part of research and development work.

Dejan Milojicic: Is Nvidia experimenting with new packaging methods?

Bill Dally: We have been experimenting with a variety of packaging technologies to figure out what they can and cannot do, so that we can deploy them into production when the time is right. For example, some of these projects are studying multi-chip modules and chip stacking using solder bumps and hybrid bonding. In fact, there are many simple packaging technologies.

Dejan Milojicic: Between Nvidia's Tensor Cores and Google's TPU, which is better?

Bill Dally: We don't know much about Google's latest TPU, but the TPUs they launched earlier were all dedicated engines, essentially built around large multiplier-accumulator arrays. The TPU has separate units to handle things like nonlinear functions and batch norm, whereas our approach is to build a very general computational unit, the streaming multiprocessor (SM), which can be made to do anything with very general instructions, and then use Tensor Cores to accelerate the matrix multiplication part. So both the Tensor Core and Google's TPU have similar multiplier-accumulator arrays; it is just that the arrays we use are relatively smaller.

Dejan Milojicic: Who is Nvidia's biggest opponent?

Bill Dally: Nvidia never compares itself with other companies. Our biggest opponent is ourselves, and we are constantly challenging ourselves. I think this is the right attitude. If we blindly treat others as competitors, it will slow our progress. Instead of focusing so much on what others are doing, we should focus on what is possible. What we do is like chasing the speed of light: we care about how to do the best that can be done and how far we still are from the speed of light. That is the real challenge.
Dejan Milojicic: What are your thoughts on quantum computing? Are quantum simulations a natural extension of deep learning challenges?

Bill Dally: In March 2021, we released a software development kit called "cuQuantum". Google had earlier built a computer with 53 qubits and claimed that it had achieved "quantum supremacy." Some calculations that were said to be impossible on traditional computers can be completed within five minutes using cuQuantum. So if you want to run truly accurate quantum algorithms, rather than today's Noisy Intermediate-Scale Quantum (NISQ) computations, GPUs should be the best choice. Nvidia's classical GPU computers are, in effect, among the fastest quantum computers available today, and Alibaba has also achieved good results with similar classical calculations, which confirms our conclusion.

Our take on quantum computing is that Nvidia does not want to be surprised by any development in this area. In fact, we have set up a research group to track cutting-edge developments in quantum computing. For example, IBM announced a chip with 127 qubits, and we have been tracking progress in the number of qubits, coherence time, and so on. Given the number of qubits required, the accuracy of those qubits, the effect of noise on them, and the overhead of quantum error correction, I do not think quantum computing can be commercialized within the next five to ten years. My most optimistic view is that in about five years people will start doing quantum chemistry simulations, which is the most likely first application. But before that happens, there are still many physics problems to solve. What many people don't realize is that quantum computers are analog computers, and analog computers need to be very precise and very well isolated; otherwise any coupling to the environment leads to inconsistent results.
Dejan Milojicic: In your opinion, when will machines reach the level of artificial general intelligence (AGI)?

Bill Dally: I have a pessimistic view on this question. Look at some of the more successful artificial intelligence use cases, such as neural networks, which are essentially universal function approximators. A neural network can learn a function through observation, so its value still lies in artificial perception rather than artificial intelligence. We have achieved good results so far, and we can keep studying how to use artificial intelligence and deep learning to raise productivity, improve medical care and education, and bring people a better life. In fact, we do not need AGI to do this; we should focus on making the most of existing technology. There is still a long way to go before AGI, and we must also understand what AGI actually is.

