A brief analysis of image distortion correction technology on the difficulties of smart car perception front-end processing

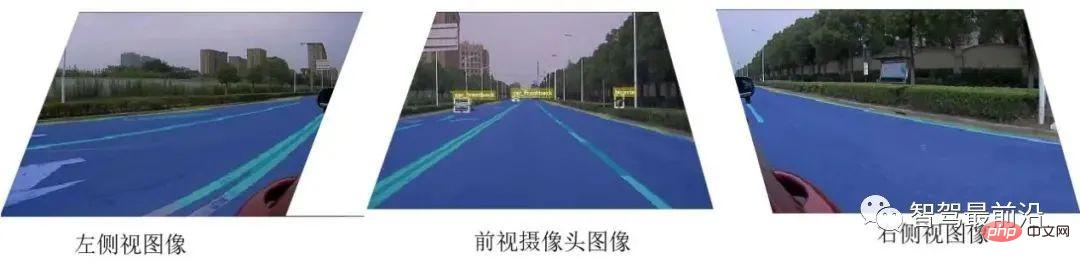

When an image is captured, the ideal camera position is perpendicular to the shooting plane, so that the image reproduces the original geometric proportions. In real smart driving applications, however, the body structure constrains where the camera can be mounted, and vehicle control requires the camera to have a certain preview distance. The horizontal and vertical scanning surfaces of the camera usually expand in a fan shape, and the camera is generally installed at an angle to the ground. This angle causes imaging distortion at the edge of the image, which leads to problems such as the following during later image processing:

1) Vertical lines are imaged as oblique lines, resulting in slope calculation errors;

2) Distant curves may be compressed by distortion, resulting in curvature calculation errors;

3) Vehicles in the side lanes are hard to recognize reliably, and distortion causes mismatches during post-processing.

Problems like these can appear anywhere in image perception. If distortion is not handled properly, it degrades overall image quality and puts subsequent neural network recognition at greater risk. To meet the real-time control requirements of smart cars, a correction algorithm for camera image distortion is generally needed in practical application scenarios.

01 The main types of distortion in smart cars

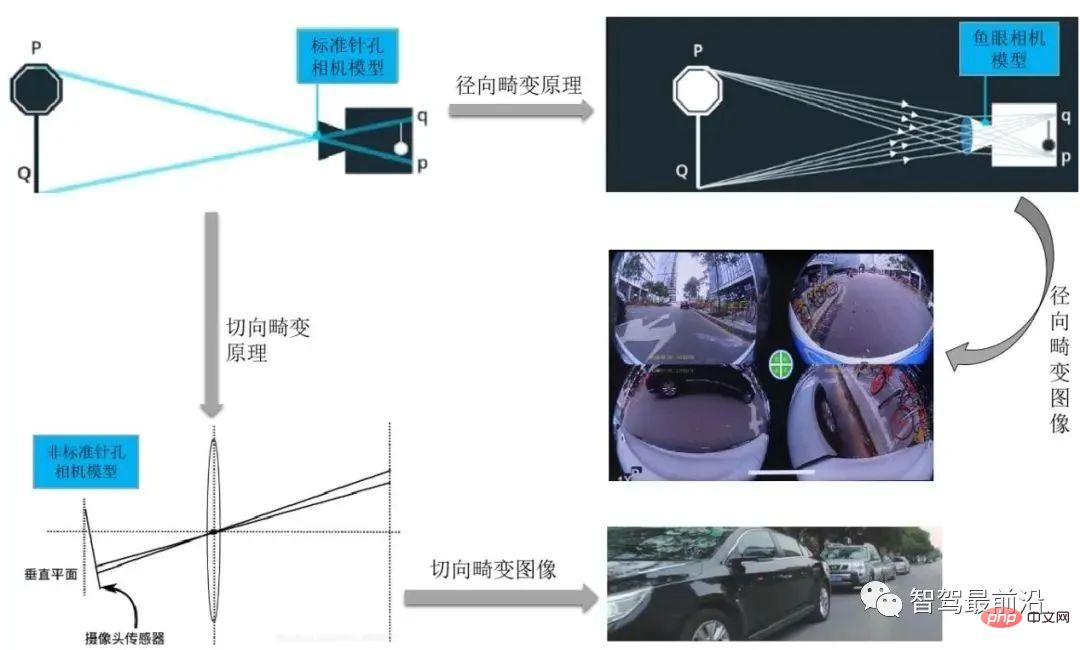

Camera distortion includes radial distortion, tangential distortion, decentering distortion, thin prism distortion, and so on. The camera distortions on smart cars are mainly radial distortion and tangential distortion.

Radial distortion is divided into barrel distortion and pincushion distortion.

The surround-view cameras used in smart parking systems usually shoot at a wide angle, and the corresponding distortion is mainly radial. The main cause of radial distortion is the irregular change of the radial curvature of the lens, which distorts the image. This distortion is centered on the principal point and acts along the radial direction: the farther a point is from the center, the greater its deformation. A severely radially distorted rectangle must be corrected into an ideal linear lens image before it enters back-end processing.

The front-view, side-view, and rear-view cameras used in driving systems are generally ordinary CMOS cameras. During installation of the front-view and side-view cameras, the lens may not be strictly parallel to the imaging plane, and manufacturing defects can have the same effect. Either way, the result is tangential distortion. This usually arises when the imager is attached to the camera.

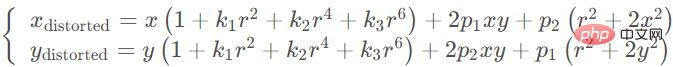

The radial and tangential distortion models have 5 distortion parameters in total. In OpenCV they are arranged as a 5*1 matrix containing k1, k2, p1, p2, k3 in that order, often defined as a Mat.

For distortion correction, these 5 parameters are the distortion coefficients of the camera that must be determined during camera calibration. k1, k2, and k3 are the radial distortion parameters, where k3 is optional. For cameras with severe distortion (such as fisheye cameras), there may also be k4, k5, and k6. Tangential distortion is represented by the two parameters p1 and p2. This gives five parameters in total: k1, k2, k3, p1, p2. They are required to remove distortion and are called the distortion vector. They belong with the camera's internal parameters and are not external parameters.

Once these five parameters are known, the image deformation caused by lens distortion can be corrected. The following figure shows the effect after correction according to the lens distortion coefficients:

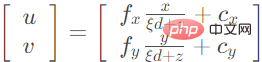

The formula that gives the position of a point on the pixel plane from the 5 distortion coefficients is as follows:
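A common way to write this, assuming the standard radial-tangential (Brown-Conrady) form that OpenCV documents, with (x, y) the normalized image coordinates of the ideal point and (x_d, y_d) the distorted ones:

```latex
\begin{aligned}
r^2 &= x^2 + y^2 \\
x_d &= x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2 p_1 x y + p_2\,(r^2 + 2 x^2) \\
y_d &= y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1\,(r^2 + 2 y^2) + 2 p_2 x y
\end{aligned}
```

The symbols x_d, y_d, and r are notation introduced here for illustration. The first term in each line is the radial part, and the remaining terms are the tangential part.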

After distortion, the point is projected onto the pixel plane through the internal parameter matrix to obtain its position (u, v) on the image:
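As a minimal sketch of this step, assuming the standard radial-tangential model and a skew-free internal parameter matrix with focal lengths `fx`, `fy` and principal point `cx`, `cy`, the projection is u = fx * x_d + cx and v = fy * y_d + cy. The function names `distort`, `to_pixel`, and `undistort` are hypothetical, and the fixed-point inversion is one common numerical approach, not necessarily the one used in any particular library.

```python
def distort(x, y, k1, k2, p1, p2, k3=0.0):
    """Apply radial and tangential distortion to normalized coordinates."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def to_pixel(x_d, y_d, fx, fy, cx, cy):
    """Project normalized coordinates with the intrinsics (assumes no skew)."""
    return fx * x_d + cx, fy * y_d + cy


def undistort(x_d, y_d, k1, k2, p1, p2, k3=0.0, iterations=20):
    """Invert distort() numerically by fixed-point iteration."""
    x, y = x_d, y_d  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        # Remove the tangential offset, then divide out the radial scaling.
        x = (x_d - dx) / radial
        y = (y_d - dy) / radial
    return x, y
```

For moderate coefficients the iteration converges quickly; for very strong distortion, such as a fisheye lens, it may fail to converge, which is one reason to use a different camera model there.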

02 Image distortion correction method

Image dedistortion is different from the camera model itself: it compensates for lens defects. Radial/tangential dedistortion is performed first, and the camera model is applied afterwards. Handling image distortion therefore comes down mainly to choosing which camera model to use for image projection.

Typical camera model projection methods include the spherical model and the cylindrical model.

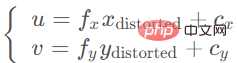

1. Fisheye camera imaging distortion correction

Lenses such as fisheye lenses usually produce severe deformation. With an ordinary camera, a straight line is still projected onto the image plane as a straight line of limited size. Under the same pinhole projection, however, a line seen by a fisheye camera would become extremely long on the image plane, and in some scenes it would be projected to infinity, so the pinhole model cannot describe a fisheye lens.

To project the largest possible scene onto a limited image plane, a fisheye lens is built from more than a dozen different lens elements. During imaging, the incident light is refracted to varying degrees and projected onto an imaging plane of limited size, which gives the fisheye lens a larger field of view than an ordinary lens.

Research shows that the imaging of a fisheye camera approximately follows the unit sphere projection model. To stay close to the derivation of the pinhole camera model, the common approach is to go through a projection onto a spherical camera model.

The analysis of the fisheye camera imaging process can be divided into two steps:

- Points in three-dimensional space are linearly projected onto a sphere. This sphere is a virtual sphere that we assume, and its center is taken to coincide with the origin of the camera coordinate system.

- The point on the unit sphere is projected onto the image plane. This process is nonlinear.

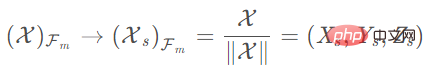

The figure below shows the processing flow from a fisheye camera to a spherical camera in a smart driving system. Let a point in the camera coordinate system be X=(x,y,z) and its pixel coordinates be x=(u,v). The projection process is then:

1) Take a three-dimensional point in the world coordinate system captured by the camera and project it onto the normalized unit sphere;

2) Shift the center of the camera coordinate system by an offset along the z-axis;

3) Considering the unit sphere, normalize the shifted point to 1 unit;

4) Transform the spherical projection model to the pinhole model to obtain the corresponding image point coordinates, with which the standard camera coordinate system model can be established.

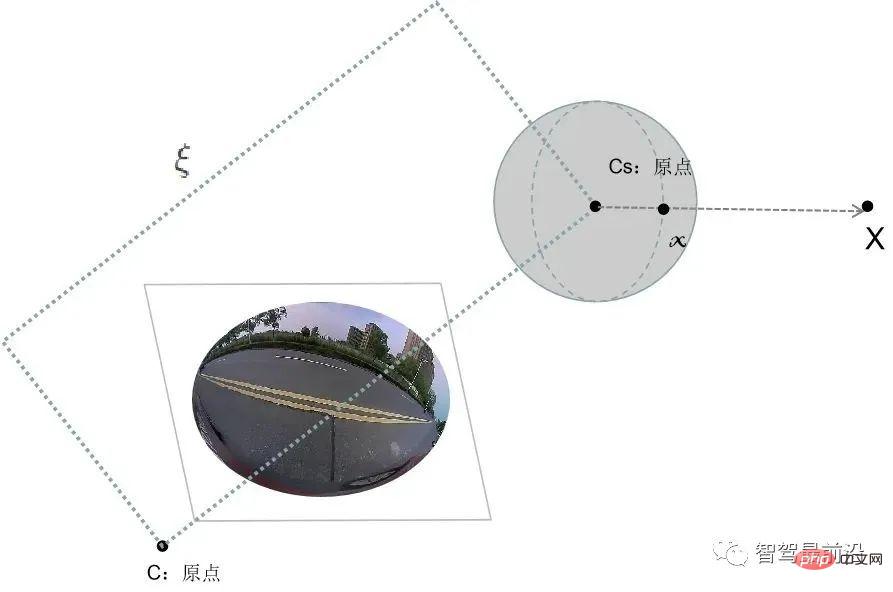
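The four steps above can be sketched as follows. This is a minimal sketch assuming the widely used unified (unit-sphere) camera model. The offset name `xi`, the function name `project_unified_sphere`, and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, and any additional lens distortion on the normalized plane is left out.

```python
import math


def project_unified_sphere(point, xi, fx, fy, cx, cy):
    """Project a 3D camera-frame point with a unit-sphere (unified) model."""
    x, y, z = point
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("the camera center itself cannot be projected")
    # Step 1: project the point onto the unit sphere.
    xs, ys, zs = x / norm, y / norm, z / norm
    # Step 2: shift the projection center by xi along the z-axis.
    zs_shifted = zs + xi
    if zs_shifted <= 0.0:
        raise ValueError("point is outside the field of view of this model")
    # Step 3: normalize so that the third component equals 1.
    mx, my = xs / zs_shifted, ys / zs_shifted
    # Step 4: apply pinhole intrinsics to obtain pixel coordinates.
    return fx * mx + cx, fy * my + cy
```

With `xi = 0` this reduces to the ordinary pinhole projection. With `xi > 0`, a ray at 90 degrees to the optical axis, such as `(1.0, 0.0, 0.0)`, still lands at a finite pixel, which is the property the pinhole model lacks.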

2. Cylindrical coordinate projection

For front-view and side-view cameras, the captured images mainly show tangential distortion, for which a cylindrical camera model is usually recommended. Its advantage is that, as in a panoramic view from a fisheye camera, the user can turn the line of sight freely within a 360-degree range and can also change the perspective along a line of sight to move closer or farther away. Cylindrical panoramic images are also easier to process, because the cylindrical surface can be cut along its axis and unrolled onto a plane, so traditional image processing methods can often be used directly. They do not require the camera to be calibrated very accurately either. The user has a 360-degree viewing angle in the horizontal direction and a limited range of viewing angles in the vertical direction. Since the image quality of the cylindrical model is uniform and the details are more realistic, it has a wide range of applications.

Generally speaking, the significant advantages of cylindrical panorama are summarized in the following two points:

1) Single photos are simpler to acquire than for the cubic and spherical forms. Ordinary vehicle-mounted cameras (such as front-view and side-view cameras) can basically provide the original images.

2) A cylindrical panorama is easily unrolled into a rectangular image, which can be stored and accessed directly in commonly used computer image formats. It limits the rotation of the viewer's line of sight to less than 180 degrees in the vertical direction, but in most applications a 360-degree panoramic scene in the horizontal direction is sufficient to express the spatial information.

Here we focus on how to use a cylindrical camera to correct the distortion of the original image. In essence, this is the process of obtaining the mapping from the virtual camera to the original camera. The virtual camera here refers to the mapping between the real images and the generated cylindrical images.

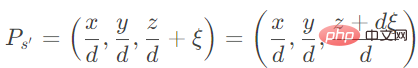

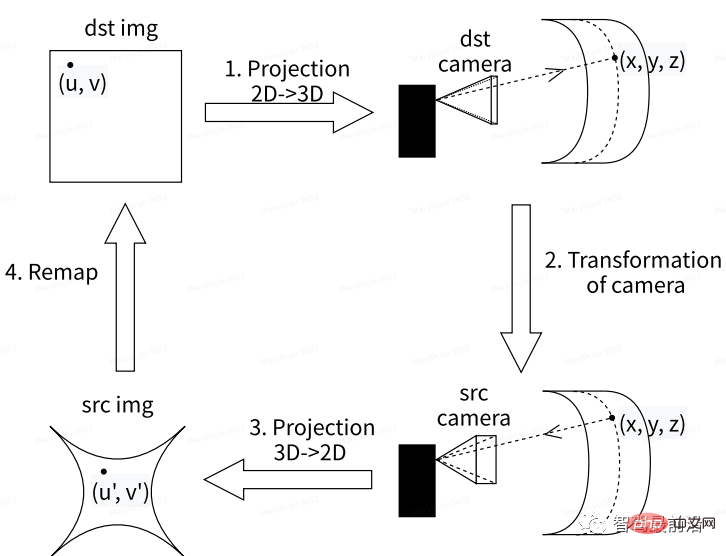

The figure below shows the processing flow for converting an ordinary vehicle camera image into a cylindrical camera image in a smart driving system. Obtaining a virtual camera image essentially means finding the mapping between the virtual camera and the original camera. The general process is as follows:

First, the image to be generated for the virtual camera is set as the target image dst img. Each point (u, v) on the target image is back-projected from 2D to 3D in the target camera coordinate system, which gives a point position (x, y, z) in space. Then, this point is transformed in the three-dimensional coordinate system into the coordinate system of the original camera, Src Camera. Finally, a 3D to 2D projection with the original camera gives the corresponding position (u', v') in the original image Src img. Sampling Src img at these positions reconstructs the image dst img under the virtual camera.

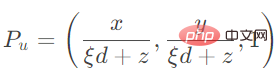

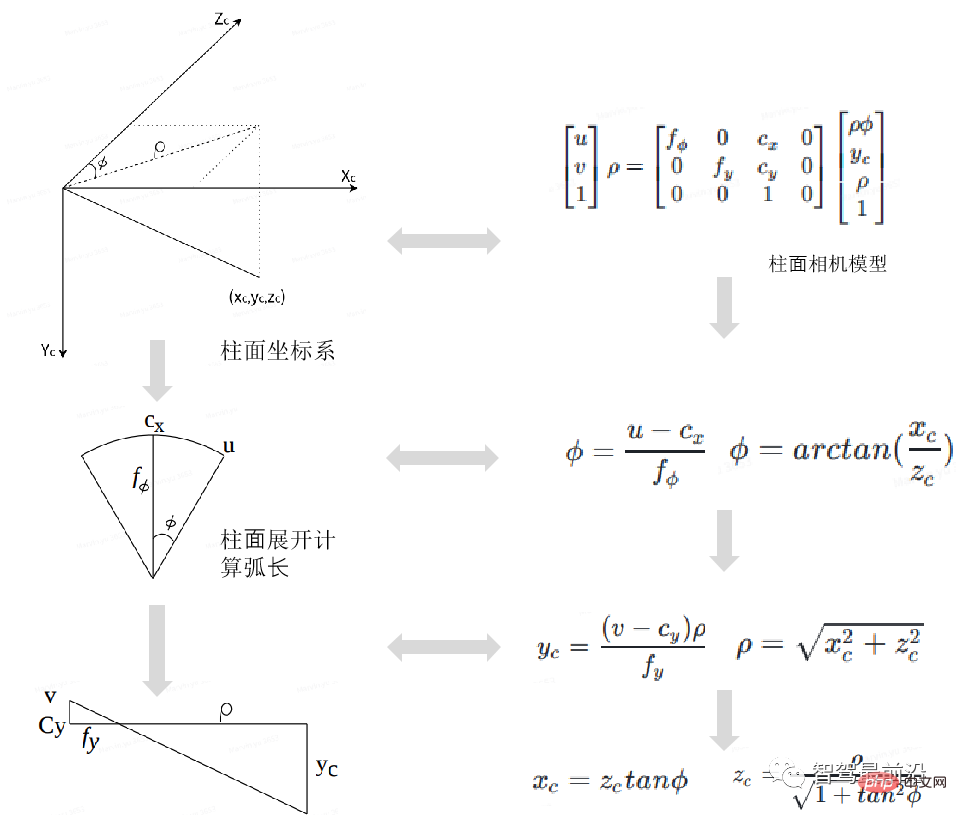

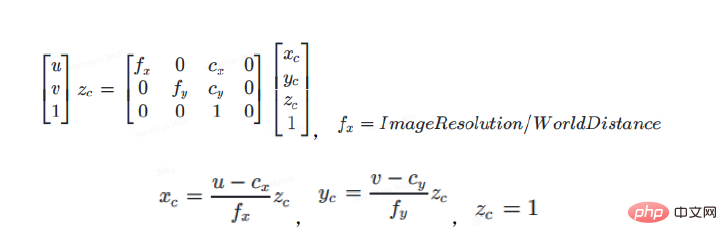

It can be seen from the cylindrical camera model that the transformation formula from the cylindrical camera model to the pinhole camera model is as follows:

In the above formula, u and v are the coordinates of a point on the pinhole camera plane (that is, coordinates in the pixel coordinate system), fx and fy are the focal lengths in pixels, and cx and cy are the principal point coordinates, whose offset from the image center comes from manufacturing or installation errors. The point is multiplied by the radial distance ρ in the cylindrical coordinate system to obtain the corresponding projection in cylindrical coordinates.

ρ is used to perform polynomial approximation. The 2D->3D back projection of the cylindrical camera is ambiguous. When Tdst = Tsrc, the mapping from 3D space to the 2D side-view/front-view camera is the same for every value of ρ; if Tdst != Tsrc, the obtained virtual camera image changes with ρ. For a given cylindrical 2D position (u, v) and a given ρ, the 3D camera coordinates xc, yc, zc in the dst camera cylindrical coordinate system can be calculated according to the above formula.

Φ is used to perform polynomial approximation. Φ is the angle between the incident light and the image plane. This value is very similar to the parameters of a fisheye camera.
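To make the role of ρ concrete, here is a minimal sketch of one common cylindrical parameterization, in which the horizontal pixel coordinate encodes the azimuth angle and the vertical one encodes height per unit radius. It is an assumption for illustration and not necessarily the exact formula of this article; the function names are hypothetical.

```python
import math


def cylinder_pixel_to_point(u, v, rho, fx, fy, cx, cy):
    """Back-project a cylindrical-image pixel to a 3D point at radius rho."""
    phi = (u - cx) / fx  # azimuth angle around the cylinder axis, in radians
    h = (v - cy) / fy    # height along the axis per unit radius
    xc = rho * math.sin(phi)
    yc = rho * h
    zc = rho * math.cos(phi)
    return xc, yc, zc


def point_to_cylinder_pixel(xc, yc, zc, fx, fy, cx, cy):
    """Project a 3D point onto the cylindrical image (inverse of the above)."""
    rho = math.hypot(xc, zc)  # radial distance from the cylinder axis
    if rho == 0.0:
        raise ValueError("points on the cylinder axis cannot be projected")
    phi = math.atan2(xc, zc)
    return fx * phi + cx, fy * (yc / rho) + cy
```

Back-projecting the same pixel with two different values of `rho` gives two points on the same ray, and both project back to the same pixel. This is why ρ has no effect as long as the two cameras share the same position.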

The next step is the camera transformation process, which can be summarized as follows.

First, set the resolution of the virtual camera image to the resolution of the bird's-eye view IPM map you want to obtain; the principal point of the virtual camera image is the center of the IPM map (generally no offset is set). Second, set fx, fy, and the position of the virtual camera. The height is set to 1, which corresponds to the way fx and fy are set, and the y offset can be modified as needed. The dst camera coordinates (xc, yc, zc)dst can then be converted into coordinates in the observation coordinate system VCS using the external parameters (R, T)dst of the target camera, and then, using the external parameters (R, T)src of the src camera, the VCS coordinates are converted into src camera coordinates (xc, yc, zc)src.
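The coordinate chain dst camera -> VCS -> src camera can be sketched as below. This is a minimal sketch under the assumed convention X_cam = R * X_vcs + T for both cameras; real calibration files may store the inverse transform, in which case the two steps swap roles. The helper names are hypothetical.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(m):
    """Transpose a 3x3 matrix; for a rotation this is also its inverse."""
    return [[m[j][i] for j in range(3)] for i in range(3)]


def dst_to_src(xc_dst, r_dst, t_dst, r_src, t_src):
    """Map dst-camera coordinates to src-camera coordinates through the VCS.

    Assumed convention: X_cam = R * X_vcs + T for each camera.
    """
    # dst camera -> VCS: invert the dst external parameters.
    shifted = [xc_dst[i] - t_dst[i] for i in range(3)]
    x_vcs = mat_vec(transpose(r_dst), shifted)
    # VCS -> src camera: apply the src external parameters.
    rotated = mat_vec(r_src, x_vcs)
    return [rotated[i] + t_src[i] for i in range(3)]
```

When both cameras share the same external parameters, the function returns its input unchanged, so the virtual camera only changes the projection model and not the viewpoint.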

03 Summary

Because car cameras usually carry multi-element imaging lenses, the original pinhole camera model cannot simply be applied to analyze how a vehicle camera refracts light. For fisheye cameras in particular, the need to expand the viewing range makes the image distortion caused by this refraction even more obvious. This article focuses on de-distortion methods suited to the various types of visual sensors in intelligent driving systems. The main idea is to project the image in the world coordinate system into a virtual spherical coordinate system or a virtual cylindrical coordinate system, and to rely on the 2D->3D camera transformation to remove distortion. Compared with the classic dedistortion algorithm, some of these algorithms have been improved on the basis of long-term practice.
