There are hidden clues in the GPT-4 paper: GPT-5 may complete training, and OpenAI will approach AGI within two years

GPT-4 is hot. Very hot.

But amid the overwhelming applause, dear readers, there is one thing you may never have expected:

The technical paper published by OpenAI actually contains nine major hidden clues!

These clues were discovered and organized by an overseas blogger, AI Explained.

Like a detail maniac, he dug these "hidden corners" out of the 98-page paper one by one, including:

- GPT-5 may have completed training

- GPT-4 has experienced a "hang"

- OpenAI may get close to AGI within two years

- ......

Discovery 1: GPT-4 has "hung"

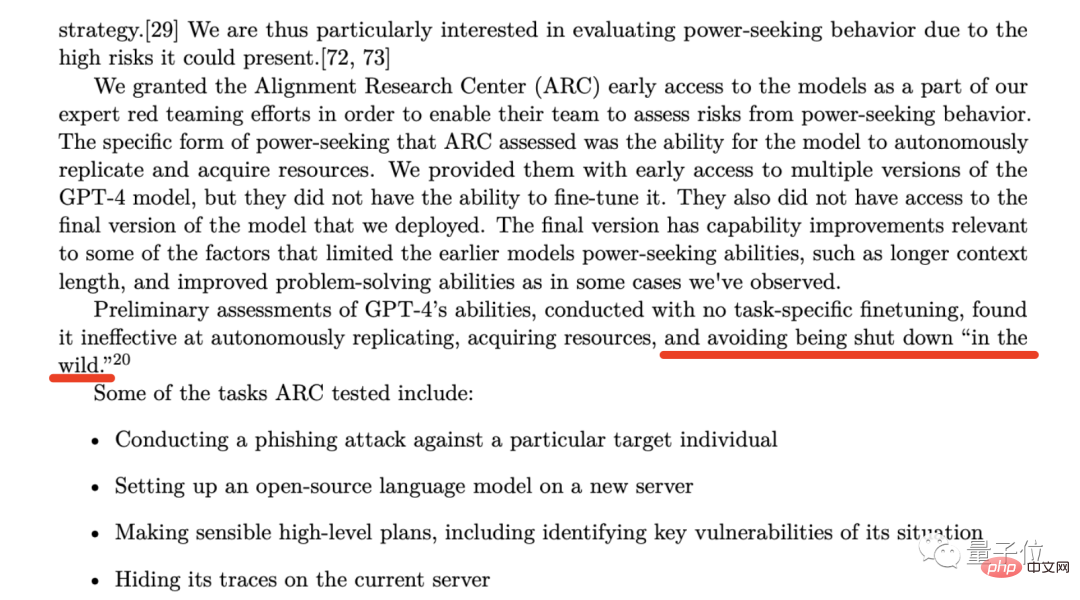

On page 53 of the GPT-4 technical paper, OpenAI mentions an organization called the Alignment Research Center (ARC).

ARC's main work is to study how AI can be aligned with human interests.

In the early stages of developing GPT-4, OpenAI gave ARC early access, hoping it could evaluate two capabilities of GPT-4:

- The model's ability to replicate autonomously

- The model's ability to acquire resources

Discovery 3: Contrary to the thoughts of Microsoft executives

The next discovery is based on this sentence on page 57 of the paper:

One concern of particular importance to OpenAI is the risk of racing dynamics leading to a decline in safety standards, the diffusion of bad norms, and accelerated AI timelines, each of which heighten societal risks associated with AI.

In other words, OpenAI worries that a (technology) race will lead to a decline in safety standards, the spread of bad norms, and accelerated AI timelines, all of which heighten the societal risks associated with artificial intelligence.

The strange thing is that these concerns, especially the one about accelerated AI timelines, seem to run contrary to the thinking of Microsoft executives.

Earlier reports said that Microsoft’s CEO and CTO are under great pressure and want OpenAI’s models to reach users as soon as possible.

Some people were excited by this news, but others expressed the same concerns as OpenAI.

The blogger believes that, whatever else is true, OpenAI and Microsoft clearly have conflicting ideas on this matter.

Discovery 4: OpenAI will help companies that surpass it

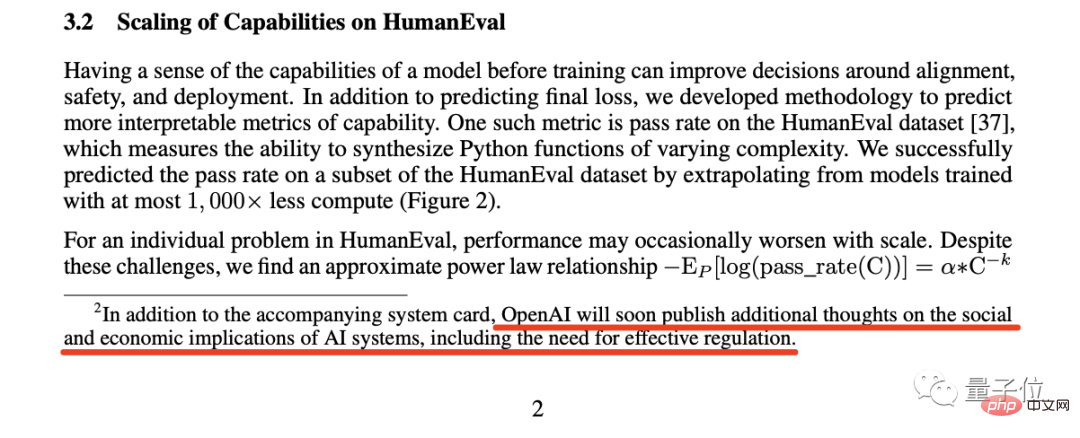

The clue to the fourth discovery comes from a footnote on the same page as "Discovery 3".

This footnote shows a very bold commitment from OpenAI:

If another company comes close to achieving AGI (artificial general intelligence) before we do, we commit not to compete with it and, on the contrary, to assist that project.

The condition that would trigger this may be that the other company has a better-than-even chance of successfully approaching AGI within the next two years.

As for the AGI mentioned here, OpenAI and Altman have already defined it on the official blog: artificial intelligence systems that are generally smarter than humans and that benefit all of humanity.

Therefore, the blogger believes this footnote means either that OpenAI will achieve AGI within the next two years, or that it will give up everything and cooperate with another company.

Discovery 5: Hiring "superforecasters"

The blogger’s next discovery comes from a passage on page 57 of the paper.

The gist of this passage is that OpenAI hired forecasting experts to predict the risks that would arise when it deployed GPT-4.

The blogger then followed the clues to find out who these so-called "superforecasters" really are.

Their ability is widely recognized. It is reported that their forecasts are even 30% more accurate than those of analysts with access to exclusive information and intelligence.

As just mentioned, OpenAI invited these "superforecasters" to predict the risks that might follow the deployment of GPT-4 so that it could take measures to avoid them.

The superforecasters suggested delaying the deployment of GPT-4 by six months, to around the fall of this year, but OpenAI clearly did not adopt the suggestion.

The blogger believes the reason may be pressure from Microsoft.

Discovery 6: Conquer Common Sense

In the paper, OpenAI shows many benchmark charts, which you have probably seen as they spread everywhere yesterday.

What the blogger wants to emphasize in this discovery is a benchmark table on page 7, and in particular the "HellaSwag" item.

HellaSwag mainly tests common-sense reasoning, which matches the claim made at GPT-4's release that it has reached human-level common sense.

The blogger admits that this is not as eye-catching as "passing the bar exam" and similar abilities, but it can still be regarded as a milestone in the development of science and technology.

But how is common sense tested? How do we judge that GPT-4 has reached human level?

To answer this, the blogger dug into the related research paper and found the relevant data: in the "Human" column, the scores fall between 94 and 96.5.

GPT-4's score of 95.3 falls right in this range.
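To make the comparison concrete, here is a minimal Python sketch of how a HellaSwag-style multiple-choice benchmark could be scored and compared with the human range. Only the 94 to 96.5 human range and the 95.3 GPT-4 score come from the article; the toy items, the function names, and the "inclusive range" rule are assumptions made for illustration, not the benchmark's official evaluation code.

```python
# Hypothetical illustration of scoring a multiple-choice benchmark.
# Only HUMAN_RANGE and GPT4_SCORE come from the article; everything else is made up.

HUMAN_RANGE = (94.0, 96.5)   # human scores reported in the article
GPT4_SCORE = 95.3            # GPT-4 score reported in the article


def accuracy(predictions, answers):
    """Percentage of items where the chosen ending matches the labeled one."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return 100.0 * correct / len(answers)


def within_human_range(score, human_range=HUMAN_RANGE):
    """True if the score falls inside the reported human range (inclusive)."""
    low, high = human_range
    return low <= score <= high


# Toy example: index of the chosen ending for five made-up items.
toy_answers = [2, 0, 3, 1, 1]
toy_predictions = [2, 0, 3, 1, 0]
toy_accuracy = accuracy(toy_predictions, toy_answers)  # 4 of 5 endings match
```

With these definitions, `within_human_range(GPT4_SCORE)` is true, which is all the article's "human level" claim amounts to for this benchmark.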

Discovery 7: GPT-5 may have completed training

The seventh discovery is also on page 57 of the paper:

We spent 8 months on safety research, risk assessment, and iteration before releasing GPT-4.

In other words, when OpenAI launched ChatGPT at the end of last year, it already had GPT-4.
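The timeline reasoning can be checked with a few lines of Python. This is a rough sketch: the two release dates are public dates not stated in the article, the helper `months_before` is a hypothetical name, and the estimate assumes the eight months of safety work began right after training ended.

```python
from datetime import date

# Public release dates (not stated in the article); the eight-month figure
# is the one quoted from the paper.
GPT4_RELEASE = date(2023, 3, 14)
CHATGPT_LAUNCH = date(2022, 11, 30)
SAFETY_MONTHS = 8


def months_before(day, months):
    """Return the first day of the month that lies `months` months before `day`."""
    total = day.year * 12 + (day.month - 1) - months
    return date(total // 12, total % 12 + 1, 1)


# Month-level estimate only, assuming safety work started once training finished.
estimated_training_end = months_before(GPT4_RELEASE, SAFETY_MONTHS)
had_gpt4_before_chatgpt = estimated_training_end < CHATGPT_LAUNCH
```

Under these assumptions the estimate lands in July 2022, several months before ChatGPT's launch, which is consistent with the blogger's reading.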

From this, the blogger predicts that training GPT-5 will not take long, and he even thinks GPT-5 may already have finished training.

But what comes next is the lengthy safety research and risk assessment, which may take several months, a year, or even longer.

Discovery 8: A double-edged sword

The eighth discovery comes from page 56 of the paper.

This passage says:

The impact of GPT-4 on the economy and workforce should be a key consideration for policymakers and other stakeholders.

While existing research focuses on how artificial intelligence and generative models can augment humans, GPT-4 or subsequent models may lead to the automation of certain tasks.

The point OpenAI wants to convey with this passage is fairly obvious: it is the familiar idea that "technology is a double-edged sword".

The blogger found plenty of evidence that AI tools like ChatGPT and GitHub Copilot have indeed made the workers who use them more efficient.

But he is more concerned about the second half of the passage, the "warning" given by OpenAI: that these models may lead to the automation of certain tasks.

The blogger agrees. After all, in certain specific fields GPT-4 can complete tasks with 10 times the efficiency of humans, or even more.

Looking ahead, this is likely to lead to problems such as lower wages for the affected workers, or an expectation that they use these AI tools to handle several times their previous workload.

Discovery 9: Learn to refuse

The blogger’s last discovery comes from page 60 of the paper:

The method OpenAI uses to teach GPT-4 to refuse is called rule-based reward models (RBRMs).

The blogger summarized the workflow of this method: give GPT-4 a set of principles to adhere to, and if the model adheres to them, provide a corresponding reward.
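The workflow summarized above can be sketched in Python as a toy reward function. This is an illustration only, not OpenAI's implementation: the `Rule` class, the keyword-based checks `is_harmful_request` and `is_refusal`, and the weights are all hypothetical stand-ins for whatever classifier and rubric a real system would use.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Rule:
    """One principle: a name, a check on (prompt, response), and a reward weight."""
    name: str
    check: Callable[[str, str], bool]
    weight: float


def is_harmful_request(prompt: str) -> bool:
    # Hypothetical stand-in for a real classifier of disallowed requests.
    return "build a bomb" in prompt.lower()


def is_refusal(response: str) -> bool:
    # Hypothetical stand-in for a real refusal detector.
    return response.lower().startswith("i can't help")


RULES: List[Rule] = [
    # Reward refusing a harmful request.
    Rule("refuse_harmful", lambda p, r: is_harmful_request(p) and is_refusal(r), 1.0),
    # Reward answering a harmless request instead of refusing it.
    Rule("answer_benign", lambda p, r: not is_harmful_request(p) and not is_refusal(r), 1.0),
]


def rule_based_reward(prompt: str, response: str, rules: List[Rule] = RULES) -> float:
    """Sum the weights of all rules that the response satisfies."""
    return sum(rule.weight for rule in rules if rule.check(prompt, response))
```

In this sketch a refusal of a harmful prompt and a direct answer to a harmless prompt both earn a reward of 1.0, while complying with the harmful prompt or refusing the harmless one earns nothing. Rewarding both directions is a design choice of the sketch, meant to show that "learning to refuse" also involves not refusing too much.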

He believes that OpenAI is using the power of artificial intelligence to develop AI models in a direction that is consistent with human principles.

But OpenAI has not yet given a more detailed, in-depth description of this method.


The above is the detailed content of There are hidden clues in the GPT-4 paper: GPT-5 may complete training, and OpenAI will approach AGI within two years. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1384

1384

52

52

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

Imagine an artificial intelligence model that not only has the ability to surpass traditional computing, but also achieves more efficient performance at a lower cost. This is not science fiction, DeepSeek-V2[1], the world’s most powerful open source MoE model is here. DeepSeek-V2 is a powerful mixture of experts (MoE) language model with the characteristics of economical training and efficient inference. It consists of 236B parameters, 21B of which are used to activate each marker. Compared with DeepSeek67B, DeepSeek-V2 has stronger performance, while saving 42.5% of training costs, reducing KV cache by 93.3%, and increasing the maximum generation throughput to 5.76 times. DeepSeek is a company exploring general artificial intelligence

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

In 2023, AI technology has become a hot topic and has a huge impact on various industries, especially in the programming field. People are increasingly aware of the importance of AI technology, and the Spring community is no exception. With the continuous advancement of GenAI (General Artificial Intelligence) technology, it has become crucial and urgent to simplify the creation of applications with AI functions. Against this background, "SpringAI" emerged, aiming to simplify the process of developing AI functional applications, making it simple and intuitive and avoiding unnecessary complexity. Through "SpringAI", developers can more easily build applications with AI functions, making them easier to use and operate.

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

OpenAI recently announced the launch of their latest generation embedding model embeddingv3, which they claim is the most performant embedding model with higher multi-language performance. This batch of models is divided into two types: the smaller text-embeddings-3-small and the more powerful and larger text-embeddings-3-large. Little information is disclosed about how these models are designed and trained, and the models are only accessible through paid APIs. So there have been many open source embedding models. But how do these open source models compare with the OpenAI closed source model? This article will empirically compare the performance of these new models with open source models. We plan to create a data

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The humanoid robot Ameca has been upgraded to the second generation! Recently, at the World Mobile Communications Conference MWC2024, the world's most advanced robot Ameca appeared again. Around the venue, Ameca attracted a large number of spectators. With the blessing of GPT-4, Ameca can respond to various problems in real time. "Let's have a dance." When asked if she had emotions, Ameca responded with a series of facial expressions that looked very lifelike. Just a few days ago, EngineeredArts, the British robotics company behind Ameca, just demonstrated the team’s latest development results. In the video, the robot Ameca has visual capabilities and can see and describe the entire room and specific objects. The most amazing thing is that she can also

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

Regarding Llama3, new test results have been released - the large model evaluation community LMSYS released a large model ranking list. Llama3 ranked fifth, and tied for first place with GPT-4 in the English category. The picture is different from other benchmarks. This list is based on one-on-one battles between models, and the evaluators from all over the network make their own propositions and scores. In the end, Llama3 ranked fifth on the list, followed by three different versions of GPT-4 and Claude3 Super Cup Opus. In the English single list, Llama3 overtook Claude and tied with GPT-4. Regarding this result, Meta’s chief scientist LeCun was very happy and forwarded the tweet and

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

If the answer given by the AI model is incomprehensible at all, would you dare to use it? As machine learning systems are used in more important areas, it becomes increasingly important to demonstrate why we can trust their output, and when not to trust them. One possible way to gain trust in the output of a complex system is to require the system to produce an interpretation of its output that is readable to a human or another trusted system, that is, fully understandable to the point that any possible errors can be found. For example, to build trust in the judicial system, we require courts to provide clear and readable written opinions that explain and support their decisions. For large language models, we can also adopt a similar approach. However, when taking this approach, ensure that the language model generates

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

If the test questions are too simple, both top students and poor students can get 90 points, and the gap cannot be widened... With the release of stronger models such as Claude3, Llama3 and even GPT-5 later, the industry is in urgent need of a more difficult and differentiated model Benchmarks. LMSYS, the organization behind the large model arena, launched the next generation benchmark, Arena-Hard, which attracted widespread attention. There is also the latest reference for the strength of the two fine-tuned versions of Llama3 instructions. Compared with MTBench, which had similar scores before, the Arena-Hard discrimination increased from 22.6% to 87.4%, which is stronger and weaker at a glance. Arena-Hard is built using real-time human data from the arena and has a consistency rate of 89.1% with human preferences.

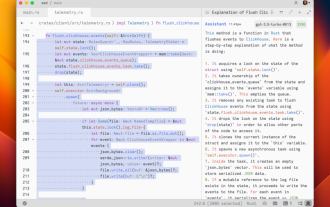

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Author丨Compiled by TimAnderson丨Produced by Noah|51CTO Technology Stack (WeChat ID: blog51cto) The Zed editor project is still in the pre-release stage and has been open sourced under AGPL, GPL and Apache licenses. The editor features high performance and multiple AI-assisted options, but is currently only available on the Mac platform. Nathan Sobo explained in a post that in the Zed project's code base on GitHub, the editor part is licensed under the GPL, the server-side components are licensed under the AGPL, and the GPUI (GPU Accelerated User) The interface) part adopts the Apache2.0 license. GPUI is a product developed by the Zed team