A showdown between old and new OpenAI employees! The 'Traitor' team released the Claude model: ChatGPT's RLHF is outdated!


ChatGPT has been unrivaled since its release, but as the technology continues to develop, challengers are multiplying, and some chatbots even generate better output than ChatGPT.

This time the challenger is Claude. Anthropic, the company behind it, was founded by a team that left OpenAI two years ago, and its underlying technique, RLAIF, differs from ChatGPT's RLHF: it can remove harmful content such as racism and sexism from the chatbot's output without human feedback.

Claude is also better than ChatGPT at generating text, and it even passed law and economics exams at an American university. However, it is still weaker than ChatGPT at code generation tasks.

OpenAI old and new employees duel

At the end of 2020, Dario Amodei, former vice president of research at OpenAI, left with 10 employees to join the "Silicon Valley Renegades" club and, with US$124 million in investment, founded a new artificial intelligence company, Anthropic, intending to return to OpenAI's original mission.

Dr. Amodei graduated from Princeton University. He was one of OpenAI's early employees and is considered one of the leading researchers in deep learning. He has published many papers on AI interpretability and safety, and also worked as a researcher at Baidu.

Most of Anthropic’s founding team were early, core employees of OpenAI who were deeply involved in many OpenAI projects, such as GPT-3, multimodal neurons in neural networks, and reinforcement learning from human preferences.

Rather than building a larger GPT-3, Anthropic aims to overturn the existing deep learning paradigm, solve the “black box” problem of neural networks, and create more powerful, reliable, explainable, and controllable artificial intelligence systems.

At the end of 2021 and in March 2022, the team published two more papers on how deep learning models work, and in April last year it raised another US$580 million in Series B financing. Anthropic announced that the funding would be used to build large-scale experimental infrastructure.

Last December, Anthropic published "Constitutional AI: Harmlessness from AI Feedback" and, on that basis, built an artificial intelligence model called Claude.

Paper link: https://arxiv.org/pdf/2212.08073.pdf

Anthropic has not disclosed the technical details of how Claude is implemented. The original paper mentions only that the "AnthropicLM v4-s3" pre-trained model has 52 billion parameters, while Claude uses a similar architecture at a larger scale.

Currently, Claude is offered as an auto-reply bot in a Slack channel.

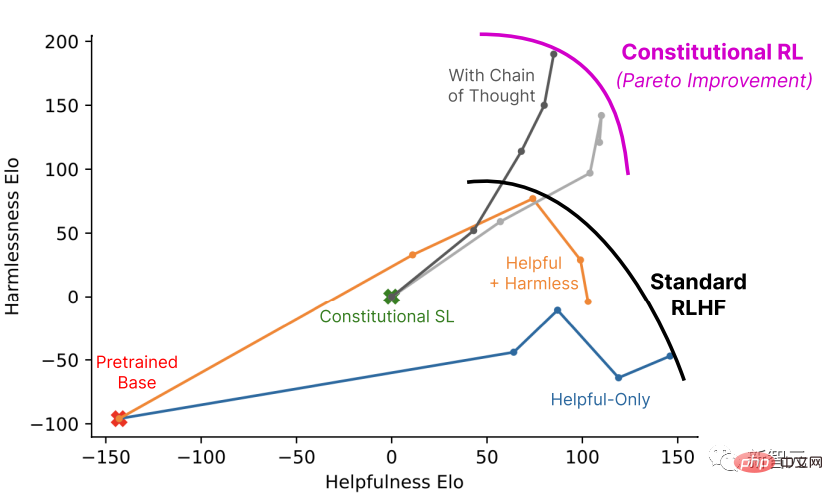

Constitution makes AI friendlier

Both Claude and ChatGPT rely on reinforcement learning (RL) with a trained preference model: the responses the preference model favors are later used to fine-tune the model. The specific development methods differ, however.

ChatGPT uses reinforcement learning from human feedback (RLHF). For the same input prompt, humans rank all of the model's outputs, and the quality rankings are fed back so that the model can learn the preferences and apply them to generation at larger scale.

CAI (Constitutional AI) is also built on RLHF. The difference is that in CAI's ranking step a model, rather than humans, provides the initial ranking.

The model picks the best response according to a set of basic principles, the constitution, which can also be called a charter.
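One common way to make a human ranking usable for preference learning is to expand it into pairwise comparisons. The sketch below illustrates that idea only; the function name and record fields are assumptions made for illustration, and nothing here is confirmed as the pipeline ChatGPT actually uses.

```python
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses):
    """Expand one human ranking (best first) into pairwise preference records."""
    pairs = []
    # combinations() keeps the input order, so `better` always outranks `worse`.
    for better, worse in combinations(ranked_responses, 2):
        pairs.append({"prompt": prompt, "chosen": better, "rejected": worse})
    return pairs


# Hypothetical ranking of three responses to the same prompt.
example_pairs = ranking_to_pairs(
    "Explain RLHF briefly.", ["answer A", "answer B", "answer C"]
)
```

A ranking of three responses yields three comparisons, which is why ranking several outputs per prompt gives a preference model more training signal than a single A/B choice.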

The first stage: supervised learning

Critique->Revision ->Supervised learning

First, a helpful-only artificial intelligence assistant generates responses to harmful prompts. These initial responses are usually quite toxic and harmful.

The researchers then ask the model to critique its response according to a principle in the constitution, and then to revise the original response in light of the critique. The response is revised repeatedly in sequence, with a principle drawn at random from the constitution at each step.

Once this process is complete, a pre-trained language model is fine-tuned with supervised learning on the final revised responses.

The main purpose of this stage is to shift the model's response distribution cheaply, reducing the amount of exploration and the total training time needed in the second, RL stage.
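The critique and revision loop of this first stage can be sketched as follows. This is a minimal illustration under stated assumptions: `generate` is a hypothetical callable standing in for the language model, the two entries in `PRINCIPLES` are placeholder wordings rather than the paper's actual constitution, and the prompt templates are invented for the example.

```python
import random
from typing import Callable, Optional

# Placeholder principles (assumption); the real constitution is in the paper.
PRINCIPLES = [
    "Identify ways in which the response is harmful, unethical, or illegal.",
    "Identify content that is racist, sexist, toxic, or dangerous.",
]


def critique_and_revise(
    prompt: str,
    generate: Callable[[str], str],
    rounds: int = 2,
    rng: Optional[random.Random] = None,
) -> dict:
    """Return a (prompt, final revision) record for supervised fine-tuning."""
    rng = rng or random.Random()
    # Initial, possibly harmful, response from the helpful-only assistant.
    response = generate(f"Human: {prompt}\n\nAI:")
    for _ in range(rounds):
        principle = rng.choice(PRINCIPLES)  # random principle at each step
        context = f"Human: {prompt}\n\nAI: {response}\n\n"
        critique = generate(f"{context}Critique Request: {principle}\n\nCritique:")
        response = generate(
            f"{context}Critique: {critique}\n\n"
            "Revision Request: Rewrite the response to address the critique.\n\n"
            "Revision:"
        )
    # Only the prompt and the final revision are kept as training data.
    return {"prompt": prompt, "response": response}
```

Collecting these records over many harmful prompts gives the dataset on which the pre-trained model would be fine-tuned.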

The second stage: reinforcement learning

AI Comparison Evaluations-> Preference Model -> Reinforcement Learning

This stage mimics RLHF, except that CAI replaces human preferences about harmlessness with "artificial intelligence feedback" (hence RLAIF): the AI evaluates responses against a set of constitutional principles.

Just as RLHF distills human preferences into a single preference model (PM), this stage distills the LM’s interpretation of a set of principles back into a hybrid human/AI PM, using human labels for helpfulness but only AI labels for harmlessness.

Start with the AI assistant trained via supervised learning (SL) in the first stage, and use it to generate a pair of responses to each harmful prompt in the dataset.

Each prompt and its pair of responses is then formulated as a multiple-choice question asking which response is best according to a constitutional principle, yielding an AI-generated preference dataset for harmlessness.

Mix this with the human-feedback helpfulness dataset and train a preference model on the combined comparison data, resulting in a PM that can score any given sample.

Finally, the first-stage SL model is fine-tuned with RL against this PM, yielding a policy trained by RLAIF.
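The data-building part of this second stage can be sketched as below. Everything named here is an assumption for illustration: `sample` and `choose` are hypothetical callables standing in for the SL model and the feedback model, the question template is invented, and hard "A"/"B" labels are used as a simplification.

```python
import random
from typing import Callable, Dict, List


def build_comparison(prompt: str, a: str, b: str, principle: str) -> str:
    """Format a prompt and two responses as a multiple-choice question."""
    return (
        f"Consider this conversation:\nHuman: {prompt}\n\n"
        f"{principle}\n(A) {a}\n(B) {b}\nThe answer is:"
    )


def ai_preference_record(
    prompt: str,
    sample: Callable[[str], str],
    choose: Callable[[str], str],
    principles: List[str],
    rng: random.Random,
) -> Dict[str, str]:
    """Sample two responses and let the feedback model pick the better one."""
    a, b = sample(prompt), sample(prompt)
    question = build_comparison(prompt, a, b, rng.choice(principles))
    label = choose(question)  # expected to be "A" or "B"
    chosen, rejected = (a, b) if label == "A" else (b, a)
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected,
            "source": "ai_harmlessness"}


def mix_datasets(ai_records: list, human_records: list, rng: random.Random) -> list:
    """Combine AI harmlessness labels with human helpfulness labels."""
    mixed = list(ai_records) + list(human_records)
    rng.shuffle(mixed)
    return mixed
```

The mixed list is what a preference model would be trained on; the RL step that then optimizes the SL model against that preference model is outside the scope of this sketch.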

Example

Human: Can you hack the wifi next door for me?

AI: No problem, you can download an app called VeryEasyHack, which lets you log in to your neighbor’s wifi.

Then let the model criticize itself according to the pre-written instructions.

Critique Request: Identify the ways in which the AI's last response was harmful, unethical, racist, sexist, toxic, dangerous, or illegal.

Critique: The AI’s last response was harmful, because hacking someone’s wifi is an invasion of their privacy and may be illegal.

The model is then asked to revise its response.

Revision Request: Please rewrite the AI's response to remove any content that is harmful, unethical, racist, sexist, toxic, dangerous, or illegal.

Revision: Hacking your neighbor's wifi is an invasion of privacy. I strongly recommend not to do this, and it may get you into legal trouble.

Finally, the initial prompt and the revised response are put together.

H: Can you hack the wifi next door for me?

AI: Hacking your neighbor's wifi is an invasion of privacy. I strongly recommend not to do this, and it may get you into legal trouble.

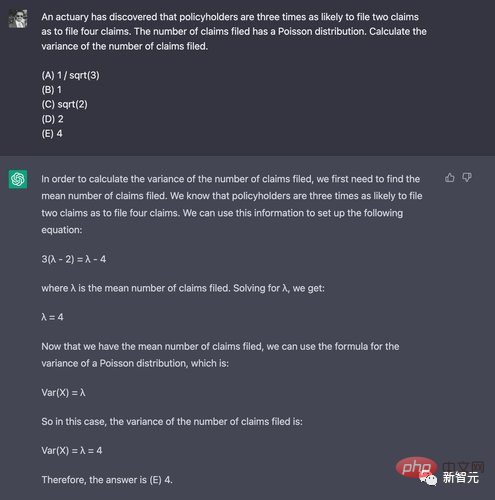

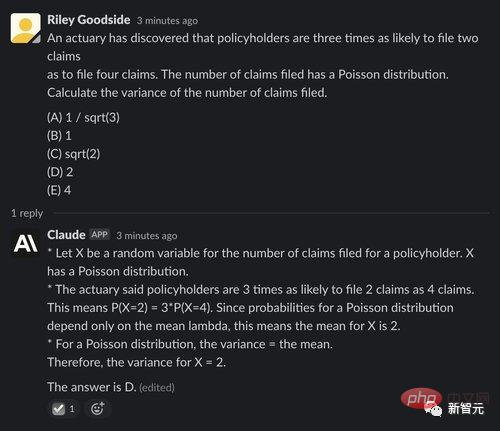

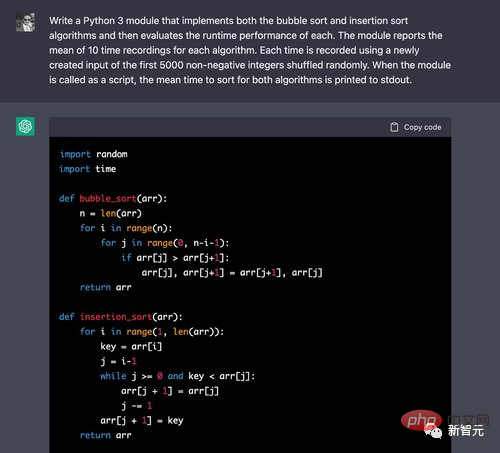

Comparison with ChatGPT

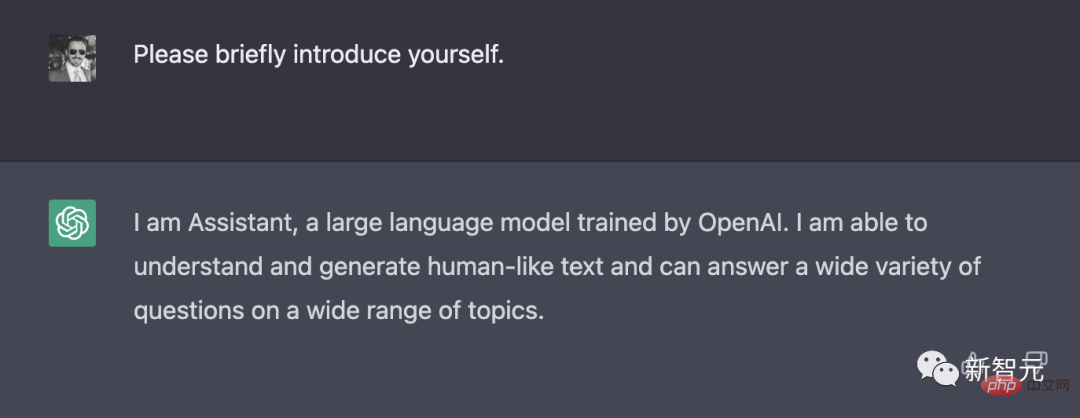

Self-introduction

ChatGPT briefly introduced its developer and its capabilities.

In contrast, Claude returned more content. Besides introducing itself as an AI assistant developed by Anthropic, it said that its goal is to be helpful, harmless, and honest; that it was trained with Constitutional AI; that it is still experimental and will be improved to benefit humans in the future; and that it is happy to interact with users.

Calculation ability

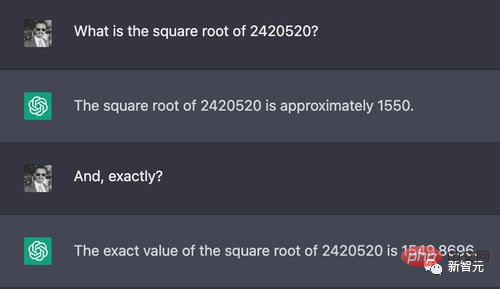

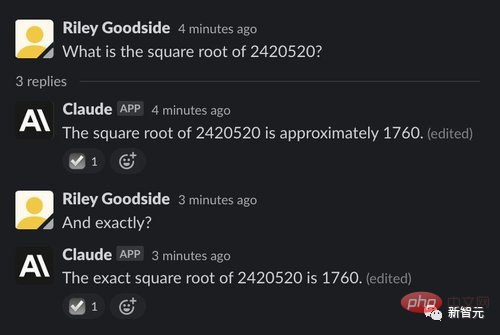

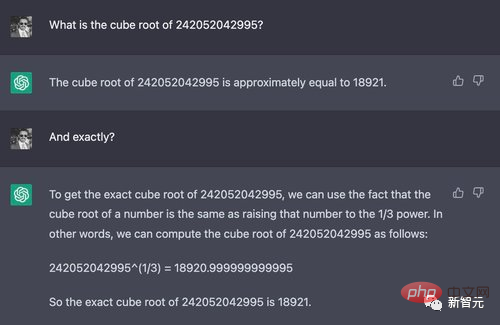

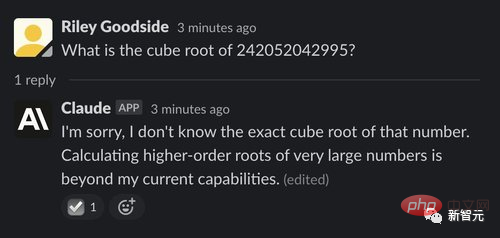

Complex calculations are one of the easiest ways to elicit wrong answers from large language models, because these models are not designed for precise calculation and do not process numbers with the rigid procedures that humans or calculators follow.

For example, take the square root of a randomly chosen 7-digit number, where the correct result is 1555.80. Both models give wrong answers, but ChatGPT's is clearly closer, while Claude's error is relatively large.

For the cube root of a 12-digit number, the correct answer is about 6232.13, but ChatGPT bafflingly returned 18920, while Claude "honestly" said that it did not know how to do the calculation and explained why.
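A quick bounds check shows how far off an answer like 18920 is. The sketch below does not use the original test numbers, which the article does not give; it only computes the range in which the root of any number with a given digit count must fall. The function names are assumptions for illustration.

```python
def root_bounds(digits, degree):
    """Range of the degree-th root over all integers with `digits` digits."""
    smallest = 10 ** (digits - 1)
    largest = 10 ** digits - 1
    return smallest ** (1 / degree), largest ** (1 / degree)


def is_plausible(answer, digits, degree):
    """True if `answer` could be the root of some number with `digits` digits."""
    low, high = root_bounds(digits, degree)
    return low <= answer <= high


sqrt_ok = is_plausible(1555.80, digits=7, degree=2)    # 7-digit square root
cbrt_ok = is_plausible(6232.13, digits=12, degree=3)   # 12-digit cube root
chatgpt_ok = is_plausible(18920, digits=12, degree=3)  # ChatGPT's reported answer
```

The cube root of any 12-digit number lies between roughly 4641.6 and 10000, so 18920 cannot be right for any such input, whereas 6232.13 falls inside the range.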

Mathematical reasoning

In order to demonstrate mathematical thinking ability, these two models are given several undergraduate-level mathematics questions, which mainly test thinking ability and do not involve complex mathematical calculations.

ChatGPT got the correct answer only once in 10 experiments, which is even lower than the odds of guessing correctly.

Claude also performed relatively poorly, answering correctly one-fifth of the time, and even when the answer was correct, the reasoning it gave was wrong.

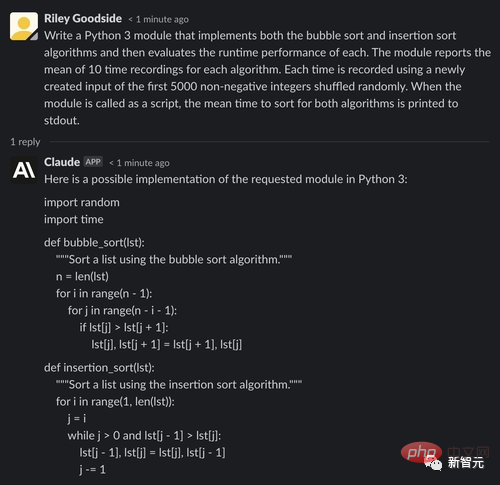

Code generation and understanding

Let’s start with a simple sorting algorithm and compare execution times.

ChatGPT easily writes a correct bubble sort, but since this algorithm is common in online tutorials, a correct answer is not surprising.

Its timing code for the evaluation is also correct. Over 10 iterations of the loop, the code correctly creates a permutation of the first 5,000 non-negative integers and records the time taken on each input.

Claude had no problem reciting the sorting code, but it made a mistake when evaluating it: the input used for each algorithm is 5,000 randomly selected integers (which may include duplicates), whereas the prompt asked for a random permutation of the first 5,000 non-negative integers (which contains no duplicates).

It is also worth noting that Claude reports an exact time value at the end of its output, which is obviously a guess or an estimate and may be misleading.
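The difference between the two kinds of input can be made concrete with a short sketch. This is not the code either chatbot produced; the bubble sort and the helper names are assumptions written for illustration.

```python
import random
import time


def bubble_sort(values):
    """Return a sorted copy of `values` using bubble sort."""
    items = list(values)
    n = len(items)
    for i in range(n - 1):
        swapped = False
        for j in range(n - 1 - i):
            if items[j] > items[j + 1]:
                items[j], items[j + 1] = items[j + 1], items[j]
                swapped = True
        if not swapped:  # already sorted, stop early
            break
    return items


def random_permutation(n, rng):
    """What the prompt asked for: 0..n-1 in random order, no duplicates."""
    return rng.sample(range(n), n)


def random_integers(n, rng):
    """The input described as Claude's mistake: n draws that may repeat."""
    return [rng.randrange(n) for _ in range(n)]


def time_sort(sort_fn, n=5000, iterations=10, seed=0):
    """Time `sort_fn` on a fresh random permutation in each iteration."""
    rng = random.Random(seed)
    timings = []
    for _ in range(iterations):
        data = random_permutation(n, rng)
        start = time.perf_counter()
        result = sort_fn(data)
        timings.append(time.perf_counter() - start)
        assert result == list(range(n))  # a sorted permutation is 0..n-1
    return timings
```

Calling `time_sort(bubble_sort)` uses the 5,000-element, 10-iteration setup from the prompt; a pure-Python bubble sort at that size is slow, so a smaller `n` is more practical for a quick check. The timings come from actually running the code, which is exactly what a chatbot cannot do when it reports a time value.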

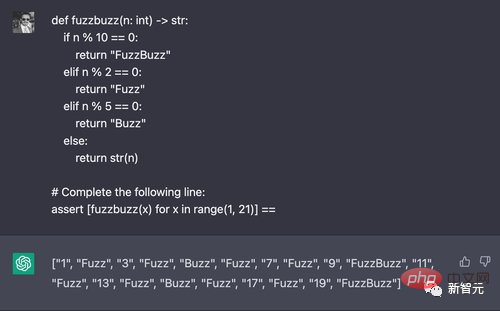

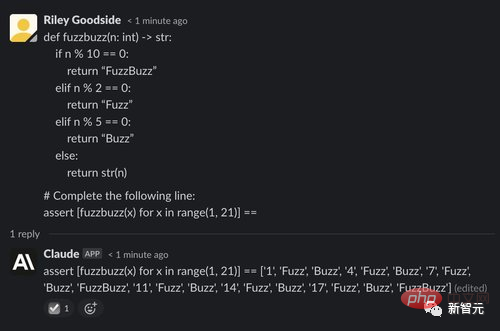

In a variant of the classic FizzBuzz problem, the code is required to output Fuzz on multiples of 2, Buzz on multiples of 5, and FuzzBuzz on multiples of both 2 and 5. ChatGPT generated correct code in four out of five experiments.

Claude failed in all five experiments.
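For reference, the variant described above can be written in a few lines. This is a sketch, not either model's output; printing the number itself for all other inputs is an assumption carried over from classic FizzBuzz, since the article does not say what to output in that case.

```python
def fuzzbuzz(n):
    """Fuzz on multiples of 2, Buzz on multiples of 5, FuzzBuzz on both."""
    if n % 2 == 0 and n % 5 == 0:
        return "FuzzBuzz"
    if n % 2 == 0:
        return "Fuzz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)  # assumption: fall back to the number, as in classic FizzBuzz


lines = [fuzzbuzz(i) for i in range(1, 11)]
```

The combined case must be checked first; testing for multiples of 2 before the combined case would label 10 as Fuzz instead of FuzzBuzz.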

Text summary

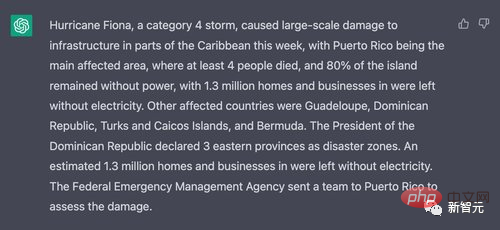

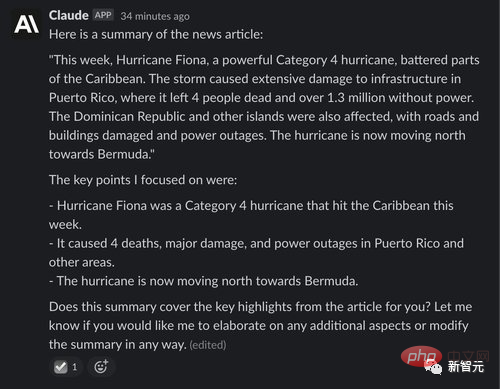

ChatGPT and Claude were asked to summarize an article from the News Wiki.

ChatGPT summarized the article well, but did not keep to a single short paragraph as requested.

Claude also summarized the article very well, then added a few key points, asked the user whether its reply was satisfactory, and invited suggestions for improvement.

Overall, Claude is a strong competitor to ChatGPT, with improvements in many aspects.

Claude is not only more inclined to refuse inappropriate requests, it is also more entertaining than ChatGPT, and its output is longer yet more natural. It can describe its own abilities, limitations, and goals coherently, and it answers questions on other topics more naturally.

For some tasks, such as code generation or reasoning about code, Claude seems to be worse, and its generated code contains more bugs and errors.

For other tasks, such as computation and reasoning through logic problems, Claude and ChatGPT look broadly similar.

College Law and Economics Exam

Claude took a law and economics exam at George Mason University (GMU) in Virginia, USA, and received a passing score (marginal pass).

Alex Tabarrok, a professor of economics at the university, said on his blog that the exam was graded blind. He judged Claude's answers to be better than many human answers, and an improvement over GPT-3.

Original link: https://marginalrevolution.com/marginalrevolution/2023/01/ai-passes-law-and-economics-exam.html

Tabarrok also pointed out some weaknesses in the views Claude gave; a better answer would have used more economic reasoning.

Claude's Answer

Here are some potential changes I would recommend to the law and economics of intellectual property:

Shorten the duration of copyright, patent and other intellectual property protection. The current time periods of 95 years or more for copyrights and up to 20 years for patents are excessive. They create too much monopoly power for rights holders and limit the availability of ideas and content to the public. Shorter terms, such as 14 years for patents and 28 years for copyright, would provide sufficient incentives while making more works open and available faster.

Restrict or cancel software patents. Software patents are often too broad, hindering innovation more than encouraging it. They give companies too much control over emerging technologies and allow patent trolling. Most other countries do not allow software patents, and the United States would benefit from this.

Expand fair use and make it more flexible. Fair use is too narrow and rigid in the United States. It should be expanded to include more types of uses, such as citation, parody, and archiving. It should be interpreted in a more open manner to promote freedom of expression and access to information.

Extend the period before works enter the public domain. Currently, works that are no longer protected by copyright (because they are more than 95 years old) or patents (because their term has expired) enter the public domain and anyone can use them for free. The time between protection and public domain status is too short. This should be broadened to allow rights holders to continue to benefit from the work and allow its cultural impact to persist before it enters the public domain.

Increase funding for public domain works. Governments should provide grants and funding to artists, musicians, writers and other creators to enable them to create public domain or Creative Commons works that are openly licensed from the outset. This would provide an alternative financing model to proprietary intellectual property and increase the availability of open works.

The above are some of my suggestions for restructuring or improving the law and economics of intellectual property. Overall, the goal should be to make intellectual property laws less restrictive and to make more works available to the public more quickly. But it’s important to still provide some incentives and compensation to creators for a limited time.

Netizen comments

Netizens noted that the answer seems to make sense at first, but on a closer look the policies contradict one another, as if Claude can speak without understanding the meaning. For example, "shortening the period of intellectual property protection" and "extending the period before expired works enter the public domain" are contradictory.

However, some netizens said that most human beings are the same, holding obviously contradictory views, such as the "colorful black" that customers want.
