From BERT to ChatGPT, a hundred-page review summarizes the evolution history of pre-trained large models

Every success can be traced back to its origins, and ChatGPT is no exception.

Not long ago, Turing Award winner Yann LeCun became a trending topic because of his harsh assessment of ChatGPT.

In his view, “As far as the underlying technology is concerned, ChatGPT has no special innovation,” nor is it “anything revolutionary.” Many research labs are using the same technology and doing the same work. What’s more, ChatGPT and the GPT-3 model behind it are in many ways assembled from technologies developed over many years by multiple parties, and are the result of decades of contributions by different people. LeCun therefore regards ChatGPT less as a scientific breakthrough than as a solid piece of engineering.

Whether ChatGPT is revolutionary is a controversial topic. But there is no doubt that it builds on many previously accumulated technologies. For example, its core, the Transformer, was proposed by Google a few years ago, and the Transformer was in turn inspired by Bengio's work on the concept of attention. Going back further, the lineage connects to research from even earlier decades.

Of course, the public may not be able to appreciate this step-by-step progress. After all, not everyone reads the papers one by one. But for practitioners, understanding how these technologies evolved is still very helpful.

In a recent review article, researchers from Michigan State University, Beihang University, Lehigh University, and other institutions carefully surveyed hundreds of papers in this field, focusing mainly on pretrained foundation models for text, image, and graph learning. The review is well worth reading. Jian Pei, professor at Duke University and Fellow of the Canadian Academy of Engineering, Philip S. Yu, Distinguished Professor in the Department of Computer Science at the University of Illinois at Chicago, and Caiming Xiong, Vice President of Salesforce AI Research, are all among the authors of the paper.

Paper link: https://arxiv.org/pdf/2302.09419.pdf

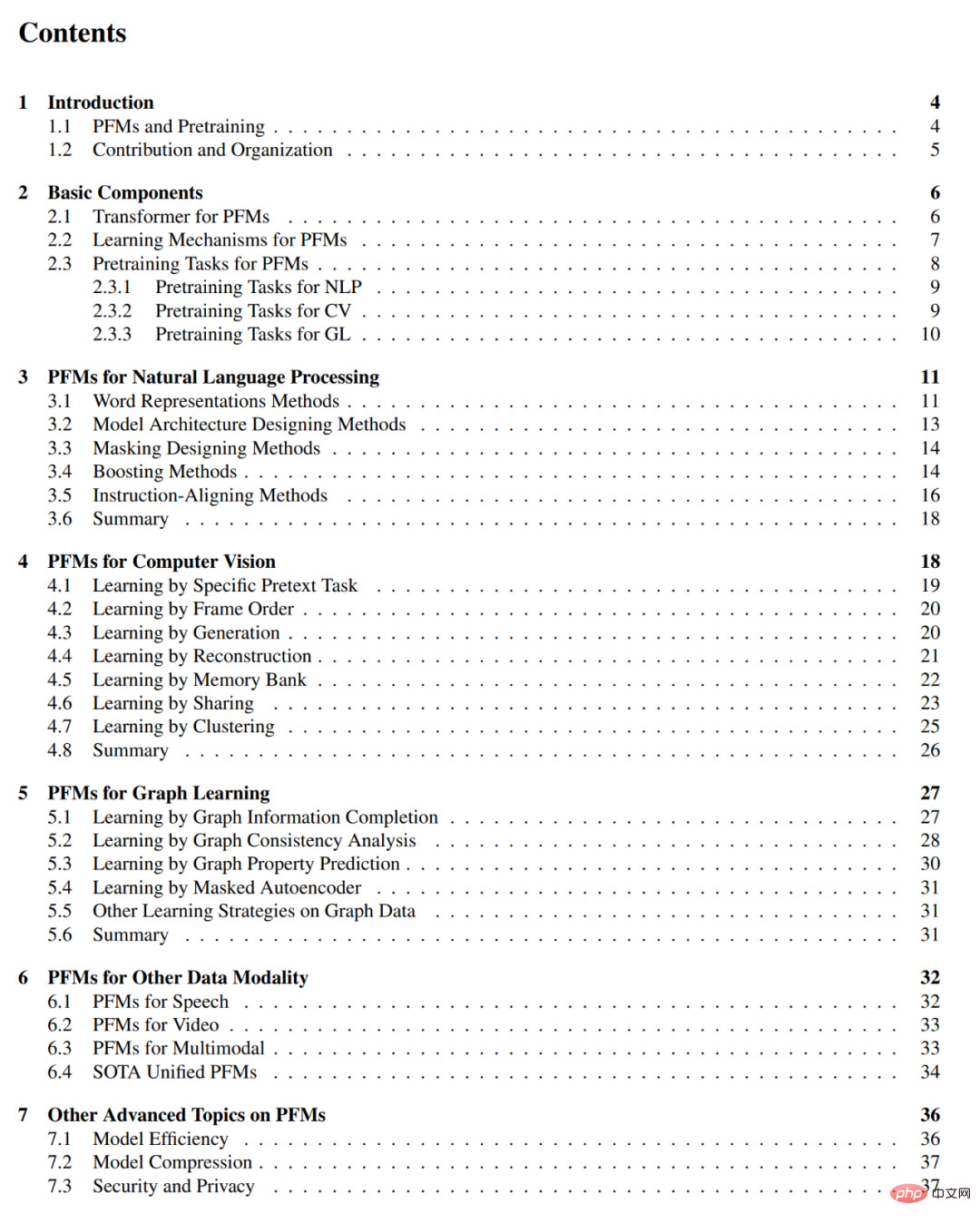

The paper's table of contents is as follows:

On overseas social platforms, DAIR.AI co-founder Elvis S. recommended this review, and his post received more than a thousand likes.

Introduction

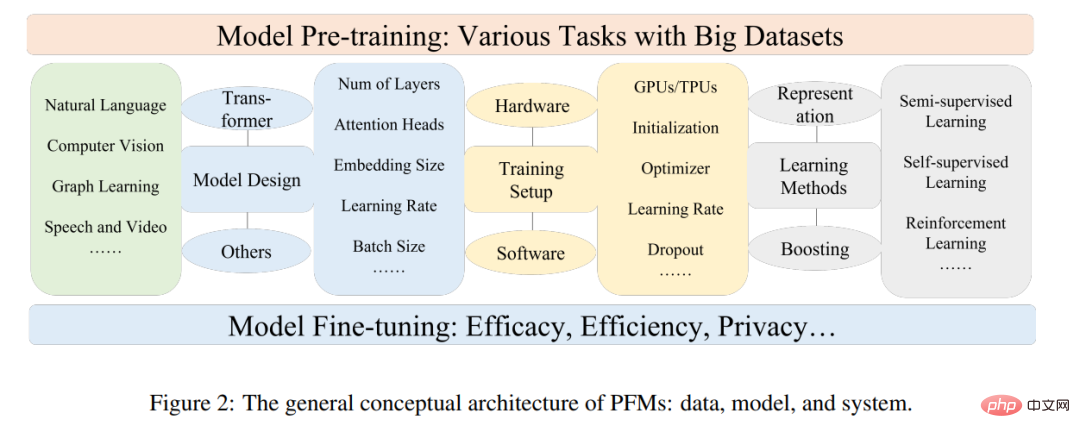

Pretrained foundation models (PFMs) are an important part of artificial intelligence in the big data era. The name "foundation model" comes from a review published by Percy Liang, Fei-Fei Li, and others, "On the Opportunities and Risks of Foundation Models", and is a general term for a class of models and their capabilities. PFMs have been extensively studied in NLP, CV, and graph learning. They show strong potential for feature representation learning in a variety of tasks, such as text classification, text generation, image classification, object detection, and graph classification. Whether trained on multiple tasks with large datasets or fine-tuned on small-scale tasks, PFMs exhibit superior performance, which makes it possible to quickly start data processing.

PFM and pre-training

PFMs are based on pre-training, which aims to use a large amount of data and tasks to train a general model that can be easily fine-tuned for different downstream applications.

The idea of pre-training originated from transfer learning in CV tasks. After seeing how effective the technique was in CV, people began to use it to improve model performance in other fields.

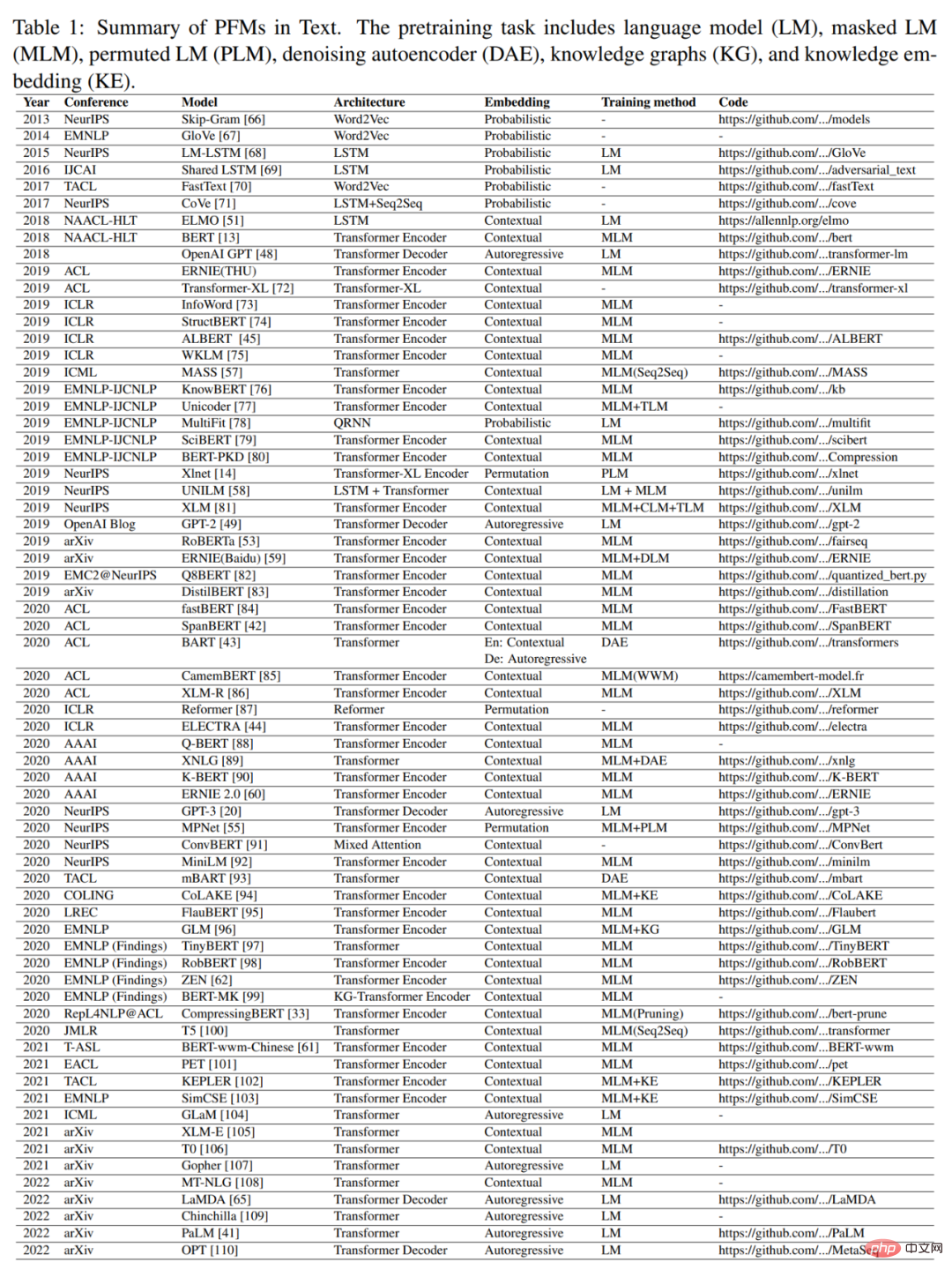

When pre-training is applied to NLP, a well-trained language model can capture rich knowledge that benefits downstream tasks, such as long-range dependencies and hierarchical relationships. In addition, a significant advantage of pre-training in NLP is that the training data can come from any unlabeled text corpus, which means an almost unlimited amount of training data is available for the pre-training process. Early pre-training techniques, such as NNLM and Word2vec, were static, and static methods struggle to adapt to different semantic contexts. Dynamic pre-training techniques, such as BERT and XLNet, were therefore proposed. Figure 1 depicts the history and evolution of PFMs in NLP, CV, and graph learning (GL). PFMs based on pre-training use large corpora to learn general semantic representations. Following these pioneering works, various PFMs have emerged and been applied to downstream tasks and applications.
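The difference between static and dynamic representations can be illustrated with a minimal sketch. It is not how Word2vec or BERT is implemented: the two-dimensional vectors in `STATIC` are hand-picked for illustration, and `contextual_embed` is a hypothetical helper that applies a single softmax-weighted attention step over the sentence. The point is only that a static lookup returns the same vector for "bank" in every sentence, while a context-dependent vector shifts with the neighboring words.

```python
import math

# Hypothetical 2-d static embeddings, chosen by hand for illustration only.
STATIC = {
    "river": [1.0, 0.0],
    "money": [0.0, 1.0],
    "bank": [0.5, 0.5],
}


def static_embed(word, sentence):
    """Static lookup: the sentence is ignored, as in a fixed embedding table."""
    return STATIC[word]


def contextual_embed(word, sentence):
    """Toy contextual vector: one softmax attention step from `word` over `sentence`."""
    query = STATIC[word]
    keys = [STATIC[w] for w in sentence]
    # Dot-product score between the query word and every word in the sentence.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    # Numerically stable softmax over the scores.
    max_score = max(scores)
    weights = [math.exp(s - max_score) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    # Weighted average of the sentence's vectors.
    return [sum(w * key[i] for w, key in zip(weights, keys)) for i in range(len(query))]


sentence_a = ["river", "bank"]
sentence_b = ["money", "bank"]

static_a = static_embed("bank", sentence_a)
static_b = static_embed("bank", sentence_b)
context_a = contextual_embed("bank", sentence_a)
context_b = contextual_embed("bank", sentence_b)
```

Here `static_a` and `static_b` are identical, while `context_a` leans toward the "river" direction and `context_b` toward the "money" direction.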

The recently popular ChatGPT is a typical case of PFM application. It is fine-tuned from the generative pretrained transformer model GPT-3.5, which was trained on a large amount of text and code. Additionally, ChatGPT applies reinforcement learning from human feedback (RLHF), which has emerged as a promising way to align large-scale LMs with human intentions. The excellent performance of ChatGPT may change the training paradigm of every type of PFM, for example through instruction alignment techniques, reinforcement learning, prompt tuning, and chain of thought, thus moving towards artificial general intelligence.

The review focuses on PFMs for text, images, and graphs, which is a relatively mature research taxonomy. For text, a PFM is a general-purpose LM used to predict the next word or character in a sequence. For example, PFMs can be used in machine translation, question answering systems, topic modeling, and sentiment analysis. For images, the approach is similar to that for text: huge datasets are used to train a large model suitable for many CV tasks. For graphs, similar pre-training ideas are used to obtain PFMs that serve many downstream tasks. In addition to PFMs for specific data domains, the review also covers other advanced PFMs, such as PFMs for speech, video, and cross-domain data, as well as multimodal PFMs. Moreover, PFMs capable of handling multimodal tasks are converging into what are called unified PFMs. The authors first define the concept of a unified PFM and then review recent research on unified PFMs that have achieved SOTA results (such as OFA, UNIFIED-IO, FLAVA, and BEiT-3).

Based on the characteristics of existing PFMs in the above three fields, the authors conclude that PFMs have two major advantages. First, the model requires only minor fine-tuning to improve performance on downstream tasks. Second, PFMs have already been scrutinized in terms of quality. Instead of building a model from scratch to solve a similar problem, we can apply a PFM to task-relevant datasets. The broad prospects of PFMs have inspired a large amount of related work on issues such as model efficiency, security, and compression.
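To make "predict the next word in a sequence" concrete, the following is a minimal sketch of next-word prediction learned from raw, unlabeled sentences. It uses simple bigram counts rather than a neural network, so it is far from an actual PFM; the corpus and the helper names `train_bigram_model` and `predict_next` are assumptions made for illustration. What it shares with LM pre-training is that the training signal comes from the text itself, with no manual labels.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count word -> next-word transitions in raw, unlabeled sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        # Each adjacent pair (current, following) is a free training example.
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent continuation of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; any unlabeled text would do.
corpus = [
    "pretrained models learn from unlabeled text",
    "pretrained models transfer to downstream tasks",
    "pretrained models learn general representations",
]
model = train_bigram_model(corpus)
next_word = predict_next(model, "models")
```

With this corpus, "models" is followed by "learn" twice and "transfer" once, so `next_word` is "learn". A word that never appears in the corpus yields `None`.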

Contribution and structure of the paper

Before this review was published, several surveys had already covered pretrained models in specific areas such as text generation, vision transformers, and object detection.

"On the Opportunities and Risks of Foundation Models" summarizes the opportunities and risks of foundation models. However, existing works do not achieve a comprehensive review of PFM in different domains (e.g., CV, NLP, GL, Speech, Video) on different aspects, such as pre-training tasks, efficiency, effectiveness, and privacy. In this review, the authors elaborate on the evolution of PFM in the NLP field and how pre-training has been transferred and adopted in the CV and GL fields.

Other reviews do not provide a comprehensive introduction to and analysis of existing PFMs across all three fields. Unlike previous reviews of pretrained models, the authors summarize existing models, from traditional models to PFMs, together with the latest work in the three fields. Traditional models emphasize static feature learning. The authors also introduce the structures of dynamic PFMs, which are the mainstream of current research.

The authors further introduce other research on PFMs, including other advanced and unified PFMs, model efficiency and compression, security, and privacy. Finally, they summarize future research challenges and open issues in different fields. They also provide a comprehensive introduction to relevant evaluation metrics and datasets in Appendices F and G.

In short, the main contributions of this article are as follows:

- The development of PFMs in NLP, CV, and GL is reviewed in detail and up to date. In the review, the authors discuss and provide insights on the design and pre-training methods of general-purpose PFMs in these three major application areas;

- The development of PFMs in other multimedia areas, such as speech and video, is summarized. Additionally, the authors discuss cutting-edge topics on PFMs, including unified PFMs, model efficiency and compression, and security and privacy;

- Through a review of PFMs on various modalities for different tasks, the authors discuss the main challenges and opportunities for future research on very large models in the big data era, which guides a new generation of PFM-based collaborative and interactive intelligence.

The main contents of each chapter are as follows:

Chapter 2 of the paper introduces the general concept of PFM architecture.

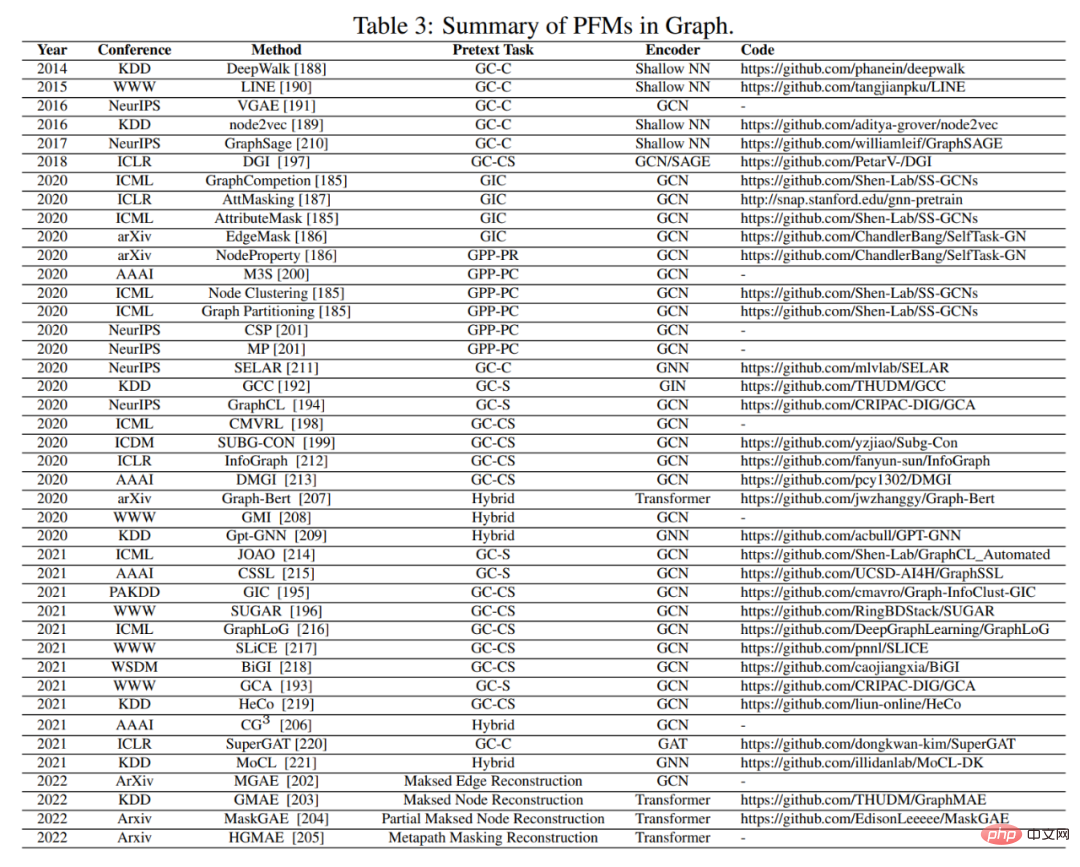

Chapters 3, 4, and 5 summarize existing PFMs in the fields of NLP, CV, and GL, respectively.

Chapters 6 and 7 introduce other cutting-edge research on PFMs, including advanced and unified PFMs, model efficiency and compression, and security and privacy.

Chapter 8 summarizes the main challenges of PFMs. Chapter 9 concludes the paper.
