Four cross-validation techniques you must learn in machine learning

Introduction

Imagine building a model on a dataset, only to find that it fails on unseen data.

We cannot simply fit a model to our training data and expect it to perform well on real, unseen data.

This is an example of overfitting, where the model has learned not only the patterns in the training data but also its noise. To guard against this, we need a way to check that the model captures most of the real patterns without picking up every bit of noise, that is, a model with low bias and low variance. Cross-validation is one of the many techniques for dealing with this problem.

Understanding cross-validation

Suppose we have a dataset of 1000 records and run train_test_split() on it. With 70% training data, 30% test data, and random_state = 0, suppose the model reaches 85% accuracy. If we now set random_state = 50, the accuracy might rise to, say, 87%.

This means the accuracy fluctuates as we choose different values of random_state, so a single split does not give a dependable estimate. Cross-validation addresses this by evaluating the model on several different splits.
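The effect of the random seed can be sketched with the standard library alone. Everything here is an assumption made for illustration: the synthetic data, the toy threshold classifier, and the helper names `make_data`, `holdout_split`, `fit_threshold`, and `accuracy`. It is not scikit-learn's `train_test_split()`, only a stand-in that shuffles with a seed and cuts the data 70/30.

```python
import random

def make_data(n=1000, seed=123):
    """Synthetic 1-D dataset (an assumption for this sketch): noisy threshold labels."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x + rng.gauss(0, 0.2) > 0.5 else 0
        data.append((x, y))
    return data

def holdout_split(data, test_percent=30, random_state=0):
    """Shuffle a copy of the data, then cut it into train and test parts."""
    shuffled = list(data)
    random.Random(random_state).shuffle(shuffled)
    n_test = len(shuffled) * test_percent // 100
    return shuffled[n_test:], shuffled[:n_test]

def fit_threshold(train):
    """Toy classifier: threshold halfway between the two class means of x."""
    xs0 = [x for x, y in train if y == 0]
    xs1 = [x for x, y in train if y == 1]
    return (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2

def accuracy(threshold, test):
    """Fraction of test records whose predicted label matches the true one."""
    correct = sum(1 for x, y in test if (1 if x > threshold else 0) == y)
    return correct / len(test)

data = make_data()
results = {}
for seed in (0, 50):
    train, test = holdout_split(data, random_state=seed)
    results[seed] = accuracy(fit_threshold(train), test)
    print(f"random_state={seed}: accuracy={results[seed]:.3f}")
```

The two seeds put different records in the test set, so the reported accuracy will usually differ between runs even though the data and the model are unchanged.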

Types of cross-validation

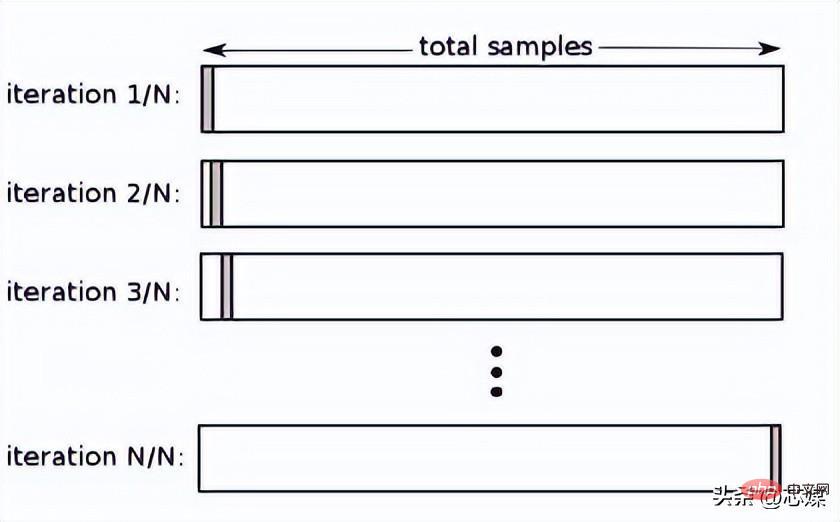

1. Leave-one-out cross-validation (LOOCV)

In LOOCV, the first iteration uses a single data point as the test set and all remaining data as the training set. The next iteration uses the next data point as the test set and the rest as training data. We repeat this across the entire dataset, so that the last data point serves as the test set in the final iteration.

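Typically, to calculate the cross-validation score for an iterative procedure, you calculate the score for each iteration and take the average. With LOOCV each test set holds a single data point, so R² cannot be computed per iteration; instead, average a per-point error such as the squared error, or compute R² once over all the held-out predictions.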

Although LOOCV gives a nearly unbiased estimate of model performance, it is computationally expensive, because the model has to be trained once for every data point.

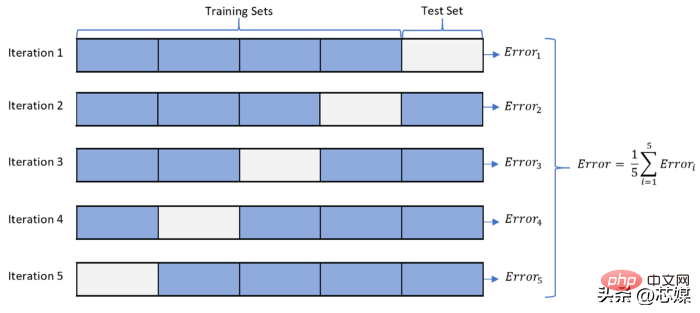
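The LOOCV loop can be sketched as follows. The helper name `leave_one_out`, the four-value dataset, and the "predict the training mean" model are all assumptions chosen to keep the example tiny; a per-point squared error is averaged because each test set holds only one observation.

```python
def leave_one_out(n):
    """Yield (train_indices, test_index) pairs, one per data point."""
    for i in range(n):
        train = [j for j in range(n) if j != i]
        yield train, i

values = [1.0, 2.0, 3.0, 4.0]  # tiny illustrative dataset (made up)

squared_errors = []
for train_idx, test_idx in leave_one_out(len(values)):
    # Toy "model": predict the mean of the training values.
    prediction = sum(values[j] for j in train_idx) / len(train_idx)
    squared_errors.append((values[test_idx] - prediction) ** 2)

# Average the per-iteration errors to get the cross-validated score.
loocv_mse = sum(squared_errors) / len(squared_errors)
print(f"LOOCV mean squared error: {loocv_mse:.4f}")
```

Note that the loop fits the model once per record, which is where the computational cost comes from on larger datasets.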

2. K-fold cross-validation

In K-fold CV, we split the dataset into k subsets (called folds). We then train on k-1 of the folds and hold out the remaining fold for evaluation, repeating this k times so that each fold serves as the test set exactly once.

Suppose we have 1000 records and K=5. This K value means we have 5 iterations. In the first iteration, the first 1000/5 = 200 data points are used as test data. In the next iteration, the next 200 data points form the test set, and so on.

To calculate the overall accuracy, we calculate the accuracy for each iteration and then take the average.

The minimum accuracy obtained from this process is the lowest accuracy among all iterations, and likewise the maximum accuracy is the highest accuracy among all iterations.

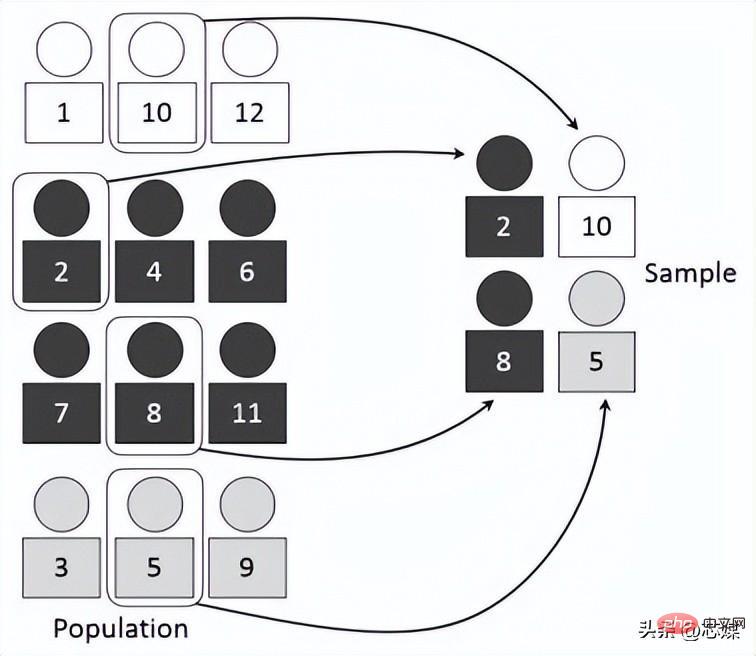
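The 1000-record, K=5 example above can be sketched as follows. The helper name `k_fold` is an assumption, the folds are contiguous and unshuffled to match the description, and the per-fold accuracies are made-up numbers used only to show the averaging step.

```python
def k_fold(n, k):
    """Yield (train_indices, test_indices) for k contiguous, unshuffled folds."""
    # Spread any remainder over the first n % k folds.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size

folds = list(k_fold(1000, 5))
for i, (train, test) in enumerate(folds, start=1):
    print(f"iteration {i}: test records {test[0]}-{test[-1]}, train size {len(train)}")

# Hypothetical per-fold accuracies, made up purely to show the summary step.
fold_accuracies = [0.84, 0.86, 0.85, 0.88, 0.87]
mean_accuracy = sum(fold_accuracies) / len(fold_accuracies)
print(f"mean={mean_accuracy:.3f} min={min(fold_accuracies)} max={max(fold_accuracies)}")
```

In practice the records are often shuffled before being cut into folds, so that any ordering in the file does not leak into the split.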

3. Stratified cross-validation

Stratified CV is an extension of regular k-fold cross-validation for classification problems. The splits are not completely random: the ratio between the target classes in each fold is kept the same as in the full dataset.

Suppose we have 1000 records, of which 600 are yes and 400 are no. In each iteration, stratification ensures that the samples placed into the training and test sets keep this 60:40 ratio. With 5 folds, for example, each 200-record test fold contains 120 yes and 80 no records, so every class is present in both the training and test splits.

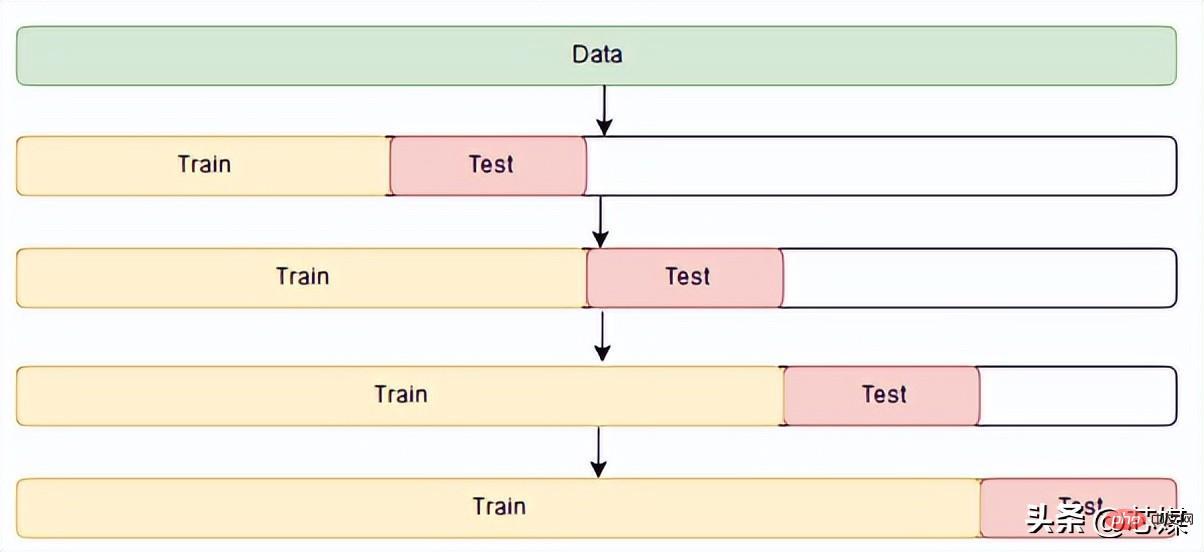
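A minimal sketch of stratified splitting for the 600 yes / 400 no example follows. The helper name `stratified_k_fold` is an assumption, and the round-robin assignment (with no shuffling) is just one simple way to keep the class ratio in every fold.

```python
from collections import Counter, defaultdict

def stratified_k_fold(labels, k):
    """Yield (train_indices, test_indices) with class ratios preserved per fold."""
    # Group record indices by their class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Deal each class's indices round-robin into the k folds.
    test_folds = [[] for _ in range(k)]
    for indices in by_class.values():
        for position, idx in enumerate(indices):
            test_folds[position % k].append(idx)

    for test in test_folds:
        held_out = set(test)
        train = [i for i in range(len(labels)) if i not in held_out]
        yield train, sorted(test)

labels = ["yes"] * 600 + ["no"] * 400
fold_counts = []
for train, test in stratified_k_fold(labels, 5):
    counts = Counter(labels[i] for i in test)
    fold_counts.append(counts)
    print(dict(counts), "train size:", len(train))
```

Each test fold keeps the 60:40 ratio of the full dataset, which a plain contiguous split of this label list would not do.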

4. Time series cross-validation

In time series CV there is a series of test sets, each consisting of a single observation. The corresponding training set contains only observations that occurred before the observation that forms the test set, so no future observations are used to construct a forecast.

Forecast accuracy is calculated by averaging over the test sets. This process is sometimes called "evaluation on a rolling forecasting origin" because the "origin" on which the forecast is based rolls forward in time.
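The rolling-origin scheme can be sketched as follows. The helper name `rolling_origin`, the `min_train` parameter, the six-value series, and the naive "repeat the last value" forecast are all assumptions made for illustration.

```python
def rolling_origin(n, min_train):
    """Yield (train_indices, test_index); training data always precedes the test point."""
    for t in range(min_train, n):
        yield list(range(t)), t

series = [10, 12, 11, 13, 15, 14]  # illustrative values (made up)

absolute_errors = []
for train_idx, test_idx in rolling_origin(len(series), min_train=3):
    # Naive forecast: repeat the last value seen in the training window.
    forecast = series[train_idx[-1]]
    absolute_errors.append(abs(series[test_idx] - forecast))

# Average the one-step-ahead errors over all test sets.
mean_absolute_error = sum(absolute_errors) / len(absolute_errors)
print(f"one-step-ahead mean absolute error: {mean_absolute_error:.3f}")
```

The training window grows by one observation per iteration, and the test point is always the next observation in time, so the forecast never sees the future.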

Conclusion

In machine learning, we usually don’t want the algorithm or model that performs best on the training set. Instead, we want a model that performs well on the test set, and a model that consistently performs well given new input data. Cross-validation is a critical step to ensure that we can identify such algorithms or models.
