Two Chinese researchers at Google release the first purely visual 'mobile UI understanding' model, setting new SOTA results on four major tasks

For AI, "playing with mobile phones" is not an easy task. Just identifying the various user interfaces (UI) is a big problem: not only must the type of each component be identified, but its function must also be determined from the symbols it uses and its position.

Understanding the UI of mobile devices can help realize various human-computer interaction tasks, such as UI automation.

Previous work modeling mobile UI usually relied on the view hierarchy information of the screen, directly utilizing the structural data of the UI, and thereby bypassing the problem of identifying components starting from the screen pixels.

However, view hierarchies are not available in every scenario, and methods that depend on them often produce incorrect results because of missing object descriptions or misaligned structural information. So although using view hierarchies can improve short-term performance, it may ultimately hinder the applicability and generalization performance of the model.

Recently, two researchers from Google Research proposed Spotlight, a purely visual method for mobile UI understanding. Built on a vision-language model, it takes only a screenshot of the user interface and a region of interest (the focus) on the screen as input.

Paper link: https://arxiv.org/pdf/2209.14927.pdf

Spotlight's general architecture is easily extensible and capable of performing a range of user interface modeling tasks.

The experimental results in the paper show that the Spotlight model achieves state-of-the-art (SOTA) performance on several representative user interface tasks, surpassing previous methods that used both screenshots and view hierarchies as input.

In addition, the paper explores the multi-task learning and few-shot prompting capabilities of the Spotlight model, and reports promising experimental results for multi-task learning.

One of the paper's authors, Yang Li, is a senior researcher at Google Research and an affiliated faculty member of CSE at the University of Washington. He received a PhD in computer science from the Chinese Academy of Sciences and conducted postdoctoral research at EECS at the University of California, Berkeley. He led the development of Next Android App Prediction, pioneered on-device interactive machine learning on Android, and developed Gesture Search, among other work.

Spotlight: Understanding Mobile Interfaces

Computational understanding of user interfaces is a critical step in enabling intelligent UI behavior.

Prior to this, the team studied various UI modeling tasks, including widget captioning, screen summarization, and command grounding. These tasks address automation and accessibility issues in different interaction scenarios.

Subsequently, these capabilities were also used to demonstrate how machine learning can help user experience practitioners improve UI quality by diagnosing clickability confusion, and to provide ideas for improving UI design. Together with work in other fields, these efforts demonstrate how deep neural networks can potentially change end-user experiences and interaction design practice.

Although there has been a certain degree of success in handling single UI tasks, the next question is whether a general UI understanding capability can be built from these specific UI tasks.

The Spotlight model is also a first attempt at answering this question. The researchers developed a multi-task model to handle a range of UI tasks simultaneously. Although that work made some progress, some problems remain.

Previous UI models relied heavily on the UI view hierarchy, which is the structure or metadata of a mobile UI screen, analogous to the Document Object Model of a web page. It lets a model directly obtain detailed information about the UI objects on the screen, including their type, text content, and location.

This kind of metadata gave previous models an advantage over purely visual models, but the availability of view hierarchy data is a big problem, and issues such as missing object descriptions or misaligned structural information often occur.

So while there are short-term benefits to using a view hierarchy, it may ultimately hinder the performance and applicability of the model. Additionally, previous models had to handle heterogeneous information across datasets and UI tasks, often resulting in more complex model architectures that were ultimately difficult to scale or generalize across tasks.

Spotlight Model

The purely visual Spotlight approach aims to achieve universal user interface understanding capabilities entirely from raw pixels.

The researchers introduce a unified approach to representing different UI tasks, in which information is universally represented in two core modalities: vision and language. The vision modality captures what the user sees on the UI screen, and the language modality can be natural language or any task-related token sequence.

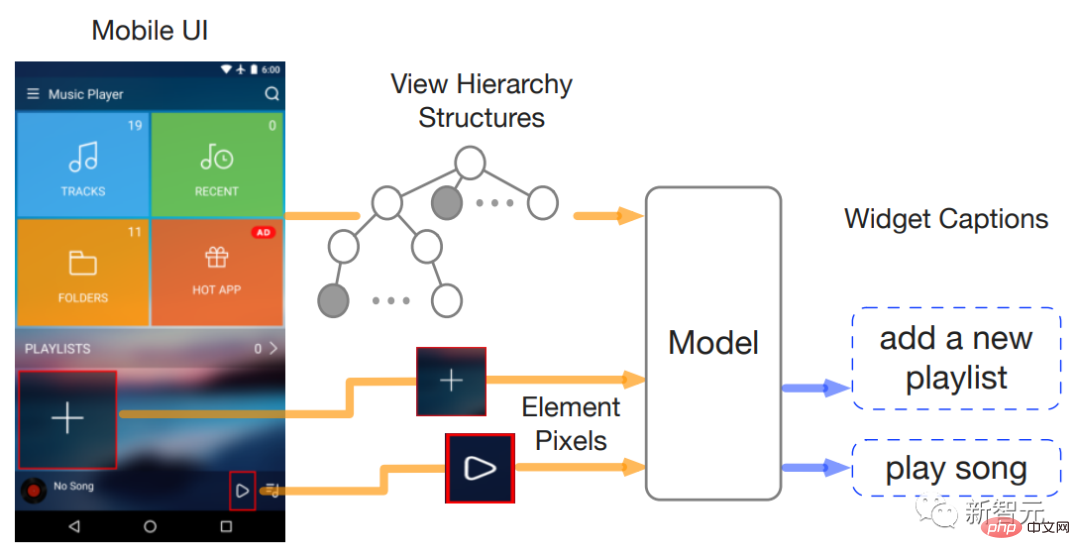

The Spotlight model input is a triplet: a screenshot, an area of interest on the screen, and a text description of the task; the output is a text description or response about the area of interest.

This simple input and output representation of the model is more general, can be applied to a variety of UI tasks, and can be extended to a variety of model architectures.
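As a minimal sketch of this input triple, the snippet below packs a screenshot, a region of interest, and a task description into one record. The class name `SpotlightExample`, the helper `normalize_region`, and the choice to normalize box coordinates to [0, 1] are all assumptions made for illustration; the article does not specify how the authors encode the region.

```python
from dataclasses import dataclass
from typing import Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom)


@dataclass(frozen=True)
class SpotlightExample:
    """Hypothetical container for one (screenshot, region, task) triple."""
    screenshot_png: bytes  # the screenshot, held here as encoded image bytes
    region: Box            # region of interest, normalized to [0, 1] (assumption)
    task_prompt: str       # text description of the task


def normalize_region(box_px: Box, width: int, height: int) -> Box:
    """Scale a pixel-space box to [0, 1] so it does not depend on resolution."""
    left, top, right, bottom = box_px
    if not (0 <= left < right <= width and 0 <= top < bottom <= height):
        raise ValueError(f"box {box_px} does not fit a {width}x{height} screen")
    return (left / width, top / height, right / width, bottom / height)


example = SpotlightExample(
    screenshot_png=b"",  # placeholder; a real example would hold image data
    region=normalize_region((10, 20, 110, 220), width=200, height=400),
    task_prompt="widget captioning",
)
```

Because every task shares this shape, switching tasks in such a setup would only mean changing `task_prompt` and the expected output text.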

The model is designed to enable a series of learning strategies and settings, from fine-tuning for specific tasks to multi-task learning and few-shot learning.

Spotlight models can leverage existing architectural building blocks, such as ViT and T5, which are pre-trained in the high-resource general vision-language domain, so the model can be built directly on top of these general-domain models.

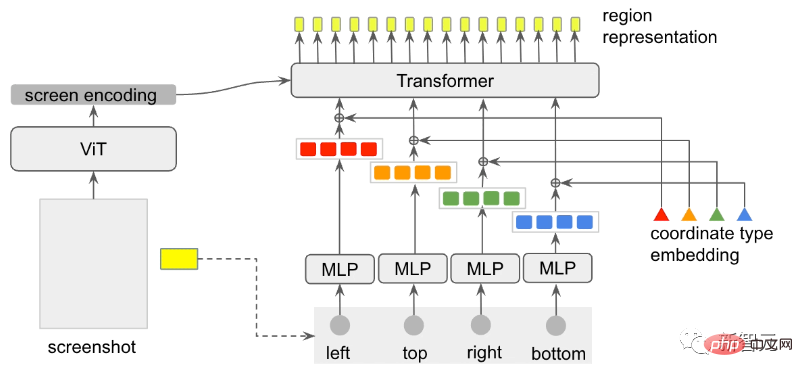

Because UI tasks are usually related to specific objects or areas on the screen, the model needs to be able to focus on the object or area of interest. The researchers therefore introduced a Focus Region Extractor into the vision-language model, enabling the model to focus on that area in light of the screen context.

The researchers also designed a Region Summarizer, which obtains a latent representation of the screen region from the ViT encodings by using attention queries generated from the region's bounding box.

Specifically, each coordinate of the bounding box (a scalar value: left, top, right, or bottom), shown as a yellow box on the screenshot, is processed as follows.

Each coordinate is first converted into a dense vector by a multi-layer perceptron (MLP), and the resulting set of dense vectors is then fed to a Transformer model together with an embedding of the coordinate type (the coordinate-type embedding). In the figure, the dense vectors and their corresponding coordinate-type embeddings are color-coded to indicate which coordinate value they belong to.

The coordinate queries then attend to the screen encodings output by ViT through cross-attention, and the final attention output of the Transformer is used as the region representation for downstream decoding by T5.
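The flow described above can be illustrated with a small pure-Python sketch. It is not the authors' implementation: the weights are random, the width `D`, the hidden size `H`, and every function name are hypothetical, the coordinate-type embedding is simply added to the MLP output (the article does not say how the two are combined), and the Transformer is reduced to a single cross-attention step without learned projections.

```python
import math
import random

D = 8  # hypothetical embedding width
H = 4  # hypothetical MLP hidden size


def make_matrix(rows, cols, rng):
    """Random stand-in for learned weights or encodings."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def mlp_embed(x, w1, b1, w2):
    """Map one scalar coordinate to a dense D-vector: linear, ReLU, linear."""
    hidden = [max(0.0, x * w + b) for w, b in zip(w1, b1)]
    return [sum(h * w2[i][j] for i, h in enumerate(hidden)) for j in range(D)]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, encodings):
    """Each query attends over all screen encodings (scaled dot product)."""
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(D) for k in encodings]
        w = softmax(scores)
        outputs.append([sum(wi * k[j] for wi, k in zip(w, encodings)) for j in range(D)])
        weights.append(w)
    return outputs, weights


def summarize_region(box, encodings, params):
    """Build one query per coordinate (left, top, right, bottom) and attend."""
    queries = []
    for value, type_emb in zip(box, params["type_emb"]):
        dense = mlp_embed(value, params["w1"], params["b1"], params["w2"])
        # Assumption: the coordinate-type embedding is added to the dense vector.
        queries.append([a + b for a, b in zip(dense, type_emb)])
    return cross_attention(queries, encodings)


rng = random.Random(0)
params = {
    "w1": [rng.uniform(-0.5, 0.5) for _ in range(H)],
    "b1": [rng.uniform(-0.5, 0.5) for _ in range(H)],
    "w2": make_matrix(H, D, rng),
    "type_emb": make_matrix(4, D, rng),  # one embedding per coordinate type
}
screen_encodings = make_matrix(16, D, rng)  # stand-in for ViT patch encodings
region_repr, attention = summarize_region((0.05, 0.05, 0.55, 0.55), screen_encodings, params)
```

Here `region_repr` holds four D-dimensional vectors, one per coordinate query, and each row of `attention` is a distribution over the 16 stand-in patches. Weights of this kind are what an attention heat map over the screenshot would visualize.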

Experimental results

The researchers pre-trained the Spotlight model on two unlabeled datasets: an internal dataset based on the C4 corpus and an internal mobile dataset, containing a total of 2.5 million mobile UI screens and 80 million web pages.

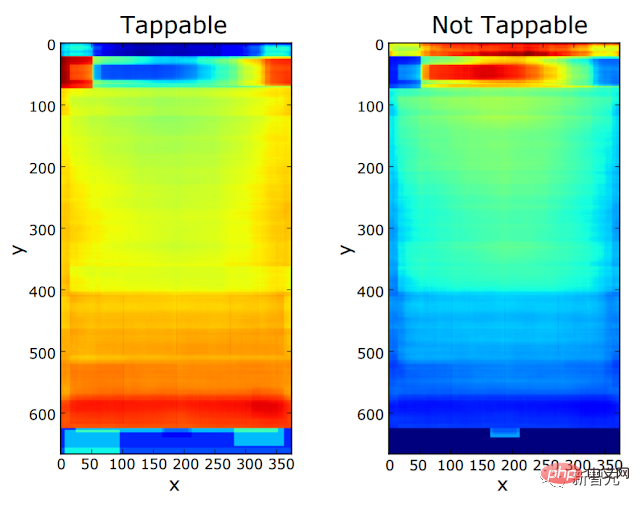

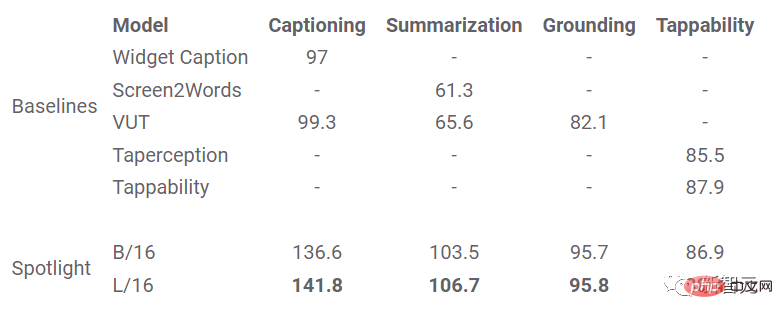

The pre-trained model is then fine-tuned for four downstream tasks: widget captioning, screen summarization, command grounding, and clickability prediction.

For the widget captioning and screen summarization tasks, the CIDEr metric measures how similar the model's text description is to a set of references created by human raters; for the command grounding task, the accuracy metric is the percentage of user commands for which the model successfully locates the target object; for clickability prediction, the F1 score measures the model's ability to distinguish clickable objects from non-clickable ones.
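Two of these metrics are simple enough to sketch directly. The functions below compute grounding accuracy and a binary F1 score; the function names and the idea of comparing predicted and target object identifiers are assumptions for illustration, since the article does not describe how a successful localization is decided. CIDEr is omitted because it requires n-gram TF-IDF statistics over the reference set.

```python
from typing import Sequence


def grounding_accuracy(predicted: Sequence[str], target: Sequence[str]) -> float:
    """Fraction of commands whose predicted object matches the target object."""
    if len(predicted) != len(target) or not target:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(1 for p, t in zip(predicted, target) if p == t)
    return hits / len(target)


def f1_score(predicted: Sequence[int], actual: Sequence[int]) -> float:
    """Binary F1, where 1 means clickable and 0 means not clickable."""
    if len(predicted) != len(actual):
        raise ValueError("inputs must be of equal length")
    tp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    fp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 0)
    fn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 1)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical object identifiers and labels, for illustration only.
accuracy = grounding_accuracy(["a", "b", "c", "d"], ["a", "b", "x", "d"])
f1 = f1_score([1, 0, 1, 1], [1, 1, 0, 1])
```

In this toy data three of four commands are grounded correctly, and the clickability predictions contain two true positives, one false positive, and one false negative.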

In the experiments, Spotlight was compared with several baseline models: WidgetCaption uses the view hierarchy and the image of each UI object to generate text descriptions for the objects; Screen2Words uses the view hierarchy and screenshots together with auxiliary features (e.g., the app description) to generate summaries for screens; VUT combines screenshots and view hierarchies to perform multiple tasks; and the original Tappability model leverages object metadata from view hierarchies and screenshots to predict an object's tappability.

Spotlight significantly surpassed the previous SOTA models on all four UI modeling tasks.

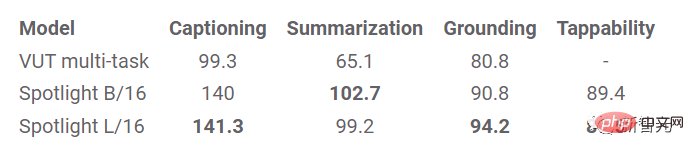

In a more difficult setting, the model is required to learn multiple tasks at the same time, since a multi-task model can greatly reduce the model footprint. The results show that the Spotlight model's performance remains competitive.

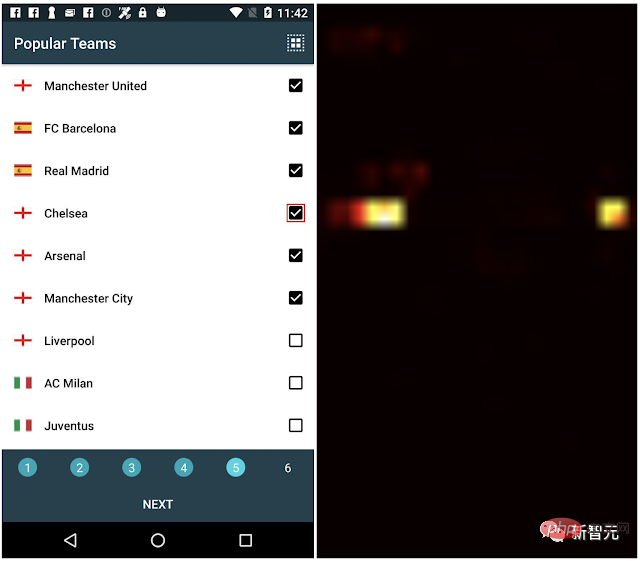

To understand how the Region Summarizer enables Spotlight to focus on the target and related areas on the screen, the researchers analyzed the attention weights for the widget captioning and screen summarization tasks, which indicate where the model's attention falls on the screenshot.

In the example figure for the widget captioning task, when the model predicts "select Chelsea team", the checkbox on the left is highlighted with a red bounding box. The attention heat map on the right shows that the model learned to attend not only to the target region of the checkbox but also to the text "Chelsea" at the far left in order to generate the caption.

For the screen summarization task, the model predicts "page displaying the tutorial of a learning app" given the screenshot on the left. In this example the target region is the entire screen, and the model learns to attend to the important parts of the screen for the summary.
