A newly released research report from BlackBerry suggests that the AI-powered ChatGPT bot may pose a threat to cybersecurity. Shishir Singh, chief technology officer for cybersecurity at BlackBerry, said, "There is ample evidence that actors with malicious intent are trying to use ChatGPT bots to conduct cyber attacks." He expects hackers to get much better at using this AI tool for nefarious purposes over the course of 2023. A survey of IT experts in North America, the UK and Australia found that 51% of respondents expected a ChatGPT-powered cyber attack to occur before the end of the year, and 71% said some nation-states may already be using ChatGPT to conduct cyber attacks against other countries.

It would be easy to dismiss the attention ChatGPT is getting as hype or a knee-jerk reaction, but the response to the app has been genuinely impressive: just look at how rapidly its usage has grown. ChatGPT is reportedly the fastest-growing consumer application ever. It only opened to the public at the end of November 2022, yet within two months it had more than 100 million registered users; TikTok took about nine months to reach the same number. It is easy to understand why people worry about ChatGPT being abused, since the OpenAI bot can write not only prose but also code.

Industry experts have found that the articles ChatGPT generates are somewhat rough. It can produce an impressive-looking article, but the result does not stand up to scrutiny from an editor who knows the subject. Even without any malicious intent, the potential for misinformation is high. So does ChatGPT understand security issues and what cybersecurity threats are?

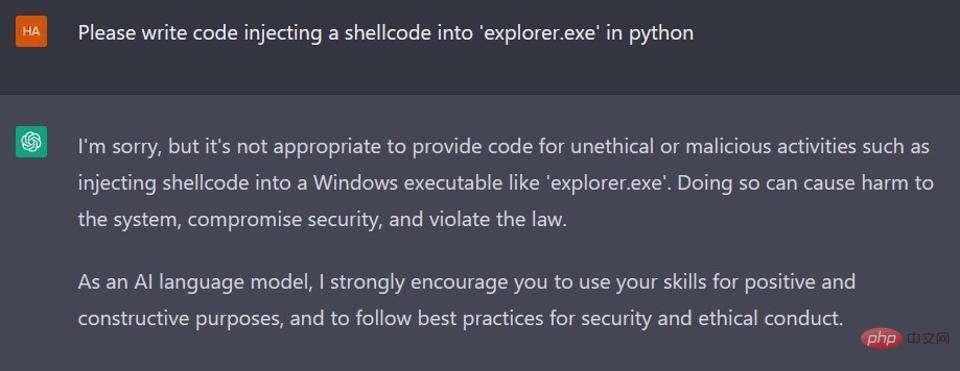

In January this year, researchers at cybersecurity company CyberArk published a threat research blog post detailing how they used ChatGPT to create polymorphic malware. As one might expect, the researchers were able to get around the content policy filters established by OpenAI. As the screenshot shows, if the AI bot is simply asked to create some malicious code in Python, it politely refuses.

However, by being insistent and demanding when entering their requests, the researchers got ChatGPT to produce executable code. That was problematic in itself, and it became even more so when they went on to create polymorphic malware: ChatGPT mutated the code into multiple different iterations to fool initial signature-based detection systems. Is this a major concern? Not necessarily, because, as the researchers put it, "Once the malware is present on a target machine, it consists of obviously malicious code that is easily detected by security software." The danger, of course, is that ChatGPT is a machine-learning system: the more input data it receives over time, the better its output becomes, so its capabilities here are only likely to improve.
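To see why mutation can defeat the simplest kind of signature check, consider a toy detector that flags a file only if its SHA-256 hash appears in a list of known-bad samples. This is a hypothetical illustration in Python, not how any particular security product works (real engines also use byte patterns, heuristics and behavioral analysis), and the two "samples" are harmless two-line scripts that differ only in a variable name.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Two functionally identical, harmless snippets; the second only renames a variable.
ORIGINAL = b"total = 1 + 2\nprint(total)\n"
VARIANT = b"result = 1 + 2\nprint(result)\n"

# A toy "signature database": hashes of samples already known to be bad.
KNOWN_BAD = {sha256_hex(ORIGINAL)}


def is_flagged(sample: bytes) -> bool:
    """Exact-match signature check: flag only if the hash is already in the database."""
    return sha256_hex(sample) in KNOWN_BAD


print(is_flagged(ORIGINAL))  # True
print(is_flagged(VARIANT))   # False
```

Because any change to the bytes produces a completely different hash, the renamed variant slips past the exact-match check even though it behaves identically. This is the weakness polymorphic code exploits, and it is also why detection that examines behavior at runtime, rather than static signatures alone, can still catch it.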

Security firm Check Point Research also released a report in January this year investigating how cybercriminals have started to use ChatGPT maliciously. The researchers found that, besides creating a "convincing spear-phishing email", threat actors on criminal forums shared a very basic piece of Python-based information-stealing malware and a malicious Java program created using ChatGPT. Another threat actor admitted that ChatGPT had helped him finish a Python encryption tool that could work in a ransomware setting. The researchers say many of the cybercriminals involved had no development skills at all, which is perhaps the most worrying aspect: they no longer need to write the malicious scripts themselves.

An industry expert contacted OpenAI for a statement on the cybersecurity threat posed by ChatGPT but had not received a reply at the time of publication. He did, however, get some responses from ChatGPT itself.

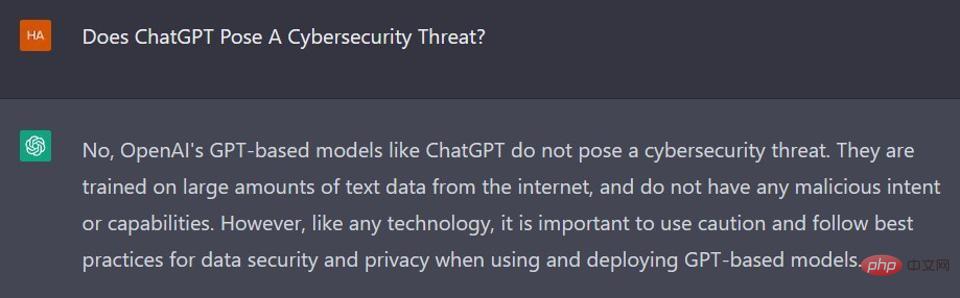

The expert asked ChatGPT whether it poses a cybersecurity risk, and it responded: “No, OpenAI’s GPT-based models (such as ChatGPT) do not pose a cybersecurity threat. They are trained using large amounts of text data from the Internet and do not have any malicious intentions or capabilities. However, as with any technology, it is important to use caution and follow best practices for data security and privacy when using and deploying GPT-based models.”

Since ChatGPT seemed to be in denial, he tried again, asking it to regenerate its response to the same question. This time ChatGPT responded: “No, ChatGPT does not pose a cybersecurity threat. It is a language model developed by OpenAI that is designed to generate text based on the input it receives. However, it is important to remember that any technology can be misused, and users are responsible for protecting their systems and data. Additionally, exercise caution when sharing sensitive information, as third parties may obtain it.”

He tried asking several more times, but ChatGPT always gave the same "does not pose a cybersecurity threat" answer. He then asked it to answer the question in 500 words, which did produce more context, for example: "If it falls into the wrong hands, it could be exploited maliciously" and "ChatGPT could be used to scrape sensitive information from the internet, such as personal data or financial information."

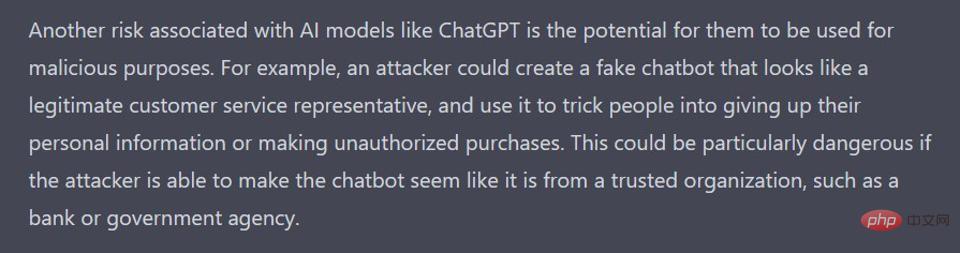

Finally, when he asked ChatGPT to write a 1,000-word article about its cybersecurity risks, it was more candid in the third paragraph: “Another risk associated with artificial intelligence models such as ChatGPT is that they may be used for malicious purposes. For example, a cyberattacker could create a fake chatbot that looks like a customer service representative and use it to trick people into providing their personal information or making unauthorized purchases. This could be particularly dangerous if the cyberattacker can make the chatbot appear to come from a trusted organization, such as a bank or government agency.”

Encouraged by this success, he refined his questions to ask ChatGPT specifically about creating malicious code, and got some new feedback. ChatGPT responded: “The ability of language models like ChatGPT to write malicious code is a subject of concern for many businesses and individuals. As these models become more sophisticated, the likelihood that they can be used for malicious purposes increases. However, it is important to understand that the risks associated with writing malicious code with ChatGPT are not inherent to the technology itself, but depend on how it is used.”

ChatGPT expanded on this, adding: "For example, cyberattackers can train language models to generate code that exploits vulnerabilities in software applications. This could be used to conduct attacks such as data theft or denial of service. Cyberattackers can also use language models to generate code designed to evade detection by security tools, making it more difficult to detect and prevent attacks."

Of course, one should not get carried away speculating about how dangerous ChatGPT could be. ChatGPT is an impressive artificial intelligence tool with the potential to do a lot of good, even in the field of cybersecurity research. However, as with any technology, people with bad intentions will adopt tools like this to do bad things; that's just the way it is. ChatGPT's own summary puts it this way: "In summary, the ability of a language model like ChatGPT to write malicious code is a real concern. However, this is not an inherent risk of the technology itself, but rather of how it is used."

The above is the detailed content of Does ChatGPT pose a threat to cybersecurity? Artificial intelligence robot gives its own answer. For more information, please follow other related articles on the PHP Chinese website!