Diffusion models are exploding: the first comprehensive survey, with a GitHub paper taxonomy

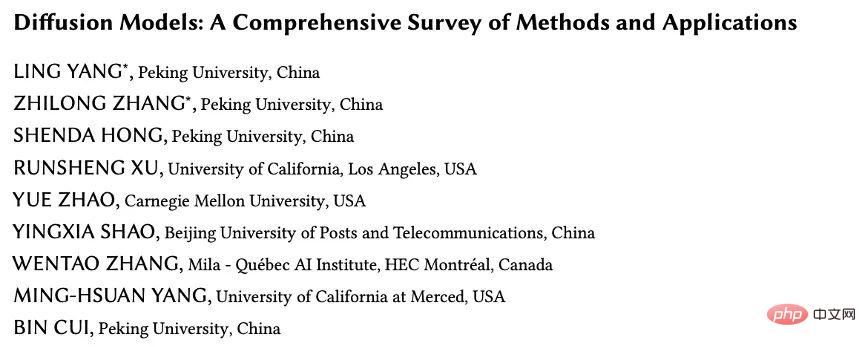

This survey, Diffusion Models: A Comprehensive Survey of Methods and Applications, comes from Ming-Hsuan Yang's group at the University of California and Google Research, Bin Cui's laboratory at Peking University, CMU, UCLA, Mila (Montreal), and other institutions. It is the first comprehensive summary and analysis of existing diffusion models. It starts with a detailed taxonomy of diffusion model algorithms, covers their connections to five other major families of generative models and their applications in seven major fields, and closes with the current limitations and future research directions of diffusion models.

Article link: https://arxiv.org/abs/2209.00796

Paper classification summary on GitHub: https://github.com/YangLing0818/Diffusion-Models-Papers-Survey-Taxonomy

Introduction

Diffusion models are the new state of the art among deep generative models. They have surpassed the previous state of the art, GANs, on image generation, and they perform very well in many application areas, including computer vision, NLP, waveform signal processing, multimodal modeling, molecular graph modeling, time series modeling, and adversarial purification. Diffusion models are also closely connected to other research fields such as robust learning, representation learning, and reinforcement learning. The original diffusion model does have shortcomings, however: sampling is slow, often requiring thousands of evaluation steps to draw a single sample; its maximum likelihood estimates are not competitive with those of likelihood-based models; and it generalizes poorly to diverse data types. Many recent studies have tried to overcome these limitations from a practical standpoint, or have analyzed the models' capabilities from a theoretical one.

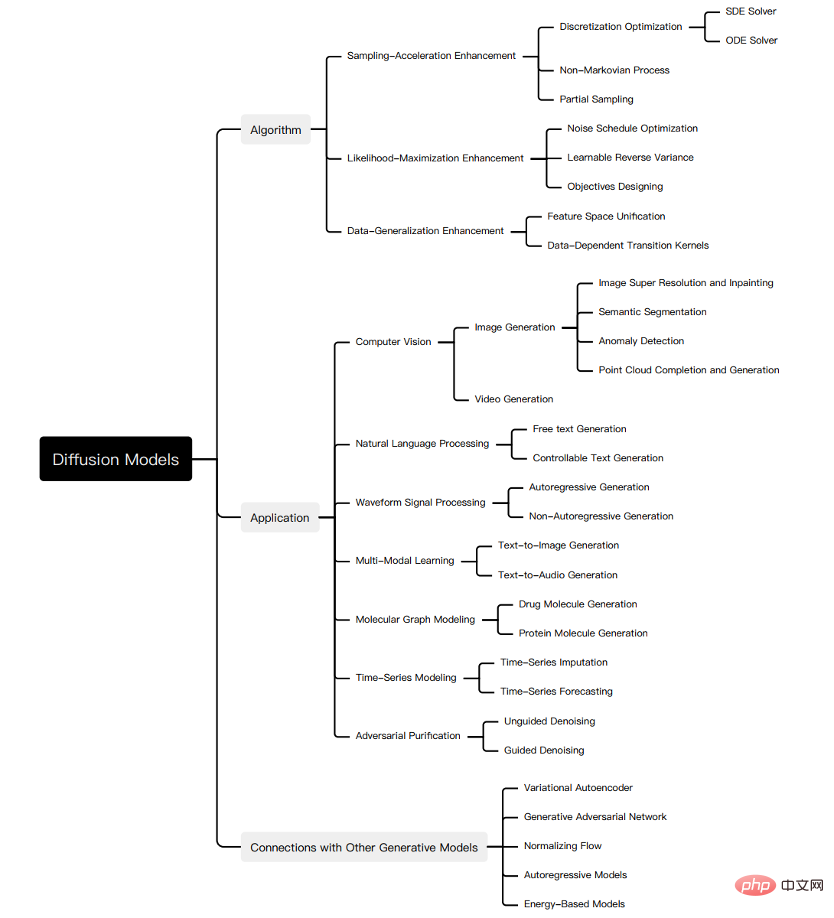

However, there is currently a lack of systematic review of recent advances in diffusion models from algorithms to applications. To reflect the progress in this rapidly growing field, we present the first comprehensive review of diffusion models. We envision that our work will shed light on the design considerations and advanced methods of diffusion models, demonstrate their applications in different fields, and point to future research directions. The summary of this review is shown in the figure below:

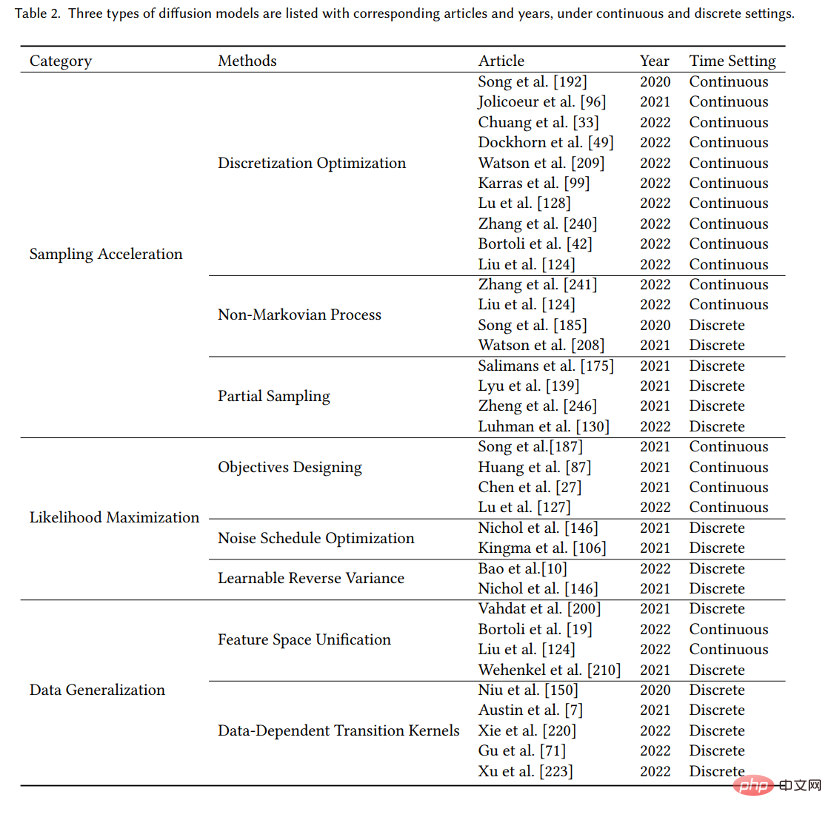

Although diffusion models perform excellently across many tasks, they still have shortcomings, and many studies have improved on them. To systematically clarify research progress on diffusion models, we summarize three main shortcomings of the original diffusion model (slow sampling, poor maximum likelihood, and weak data generalization) and divide the improvement research into three corresponding categories: sampling speed improvement, maximum likelihood enhancement, and data generalization enhancement. We first explain the motivation for each kind of improvement, then further classify the research in each direction by the characteristics of its methods, to show clearly the connections and differences between methods. Here we select only some important methods as examples; each type of method is introduced in detail in our work, as shown in the figure:

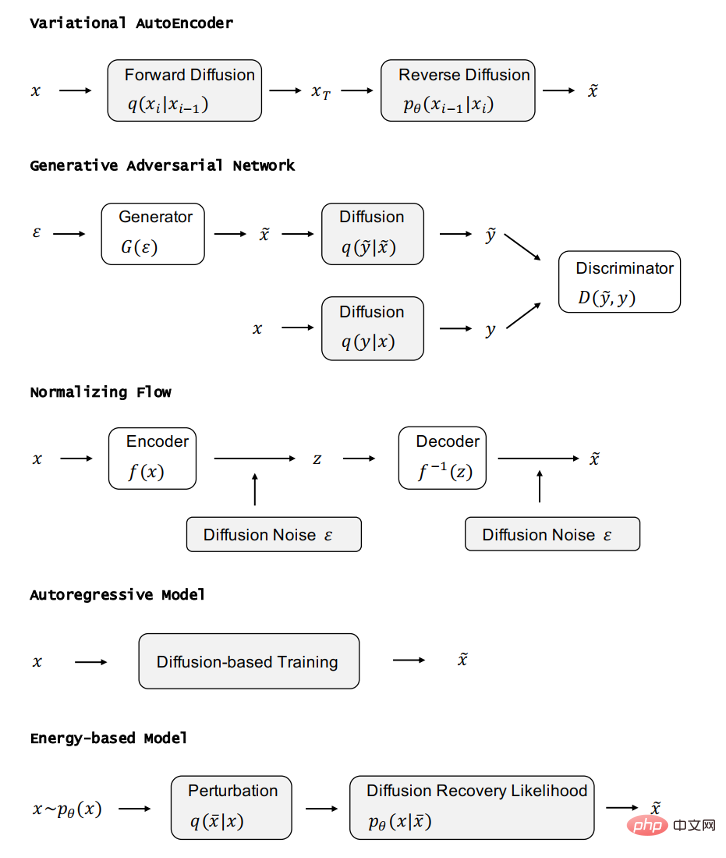

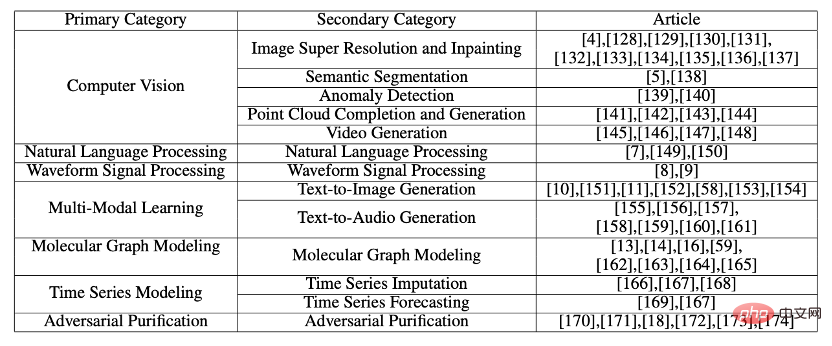

After analyzing the three types of improved diffusion models, we introduce five other generative models: GAN, VAE, autoregressive models, normalizing flows, and energy-based models. Given the attractive properties of diffusion models, researchers have combined them with other generative models according to those models' characteristics. To further demonstrate the characteristics and improvements of diffusion models, we describe in detail the work that combines diffusion models with other generative models and illustrate how it improves on the original generative models. Diffusion models perform excellently in many fields and take different forms in different applications, so we systematically introduce their applications in the following fields: computer vision, NLP, waveform signal processing, multimodal modeling, molecular graph modeling, time series modeling, and adversarial purification.

For each task, we define the task and introduce work that uses diffusion models to address it. The main contributions of this survey are as follows:

- New taxonomy: We propose a new, systematic classification of diffusion models and their applications. Specifically, we divide the models into three categories: sampling speed enhancement, maximum likelihood estimation enhancement, and data generalization enhancement. We further classify the applications of diffusion models into seven categories: computer vision, NLP, waveform signal processing, multimodal modeling, molecular graph modeling, time series modeling, and adversarial purification.

- Comprehensive Review: We provide the first comprehensive overview of modern diffusion models and their applications. We present the main improvements for each diffusion model, make necessary comparisons with the original model, and summarize the corresponding papers. For each type of application of diffusion models, we present the main problems that diffusion models address and explain how they solve these problems.

- Future research directions: We raise open questions for future research and offer suggestions for the future development of diffusion models in both algorithms and applications.

Diffusion Model Basics

A core issue in generative modeling is the trade-off between model flexibility and tractability. The basic idea of diffusion models is to systematically perturb the data distribution through a forward diffusion process and then recover the data distribution by learning a reverse diffusion process, which yields a generative model that is both highly flexible and tractable.

A. Denoising Diffusion Probabilistic Models (DDPM)

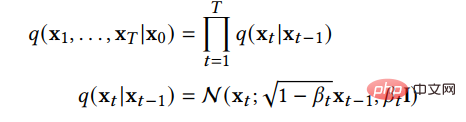

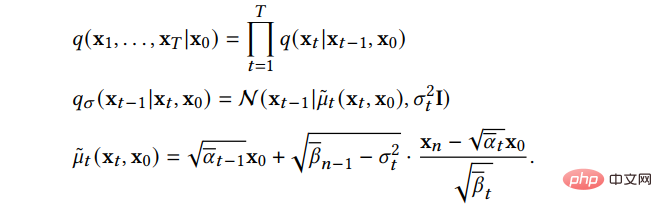

A DDPM consists of two parameterized Markov chains and uses variational inference to generate samples that match the original data distribution after a finite number of steps. The forward chain perturbs the data: following a pre-designed noise schedule, it gradually adds Gaussian noise until the data distribution approaches the prior, a standard Gaussian distribution. The reverse chain starts from this prior and, using parameterized Gaussian transition kernels, learns to gradually restore the original data distribution. Using $x_0 \sim q(x_0)$ to denote the original data and its distribution, the forward chain can be expressed as:

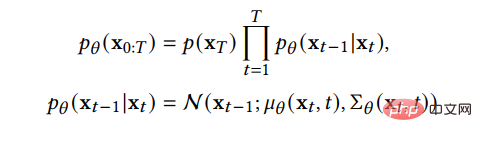

This shows that the forward chain is a Markov process, where $x_t$ is the sample after $t$ steps of noise and $\beta_t$ is a pre-set parameter that controls the noise schedule. When $\bar{\alpha}_T = \prod_{t=1}^{T}(1-\beta_t)$ approaches 0 (equivalently, $1-\bar{\alpha}_T$ tends to 1), $x_T$ approximately follows a standard Gaussian distribution. When $\beta_t$ is small, the transition kernel of the reverse process can also be approximated as Gaussian:

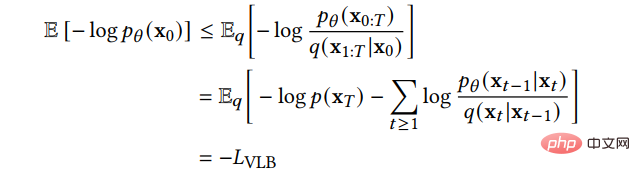
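Because every forward step is Gaussian, the steps compose: $q(x_t \mid x_0) = \mathcal{N}(\sqrt{\bar{\alpha}_t}\,x_0, (1-\bar{\alpha}_t) I)$ with $\bar{\alpha}_t = \prod_{s=1}^{t}(1-\beta_s)$, so $x_t$ can be sampled from $x_0$ in one shot. The sketch below checks this on scalar data in plain Python. It is a minimal illustration, not the survey's code: the linear schedule (1e-4 to 0.02 over 1000 steps, the values used in the original DDPM paper) and all function names are our own assumptions.

```python
import math
import random
import statistics

def linear_betas(num_steps=1000, beta_1=1e-4, beta_T=0.02):
    """Assumed linear noise schedule beta_1, ..., beta_T."""
    return [beta_1 + (beta_T - beta_1) * i / (num_steps - 1) for i in range(num_steps)]

def forward_chain(x0, betas, rng):
    """Apply q(x_t | x_{t-1}) = N(sqrt(1 - beta_t) x_{t-1}, beta_t) one step at a time."""
    x = x0
    for beta in betas:
        x = math.sqrt(1.0 - beta) * x + math.sqrt(beta) * rng.gauss(0.0, 1.0)
    return x

def forward_closed_form(x0, betas, rng):
    """Sample x_t directly from q(x_t | x_0) = N(sqrt(abar_t) x0, 1 - abar_t)."""
    alpha_bar = math.prod(1.0 - beta for beta in betas)
    return math.sqrt(alpha_bar) * x0 + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)

if __name__ == "__main__":
    rng = random.Random(0)
    betas = linear_betas()[:200]  # first 200 steps of the schedule
    chain = [forward_chain(3.0, betas, rng) for _ in range(2000)]
    direct = [forward_closed_form(3.0, betas, rng) for _ in range(2000)]
    # Both sample sets should agree in mean and spread up to Monte Carlo error.
    print(statistics.mean(chain), statistics.mean(direct))
    print(statistics.stdev(chain), statistics.stdev(direct))
```

Only the cumulative product $\bar{\alpha}_t$ is needed, which is why training can draw a random $t$ per example instead of simulating the whole chain.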

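Training minimizes the negative variational lower bound (VLB) on the log-likelihood, which decomposes into a prior term, per-step KL terms, and a reconstruction term:

$$\mathcal{L}_{\mathrm{VLB}} = \mathbb{E}_{q}\Big[D_{\mathrm{KL}}\big(q(x_T \mid x_0)\,\|\,p(x_T)\big) + \sum_{t=2}^{T} D_{\mathrm{KL}}\big(q(x_{t-1} \mid x_t, x_0)\,\|\,p_\theta(x_{t-1} \mid x_t)\big) - \log p_\theta(x_0 \mid x_1)\Big]$$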

B. Score-Based Generative Models (SGM)

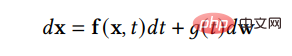

The DDPM above can be regarded as a discrete-time form of SGM. SGM constructs a stochastic differential equation (SDE) that smoothly perturbs the data distribution, transforming the original data distribution into a known prior distribution:

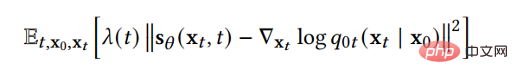

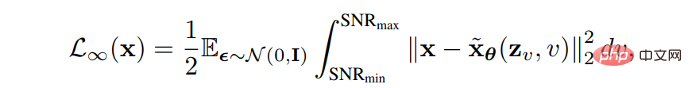

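Therefore, to reverse the diffusion process and generate data, the only information we need is the score function $\nabla_x \log p_t(x)$ at each time point. Using score-matching techniques, the score function can be learned by a model $s_\theta(x_t, t)$ that minimizes the following loss, where $\lambda(t)$ is a positive weighting function and $p_{0t}(x_t \mid x_0)$ is the forward transition kernel:

$$\mathbb{E}_{t}\Big\{\lambda(t)\,\mathbb{E}_{x_0}\mathbb{E}_{x_t \mid x_0}\big[\lVert s_\theta(x_t, t) - \nabla_{x_t}\log p_{0t}(x_t \mid x_0)\rVert_2^2\big]\Big\}$$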
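The score-matching loss is usually estimated by denoising score matching: perturb a clean sample $x_0$ with Gaussian noise of scale $\sigma$ and regress the model onto the kernel's score $-(x_t - x_0)/\sigma^2$. The sketch below is a hypothetical toy, not the survey's code: 1-D data $\mathcal{N}(m, s^2)$ at a single noise level, where the true score of the perturbed data, $-(x - m)/(s^2 + \sigma^2)$, is known. It estimates the loss by Monte Carlo for that true score and for a deliberately wrong one; the true score should score lower, which is what makes the objective usable for learning.

```python
import random

def dsm_loss(score_fn, mean, std, sigma, n=20000, seed=0):
    """Monte Carlo estimate of E[(s(x_t) - grad log p(x_t | x_0))^2] at one noise level."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x0 = rng.gauss(mean, std)              # clean sample from the data distribution
        xt = x0 + sigma * rng.gauss(0.0, 1.0)  # perturb with N(0, sigma^2) noise
        target = -(xt - x0) / sigma ** 2       # score of the kernel p(x_t | x_0)
        total += (score_fn(xt) - target) ** 2
    return total / n

MEAN, STD, SIGMA = 2.0, 0.5, 0.5  # illustrative toy values

def true_score(x):
    """Score of the perturbed marginal N(MEAN, STD^2 + SIGMA^2)."""
    return -(x - MEAN) / (STD ** 2 + SIGMA ** 2)

def wrong_score(x):
    """A mis-specified score that ignores the data variance."""
    return -(x - MEAN) / SIGMA ** 2

if __name__ == "__main__":
    print(dsm_loss(true_score, MEAN, STD, SIGMA), dsm_loss(wrong_score, MEAN, STD, SIGMA))
```

With these values the analytic losses are 2 for the true score (the irreducible part of the objective) and 4 for the wrong one, so the estimates should land near those numbers up to Monte Carlo error.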

See our survey for a further introduction to the two methods and the relationship between them.

The original diffusion model has three main shortcomings: slow sampling, poor maximum likelihood, and weak data generalization. Many recent studies have addressed these shortcomings, so we classify improved diffusion models into three categories: sampling speed enhancement, maximum likelihood enhancement, and data generalization enhancement. The next three sections introduce each category in detail.

Sampling acceleration method

In practice, to achieve the best sample quality, diffusion models often require thousands of computation steps to obtain a single new sample. This limits their practical value, since real applications often need to generate large numbers of new samples as material for downstream processing. Researchers have therefore studied extensively how to speed up diffusion model sampling. We group these studies into three categories: Discretization Optimization, Non-Markovian Process, and Partial Sampling.

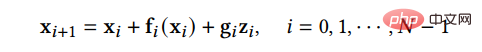

A. Discretization Optimization methods improve how the diffusion SDE is solved. Because in practice a complex SDE can only be approximated by a discrete numerical solution, these methods optimize the discretization scheme to reduce the number of discrete steps while preserving sample quality. SGM proposes a general approach to solving the reverse process: apply the same discretization to both the forward and reverse processes. If the forward SDE is discretized as:

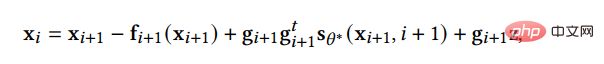

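then the reverse SDE can be discretized in the same way:

$$x_i = x_{i+1} - f_{i+1}(x_{i+1}) + G_{i+1}G_{i+1}^{\top}\, s_{\theta}(x_{i+1}, i+1) + G_{i+1}\, z_{i+1}$$

where $f_i$ and $G_i$ are the drift and diffusion terms of the forward discretization, $s_\theta$ is the trained score model, and $z_i \sim \mathcal{N}(0, I)$.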

This method is slightly better than simple DDPM. Furthermore, SGM adds a corrector to the SDE solver so that the samples generated at each step have the correct distribution. At each step of the solution, after the solver is given a sample, the corrector uses a Markov chain Monte Carlo method to correct the distribution of the just-generated sample. Experiments show that adding a corrector to the solver is more efficient than directly increasing the number of steps in the solver.
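The predictor-corrector idea can be sketched on a toy problem where the exact score is known, so no network is needed. This is a minimal sketch under our own assumptions, not SGM's implementation: 1-D data $\mathcal{N}(m, s^2)$, a variance-exploding SDE (zero drift) with a geometric noise schedule from 0.01 to 10, one reverse-diffusion predictor step and one Langevin corrector step per noise level, and a simplified corrector step size $2(r\sigma_i)^2$ with signal-to-noise parameter $r = 0.16$. The closed-form Gaussian score stands in for a trained score model.

```python
import math
import random
import statistics

def geometric_sigmas(sigma_min, sigma_max, num_steps):
    """Noise levels sigma_0 < ... < sigma_N, evenly spaced in log scale (assumed schedule)."""
    ratio = (sigma_max / sigma_min) ** (1.0 / num_steps)
    return [sigma_min * ratio ** i for i in range(num_steps + 1)]

def toy_score(x, sigma, mean, std):
    """Exact score of N(mean, std^2) convolved with N(0, sigma^2); stands in for s_theta."""
    return -(x - mean) / (std ** 2 + sigma ** 2)

def pc_sample(n_samples, mean, std, num_steps=100, snr=0.16,
              sigma_min=0.01, sigma_max=10.0, seed=0):
    rng = random.Random(seed)
    sigmas = geometric_sigmas(sigma_min, sigma_max, num_steps)
    samples = []
    for _ in range(n_samples):
        x = rng.gauss(0.0, sigma_max)  # draw from the prior N(0, sigma_max^2)
        for i in range(num_steps - 1, -1, -1):
            hi, lo = sigmas[i + 1], sigmas[i]
            # Predictor: reverse-diffusion step from noise level hi down to lo.
            delta = hi ** 2 - lo ** 2
            x += delta * toy_score(x, hi, mean, std) + math.sqrt(delta) * rng.gauss(0.0, 1.0)
            # Corrector: one Langevin MCMC step targeting the marginal at level lo.
            eps = 2.0 * (snr * lo) ** 2
            x += eps * toy_score(x, lo, mean, std) + math.sqrt(2.0 * eps) * rng.gauss(0.0, 1.0)
        samples.append(x)
    return samples

if __name__ == "__main__":
    out = pc_sample(4000, mean=2.0, std=0.5)
    print(statistics.mean(out), statistics.pstdev(out))  # should be close to 2.0 and 0.5
```

The predictor is the zero-drift case of the discretized reverse SDE; the corrector only nudges each sample toward the current marginal, so it can be dropped or repeated independently of the predictor.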

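B. Non-Markovian Process methods break through the limitations of the original Markovian process: each step of the reverse process can rely on more past samples to predict a new sample, so good predictions remain possible even with large step sizes, which speeds up sampling. The main work here, DDIM, no longer assumes that the forward process is Markovian; instead it follows the distribution below, where $\bar{\alpha}_t = \prod_{s=1}^{t}(1-\beta_s)$ and $\sigma_t$ controls the stochasticity of each step:

$$q_\sigma(x_{t-1} \mid x_t, x_0) = \mathcal{N}\Big(\sqrt{\bar{\alpha}_{t-1}}\,x_0 + \sqrt{1-\bar{\alpha}_{t-1}-\sigma_t^2}\cdot\frac{x_t-\sqrt{\bar{\alpha}_t}\,x_0}{\sqrt{1-\bar{\alpha}_t}},\ \sigma_t^2 I\Big)$$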

The sampling process of DDIM can be treated as a discretized neural ordinary differential equation (ODE), which makes sampling more efficient and supports interpolation between samples. Further research found that DDIM can be regarded as a special case of PNDM (pseudo numerical methods for diffusion models on manifolds).
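The deterministic DDIM update (no fresh noise per step) predicts $\hat{x}_0 = (x_t - \sqrt{1-\bar{\alpha}_t}\,\hat{\epsilon})/\sqrt{\bar{\alpha}_t}$ from the noise prediction $\hat{\epsilon}$ and jumps to an earlier step $s$ with $x_s = \sqrt{\bar{\alpha}_s}\,\hat{x}_0 + \sqrt{1-\bar{\alpha}_s}\,\hat{\epsilon}$. Because $s$ need not be $t-1$, far fewer steps can be used. The sketch below is a minimal illustration under our own assumptions: scalar Gaussian data, whose optimal noise predictor $\mathbb{E}[\epsilon \mid x_t]$ has a closed form and stands in for a trained $\epsilon_\theta$, and an assumed linear $\beta$ schedule; it runs 100 DDIM steps over a 1000-step schedule.

```python
import math
import random
import statistics

def linear_alpha_bars(num_steps=1000, beta_1=1e-4, beta_T=0.02):
    """Cumulative products abar_t = prod_{i<=t} (1 - beta_i) for an assumed linear schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_1 + (beta_T - beta_1) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def toy_eps(x, alpha_bar, mean, std):
    """Exact E[eps | x_t] for Gaussian data N(mean, std^2); stands in for eps_theta."""
    var_t = alpha_bar * std ** 2 + (1.0 - alpha_bar)
    return math.sqrt(1.0 - alpha_bar) * (x - math.sqrt(alpha_bar) * mean) / var_t

def ddim_sample(n_samples, mean, std, stride=10, seed=0):
    rng = random.Random(seed)
    alpha_bars = linear_alpha_bars()
    timesteps = list(range(0, len(alpha_bars), stride))[::-1]  # 990, 980, ..., 0
    samples = []
    for _ in range(n_samples):
        x = rng.gauss(0.0, 1.0)  # start from the standard Gaussian prior
        for k, t in enumerate(timesteps):
            a_t = alpha_bars[t]
            # abar of the next (earlier) timestep; 1.0 after the last step means clean data.
            a_prev = alpha_bars[timesteps[k + 1]] if k + 1 < len(timesteps) else 1.0
            eps = toy_eps(x, a_t, mean, std)
            x0_pred = (x - math.sqrt(1.0 - a_t) * eps) / math.sqrt(a_t)
            x = math.sqrt(a_prev) * x0_pred + math.sqrt(1.0 - a_prev) * eps
        samples.append(x)
    return samples

if __name__ == "__main__":
    out = ddim_sample(4000, mean=2.0, std=0.5)  # 100 deterministic steps instead of 1000
    print(statistics.mean(out), statistics.pstdev(out))
```

Since no noise is injected after the initial draw, each starting point maps to exactly one sample, which is what enables the interpolation mentioned above; the output should be close to, and slightly narrower than, the target $\mathcal{N}(2, 0.5^2)$ because of the coarse steps.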

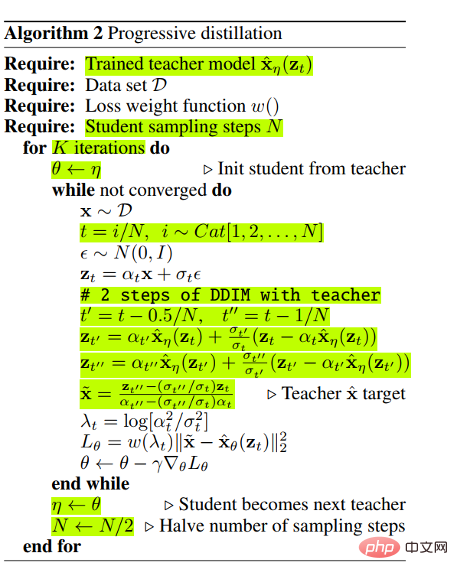

C. Partial Sampling methods skip some time steps in the generation process and generate samples using only the remaining ones, which directly reduces sampling time. For example, Progressive Distillation distills a more efficient diffusion model from a trained one: given a trained diffusion model, it trains a new diffusion model so that one step of the new model corresponds to two steps of the trained model, allowing the new model to skip half of the old model's sampling steps.

Repeating this distillation process halves the number of sampling steps in each round, so the step count decreases exponentially.
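Each distillation round needs a regression target for the student. The sketch below follows the target derivation in the Progressive Distillation paper for deterministic DDIM steps: run two teacher steps $t \to t - \tfrac{1}{2N} \to t - \tfrac{1}{N}$ (with $N$ student steps), then solve for the $x_0$ prediction $\tilde{x}$ that makes a single student step land on the same point. The cosine $\alpha_t/\sigma_t$ schedule and the Gaussian stand-in teacher are our assumptions, and the student training loop (regressing the student's $x_0$ prediction onto $\tilde{x}$) is omitted.

```python
import math

def alpha_sigma(t):
    """Assumed variance-preserving cosine schedule: alpha_t^2 + sigma_t^2 = 1, t in [0, 1]."""
    return math.cos(0.5 * math.pi * t), math.sin(0.5 * math.pi * t)

def teacher_x0(z, t, mean=2.0, std=0.5):
    """Hypothetical stand-in teacher: exact E[x_0 | z_t] for Gaussian data N(mean, std^2)."""
    a, s = alpha_sigma(t)
    return mean + a * std ** 2 * (z - a * mean) / (a ** 2 * std ** 2 + s ** 2)

def ddim_step(z, x0_hat, t, t_next):
    """Deterministic DDIM update from time t to t_next given an x_0 prediction."""
    a_t, s_t = alpha_sigma(t)
    a_n, s_n = alpha_sigma(t_next)
    return a_n * x0_hat + (s_n / s_t) * (z - a_t * x0_hat)

def distillation_target(z_t, t, n_student_steps):
    """Student x_0 target such that ONE student step matches TWO teacher steps.

    Returns (target, teacher_endpoint).
    """
    t_mid = t - 0.5 / n_student_steps
    t_end = t - 1.0 / n_student_steps
    z_mid = ddim_step(z_t, teacher_x0(z_t, t), t, t_mid)
    z_end = ddim_step(z_mid, teacher_x0(z_mid, t_mid), t_mid, t_end)
    a_t, s_t = alpha_sigma(t)
    a_e, s_e = alpha_sigma(t_end)
    ratio = s_e / s_t
    # Invert the one-step DDIM update so that it lands exactly on z_end.
    return (z_end - ratio * z_t) / (a_e - ratio * a_t), z_end

if __name__ == "__main__":
    target, z_end = distillation_target(z_t=0.7, t=0.5, n_student_steps=8)
    # A single student step using the target reproduces the teacher's two steps.
    print(ddim_step(0.7, target, 0.5, 0.5 - 1.0 / 8), z_end)
```

Because each round halves the step count, a 1024-step teacher would need 8 rounds to reach a 4-step student.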

Maximum likelihood estimation enhancement

Diffusion models perform worse at maximum likelihood estimation than likelihood-based generative models, yet good likelihood estimates matter in many applications, such as image compression, semi-supervised learning, and adversarial purification. Because the log-likelihood is hard to compute directly, research mainly focuses on optimizing and analyzing the variational lower bound (VLB). We describe models that improve the maximum likelihood estimates of diffusion models in detail and group them into three categories: Objectives Designing, Noise Schedule Optimization, and Learnable Reverse Variance.

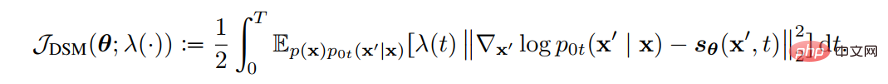

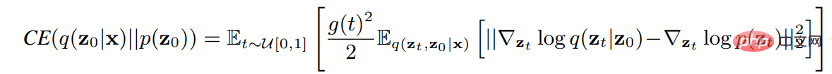

A. Objectives Designing methods use the diffusion SDE to relate the log-likelihood of the generated data to the score-matching loss, so that an appropriately designed loss maximizes the VLB and the log-likelihood. Song et al. proved that the weighting function of the loss can be chosen so that the negative log-likelihood of samples generated by the plug-in reverse SDE is bounded above by the loss; that is, the loss is an upper bound on the negative log-likelihood. The score-matching loss is:

$$\mathcal{J}_{\mathrm{SM}}(\theta; \lambda) = \frac{1}{2}\int_0^T \lambda(t)\,\mathbb{E}_{p_t(x)}\big[\lVert \nabla_x \log p_t(x) - s_\theta(x, t)\rVert_2^2\big]\,dt$$

Setting the weighting function to the square of the diffusion coefficient, $\lambda(t) = g(t)^2$, is enough for the loss to yield a VLB on the log-likelihood, that is:

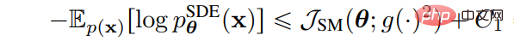

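B. Noise Schedule Optimization methods increase the VLB by designing or learning the noise schedule of the forward process. VDM proves that as the number of discrete steps approaches infinity, the loss is determined entirely by the endpoints of the signal-to-noise ratio function SNR(t), where $z^v$ denotes the noisy sample at SNR $v$ and $\tilde{x}_\theta$ the model's denoised estimate:

$$\mathcal{L}_\infty(x) = \frac{1}{2}\,\mathbb{E}_{\epsilon \sim \mathcal{N}(0, I)}\int_{\mathrm{SNR}_{\min}}^{\mathrm{SNR}_{\max}} \lVert x - \tilde{x}_\theta(z^v, v)\rVert_2^2\,dv$$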

Therefore, as the number of discrete steps approaches infinity, the VLB can be optimized by learning the endpoints of SNR(t), while the values of SNR(t) between the endpoints can be learned to improve other aspects of the model, for example to reduce the variance of the loss estimator, as VDM does.
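The endpoint-only dependence can be checked numerically. In continuous time the diffusion loss is $\frac{1}{2}\int_0^1 -\mathrm{SNR}'(t)\,\mathbb{E}\lVert x - \hat{x}\rVert^2\,dt$, and substituting $v = \mathrm{SNR}(t)$ removes the schedule. The sketch below is a toy under our own assumptions: 1-D data $\mathcal{N}(0, 0.25)$ with the optimal denoiser, whose error at SNR $v$ is $s^2/(1 + v s^2)$, two hypothetical schedules sharing the endpoints $10^4$ and $10^{-4}$, and only the diffusion term of the VLB (prior and reconstruction terms are ignored).

```python
import math

SNR_MAX, SNR_MIN = 1e4, 1e-4  # assumed endpoints SNR(0) and SNR(1)

def linear_log_snr(t):
    """log SNR decreasing linearly in t (one assumed schedule)."""
    return math.log(SNR_MAX) + t * (math.log(SNR_MIN) - math.log(SNR_MAX))

def quadratic_log_snr(t):
    """A different schedule with the same endpoints."""
    return math.log(SNR_MAX) + t * t * (math.log(SNR_MIN) - math.log(SNR_MAX))

def mmse(snr, data_var):
    """Optimal denoising error E[(x - x_hat)^2] for 1-D data N(0, data_var) at a given SNR."""
    return data_var / (1.0 + snr * data_var)

def continuous_loss(log_snr, data_var, n=20000):
    """0.5 * integral_0^1 -SNR'(t) * mmse(SNR(t)) dt, as a Riemann-Stieltjes sum over t."""
    total = 0.0
    for k in range(n):
        t0, t1 = k / n, (k + 1) / n
        snr0, snr1 = math.exp(log_snr(t0)), math.exp(log_snr(t1))
        snr_mid = math.exp(log_snr(0.5 * (t0 + t1)))
        total += (snr0 - snr1) * mmse(snr_mid, data_var)
    return 0.5 * total

def closed_form_loss(data_var):
    """The same integral done analytically in v = SNR; depends only on the endpoints."""
    return 0.5 * math.log((1.0 + SNR_MAX * data_var) / (1.0 + SNR_MIN * data_var))

if __name__ == "__main__":
    print(continuous_loss(linear_log_snr, 0.25), continuous_loss(quadratic_log_snr, 0.25),
          closed_form_loss(0.25))
```

Both schedules give the same value as the closed form, so changing the interior of SNR(t) leaves this loss unchanged; it only affects how noisy a sampled estimate of it is.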

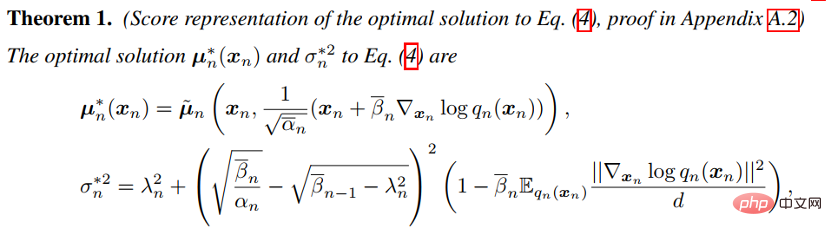

C. Learnable Reverse Variance methods learn the variance of the reverse process, which reduces the fitting error and effectively maximizes the VLB. Analytic-DPM proves that the reverse processes of DDPM and DDIM have an optimal mean and variance, both of which can be written in closed form in terms of the score function.

Plugging a trained score model into these optimal expressions approximately achieves the optimal VLB for a given forward process.

Data Generalization Enhancement

Diffusion models assume that data lives in Euclidean space, that is, on a manifold with flat geometry, and adding Gaussian noise inevitably maps the data into a continuous state space. As a result, diffusion models could initially handle only continuous data such as images, and applying them directly to discrete data or other data types works poorly, which limits their application scenarios. Several works generalize diffusion models to other data types; we describe these methods in detail and group them into two categories: Feature Space Unification and Data-Dependent Transition Kernels.

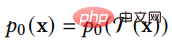

A. Feature Space Unification methods convert data into a unified latent space and then run the diffusion process in that latent space. LSGM proposes mapping the data into a continuous latent space with a VAE and diffusing there. The difficulty lies in training the VAE and the diffusion model jointly. LSGM shows that because the latent prior is intractable, the standard score-matching loss no longer applies. It therefore uses the VAE's ELBO directly as the loss and derives the relationship between the ELBO's cross-entropy term and score matching:

$$\mathrm{CE}\big(q(z_0 \mid x)\,\|\,p(z_0)\big) = \mathbb{E}_{t \sim \mathcal{U}[0,1]}\Big[\frac{g(t)^2}{2}\,\mathbb{E}_{q(z_t, z_0 \mid x)}\big[\lVert \nabla_{z_t}\log q(z_t \mid z_0) - \nabla_{z_t}\log p(z_t)\rVert_2^2\big]\Big] + \text{const}$$

This equation holds up to a constant. By parameterizing the score function of the latent samples in the diffusion process, LSGM can learn and optimize the ELBO efficiently.

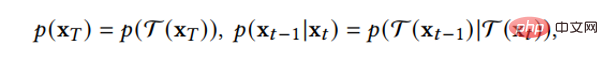

B. Data-Dependent Transition Kernels methods design the transition kernels of the diffusion process around the characteristics of a data type, so that diffusion models can be applied to that data type directly. D3PM designs transition kernels for discrete data, which can be set to a lazy random walk, an absorbing state, and so on. GeoDiff designs a translation- and rotation-invariant graph neural network for 3D molecular graph data and proves that an invariant initial distribution together with an equivariant transition kernel yields an invariant marginal distribution. Suppose $T_g$ is a translation-rotation transformation such that:

$$p(x_T) = p\big(T_g(x_T)\big), \qquad p_\theta(x_{t-1} \mid x_t) = p_\theta\big(T_g(x_{t-1}) \mid T_g(x_t)\big)$$

Then the distribution of generated samples is also translation-rotation invariant:

$$p_\theta(x_0) = p_\theta\big(T_g(x_0)\big)$$
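D3PM-style kernels are transition matrices over $K$ categories. The sketch below is an illustration under our own assumptions rather than D3PM's code: it builds a uniform kernel $Q_t = (1-\beta_t) I + \frac{\beta_t}{K}\mathbf{1}\mathbf{1}^\top$ and an absorbing-state kernel that moves each non-mask token to a [MASK] category with probability $\beta_t$, then propagates a one-hot distribution with the row-vector update $p_t = p_{t-1} Q_t$. The constant $\beta = 0.1$, 100 steps, and $K = 5$ are arbitrary choices.

```python
def uniform_kernel(k, beta):
    """Q_t = (1 - beta) I + beta / K * 1 1^T: stay put, or resample uniformly."""
    return [[(1.0 - beta) * (i == j) + beta / k for j in range(k)] for i in range(k)]

def absorbing_kernel(k, beta, mask):
    """Each non-mask token jumps to the absorbing [MASK] state with probability beta."""
    q = [[float(i == j) for j in range(k)] for i in range(k)]
    for i in range(k):
        if i != mask:
            q[i][i] = 1.0 - beta
            q[i][mask] = beta
    return q

def step(p, q):
    """Row-vector update p_t = p_{t-1} Q_t for a categorical distribution p."""
    k = len(p)
    return [sum(p[i] * q[i][j] for i in range(k)) for j in range(k)]

def forward_marginal(x0, k, kernels):
    """Distribution of x_t after applying the given kernels to a one-hot x_0."""
    p = [float(i == x0) for i in range(k)]
    for q in kernels:
        p = step(p, q)
    return p

if __name__ == "__main__":
    K, MASK = 5, 4
    print(forward_marginal(0, K, [uniform_kernel(K, 0.1)] * 100))        # close to uniform
    print(forward_marginal(0, K, [absorbing_kernel(K, 0.1, MASK)] * 100))  # mass on [MASK]
```

The two kernels end in different stationary distributions (uniform versus all mass on [MASK]), which is the design freedom that lets the prior match the data type.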

Relationship with other generative models

In each part below, we first introduce one of the five other important types of generative models and analyze its advantages and limitations. We then describe how diffusion models relate to it and how it can be improved by incorporating diffusion models. The relationships between VAE, GAN, autoregressive models, normalizing flows, energy-based models, and diffusion models are shown in the survey's figure and summarized below:

- DDPM can be regarded as a hierarchical Markovian VAE, but it also differs from a general VAE: as a VAE, both DDPM's encoder and decoder are Gaussian and have the Markov property; its latent variables have the same dimension as the data; and all layers of the decoder share a single neural network.

- DDPM can help GANs overcome unstable training. Because data lies on a low-dimensional manifold in a high-dimensional space, the distribution of GAN-generated data has little overlap with the distribution of real data, which makes training unstable. Diffusion models provide a systematic noise-adding process: noise is added to both generated and real data through the diffusion process, and the noisy data is then fed to the discriminator. This effectively mitigates GANs failing to train and training unstably.

- Normalizing flows use a bijective function to map data to a prior distribution. This restricts their expressive power and leads to weaker results in practice. By analogy with diffusion models, adding noise in the encoder increases the expressive power of normalizing flows. From another perspective, this approach extends diffusion models to models whose forward process is also learnable.

- Autoregressive models require the data to have a particular structure, which makes designing and parameterizing them difficult. The way diffusion models are trained has inspired training methods for autoregressive models that avoid these design difficulties.

- Energy-based model directly models the distribution of the original data, but direct modeling makes learning and sampling difficult. By using diffusion recovery likelihood, the model can first add slight noise to the sample, and then infer the distribution of the original sample from the slightly noisy sample distribution, making the learning and sampling process simpler and more stable.
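The GAN point above rests on an overlap argument. The sketch below is a toy check of that intuition, not Diffusion-GAN itself: two narrow 1-D Gaussians with distant means stand in for real and generated data, and the Bhattacharyya coefficient $\exp(-(\mu_1-\mu_2)^2 / 8v)$ for equal-variance Gaussians measures their overlap. Adding the same Gaussian noise to both widens the common variance $v$ and raises the overlap. The means, widths, and noise levels are arbitrary illustrative choices.

```python
import math

def bhattacharyya_overlap(mu_real, mu_fake, data_std, noise_std):
    """Bhattacharyya coefficient between N(mu_real, v) and N(mu_fake, v).

    v = data_std^2 + noise_std^2: both distributions get the SAME added Gaussian noise.
    1.0 means identical distributions; values near 0 mean almost no overlap.
    """
    var = data_std ** 2 + noise_std ** 2
    return math.exp(-((mu_real - mu_fake) ** 2) / (8.0 * var))

if __name__ == "__main__":
    for noise in (0.0, 0.5, 1.0, 2.0):
        print(noise, bhattacharyya_overlap(0.0, 3.0, data_std=0.05, noise_std=noise))
```

Without noise the two distributions barely overlap, which is the regime where a discriminator separates them perfectly and its gradients carry little information.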

Application of Diffusion Model

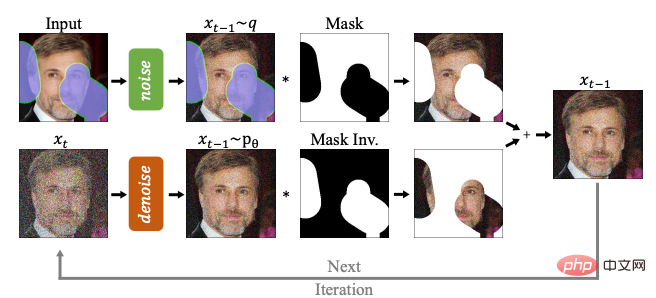

In this section, we introduce applications of diffusion models in seven major directions: computer vision, natural language processing, waveform signal processing, multimodal learning, molecular graph generation, time series, and adversarial learning, and we subdivide and analyze the methods within each type of application. For example, in computer vision, diffusion models can be used for image inpainting (RePaint).

In multimodal tasks, diffusion models can be used for text-to-image generation (GLIDE):

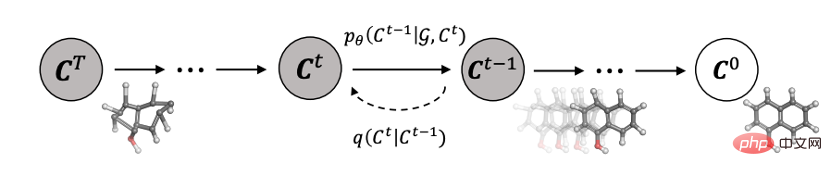

In molecular graph generation, diffusion models can also generate drug molecules and protein molecules (GeoDiff).

The applications are summarized by category in the survey's table.

Future research directions

- Revisit application assumptions. We need to re-examine the commonly accepted assumptions made in applications. For example, in practice it is generally assumed that the forward process of a diffusion model transforms the data into a standard Gaussian distribution, but this is not exactly the case. More forward diffusion steps bring the final sample distribution closer to a standard Gaussian, consistent with the sampling process, but they also make estimating the score function harder. Because the theoretical conditions are hard to meet, theory and practice end up mismatched. We should be aware of this and design diffusion models accordingly.

- From discrete time to continuous time. Thanks to the flexibility of diffusion models, many empirical methods can be strengthened by further analysis. A promising research direction is to convert discrete-time models into their continuous-time counterparts and then design more and better discretization methods.

- New generation processes. Diffusion models generate samples in two main ways: one discretizes the reverse diffusion SDE and generates samples through the discretized reverse SDE; the other uses the Markov property of the reverse process to denoise samples progressively. For some tasks, however, these approaches are hard to apply in practice, so further research into new generative processes and perspectives is needed.

- Generalize to more complex scenarios and more research areas. Although diffusion models have been applied to many scenarios, most are limited to single-input, single-output settings. Future work could apply them to more complex scenarios, such as text-to-audiovisual speech synthesis, and combine them with more research fields.
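The first direction above can be made concrete for DDPM. Whenever $\bar{\alpha}_T > 0$, $q(x_T \mid x_0) = \mathcal{N}(\sqrt{\bar{\alpha}_T}\,x_0, (1-\bar{\alpha}_T) I)$ never equals the standard Gaussian exactly. The sketch below computes the per-dimension KL divergence from $\mathcal{N}(0, 1)$ for a scalar $x_0$ with the Gaussian KL formula $\frac{1}{2}(v + \mu^2 - 1 - \ln v)$, assuming a linear $\beta$ schedule whose endpoints stay fixed while $T$ varies: the gap shrinks as $T$ grows but stays positive.

```python
import math

def alpha_bar_T(num_steps, beta_1=1e-4, beta_T=0.02):
    """abar_T for an assumed linear schedule with fixed endpoints and num_steps >= 2."""
    prod = 1.0
    for i in range(num_steps):
        beta = beta_1 + (beta_T - beta_1) * i / (num_steps - 1)
        prod *= 1.0 - beta
    return prod

def prior_gap(x0, num_steps):
    """KL( q(x_T | x_0) || N(0, 1) ) for a scalar x0 under the assumed schedule."""
    ab = alpha_bar_T(num_steps)
    mean, var = math.sqrt(ab) * x0, 1.0 - ab
    return 0.5 * (var + mean ** 2 - 1.0 - math.log(var))

if __name__ == "__main__":
    for T in (50, 100, 200, 1000):
        print(T, prior_gap(1.0, T))
```

The other side of the trade-off, that longer chains make the score harder to estimate, depends on the learned model and is not captured by this calculation.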
