Unified visual AI capabilities! Automatic image detection and segmentation, plus controllable text-to-image generation, from a Chinese team

This article is reprinted with the authorization of AI New Media Qubit (public account ID: QbitAI). Please contact the source for reprinting.

The AI community is now competing on sheer speed.

Meta's SAM launched only a few days ago, and Chinese developers have already stacked more capabilities on top of it, integrating object detection, segmentation, and generation, the major visual AI functions, into a single project!

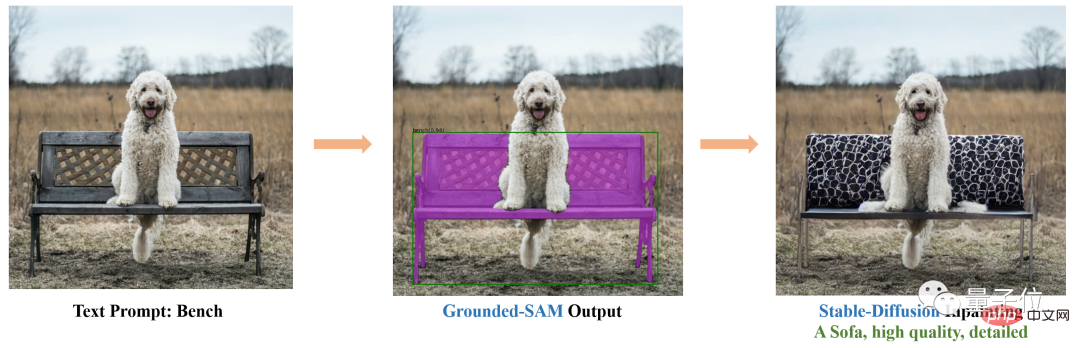

For example, based on Stable Diffusion and SAM, you can seamlessly replace the chair in the photo with a sofa:

Changing clothes or hair color is just as easy:

As soon as the project was released, many people exclaimed: that was fast!

Someone else joked: now I have new wedding photos with Yui Aragaki.

These results come from Grounded-SAM. The project has already received 1.8k stars on GitHub.

Put simply, it is a zero-shot vision application: given only an input image, it automatically detects and segments the objects in it.

This research comes from IDEA Research Institute (Guangdong-Hong Kong-Macao Greater Bay Area Digital Economy Research Institute), whose founder and chairman is Shen Xiangyang.

No additional training required

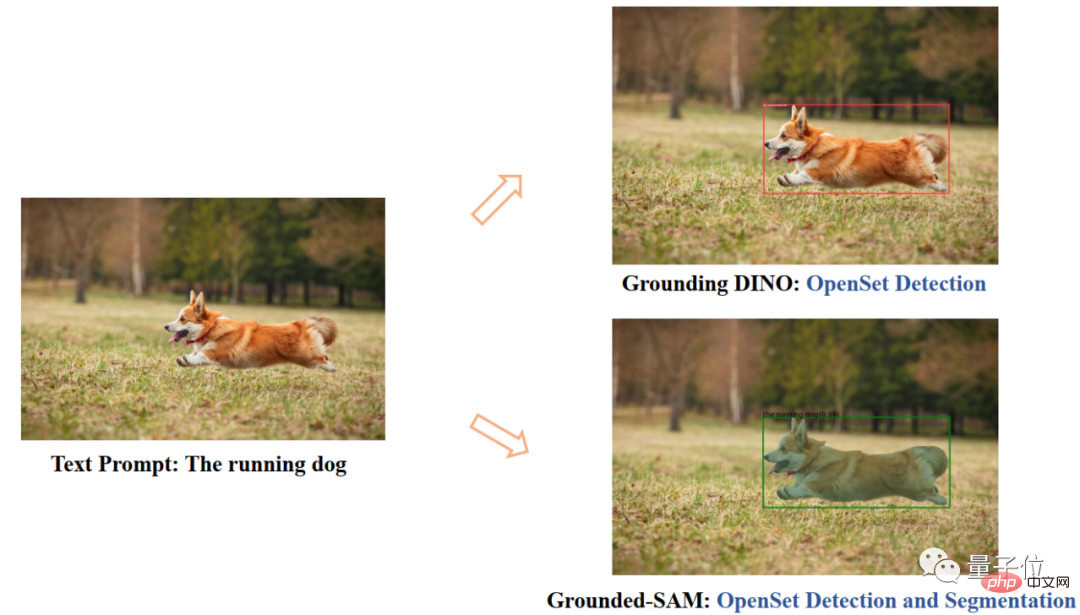

Grounded SAM is mainly composed of two models: Grounding DINO and SAM.

SAM (Segment Anything) is a zero-shot segmentation model that Meta released just four days ago.

It can generate masks for any object in an image or video, including objects and image types that did not appear during training.

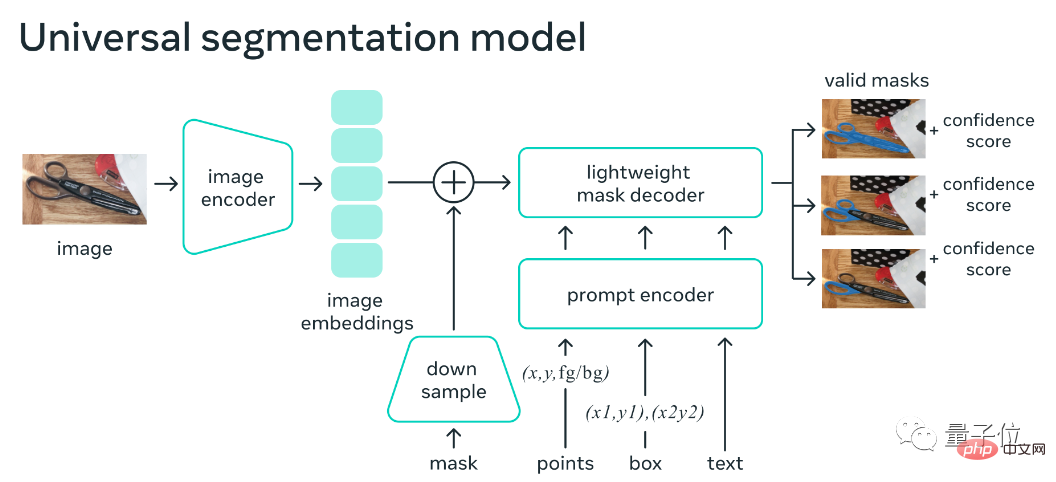

SAM is trained to return a valid mask for any prompt: even when the prompt is ambiguous or could refer to multiple objects, the output should be a reasonable mask among the possibilities. This task is used to pretrain the model and to solve general downstream segmentation tasks via prompting.

The model consists of an image encoder, a prompt encoder, and a fast mask decoder. Once the image embedding has been computed, SAM can produce a segmentation for any prompt in a web browser in about 50 milliseconds.
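The split between a heavy image encoder and a light prompt decoder can be sketched as follows. This is a toy stand-in, not Meta's implementation: the class `ToyPromptableSegmenter`, its methods, and the "embedding" (a simple foreground map) are all hypothetical and only illustrate why computing the image embedding once makes each later prompt cheap.

```python
class ToyPromptableSegmenter:
    """Toy stand-in for a promptable segmenter (hypothetical, not SAM itself)."""

    def __init__(self):
        self.encoder_calls = 0   # how many times the heavy step ran
        self._embedding = None   # cached per-image features

    def set_image(self, image):
        # "Image encoder": the expensive step, run once per image.
        # Here the "embedding" is just a foreground map (pixel value > 0).
        self.encoder_calls += 1
        self._embedding = [[value > 0 for value in row] for row in image]

    def predict(self, box):
        # "Prompt encoder + mask decoder": the cheap step, run once per prompt.
        # The prompt is a box (x0, y0, x1, y1), exclusive on the right and bottom.
        if self._embedding is None:
            raise RuntimeError("call set_image() before predict()")
        x0, y0, x1, y1 = box
        return [
            [fg and x0 <= x < x1 and y0 <= y < y1 for x, fg in enumerate(row)]
            for y, row in enumerate(self._embedding)
        ]


image = [
    [0, 0, 0, 0],
    [0, 5, 5, 0],
    [0, 5, 5, 7],
]
segmenter = ToyPromptableSegmenter()
segmenter.set_image(image)                 # heavy step, once
mask_a = segmenter.predict((1, 1, 3, 3))   # light step, first prompt
mask_b = segmenter.predict((3, 2, 4, 3))   # light step, second prompt
```

Both prompts reuse the same cached embedding, so `encoder_calls` stays at 1 however many prompts follow.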

Grounding DINO is earlier work from the same research team.

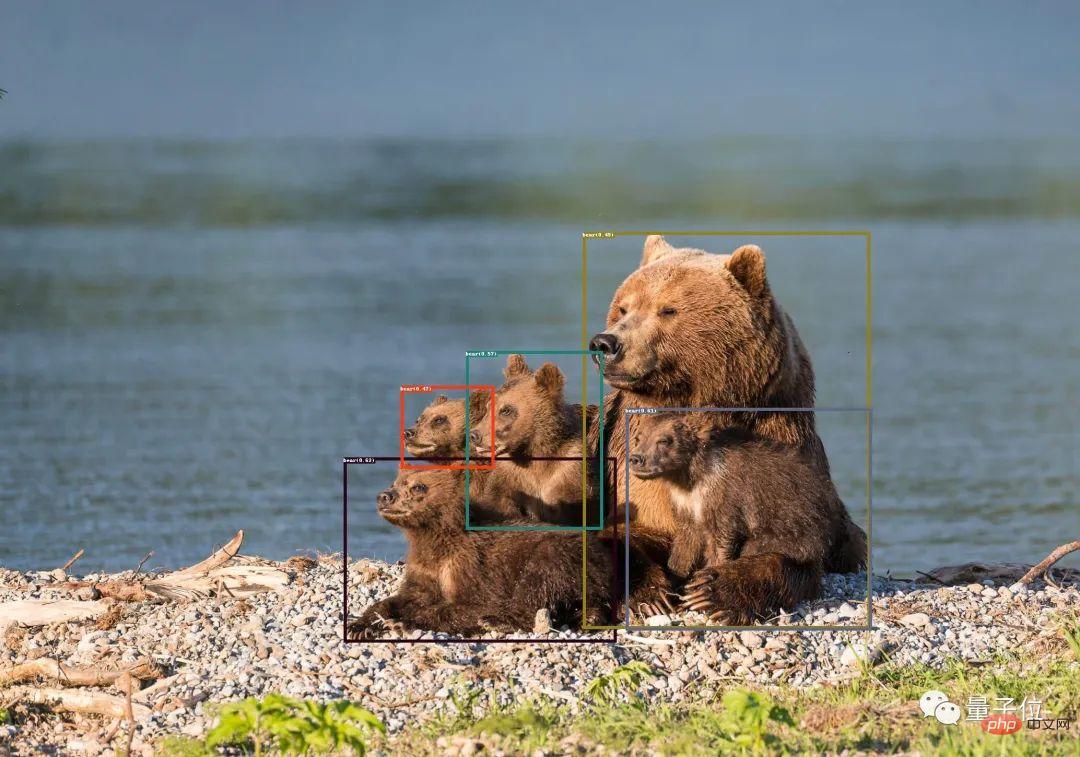

It is a zero-shot detection model that generates object boxes and labels from text descriptions.

Combining the two lets you locate any object in an image from a text description and then use SAM's segmentation ability to produce a fine-grained mask for it.
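The detect-then-segment flow described here can be sketched with two toy stand-ins. Everything in this block is an assumption made for illustration: `toy_detect` plays the role of a text-conditioned detector, `toy_segment` plays the role of a box-prompted segmenter, and the "image" is a small grid of object ids rather than pixels. Neither function reflects the real Grounding DINO or SAM APIs.

```python
from collections import Counter


def toy_detect(scene, labels, phrase):
    """Stand-in detector: text phrase -> bounding box (x0, y0, x1, y1) or None."""
    target = labels.get(phrase)
    cells = [
        (x, y)
        for y, row in enumerate(scene)
        for x, value in enumerate(row)
        if value == target
    ]
    if not cells:
        return None
    xs, ys = zip(*cells)
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def toy_segment(scene, box):
    """Stand-in segmenter: box prompt -> mask of the dominant object in the box."""
    x0, y0, x1, y1 = box
    inside = Counter(scene[y][x] for y in range(y0, y1) for x in range(x0, x1))
    dominant = inside.most_common(1)[0][0]
    return [
        [x0 <= x < x1 and y0 <= y < y1 and value == dominant
         for x, value in enumerate(row)]
        for y, row in enumerate(scene)
    ]


def grounded_segment(scene, labels, phrase):
    """Text -> box -> mask, chaining the two stand-ins."""
    box = toy_detect(scene, labels, phrase)
    if box is None:
        return None
    return box, toy_segment(scene, box)


scene = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
labels = {"floor": 0, "chair": 1}   # hypothetical vocabulary for the toy scene
box, mask = grounded_segment(scene, labels, "chair")
```

The box covers six cells while the mask keeps only the five that belong to the chair, which is the coarse-to-fine refinement the combination is meant to provide.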

On top of this, the team added Stable Diffusion, which provides the controllable image generation shown at the beginning.

It is worth noting that Stable Diffusion could already do something similar: erase the image elements you want to replace and enter a text prompt.

With Grounded SAM, the manual selection step disappears, and the edit is controlled directly through a text description.
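The role the mask plays in such an edit can be shown with a deliberately simplified sketch. It assumes the mask has already been produced from a text description and that some generator has produced replacement content. The helper `replace_region` and the label grids are hypothetical, and a real inpainting model also conditions the generated region on its surroundings, which this toy does not do.

```python
def replace_region(original, generated, mask):
    """Keep `original` where the mask is False, take `generated` where it is True."""
    return [
        [new if m else old for old, new, m in zip(old_row, new_row, mask_row)]
        for old_row, new_row, mask_row in zip(original, generated, mask)
    ]


original = [
    ["wall", "wall", "wall"],
    ["floor", "chair", "chair"],
]
# Hypothetical output of a text-conditioned generator for the prompt "a sofa".
generated = [
    ["sofa", "sofa", "sofa"],
    ["sofa", "sofa", "sofa"],
]
# Mask as it might come from text-driven detection and segmentation of "chair".
mask = [
    [False, False, False],
    [False, True, True],
]
edited = replace_region(original, generated, mask)
```

Only the masked cells change, so everything outside the selected object is left untouched without anyone drawing the selection by hand.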

In addition, combined with BLIP (Bootstrapping Language-Image Pre-training), it can generate an image caption, extract tags from it, and then generate object boxes and masks.

More features are currently under development, such as character edits: changing clothes, hair color, skin color, and so on.

Public information shows that the IDEA Research Institute is an international innovative research institution focused on artificial intelligence, the digital economy industry, and cutting-edge technology. Dr. Shen Xiangyang, former chief scientist of Microsoft Research Asia and former Microsoft global executive vice president, is its founder and chairman.

One More Thing

For future work on Grounded SAM, the team has several directions in mind:

- Automatically generating images to build new datasets

- Stronger foundation models with segmentation pre-training

- Collaboration with (Chat-)GPT

- A full pipeline that automatically generates image labels, boxes, and masks, and can generate new images

It is worth mentioning that many of the project's team members are active answerers on AI topics on Zhihu. They have also answered questions about Grounded SAM there, and interested readers can leave a message to ask.
