LLaMA leaked: Meta's answer to ChatGPT forced to go 'open source'! GitHub project gains 8k stars as a flood of reviews appears

The race to build a ChatGPT rival is heating up.

A few weeks ago, Meta released its own large language model, LLaMA, in sizes ranging from 7 billion to 65 billion parameters.

According to the paper, LLaMA-13B, with less than one-tenth of the parameters of GPT-3 (175 billion), outperforms GPT-3 on most benchmarks.

The 65-billion-parameter LLaMA is competitive with DeepMind's Chinchilla (70 billion parameters) and Google's PaLM (540 billion parameters).

Although Meta describes LLaMA as open source, researchers still have to apply for access and wait for their request to be reviewed.

Unexpectedly, though, just a few days after the release, LLaMA's model files were leaked.

So the question is: was the leak intentional or accidental?

## LLaMA forcibly "open-sourced"?

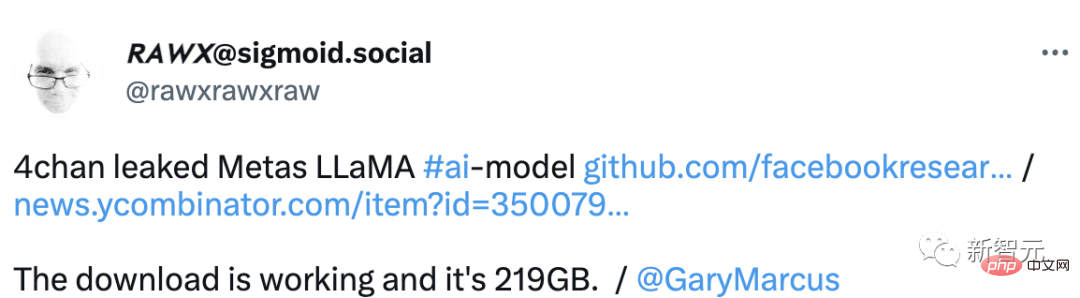

Recently, LLaMA's complete set of model files was leaked on 4chan, an overseas forum.

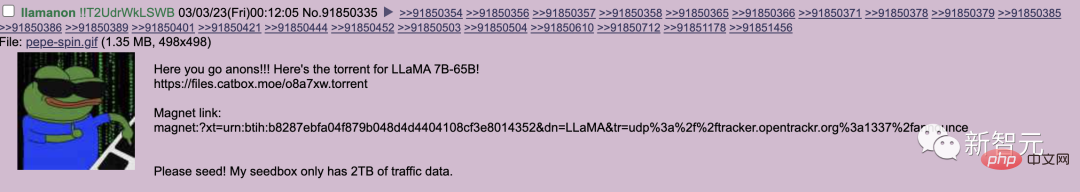

Last Thursday, user llamanon posted the 7B and 65B LLaMA models on 4chan's technology board via a torrent.

This torrent link has since been merged into LLaMA's GitHub page.

He also submitted a second pull request to the project, providing a torrent link to another set of weights for the model.

The project has now received 8k stars on GitHub.

However, one of the biggest mistakes the leaker made was including his unique identifier code in the leaked model.

This code exists specifically to trace leakers, which puts user llamanon's personal information at risk.

As the joke goes: if Meta won't open-source LLaMA gracefully, netizens will do it for them.

Additionally, users on 4chan have created a handy resource for those looking to deploy the model on their own workstations.

It includes a step-by-step tutorial on how to obtain the model and apply modified weights to it for more efficient inference.

What's more, the resource even explains how to integrate LLaMA into KoboldAI, an online writing platform.

As for whether Meta leaked it intentionally or by accident, netizens weighed in one after another.

One netizen offered a very clear analysis: "Maybe Meta leaked it deliberately to counter OpenAI."

Some customers consider this a better model, and it strikes right at the heart of OpenAI's business plan of selling access for $250,000 a year. One month of access to their service costs as much as a machine capable of running this leaked model. Meta is undercutting a potential upstart competitor to keep the current big-tech cartel stable. Maybe this is a bit of a conspiracy theory, but we live in an age of big tech and big conspiracies.

On Monday, Meta said it would continue to release its artificial intelligence tools to accredited researchers even though LLaMA had been leaked to unauthorized users.

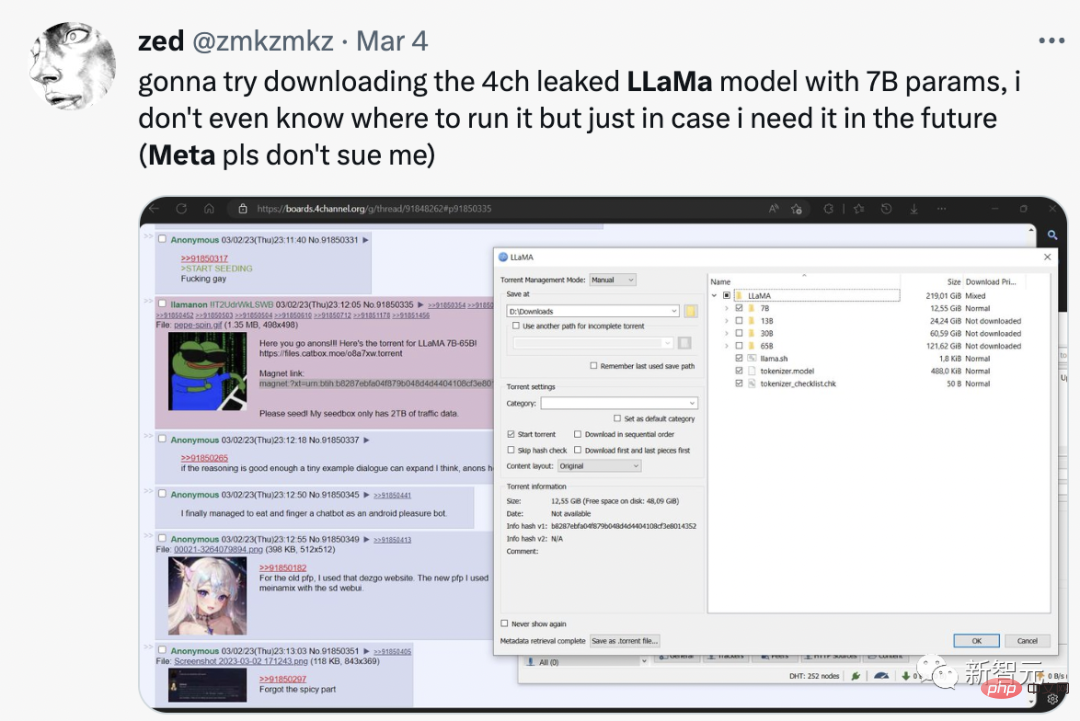

Some netizens said outright that they had downloaded the 7-billion-parameter LLaMA: they don't know how to run it, but they grabbed it just in case they need it in the future.

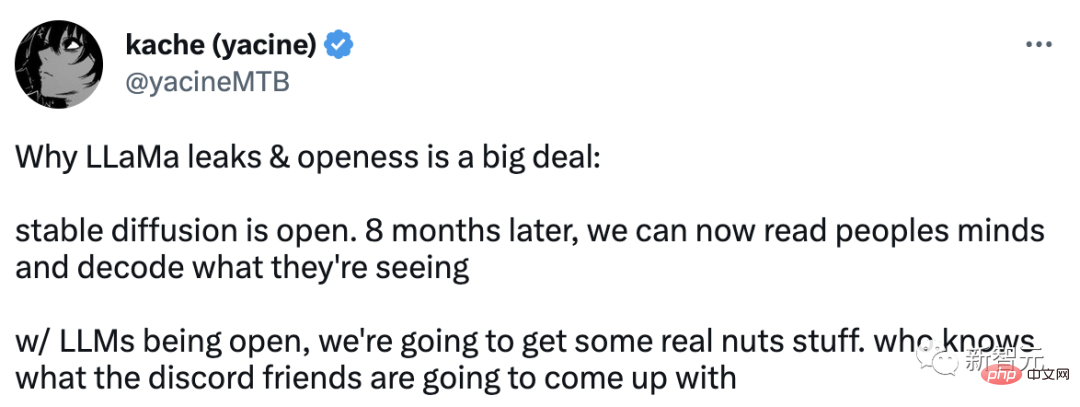

The leak, and de facto open-sourcing, of LLaMA is a big deal:

Stable Diffusion was open-sourced, and eight months later we can read other people's minds and decode everything they see.

With LLMs opening up, we're going to get some really crazy stuff.

Not long after LLaMA was released, netizens found that even the smallest model needed nearly 30 GB of GPU memory to run.

However, by lowering the floating-point precision of the weights with the bitsandbytes library, they were able to get the model running on a single NVIDIA RTX 3060.
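These memory figures come down to simple arithmetic: the weights alone occupy roughly (number of parameters) × (bytes per parameter). The sketch below is a back-of-the-envelope estimate, not a measurement. It assumes a nominal 7 billion parameters, 4/2/1/0.5 bytes per parameter for fp32/fp16/int8/4-bit weights, and the 12 GB desktop RTX 3060; it ignores activations and framework overhead, and the helper names are hypothetical.

```python
# Rough weight-memory estimate for a model stored at different precisions.
# Assumption: memory ~= parameters x bytes per parameter. Activations,
# KV cache and framework overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Size of the weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_in_vram(n_params: float, dtype: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given amount of GPU memory."""
    return weight_memory_gb(n_params, dtype) <= vram_gb


if __name__ == "__main__":
    n_params = 7e9      # nominal size of the smallest LLaMA model
    rtx_3060_gb = 12.0  # assumed: 12 GB desktop RTX 3060
    for dtype in BYTES_PER_PARAM:
        size = weight_memory_gb(n_params, dtype)
        fits = fits_in_vram(n_params, dtype, rtx_3060_gb)
        print(f"{dtype}: {size:.1f} GB, fits on RTX 3060: {fits}")
```

Under these assumptions, fp32 weights come to 28 GB (in line with the "nearly 30 GB" figure if the weights were held in full precision) and fp16 weights to 14 GB; both exceed a 12 GB card, while 8-bit weights (7 GB) fit with room to spare.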

A researcher on GitHub even managed to run the 7B version of the model on a Ryzen 7900X CPU, generating a few words per second.
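Why only a few words per second? A common rule of thumb (an approximation, not something the original report measured) is that generating one token at batch size 1 requires reading every weight from memory once, so throughput is capped at roughly memory bandwidth divided by the size of the weights. The bandwidth value below is a hypothetical placeholder, not a measured figure for the Ryzen 7900X, and the function name is illustrative.

```python
# Back-of-the-envelope upper bound on single-stream decoding speed.
# Assumption: decoding is memory-bandwidth bound, i.e. every weight is read
# once per generated token; compute limits and caches are ignored.


def max_tokens_per_second(weights_gb: float, bandwidth_gb_s: float) -> float:
    """Upper bound on tokens/s when each token requires reading all weights once."""
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gb_s / weights_gb


if __name__ == "__main__":
    assumed_bandwidth = 60.0  # hypothetical system-RAM bandwidth in GB/s
    for label, size_gb in [("7B fp32", 28.0), ("7B fp16", 14.0)]:
        rate = max_tokens_per_second(size_gb, assumed_bandwidth)
        print(f"{label}: at most ~{rate:.1f} tokens/s")
```

With these placeholder numbers the bound lands at a few tokens per second, the same order of magnitude as the reported result; halving the bytes per weight roughly doubles the bound, which is one reason lower-precision weights help on CPUs too.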

So how good is LLaMA, really? Reviewers abroad have put it to the test.

## LLaMA performs well in many tests

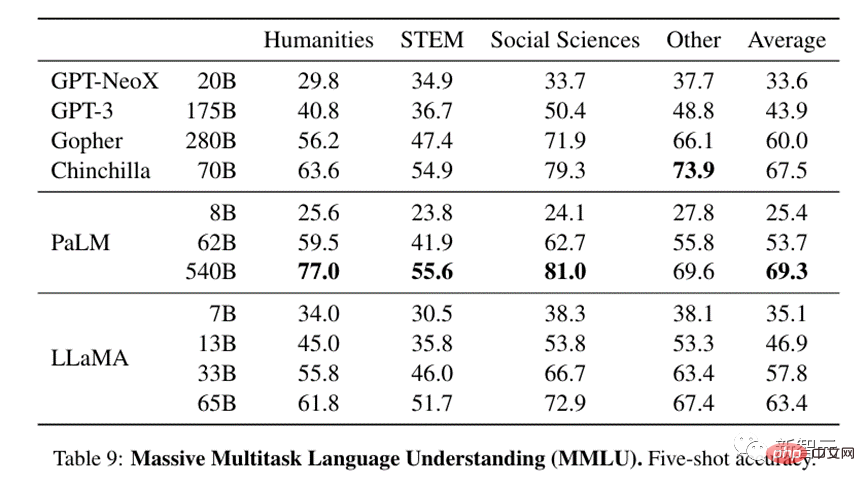

On MMLU (Massive Multitask Language Understanding), even the relatively small 13B model is on par with GPT-3, which is more than 13 times its size.

The 33B version far outperforms GPT-3, and the 65B version can compete with the most powerful LLM of the time, Google's 540B-parameter PaLM.

LLaMA also does well on tasks that require logic or calculation: it is competitive with PaLM on quantitative reasoning, and its code generation is comparable to, or even better than, PaLM's.

Given these results, LLaMA appears to be one of the most advanced models currently available, and it is small enough that it does not need many resources to run. That makes it very tempting to experiment with and see what it can do.

## Explaining jokes

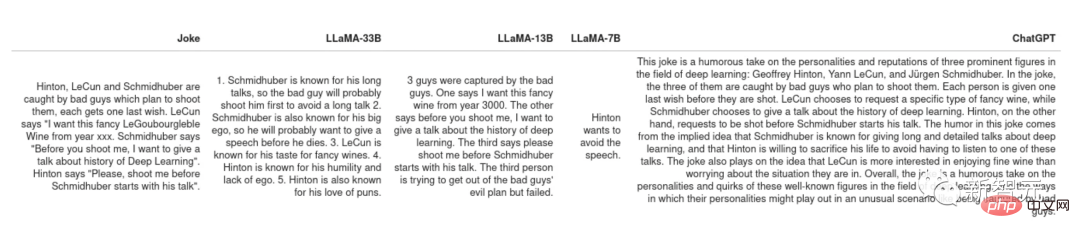

The original PaLM paper shows a very cool use case: given a joke, the model explains why it is funny. The task requires combining knowledge with logic, something no model before PaLM could do.

The reviewer asked LLaMA and ChatGPT to explain a number of jokes. The models do get some of them, such as the one about Schmidhuber's long, boring speech.

But overall, neither LLaMA nor ChatGPT has a sense of humor.

However, the two handle jokes they don't understand differently. ChatGPT produces "a wall of text", hoping that at least some of its sentences are correct. This is like a student who doesn't know the answer and rambles, hoping the teacher will find the right answer somewhere in it.

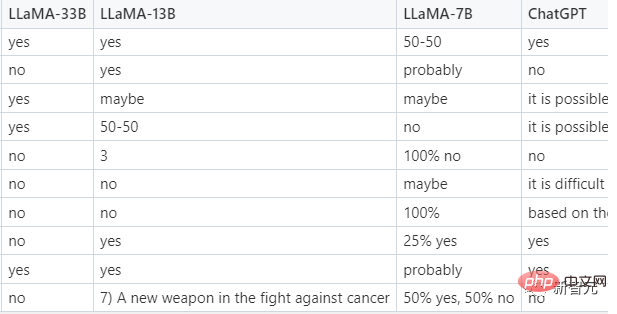

## Zero-shot classification

This is a very practical capability: it lets people use an LLM instead of human annotators to label training data, and then train smaller models that are cheaper to serve on that data.

A more challenging task is clickbait classification. Since even humans cannot agree on what counts as clickbait, the prompt gives the model a few examples, so strictly speaking this is few-shot rather than zero-shot classification. The prompt used for LLaMA is shown below.

In the test, only LLaMA-33B managed to answer in the required format, and its predictions were reasonable. ChatGPT came second: its answers were fairly reasonable but often ignored the prescribed format. The smaller 7B and 13B models were not well suited to the task.

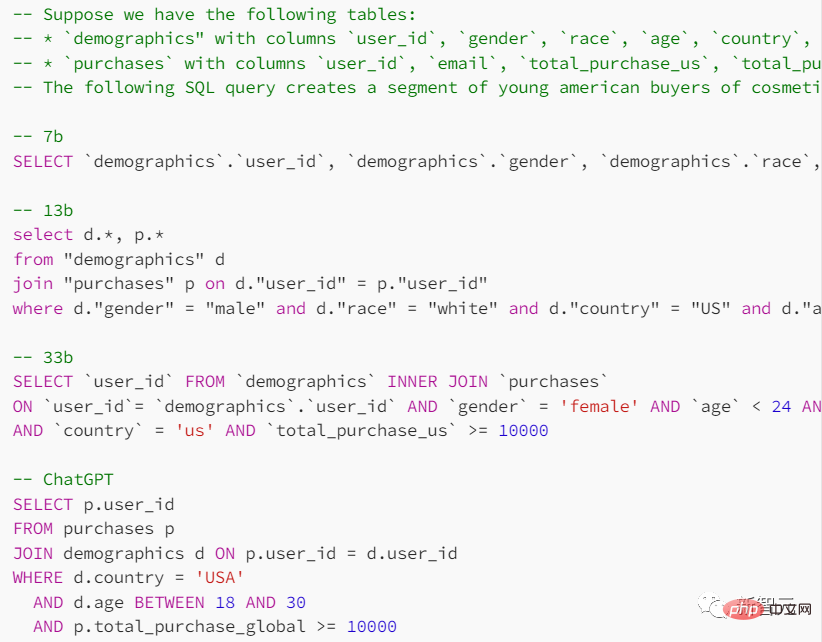
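The few-shot setup described above can be sketched as a prompt builder plus a strict parser that rejects replies in the wrong format, which is exactly where ChatGPT and the smaller LLaMA models stumbled. The labels, example headlines and the `Label: <label>` answer format below are hypothetical; the reviewer's actual prompt is not reproduced here, and the model call itself is left out.

```python
# Few-shot classification prompt plus a strict check on the model's reply.
# Labels, examples and the "Label:" format are illustrative assumptions.
import re
from typing import Optional, Sequence, Tuple

LABELS = ("clickbait", "not clickbait")


def build_few_shot_prompt(examples: Sequence[Tuple[str, str]], headline: str) -> str:
    """Place labeled examples before the query so the model can infer the task."""
    lines = ["Classify each headline as 'clickbait' or 'not clickbait'.", ""]
    for text, label in examples:
        lines.append(f"Headline: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    lines.append(f"Headline: {headline}")
    lines.append("Label:")  # the model is expected to complete this line
    return "\n".join(lines)


def parse_label(response: str) -> Optional[str]:
    """Return the label if the reply follows the format, else None.

    Only the first non-empty line counts; it must be exactly one of the
    allowed labels, optionally prefixed with 'Label:'.
    """
    for line in response.splitlines():
        line = line.strip()
        if line:
            line = re.sub(r"^Label:\s*", "", line).strip().lower()
            return line if line in LABELS else None
    return None


if __name__ == "__main__":
    examples = [
        ("You won't believe what this cat did next", "clickbait"),
        ("Central bank raises interest rates by 0.25 points", "not clickbait"),
    ]
    print(build_few_shot_prompt(examples, "10 tricks doctors don't want you to know"))
    print(parse_label(" clickbait"))                  # well-formed reply
    print(parse_label("Probably clickbait, since"))   # rejected: wrong format
```

Replies that parse cleanly could then serve as labels for training a smaller classifier, as the section describes; replies that return `None` would be discarded or retried.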

## Code generation

LLMs tend to excel at the humanities but struggle with STEM subjects, so how does LLaMA perform in this area?

The prompt describes the schema of the tables to be queried and the desired result, and asks the model to write the SQL query.

ChatGPT does better on this task, but in general the queries produced by language models are unreliable.
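Because generated SQL is often unreliable, one practical safeguard is to run a candidate query against a small in-memory database with the same schema and compare the result with a known answer before trusting it. The sketch below uses Python's built-in `sqlite3` module; the `orders` table, its rows, and the queries standing in for model output are all hypothetical.

```python
# Check a (model-generated) SQL query against a tiny in-memory fixture.
import sqlite3
from typing import List, Sequence, Tuple

SCHEMA = """
CREATE TABLE orders (
    id       INTEGER PRIMARY KEY,
    customer TEXT NOT NULL,
    amount   REAL NOT NULL
);
"""

ROWS = [
    (1, "alice", 10.0),
    (2, "bob", 5.0),
    (3, "alice", 7.5),
]


def query_passes(candidate_sql: str, expected: Sequence[Tuple]) -> bool:
    """Run candidate_sql on a fresh in-memory DB; True if it returns expected rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", ROWS)
        rows: List[Tuple] = conn.execute(candidate_sql).fetchall()
    except sqlite3.Error:
        # Syntax errors, unknown columns, etc. count as failures.
        return False
    finally:
        conn.close()
    return rows == list(expected)


if __name__ == "__main__":
    expected = [("alice", 17.5), ("bob", 5.0)]
    good = "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
    bad = "SELECT customer, SUM(price) FROM orders GROUP BY customer"  # no such column
    print(query_passes(good, expected), query_passes(bad, expected))
```

The comparison is order-sensitive, so the expected answer assumes the query includes an `ORDER BY`; a looser check could compare sorted rows instead. A query can also pass this test and still be wrong on other data, so a fixture like this catches errors rather than proving correctness.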

Across the various head-to-head tests with ChatGPT, LLaMA was not as successful as expected. Of course, if the gap is caused solely by RLHF (reinforcement learning from human feedback), then small models may have a bright future.
