A complete set of tutorials for adapting the Diffusers framework is here! From T2I-Adapter to the popular ControlNet

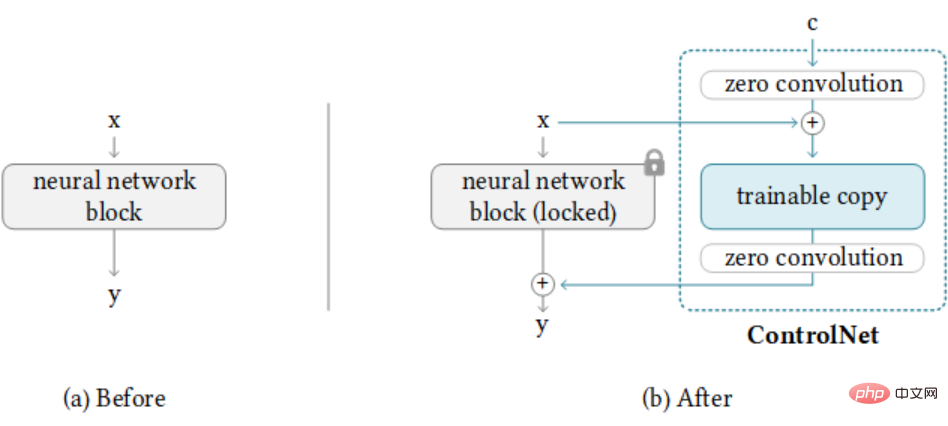

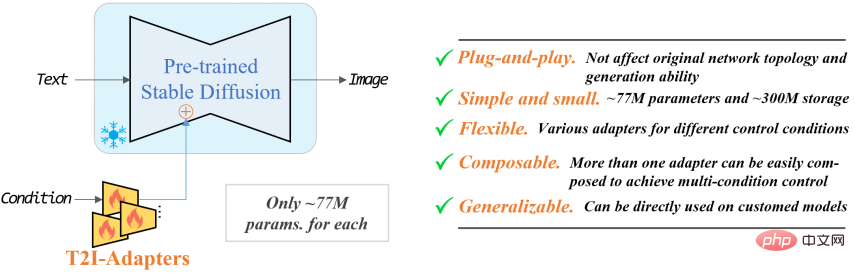

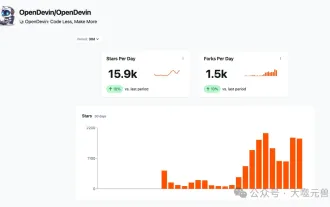

Shortly after ChatGPT went viral, ControlNet quickly won over developers and everyday users across the English- and Chinese-language internet. Some users even claimed that ControlNet had brought AI creation into its "upright walking" era. It is no exaggeration to say that controllable generation, the last high wall facing AI creation, is very likely to see further breakthroughs in the foreseeable future thanks to ControlNet and its contemporaries T2I-Adapter, Composer, and LoRA training techniques, greatly reducing users' creation costs and making creation more playable. Within just two weeks of ControlNet being open-sourced, its official repository passed 10,000 stars, a level of popularity that is arguably unprecedented.

At the same time, the open source community has greatly lowered the barrier to entry for users. For example, the Hugging Face platform provides base model weights and diffusers, a general-purpose model training framework; stable-diffusion-webui offers a complete demo platform; and Civitai has contributed a large number of stylized LoRA weights.

Although webui is currently the most popular visualization tool, quickly supporting each newly released generative model and exposing many options for users to set, it focuses on the ease of use of the front-end interface, so the code behind it is quite complex and not friendly to developers. For example, although webui supports loading and inference for multiple model types, it supports neither conversion between frameworks nor flexible model training. In community discussions, we found many pain points that existing open source code has not yet solved.

First, the code frameworks are incompatible. Currently popular models such as ControlNet and T2I-Adapter are not compatible with diffusers, the mainstream Stable Diffusion training library, so pre-trained ControlNet models cannot be used directly in the diffusers framework.

Second, model loading is limited. Models are currently saved in a variety of formats, such as .bin, .ckpt, .pth, and .safetensors. Unlike webui, the diffusers framework currently has limited support for these formats. Since most LoRA models are saved as safetensors files, it is difficult for users to load them directly into existing models trained with the diffusers framework.

Third, the choice of base model is limited. ControlNet and T2I-Adapter are currently trained on Stable-Diffusion-1.5, and only the model weights for SD1.5 are open-sourced. For specific scenarios, high-quality animation models such as anything-v4 and ChilloutMix already exist, but even when controllable information is introduced, the generated results remain limited by the capability of the UNet in SD1.5.

Finally, model training is limited. LoRA has been widely verified as one of the most effective methods for style transfer and for preserving a specific image IP. However, the diffusers framework currently supports LoRA only for the UNet and not for the text encoder, which limits LoRA training.
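To make the model-format point above more concrete: .ckpt, .pth, and .bin files are typically pickled PyTorch checkpoints, whereas a .safetensors file starts with an 8-byte little-endian header length followed by a JSON header listing each tensor's name, dtype, shape, and byte offsets. The sketch below uses only the Python standard library to build and read such a header, which is enough to inspect the tensor names of a LoRA file. The helper names and the LoRA-style tensor name are hypothetical and are not taken from diffusers or from the repositories discussed in this article.

```python
import json
import struct


def build_safetensors_bytes(tensors):
    """Serialize {name: (dtype, shape, raw_bytes)} into the safetensors layout."""
    header = {}
    payload = b""
    for name, (dtype, shape, raw) in tensors.items():
        # Offsets are relative to the start of the data section.
        header[name] = {
            "dtype": dtype,
            "shape": shape,
            "data_offsets": [len(payload), len(payload) + len(raw)],
        }
        payload += raw
    header_bytes = json.dumps(header).encode("utf-8")
    # 8-byte little-endian unsigned length, then the JSON header, then raw data.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + payload


def read_safetensors_header(blob):
    """Return the JSON header (tensor name -> metadata) of a safetensors blob."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    return json.loads(blob[8:8 + header_len].decode("utf-8"))


# Hypothetical tensor name and values, for illustration only.
example_name = "lora_unet_example.lora_down.weight"
blob = build_safetensors_bytes(
    {example_name: ("F32", [1, 2], struct.pack("<2f", 1.0, 2.0))}
)
header = read_safetensors_header(blob)
for name, meta in header.items():
    print(name, meta["dtype"], meta["shape"])
```

For a file on disk, the same reader can be applied to the bytes returned by opening the file in binary mode; only the header needs to be read to list the keys that a conversion script would have to remap.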

After discussing with the open source community, we learned that diffusers, as a general-purpose code library, also plans to adapt to these recently released generative models, but because this involves rewriting many low-level interfaces, the update will still take some time. We therefore started from the practical problems above and developed our own solution to each one, to help developers move forward more quickly.

Full adaptation solutions for diffusers: LoRA, ControlNet, and T2I-Adapter

LoRA for diffusers
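As background for this section, LoRA keeps the base weight frozen and learns a low-rank update that is added back to the base weight as a residual. The following is a minimal pure-Python sketch of that idea, not the code from the repository: the function names and the toy matrices are hypothetical, and the merge rule `W + ratio * (up @ down)` is an assumption about how a merging ratio such as 0.75 would be applied (implementations differ in how they scale the update).

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(base, up, down, ratio=0.75):
    """Return base + ratio * (up @ down); the base matrix is not modified."""
    delta = matmul(up, down)
    return [
        [w + ratio * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base, delta)
    ]


def lora_param_count(out_features, in_features, rank):
    """Number of values stored by the two low-rank factors."""
    return rank * (out_features + in_features)


# Toy 2x3 base weight with a rank-1 update (all values are made up).
base = [[1.0, 0.0, 0.0],
        [0.0, 1.0, 0.0]]
up = [[1.0], [2.0]]        # shape (out_features, rank)
down = [[0.0, 4.0, 8.0]]   # shape (rank, in_features)

merged = merge_lora(base, up, down, ratio=0.75)
print(merged)

# Parameter saving for a hypothetical 768x768 layer at rank 4.
full_params = 768 * 768
lora_params = lora_param_count(768, 768, rank=4)
print(full_params, lora_params)
```

Because the update is stored as two thin matrices, the LoRA file stays small and can be merged into, or left out of, any compatible base model.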

This solution flexibly embeds LoRA weights of various formats into the diffusers framework, that is, into models trained and saved with diffusers. Because LoRA training usually freezes the base model, the resulting weights can easily be embedded into existing models as pluggable modules that act as style or IP constraints. LoRA itself is a general training technique: through low-rank decomposition, it greatly reduces the number of parameters of a module. In image generation it is currently used mostly to train pluggable modules that are independent of the base model, and in practice its output is merged with that of the base model as a residual.

The first part is embedding LoRA weights. The weights provided on the Civitai platform are mainly stored in ckpt or safetensors format and fall into the following two cases.

(1) Full model (base model + LoRA module)

If the full model is in safetensors format, it can be converted with the following diffusers script:

```shell
python ./scripts/convert_original_stable_diffusion_to_diffusers.py --checkpoint_path xxx.safetensors --dump_path save_dir --from_safetensors
```

If the full model is in ckpt format, it can be converted with the following diffusers script:

```shell
python ./scripts/convert_original_stable_diffusion_to_diffusers.py --checkpoint_path xxx.ckpt --dump_path save_dir
```

After the conversion is complete, the model can be loaded directly with the diffusers API:

```python
import torch
from diffusers import StableDiffusionPipeline

# Directory written by the conversion script above (its --dump_path argument)
save_dir = "save_dir"
pipeline = StableDiffusionPipeline.from_pretrained(save_dir, torch_dtype=torch.float32)
```

(2) LoRA only (contains only the LoRA module)

Currently diffusers does not officially support loading LoRA-only weights, yet the LoRA weights on open source platforms are mostly stored in this form. Supporting them essentially means remapping the key-value pairs in the LoRA weights so that they fit the diffusers model. We therefore implemented this feature ourselves and provide a conversion script.

```python
import torch
from diffusers import StableDiffusionPipeline
from safetensors.torch import load_file

# model_id must point to a model in diffusers format; the original does not specify one
pipeline = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float32)

# LoRA weights stored in safetensors format
model_path = "onePieceWanoSagaStyle_v2Offset.safetensors"
state_dict = load_file(model_path)
```

You only need to specify the model in diffusers format and the LoRA weights stored in safetensors format. We provide an example conversion:

```shell
# the default merging ratio is 0.75; you can set it manually
python convert_lora_safetensor_to_diffusers.py
```

In addition, because it is lightweight, LoRA can be trained quickly on a small amount of data and embedded into other networks. So as not to be limited to existing LoRA weights, we support multi-module (UNet + text encoder) LoRA training in the diffusers framework and have submitted a PR to the official code base (https://github.com/huggingface/diffusers/pull/2479). We also support training LoRA in ColossalAI.

The code is open source at: https://github.com/haofanwang/Lora-for-Diffusers

ControlNet for diffusers

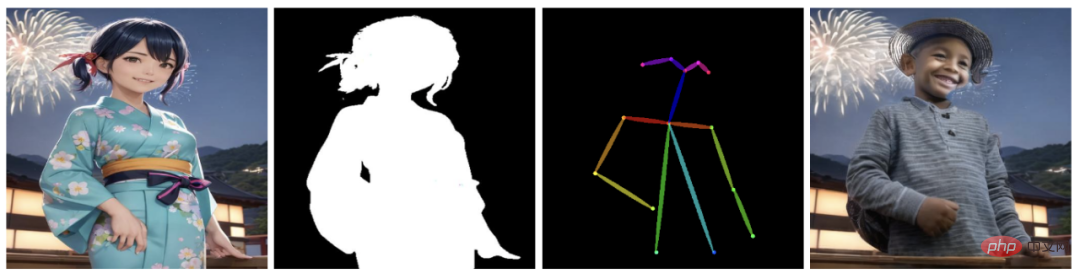

This solution supports using ControlNet in the diffusers framework. Building on earlier attempts by the open source community, we provide a complete use case for ControlNet + Anything-V3, which replaces the base model, originally SD1.5, with the anything-v3 model so that ControlNet gains better animation generation capability.

In addition, we support ControlNet Inpainting with a pipeline adapted to diffusers, as well as Multi-ControlNet for multi-condition control.
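To illustrate what multi-condition control can mean in practice, one common approach is to let each control network produce its own per-layer residuals and then add them together, each with its own weight, before they are fed to the UNet. The sketch below shows only that weighted sum in pure Python. It assumes this summation scheme; the function name, the `scales` parameter, and the toy values are hypothetical and are not taken from the repository.

```python
def combine_control_residuals(residuals_per_net, scales):
    """Weighted sum of per-layer residuals from several control networks.

    residuals_per_net: one entry per network, each a list of layers,
                       each layer a flat list of floats.
    scales:            one conditioning weight per network.
    """
    if len(residuals_per_net) != len(scales):
        raise ValueError("need exactly one scale per control network")
    num_layers = len(residuals_per_net[0])
    combined = []
    for layer in range(num_layers):
        total = [0.0] * len(residuals_per_net[0][layer])
        for residuals, scale in zip(residuals_per_net, scales):
            for i, value in enumerate(residuals[layer]):
                total[i] += scale * value
        combined.append(total)
    return combined


# Toy residuals for two conditions (for example pose and depth), two layers each.
pose = [[1.0, 2.0], [3.0, 4.0]]
depth = [[10.0, 20.0], [30.0, 40.0]]

combined = combine_control_residuals([pose, depth], scales=[1.0, 0.5])
print(combined)
```

Lowering one network's scale reduces how strongly that condition steers the result without retraining either network.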

The code is open source at: https://github.com/haofanwang/ControlNet-for-Diffusers

T2I-Adapter for diffusers

The code is open source at: https://github.com/haofanwang/T2I-Adapter-for-Diffusers

All three adaptation solutions above have now been open-sourced to the community. The ControlNet and T2I-Adapter solutions have been officially acknowledged by their respective upstream projects, and the author of stable-diffusion-webui-colab has also thanked us. We remain in discussion with the diffusers maintainers and plan to integrate these solutions into the official code base in the near future. You are welcome to try our work in the meantime. If you have any questions, open an issue and we will reply as soon as possible.

The above is the detailed content of A complete set of tutorials for adapting the Diffusers framework is here! From T2I-Adapter to the popular ControlNet. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Four recommended AI-assisted programming tools

Apr 22, 2024 pm 05:34 PM

Four recommended AI-assisted programming tools

Apr 22, 2024 pm 05:34 PM

This AI-assisted programming tool has unearthed a large number of useful AI-assisted programming tools in this stage of rapid AI development. AI-assisted programming tools can improve development efficiency, improve code quality, and reduce bug rates. They are important assistants in the modern software development process. Today Dayao will share with you 4 AI-assisted programming tools (and all support C# language). I hope it will be helpful to everyone. https://github.com/YSGStudyHards/DotNetGuide1.GitHubCopilotGitHubCopilot is an AI coding assistant that helps you write code faster and with less effort, so you can focus more on problem solving and collaboration. Git

GE universal remote codes program on any device

Mar 02, 2024 pm 01:58 PM

GE universal remote codes program on any device

Mar 02, 2024 pm 01:58 PM

If you need to program any device remotely, this article will help you. We will share the top GE universal remote codes for programming any device. What is a GE remote control? GEUniversalRemote is a remote control that can be used to control multiple devices such as smart TVs, LG, Vizio, Sony, Blu-ray, DVD, DVR, Roku, AppleTV, streaming media players and more. GEUniversal remote controls come in various models with different features and functions. GEUniversalRemote can control up to four devices. Top Universal Remote Codes to Program on Any Device GE remotes come with a set of codes that allow them to work with different devices. you may

Which AI programmer is the best? Explore the potential of Devin, Tongyi Lingma and SWE-agent

Apr 07, 2024 am 09:10 AM

Which AI programmer is the best? Explore the potential of Devin, Tongyi Lingma and SWE-agent

Apr 07, 2024 am 09:10 AM

On March 3, 2022, less than a month after the birth of the world's first AI programmer Devin, the NLP team of Princeton University developed an open source AI programmer SWE-agent. It leverages the GPT-4 model to automatically resolve issues in GitHub repositories. SWE-agent's performance on the SWE-bench test set is similar to Devin, taking an average of 93 seconds and solving 12.29% of the problems. By interacting with a dedicated terminal, SWE-agent can open and search file contents, use automatic syntax checking, edit specific lines, and write and execute tests. (Note: The above content is a slight adjustment of the original content, but the key information in the original text is retained and does not exceed the specified word limit.) SWE-A

Learn how to develop mobile applications using Go language

Mar 28, 2024 pm 10:00 PM

Learn how to develop mobile applications using Go language

Mar 28, 2024 pm 10:00 PM

Go language development mobile application tutorial As the mobile application market continues to boom, more and more developers are beginning to explore how to use Go language to develop mobile applications. As a simple and efficient programming language, Go language has also shown strong potential in mobile application development. This article will introduce in detail how to use Go language to develop mobile applications, and attach specific code examples to help readers get started quickly and start developing their own mobile applications. 1. Preparation Before starting, we need to prepare the development environment and tools. head

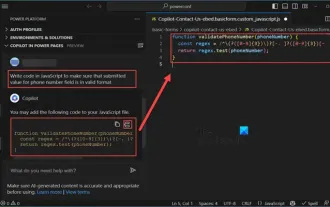

How to use Copilot to generate code

Mar 23, 2024 am 10:41 AM

How to use Copilot to generate code

Mar 23, 2024 am 10:41 AM

As a programmer, I get excited about tools that simplify the coding experience. With the help of artificial intelligence tools, we can generate demo code and make necessary modifications as per the requirement. The newly introduced Copilot tool in Visual Studio Code allows us to create AI-generated code with natural language chat interactions. By explaining functionality, we can better understand the meaning of existing code. How to use Copilot to generate code? To get started, we first need to get the latest PowerPlatformTools extension. To achieve this, you need to go to the extension page, search for "PowerPlatformTool" and click the Install button

Which Linux distribution is best for Android development?

Mar 14, 2024 pm 12:30 PM

Which Linux distribution is best for Android development?

Mar 14, 2024 pm 12:30 PM

Android development is a busy and exciting job, and choosing a suitable Linux distribution for development is particularly important. Among the many Linux distributions, which one is most suitable for Android development? This article will explore this issue from several aspects and give specific code examples. First, let’s take a look at several currently popular Linux distributions: Ubuntu, Fedora, Debian, CentOS, etc. They all have their own advantages and characteristics.

Create and run Linux ".a" files

Mar 20, 2024 pm 04:46 PM

Create and run Linux ".a" files

Mar 20, 2024 pm 04:46 PM

Working with files in the Linux operating system requires the use of various commands and techniques that enable developers to efficiently create and execute files, code, programs, scripts, and other things. In the Linux environment, files with the extension ".a" have great importance as static libraries. These libraries play an important role in software development, allowing developers to efficiently manage and share common functionality across multiple programs. For effective software development in a Linux environment, it is crucial to understand how to create and run ".a" files. This article will introduce how to comprehensively install and configure the Linux ".a" file. Let's explore the definition, purpose, structure, and methods of creating and executing the Linux ".a" file. What is L

Tsinghua University and Zhipu AI open source GLM-4: launching a new revolution in natural language processing

Jun 12, 2024 pm 08:38 PM

Tsinghua University and Zhipu AI open source GLM-4: launching a new revolution in natural language processing

Jun 12, 2024 pm 08:38 PM

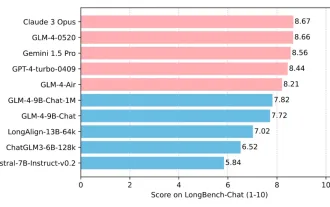

Since the launch of ChatGLM-6B on March 14, 2023, the GLM series models have received widespread attention and recognition. Especially after ChatGLM3-6B was open sourced, developers are full of expectations for the fourth-generation model launched by Zhipu AI. This expectation has finally been fully satisfied with the release of GLM-4-9B. The birth of GLM-4-9B In order to give small models (10B and below) more powerful capabilities, the GLM technical team launched this new fourth-generation GLM series open source model: GLM-4-9B after nearly half a year of exploration. This model greatly compresses the model size while ensuring accuracy, and has faster inference speed and higher efficiency. The GLM technical team’s exploration has not