A single card can run AI painting models. Tutorials that even novices can understand are here. Free NPU computing power is available with 1 million cards.

I believe everyone is familiar with the recent popularity of AI drawing.

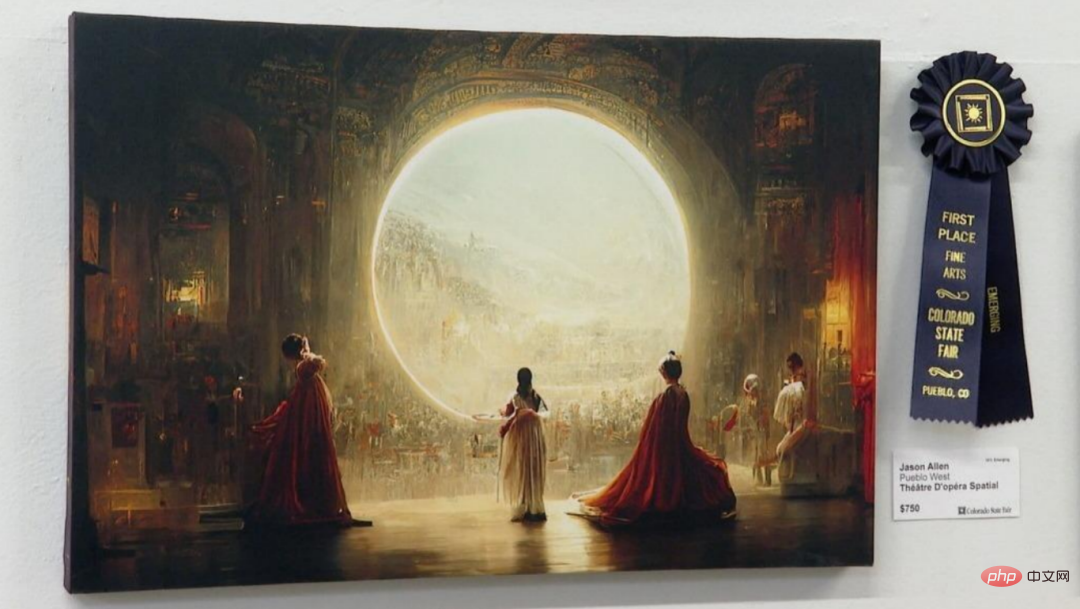

From an AI-generated work beating many human artists to win a digital art competition, to the flourishing of platforms at home and abroad such as DALL·E, Imagen, and NovelAI, AI drawing is everywhere.

Perhaps you have visited one of these sites and asked the AI to paint the scenery in your mind, or uploaded a flattering photo of yourself and then laughed out loud at the rough-looking character it produced.

So, while enjoying the charm of AI drawing, have you ever wondered (of course you have) what mystery lies behind it?

△"Space Opera", the work that won the digital art category at the Colorado State Fair in the United States

It all starts with a model called DDPM...

What is DDPM?

DDPM, short for Denoising Diffusion Probabilistic Model, can be considered the originator of today's diffusion models.

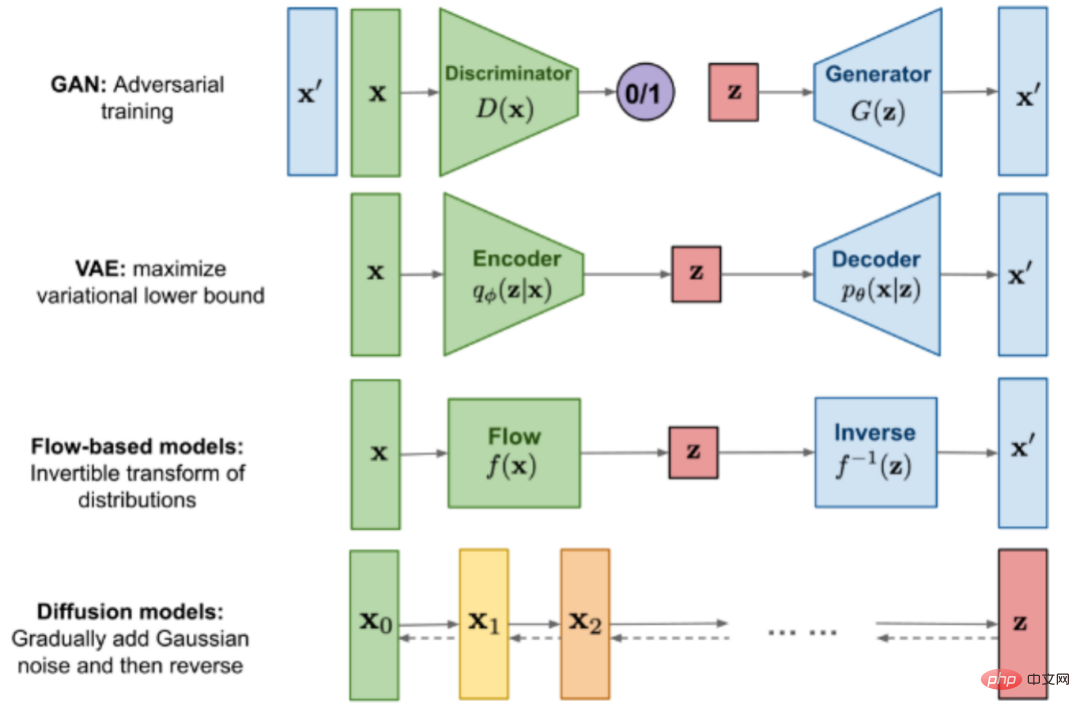

Unlike predecessors such as GANs, VAEs, and flow models, the overall idea of a diffusion model is to generate an image step by step from a pure noise image, guided by an optimization objective.

△Comparison of current image generation models

Some readers may ask: what is a pure noise image?

It's very simple. When an old TV has no signal, the "snow" that fills the screen along with the hissing static is a pure noise image.

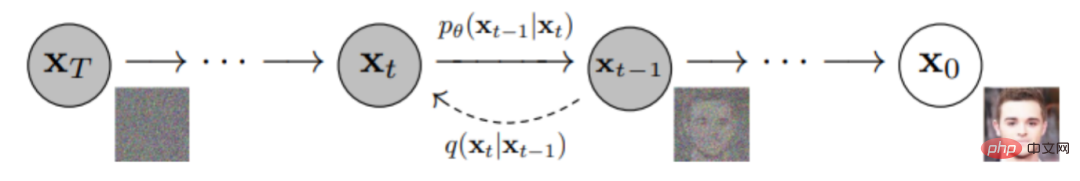

What DDPM does in the generation phase is to remove these "snowflakes" bit by bit until the clear image reveals its true appearance. We call this stage "denoising".

△Pure noise picture: Snowflake screen of old TV

As the description suggests, denoising is actually quite a complicated process.

There is no fixed rule for denoising. You may work at it for a long time and still end up wanting to cry in front of a bizarre picture.

Different types of pictures also follow different denoising rules. As for how to let the machine learn these rules, someone had a wonderful idea:

Since the denoising rules are hard to learn directly, why not first turn a picture into a pure noise image by adding noise, and then run the whole process in reverse?

This establishes the entire training-inference process of the diffusion model. First, in the forward process, noise is added step by step until the image becomes a pure noise image that approximately follows a Gaussian distribution;

then, in the reverse process, the noise is removed step by step to generate an image;

finally, the model is optimized to increase the similarity between the original image and the generated image until it reaches the desired quality.

△DDPM’s training-inference process

How is everyone following so far? If it all seems easy, get ready: here comes the ultimate move (in-depth theory).

1.1.1 Forward process

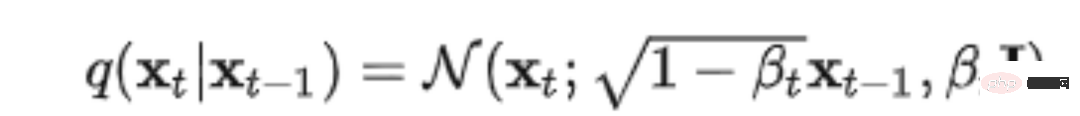

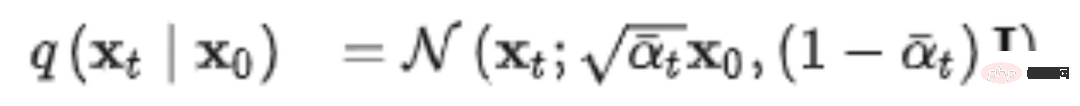

The forward process is also called the diffusion process, and as a whole it is a parameterized Markov chain. Starting from the initial data $x_0 \sim q(x)$, Gaussian noise is added at each step, for $T$ steps in total. The transition from $x_{t-1}$ at step $t-1$ to $x_t$ at step $t$ can be expressed as a Gaussian distribution:

$$q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right)$$

With appropriate settings, the original data $x_0$ gradually loses its features as $t$ increases. After enough noising steps (in the limit, infinitely many), the final data $x_T$ becomes a featureless picture of completely random noise, which is the "snowflake screen" we mentioned at the start.

In this process, the change at each step is controlled by the hyperparameter $\beta_t$. As long as we know the initial picture, the entire forward noising process is known and controllable: we can fully characterize the data produced at each step.
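To make the step-by-step noising concrete, here is a minimal NumPy sketch of the forward chain, where each step computes $x_t = \sqrt{1-\beta_t}\,x_{t-1} + \sqrt{\beta_t}\,\epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$. The schedule (1000 steps, $\beta_t$ rising linearly from 1e-4 to 0.02), the toy 64x64 "image", and the function names are assumptions chosen for illustration; they are not taken from the article or from any particular library.

```python
import numpy as np

def forward_step(x_prev, beta_t, rng):
    """One noising step: x_t = sqrt(1 - beta_t) * x_{t-1} + sqrt(beta_t) * eps."""
    eps = rng.standard_normal(x_prev.shape)
    return np.sqrt(1.0 - beta_t) * x_prev + np.sqrt(beta_t) * eps

def forward_chain(x0, betas, rng):
    """Apply every noising step in order and return the final x_T."""
    x = x0
    for beta_t in betas:
        x = forward_step(x, beta_t, rng)
    return x

# Assumed schedule: T = 1000 steps, beta_t rising linearly from 1e-4 to 0.02.
betas = np.linspace(1e-4, 0.02, 1000)
rng = np.random.default_rng(0)

# Toy "image" with an obvious structure: left half dark (-1), right half bright (+1).
x0 = np.concatenate([-np.ones((64, 32)), np.ones((64, 32))], axis=1)
xT = forward_chain(x0, betas, rng)

# After T steps the left/right structure is gone and the pixels look like N(0, 1).
left_right_gap = xT[:, 32:].mean() - xT[:, :32].mean()
print(f"mean={xT.mean():.3f} std={xT.std():.3f} left/right gap={left_right_gap:.3f}")
```

The printed statistics should sit close to mean 0, standard deviation 1, and a left/right gap near 0, which is the "snowflake screen" in numerical form.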

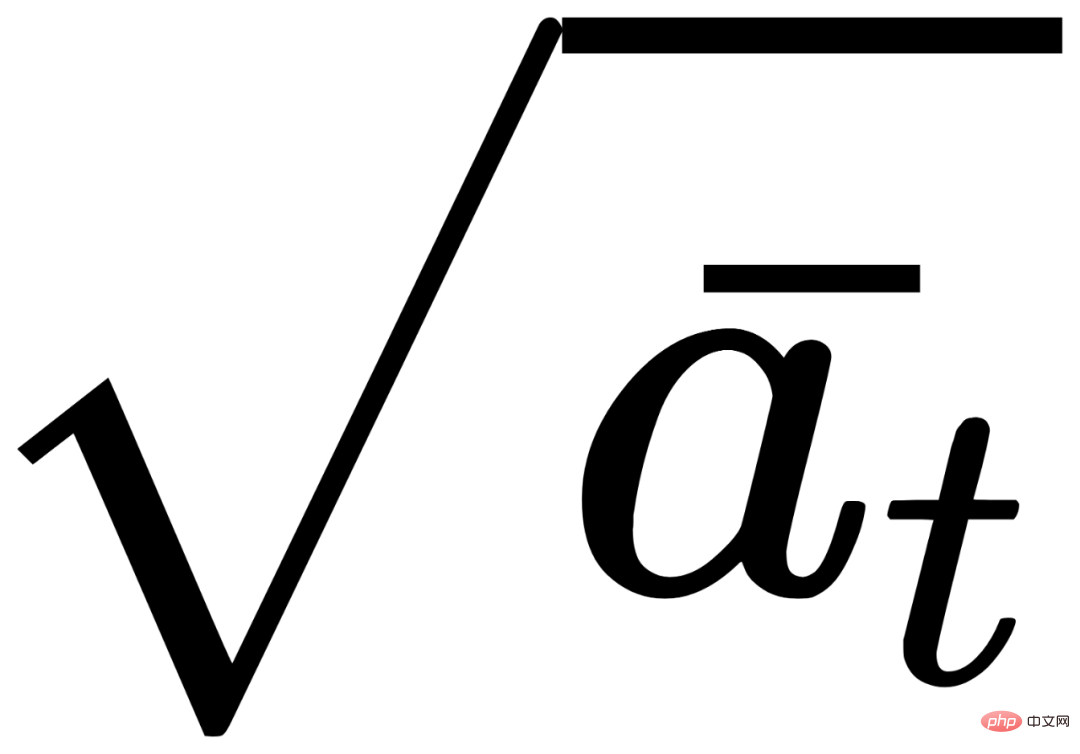

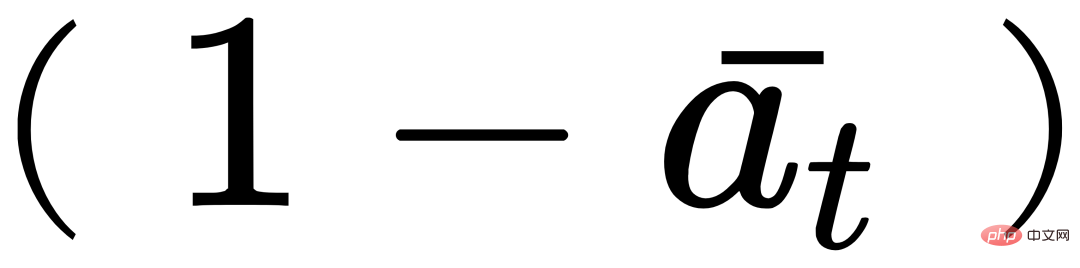

The problem is that every calculation has to start from the beginning and work through each step in turn until it reaches the desired $x_t$, which is far too cumbersome. Fortunately, thanks to some properties of the Gaussian distribution, we can obtain $x_t$ directly from $x_0$ in a single step:

$$q(x_t \mid x_0) = \mathcal{N}\left(x_t;\ \sqrt{\bar{\alpha}_t}\,x_0,\ (1-\bar{\alpha}_t) I\right)$$

Note that $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{i=1}^{t} \alpha_i$ here are combination coefficients, which are essentially expressions of the hyperparameter $\beta_t$.
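The one-step shortcut can be sketched as follows: with $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t$ the running product of the $\alpha_i$, a sample of $x_t$ is $\sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon$. The linear schedule and the name `q_sample` are assumptions for illustration, not something the article specifies.

```python
import numpy as np

# Assumed schedule (not given in the article): T = 1000, linear beta_t.
betas = np.linspace(1e-4, 0.02, 1000)
alphas = 1.0 - betas                 # alpha_t = 1 - beta_t
alpha_bars = np.cumprod(alphas)      # alpha_bar_t = alpha_1 * ... * alpha_t

def q_sample(x0, t, rng):
    """Draw x_t directly from x_0; t is a 1-based step index in [1, T]."""
    a_bar = alpha_bars[t - 1]
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * eps

rng = np.random.default_rng(0)
x0 = np.ones((64, 64))
for t in (1, 250, 500, 1000):
    xt = q_sample(x0, t, rng)
    weight = np.sqrt(alpha_bars[t - 1])  # how much of x_0 survives at step t
    print(f"t={t:4d} signal weight={weight:.4f} sample mean={xt.mean():.3f}")
```

The signal weight $\sqrt{\bar{\alpha}_t}$ shrinks toward 0 as $t$ grows, so a single draw at large $t$ is already almost pure noise, with no need to iterate through the earlier steps.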

1.1.2 Reverse process

Like the forward process, the reverse process is also a Markov chain, but it uses different parameters. What exactly those parameters are is what we need the machine to learn.

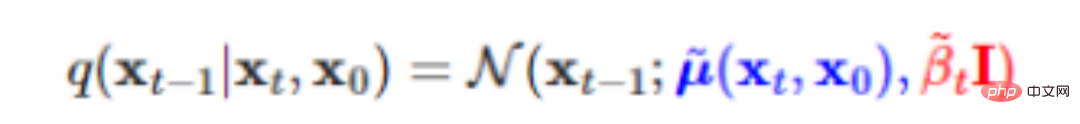

Before looking at how the machine learns, let us first consider: given a particular original data point $x_0$, what does the process of exactly inferring $x_{t-1}$ at step $t-1$ from $x_t$ at step $t$ look like?

The answer is that it can still be expressed as a Gaussian distribution:

$$q(x_{t-1} \mid x_t, x_0) = \mathcal{N}\left(x_{t-1};\ \tilde{\mu}_t(x_t, x_0),\ \tilde{\beta}_t I\right)$$

Note that $x_0$ must be taken into account here, which means that the image finally generated by the reverse process still has to be related to the original data. If you input a picture of a cat, the generated image should be of a cat; if you input a picture of a dog, the generated image should be related to a dog. If $x_0$ were removed, then no matter what type of training images were input, the images finally generated by diffusion would all be the same: the model "could not tell cats from dogs".

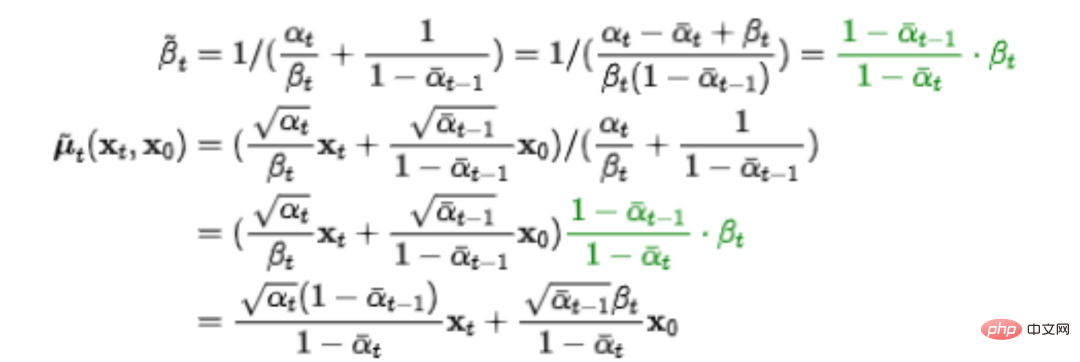

After a series of derivations, we find that the reverse-process parameters $\tilde{\mu}_t$ and $\tilde{\beta}_t$ can still be expressed in terms of $x_0$, $x_t$, and the parameters $\beta_t$, $\alpha_t$, and $\bar{\alpha}_t$. Isn't that amazing~
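For reference, the closed forms given in the DDPM paper [2] are $\tilde{\mu}_t = \frac{\sqrt{\bar{\alpha}_{t-1}}\,\beta_t}{1-\bar{\alpha}_t}x_0 + \frac{\sqrt{\alpha_t}\,(1-\bar{\alpha}_{t-1})}{1-\bar{\alpha}_t}x_t$ and $\tilde{\beta}_t = \frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t}\beta_t$. The sketch below evaluates them with NumPy; the linear schedule, the convention $\bar{\alpha}_0 = 1$, and the function name are assumptions made for illustration.

```python
import numpy as np

# Assumed schedule (not given in the article): T = 1000, linear beta_t.
betas = np.linspace(1e-4, 0.02, 1000)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)
# alpha_bar_{t-1} for every t, using the convention alpha_bar_0 = 1.
alpha_bars_prev = np.concatenate([[1.0], alpha_bars[:-1]])

def posterior_mean_variance(x0, xt, t):
    """Mean and variance of q(x_{t-1} | x_t, x_0); t is 1-based in [1, T]."""
    i = t - 1
    coef_x0 = np.sqrt(alpha_bars_prev[i]) * betas[i] / (1.0 - alpha_bars[i])
    coef_xt = np.sqrt(alphas[i]) * (1.0 - alpha_bars_prev[i]) / (1.0 - alpha_bars[i])
    mean = coef_x0 * x0 + coef_xt * xt
    variance = (1.0 - alpha_bars_prev[i]) / (1.0 - alpha_bars[i]) * betas[i]
    return mean, variance

x0 = np.full((2, 2), 3.0)
xt = np.zeros((2, 2))
for t in (1, 500, 1000):
    mean, variance = posterior_mean_variance(x0, xt, t)
    print(f"t={t:4d} mean[0,0]={mean[0, 0]:.4f} variance={variance:.6f}")
```

At $t = 1$ the mean collapses onto $x_0$ with zero variance, while at large $t$ the mean leans almost entirely on $x_t$: the closer the chain is to pure noise, the less a single reverse step can recover from $x_0$.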

Of course, the machine does not know this true reverse process in advance. What it can do is approximate it with an estimated distribution, written as $p_\theta(x_{t-1} \mid x_t)$.

1.1.3 Optimization Goal

We mentioned at the beginning that the model needs to be optimized by increasing the similarity between the original data and the data finally generated by the reverse process. In machine learning, we calculate this similarity based on cross entropy.

Regarding cross entropy, the academic definition is "used to measure the difference information between two probability distributions." In other words, the smaller the cross entropy, the closer the image generated by the model is to the original image. However, in most cases, cross entropy is difficult or impossible to calculate, so we generally achieve the same effect by optimizing a simpler expression.

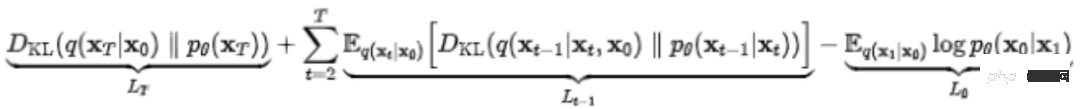

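The diffusion model borrows the optimization idea of the VAE and uses the variational lower bound (VLB, also known as ELBO) in place of cross entropy as the optimization target. After many steps of decomposition, we finally get:

$$L = \mathbb{E}_q\Big[\underbrace{D_{\mathrm{KL}}\big(q(x_T \mid x_0)\,\|\,p(x_T)\big)}_{L_T} + \sum_{t>1} \underbrace{D_{\mathrm{KL}}\big(q(x_{t-1} \mid x_t, x_0)\,\|\,p_\theta(x_{t-1} \mid x_t)\big)}_{L_{t-1}} \underbrace{-\,\log p_\theta(x_0 \mid x_1)}_{L_0}\Big]$$

Faced with such a complicated formula, many readers' heads must be spinning. But don't panic: all you need to pay attention to here is $L_{t-1}$ in the middle. It measures the difference between the estimated distribution $p_\theta(x_{t-1} \mid x_t)$ and the true distribution $q(x_{t-1} \mid x_t, x_0)$ for the step from $x_t$ to $x_{t-1}$. The smaller the gap, the better the images the model finally generates.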
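Because both distributions in $L_{t-1}$ are Gaussian, the gap between them has a closed form. The sketch below computes the KL divergence between two isotropic Gaussians; the function name and the toy numbers are illustrative assumptions, and this is not how any particular library computes its training loss.

```python
import numpy as np

def gaussian_kl(mean_q, var_q, mean_p, var_p):
    """KL( N(mean_q, var_q * I) || N(mean_p, var_p * I) ), summed over all dimensions."""
    mean_q = np.asarray(mean_q, dtype=float)
    mean_p = np.asarray(mean_p, dtype=float)
    d = mean_q.size
    sq_dist = np.sum((mean_q - mean_p) ** 2)
    return 0.5 * (d * (np.log(var_p / var_q) + var_q / var_p - 1.0) + sq_dist / var_p)

# Toy numbers: the "true" posterior mean versus two model estimates of it.
true_mean = [0.0, 0.0]
print(gaussian_kl(true_mean, 0.5, [0.0, 0.0], 0.5))  # perfect estimate
print(gaussian_kl(true_mean, 0.5, [1.0, 1.0], 0.5))  # estimate that is off by 1 per dimension
```

When the two variances are equal, the expression reduces to the squared distance between the means divided by twice the variance, so shrinking $L_{t-1}$ amounts to making the model's predicted mean match the true posterior mean.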

1.1.4 Above code

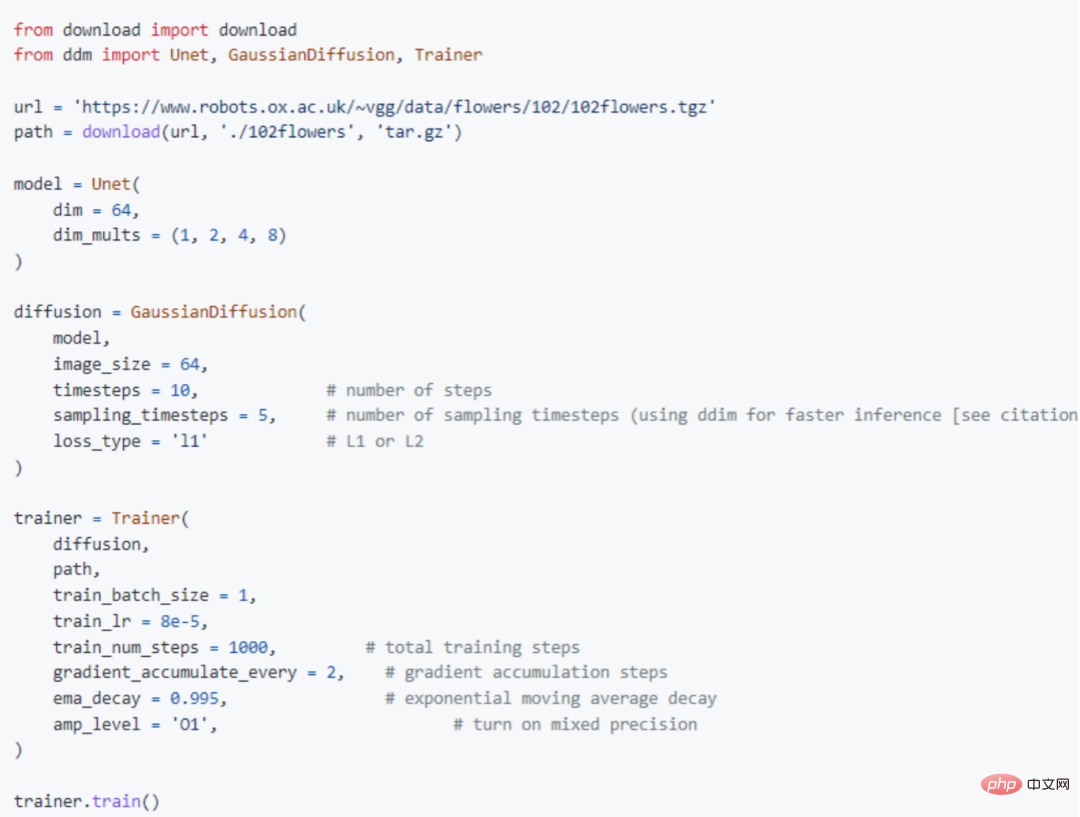

After understanding the principles behind DDPM, let us see how the DDPM model is implemented...

That’s weird. I believe that when you read this, you definitely don’t want to be baptized by hundreds or thousands of lines of code.

Fortunately, MindSpore has provided you with a fully developed DDPM model. Training and inference can be done with both hands. The operation is simple and can be run on a single card. Friends who want to experience the effect only need to

pip install denoising-diffusion-mindspore

Then, refer to the following code to configure parameters:

Notes on the important parameters:

GaussianDiffusion

- image_size: image size

- timesteps: number of noising steps

- sampling_timesteps: number of sampling steps; to improve inference performance, it should be smaller than the number of noising steps

Trainer

- folder_or_dataset: the data source, corresponding to the path in the code; it can be the path (str) to a downloaded dataset, or a VisionBaseDataset, GeneratorDataset, or MindDataset that has already been processed

- train_batch_size: batch size

- train_lr: learning rate

- train_num_steps: number of training steps
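To see why a smaller `sampling_timesteps` speeds up inference, here is a plain-Python sketch of one way a sampler could visit only an evenly spaced subset of the `timesteps` noising steps. It assumes a strided schedule; the helper `sampling_schedule` is hypothetical and is not part of the denoising-diffusion-mindspore API, whose actual sampler may choose steps differently.

```python
import numpy as np

def sampling_schedule(timesteps, sampling_timesteps):
    """Return the 0-based step indices a strided sampler would visit, from noisiest to cleanest."""
    if not 1 <= sampling_timesteps <= timesteps:
        raise ValueError("sampling_timesteps must be between 1 and timesteps")
    # Evenly spaced indices from the last noising step (timesteps - 1) down to step 0.
    steps = np.linspace(timesteps - 1, 0, sampling_timesteps)
    return np.round(steps).astype(int).tolist()

# Illustrative values only: 1000 noising steps, 250 sampling steps.
schedule = sampling_schedule(1000, 250)
print(len(schedule), schedule[:4], schedule[-3:])
```

With these illustrative values the network is evaluated 250 times per image instead of 1000, which is where the inference speedup comes from.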

"Advanced version" DDPM model MindDiffusion

DDPM is only the beginning of the Diffusion story. Countless researchers have since been drawn to the magnificent world behind it and have thrown themselves into the field.

While continuously improving the models, they have also gradually extended Diffusion to applications in many fields.

These include image optimization, inpainting, and 3D vision in computer vision; text-to-speech in natural language processing; and molecular conformation generation and material design in AI for Science.

Eric Zelikman, a doctoral student in the Department of Computer Science at Stanford University, let his imagination run and combined DALL·E 2 with ChatGPT, another recently popular conversational model, to create a heartwarming picture-book story.

△The story of a little robot named "Robbie", created with DALL·E 2 and ChatGPT

But the application best known to the public is probably text-to-image: enter a few keywords or a short description, and the model generates a matching picture for you.

For example, if you enter "City Night Scene Cyberpunk Greg Rutkowski", the result is a brightly colored work with a futuristic sci-fi style.

For another example, if you enter "Monet's Woman Holding a Parasol in Moon Dream", the result is a very hazy portrait of a woman. Doesn't the color palette remind you of Monet's "Water Lilies"?

Want a realistic landscape photo as a screensaver? No problem!

△Country Field Screensaver

Want something with a stronger anime (2D) flavor? That works too!

△From the realistic style of abyss landscape painting

The pictures above were all made with Wukong-Huahua on the MindDiffusion platform. Wukong-Huahua is a large Chinese text-to-image model based on the diffusion model. It was jointly developed by Huawei's Noah team, ChinaSoft Distributed Parallel Laboratory, and Ascend Computing Product Department.

The model is trained on the Wukong dataset and implemented using MindSpore and Ascend software and hardware solutions.

If you are eager to give it a try, don't worry. To give everyone a better experience and more room for their own development, we plan to make the models in MindDiffusion support both training and inference as well. We expect to bring them to you next year, so stay tuned.

We welcome everyone to brainstorm and generate works in all kinds of unique styles~

(According to colleagues with inside information, some people have already begun trying "Zhang Fei Embroidering", "Liu Huaqiang Chopping a Melon", and "Ancient Greek Gods vs. Godzilla". Hmm, what can I say? I am suddenly looking forward to the finished products (ಡωಡ))

One More Thing

One last thing: now that Diffusion is booming, some people have asked why it has become so popular that it is even starting to steal the limelight from GANs.

Diffusion has outstanding advantages and obvious disadvantages; many of its fields are still blank, and its future is still unknown.

Why are there so many people working tirelessly on it?

Perhaps, Professor Ma Yi’s words can provide us with an answer.

But the effectiveness of the diffusion process and its rapid replacement of GAN also fully illustrate a simple truth:

A few lines of simple, correct mathematical derivation can be far more effective than the past decade of large-scale hyperparameter tuning and network-structure debugging.

Perhaps this is the charm of the Diffusion model.

Reference links:

[1] https://medium.com/mlearning-ai/ai-art-wins-fine-arts-competition-and-sparks-controversy-882f9b4df98c

[2] Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising Diffusion Probabilistic Models. arXiv:2006.11239, 2020.

[3] Ling Yang, Zhilong Zhang, Shenda Hong, Runsheng Xu, Yue Zhao, Yingxia Shao, Wentao Zhang, Ming-Hsuan Yang, and Bin Cui. Diffusion models: A comprehensive survey of methods and applications. arXiv:2209.00796, 2022.

[4] https://lilianweng.github.io/posts/2021-07-11-diffusion-models

[5] https://github.com/lvyufeng/denoising-diffusion-mindspore

[6] https://zhuanlan.zhihu.com/p/525106459

[7] https://zhuanlan.zhihu.com/p/500532271

[8] https://www.zhihu.com/question/536012286

[9] https://mp.weixin.qq.com/s/XTNk1saGcgPO-PxzkrBnIg

[10] https://m.weibo.cn/3235040884/4804448864177745
