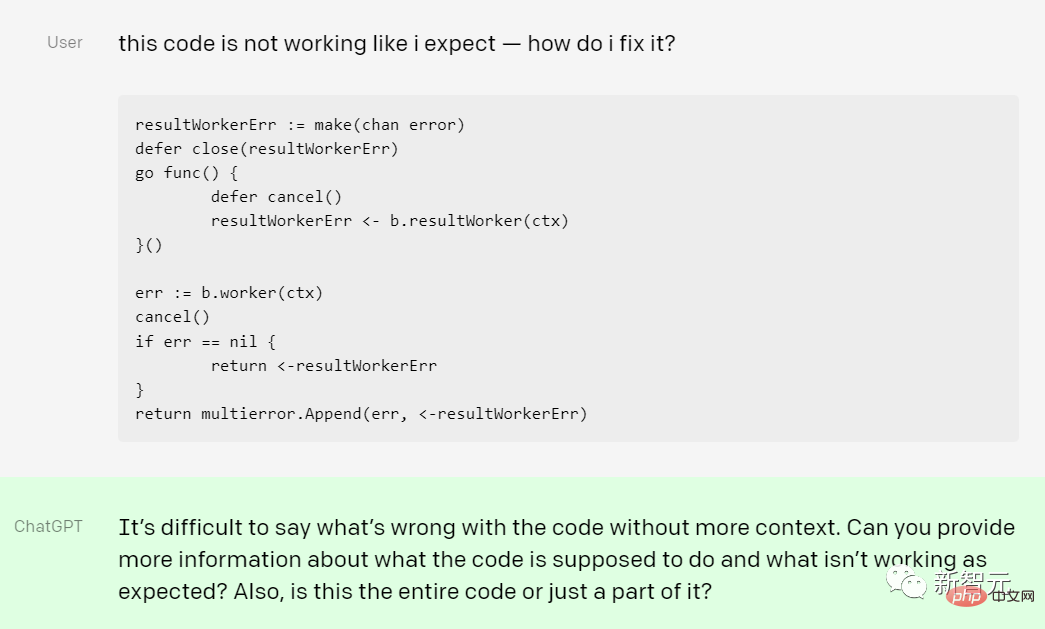

On the first day of 2023, please check ChatGPT's year-end summary!

The emergence of ChatGPT may be the most eye-catching AI breakthrough of the second half of 2022, though perhaps not the most technically significant.

Not long ago, at NeurIPS 2022 in New Orleans, rumors about GPT-4 were everywhere. At the same time, OpenAI became the focus of the news media.

OpenAI announced a new model in its GPT-3 family of large language models: text-davinci-003. Part of what it calls the "GPT-3.5 series", the model improves performance by handling more complex instructions and producing higher-quality, longer-form content.

The new model builds on InstructGPT and uses reinforcement learning with human feedback (RLHF) to align the language model more closely with human instructions.

Davinci-003 is described as a true reinforcement learning with human feedback (RLHF) model, in contrast to approaches that use supervised fine-tuning on human demonstrations and high-scoring model samples to improve generation quality.

As another part of the "GPT-3.5 series", OpenAI released an early demo of ChatGPT. The company says this interactive conversational model can not only answer follow-up questions, but also admit mistakes, challenge incorrect premises, and reject inappropriate requests.

OpenAI said in a blog post that ChatGPT's research release is "the latest step in OpenAI's iterative deployment of increasingly safe and useful AI systems." It incorporates many lessons learned from deploying earlier models such as GPT-3 and Codex, and its use of reinforcement learning with human feedback (RLHF) substantially reduces harmful and untruthful outputs.

In addition, ChatGPT was trained to emphasize that it is a machine learning model. This may be meant to avoid a repeat of the recent "is AI conscious?" controversy sparked by Google's chatbot LaMDA.

# Of course, ChatGPT also has limitations.

In the blog post, OpenAI details these limitations, including that ChatGPT sometimes writes answers that sound plausible but are actually incorrect or nonsensical.

"Solving this problem is very challenging because (1) during reinforcement learning training there is currently no source of truth; (2) training the model to be more cautious causes it to decline questions that it could answer correctly; and (3) supervised training can mislead the model because the ideal answer depends on what the model knows, not what the human demonstrator knows."

OpenAI said that ChatGPT "sometimes responds to harmful instructions or exhibits biased behavior. We are using the Moderation API to warn or block certain types of unsafe content, but we expect it to have some false negatives and positives for now. We are very interested in collecting user feedback to help our ongoing work to improve this model."

Although ChatGPT still has many problems that need improvement, there is no denying that, until GPT-4 arrives, ChatGPT remains the most talked-about of today's large language models.

Recently, however, a new model has ignited enthusiastic discussion in the community. Most importantly, it is open source.

This week, Philip Wang, a developer known for reverse-engineering closed-source AI systems including Meta's Make-A-Video, released PaLM RLHF, a text generation model that behaves like ChatGPT.

Code address: https://github.com/lucidrains/PaLM-rlhf-pytorch

The system combines Google's large language model PaLM with reinforcement learning with human feedback (RLHF) to create a system that can handle almost any task ChatGPT can, including drafting emails and suggesting computer code.

# The power of PaLM RLHF

Since its release, ChatGPT's ability to generate detailed, human-like text and to respond to user questions conversationally has taken the tech world by storm.

Although this is a major advancement in the early stages of chatbot development, many fans in the field of artificial intelligence have expressed concerns about the closed nature of ChatGPT.

To this day, the ChatGPT model remains proprietary, meaning its underlying code cannot be viewed by the public. Only OpenAI really knows how it works and what data it processes. This lack of transparency can have far-reaching consequences and could affect user trust in the long term.

Many developers have been eager to build an open source alternative, and now it is finally here. PaLM RLHF is written in Python and implemented on top of PyTorch.

Developers first train PaLM like any other autoregressive transformer, and then use human feedback to train a reward model.
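As a rough illustration of what "autoregressive" training means, the sketch below builds (prefix, next token) pairs from a token sequence and computes the average negative log-likelihood of the targets. It is a minimal, standard-library sketch and not the PaLM RLHF code: the `predict` callable, the tiny vocabulary, and the uniform toy predictor are all hypothetical stand-ins for a real transformer.

```python
import math

def next_token_pairs(tokens):
    """Autoregressive setup: each prefix is the input, the token after it is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def average_nll(tokens, predict):
    """Mean negative log-likelihood of each target given its prefix.

    `predict(prefix)` is a hypothetical callable returning {token: probability}.
    """
    pairs = next_token_pairs(tokens)
    total = sum(-math.log(predict(prefix)[target]) for prefix, target in pairs)
    return total / len(pairs)

# Toy stand-in for a transformer: always predicts uniformly over a 4-word vocabulary.
VOCAB = ["economics", "is", "a", "science"]

def uniform_predict(prefix):
    return {word: 1 / len(VOCAB) for word in VOCAB}

loss = average_nll(["economics", "is", "a", "science"], uniform_predict)
```

A model that has learned nothing scores `log(4)` here; training pushes this number down by assigning higher probability to the tokens that actually come next.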

Like ChatGPT, PaLM RLHF is essentially a statistical tool for predicting words. When fed a large number of examples from the training data—such as posts from Reddit, news articles, and e-books—PaLM RLHF learns how likely words are to occur based on patterns such as the semantic context of the surrounding text.
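The idea of "learning how likely words are to occur" can be shown with a far simpler model than a transformer. The sketch below is a bigram counter, which is an assumption made purely for illustration: it only looks at the single preceding word, whereas a model such as PaLM conditions on a much longer context. The three-sentence corpus is invented for the example.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw follow-counts for `prev` into a probability distribution."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}

# Invented toy corpus; a real model sees billions of words.
corpus = [
    "economics is the social science",
    "economics is the study of markets",
    "physics is the natural science",
]
model = train_bigram(corpus)
probs_after_the = next_word_probs(model, "the")
probs_after_is = next_word_probs(model, "is")
```

In this toy corpus "is" is always followed by "the", so that continuation gets all of the probability, while the words seen after "the" share it equally.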

# Is it really so perfect?

Of course, there is still a big gap between ideal and reality. PaLM RLHF seems perfect, but it also has various problems. The biggest problem is that people can't use it yet.

To launch PaLM RLHF, users need to compile gigabytes of text obtained from various sources such as blogs, social media, news articles, e-books, and more.

This data is fed to a fine-tuned PaLM model, which generates several responses. For example, if you ask the model "What are the basics of economics?", PaLM will give answers such as "Economics is the social science that studies...".

Afterwards, the developers ask people to rank the answers generated by the model from best to worst. Finally, the rankings are used to train a "reward model" that takes the original model's responses and sorts them in order of preference, filtering out the best answers for a given prompt.
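The ranking step can be sketched as follows. A common way to learn from rankings is to split each ranking into (preferred, rejected) pairs and penalize the reward model whenever the rejected answer scores higher; that pairwise loss is an assumption for illustration here, not something the article attributes to PaLM RLHF. The answer labels and score tables are invented, and `reward` is a hypothetical callable standing in for a trained model.

```python
import math
from itertools import combinations

def ranking_to_pairs(ranked_best_to_worst):
    """Every earlier item is preferred over every later item."""
    return list(combinations(ranked_best_to_worst, 2))

def pairwise_loss(score_preferred, score_rejected):
    """-log(sigmoid(preferred - rejected)): small when the preferred answer scores higher."""
    return math.log1p(math.exp(score_rejected - score_preferred))

def ranking_loss(ranked_best_to_worst, reward):
    """Average pairwise loss of a reward function against one human ranking."""
    pairs = ranking_to_pairs(ranked_best_to_worst)
    return sum(pairwise_loss(reward(a), reward(b)) for a, b in pairs) / len(pairs)

def best_response(responses, reward):
    """Once trained, the reward model picks the highest-scoring candidate."""
    return max(responses, key=reward)

# Invented human ranking (best first) and two hypothetical reward tables.
ranked = ["clear definition", "vague answer", "off-topic answer"]
agrees = {"clear definition": 2.0, "vague answer": 0.5, "off-topic answer": -1.0}
disagrees = {"clear definition": -1.0, "vague answer": 0.5, "off-topic answer": 2.0}

loss_agrees = ranking_loss(ranked, agrees.get)
loss_disagrees = ranking_loss(ranked, disagrees.get)
top = best_response(ranked, agrees.get)
```

A reward function that agrees with the human ranking gets a lower loss than one that reverses it, which is the signal training would use to adjust the reward model.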

However, this is an expensive process. Collecting training data and training the model itself is not cheap. PaLM has 540 billion parameters, where "parameters" are the parts of the language model learned from the training data. A 2020 study estimated that developing a text generation model with only 1.5 billion parameters could cost up to $1.6 million.

In July 2022, to train the open source model Bloom with 176 billion parameters, Hugging Face researchers spent three months and used 384 NVIDIA A100 GPUs. Each A100 costs thousands of dollars, which is not a cost the average user can afford.
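To get a feel for the scale, the Bloom figures can be turned into GPU-hours with simple arithmetic. The sketch below assumes "three months" means roughly 90 days of continuous use, and the hourly rate passed to `rental_cost` is a pure placeholder, not a quoted price.

```python
def gpu_hours(num_gpus, days):
    """Total GPU-hours if every GPU runs around the clock."""
    return num_gpus * days * 24

def rental_cost(hours, usd_per_gpu_hour):
    """Cost at a flat hourly rate; the rate is supplied by the caller."""
    return hours * usd_per_gpu_hour

# 384 A100s for "three months", taken here as about 90 days (an assumption).
bloom_gpu_hours = gpu_hours(num_gpus=384, days=90)

# Placeholder rate for illustration only; substitute a real quote before relying on it.
HYPOTHETICAL_USD_PER_GPU_HOUR = 2.0
bloom_cost_estimate = rental_cost(bloom_gpu_hours, HYPOTHETICAL_USD_PER_GPU_HOUR)
```

Under these assumptions the run comes to about 830,000 GPU-hours, so even a modest hourly rate puts the bill well beyond an individual's budget.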

Additionally, even after training, running a model the size of PaLM RLHF is not trivial. Bloom requires a dedicated PC with eight A100 GPUs, and running OpenAI's text-generating GPT-3 (which has about 175 billion parameters) costs about $87,000 per year.
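A back-of-the-envelope memory estimate shows why models of this size need multi-GPU machines. The sketch below counts only the memory needed to hold the weights and assumes 16-bit weights (2 bytes per parameter) and the 80 GB variant of the A100. Both are assumptions, and real deployments also need memory for activations and other overhead.

```python
import math

def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory for the weights alone, in GB (assumes 2 bytes per parameter by default)."""
    return num_params * bytes_per_param / 1e9

def min_gpus_for_weights(num_params, gpu_memory_gb=80, bytes_per_param=2):
    """Lower bound on GPU count: weights only, ignoring activations and overhead."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)

bloom_gb = weight_memory_gb(176_000_000_000)     # Bloom, 176 billion parameters
palm_gb = weight_memory_gb(540_000_000_000)      # PaLM, 540 billion parameters
bloom_min_gpus = min_gpus_for_weights(176_000_000_000)
palm_min_gpus = min_gpus_for_weights(540_000_000_000)
```

Under these assumptions Bloom's weights take about 352 GB and a PaLM-sized model about 1,080 GB, so the weights alone would not fit on a single eight-GPU machine of this kind.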

Scaling up the necessary development workflow can also be a challenge, AI researcher Sebastian Raschka noted in an article about PaLM RLHF.

"Even if someone gives you 500 GPUs to train this model, you still need to deal with the infrastructure and have a software framework that can handle it," he said. "Although this is feasible, it currently requires a lot of effort."

# The next open source ChatGPT

The high cost and huge scale indicate that without well-funded companies or individuals taking the trouble to train the model, PaLM RLHF currently does not have the ability to replace ChatGPT.

So far, there is no firm release date for a trained PaLM RLHF. For reference, it took Hugging Face three months to train Bloom. By comparison, a 540-billion-parameter PaLM RLHF may take 6-8 months before a meaningful version appears.

The good news is that so far we have three known players working on this open source alternative to ChatGPT:

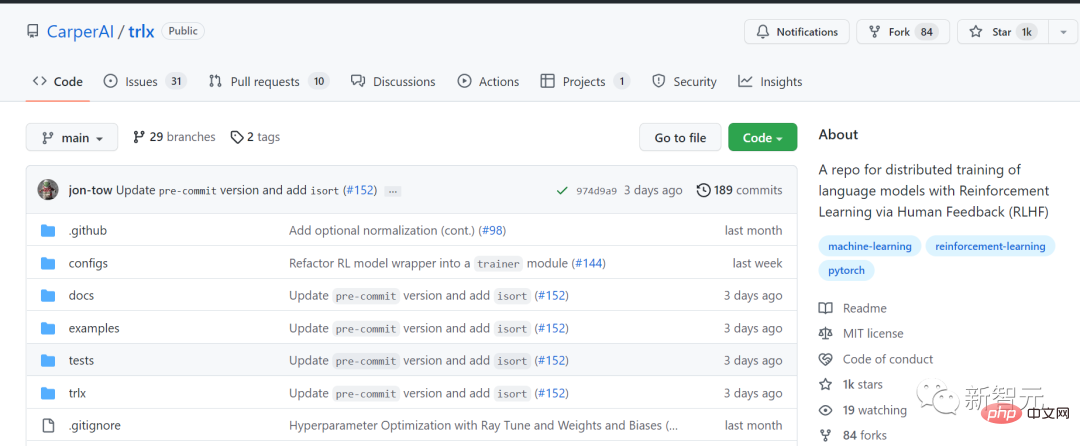

- CarperAI

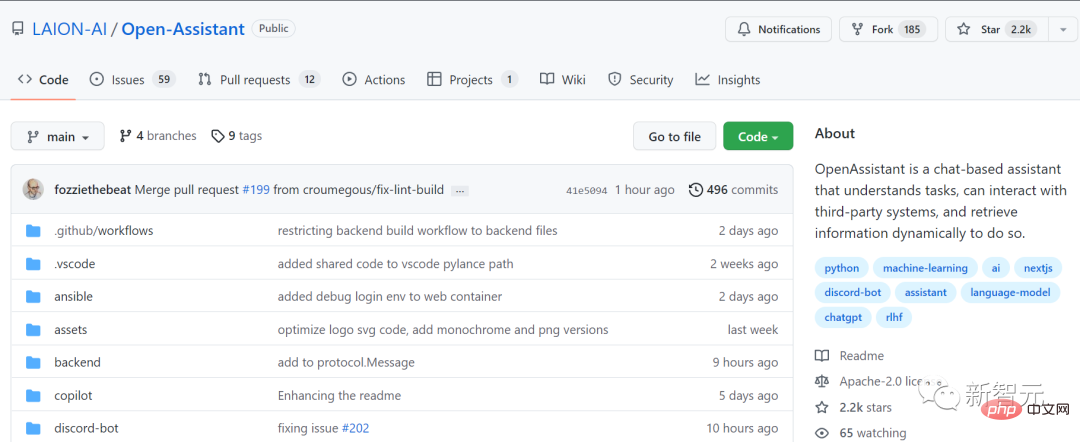

- LAION

- Yannic Kilcher

CarperAI, in partnership with EleutherAI and the startups Scale AI and Hugging Face, plans to release the first ready-to-run, ChatGPT-like AI model trained with human feedback.

Code address: https://github.com/CarperAI/trlx

LAION, the non-profit organization that provided the initial dataset for Stable Diffusion, is also spearheading a project to replicate ChatGPT using the latest machine learning technology.

Code address: https://github.com/LAION-AI/Open-Assistant

LAION aims to create a "future assistant" that can not only write emails and cover letters, but also "do meaningful work, use APIs, dynamically research information, etc." It's in its early stages, but a project with related resources went live on GitHub a few weeks ago.

GPT-4chan, created by YouTube personality and AI researcher Yannic Kilcher, is more like a foul-mouthed model that "emerged from the mud thoroughly stained".

The "4chan" in the model's name is an American anonymous online forum. Because users are anonymous, many of them are fearless and post all kinds of politically incorrect remarks. Kilcher trained the model on exactly these 4chan posts, and the results are predictable.

Much like the general tone of the forum, GPT-4chan's answers were filled with racism, sexism, and anti-Semitism. On top of that, Kilcher posted the underlying model to Hugging Face for others to download. After condemnation from many AI researchers, however, Hugging Face quickly restricted access to the model.

While we look forward to more open source language models, all we can do for now is wait. In the meantime, continuing to use ChatGPT for free is also a good option.

It is worth noting that, until an open source version is officially launched, OpenAI remains far ahead in development. In 2023, GPT-4 is undoubtedly what AI enthusiasts around the world are looking forward to. Countless leading figures in AI have made their own predictions about it, some optimistic and some pessimistic, but as OpenAI CEO Sam Altman said: "Artificial general intelligence will arrive sooner than most people think, and it will change everything most people imagine."
