An article discussing the safety technical features of self-driving cars

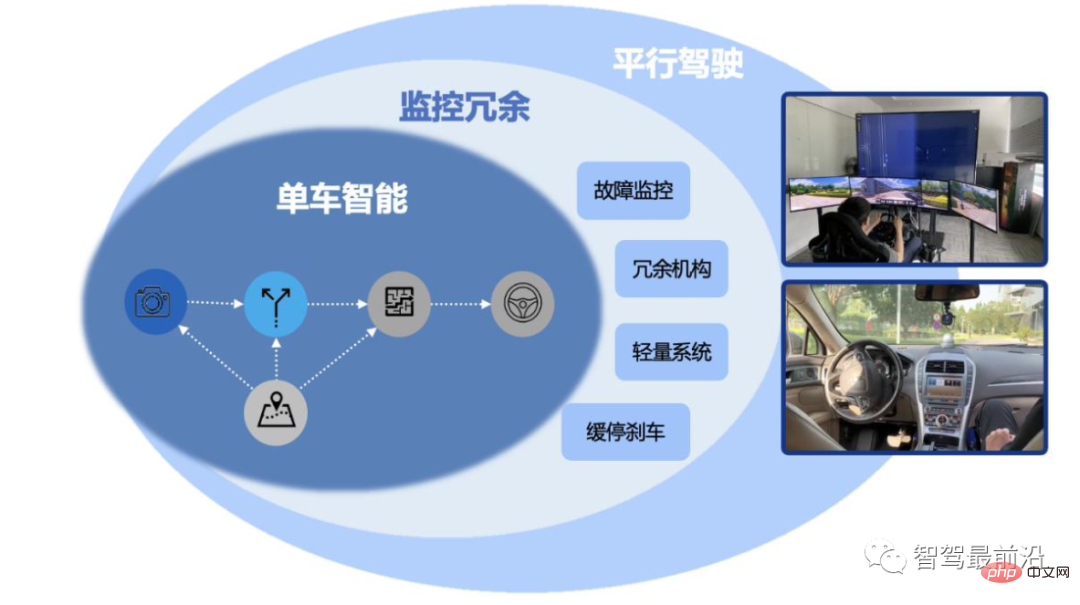

“Safety first” is the core concept and value of autonomous driving. The overall safety design of an autonomous vehicle is a complex piece of systems engineering. It covers the design of the core algorithm strategies of the on-board autonomous driving system, redundant hardware and software safety design, remote cloud driving technology, and full-process test and verification technology, and it follows the requirements and design considerations of functional safety (ISO 26262) and safety of the intended functionality (SOTIF, ISO/PAS 21448). Below we review Baidu’s L4 autonomous driving safety practice, which is organized into three safety layers: main system safety, the redundant safety system, and the remote cloud driving system.

Figure 1 Baidu L4 overall system safety design concept

Autonomous driving main system safety

Main system safety relies on the core algorithm layer of the on-board autonomous driving system to ensure that driving strategies and driving behaviors are safe; it can also be called "strategy safety". It uses the most advanced and reliable perception and positioning algorithms, together with prediction, decision-making, planning and control algorithms, to handle the full range of road scenarios. In particular, driving strategies and behaviors must remain safe when the vehicle encounters difficult scenarios.

Main system safety is the safety design of the combined software and hardware suite. Software algorithms are the core of the whole autonomous driving system. A typical L4 autonomous driving algorithm architecture mainly consists of the on-board operating system, environmental perception, high-precision maps and positioning, prediction, decision-making and planning, and control and execution modules.

Operating system

The operating system is the basic software that runs on the autonomous vehicle and manages, schedules, and controls the vehicle's software and hardware resources. Its main task is to provide the autonomous driving system with real-time task scheduling, resource isolation for real-time computing tasks, real-time message communication, system-level access control and other capabilities. It manages system resources effectively, improves their utilization, and shields the algorithm modules from the physical characteristics and operational details of the underlying hardware and software. It hosts the core autonomous driving components, such as perception, positioning, planning and decision-making, and control. The operating system is highly stable, real-time and low-latency (its response is 250 ms faster than that of a human driver).
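
Real-time task scheduling is the first capability listed above. As a generic illustration only (not the scheduler of the operating system described here, which the article does not detail), the sketch below orders one cycle of hypothetical tasks by earliest deadline first (EDF). It assumes all tasks are released at time zero and run without preemption; the task names, runtimes and deadlines are invented for the example.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    # Tasks compare by absolute deadline (ms); name and runtime are excluded from ordering.
    deadline_ms: float
    name: str = field(compare=False)
    runtime_ms: float = field(compare=False)


def edf_schedule(tasks):
    """Run tasks non-preemptively in earliest-deadline-first order.

    Returns a list of (name, finish_time_ms, met_deadline) tuples.
    """
    ready = list(tasks)
    heapq.heapify(ready)  # min-heap keyed on deadline
    clock = 0.0
    timeline = []
    while ready:
        task = heapq.heappop(ready)
        clock += task.runtime_ms
        timeline.append((task.name, clock, clock <= task.deadline_ms))
    return timeline


# Hypothetical per-cycle workload: names, runtimes and deadlines are illustrative only.
cycle = [
    Task(deadline_ms=100.0, name="planning", runtime_ms=30.0),
    Task(deadline_ms=20.0, name="control", runtime_ms=5.0),
    Task(deadline_ms=60.0, name="perception", runtime_ms=40.0),
]
for name, finish, ok in edf_schedule(cycle):
    print(f"{name:<10} finishes at {finish:5.1f} ms  deadline met: {ok}")
```

EDF is a classic choice because, for independent preemptive tasks on one core, it meets every deadline whenever any schedule can; a production real-time OS would add preemption, periodic releases and resource isolation on top of this.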

Perception system

Environmental perception is a prerequisite for autonomous driving. The environment perception system combines the strengths of multiple sensors, such as lidar, millimeter-wave radar, and cameras, to achieve a 360-degree view around the vehicle body. It stably detects and tracks traffic behavior, speed, heading and other information in complex, changing traffic environments, and provides scene-understanding information to the decision-making and planning module.

The perception algorithm adopts a multi-sensor fusion framework and can detect obstacles up to 280 meters away. Built on deep neural networks and massive autonomous driving data, it accurately identifies obstacle types and stably tracks obstacle behavior, giving the downstream decision-making modules stable perception. Because the multi-sensor fusion solution forms redundancy through heterogeneous sensing channels, it gives the autonomous driving system high fault tolerance and thereby improves system safety.
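
One simple way to see why heterogeneous channels add fault tolerance is inverse-variance weighting of independent range estimates for the same obstacle. This is a textbook fusion rule swapped in for illustration, not the deep-learning fusion framework the article describes; the sensor names and noise figures are assumptions. A missing channel is skipped, so the estimate degrades gracefully instead of disappearing.

```python
import math


def fuse_ranges(measurements):
    """Fuse independent range estimates by inverse-variance weighting.

    measurements maps sensor name -> (range_m, std_m), or None if that sensor
    produced nothing (failed or occluded). Returns (fused_range_m, fused_std_m).
    """
    weight_sum = 0.0
    weighted = 0.0
    for reading in measurements.values():
        if reading is None:
            continue  # a missing channel is skipped; the others still contribute
        rng, std = reading
        w = 1.0 / (std * std)  # more precise sensors get more weight
        weight_sum += w
        weighted += w * rng
    if weight_sum == 0.0:
        raise ValueError("no sensor produced a measurement")
    return weighted / weight_sum, math.sqrt(1.0 / weight_sum)


# Hypothetical readings of one obstacle (range in metres, 1-sigma noise in metres).
all_ok = {"lidar": (50.0, 0.1), "radar": (50.6, 0.5), "camera": (52.0, 2.0)}
lidar_down = {"lidar": None, "radar": (50.6, 0.5), "camera": (52.0, 2.0)}
print(fuse_ranges(all_ok))
print(fuse_ranges(lidar_down))
```

With the lidar missing, the fused uncertainty grows but an estimate is still available from radar and camera.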

In addition, the perception algorithm supports expansion to new scenarios through capabilities such as recognizing water-spray and mist noise, detecting low obstacles, and detecting irregularly shaped traffic lights and signs. For traffic light recognition, the light color and countdown detected by the vehicle's own perception can be cross-verified against the prior information provided by high-precision maps, and the ability to recognize temporary traffic lights has been improved, ensuring reliability and safety.
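
The cross-check between live traffic-light detections and the HD-map prior can be sketched as a set comparison. This is a minimal sketch assuming detections have already been associated with map light IDs; the `LightObservation` type, the ID strings and the conservative `may_proceed` rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LightObservation:
    light_id: Optional[str]  # map light this detection was associated with, or None
    color: str               # "red", "yellow", "green" or "unknown"


def cross_check(detections, map_light_ids):
    """Compare one frame of detections with the lights the HD map expects on this approach."""
    seen = {d.light_id: d for d in detections if d.light_id is not None}
    # Map lights that were detected with a usable color.
    confirmed = {lid: seen[lid].color for lid in map_light_ids
                 if lid in seen and seen[lid].color != "unknown"}
    # Map lights with no usable detection: handle conservatively.
    missing = [lid for lid in map_light_ids if lid not in confirmed]
    # Detections that match no map light, e.g. a temporary traffic light.
    unmapped = [d for d in detections
                if d.light_id is None or d.light_id not in map_light_ids]
    return {"confirmed": confirmed, "missing": missing, "unmapped": unmapped}


def may_proceed(result):
    """Conservative rule (an assumption): go only if everything seen is green."""
    if result["missing"]:
        return False
    if any(color != "green" for color in result["confirmed"].values()):
        return False
    return all(d.color == "green" for d in result["unmapped"])


frame = [LightObservation("L1", "red"), LightObservation("L2", "unknown"),
         LightObservation(None, "red")]
result = cross_check(frame, ["L1", "L2"])
print(result["missing"], len(result["unmapped"]), may_proceed(result))
```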

High-precision maps and high-precision positioning provide autonomous vehicles with advance information about the road ahead, precise vehicle position, and rich road element data. They emphasize an accurate three-dimensional model of space and represent every feature and condition of the road surface very precisely. High-precision mapping and positioning use a multi-sensor fusion solution of lidar, vision, RTK and IMU, and through this fusion the positioning accuracy can reach 5-10 cm, meeting the needs of L4 autonomous driving.
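
The benefit of fusing an inertial sensor with absolute fixes can be shown with a one-dimensional Kalman filter: the IMU-derived velocity propagates the position between fixes, and each RTK (or map-matched lidar) fix pulls the estimate back. This is a toy scalar model under assumed noise values, far simpler than a real multi-sensor localization stack.

```python
def kalman_1d(x0, p0, velocities, measurements, dt, q, r):
    """Scalar Kalman filter: predict with inertial velocity, correct with position fixes.

    velocities[i]   - velocity used to propagate the state over step i (m/s)
    measurements[i] - position fix at step i (m), or None when unavailable (e.g. RTK dropout)
    q, r            - process and measurement noise variances (m^2)
    Returns (filtered_positions, final_variance).
    """
    x, p = x0, p0
    out = []
    for v, z in zip(velocities, measurements):
        # Predict: dead-reckon with the inertial velocity; uncertainty grows.
        x = x + v * dt
        p = p + q
        # Update: blend in the position fix, weighted by the Kalman gain.
        if z is not None:
            k = p / (p + r)
            x = x + k * (z - x)
            p = (1.0 - k) * p
        out.append(x)
    return out, p


# Hypothetical run: 10 m/s for 0.5 s, 5 cm fix noise (r = 0.05**2), one fix dropped.
positions, variance = kalman_1d(
    x0=0.0, p0=1.0, velocities=[10.0] * 5,
    measurements=[1.02, 1.97, None, 4.01, 4.99], dt=0.1, q=0.01, r=0.0025)
print([round(x, 3) for x in positions], round(variance, 5))
```

During the dropped fix the variance grows by q, and the next fix shrinks it again, which is why a brief RTK outage need not cost the 5-10 cm accuracy quoted above.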

Predictive decision-making and planning control

The prediction, decision-making and planning control module is equivalent to the brain of a self-driving car. Prediction, decision-making and planning are the core software algorithm modules and directly determine the capability and quality of the vehicle's autonomous driving. Based on traffic safety regulations and commonly accepted driving rules, this module plans safe, efficient, and comfortable paths and trajectories for the vehicle. To improve the algorithm's generalization ability, data mining and deep learning algorithms are applied to plan driving behavior intelligently.

Given the start point and destination, the system first generates an optimal global route. The vehicle receives environment and obstacle information from the perception module in real time and combines it with high-precision maps to track and predict the behavioral intentions and trajectories of surrounding vehicles, pedestrians, cyclists and other obstacles. Taking safety, comfort and efficiency into account, it generates driving behavior decisions (car following, lane changing, stopping, etc.), plans the vehicle's motion (speed, trajectory, etc.) in accordance with traffic rules and courteous driving etiquette, and finally passes the plan to the control module, which carries out acceleration, deceleration, and steering. Vehicle control is the lowest layer and communicates directly with the vehicle chassis: it sends the vehicle's target position and speed to the chassis as electrical signals that operate the throttle, brake and steering wheel.
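
Generating the global route from start to destination is commonly done with a graph search such as Dijkstra's algorithm over a road network. The sketch below assumes a small hypothetical graph whose edge costs are road lengths in metres; the article does not say which search its planner uses.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over a road graph.

    graph maps node -> list of (neighbor, cost) with non-negative costs
    (e.g. length in metres or expected travel time).
    Returns (cost, path), or (inf, []) if the goal is unreachable.
    """
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == goal:
            break
        for nbr, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if goal not in dist:
        return float("inf"), []
    # Walk the predecessor links back from the goal.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]


# Hypothetical road network: intersections A-D, edge costs in metres.
roads = {
    "A": [("B", 300), ("C", 200)],
    "B": [("D", 100)],
    "C": [("B", 50), ("D", 400)],
    "D": [],
}
print(shortest_route(roads, "A", "D"))
```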

The goal of autonomous driving is to handle complex traffic scenarios on urban roads and keep autonomous vehicles in a safe driving state under any road traffic conditions. At the software algorithm layer, deep learning models trained on massive test data ensure safe, efficient and smooth driving in regular scenarios; at the safety algorithm layer, a series of safe driving strategies is designed for typical dangerous scenarios so that the vehicle behaves safely in any scenario. For example, in extreme scenarios such as bad weather or blocked vision, defensive driving strategies are triggered, and safety risks are reduced by slowing down and observing more.
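
One common way to make "slowing down and observing more" concrete is to cap speed so the vehicle can always stop within the distance it can currently see: v·t_r + v²/(2a) ≤ d, where t_r is the reaction time, a the braking deceleration and d the visible distance. Solving the quadratic for v gives the limit below. This rule and the parameter values are assumptions for illustration, not the article's defensive driving strategy.

```python
import math


def max_safe_speed(visible_m, reaction_s, decel_mps2, speed_limit_mps):
    """Highest speed (m/s) at which the vehicle can still stop within the visible distance.

    Solves v * t_r + v**2 / (2 * a) = d for the positive root, then caps it at the speed limit.
    """
    if visible_m <= 0:
        return 0.0
    a, t = decel_mps2, reaction_s
    v = a * (-t + math.sqrt(t * t + 2.0 * visible_m / a))
    return min(v, speed_limit_mps)


# Hypothetical values: 0.5 s system reaction, 6 m/s^2 braking, 60 km/h (16.7 m/s) limit.
for visibility in (80.0, 30.0, 10.0):
    v = max_safe_speed(visibility, 0.5, 6.0, 16.7)
    print(f"visible {visibility:4.0f} m -> cap {v * 3.6:5.1f} km/h")
```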

Self-driving vehicles adhere more strictly to traffic rules and right of way. When they meet other road users at intersections and encounter vehicles with higher right of way, they slow down and yield on the safety-first principle to avoid risk. In high-risk "ghost probe" scenarios, where a pedestrian or vehicle suddenly emerges from behind an obstruction, they follow the safety-first principle and apply emergency braking to avoid injury as far as possible. As road test data and a large amount of extreme-scenario data accumulate, the core autonomous driving algorithms keep evolving through data-driven deep learning models, turning the vehicle into a "seasoned driver" that anticipates risk and drives safely and cautiously.
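
Emergency braking for a "ghost probe" is often triggered from time to collision (TTC), the remaining gap divided by the closing speed. The thresholds below (1.5 s to brake hard, 3 s to slow down) are hypothetical values chosen for the sketch, and the model assumes straight-line motion at constant speed.

```python
def time_to_collision(gap_m, ego_speed_mps, obj_speed_mps):
    """Seconds until the gap closes if both keep their current speed along the lane."""
    closing = ego_speed_mps - obj_speed_mps
    if closing <= 0:
        return float("inf")  # the gap is not shrinking
    return gap_m / closing


def braking_decision(gap_m, ego_speed_mps, obj_speed_mps,
                     ttc_emergency_s=1.5, ttc_warn_s=3.0):
    """Map TTC to an action; the thresholds are illustrative assumptions."""
    ttc = time_to_collision(gap_m, ego_speed_mps, obj_speed_mps)
    if ttc <= ttc_emergency_s:
        return "emergency_brake"
    if ttc <= ttc_warn_s:
        return "slow_down"
    return "keep"


# A pedestrian steps out from behind a parked truck at various gaps; ego at 12 m/s (~43 km/h).
for gap in (15.0, 30.0, 60.0):
    print(gap, braking_decision(gap, 12.0, 0.0))
```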

Vehicle-road collaborative autonomous driving

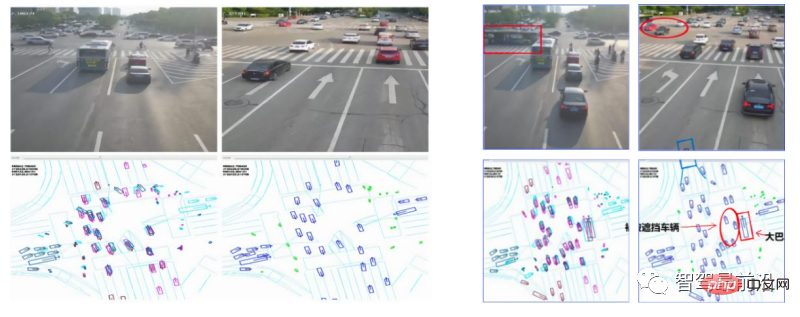

Vehicle-road collaborative autonomous driving builds on single-vehicle autonomous driving. It uses networking to connect the "people-vehicle-road-cloud" traffic elements, enabling dynamic, real-time exchange and sharing of information between vehicles, between vehicles and roads, and between vehicles and people, which helps ensure traffic safety. Through information exchange, collaborative perception, and collaborative decision-making and control, vehicle-road collaboration can greatly extend a single vehicle's perception range, improve its perception capability, and introduce new intelligent elements represented by high-dimensional data to achieve collective intelligence. It can help overcome the technical bottlenecks of single-vehicle autonomous driving and improve autonomous driving capability, thereby ensuring safety and expanding the Operational Design Domain (ODD) of autonomous driving.

For example, vehicle-road collaboration can address the vulnerability of single-vehicle intelligence to environmental conditions such as occlusion and bad weather, and enables collaborative perception in dynamic and static blind spots. Single-vehicle autonomous driving is limited by the sensors' field of view: when the view is blocked by static obstacles or dynamic obstacles (such as large vehicles), the autonomous vehicle (AV) cannot accurately perceive vehicles or pedestrians moving in the blind spot. Vehicle-road collaboration deploys multiple sensors at the roadside to achieve multi-directional, long-range continuous detection and recognition, and fuses the results with the AV's own perception. The AV can then accurately perceive vehicles or pedestrians in its blind spots and make predictions, judgments and decisions in advance, reducing the risk of accidents.
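
The core of merging roadside detections into the vehicle's own picture is a coordinate transform plus de-duplication. This minimal sketch assumes both sides report 2D positions, the roadside unit reports in a shared world frame, the ego pose (x, y, yaw) is known, and timestamps are already synchronized; the function names and the 1.5 m matching radius are hypothetical.

```python
import math


def world_to_ego(point, ego_pose):
    """Express a world-frame (x, y) point in the ego frame (x forward, y left)."""
    ex, ey, yaw = ego_pose
    dx, dy = point[0] - ex, point[1] - ey
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx + s * dy, -s * dx + c * dy)


def merge_roadside(ego_objects, roadside_objects_world, ego_pose, match_radius_m=1.5):
    """Add roadside-detected objects that the ego vehicle did not see (e.g. occluded ones).

    ego_objects            - list of (x, y) already in the ego frame
    roadside_objects_world - list of (x, y) in the shared world frame
    Returns the merged list in the ego frame.
    """
    merged = list(ego_objects)
    for obj in roadside_objects_world:
        p = world_to_ego(obj, ego_pose)
        # Nothing already known nearby: treat it as a new object in a blind spot.
        if all(math.dist(p, q) > match_radius_m for q in merged):
            merged.append(p)
    return merged


# Ego at (100, 50) facing +y. It sees a truck 10 m ahead; the roadside unit sees the
# same truck plus a pedestrian hidden behind it.
ego_pose = (100.0, 50.0, math.pi / 2)
merged = merge_roadside([(10.0, 2.0)], [(98.0, 60.0), (98.0, 70.0)], ego_pose)
print([(round(x, 2), round(y, 2)) for x, y in merged])
```

A real system would also compensate for transmission latency and carry uncertainty with each object; the sketch only shows the geometry.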

Figure 2 Collaborative perception of "ghost probe" non-motor vehicles and pedestrians in dynamic and static blind spots

Figure 3 Intersection occlusion vehicle-road cooperative sensing

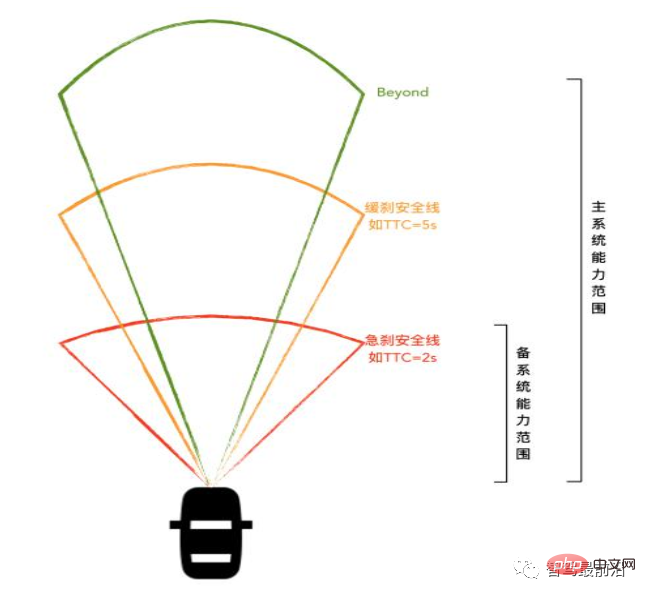

Autonomous driving safety redundant system

According to ISO 26262 (Road vehicles: Functional safety), system functional safety must take functional redundancy requirements into account. Following functional safety design standards, functional redundancy is implemented at three levels: component level, system level and vehicle level. Redundant system design is the key to making autonomous driving safe and controllable. A full-chain redundant design can effectively handle single-point failures or functional failures at three levels, namely the vehicle control system, the hardware platform, and the software platform, and provides the basic support for fully driverless autonomous driving.

In addition to the on-board main computing unit and sensing system, the L4 autonomous driving system is equipped with a safety redundancy system, giving a heterogeneous redundant design of software and hardware that avoids single points of failure in each system. The main computing system and the redundant safety system have different roles and verify each other, which greatly improves overall safety and reliability. In terms of function and algorithm strategy design, the redundant safety system focuses on real-time monitoring of the main computing system's software and hardware and on hazard identification. When it detects an abnormality in the main computing system, it triggers the MRC mechanism and brings the vehicle into the Minimal Risk Condition (MRC) through alarms, slow braking, pulling over, emergency braking and other measures.
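
The escalation from alarms to emergency braking can be sketched as a lookup from the worst active fault severity to a fallback plan. The severity levels, the action names and the `can_pull_over` fallback are assumptions made for illustration; the article names the maneuvers but not the decision logic.

```python
from enum import IntEnum


class Severity(IntEnum):
    NONE = 0
    MINOR = 1     # e.g. loss of one redundant channel
    MAJOR = 2     # main system unreliable, vehicle still controllable
    CRITICAL = 3  # imminent hazard or loss of a key function


# Hypothetical mapping from worst fault severity to the fallback plan.
MRC_ACTIONS = {
    Severity.NONE: ["continue"],
    Severity.MINOR: ["alarm"],
    Severity.MAJOR: ["alarm", "slow_brake", "pull_over"],
    Severity.CRITICAL: ["alarm", "emergency_brake"],
}


def select_mrc(fault_severities, can_pull_over=True):
    """Choose the fallback for the worst active fault (more faults never soften the response)."""
    worst = max(fault_severities, default=Severity.NONE)
    actions = list(MRC_ACTIONS[worst])  # copy so the table itself is never mutated
    if not can_pull_over and "pull_over" in actions:
        # No shoulder available: come to a controlled stop in lane instead.
        actions[actions.index("pull_over")] = "stop_in_lane"
    return actions


print(select_mrc([Severity.MINOR, Severity.MAJOR]))
print(select_mrc([Severity.MAJOR], can_pull_over=False))
```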

Hardware and sensor redundancy

From sensors and computing units to the vehicle control system, there are two mutually independent, redundant systems, which avoid single points of failure and improve the overall reliability and safety of the system.

Computing unit redundancy

The safety system includes a SafetyDCU as a redundant computing unit that performs real-time computation and monitors the working status of the main system. When the main computing unit fails, the SafetyDCU, which runs the redundant system's algorithms, continues to control the vehicle and performs minimal-risk fallback maneuvers such as slow braking and pulling over.
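
Detecting that the main computing unit has failed is often done with a heartbeat watchdog. In this hypothetical sketch the main unit sends heartbeats, and if one is late by more than a timeout the monitor hands control to the redundant unit (labeled `safety_dcu` after the SafetyDCU above) and latches there. Timestamps are passed in explicitly so the logic is easy to test; the class and its timeout are assumptions.

```python
class Watchdog:
    """Hypothetical heartbeat monitor for the main computing unit."""

    def __init__(self, timeout_s, start_t=0.0):
        self.timeout_s = timeout_s
        self.last_beat = start_t
        self.active = "main"

    def heartbeat(self, t):
        """Record a heartbeat from the main unit at time t (seconds)."""
        self.last_beat = t

    def check(self, t):
        """Return which unit should control the vehicle at time t."""
        # Latch: once the redundant unit has taken over, stay there until a manual reset.
        if self.active == "main" and t - self.last_beat > self.timeout_s:
            self.active = "safety_dcu"
        return self.active


wd = Watchdog(timeout_s=0.1)
wd.heartbeat(0.05)
print(wd.check(0.10))  # heartbeat 50 ms old: main unit keeps control
print(wd.check(0.20))  # heartbeat 150 ms old: redundant unit takes over
```

Latching avoids control flapping back and forth if the main unit recovers intermittently; a real system would also monitor the monitor.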

Sensor redundancy

The safety system uses a redundant design with two independent autonomous driving sensor systems, including redundant lidars, cameras, positioning equipment and other components. If any single component fails, the redundant system can be triggered to provide complete environmental awareness, so the vehicle is still controlled safely and the system operates more reliably.

Vehicle control system redundancy

The vehicle chassis has redundancy in steering, power, braking and other key components. When a single system fails, control can switch to the backup system, which helps the vehicle stop safely and prevents loss of control.

Fault monitoring system and software redundancy

The fault monitoring system is deployed across the main computing unit and the safety computing unit, forming a complete fault detection system between them. It detects and monitors in real time all software and hardware failures and faults, out-of-ODD conditions, system algorithm defects and so on during operation, and the main and redundant systems cross-verify and monitor each other so that no fault is missed. It also performs risk prediction: failure-prone data are mined and analyzed, features are extracted, and safety risk is calculated in real time on the vehicle.

The software redundancy system is a complete, lightweight set of perception, positioning, decision-making and control software. For example, the fully redundant positioning system adds multiple cross-validation checks to improve positioning anomaly detection and fault tolerance, and its perception provides 360-degree surround detection coverage for real-time awareness of risks around and ahead of the vehicle. When a fault or failure of the main system is detected, the backup system takes over control of the vehicle, degrading functions or entering the MRC through speed limiting, slow braking, pulling over, braking, etc., to bring the vehicle to a safe stop.
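
The "multiple cross-validation" of positioning can be illustrated with median voting over three or more independent position estimates: a single faulty source cannot move the per-axis median far, and any source far from the consensus is flagged. The source names and the 0.3 m tolerance are hypothetical.

```python
import math
import statistics


def vote_position(sources, tolerance_m=0.3):
    """Cross-validate independent (x, y) position estimates.

    sources maps source name -> (x, y). Returns (consensus, suspects), where the
    consensus is the per-axis median and suspects are sources farther than
    tolerance_m from it.
    """
    if len(sources) < 3:
        raise ValueError("need at least three sources to out-vote a single fault")
    xs = [p[0] for p in sources.values()]
    ys = [p[1] for p in sources.values()]
    consensus = (statistics.median(xs), statistics.median(ys))
    suspects = [name for name, p in sources.items()
                if math.dist(p, consensus) > tolerance_m]
    return consensus, suspects


# Hypothetical estimates in metres; the RTK fix has jumped 2.5 m (e.g. multipath).
estimates = {
    "main_fusion": (10.02, 5.01),
    "redundant_lidar": (9.98, 4.99),
    "rtk": (12.5, 5.0),
}
print(vote_position(estimates))
```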

Figure 4 Fault monitoring system and software redundancy

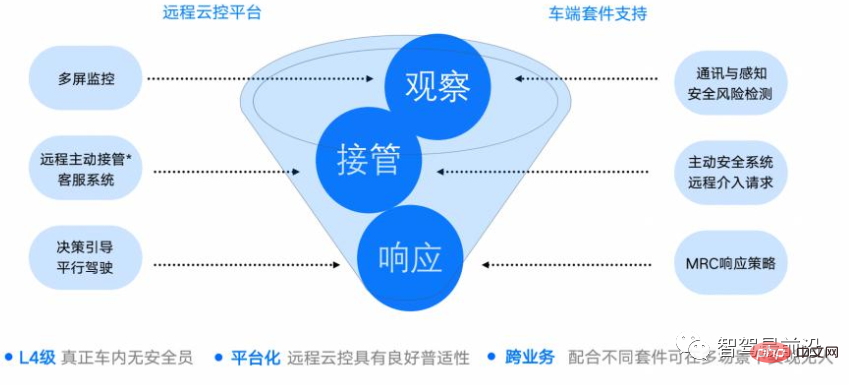

Remote cloud driving

When the vehicle is trapped or in an extreme scenario, the remote cloud driving system lets a remote driver take over the vehicle. A surround screen displays the environment model, the main view, and an overhead view, giving the safety operator an immersive parallel-driving experience. After the remote driver drives the vehicle to a safe zone, control is handed back to the vehicle; the end-to-end latency of the whole process is shorter than a human driver's reaction time, and control passes smoothly and seamlessly between the vehicle and the remote operator. In the remote cockpit, multi-screen monitoring together with functions such as risk warning and dynamic scheduling enables real-time monitoring at fleet level.

Remote cloud driving has a comprehensive layered safety design covering active safety, safety warning and basic safety functions. It monitors the cockpit, the network and the driverless vehicle's status in real time and responds safely according to the type of fault or risk level, providing further comprehensive protection for autonomous driving operations. Current autonomous driving technology relies mainly on the vehicle-side autonomous driving system to drive on regular urban roads and uses remote cloud driving only in extreme scenarios, so one remote driver can serve multiple vehicles, which makes operations efficient.

Figure 5 Remote cloud driving product design

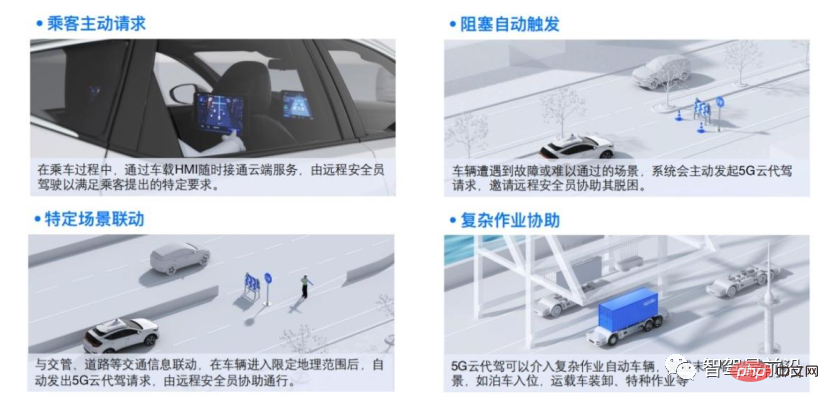

Parallel driving is based on 5G technology. Through a seamless vehicle-cloud connection, the safety operator in the remote control center can understand the vehicle's environment and status in real time and provide remote assistance in scenarios that autonomous driving cannot get through. Once assistance is complete, the vehicle returns to autonomous driving, so it can get out of difficulty and avoid hazards in extreme scenarios.
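
The handover between autonomous driving and remote assistance can be modeled as a small state machine. The states, events, and the rule that a lost link or an unanswered request sends the vehicle to MRC are assumptions for this sketch, not the product's documented behavior.

```python
# Hypothetical control-authority transitions: (state, event) -> next state.
TRANSITIONS = {
    ("autonomous", "request_assist"): "awaiting_operator",
    ("awaiting_operator", "operator_accept"): "remote",
    ("awaiting_operator", "timeout"): "mrc",
    ("remote", "link_lost"): "mrc",
    ("remote", "handback"): "autonomous",
}


class ControlAuthority:
    """Tracks who is driving: the vehicle, the remote operator, or the MRC fallback."""

    def __init__(self):
        self.state = "autonomous"

    def on(self, event, autonomy_ready=True):
        """Apply an event; reject transitions that are not in the table."""
        if self.state == "remote" and event == "handback" and not autonomy_ready:
            return self.state  # operator keeps control until the autonomy stack is ready
        nxt = TRANSITIONS.get((self.state, event))
        if nxt is None:
            raise ValueError(f"event {event!r} not allowed in state {self.state!r}")
        self.state = nxt
        return self.state


ctl = ControlAuthority()
for ev in ("request_assist", "operator_accept", "handback"):
    print(ev, "->", ctl.on(ev))
```

Keeping the transitions in one table makes it easy to review that every path out of remote control ends either in autonomous driving or in a minimal-risk state.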

5G cloud driving is an important supporting facility for future autonomous driving. Built on new infrastructure such as 5G, smart transportation, and V2X, it enables real-time transmission and monitoring of video from inside and outside the autonomous vehicle, filling the capability gap of the autonomous driving system when there is no driver on board.

Figure 6 Applicable scenarios for remote cloud driving

Autonomous driving vehicle testing and verification

From research and development through deployment, an autonomous driving system must undergo thorough functional safety and performance safety testing and verification to prove that it operates safely and protects the personal safety of vehicle users and other road users. Virtual simulation requires verification testing over hundreds of millions to tens of billions of kilometers, and real road testing requires more than one million kilometers of accumulated testing.

Testing process system

Autonomous driving testing uses a scenario-based method to verify that the system can drive safely in each scenario. The autonomous driving test scenario library is the foundation of the test system and drives every aspect of autonomous vehicle testing.

The test scenario library includes typical daily driving scenarios, high collision risk scenarios, legal and regulatory scenarios, etc., as well as scenarios that have become industry standards, such as the standard test scenarios for AEB functions. Specifically, it covers multiple types of virtual simulation test scenarios and test scenarios in real traffic environments, classified by natural conditions (weather, lighting), road types (pavement condition, lane line type, etc.), traffic participants (vehicles, pedestrian positions, speeds, etc.), and environment types (highways, residential communities, shopping malls, rural areas, etc.). The test objects include sensors, algorithms, actuators, human-machine interfaces, and complete vehicles, and testing verifies the soundness, safety and stability of the autonomous driving system from the perspectives of application functions, performance, stability and robustness, functional safety, safety of the intended functionality, type approval, etc., thereby ensuring that vehicles are ready to drive autonomously on the road.

The self-driving car testing process mainly consists of three stages: offline testing, vehicle-in-the-loop (VIL) testing, and road-in-the-loop (RIL) testing. Software, hardware, and vehicles are tested layer by layer to ensure the safety of the autonomous driving system on the road. In the offline testing phase, every line of code can be tested fully and promptly. Whenever the software is modified, the system automatically triggers each test step in turn, and only after the safety criteria for on-vehicle testing are met does the build move on to the vehicle-in-the-loop and road-in-the-loop stages. If problems are found during road-in-the-loop testing, the next round of code changes is made and a new cycle begins. Round after round of this closed loop keeps improving autonomous driving capability.
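
The gating described above, where a build advances only when the current stage meets its pass criteria, can be sketched as an ordered list of checks. The stage checks and the `build` dictionary are hypothetical placeholders for real test results.

```python
def run_pipeline(stages, build):
    """Run test stages in order and stop at the first stage that fails.

    stages - list of (name, check) where check(build) returns True if the stage passes.
    Returns (names_of_passed_stages, name_of_failed_stage_or_None).
    """
    passed = []
    for name, check in stages:
        if not check(build):
            return passed, name  # the failure feeds the next round of code changes
        passed.append(name)
    return passed, None


# Hypothetical results for one build and hypothetical pass criteria per stage.
build = {"offline_failures": 0, "vil_failures": 0, "ril_failures": 2}
stages = [
    ("offline (MIL/SIL/HIL)", lambda b: b["offline_failures"] == 0),
    ("VIL", lambda b: b["vil_failures"] == 0),
    ("RIL", lambda b: b["ril_failures"] == 0),
]
print(run_pipeline(stages, build))
```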

Offline testing

Offline testing refers to testing that does not involve the vehicle; most of the work is done in the laboratory. This stage includes model-in-the-loop (MIL), software-in-the-loop (SIL), and hardware-in-the-loop (HIL) testing.

Model-in-the-loop testing uses large-scale datasets to accurately evaluate core algorithm models such as perception, prediction, positioning, and control, and measures changes in model capability through various metrics after evaluation. Automated mining exposes algorithm problems and bad cases at an early stage so that they are not carried over into later testing.
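
The metric comparison and bad-case mining in model-in-the-loop testing can be sketched as follows. The metric names, the tolerance, and the idea of ranking samples by a per-sample error are assumptions for illustration.

```python
def compare_models(baseline, candidate, higher_is_better, max_drop=0.0):
    """Flag metrics where the candidate is worse than the baseline by more than max_drop.

    baseline / candidate - dict of metric name -> value
    higher_is_better     - dict of metric name -> bool
    Returns {metric: (baseline_value, candidate_value)} for each regression.
    """
    regressions = {}
    for metric, base in baseline.items():
        new = candidate[metric]
        # Positive delta means "better", whichever direction the metric runs.
        delta = new - base if higher_is_better[metric] else base - new
        if delta < -max_drop:
            regressions[metric] = (base, new)
    return regressions


def mine_bad_cases(per_sample_errors, threshold):
    """Return ids of samples whose error exceeds the threshold, worst first."""
    bad = [(err, sid) for sid, err in per_sample_errors.items() if err > threshold]
    return [sid for err, sid in sorted(bad, reverse=True)]


# Hypothetical evaluation results.
baseline = {"recall": 0.95, "position_error_m": 0.20}
candidate = {"recall": 0.96, "position_error_m": 0.25}
direction = {"recall": True, "position_error_m": False}
print(compare_models(baseline, candidate, direction, max_drop=0.01))
print(mine_bad_cases({"frame_001": 0.1, "frame_002": 0.9, "frame_003": 0.5}, 0.3))
```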

In the software-in-the-loop stage, simulation testing is a key link in the autonomous driving test system. Massive road test data are fed into the simulation system, and the effect of new algorithms is verified through repeated regression testing. At the same time, a large number of extreme scenarios are constructed in the simulation system, and a single scenario is automatically expanded into large numbers of scenarios by varying its parameters, improving test coverage. The simulation platform also has a comprehensive evaluation system that automatically detects collisions, traffic violations, ride-comfort problems, and unreasonable routes that occur during simulation.
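
Expanding one seed scenario into many by sweeping its parameters is essentially a Cartesian product. The scenario fields and value ranges below are hypothetical.

```python
import itertools


def expand_scenario(base, sweeps):
    """Yield variants of a seed scenario over the Cartesian product of parameter values.

    base   - dict of fixed scenario parameters
    sweeps - dict mapping parameter name -> list of values to try
    """
    names = list(sweeps)
    for values in itertools.product(*(sweeps[n] for n in names)):
        variant = dict(base)  # copy so variants do not share state
        variant.update(zip(names, values))
        yield variant


# Hypothetical seed: a pedestrian stepping out from behind an occluding vehicle.
seed_scenario = {"type": "pedestrian_from_occlusion", "road": "urban_two_lane"}
sweeps = {
    "pedestrian_speed_mps": [1.0, 1.5, 2.5],
    "trigger_gap_m": [10, 20, 30, 40],
    "weather": ["clear", "rain"],
}
variants = list(expand_scenario(seed_scenario, sweeps))
print(len(variants), variants[0])
```

The variant count grows multiplicatively with each swept parameter, which is why coverage is usually managed by sampling or by focusing sweeps on the parameters most tied to risk.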

In the hardware-in-the-loop stage, software and hardware are integrated to test the compatibility and reliability of the combined system. Hardware failures occur with a certain probability and some randomness, so in this stage thousands of real-world scenarios are reproduced using a combination of real and virtual hardware, and the autonomous driving system is tested continuously 24 hours a day. Stress testing simulates the system's performance and stability under different resource limits (for example, insufficient GPU resources or excessive CPU usage). A large number of hardware faults are also simulated at this stage, such as hardware failure, power outage, frame loss, and abnormal upstream and downstream interfaces, to test how the system responds and to ensure that it meets ISO 26262 functional safety requirements.
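
Fault injection such as frame loss can be exercised with a seeded, reproducible harness. In this sketch a hypothetical consumer keeps using the last good frame but switches to degraded mode after too many consecutive drops; the drop probability, the threshold and the mode names are assumptions.

```python
import random


def run_with_frame_drops(n_frames, drop_prob, max_stale_frames, seed=0):
    """Replay n_frames sensor ticks, dropping each with probability drop_prob.

    Returns the mode per tick: "normal" while the consumer can bridge the gap with
    the last good frame, "degraded" after more than max_stale_frames drops in a row.
    """
    rng = random.Random(seed)  # seeded so any failing run can be reproduced exactly
    stale = 0
    modes = []
    for _ in range(n_frames):
        if rng.random() < drop_prob:
            stale += 1   # frame lost
        else:
            stale = 0    # fresh frame arrived
        modes.append("degraded" if stale > max_stale_frames else "normal")
    return modes


modes = run_with_frame_drops(n_frames=1000, drop_prob=0.2, max_stale_frames=2, seed=42)
print(modes.count("degraded"), "degraded ticks out of", len(modes))
```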

Vehicle-in-the-loop testing

The vehicle-in-the-loop phase starts with bench testing: the vehicle's drive-by-wire control functions, performance and stability are tested on the test bench to ensure that the autonomous driving system can control the vehicle as intended. After the drive-by-wire tests are complete, VIL testing moves to a closed site, where mixed virtual and real scenarios based on real roads are built to test the autonomous driving system's performance on the real vehicle.

Road-in-the-loop testing

After passing the offline and vehicle-in-the-loop stages (each with strict pass criteria), testing moves to a closed site where real scenes are built to test the vehicle's autonomous driving capability and safety. The closed test site covers common urban roads and highways, including straight roads, curves, intersections, slopes, tunnels and parking lots. In addition, various low-frequency scenarios are constructed with test equipment such as pedestrian dummies and dummy vehicles. Such scenarios do occur on public roads, but so rarely that they are hard to verify fully on open roads; examples include bicycles traveling against traffic, pedestrians suddenly rushing out, and standing water on the road.

Open road testing is the final step of road-in-the-loop testing and an essential step that autonomous vehicles must pass to complete testing and evaluation. It is carried out step by step: the latest system is usually deployed on a small number of vehicles first and rolled out to a larger fleet only after it has been confirmed to be safe. Continuous testing and verification of large autonomous fleets on real roads forms a closed loop between real road scenarios and autonomous driving capability, so that autonomous vehicles keep improving in intelligence and safety and gradually approach the point where they can reach thousands of households.
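
The step-by-step deployment above resembles a staged (canary) rollout: the new version moves to a larger fleet only while an observed issue rate stays below an acceptance threshold. The stage sizes, the rate function and the threshold are hypothetical, and a real release decision would also require enough mileage for the rate to be statistically meaningful.

```python
def staged_rollout(stage_sizes, observed_rate, max_rate):
    """Return the largest fleet size whose observed issue rate met the threshold.

    stage_sizes   - increasing fleet sizes to try in order
    observed_rate - function(fleet_size) -> issue rate measured at that stage
    max_rate      - acceptance threshold for promoting to the next stage
    Returns 0 if even the first stage fails.
    """
    deployed = 0
    for size in stage_sizes:
        if observed_rate(size) > max_rate:
            break  # stop expanding and stay at (roll back to) the last good stage
        deployed = size
    return deployed


# Hypothetical measurements per stage.
rates = {5: 0.0, 50: 0.02, 500: 0.3}
print(staged_rollout([5, 50, 500], rates.get, max_rate=0.1))
```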

The above is the detailed content of An article discussing the safety technical features of self-driving cars. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1382

1382

52

52

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

Yesterday during the interview, I was asked whether I had done any long-tail related questions, so I thought I would give a brief summary. The long-tail problem of autonomous driving refers to edge cases in autonomous vehicles, that is, possible scenarios with a low probability of occurrence. The perceived long-tail problem is one of the main reasons currently limiting the operational design domain of single-vehicle intelligent autonomous vehicles. The underlying architecture and most technical issues of autonomous driving have been solved, and the remaining 5% of long-tail problems have gradually become the key to restricting the development of autonomous driving. These problems include a variety of fragmented scenarios, extreme situations, and unpredictable human behavior. The "long tail" of edge scenarios in autonomous driving refers to edge cases in autonomous vehicles (AVs). Edge cases are possible scenarios with a low probability of occurrence. these rare events

How to Undo Delete from Home Screen in iPhone

Apr 17, 2024 pm 07:37 PM

How to Undo Delete from Home Screen in iPhone

Apr 17, 2024 pm 07:37 PM

Deleted something important from your home screen and trying to get it back? You can put app icons back on the screen in a variety of ways. We have discussed all the methods you can follow and put the app icon back on the home screen. How to Undo Remove from Home Screen in iPhone As we mentioned before, there are several ways to restore this change on iPhone. Method 1 – Replace App Icon in App Library You can place an app icon on your home screen directly from the App Library. Step 1 – Swipe sideways to find all apps in the app library. Step 2 – Find the app icon you deleted earlier. Step 3 – Simply drag the app icon from the main library to the correct location on the home screen. This is the application diagram

The role and practical application of arrow symbols in PHP

Mar 22, 2024 am 11:30 AM

The role and practical application of arrow symbols in PHP

Mar 22, 2024 am 11:30 AM

The role and practical application of arrow symbols in PHP In PHP, the arrow symbol (->) is usually used to access the properties and methods of objects. Objects are one of the basic concepts of object-oriented programming (OOP) in PHP. In actual development, arrow symbols play an important role in operating objects. This article will introduce the role and practical application of arrow symbols, and provide specific code examples to help readers better understand. 1. The role of the arrow symbol to access the properties of an object. The arrow symbol can be used to access the properties of an object. When we instantiate a pair

Let's talk about end-to-end and next-generation autonomous driving systems, as well as some misunderstandings about end-to-end autonomous driving?

Apr 15, 2024 pm 04:13 PM

Let's talk about end-to-end and next-generation autonomous driving systems, as well as some misunderstandings about end-to-end autonomous driving?

Apr 15, 2024 pm 04:13 PM

In the past month, due to some well-known reasons, I have had very intensive exchanges with various teachers and classmates in the industry. An inevitable topic in the exchange is naturally end-to-end and the popular Tesla FSDV12. I would like to take this opportunity to sort out some of my thoughts and opinions at this moment for your reference and discussion. How to define an end-to-end autonomous driving system, and what problems should be expected to be solved end-to-end? According to the most traditional definition, an end-to-end system refers to a system that inputs raw information from sensors and directly outputs variables of concern to the task. For example, in image recognition, CNN can be called end-to-end compared to the traditional feature extractor + classifier method. In autonomous driving tasks, input data from various sensors (camera/LiDAR

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

Written in front & starting point The end-to-end paradigm uses a unified framework to achieve multi-tasking in autonomous driving systems. Despite the simplicity and clarity of this paradigm, the performance of end-to-end autonomous driving methods on subtasks still lags far behind single-task methods. At the same time, the dense bird's-eye view (BEV) features widely used in previous end-to-end methods make it difficult to scale to more modalities or tasks. A sparse search-centric end-to-end autonomous driving paradigm (SparseAD) is proposed here, in which sparse search fully represents the entire driving scenario, including space, time, and tasks, without any dense BEV representation. Specifically, a unified sparse architecture is designed for task awareness including detection, tracking, and online mapping. In addition, heavy

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

Target detection is a relatively mature problem in autonomous driving systems, among which pedestrian detection is one of the earliest algorithms to be deployed. Very comprehensive research has been carried out in most papers. However, distance perception using fisheye cameras for surround view is relatively less studied. Due to large radial distortion, standard bounding box representation is difficult to implement in fisheye cameras. To alleviate the above description, we explore extended bounding box, ellipse, and general polygon designs into polar/angular representations and define an instance segmentation mIOU metric to analyze these representations. The proposed model fisheyeDetNet with polygonal shape outperforms other models and simultaneously achieves 49.5% mAP on the Valeo fisheye camera dataset for autonomous driving

From beginner to proficient: Explore various application scenarios of Linux tee command

Mar 20, 2024 am 10:00 AM

From beginner to proficient: Explore various application scenarios of Linux tee command

Mar 20, 2024 am 10:00 AM

The Linuxtee command is a very useful command line tool that can write output to a file or send output to another command without affecting existing output. In this article, we will explore in depth the various application scenarios of the Linuxtee command, from entry to proficiency. 1. Basic usage First, let’s take a look at the basic usage of the tee command. The syntax of tee command is as follows: tee[OPTION]...[FILE]...This command will read data from standard input and save the data to

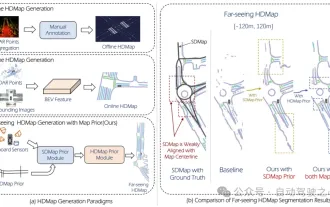

Mass production killer! P-Mapnet: Using the low-precision map SDMap prior, the mapping performance is violently improved by nearly 20 points!

Mar 28, 2024 pm 02:36 PM

Mass production killer! P-Mapnet: Using the low-precision map SDMap prior, the mapping performance is violently improved by nearly 20 points!

Mar 28, 2024 pm 02:36 PM

As written above, one of the algorithms used by current autonomous driving systems to get rid of dependence on high-precision maps is to take advantage of the fact that the perception performance in long-distance ranges is still poor. To this end, we propose P-MapNet, where the “P” focuses on fusing map priors to improve model performance. Specifically, we exploit the prior information in SDMap and HDMap: on the one hand, we extract weakly aligned SDMap data from OpenStreetMap and encode it into independent terms to support the input. There is a problem of weak alignment between the strictly modified input and the actual HD+Map. Our structure based on the Cross-attention mechanism can adaptively focus on the SDMap skeleton and bring significant performance improvements;