This talk systematically analyzes the main performance bottlenecks in AI model training, the current mainstream acceleration solutions for these bottlenecks and the technical principles behind them, and some of the practical results Baidu Intelligent Cloud has achieved in this area.

Today’s talk has three parts:

The first part explains why we need to accelerate AI training, that is, the overall background and motivation.

The second part systematically analyzes the performance bottlenecks that may arise during actual training, and then introduces the main acceleration solutions for these problems.

The third part presents the practical effect of AIAK-Training, the AI training acceleration suite of the Baidu Baige platform, on accelerating the training of several models.

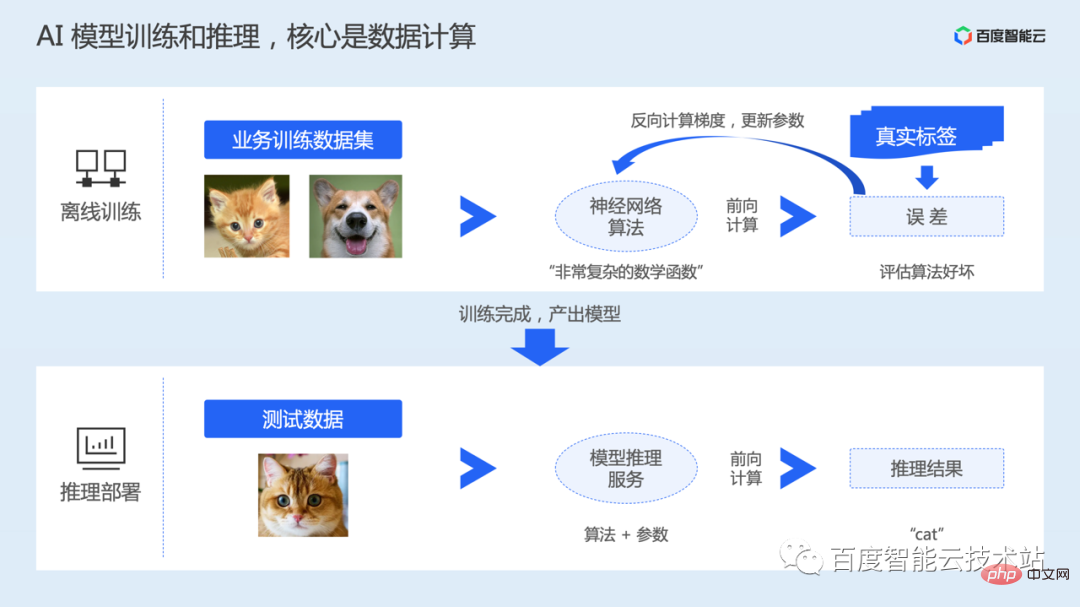

In an AI system, a model generally goes through two stages on its way from production to application: offline training and inference deployment.

The offline training stage is where the model is produced. Users prepare the datasets and the neural network algorithm needed to train the model, according to their own task scenarios.

The algorithm can be understood as a highly complex, non-convex mathematical function with many variables and parameters. Training a model is essentially learning the parameters of this neural network.

Once training starts, data is read and fed into the model for the forward computation, and the error (loss) against the ground-truth values is computed. The backward computation then produces the gradients of the parameters, and finally the parameters are updated. Training repeats this over many iterations of the data.
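A minimal sketch of this loop follows. It assumes NumPy, a plain linear model, and mean squared error standing in for a real neural network; the function name `train_linear_regression` is hypothetical. Each inner iteration reads a batch, runs the forward computation, measures the error, computes gradients in the backward step, and updates the parameters:

```python
import numpy as np


def train_linear_regression(x, y, lr=0.1, epochs=50, batch_size=32, seed=0):
    """Toy training loop: read batch -> forward -> loss -> backward -> update."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=x.shape[1])  # parameters to be learned
    b = 0.0
    losses = []
    for _ in range(epochs):  # multiple passes over the data
        order = rng.permutation(len(y))
        for start in range(0, len(y), batch_size):
            idx = order[start:start + batch_size]     # read one batch
            xb, yb = x[idx], y[idx]
            err = xb @ w + b - yb                     # forward: prediction minus ground truth
            losses.append(float(np.mean(err ** 2)))   # loss (mean squared error)
            grad_w = 2.0 * xb.T @ err / len(yb)       # backward: dLoss/dw
            grad_b = 2.0 * float(np.mean(err))        # backward: dLoss/db
            w = w - lr * grad_w                       # parameter update (plain SGD)
            b = b - lr * grad_b
    return w, b, losses


rng = np.random.default_rng(42)
x = rng.normal(size=(256, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = x @ true_w + 0.3
w, b, losses = train_linear_regression(x, y)
```

In a real framework, autograd produces the gradients in the backward step. The structure of the loop is otherwise the same.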

After training completes, we save the trained model and deploy it online. There it receives real user inputs and performs inference through the forward computation.

So whether in training or inference, the core workload is computation on data. To improve computing efficiency, both are generally run on heterogeneous accelerator chips such as GPUs.

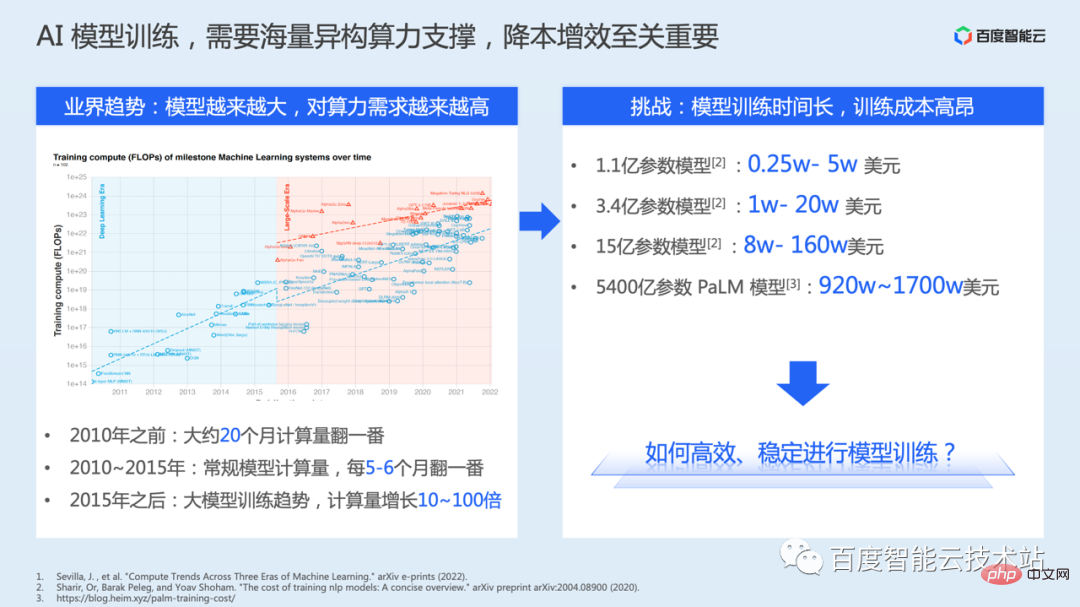

In addition, the history of deep learning shows that the number of model parameters has been growing rapidly as researchers keep pushing the upper limit of model accuracy. However, more parameters mean more computation.

The left side of the figure below is taken from a public paper. According to its summary, before 2010 the compute used to train models doubled roughly every 20 months. From 2010 to 2015, the compute of conventional models doubled every 5 to 6 months. After 2015, large-model training emerged, and the amount of compute jumped by 10 to 100 times.

Model training places ever higher demands on computing power and infrastructure. Training needs more compute and takes longer, which in turn means higher resource costs. Here we list cost data disclosed in some papers and studies, which shows how expensive model training is.

Therefore, training models stably while continuously reducing costs and improving efficiency is crucial.

Against this background, Baidu Intelligent Cloud launched the Baidu Baige·AI heterogeneous computing platform, which aims to provide integrated software and hardware solutions for AI scenarios. Its four-layer technology stack (AI computing, AI storage, AI acceleration, and AI containers) serves the needs of upper-level business scenarios.

When we think about performance acceleration, the first idea that may come to mind is to use better hardware.

Better hardware does bring some performance improvement. In most cases, however, the hardware's computing power is not fully utilized, because the execution efficiency of the training code has not been tuned to an optimal, or even a good, state.

Therefore, once we decide on a model algorithm, we need to optimize it deliberately to achieve better resource efficiency and training efficiency. However, this involves many technical challenges:

These challenges make model training performance hard to tune, so we launched the AIAK-Training acceleration suite. It aims to lower the cost of optimization through abstract, easy-to-use interfaces, and to fully accelerate customers' model training on Baidu Intelligent Cloud through software-hardware co-optimization.

Before presenting the specific effects of AIAK-Training, we first introduce the key issues in training acceleration, together with the technical ideas and principles behind the solutions.

Model training optimization is a comprehensive software and hardware effort with a fairly complex technology stack, so today's talk cannot cover every detail. We will focus on the key ideas.

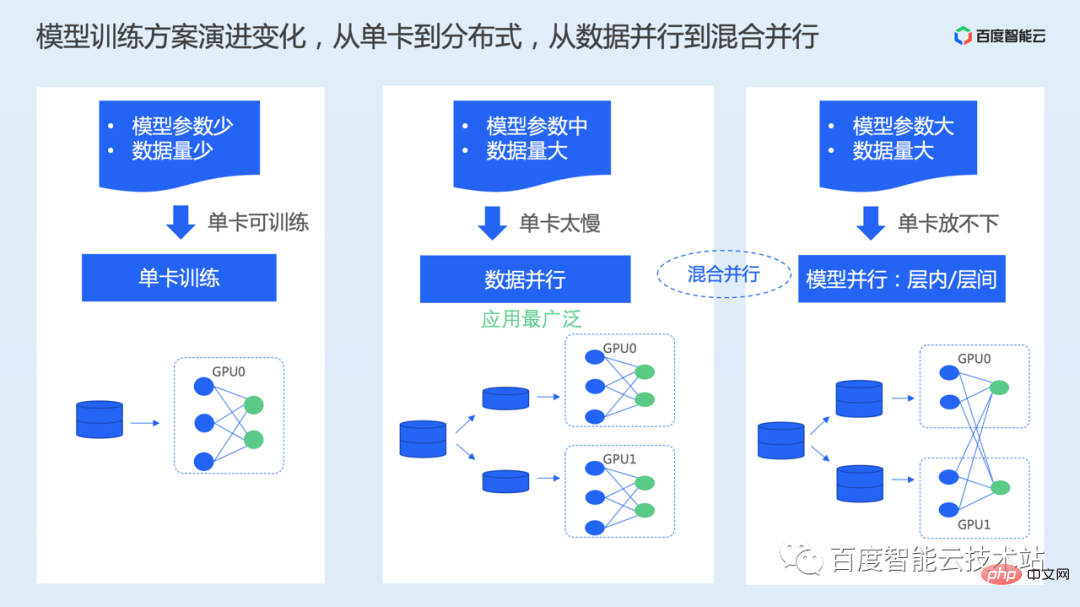

First, let’s look at current model training schemes. Model training solutions have gone through two key changes: from single-card training to distributed training, and from data-parallel training to multi-dimensional hybrid-parallel training. The main drivers are the amount of training data and the number of model parameters.

The most widely used scheme is data parallelism. Under data parallelism, the dataset is evenly divided into multiple parts and each card holds a complete copy of the model. Each card then processes its own sub-dataset independently and in parallel.
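How the dataset is divided can be sketched in plain Python, assuming each worker only needs a list of sample indices. The helper `shard_indices` is hypothetical. It roughly mirrors what PyTorch's `DistributedSampler` does with shuffling disabled: it pads by wrapping around so every card gets the same number of samples, then strides through the list.

```python
def shard_indices(num_samples, world_size, rank):
    """Indices of the samples that data-parallel worker `rank` should process.

    Wraps around to pad the index list so that every worker gets the same number
    of samples, then gives worker `rank` every `world_size`-th index.
    """
    per_rank = -(-num_samples // world_size)   # ceiling division
    total = per_rank * world_size
    indices = list(range(num_samples))
    while len(indices) < total:                # pad by repeating from the start
        indices += indices[: total - len(indices)]
    return indices[rank:total:world_size]


shards = [shard_indices(10, world_size=4, rank=r) for r in range(4)]
# shards == [[0, 4, 8], [1, 5, 9], [2, 6, 0], [3, 7, 1]]
```

Padding with repeated samples keeps per-card batch counts identical. The cost is that a few samples are seen twice per epoch.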

When the number of model parameters becomes large enough, for example tens or hundreds of billions, a single card can no longer hold the complete model. This gave rise to model parallelism, as well as hybrid parallel schemes that use data parallelism and model parallelism at the same time.

Model parallelism splits the model across cards, so that each card holds only part of it. Depending on how the model is split, it is further divided into tensor parallelism (intra-layer splitting) and pipeline parallelism (inter-layer splitting).
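Intra-layer (tensor) splitting can be illustrated with NumPy, simulating two "cards" in one process. The shapes are arbitrary assumptions. Splitting one linear layer's weight by columns or by rows still reproduces the full result, with an all-gather or an all-reduce respectively combining the partial outputs:

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))   # activations: batch of 4, hidden size 8
w = rng.normal(size=(8, 6))   # full weight of one linear layer
num_cards = 2

# Column split: each card holds w[:, cols] and produces part of the output features.
w_cols = np.split(w, num_cards, axis=1)
y_col = np.concatenate([x @ w_i for w_i in w_cols], axis=1)   # all-gather of partial outputs

# Row split: each card holds w[rows, :] plus the matching slice of the input features.
w_rows = np.split(w, num_cards, axis=0)
x_cols = np.split(x, num_cards, axis=1)
y_row = sum(x_i @ w_i for x_i, w_i in zip(x_cols, w_rows))   # all-reduce (sum) of partials
```

Pipeline parallelism instead places whole layers on different cards and passes activations between them.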

Because data parallelism is the more common choice in general model training, we will mainly use it as the example when introducing performance optimization ideas.

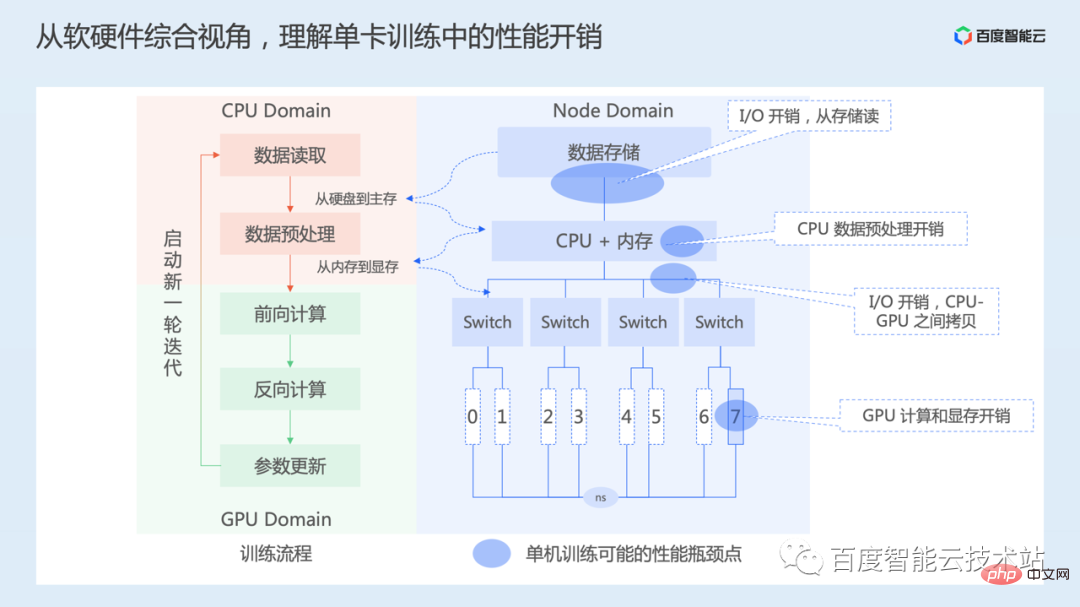

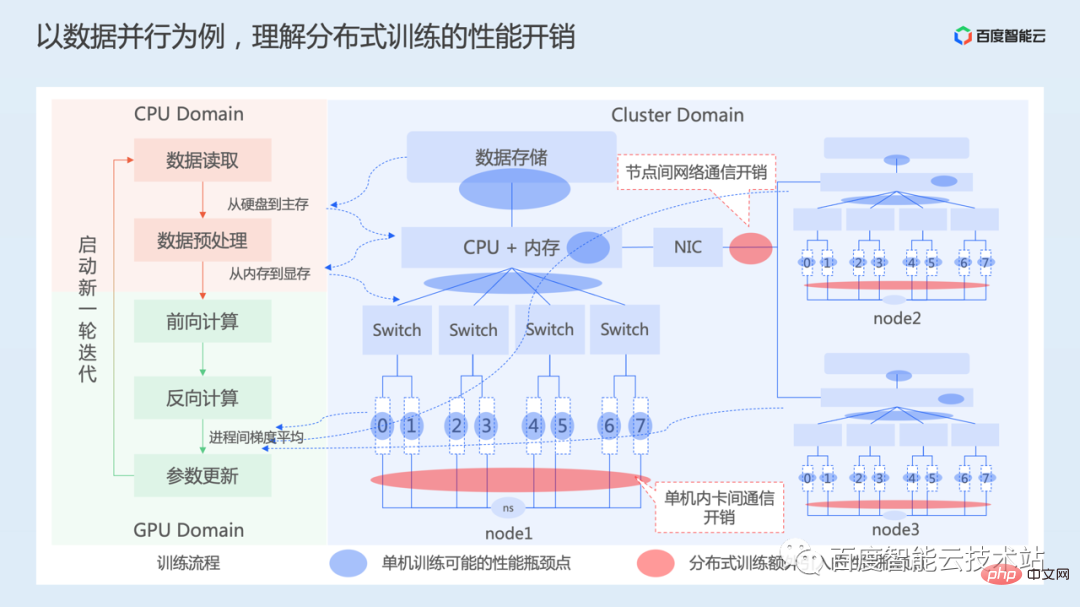

Let’s first look at the performance overheads of single-card training from the overall perspective of software and hardware.

The left side of the picture below shows the training process from a software perspective. Single-card training consists mainly of reading data, preprocessing it, running the forward computation to produce outputs and compute the loss, running the backward computation from the loss function to obtain the gradients of each layer's parameters, and finally updating the model parameters with those gradients. This process repeats until training converges.

The right side of the figure below is a simplified hardware topology of a node. At the top is data storage, which can be local or network storage. Below it are the CPU and memory. Eight GPU cards, numbered 0 to 7, connect to the CPU through several PCIe switches and are interconnected through NVSwitch. Different compute instances have different hardware topologies.

Therefore, from a single-card perspective, the main overheads are I/O, CPU preprocessing, data copies between CPU and GPU, and GPU computation.

Next, let’s look at the data-parallel process.

The left side of the figure below is still the main training process, and the right side shows the hardware topology of a training cluster of 3 machines with 24 GPUs. The 3 machines are interconnected through the network.

As introduced earlier, in data parallelism each device runs the forward and backward computations independently and in parallel. Each training process therefore also incurs the single-card performance overheads described above.

For data-parallel training to be mathematically equivalent to single-card training, the model parameters on every card must stay identical throughout the iterations. On the one hand, the parameters must be initialized identically on every GPU. This is usually done by broadcasting the parameter state of the first card to the other cards before training starts.

On the other hand, each device processes different data during training, so the loss values from the forward computation differ. Therefore, after each device finishes its backward computation, the gradients are averaged across devices, and the averaged gradients are used to update the model parameters. This keeps the parameters on every card consistent across iterations.
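A minimal NumPy sketch can check this equivalence on a linear model with mean squared error (an assumption chosen only so the gradient has a closed form). Averaging the per-card gradients of equal-sized shards and applying the same update on every replica gives exactly the single-card, full-batch step:

```python
import numpy as np


def mse_grad(w, x, y):
    """Gradient of the mean squared error of a linear model x @ w against targets y."""
    return 2.0 * x.T @ (x @ w - y) / len(y)


rng = np.random.default_rng(0)
x = rng.normal(size=(64, 5))
y = rng.normal(size=64)
w0 = rng.normal(size=5)
world_size, lr = 4, 0.05

replicas = [w0.copy() for _ in range(world_size)]       # broadcast: identical initial params
x_shards = np.split(x, world_size)                      # equal-sized data shards
y_shards = np.split(y, world_size)

local_grads = [mse_grad(w, xs, ys) for w, xs, ys in zip(replicas, x_shards, y_shards)]
avg_grad = np.mean(local_grads, axis=0)                 # AllReduce(sum) / world_size
replicas = [w - lr * avg_grad for w in replicas]        # same update on every card

w_single = w0 - lr * mse_grad(w0, x, y)                 # one single-card, full-batch step
```

The equivalence relies on the shards being the same size. With unequal shards, a plain average of the per-card means no longer equals the full-batch mean.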

Gradient averaging involves communication, both between cards within a node and over the network across nodes. Communication can be synchronous or asynchronous. However, synchronous communication is generally used to guarantee convergence of model training, and the optimization work discussed below also assumes synchronous communication.

It follows that, compared with single-card training, data parallelism mainly adds communication overhead.

The analysis above shows that accelerating AI training is not a single piece of work. It requires system-level consideration of data loading, model computation, distributed communication, and so on. Data loading here includes data I/O, preprocessing, memory copies, and related steps.

In practice, given a model to optimize, accelerating training means continuously improving the overall training throughput (the number of samples trained per second). We can generally start by analyzing single-card throughput. Once that has improved, we scale from one card to multiple cards and look at how to improve the multi-card speedup ratio.

For single-card training, the ultimate goal is to spend all time on GPU computation, with 100% accelerator utilization. This is hard to achieve fully in practice, but the metric is useful for guiding and measuring our work.

The key to single-card performance optimization includes two parts:

When scaling from a single card to multiple cards, the goal is a linear speedup ratio. Put simply, linear speedup means that training performance doubles when training scales from 1 card to 2 cards.
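The speedup ratio and the related scaling efficiency are simple ratios of measured throughputs. In the sketch below, the helper name and the throughput numbers are hypothetical:

```python
def speedup_and_efficiency(single_card_throughput, multi_card_throughput, num_cards):
    """Return (speedup ratio, scaling efficiency); efficiency 1.0 means linear scaling."""
    speedup = multi_card_throughput / single_card_throughput
    return speedup, speedup / num_cards


# Hypothetical numbers: 400 samples/s on 1 card, 3000 samples/s on 8 cards.
speedup, efficiency = speedup_and_efficiency(400.0, 3000.0, 8)
# speedup == 7.5, efficiency == 0.9375
```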

The core task here is improving the efficiency of distributed communication. Part of this is hardware-level optimization. Beyond that, for the actual communication we need to consider how to make good use of the network's bandwidth, whether the communication can be hidden, and so on.

Below we will expand on these aspects in detail.

The first is the optimization of data loading.

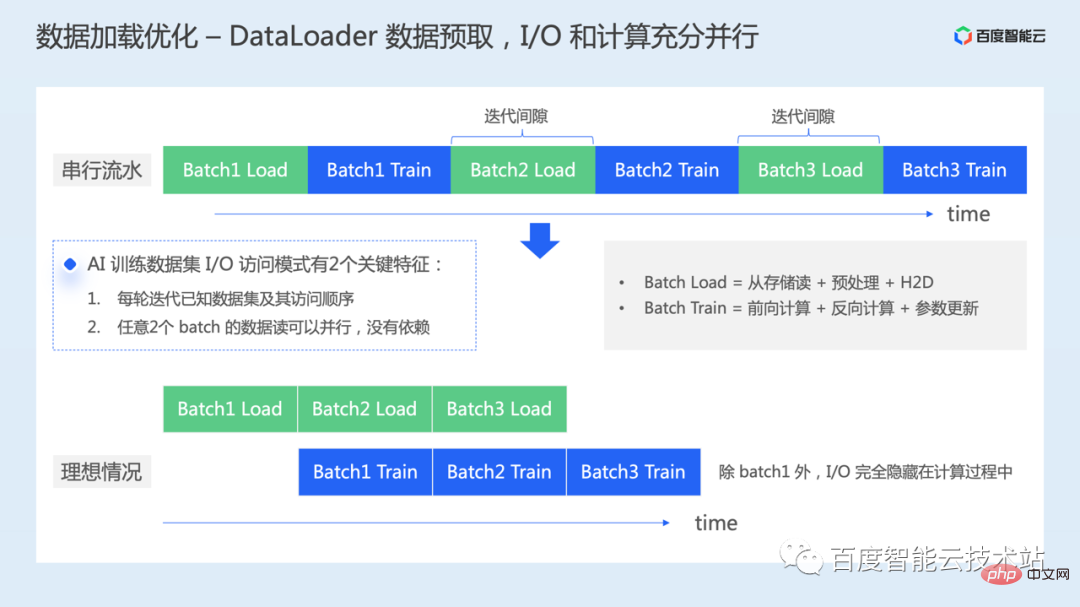

After instantiating a dataloader, we keep iterating over it to read batches of data for model training.

Without any optimization, as shown in the upper part of the figure below, loading each batch and training on that batch happen serially. From the GPU's perspective, data loading leaves gaps in computation and wastes compute resources.

Besides using better hardware to directly speed up data reading, as mentioned earlier, how else can we optimize this?

In fact, data access during AI training has two key characteristics:

Therefore, without relying on hardware changes, one optimization we can make is data prefetching: while the first batch is being trained, the next batch is loaded in advance. This lets I/O and GPU computation run fully in parallel.
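The prefetching idea can be sketched with only the Python standard library. A background thread keeps a small bounded queue filled while the consumer works on the current batch. The names `prefetch` and `slow_loader` are hypothetical, and `time.sleep` stands in for I/O:

```python
import queue
import threading
import time


def prefetch(batches, depth=2):
    """Yield items from `batches` while a background thread loads up to `depth` ahead."""
    buf = queue.Queue(maxsize=depth)   # bounded, so prefetching cannot run away with memory
    done = object()                    # sentinel marking the end of the data
    errors = []

    def producer():
        try:
            for item in batches:       # I/O and preprocessing happen on this thread
                buf.put(item)
        except BaseException as exc:   # hand loader errors over to the consumer
            errors.append(exc)
        finally:
            buf.put(done)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is done:
            if errors:
                raise errors[0]
            return
        yield item


def slow_loader(n):
    for i in range(n):
        time.sleep(0.01)               # stand-in for reading and decoding a batch
        yield i


results = [batch * 2 for batch in prefetch(slow_loader(5))]  # stand-in for a training step
```

Threads overlap well with I/O-bound loading. CPU-heavy preprocessing is better served by worker processes, which is what a framework dataloader's `num_workers` provides.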

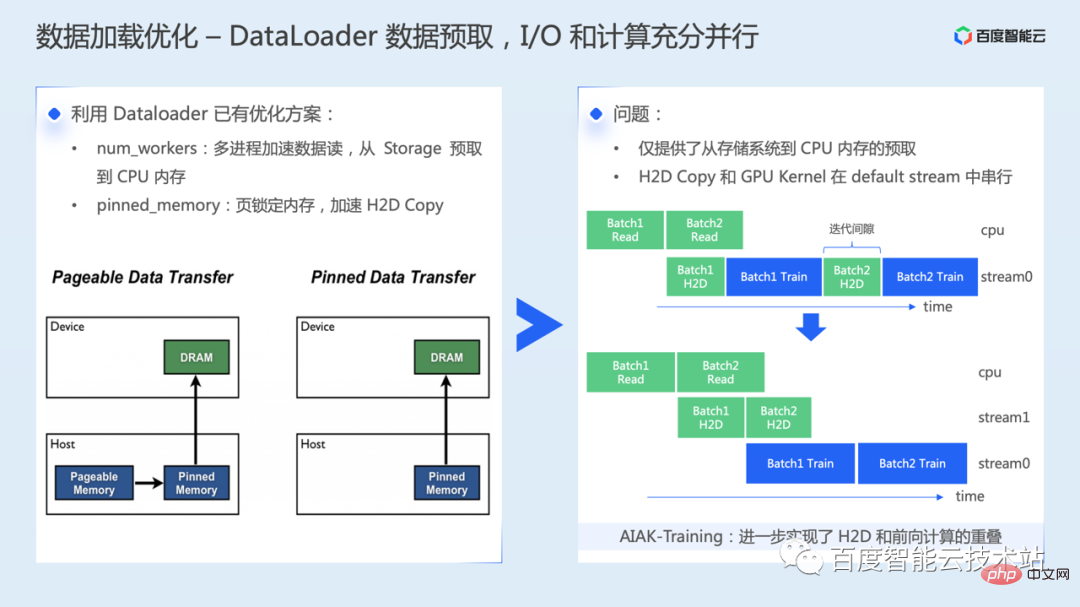

First, we should make good use of the optimizations the dataloader already provides. One is to set the num_workers hyperparameter sensibly so that data is read by multiple processes; this prefetches data from the storage system into host memory. The other concerns the copy from host memory to GPU memory, which can be accelerated with the pinned memory mechanism.

Here is briefly how pinned memory speeds things up. Host memory can be pageable or pinned (page-locked). Data in pageable memory may be swapped out to disk, in which case an H2D copy may first need to read it back from disk into memory before copying it to GPU memory. In addition, copying pageable data to GPU memory requires first creating a temporary pinned buffer, copying the data from pageable memory into it, and only then transferring it to the GPU. This adds an extra data transfer.
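In PyTorch, these two settings correspond to the real `DataLoader` arguments `num_workers` and `pin_memory`, together with `non_blocking=True` on the device copy. The sketch below is hedged: the helper name `build_loader` and the random dataset are hypothetical. `num_workers=0` is used only so it runs anywhere, and real jobs typically tune it upward.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def build_loader(dataset, batch_size, num_workers):
    use_pinned = torch.cuda.is_available()
    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,               # worker processes prefetch into host memory
        pin_memory=use_pinned,                 # return batches in page-locked host memory
        persistent_workers=num_workers > 0,    # keep workers alive between epochs
    )


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
dataset = TensorDataset(torch.randn(64, 3, 32, 32), torch.randint(0, 10, (64,)))
loader = build_loader(dataset, batch_size=16, num_workers=0)  # raise num_workers in real jobs

seen = 0
for images, labels in loader:
    # non_blocking=True lets the H2D copy run asynchronously when the source is pinned.
    images = images.to(device, non_blocking=True)
    labels = labels.to(device, non_blocking=True)
    seen += images.shape[0]
```

`persistent_workers` must be false when `num_workers` is 0, which is why it is derived from the worker count.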

However, these measures only prefetch data from the storage system into host memory and speed up the host-to-device copy. The host-to-device copy and the actual compute kernels still run serially on the GPU, so a small time gap remains on the GPU.

AIAK optimizes this further by overlapping the H2D copy with the forward computation.
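AIAK's implementation is not described here. A common pattern for this kind of overlap (for example, the `data_prefetcher` in NVIDIA Apex's ImageNet example) issues the next batch's copy on a side CUDA stream while the current batch computes on the default stream. The generator below sketches that pattern, assuming a loader that yields `(inputs, targets)` pairs from pinned memory. The function name is hypothetical, and it falls back to plain copies off-GPU.

```python
import torch


def overlapped_batches(loader, device):
    """Yield (inputs, targets) on `device`; on CUDA, copy batch i+1 while batch i computes."""
    if device.type != "cuda":                      # fallback: plain, synchronous copies
        for inputs, targets in loader:
            yield inputs.to(device), targets.to(device)
        return

    copy_stream = torch.cuda.Stream(device=device)

    def start_copy(batch):
        inputs, targets = batch
        with torch.cuda.stream(copy_stream):       # issue H2D copies on the side stream
            return (inputs.to(device, non_blocking=True),
                    targets.to(device, non_blocking=True))

    batches = iter(loader)
    try:
        pending = start_copy(next(batches))
    except StopIteration:
        return
    while pending is not None:
        compute_stream = torch.cuda.current_stream(device)
        compute_stream.wait_stream(copy_stream)    # wait only for the copy of this batch
        inputs, targets = pending
        inputs.record_stream(compute_stream)       # memory is now used by the compute stream
        targets.record_stream(compute_stream)
        try:
            pending = start_copy(next(batches))    # next copy overlaps the upcoming step
        except StopIteration:
            pending = None
        yield inputs, targets


cpu_batches = [(torch.full((2, 3), float(i)), torch.tensor([i, i])) for i in range(3)]
out = list(overlapped_batches(cpu_batches, torch.device("cpu")))
```

`record_stream` tells PyTorch's caching allocator that the compute stream uses tensors allocated on the copy stream, so their memory is not reused too early.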

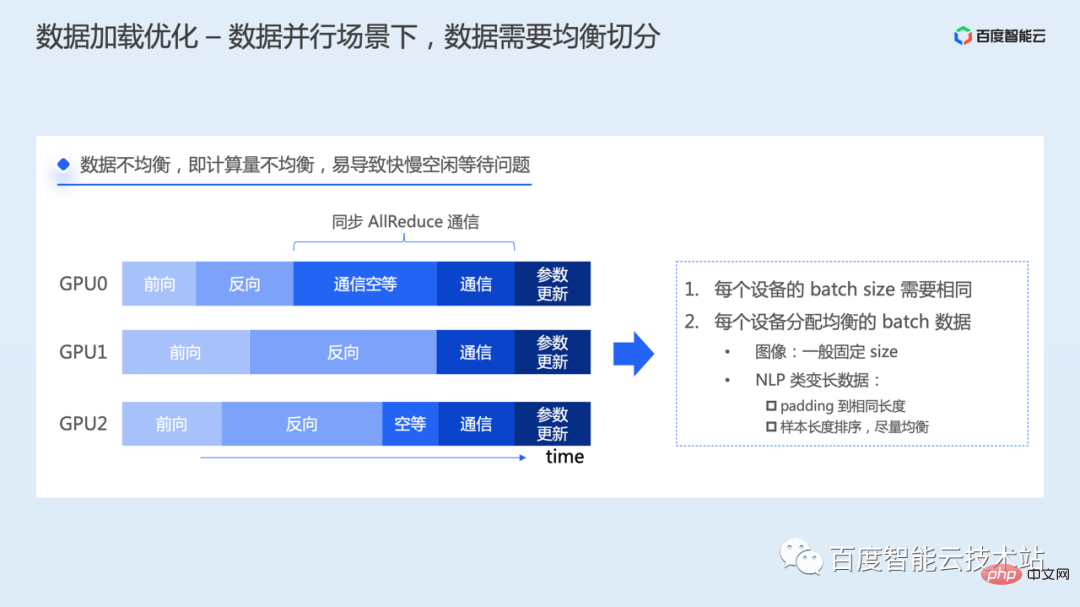

In data-parallel scenarios, one thing to watch is that the data must be split evenly.

If the data assigned to each training process is unbalanced, the amount of computation differs, so the processes finish their forward and backward computations at different times. The process that finishes first also enters gradient communication first during the backward computation. However, AllReduce is a synchronous operation that all processes must start and finish together. The process that starts first therefore waits until all the others have also initiated AllReduce before the communication can complete, and the speed difference leaves resources idle.

To solve this, every process needs to read data with the same batch size, and the amount of data in each batch must be balanced. Image data is generally trained at a fixed size, whereas NLP models process variable-length sentences and may need special handling. Examples include padding the data to the same length, or distributing samples evenly after sorting them by length.
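The two NLP techniques just mentioned can be sketched in plain Python; both helper names are hypothetical. `balanced_shards` sorts samples by length and deals each group of similar-length samples across ranks in alternating (snake) order, so every rank gets the same count and a similar token total. `pad_batch` pads a batch to its longest sequence.

```python
def balanced_shards(lengths, world_size):
    """Assign sample indices to ranks: equal counts and similar total lengths per rank."""
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    usable = len(order) - len(order) % world_size   # drop the remainder so counts match
    shards = [[] for _ in range(world_size)]
    for group_no, start in enumerate(range(0, usable, world_size)):
        group = order[start:start + world_size]     # world_size samples of similar length
        if group_no % 2 == 1:
            group = group[::-1]                     # snake order evens out the totals
        for rank, idx in enumerate(group):
            shards[rank].append(idx)
    return shards


def pad_batch(seqs, pad_id=0):
    """Pad variable-length token lists to the length of the longest one in the batch."""
    max_len = max(len(s) for s in seqs)
    return [s + [pad_id] * (max_len - len(s)) for s in seqs]


lengths = [5, 50, 12, 33, 8, 41, 27, 19]
shards = balanced_shards(lengths, world_size=2)
tokens_per_rank = [sum(lengths[i] for i in shard) for shard in shards]  # [101, 94]
```

A plain round-robin after sorting would always give rank 0 the longest sample of each group (110 vs 85 tokens here). Alternating the direction narrows that gap.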

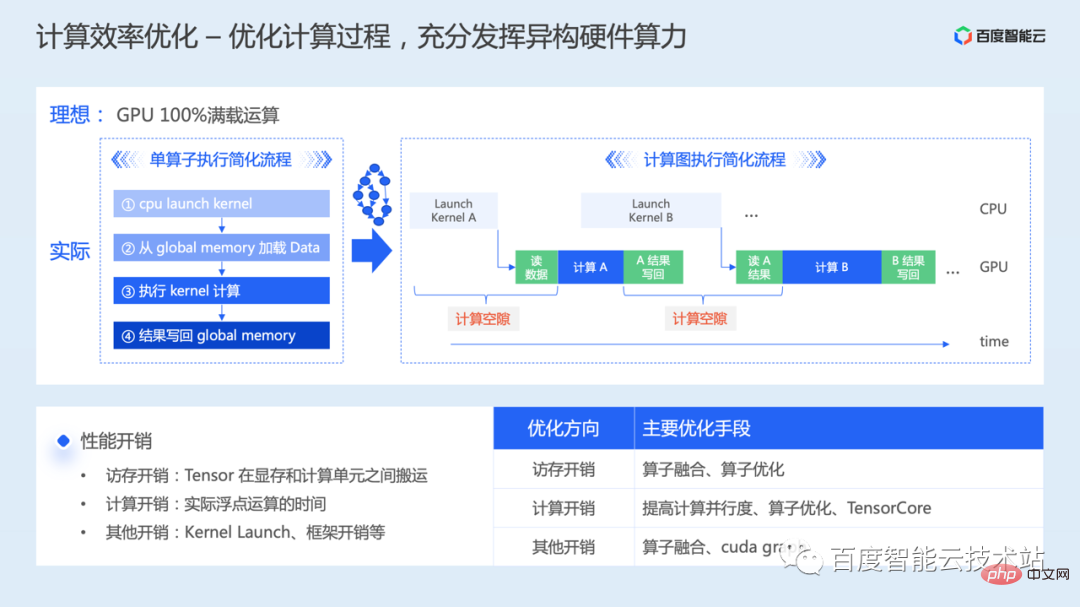

The following introduces the optimization of computing efficiency.

Computation includes the forward pass, the backward pass, and parameter updates. The goal of computation optimization is to fully exploit the computing power of heterogeneous hardware. Ideally, the GPU's actual computing performance reaches its theoretical peak.

Let’s start with a single operator. Running a computation on the GPU involves, in simplified form, four steps.

Depending on the relative share of computation and memory-access cost, operators are generally classified as compute-bound or memory-bound.
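A common way to make this classification concrete is a roofline-style check. Compare an operator's arithmetic intensity (FLOPs per byte moved) with the hardware's ridge point (peak FLOP/s divided by memory bandwidth). The peak numbers below are assumed A100 40GB figures, used only for illustration:

```python
# Assumed peak figures for an A100 40GB, used only for illustration.
PEAK_FLOPS = 312e12   # dense FP16 Tensor Core throughput, FLOP/s
PEAK_BW = 1.555e12    # HBM2 bandwidth, bytes/s


def classify(flops, bytes_moved, peak_flops=PEAK_FLOPS, peak_bw=PEAK_BW):
    """Roofline-style check: compare arithmetic intensity with the ridge point."""
    intensity = flops / bytes_moved   # FLOPs performed per byte of memory traffic
    ridge = peak_flops / peak_bw      # intensity at which compute and bandwidth limits meet
    return intensity, "compute-bound" if intensity >= ridge else "memory-bound"


n = 1 << 24
# Element-wise add of two FP16 tensors: 1 FLOP per element; read 2 and write 1 value (2 bytes each).
add_result = classify(flops=n, bytes_moved=3 * n * 2)
# FP16 GEMM with M = N = K = 4096: 2*M*N*K FLOPs; read A and B, write C once each.
m = 4096
gemm_result = classify(flops=2 * m ** 3, bytes_moved=3 * m * m * 2)
```

The element-wise add does about 0.17 FLOP per byte, far below the ridge point of roughly 200, so it is memory-bound. The large GEMM does about 1365 FLOP per byte and is compute-bound.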

Moving from a single operator to full model training means running many kernels back to back. Gaps then appear between kernel computations, caused by kernel launch overhead and by reading and writing intermediate results.

So optimizing model computation efficiency requires considering memory-access optimization, computation optimization, and the reduction of other overheads together.

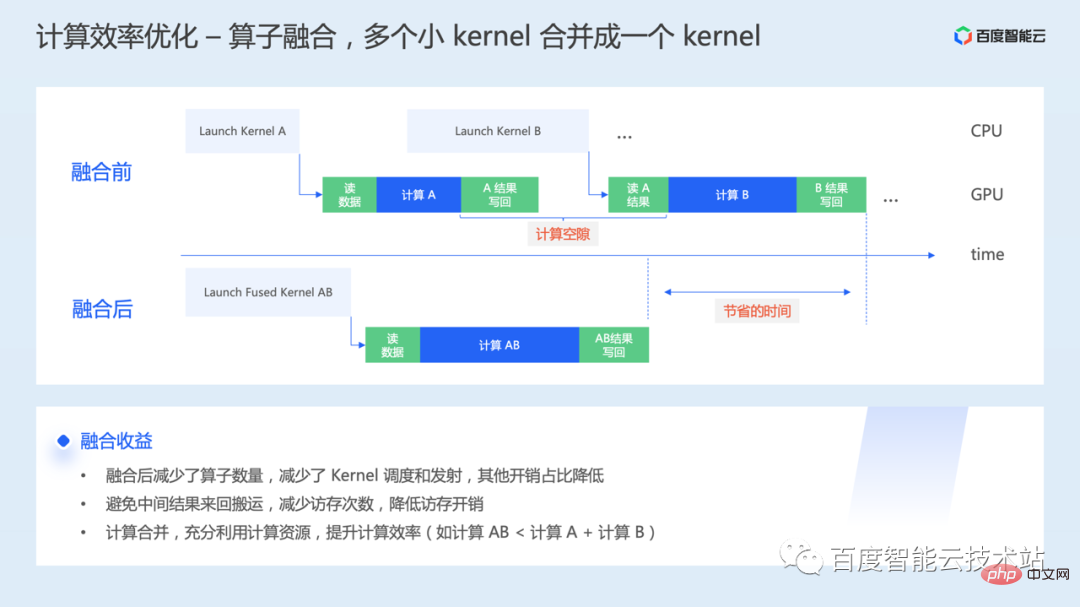

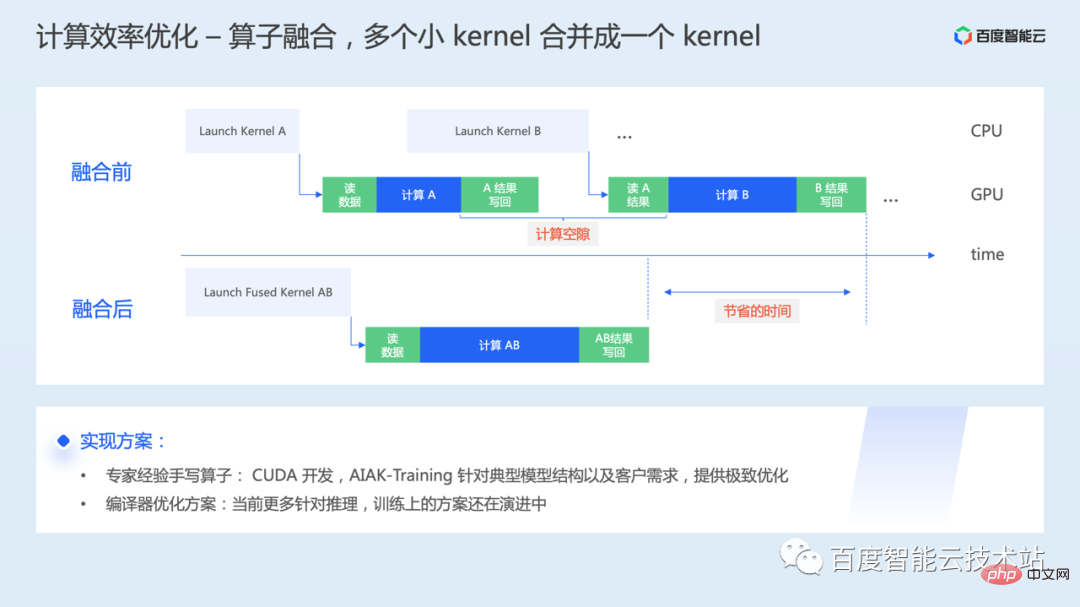

The first technique is operator fusion. When an operator runs on the GPU, it issues one or more kernel launches, and data passed between kernels must go through GPU memory. Operator fusion merges multiple GPU kernels into one larger kernel that is launched and executed as a unit.

How is operator fusion implemented in practice?

One approach is to analyze the inefficient operations in a model and hand-write fused operators based on expert experience. On GPUs this mainly means developing CUDA operators, which has a certain barrier to entry. AIAK-Training provides efficient, optimized operator implementations for typical model structures or specific customer needs.

Another approach is compiler-based optimization. Computation is optimized at compile time and code is generated automatically, which reduces the cost of manual optimization across different hardware. However, many current compilation solutions target inference, and solutions for training are still evolving rapidly. From the perspective of ultimate performance, hand-written fused operators will remain indispensable for some time.
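As a small example of the compiler route, a bias-add followed by GeLU can be written as one TorchScript function. The sketch resembles the fused bias-GeLU used in some transformer codebases; the function names here are hypothetical. On a GPU, TorchScript's fuser can compile these element-wise ops into a single kernel. Whether it actually fuses depends on the PyTorch version and device, and on CPU it simply runs as scripted code. `torch.compile` in PyTorch 2.x is another compiler option.

```python
import torch


@torch.jit.script
def fused_bias_gelu(x: torch.Tensor, bias: torch.Tensor) -> torch.Tensor:
    # Bias add + tanh-approximated GeLU as one scripted function, so a fusing
    # backend can keep the intermediates in registers instead of GPU memory.
    y = x + bias
    return 0.5 * y * (1.0 + torch.tanh(0.7978845608 * (y + 0.044715 * y * y * y)))


def reference_bias_gelu(x, bias):
    # Eager reference: the add and the GeLU run as separate kernels.
    return torch.nn.functional.gelu(x + bias, approximate="tanh")


torch.manual_seed(0)
x = torch.randn(8, 16)
bias = torch.randn(16)
fused_out = fused_bias_gelu(x, bias)
reference_out = reference_bias_gelu(x, bias)
```

The constant 0.7978845608 is sqrt(2/π), the coefficient of the standard tanh approximation of GeLU.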

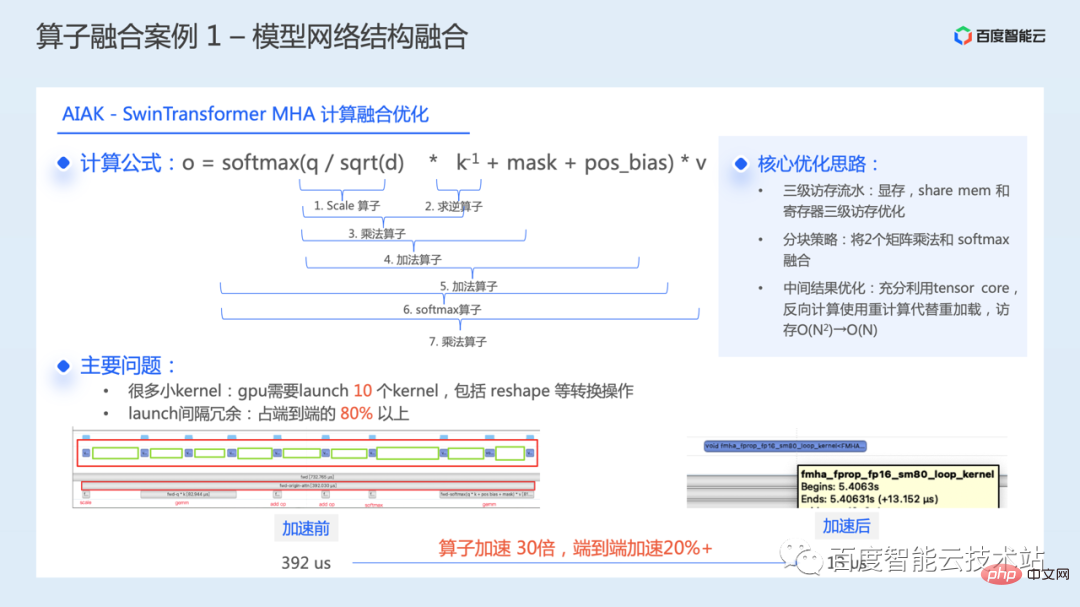

The following introduces some practical cases of operator fusion. The first is the optimization of typical model network structures.

The following figure shows our calculation fusion optimization for the core module WindowAttention in the SwinTransformer model.

The core formula of the WindowAttention structure is shown in the figure below. Computing it requires running 7 kernels in sequence, and with reshape and other conversion operations a total of 10 kernels must be launched. Performance analysis showed that the redundant gaps between kernel launches accounted for more than 80% of the module's end-to-end time during actual execution, which left a lot of room for optimization.
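Assuming the figure shows the standard formulation from the Swin Transformer paper, window attention over the $M^2$ patches of one $M \times M$ window is

$$
\mathrm{Attention}(Q, K, V) = \mathrm{SoftMax}\left(\frac{QK^{\top}}{\sqrt{d}} + B\right)V,
\qquad Q, K, V \in \mathbb{R}^{M^2 \times d},\quad B \in \mathbb{R}^{M^2 \times M^2}
$$

where $d$ is the query/key dimension and $B$ is the relative position bias. In eager execution, the product $QK^{\top}$, the scaling, the bias (and, for shifted windows, mask) addition, the softmax, and the product with $V$ typically run as separate kernels. Fusing them saves both the launches and the round trips of intermediate results through GPU memory.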

Fusing these kernels into one reduced the module's execution time from 392 microseconds to 13 microseconds, a 30x speedup for the single operator. End-to-end training of the whole model became more than 20% faster.

The core idea of this optimization has three main points:

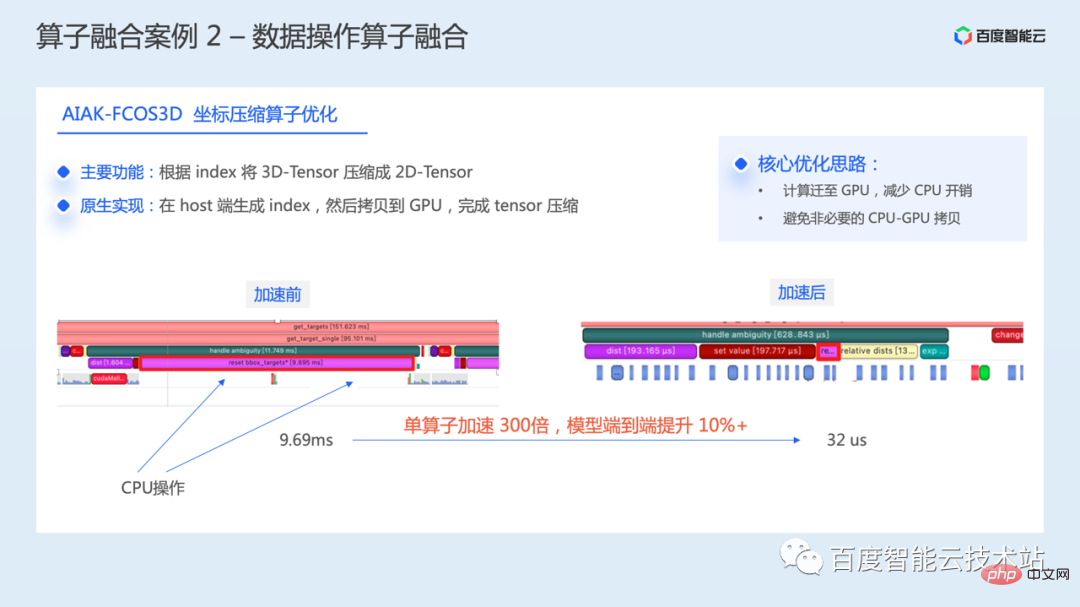

The following figure is an example of fusion of data operations, which is an optimization of coordinate compression operations in the FCOS3D model.

Through performance analysis, it was found that there are a large number of GPU gaps during this operation and the GPU utilization is low. The main function of this operation is to compress 3D-Tensor into 2D-Tensor based on index. In the native implementation, the index is first generated on the host side, then H2D copy is performed, and finally Tensor compression is completed, which will cause additional copy and waiting overhead.

To address this, we reimplemented this part of the computation. The core idea is to move all operations to the GPU, generating the index and compressing the tensor directly there. This reduces CPU involvement and avoids unnecessary memory copies between CPU and GPU.
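The FCOS3D code itself is not shown, so the sketch below assumes a simplified version of the operation: select the valid rows of a `(B, N, C)` tensor according to a `(B, N)` boolean mask, producing a 2D `(num_valid, C)` tensor. Both function names are hypothetical. The first version builds the index on the host and copies it to the device, as the text describes. The second keeps index generation on the device through boolean-mask indexing. That indexing still needs one synchronization to learn the output size, but it avoids the Python loop and the extra H2D copy.

```python
import torch


def compress_with_host_index(feat, valid):
    """Native-style: build the index list in Python on the host, then copy it to the device."""
    index = [(b, n)
             for b in range(valid.shape[0])
             for n in range(valid.shape[1])
             if bool(valid[b, n])]                # each bool() reads a value back to the host
    index = torch.tensor(index, dtype=torch.long).reshape(-1, 2).to(feat.device)  # extra H2D copy
    return feat[index[:, 0], index[:, 1]]


def compress_on_device(feat, valid):
    """Device-side: a boolean mask selects the rows without a Python loop or index copy."""
    return feat[valid]                            # (B, N, C) with mask (B, N) -> (num_valid, C)


feat = torch.randn(2, 5, 3)
valid = torch.tensor([[1, 0, 1, 0, 0], [0, 1, 1, 1, 0]], dtype=torch.bool)
host_version = compress_with_host_index(feat, valid)
device_version = compress_on_device(feat, valid)
```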

The execution time of a single operator is reduced from 9.69 milliseconds to 32 microseconds, an acceleration of 300 times, and the end-to-end training of the entire model is improved by more than 10%.

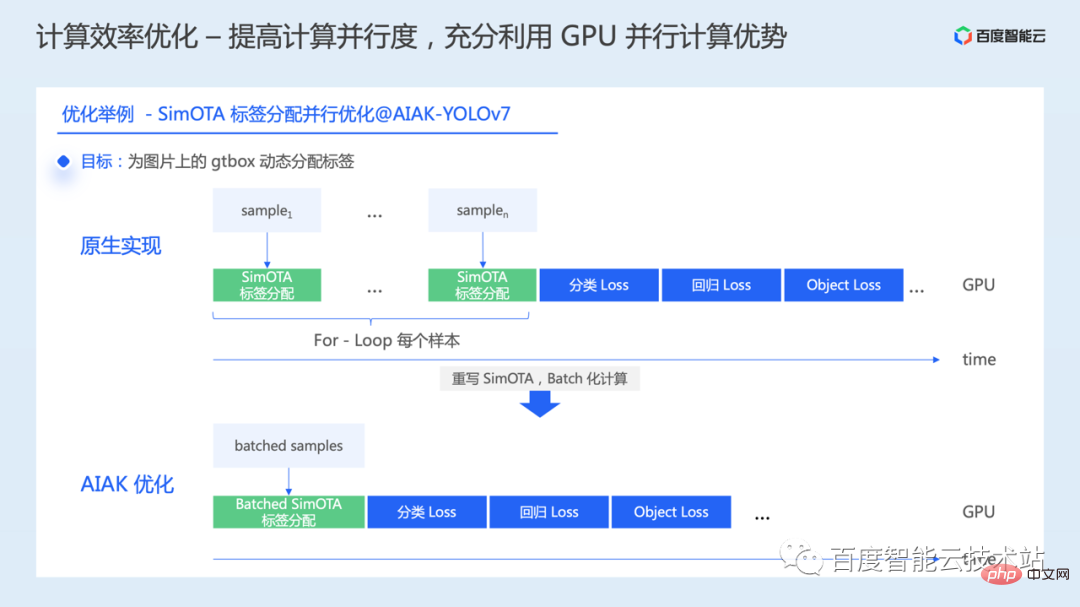

Next we introduce another computation optimization idea: increasing the parallelism of computation to make full use of the GPU's parallel computing power. Again, we will use practical cases.

We found that some operations in certain models run serially. For example, in some object detection models, parts of the loss calculation are not performed per batch; instead, a for-loop runs over each image or sample. In this case, increasing the batch size may not deliver the expected performance gain, because this part remains serial.

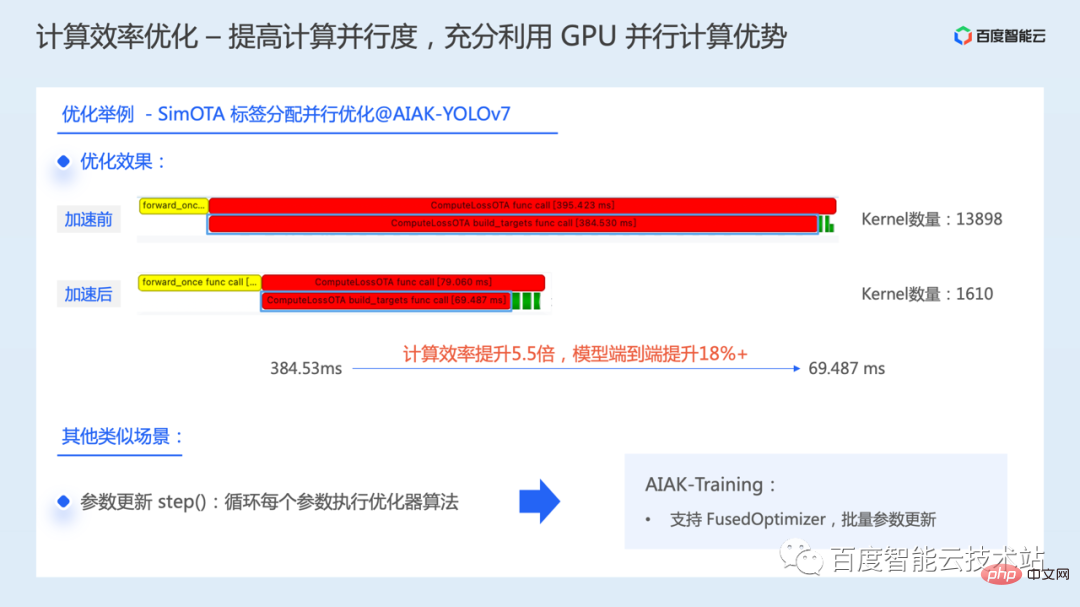

Take the SimOTA operation in YOLOv7 as an example. In the native implementation, each picture of a batch is traversed through a for-loop, and then SimOTA label assignment is performed for the gtbox of the picture. This serial implementation results in very inefficient GPU utilization for this part of the operation.

There is no dependency between data when processing each picture. Therefore, one of the tasks we have done is to change the serial calculation into batch parallel calculation, and speed up the efficiency of this part of the calculation by parallelizing label allocation for a batch of data.

In the end, the SimOTA operation time dropped from 384 milliseconds to 69 milliseconds, a 5.5x improvement in computing efficiency, and end-to-end training efficiency of the whole model improved by more than 18%.
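The full SimOTA implementation is beyond a short example. The sketch below shows only the pattern applied here, on the pairwise-IoU step that label-assignment schemes such as SimOTA rely on. It uses NumPy, and the shapes and helper names are assumptions. The serial version loops over images. The batched version pads each image's ground-truth boxes to a common count, computes every image in one vectorized call, and masks out the padding.

```python
import numpy as np


def pairwise_iou(a, b):
    """IoU between boxes a (..., N, 4) and b (..., M, 4) in (x1, y1, x2, y2) format."""
    lt = np.maximum(a[..., :, None, :2], b[..., None, :, :2])
    rb = np.minimum(a[..., :, None, 2:], b[..., None, :, 2:])
    wh = np.clip(rb - lt, 0.0, None)
    inter = wh[..., 0] * wh[..., 1]
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_b = (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])
    union = area_a[..., :, None] + area_b[..., None, :] - inter
    return inter / np.maximum(union, 1e-9)


def iou_per_image(gt_list, anchors):
    """Serial version: one small computation per image."""
    return [pairwise_iou(gt, anc) for gt, anc in zip(gt_list, anchors)]


def iou_batched(gt_list, anchors):
    """Batched version: pad gt boxes to the max count, compute once, zero out padding."""
    max_gt = max(len(g) for g in gt_list)
    gt = np.zeros((len(gt_list), max_gt, 4))
    valid = np.zeros((len(gt_list), max_gt), dtype=bool)
    for i, g in enumerate(gt_list):
        gt[i, :len(g)] = g
        valid[i, :len(g)] = True
    iou = pairwise_iou(gt, anchors)                      # (B, max_gt, A) in one call
    return np.where(valid[..., None], iou, 0.0), valid


def random_boxes(rng, n):
    xy = rng.uniform(0, 50, size=(n, 2))
    return np.concatenate([xy, xy + rng.uniform(1, 20, size=(n, 2))], axis=1)


rng = np.random.default_rng(0)
anchors = np.stack([random_boxes(rng, 30) for _ in range(3)])   # (B=3, A=30, 4)
gts = [random_boxes(rng, n) for n in (2, 5, 1)]                  # different gt counts per image
serial = iou_per_image(gts, anchors)
batched, valid = iou_batched(gts, anchors)
```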

There are similar cases elsewhere in model training, such as parameter updates. By default, the update loops over the parameters, launching one CUDA kernel per parameter and executing them one after another.

To address this, AIAK also adds a FusedOptimizer optimization. It fuses the parameter-update operators so that parameters are updated in batches, greatly reducing the number of kernel launches.
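AIAK's FusedOptimizer internals are not described here. PyTorch optimizers expose related real options: `foreach=True` for multi-tensor updates and, for Adam/AdamW, `fused=True`. The NumPy sketch below illustrates one way to get a single update for all parameters; the class name `FlatSGD` is hypothetical. Every parameter and gradient is a view into one flat buffer, so one vectorized operation replaces one small update per parameter.

```python
import numpy as np


class FlatSGD:
    """Plain SGD whose parameters and gradients are views into single flat buffers."""

    def __init__(self, shapes, lr):
        sizes = [int(np.prod(s)) for s in shapes]
        self.flat_param = np.zeros(sum(sizes))
        self.flat_grad = np.zeros(sum(sizes))
        self.params, self.grads = [], []
        offset = 0
        for shape, size in zip(shapes, sizes):
            # reshape of a contiguous slice is a view, so writes land in the flat buffer
            self.params.append(self.flat_param[offset:offset + size].reshape(shape))
            self.grads.append(self.flat_grad[offset:offset + size].reshape(shape))
            offset += size
        self.lr = lr

    def step(self):
        self.flat_param -= self.lr * self.flat_grad   # one update covers every parameter


rng = np.random.default_rng(0)
opt = FlatSGD([(3, 4), (5,), (2, 2)], lr=0.1)
for p, g in zip(opt.params, opt.grads):
    p[...] = rng.normal(size=p.shape)   # write through the views, never rebind them
    g[...] = rng.normal(size=g.shape)
expected = [p - 0.1 * g for p, g in zip(opt.params, opt.grads)]
opt.step()
```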

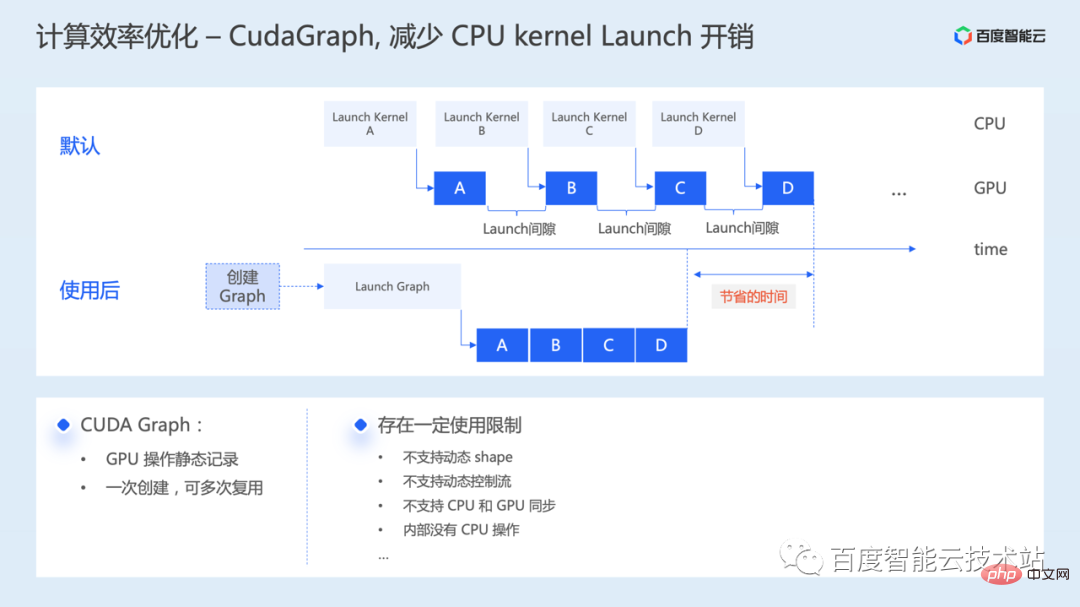

The following introduces another optimization method, CUDA Graph, which mainly reduces the overhead of the CPU launching kernels.

CUDA Graph is a feature introduced in CUDA 10. It packages a series of CUDA kernels into a single unit, so that multiple GPU kernels can be launched with a single CPU launch operation. This reduces the CPU's kernel-launch overhead.

As shown in the figure below, by default the CPU must launch multiple kernels one after another. If each kernel runs only briefly, the launch gaps between kernels can become the performance bottleneck. With CUDA Graph, you spend a little extra time building the graph once, and subsequent launches of the graph greatly shorten the gaps between kernels during execution.

Many frameworks now support CUDA Graph, and it can be enabled by inserting a few lines of code. However, there are usage restrictions: dynamic shapes and dynamic control flow are not supported, and CPU operations cannot be captured. You can try this optimization depending on your model.
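A minimal PyTorch sketch of capture and replay follows, using the real `torch.cuda.CUDAGraph` and `torch.cuda.graph` APIs with the side-stream warm-up that PyTorch's documentation recommends. For simplicity it captures only an inference-style forward pass; for training, `torch.cuda.make_graphed_callables` or whole-step capture needs more care. The helper name is hypothetical, and it falls back to eager execution without CUDA.

```python
import torch


def make_graphed_forward(model, example_input):
    """Return a function that runs `model`'s forward pass by replaying a CUDA Graph.

    Assumes `model` already lives on the GPU and every input has example_input's shape
    and dtype. Falls back to eager execution when CUDA is unavailable.
    """
    if not torch.cuda.is_available():
        def eager(x):
            with torch.no_grad():
                return model(x)
        return eager

    static_input = example_input.detach().clone().cuda()
    side = torch.cuda.Stream()
    side.wait_stream(torch.cuda.current_stream())
    with torch.no_grad(), torch.cuda.stream(side):    # warm-up iterations before capture
        for _ in range(3):
            model(static_input)
    torch.cuda.current_stream().wait_stream(side)

    graph = torch.cuda.CUDAGraph()
    with torch.no_grad(), torch.cuda.graph(graph):    # record the kernels once
        static_output = model(static_input)

    def replay(x):
        static_input.copy_(x)          # write new data into the captured input buffer
        graph.replay()                 # one CPU-side launch replays every recorded kernel
        return static_output.clone()   # copy out before the next replay overwrites it
    return replay


model = torch.nn.Linear(4, 2)
if torch.cuda.is_available():
    model = model.cuda()
forward = make_graphed_forward(model, torch.randn(3, 4))
x = torch.randn(3, 4)
result = forward(x.cuda() if torch.cuda.is_available() else x)
with torch.no_grad():
    expected = model(x.to(result.device))
```

The fixed `static_input` buffer is why dynamic shapes are not supported: the graph always replays the kernels recorded for the captured shape.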

Next is the last computation optimization method: making full use of the Tensor Core compute units.

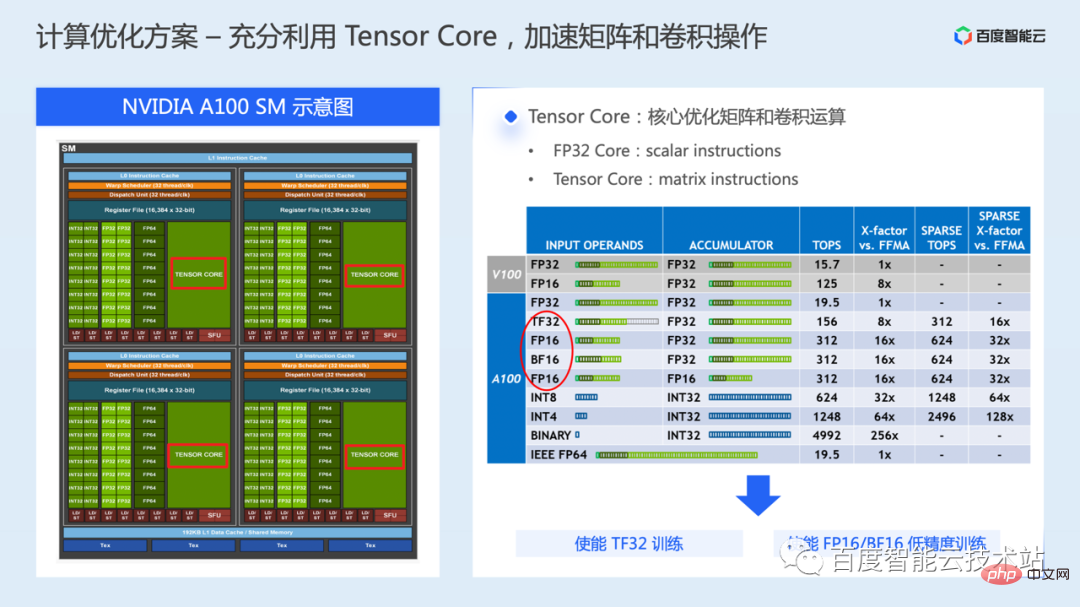

A GPU generally contains multiple SMs. Each SM includes compute cores for different data types as well as various storage resources. The left side of the figure below is a schematic of an NVIDIA A100 SM, which contains 64 FP32 CUDA Cores and 4 Tensor Cores.

By default, model training mainly uses the FP32 CUDA Cores for computation. Tensor Cores are special hardware execution units introduced with the Volta GPU generation, mainly used to speed up matrix multiplication and convolution.

An FP32 CUDA Core can only compute on two scalars at a time, whereas a Tensor Core computes on two matrices at once. Tensor Core throughput is therefore much higher than that of FP32 CUDA Cores.

In A100, Tensor Core supports a variety of floating point data types. For deep learning training, it may involve FP16, BF16, and TF32 modes.

TF32 is mainly used in single-precision training scenarios. Compared with FP32 training, it keeps the same memory bandwidth requirements while increasing theoretical compute throughput by 8x.

FP16/BF16 are mainly used in mixed-precision training scenarios. Compared with FP32 training, they halve memory access requirements and increase theoretical compute throughput by 16x.

To use Tensor Cores, you can program against the underlying cuBLAS or CUDA interfaces. For algorithm developers, the more direct route is the TF32 training or mixed-precision training support provided by the framework.

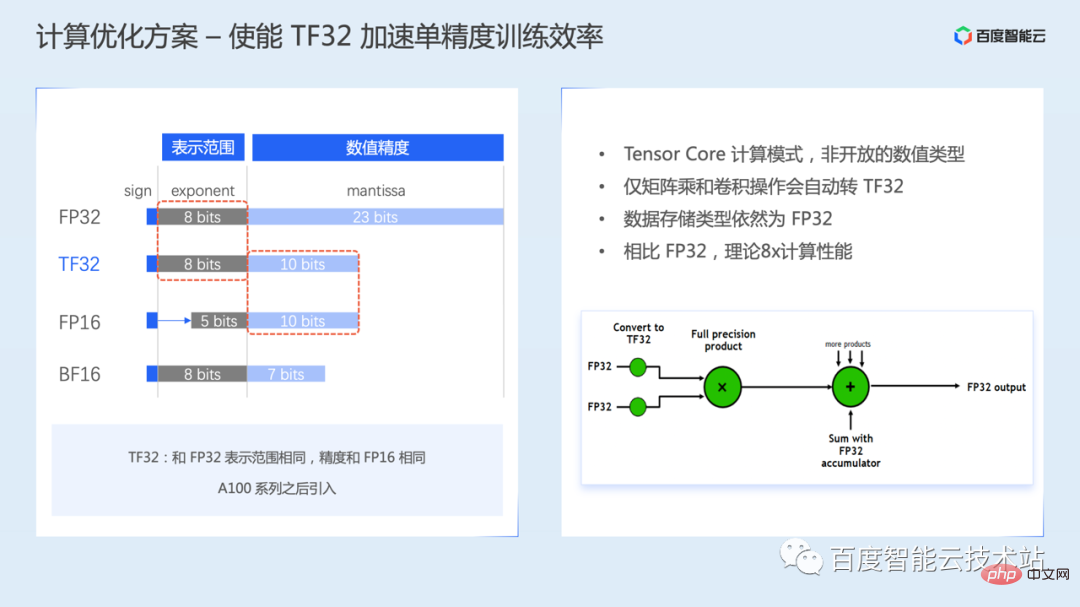

First, the TF32 training mode. TF32 was introduced with the Ampere architecture.

In its floating-point representation, TF32 has 8 exponent bits, 10 mantissa bits, and 1 sign bit. Its exponent matches FP32's, so the representable range is the same. Its mantissa is shorter than FP32's and the same length as FP16's.

It should be noted that TF32 is not an open numerical type, but a computing mode of Tensor Core. That is, users cannot directly create a TF32 type floating point number.

When TF32 is enabled, Tensor Core will automatically convert FP32 to TF32 when calculating matrices or convolution operations. After the calculation is completed, the output data type is still the FP32 type.
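TF32's precision loss can be approximated in plain Python with the `struct` module. Keep FP32's sign and 8 exponent bits, and zero all but the top 10 of the 23 mantissa bits. This truncation is an assumption made for illustration; the hardware conversion may round instead. The function name is hypothetical.

```python
import struct


def to_tf32(x: float) -> float:
    """Approximate TF32 by truncating an FP32 value's mantissa from 23 to 10 bits."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]   # raw FP32 bit pattern
    bits &= 0xFFFFE000                                    # clear the 13 low mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


kept = to_tf32(1 + 2 ** -10)      # 2**-10 is the last mantissa bit TF32 keeps
dropped = to_tf32(1 + 2 ** -11)   # 2**-11 falls below TF32's precision
large = to_tf32(1e38)             # far beyond FP16's max of 65504, still finite in TF32
```

This shows both properties from the text: the range of FP32 (`large` stays finite) and the precision of FP16 (`dropped` collapses to 1.0).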

TF32 training is enabled by default in some framework versions. In some framework versions, you may need to manually enable it through environment variables or parameter configuration. For details, please refer to the user manual of the framework.
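In PyTorch, for example, TF32 is controlled by the real flags below. The default for matmuls changed from enabled to disabled in PyTorch 1.12, while cuDNN convolutions still allow TF32 by default. NVIDIA libraries also honor the `NVIDIA_TF32_OVERRIDE=0` environment variable to disable TF32 globally. Check your framework version's documentation before relying on any default.

```python
import torch

# Let FP32 matrix multiplications and cuDNN convolutions use TF32 Tensor Core math
# (effective on Ampere or newer GPUs; setting the flags is harmless elsewhere).
torch.backends.cuda.matmul.allow_tf32 = True
torch.backends.cudnn.allow_tf32 = True

# Higher-level switch for matmuls (PyTorch 1.12+): "highest" keeps full FP32 math,
# "high" permits TF32.
torch.set_float32_matmul_precision("high")
```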

However, because TF32 has lower precision than FP32, watch the impact on the model's convergence accuracy during actual training.

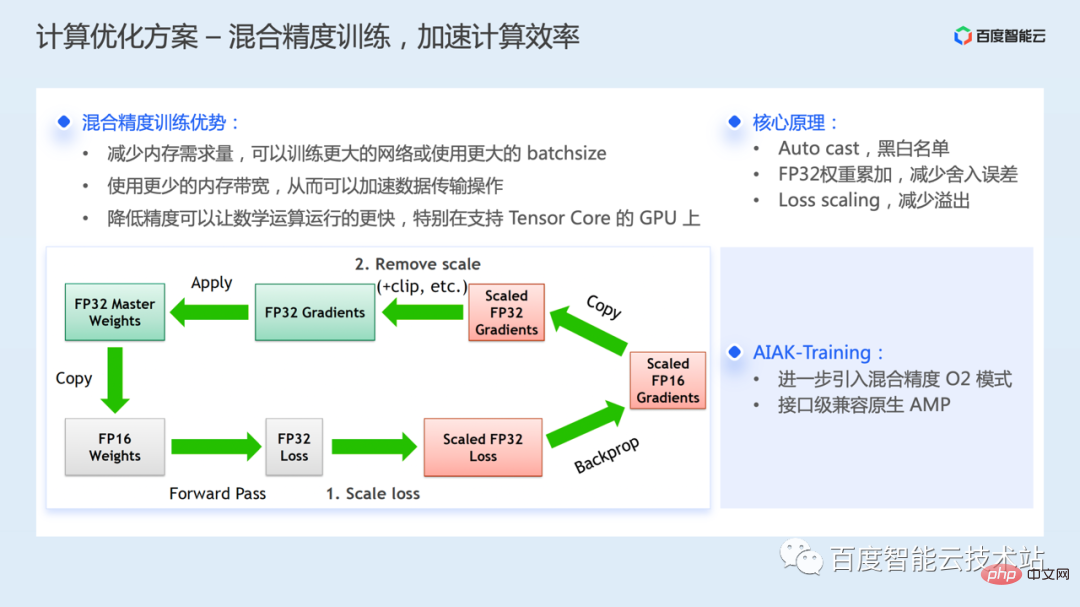

Mixed-precision training means training with a mix of FP32 and FP16 while minimizing the loss of model accuracy.

Compared with FP32 training, the main benefits of mixed-precision training are lower memory requirements, which allow larger networks or larger batch sizes, and lower memory bandwidth usage, which speeds up data transfers. Half-precision arithmetic is also faster.

However, FP16 has fewer exponent and mantissa bits than FP32, so both its representable range and its precision are reduced. In practice, the narrow range can cause numerical overflow or underflow, and the limited precision can cause rounding errors.
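NumPy's `float16` makes these failure modes easy to reproduce. The sketch also shows loss scaling, the standard mitigation for gradient underflow in mixed-precision training. The gradient value is arbitrary; 2**16 is a common initial scale, for example the default in PyTorch's `GradScaler`.

```python
import numpy as np

with np.errstate(over="ignore"):
    overflow = np.float16(70000.0)            # above FP16's max finite value 65504 -> inf
underflow = np.float16(1e-8)                  # below half the smallest subnormal (~6e-8) -> 0
rounded = np.float16(2048) + np.float16(1)    # FP16 spacing at 2048 is 2, so 2049 rounds to 2048

# Loss scaling: scale a small gradient up before it is stored in FP16,
# then unscale in FP32 before the optimizer uses it.
grad = 1e-8
scale = 65536.0                                # 2**16
scaled = np.float16(grad * scale)              # ~6.55e-4 is a normal FP16 number
recovered = float(np.float32(scaled)) / scale  # close to the original 1e-8
```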

In order to optimize similar problems, there are several key technical tasks in the mixed precision training solution:

All mainstream frameworks now support mixed precision. The AIAK-Training component additionally introduces the AMP O2 mixed-precision mode from NVIDIA Apex, which moves more computation to FP16 more aggressively to accelerate training. It is faster than the default O1 mode, but accuracy may be affected and should be verified on the specific model.

AIAK-Training provides an interface compatible with native torch amp usage, which makes it easy to enable O2 mode.
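AIAK-Training's own interface is not shown here. For reference, Apex's O2 mode is enabled with `apex.amp.initialize(model, optimizer, opt_level="O2")`. The sketch below shows the native torch amp pattern the text says it stays compatible with: `torch.autocast` plus `GradScaler`. It uses FP16 with loss scaling on CUDA and falls back to BF16 autocast without a scaler on CPU; the model and data are placeholders. Newer PyTorch releases prefer `torch.amp.GradScaler("cuda", ...)` over the older `torch.cuda.amp.GradScaler` used here.

```python
import torch

use_cuda = torch.cuda.is_available()
device = torch.device("cuda" if use_cuda else "cpu")
amp_dtype = torch.float16 if use_cuda else torch.bfloat16

model = torch.nn.Linear(16, 4).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)   # loss scaling is needed for FP16 only

x = torch.randn(8, 16, device=device)
target = torch.randn(8, 4, device=device)
for _ in range(3):
    optimizer.zero_grad(set_to_none=True)
    with torch.autocast(device_type=device.type, dtype=amp_dtype):
        out = model(x)                                 # the matmul runs in reduced precision
        loss = torch.nn.functional.mse_loss(out.float(), target)
    scaler.scale(loss).backward()                      # scale up to avoid gradient underflow
    scaler.step(optimizer)                             # unscale; skip the step on inf/NaN grads
    scaler.update()                                    # adjust the scale for the next iteration
```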

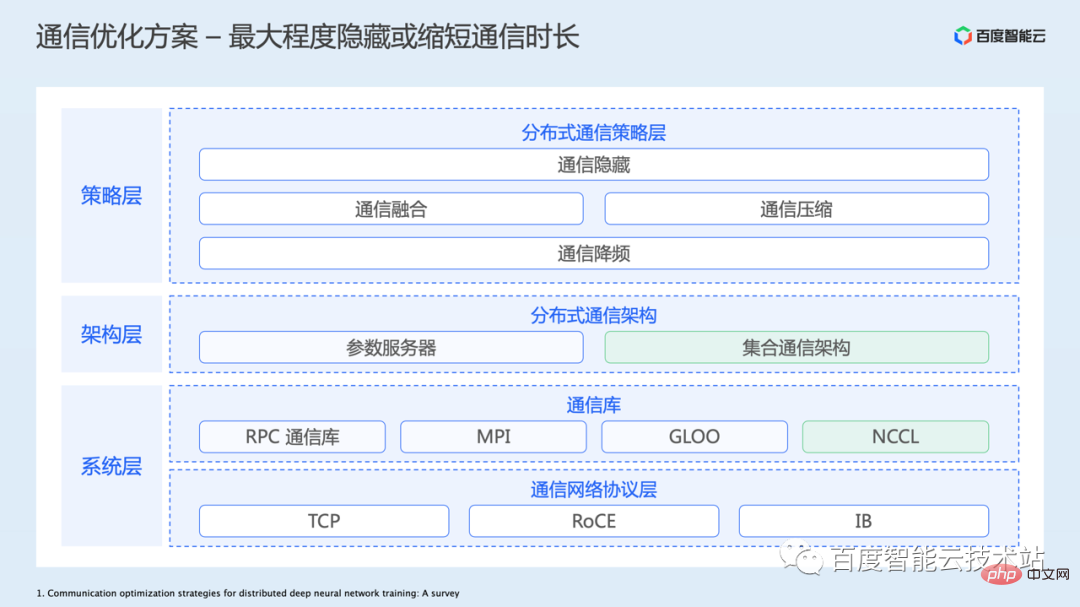

Next comes communication optimization. This is also a very big topic that covers a lot of ground.

As mentioned earlier, communication is introduced mainly by distributed training. Scaling from one card to many requires synchronizing data across cards, and this synchronization is implemented through communication.

The following figure lists an overall architecture for communication optimization:

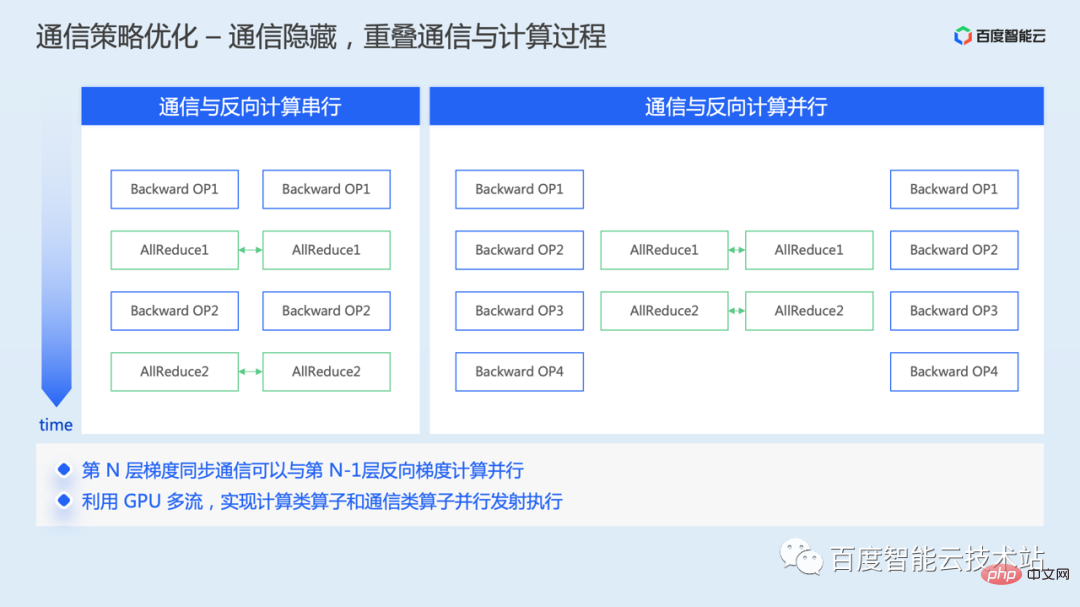

Let’s first look at optimizations at the communication-strategy level, starting with hiding communication.

In data parallelism, gradient synchronization happens during the backward pass. Once a gradient has been computed in the backward pass, its global average can be computed.

Without any optimization, backward computation and communication run serially, leaving time gaps in computation.

There is no data dependency between communicating one gradient and computing the next gradient in the backward pass. The two can therefore run in parallel, so that their execution times overlap and part of the communication time is hidden.

At the implementation level, communication and computation operators are usually scheduled onto different CUDA streams: communication operators onto a communication stream and computation operators onto a compute stream. Operators on different streams can be launched and executed in parallel, so gradient communication overlaps with backward computation.
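The scheduling idea can be modeled with plain Python threads standing in for CUDA streams. This is a toy, and the function name and precomputed gradients are hypothetical. The backward loop hands each gradient to a communication thread as soon as it is ready, last layer first, so averaging layer l proceeds while the loop moves on to layer l-1.

```python
import queue
import threading


def backward_with_overlap(layer_grads_per_rank):
    """Toy overlap: layer_grads_per_rank[r][l] is rank r's gradient for layer l."""
    num_layers = len(layer_grads_per_rank[0])
    ready = queue.Queue()
    averaged = [None] * num_layers

    def comm_worker():                                  # plays the role of the comm stream
        while True:
            layer = ready.get()
            if layer is None:
                return
            vals = [grads[layer] for grads in layer_grads_per_rank]
            averaged[layer] = sum(vals) / len(vals)     # stand-in for AllReduce + divide

    comm = threading.Thread(target=comm_worker)
    comm.start()
    order = []
    for layer in reversed(range(num_layers)):           # backward runs last layer first
        order.append(layer)                             # (gradient computation goes here)
        ready.put(layer)                                # start its communication immediately
    ready.put(None)
    comm.join()                                         # wait before the optimizer step
    return averaged, order


averaged, order = backward_with_overlap([[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]])
```

In a real framework such as PyTorch DDP, autograd hooks play the role of `ready.put`, and NCCL kernels on a separate stream play the role of the worker thread.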

Mainstream frameworks currently enable this optimization by default.

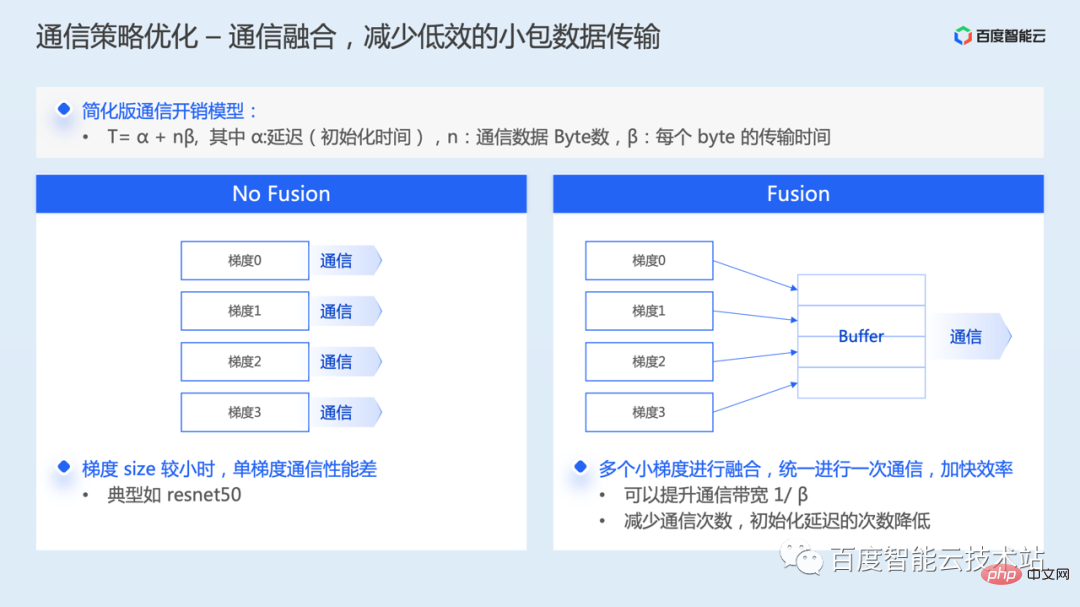

Second, communication fusion.

By default, each gradient in the model triggers its own communication operation. If individual gradients are small, sending many small packets yields very low network bandwidth utilization and poor communication performance.

Communication fusion merges multiple gradients into a single communication. In terms of the communication cost model, this both improves bandwidth utilization and reduces the per-communication startup latency term.

Many distributed training frameworks now support gradient fusion by default, though implementations differ. Some first negotiate among workers to determine the communication order of gradients, while others directly use static communication buckets.

Although frameworks enable communication fusion by default, the fusion size can usually be configured through parameters. Tuning the fusion threshold for the physical environment and the model should yield better results.
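Both points can be illustrated with a simple latency-bandwidth (alpha-beta) cost model and a bucketing function. This is a simplified sketch, not any framework's exact algorithm, and the latency and bandwidth numbers are hypothetical. In PyTorch DDP, the corresponding threshold is the real `bucket_cap_mb` argument of `DistributedDataParallel`, which defaults to 25 MB.

```python
def alpha_beta_time(num_messages, total_bytes, alpha, beta):
    """Each message pays startup latency alpha (s); all bytes move at bandwidth beta (B/s)."""
    return num_messages * alpha + total_bytes / beta


def build_buckets(grad_sizes, cap_bytes):
    """Group gradient indices, in backward order (last layer first), into buckets of at
    most cap_bytes; a gradient larger than the cap gets a bucket of its own."""
    buckets, current, current_bytes = [], [], 0
    for idx in reversed(range(len(grad_sizes))):
        size = grad_sizes[idx]
        if current and current_bytes + size > cap_bytes:
            buckets.append(current)
            current, current_bytes = [], 0
        current.append(idx)
        current_bytes += size
    if current:
        buckets.append(current)
    return buckets


# Hypothetical link: 20 us startup latency, 12.5 GB/s (100 Gb/s) bandwidth.
total = 100 * 64 * 1024                                  # 100 gradients of 64 KiB each
unfused = alpha_beta_time(100, total, 20e-6, 12.5e9)     # about 2.5 ms: latency dominates
fused = alpha_beta_time(1, total, 20e-6, 12.5e9)         # about 0.5 ms with one message
```

Too large a bucket delays the first communication and reduces overlap with the backward pass. This is why the threshold is worth tuning rather than simply maximizing.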

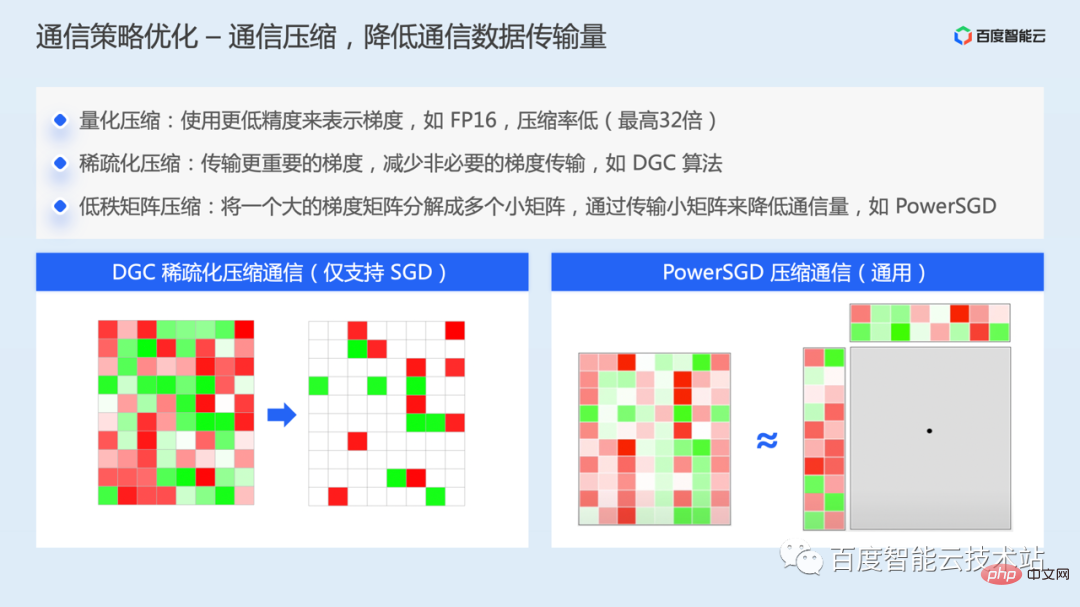

If you are in a training scenario with low network bandwidth, such as a low-bandwidth TCP environment, the delay in gradient synchronization may become the main performance bottleneck of training.

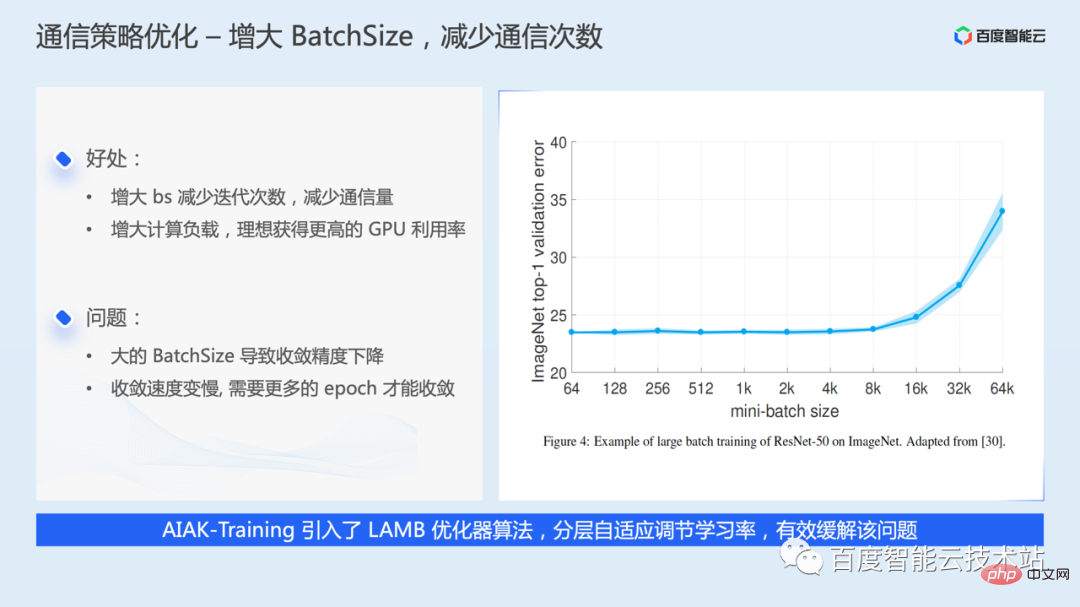

Communication frequency reduction: the simplest idea is to increase the batch size so that each iteration processes more data. This reduces the number of iterations and therefore the number of communications.

However, a bigger batch size is not always better. A larger batch size may lower the model's converged accuracy or slow convergence. To address this, the industry has proposed optimizers such as LARS and LAMB, which adapt the learning rate layer by layer; AIAK-Training also supports them.
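As a sketch of the layer-wise idea, LARS scales each layer's learning rate by a trust ratio built from the norms of that layer's weights and gradients: local_lr = base_lr · η · ‖w‖ / (‖g‖ + β‖w‖), with trust coefficient η and weight decay β. The NumPy helper below is hypothetical, and its fallback for zero norms is an assumption; real implementations differ in such details.

```python
import numpy as np


def lars_local_lr(weight, grad, base_lr, trust_coef=0.001, weight_decay=0.0, eps=1e-9):
    """Layer-wise learning rate in the style of LARS."""
    w_norm = float(np.linalg.norm(weight))
    g_norm = float(np.linalg.norm(grad))
    if w_norm == 0.0 or g_norm == 0.0:
        return base_lr                        # assumed fallback for degenerate layers
    return base_lr * trust_coef * w_norm / (g_norm + weight_decay * w_norm + eps)


# A layer whose weights are large relative to its gradient gets a larger step.
lr_layer = lars_local_lr(np.array([3.0, 4.0]), np.array([0.6, 0.8]), base_lr=1.0)
```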

To increase the batch size when GPU memory allows, you can simply raise the batch size hyperparameter. If GPU memory is tight, you can use gradient accumulation to skip several gradient communications, which is effectively equivalent to a larger batch size.
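In PyTorch, gradient accumulation is commonly combined with the real `DistributedDataParallel.no_sync()` context manager. It skips the gradient AllReduce for every micro-batch except the last. The helper below is hypothetical. It uses `no_sync()` when the model has it and otherwise just accumulates locally, so it runs without a process group. The usage check confirms that four micro-batches of 2, with the loss divided by 4, give the same gradients as one batch of 8.

```python
import contextlib
import copy

import torch
import torch.nn.functional as F


def accumulate_gradients(model, micro_batches, loss_fn):
    """Accumulate gradients over several micro-batches, syncing only on the last one."""
    n = len(micro_batches)
    for i, (inputs, targets) in enumerate(micro_batches):
        skip_sync = hasattr(model, "no_sync") and i < n - 1   # DDP models have no_sync()
        with model.no_sync() if skip_sync else contextlib.nullcontext():
            loss = loss_fn(model(inputs), targets) / n         # average over micro-batches
            loss.backward()                                    # gradients add up in .grad


torch.manual_seed(0)
model_a = torch.nn.Linear(3, 1)
model_b = copy.deepcopy(model_a)
x, y = torch.randn(8, 3), torch.randn(8, 1)

accumulate_gradients(model_a, list(zip(x.split(2), y.split(2))), F.mse_loss)  # 4 x batch of 2
F.mse_loss(model_b(x), y).backward()                                           # 1 x batch of 8
```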

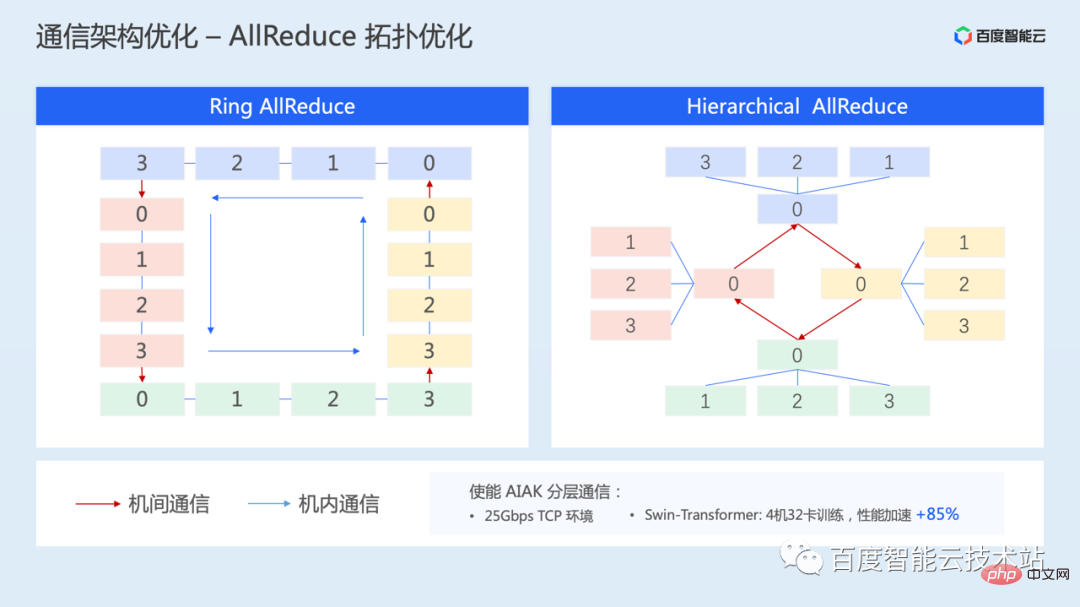

Next is an optimization of the communication topology: hierarchical topology communication. It likewise targets environments where the inter-machine network bandwidth is relatively low.

Hierarchical communication makes full use of the high interconnect bandwidth within each machine while reducing the impact of the low network bandwidth between machines.
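The three phases of hierarchical allreduce can be simulated on plain Python lists to check that they give the same result as a flat allreduce. This is only a sketch of the data flow under our own assumptions, not AIAK's implementation. A real version would run each phase as a collective on separate intra-machine and inter-machine process groups, for example groups created with `torch.distributed.new_group`.

```python
def hierarchical_allreduce(grads_by_machine):
    """Simulated hierarchical allreduce (sum) on plain Python lists.

    grads_by_machine[m][r] is the gradient vector held by GPU r on machine m.
    Returns the same nested structure with every GPU holding the global sum."""
    # Phase 1: reduce within each machine onto a local leader (fast intra-machine links).
    leader_sums = [[sum(vals) for vals in zip(*gpus)] for gpus in grads_by_machine]
    # Phase 2: allreduce across machines among the leaders only, so just one
    # participant per machine uses the slow inter-machine network.
    global_sum = [sum(vals) for vals in zip(*leader_sums)]
    # Phase 3: each leader broadcasts the result to the GPUs on its own machine.
    return [[list(global_sum) for _ in gpus] for gpus in grads_by_machine]


def flat_allreduce(grads_by_machine):
    """Reference result: sum over every GPU at once, ignoring topology."""
    all_gpus = [g for gpus in grads_by_machine for g in gpus]
    total = [sum(vals) for vals in zip(*all_gpus)]
    return [[list(total) for _ in gpus] for gpus in grads_by_machine]


# 2 machines x 2 GPUs with hypothetical 2-element gradients.
grads = [[[1.0, 1.0], [2.0, 2.0]], [[3.0, 3.0], [4.0, 4.0]]]
print(hierarchical_allreduce(grads)[0][0])  # [10.0, 10.0]
```

In phase 2, only one process per machine sends data across the network, and it sends an already reduced vector. The other two phases run over the much faster local interconnect.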

This scheme is also implemented in AIAK. In a SwinTransformer training test on 4 machines with 32 GPUs in a 25 Gbps TCP environment, hierarchical allreduce sped up training by 85%.

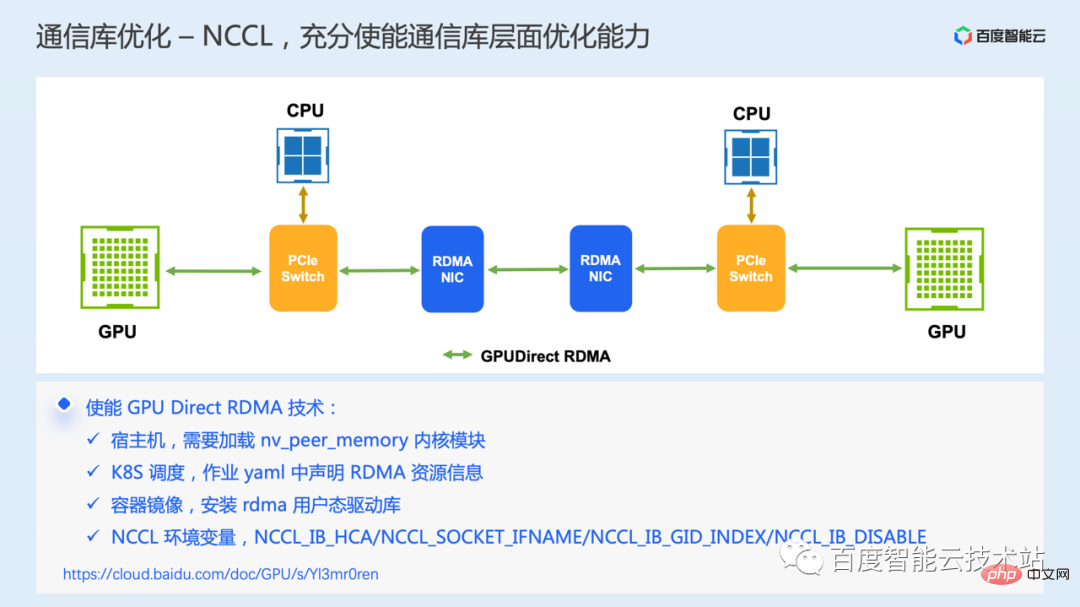

Finally, we introduce an optimization at the level of the underlying communication library: GPU Direct RDMA (GDR). This technology requires hardware that supports an RDMA network.

RDMA allows a local application to directly read and write the user-space virtual memory of a remote application. Apart from the initial submission of a send request, which requires the CPU, the network card hardware carries out the entire communication. No memory copies, system interrupts, or software processing are needed, so RDMA achieves extremely low latency and high bandwidth.

For GPUs, GPU Direct RDMA further lets RDMA access GPU memory directly. This avoids copying data back and forth between GPU memory and host memory during communication and further reduces cross-machine communication latency.

In practice, however, we found that some users had purchased RDMA environments but were not actually using GDR, which resulted in poor communication efficiency. Several key configuration items are listed here. If you run into similar problems, check and set them one by one.
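The following small Python script illustrates what such a check might look like for an NCCL-based setup. The items are our own examples of common pitfalls, not necessarily the configuration items the talk refers to. `NCCL_IB_DISABLE`, `NCCL_DEBUG`, and `NCCL_NET_GDR_LEVEL` are real NCCL environment variables, and GDR requires the `nvidia_peermem` kernel module (`nv_peer_mem` on older stacks). The exact requirements still depend on your driver, NIC, and NCCL version.

```python
import os


def check_gdr_setup(env=None, loaded_modules=None):
    """Illustrative checklist of settings that commonly keep NCCL from using
    GPU Direct RDMA. Returns a list of human-readable warnings."""
    env = os.environ if env is None else env
    if loaded_modules is None:
        # On Linux, each line of /proc/modules starts with a kernel module name.
        try:
            with open("/proc/modules") as f:
                loaded_modules = {line.split()[0] for line in f if line.strip()}
        except OSError:
            loaded_modules = set()
    warnings = []
    if env.get("NCCL_IB_DISABLE") == "1":
        warnings.append("NCCL_IB_DISABLE=1 disables the InfiniBand/RoCE transport")
    if not set(loaded_modules) & {"nvidia_peermem", "nv_peer_mem"}:
        warnings.append("no GPU peer-memory kernel module (nvidia_peermem or nv_peer_mem) is loaded")
    if env.get("NCCL_DEBUG") != "INFO":
        warnings.append("set NCCL_DEBUG=INFO to log which transport NCCL actually selects")
    return warnings


for message in check_gdr_setup():
    print("warning:", message)
```

With `NCCL_DEBUG=INFO`, connections that use GDR typically show `GDRDMA` in the transport part of NCCL's channel log lines. If the log shows only plain `NET/IB`, data is being staged through host memory. If it shows `NET/Socket`, traffic is going over TCP.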

We have now covered the main performance optimization ideas and solutions in use today. Whether for I/O, computation, or communication, the basic ideas are the same: optimize the operation itself, reduce the number of times it runs, or overlap it with other work to hide its overhead.

Enabling the optimizations described above correctly requires each user to understand the framework's engineering implementation in detail. To lower the cost of training optimization, we built the AIAK-Training acceleration package.

AIAK-Training builds end-to-end optimization capabilities around data loading, model computation, communication, and more. These capabilities are wrapped in a simple, easy-to-use interface, so users can integrate them by inserting just a few lines of code. We are also building an automated strategy-combination mechanism that helps users choose effective optimization strategies automatically.

The acceleration library components can be installed and deployed independently, or you can use the container image we provide directly.

The following are some specific application cases.

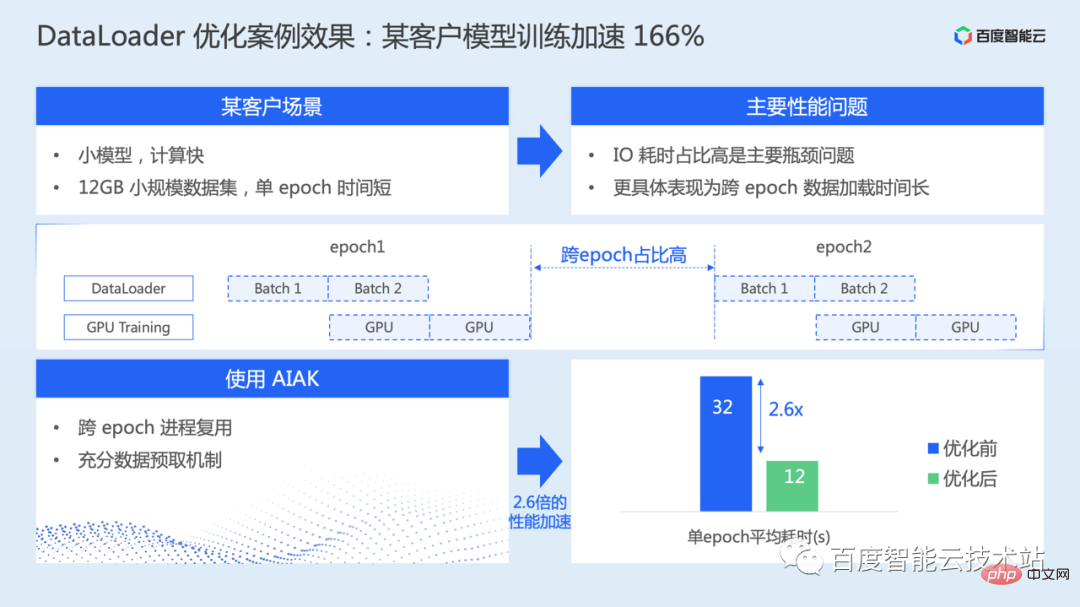

The case shown in the figure above mainly concerns dataloader optimization. Here both the model and the dataset are relatively small, so pure computation is fast. However, data loading across epoch boundaries takes a long time, which makes I/O the main bottleneck.

Using the worker-process reuse and full prefetch mechanisms provided by AIAK sped up overall model training by 166%.
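The talk does not describe how AIAK implements these mechanisms. The sketch below illustrates the two ideas using threads from the Python standard library, chosen for simplicity in place of processes. First, the worker pool is created once and kept for the whole run instead of being torn down and restarted at each epoch boundary. Second, the prefetch queue keeps running across the boundary, so the next epoch's first batches are already loading. `load_batch` is a hypothetical placeholder. In PyTorch, `DataLoader(persistent_workers=True, prefetch_factor=...)` offers related behavior.

```python
from collections import deque
from concurrent.futures import ThreadPoolExecutor


def load_batch(index):
    # Hypothetical placeholder for reading, decoding, and augmenting one batch.
    return index * 2


class ReusedPrefetchLoader:
    """Keeps one worker pool alive for the whole run and keeps up to `prefetch`
    batches in flight, including across epoch boundaries."""

    def __init__(self, num_batches, num_workers=4, prefetch=8):
        self.num_batches = num_batches
        self.prefetch = prefetch
        # Created once and reused by every epoch instead of being restarted.
        self.pool = ThreadPoolExecutor(max_workers=num_workers)
        self.pending = deque()
        self.next_index = 0  # keeps counting across epochs
        self._fill()

    def _fill(self):
        # Submit work until `prefetch` batches are in flight. Indices wrap
        # around, so the next epoch's first batches start loading early.
        while len(self.pending) < self.prefetch:
            self.pending.append(
                self.pool.submit(load_batch, self.next_index % self.num_batches)
            )
            self.next_index += 1

    def epoch(self):
        for _ in range(self.num_batches):
            future = self.pending.popleft()
            self._fill()
            yield future.result()

    def close(self):
        self.pool.shutdown()


loader = ReusedPrefetchLoader(num_batches=5, num_workers=2, prefetch=3)
for epoch in range(2):
    print(epoch, list(loader.epoch()))  # each epoch: [0, 2, 4, 6, 8]
loader.close()
```

A real loader would also reshuffle the sample order every epoch. That means the next epoch's order must be decided before its batches are prefetched, which this sketch omits.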

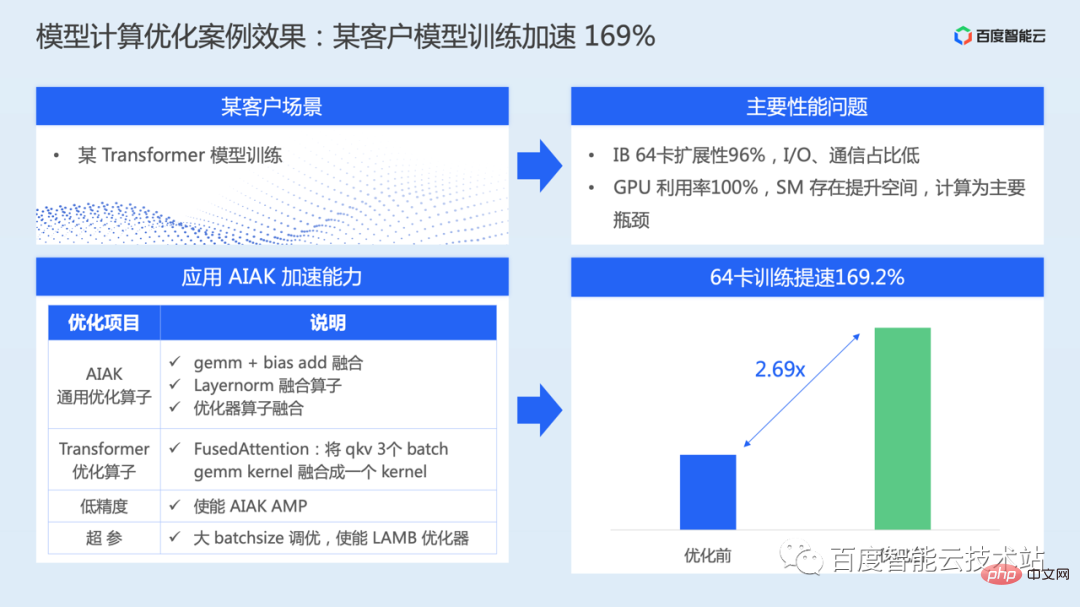

The figure above shows a case of model computation optimization.

In this Transformer-class model training scenario, communication scales nearly linearly in actual training, and I/O accounts for a very small share of the time. Computation is therefore the main performance bottleneck.

For this model, AIAK-Training applied a series of computation-level optimizations, including operator fusion for the main structures, mixed precision, and large-batch tuning. Together these raised the training efficiency of the whole model by 169%.
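The talk names mixed precision without describing it. One standard ingredient of mixed precision training is loss scaling, which we add here for illustration. Small gradients can underflow to zero in fp16, so the loss is multiplied by a scale factor before backpropagation and the gradients are divided by it afterwards. Python's `struct` module can round values to half precision, which is enough to show the effect. The gradient value and scale are assumptions.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


grad = 1e-8      # hypothetical small gradient value
scale = 1024.0   # assumed static loss scale

# Without scaling, the value underflows to zero when stored in fp16.
lost = to_fp16(grad)
# With loss scaling, backpropagation yields grad * scale (chain rule), which is
# representable in fp16. Dividing by the scale in higher precision recovers it.
recovered = to_fp16(grad * scale) / scale
print(lost, recovered)  # 0.0, then a value close to 1e-08
```

If the scale is too large, values overflow instead. Here `struct.pack` raises `OverflowError`, while GPU arithmetic would produce inf. This is why frameworks usually adjust the loss scale dynamically.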

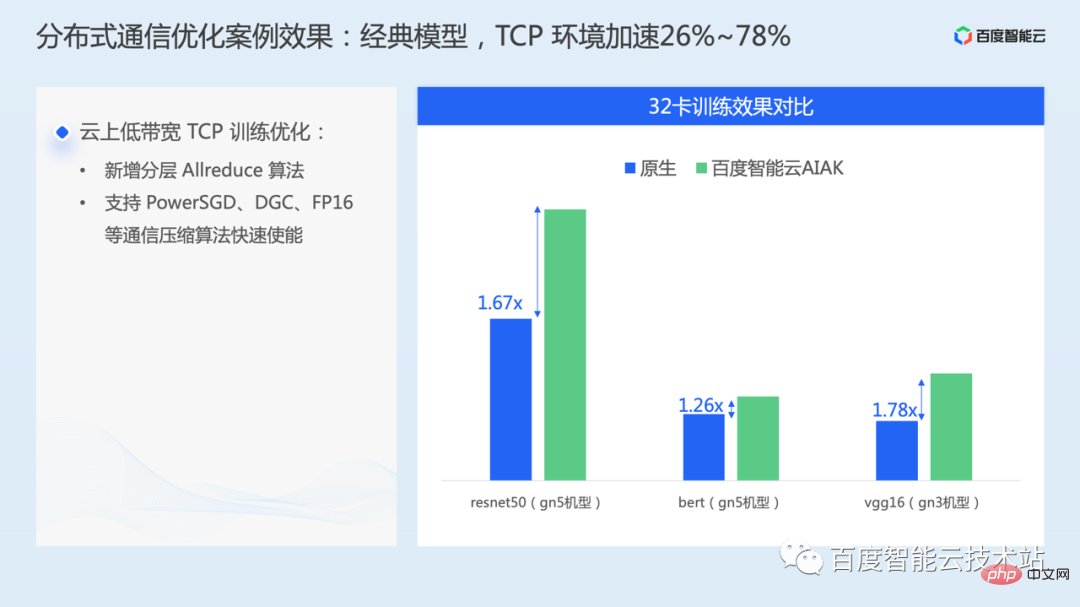

The case in the figure above mainly applies communication-level optimization, enabling the strategies for low-bandwidth networks in a cloud TCP environment. Classic models such as ResNet50, BERT, and VGG16 were sped up by 26% to 78%.

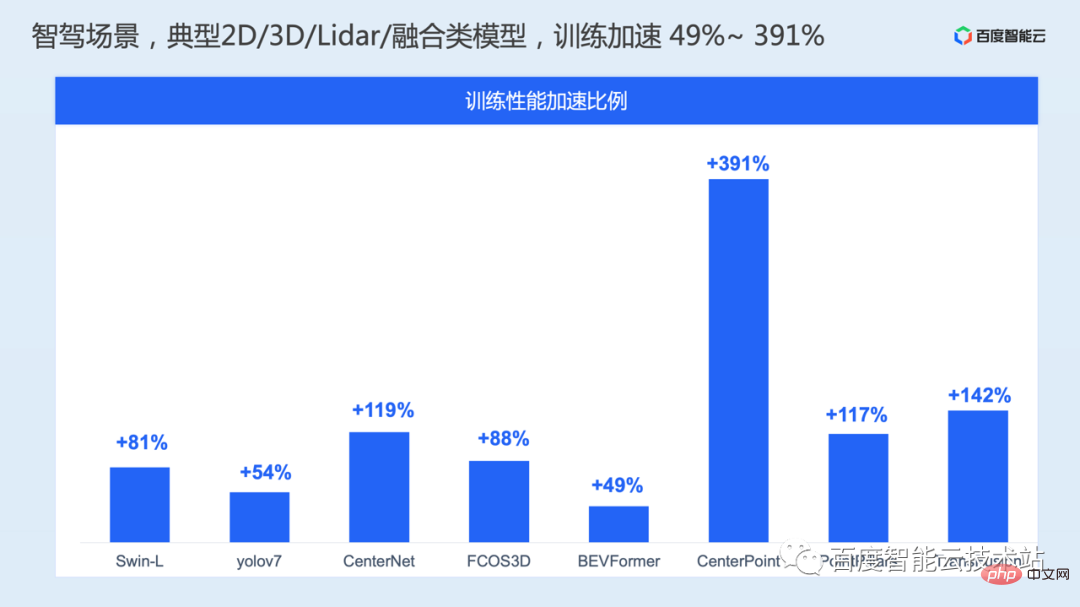

In autonomous driving scenarios, we also ran a series of training performance tests on typical 2D vision, 3D vision, lidar, and pre-fusion models. After optimization, training performance improved by 49% to 391%.
