Over the past two days, Turing Award winner Yann LeCun has been visibly rattled.

Since ChatGPT caught fire, Microsoft has been standing tall on the strength of its partnership with OpenAI.

Google, stung by the upset, has stopped talking about "reputational risk".

All of its language models, whether LaMDA, DeepMind's Sparrow, or Apprentice Bard, are being rushed out one after another. It has also invested nearly 400 million US dollars in Anthropic, hoping to quickly build up an OpenAI of its own. (Whatever Microsoft has, Google wants too.)

However, one person can only watch anxiously as Microsoft and Google take the lead: Meta's chief AI scientist, Yann LeCun.

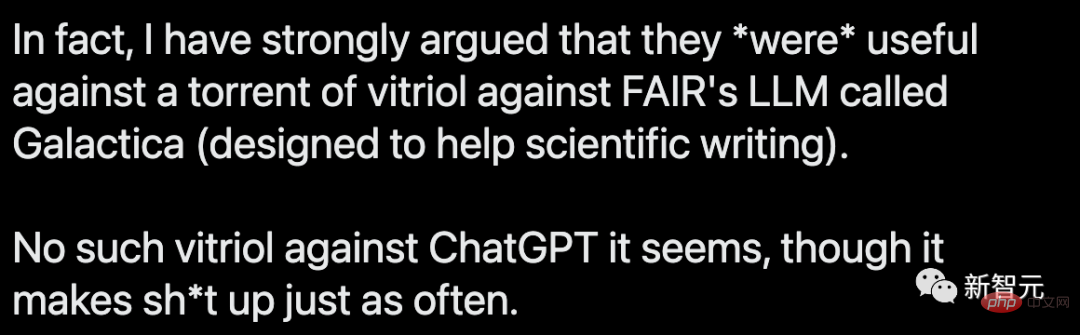

He vented on Twitter: "ChatGPT is full of nonsense, yet you are so tolerant of it, while my Galactica was out for only three days before you attacked it until it was taken offline."

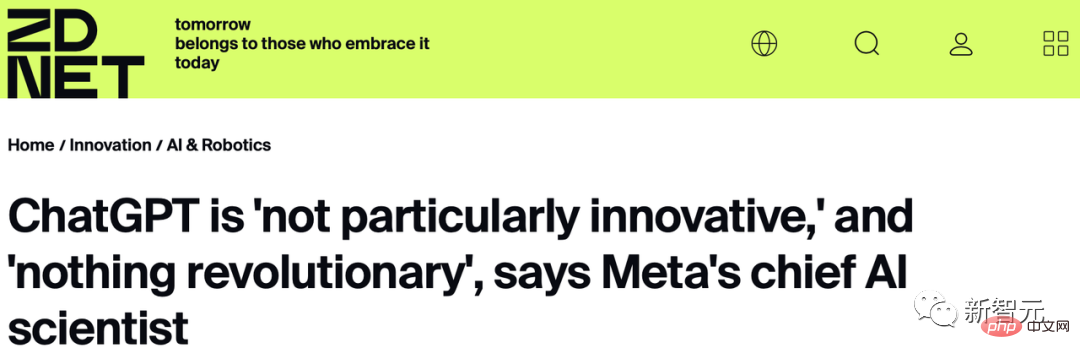

On January 27, at a small Zoom gathering of media and executives, LeCun made a surprising comment about ChatGPT: "As far as the underlying technology is concerned, ChatGPT is not a particularly great innovation. Although the public sees it as revolutionary, we know that it is a well-put-together product, nothing more."

He also pointed out that the Transformer architecture ChatGPT uses was proposed by Google, and that the self-supervised learning method it relies on is exactly what he had been advocating since before OpenAI even existed.

As soon as these remarks came out, the public was in an uproar. Sam Altman, CEO of OpenAI, appears to have unfollowed LeCun over this statement.

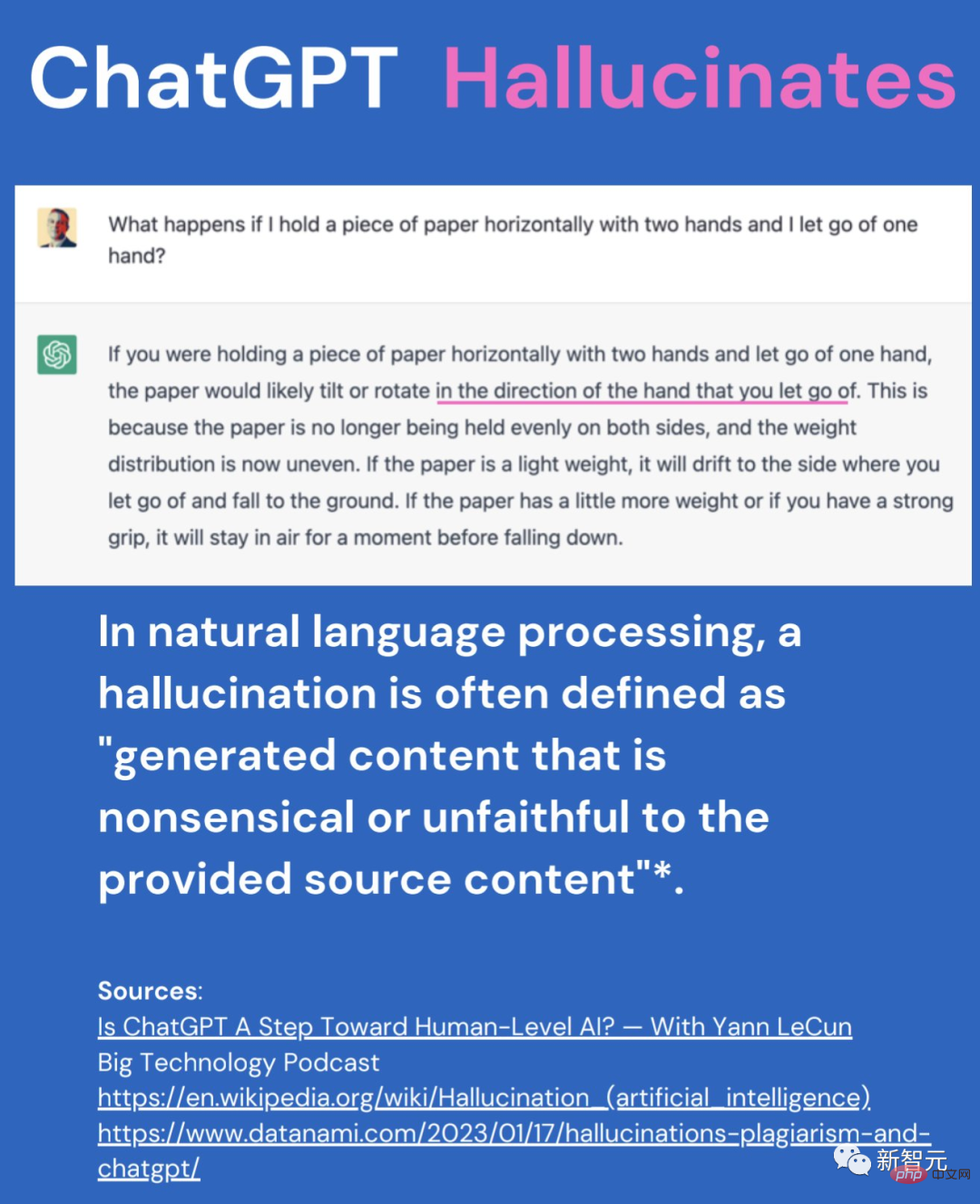

On January 28, LeCun tweeted: "Large language models have no physical intuition; they are trained on text. If they can retrieve the answers to similar questions from their huge associative memory, they might get physical-intuition questions right. But their answers might also be completely wrong."

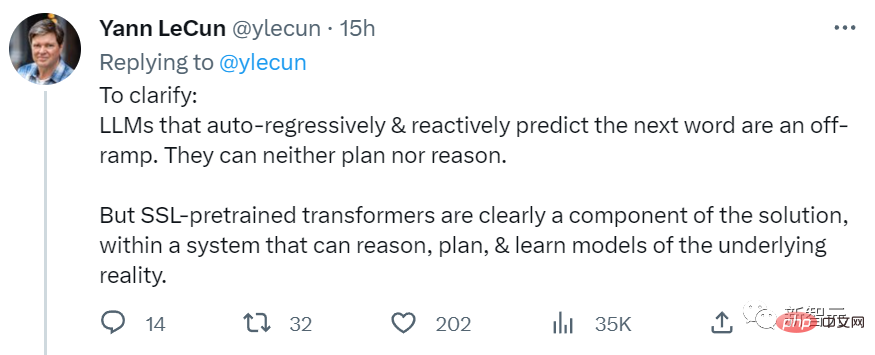

LeCun later added: "LLMs that rely on autoregression and next-word prediction are the wrong road, because they can neither plan nor reason."

"But SSL-pretrained Transformers are the solution, because the real-world systems they are embedded in have the ability to reason, plan and learn."
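To make "autoregressive next-word prediction" concrete, here is a toy sketch. It is a bigram word counter with greedy decoding, far simpler than ChatGPT (which conditions on the whole preceding text with a Transformer), and the function names are hypothetical. It only illustrates the loop LeCun is criticizing: predict one word, append it, repeat, with no plan for where the sentence is going.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, which words follow it in a tiny toy corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], max_len: int = 10) -> list[str]:
    """Greedy autoregressive decoding.

    Each step looks only at what has already been emitted (here just the
    last word), picks the most frequent continuation, and feeds it back in.
    Nothing in the loop plans ahead or checks whether the output is true.
    """
    out = ["<s>"]
    for _ in range(max_len):
        followers = counts.get(out[-1])
        if not followers:
            break
        nxt = followers.most_common(1)[0][0]  # ties go to the first-seen word
        if nxt == "</s>":
            break
        out.append(nxt)
    return out[1:]  # drop the start marker
```

With the corpus `["the cat sat on the mat", "the cat ate the fish"]`, `generate(train_bigram(corpus))` produces "the cat sat on the cat sat on the cat": every local step is a plausible next word, yet the whole is going nowhere. Real LLMs are vastly better at local fluency, but the generation loop has the same shape.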

LeCun gave an interesting example: during a podcast appearance, he showed an answer from ChatGPT that seemed reasonable but was completely wrong. Yet after reading it, the host did not immediately realize it was wrong.

LeCun explained: "The way we think and perceive the world allows us to anticipate what is about to happen. This is the basis of our common sense, and LLMs do not have this ability."

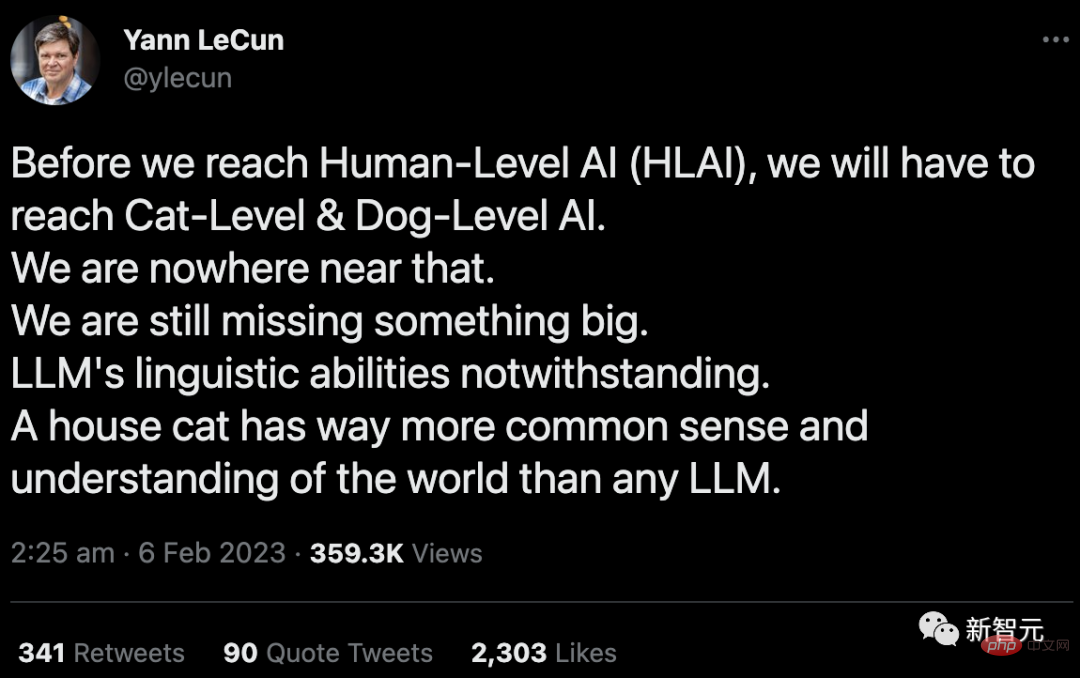

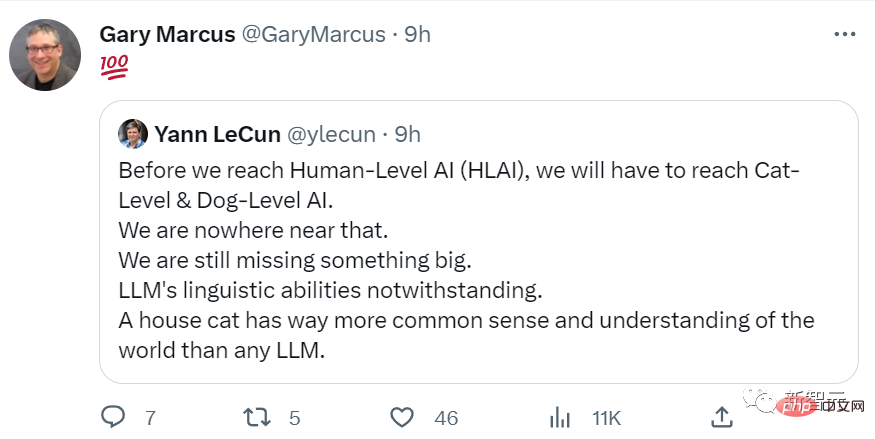

He tweeted again: "Before we can make human-level AI, we need to make cat- or dog-level AI, and right now we can't even do that. We're missing something very important. Even a pet cat has more common sense and understanding of the world than any large language model."

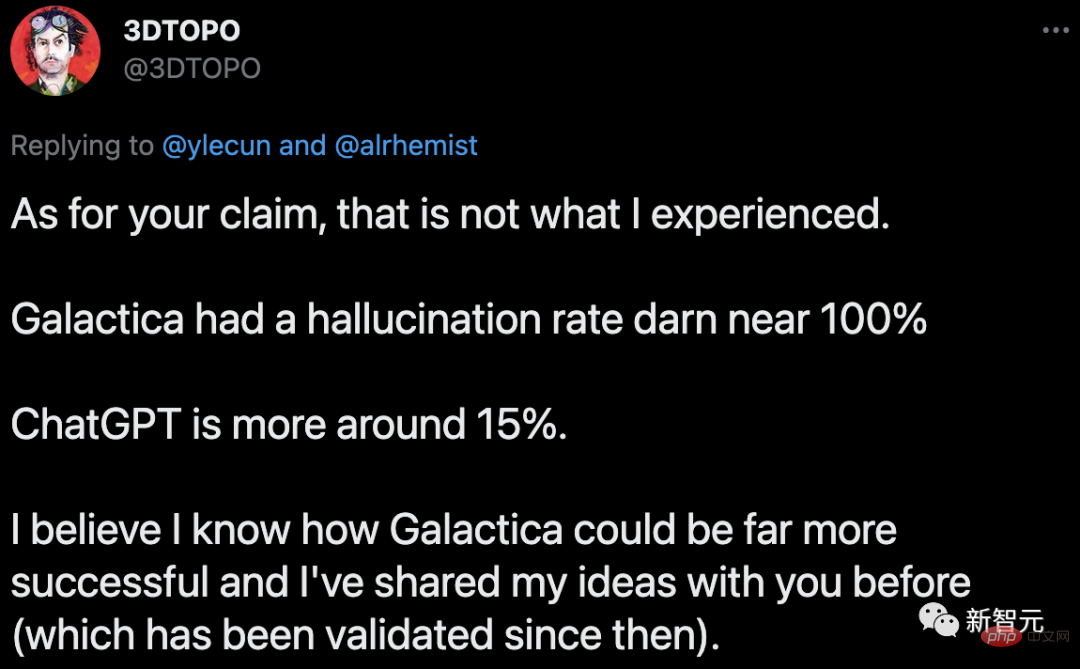

In the comments, some users criticized LeCun bluntly: "You're wrong. I tested it myself and found that Galactica's error rate is close to 100%, while ChatGPT's is around 15%."

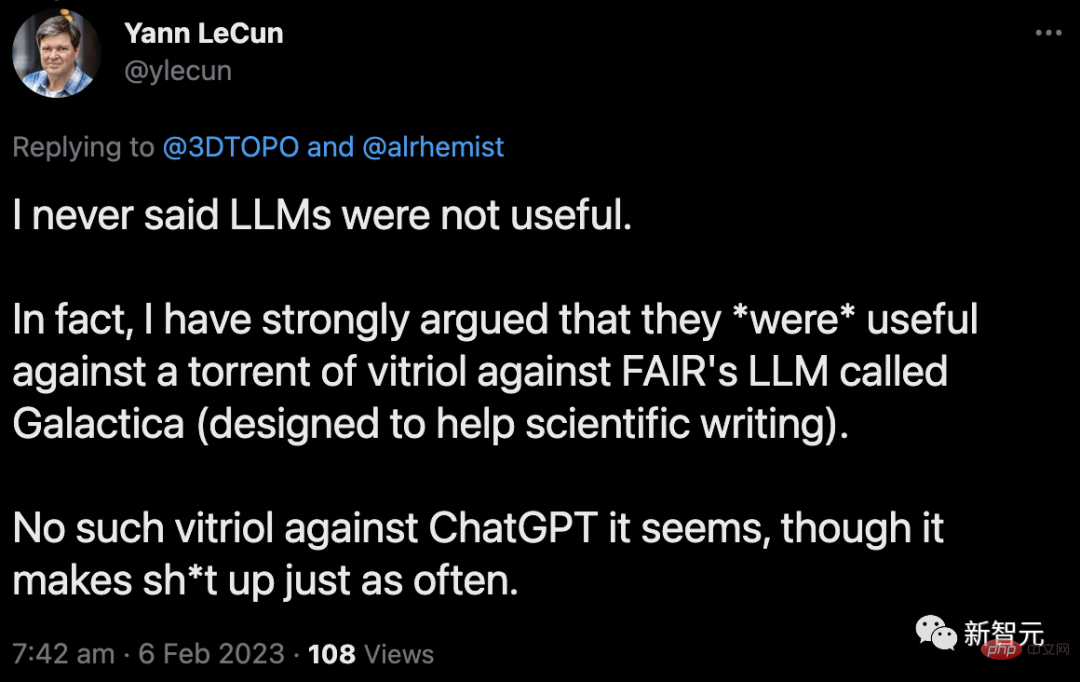

In response to the criticism, LeCun tweeted again to make his position clear: "I never said that large language models are useless. In fact, we at Meta also launched the Galactica model, but it was not as successful as ChatGPT. ChatGPT is full of nonsense, yet you are so tolerant of it, while my Galactica was out for only three days before you attacked it until it was taken offline."

Some users mocked him in the comments: "You're so great. Why don't you go back to the lab and build what you're talking about?"

LeCun replied: "Today is Sunday, and a big Twitter debate is my favorite weekend pastime."

It’s understandable that LeCun is so upset.

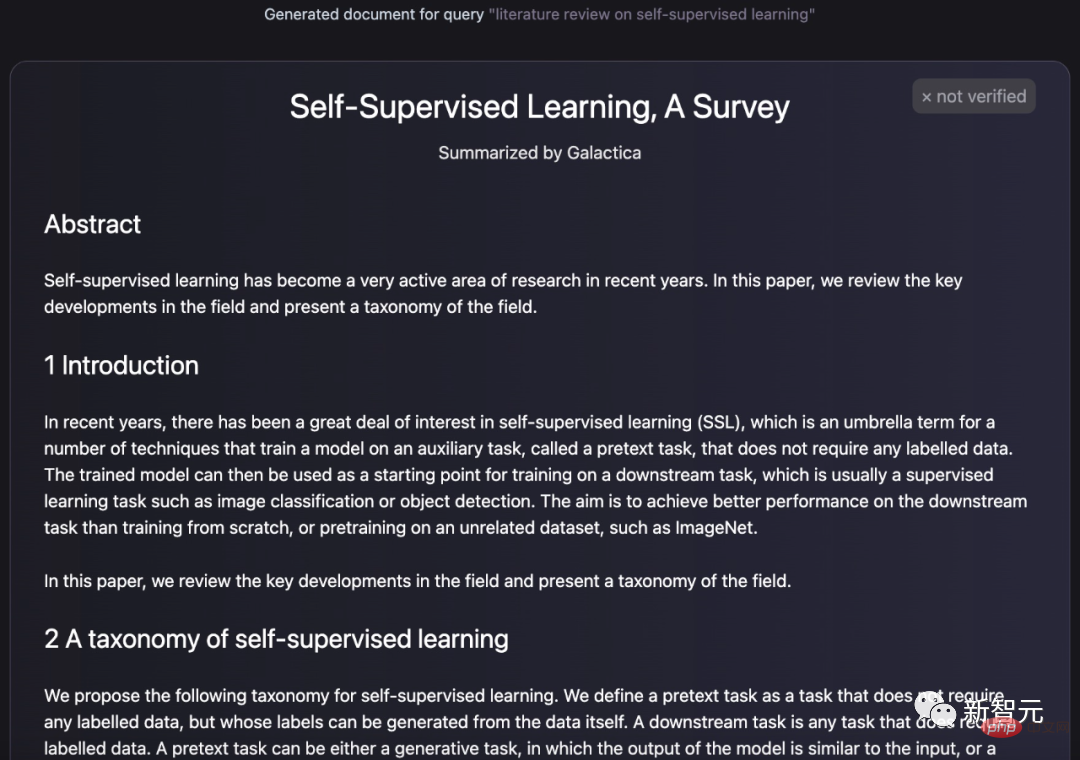

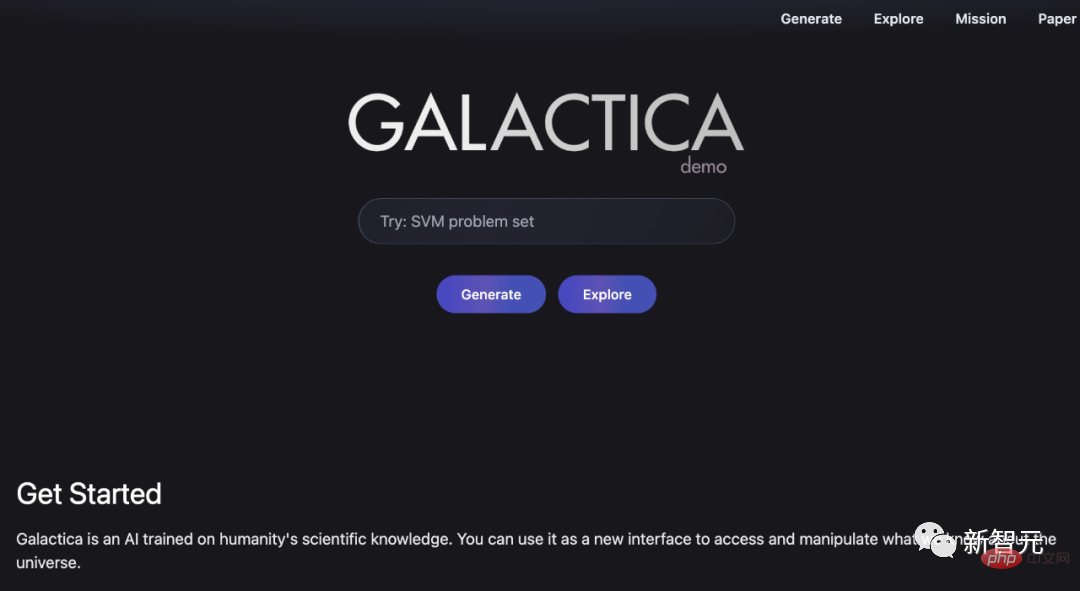

In mid-November last year, Meta's FAIR lab released Galactica, a model that can generate papers and encyclopedia entries, answer questions, and perform multimodal tasks involving chemical formulas and protein sequences.

A paper generated by Galactica

LeCun happily tweeted his praise, saying that the model was trained on academic literature and that, given a paragraph, it could generate a paper with a complete structure.

However, because Galactica produced so many falsehoods, users trolled it offline just three days after launch.

LeCun retweeted the announcement from Papers with Code and said, with evident resentment: "Now we can no longer have fun playing with Galactica. Are you happy?"

Although Galactica's demo was online for only a few days, users at the time reacted as if facing a formidable enemy.

Some users warned: think about what students will do with this "paper-writing" tool.

Others said, "Galactica's answers are full of errors and biases, but its tone is very confident and authoritative. This is terrifying."

Marcus also said that large language models like this could be used by students to fool their teachers, which is very worrying.

It all feels very familiar: weren't the panic and doubt Galactica caused exactly what ChatGPT later went through?

Watching history repeat itself with a completely different ending, it is hard to say that LeCun's bitterness comes from nowhere.

So why did ChatGPT grow ever more popular amid the doubts, while Galactica was attacked and taken offline?

First, Galactica came from Meta, and large companies do face more "reputational risk" than small startups like OpenAI.

In addition, OpenAI's product positioning was very smart. As the name ChatGPT suggests, its core concept is chatting.

You can chat with it about knowledge and papers, but since it is just a "chat", people naturally go easy on it. Who says a chat has to be "accurate" and "rigorous"?

Galactica is different. Its official description reads: "This is a model for science. It is an AI trained on humanity's scientific knowledge. You can use it as a new interface to access and manipulate what we know about the universe."

With that claim, Meta planted a big landmine under its own feet.

Although ChatGPT is not much of an innovation from a technical perspective, OpenAI has done a very good job from a product and operations perspective.

Why do LLMs talk nonsense?

So why do large language models talk nonsense?

In an article that LeCun liked, the author explained: "I have tried to use ChatGPT to help write blog posts, but every attempt ended in failure. The reason is simple: ChatGPT often produces many false 'facts'."

Keep in mind that LLMs are designed to sound like a human in conversation with other humans, and they achieve this goal well. But sounding natural and assessing the accuracy of information are two completely different things.

So, how to solve this problem?

For physics questions, for example, we could hand the problem to a machine that has already encoded an understanding of physics: a physics engine.

For the "fake paper problem", a similar fix is possible.

That is, make ChatGPT recognize when it has been asked about a scientific paper, or when it is writing something about one, and force it to consult a trusted database before continuing.
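A minimal sketch of this kind of graft, assuming a crude keyword detector and a hard-coded "trusted database". Everything here is hypothetical: `TRUSTED_PAPERS`, `PAPER_CUES`, `answer()` and the `llm` callable are illustrative stand-ins, not any real product's API, and a real system would use a proper classifier and bibliographic index.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical trusted database: lowercase title -> (display title, authors, year).
TRUSTED_PAPERS = {
    "attention is all you need": ("Attention Is All You Need", "Vaswani et al.", 2017),
}

# Hypothetical cue words; a crude stand-in for "recognizing" a paper question.
PAPER_CUES = ("paper", "citation", "journal", "arxiv")


@dataclass
class Answer:
    text: str
    source: str  # "database", "refused", or "llm"


def looks_like_paper_question(prompt: str) -> bool:
    """Keyword check; easy to fool, which is part of the article's point."""
    p = prompt.lower()
    return any(cue in p for cue in PAPER_CUES)


def answer(prompt: str, llm: Callable[[str], str]) -> Answer:
    """Route paper questions to the database; everything else goes to the LLM."""
    if looks_like_paper_question(prompt):
        p = prompt.lower()
        for key, (title, authors, year) in TRUSTED_PAPERS.items():
            if key in p:
                return Answer(f"'{title}' by {authors} ({year})", "database")
        # No verified match: refuse rather than let the model invent a citation.
        return Answer("No verified record found for that paper.", "refused")
    return Answer(llm(prompt), "llm")
```

For example, `answer("Who wrote the paper Attention Is All You Need?", llm)` is answered from the database without calling `llm` at all, while a question about an unknown paper is refused. Notice that the knowledge of which source is trustworthy lives entirely in the hand-written routing code, not in the model.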

But note that doing this means grafting a specific extra piece of "thinking" onto the LLM, and there is a whole host of special cases to account for. At that point, the human engineers know where the truth comes from, but the LLM does not.

Furthermore, as engineers graft on more and more of these fixes, it becomes increasingly clear that an LLM is not a form of artificial general intelligence.

Whatever human intelligence is, we know that it is more than just the ability to speak well.

Why do humans talk to each other, or write things for each other?

One purpose is to convey factual information directly, such as "I'm at the store" or "It's not plugged in", but this is far from the only reason we use language:

……

As you can see, the purposes of human communication are very diverse. Moreover, we usually do not state the purpose of a piece of writing within the writing itself, and authors and readers may perceive its role differently.

If ChatGPT is to become a trustworthy disseminator of facts, it may have to learn to distinguish among the various purposes of the human writing it was trained on.

That is, it will have to learn not to take bullshit seriously, to distinguish persuasion and propaganda from objective analysis, to judge a source's credibility independently of its popularity, and so on.

This is a very difficult trick even for humans. Studies have shown that false information spreads on Twitter many times faster than accurate information, because it is often more inflammatory, entertaining, or seemingly novel.

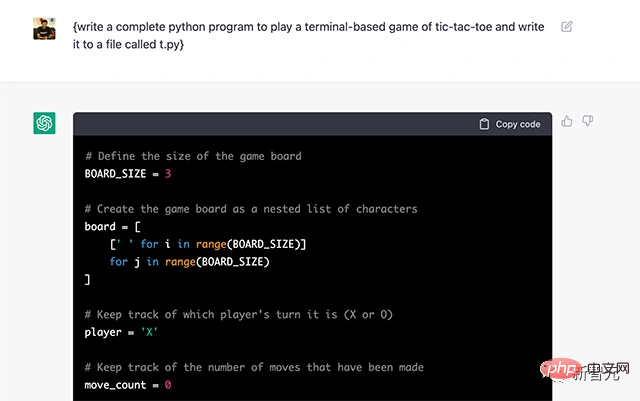

So the question is, why does generative artificial intelligence perform so well on computer code? Why doesn't accuracy in writing functional code translate into accuracy in communicating facts?

One possible answer is that computer code is functional, not communicative. Code with correct syntax will automatically perform some task, whereas a grammatically correct sentence does not necessarily accomplish anything.

Furthermore, it is easy to restrict a training corpus of computer code to "good" code, that is, code that performs its intended purpose perfectly. In contrast, it is nearly impossible to assemble a text corpus restricted to writing that successfully serves its purpose.

So, to become a trustworthy disseminator of facts, an LLM must accomplish a task far more difficult than learning to produce functional computer code.

I don't know how hard it would be for engineers to build an LLM that can distinguish fact from nonsense, but it is a difficult task even for humans.

Marcus: The Reconciliation of the Century

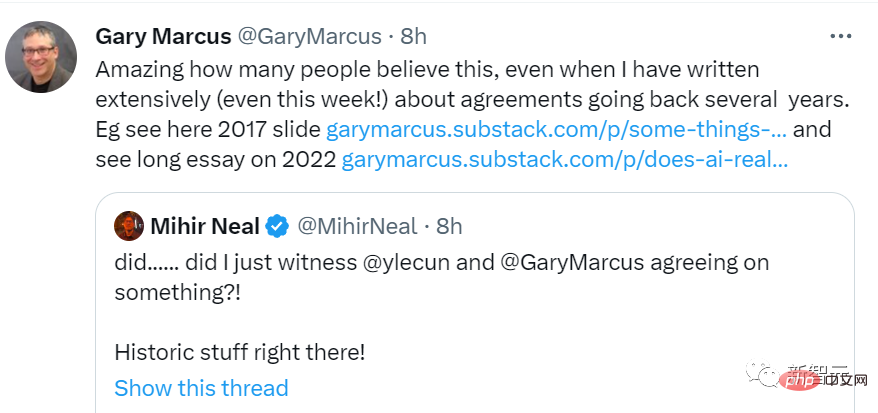

LeCun’s series of remarks made everyone wonder: Isn’t this what Marcus would say?

Eager onlookers tagged Marcus, looking forward to his sharp commentary on the matter.

Marcus, who has long been fed up with GPT, was naturally overjoyed and immediately retweeted LeCun's post with the comment "100 faint".

Marcus also wrote a blog post reviewing his "love-hate relationship" with LeCun.

Marcus said that he and LeCun had been friends for many years, and that the two became enemies over a few remarks he made about Galactica.

In fact, Marcus and LeCun have been feuding for several years, and not just because Galactica went offline.

Unlike the other two Turing Award winners, Bengio and Hinton, who keep a relatively low profile, LeCun has in recent years become well known in the AI community for being active on social media. Many of his group's papers are promoted on Twitter as soon as they are posted to arXiv.

Marcus, who is just as high-profile, has always treated Twitter as his home turf. When LeCun's promotion conflicts with Marcus's views, neither side holds back.

On social media, the two quarrel nearly every time their paths cross. They speak rudely to each other, and one might expect them to come to blows if they ever met in person.

Speaking of their grudge: after LeCun won the Turing Award with Hinton and Bengio in 2019, there was a group photo in which Marcus had been standing next to LeCun, but in the version LeCun shared, Marcus had been ruthlessly cropped out.

However, the birth of ChatGPT changed everything.

ChatGPT went viral, while Galactica was pulled after just three days online. Marcus was naturally delighted to see LeCun firing away at LLMs.

As the saying goes, the enemy of my enemy is my friend. Whether LeCun's remarks reflect an epiphany after his own product's failure or envy of a wildly successful competitor, Marcus is happy to add fuel to the fire.

Marcus believes he and LeCun agree on more than just the hype and limitations of LLMs: they both think Cicero deserves more attention.

Finally, Marcus tagged the person who "understands everything" and said, "It's time to provide welfare to the family."

It seems that Marcus is the ultimate winner.
