Another revolution in reinforcement learning! DeepMind proposes 'algorithm distillation': an explorable pre-trained reinforcement learning Transformer

In current sequence modeling tasks, the Transformer is arguably the most powerful neural network architecture, and a pre-trained Transformer can adapt to different downstream tasks through prompt conditioning or in-context learning.

The generalization ability of the large-scale pre-trained Transformer model has been verified in multiple fields, such as text completion, language understanding, image generation, etc.

Since last year, related work has shown that by treating offline reinforcement learning (offline RL) as a sequence prediction problem, a model can learn policies from offline data.

But current methods either learn a policy from data that contains no learning (such as a fixed expert policy obtained by distillation), or learn from data that does contain learning (such as an agent's replay buffer) but with a context too small to capture policy improvement.

DeepMind researchers observed that, in principle, the sequential nature of learning during RL algorithm training allows the reinforcement learning process itself to be modeled as a "causal sequence prediction problem".

Specifically, if a Transformer's context is long enough to include the policy improvement produced by learning updates, it should be able to represent not only a fixed policy but also a policy improvement operator, by attending to the states, actions, and rewards of previous episodes.

This suggests that, technically, any RL algorithm can be distilled through imitation learning into a sufficiently powerful sequence model and thereby turned into an in-context RL algorithm.

Based on this, DeepMind proposed Algorithm Distillation (AD), which distills reinforcement learning algorithms into neural networks by building a causal sequence model.

Paper link: https://arxiv.org/pdf/2210.14215.pdf

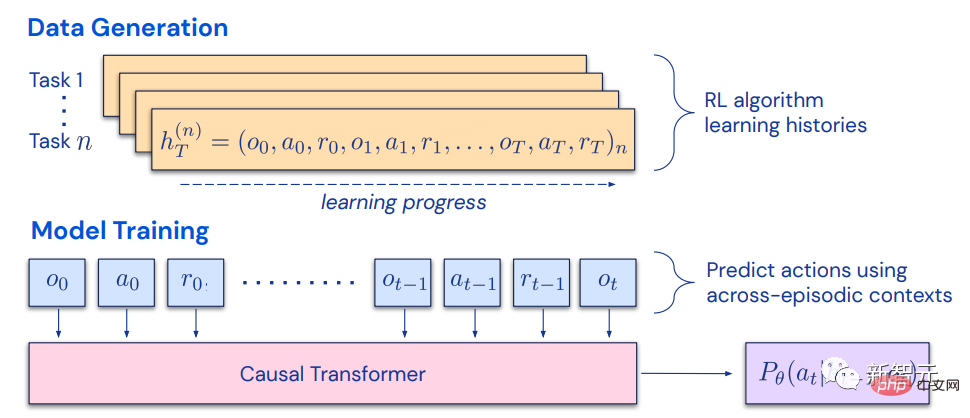

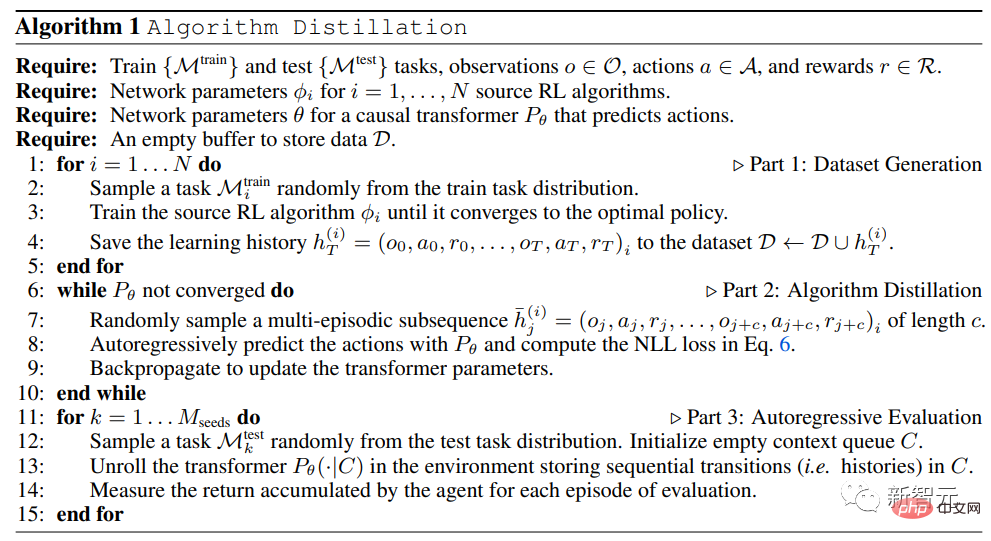

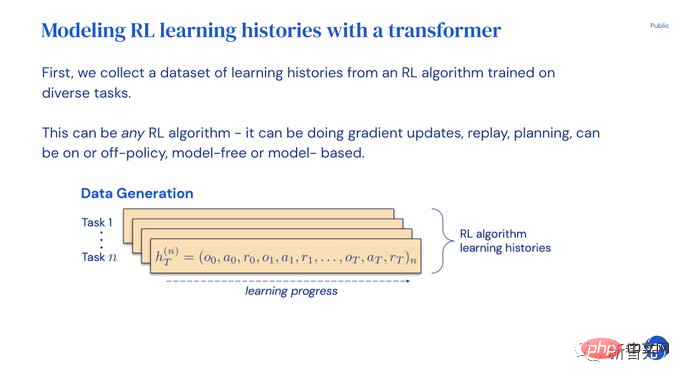

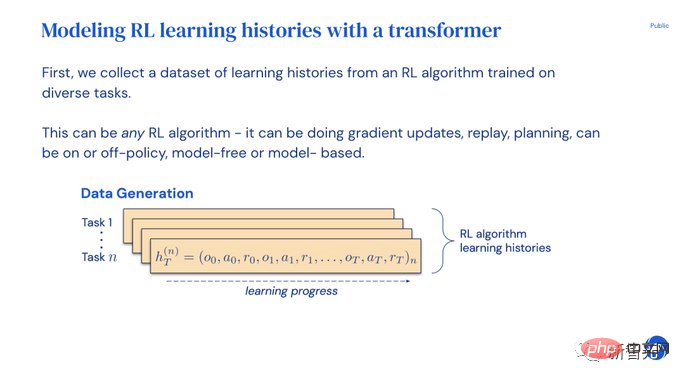

Algorithm Distillation treats learning to reinforcement-learn as a cross-episode sequence prediction problem: a source RL algorithm generates a dataset of learning histories, and a causal Transformer is then trained to autoregressively predict actions with the learning history as context.
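As a rough illustration of what "cross-episode sequence prediction" means for the data, the sketch below concatenates the episodes of one learning history in training order and turns each step into an action-prediction example. The function names, the `(observation, action, reward)` tuple layout, and the `context_len` parameter are assumptions made for this example, not the paper's data format.

```python
from typing import List, Tuple

# Hypothetical layout for one environment step: (observation, action, reward).
Transition = Tuple[int, int, float]


def flatten_history(episodes: List[List[Transition]]) -> List[Transition]:
    """Concatenate episodes, in training order, into one cross-episode sequence."""
    return [step for episode in episodes for step in episode]


def make_prediction_examples(history: List[Transition], context_len: int):
    """Build (context, current observation, target action) triples.

    The context holds up to `context_len` preceding transitions and may
    cross episode boundaries, which is the point of the exercise.
    """
    examples = []
    for t, (obs, action, _reward) in enumerate(history):
        start = max(0, t - context_len)
        examples.append((history[start:t], obs, action))
    return examples


# Two short episodes from the same (hypothetical) training run.
episodes = [[(0, 1, 0.0), (1, 0, 0.0)], [(0, 0, 1.0)]]
history = flatten_history(episodes)
examples = make_prediction_examples(history, context_len=2)
```

In this toy case the last example's context consists entirely of transitions from the earlier episode, so a model trained on it can only predict the action well by using what happened in previous episodes.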

Unlike sequential policy prediction architectures that distill post-learning or expert sequences, AD is able to improve its policy entirely in context without updating its network parameters.

- The Transformer collects its own data and maximizes rewards on new tasks;

- No prompting or fine-tuning required;

- With weights frozen, the Transformer can explore, exploit, and maximize return in context. Expert Distillation methods such as Gato cannot explore and cannot maximize returns.

The experimental results show that AD can perform in-context reinforcement learning in a variety of environments with sparse rewards, combinatorial task structure, and pixel-based observations, and that the RL algorithm AD learns is more data-efficient than the one that generated the source data.

AD is also the first method to demonstrate in-context reinforcement learning through sequence modeling of offline data with an imitation loss.

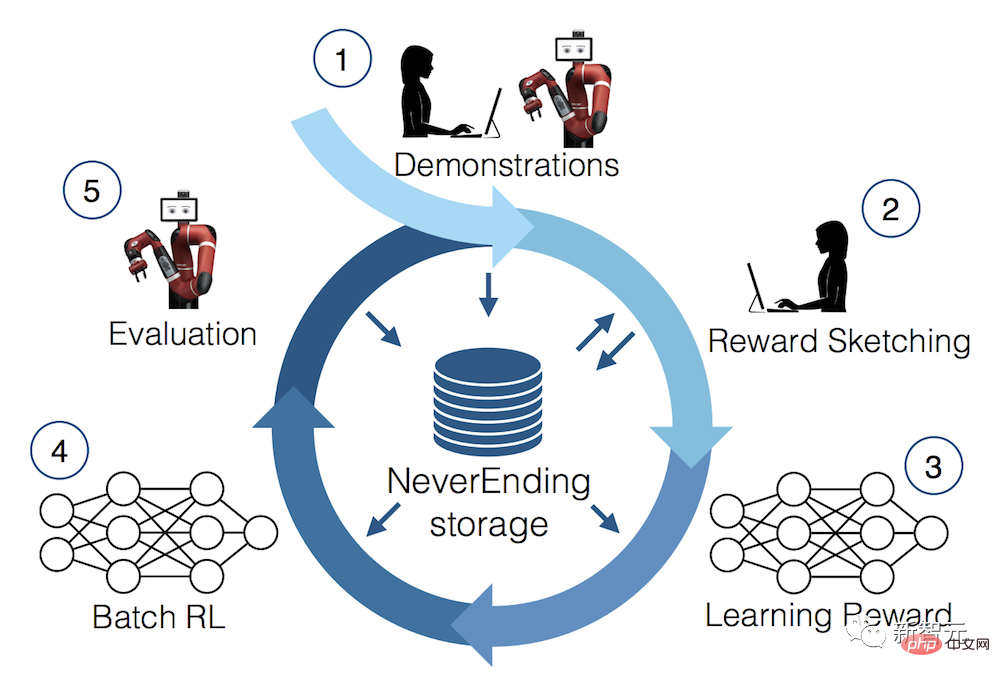

Algorithm Distillation

In 2021, researchers first found that a Transformer can learn a single-task policy from offline RL data through imitation learning; this was subsequently extended to extracting multi-task policies in both same-domain and cross-domain settings.

These works propose a promising paradigm for extracting general multi-task policies: first collect a large and diverse dataset of environment interactions, and then extract a policy from the data through sequence modeling.

The approach of learning policies from offline RL data through imitation learning is also called offline policy distillation, or simply Policy Distillation (PD).

Although the idea of PD is simple and scales easily, it has a major flaw: the resulting policy does not improve from additional interaction with the environment.

For example, Multi-Game Decision Transformer (MGDT) learned a return-conditioned policy that can play a large number of Atari games, while Gato learned a policy for solving tasks in different environments, but neither approach can improve its policy through trial and error.

MGDT adapts the Transformer to new tasks by fine-tuning the model weights, while Gato requires prompting with expert demonstrations to adapt to new tasks.

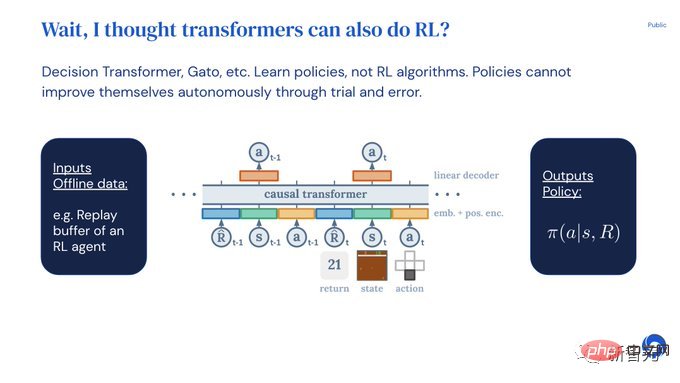

In short, the Policy Distillation method learns policies rather than reinforcement learning algorithms.

The researchers hypothesized that the reason Policy Distillation cannot improve through trial and error is that it is trained on data that does not show learning progress.

Algorithm Distillation (AD) is a method for learning an in-context policy improvement operator by optimizing a causal sequence prediction loss on the learning histories of an RL algorithm.
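One way to write such a causal sequence prediction loss is as a negative log-likelihood over the actions in the learning histories. The notation here is an assumption for illustration ($N$ histories of length $T$, with $o$, $a$, $r$ denoting observations, actions, and rewards, and $h_{t-1}$ the history up to the previous step); the paper should be consulted for its exact formulation.

```latex
\mathcal{L}(\theta)
  = -\sum_{n=1}^{N} \sum_{t=1}^{T}
    \log P_\theta\!\left(A = a_t^{(n)} \,\middle|\, h_{t-1}^{(n)},\, o_t^{(n)}\right),
\qquad
h_{t-1}^{(n)} = \left(o_0^{(n)}, a_0^{(n)}, r_0^{(n)}, \ldots,
                      o_{t-1}^{(n)}, a_{t-1}^{(n)}, r_{t-1}^{(n)}\right)
```

Because $h_{t-1}$ runs across episode boundaries, minimizing this loss rewards the model for tracking how the source algorithm's behavior changes over training rather than for copying a single fixed policy.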

##AD includes two components:

1. By saving The training history of an RL algorithm on many separate tasks generates a large multi-task data set;

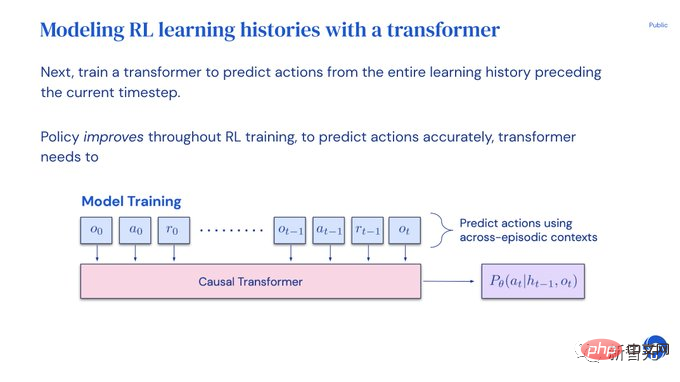

#2. Transformer uses the previous learning history as its background to construct causality for actions. mold.

Because the policy continues to improve throughout the training process of the source RL algorithm, AD must learn how to improve the operator in order to accurately simulate the actions at any given point in the training history.

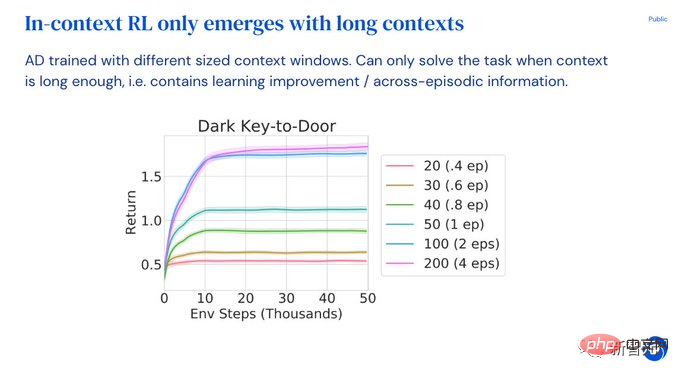

Most importantly, the Transformer's context must be large enough (i.e., span multiple episodes) to capture the improvement in the training data.

In the experiments, to explore AD's in-context RL capabilities, the researchers focused on environments that cannot be solved by zero-shot generalization after pre-training: each environment must support many tasks, and the model must not be able to easily infer the solution to a task from observations. In addition, episodes need to be short enough that a cross-episode causal Transformer can feasibly be trained.

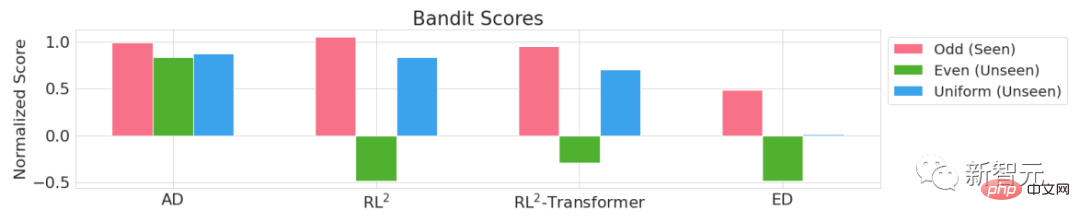

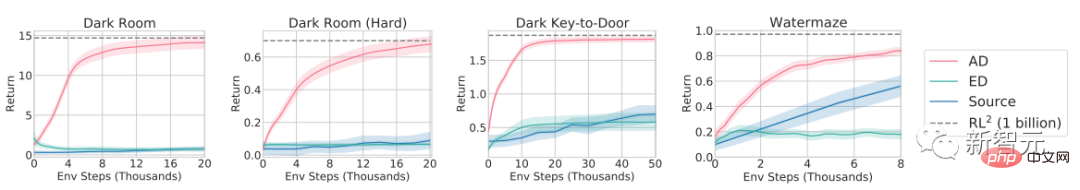

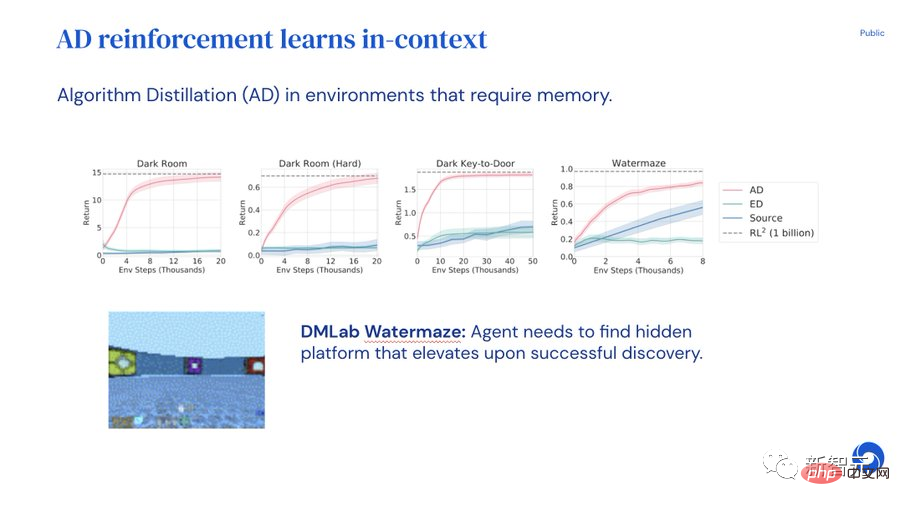

As the experimental results in four environments (Adversarial Bandit, Dark Room, Dark Key-to-Door, and DMLab Watermaze) show, by imitating gradient-based RL algorithms with a causal Transformer whose context is large enough, AD can reinforcement-learn new tasks entirely in context.

AD is capable of in-context exploration, temporal credit assignment, and generalization. The algorithm AD learns is more data-efficient than the algorithm that generated the Transformer's training data.

PPT explanation

To make the paper easier to understand, Michael Laskin, one of its authors, posted a slide-style explanation on Twitter.

Experiments on Algorithm Distillation show that a Transformer can improve itself through trial and error without weight updates, prompting, or fine-tuning. A single Transformer can collect its own data and maximize rewards on new tasks.

Although many successful models show how Transformers learn in context, Transformers had not yet been shown to perform reinforcement learning in context.

To adapt to new tasks, developers need to either manually specify a prompt or fine-tune the model.

Wouldn't it be great if a Transformer could adapt through reinforcement learning and be used out of the box?

But Decision Transformers or Gato can only learn policies from offline data and cannot automatically improve through repeated trial and error.

Transformers generated using the pre-training method of algorithmic distillation (AD) can perform reinforcement learning in context.

First train multiple copies of a reinforcement learning algorithm to solve different tasks and save the learning history.

Once the learning-history dataset has been collected, a Transformer can be trained to predict actions given the preceding learning history.

Since policies have improved historically, accurately predicting actions will force the Transformer to model policy improvements.
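The data-collection step can be sketched in a few lines. The example below is a minimal illustration only: it uses UCB1 on randomly sampled Bernoulli bandits as the source algorithm, and the function names, the dictionary layout, and the task distribution are all assumptions for this example rather than the authors' setup.

```python
import math
import random


def run_ucb(arm_probs, num_steps, rng):
    """Run UCB1 on one Bernoulli bandit and return its full learning history."""
    num_arms = len(arm_probs)
    counts = [0] * num_arms
    sums = [0.0] * num_arms
    history = []  # list of (action, reward), in the order they were experienced
    for t in range(num_steps):
        if t < num_arms:
            arm = t  # pull every arm once before using confidence bounds
        else:
            arm = max(
                range(num_arms),
                key=lambda a: sums[a] / counts[a]
                + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < arm_probs[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
        history.append((arm, reward))
    return history


def collect_histories(num_tasks, num_arms, num_steps, seed=0):
    """Run one copy of the source algorithm per task and save every history."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(num_tasks):
        arm_probs = [rng.random() for _ in range(num_arms)]  # a new task
        dataset.append(
            {"arm_probs": arm_probs, "history": run_ucb(arm_probs, num_steps, rng)}
        )
    return dataset


dataset = collect_histories(num_tasks=3, num_arms=4, num_steps=50)
```

Each saved history starts with near-random pulls and tends to drift toward the better arms, so a sequence model that predicts the next action from the preceding history has to capture that drift, not just the final behavior.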

The whole process is that simple. The Transformer is trained only by imitating actions: there are no Q-values as in common reinforcement learning models, no long operation-action-reward sequences, and no return conditioning as in DTs.

In-context reinforcement learning comes with no additional overhead. The model is then evaluated by checking whether AD can maximize reward on new tasks.

While the Transformer explores, exploits, and maximizes return in context, its weights are frozen!

Expert Distillation (most similar to Gato), on the other hand, cannot explore nor maximize returns.
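The evaluation loop described here can be sketched as follows. The key property is that nothing is updated except the context: the policy is called with the growing history and its own freshly collected data is appended after every step. The `stand_in_policy` below is a hand-written placeholder so that the example runs; it is not a trained Transformer, and all names and the toy bandit are assumptions for illustration.

```python
from typing import Callable, List, Tuple

Step = Tuple[int, float]  # (action, reward)


def evaluate_in_context(
    policy: Callable[[List[Step]], int],
    pull: Callable[[int], float],
    num_steps: int,
    context_len: int,
) -> List[float]:
    """Roll out a frozen policy; only the context changes between steps.

    Assumes context_len >= 1.
    """
    context: List[Step] = []
    rewards: List[float] = []
    for _ in range(num_steps):
        action = policy(context[-context_len:])  # condition on recent history
        reward = pull(action)                    # the model collects its own data
        context.append((action, reward))         # no gradient step, no fine-tuning
        rewards.append(reward)
    return rewards


def stand_in_policy(context: List[Step], num_arms: int = 3) -> int:
    """Placeholder for the frozen model: try each arm once, then act greedily."""
    tried = {action for action, _ in context}
    for arm in range(num_arms):
        if arm not in tried:
            return arm
    totals = [0.0] * num_arms
    counts = [0] * num_arms
    for action, reward in context:
        totals[action] += reward
        counts[action] += 1
    return max(range(num_arms), key=lambda a: totals[a] / counts[a])


# Deterministic toy bandit so the rollout is easy to follow.
arm_rewards = [0.1, 0.9, 0.5]
rewards = evaluate_in_context(
    stand_in_policy, lambda a: arm_rewards[a], num_steps=10, context_len=20
)
```

With this placeholder, the rollout tries the three arms once and then stays on the best one. If `context_len` is made shorter than the rollout, early pulls fall out of the window and the placeholder starts re-exploring, a small-scale reminder of why the context has to be long enough.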

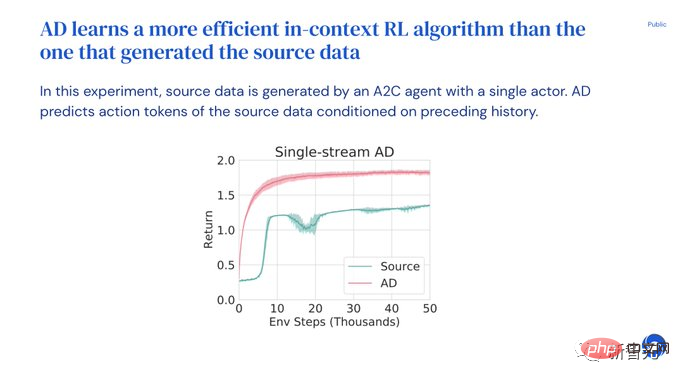

AD can distill any RL algorithm. The researchers tried UCB, DQN, and A2C. An interesting finding is that the RL algorithm AD learns in context is more data-efficient.

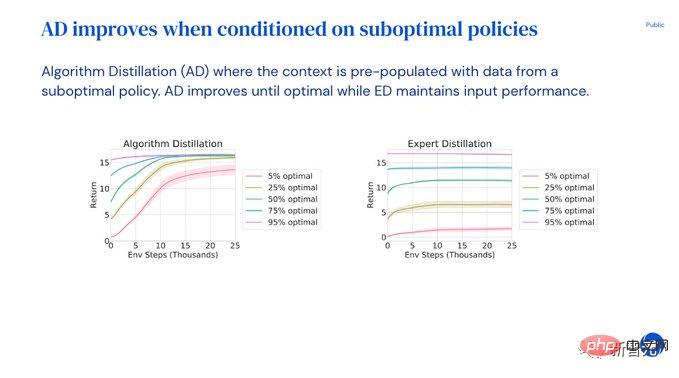

Users can also prompt the model with a suboptimal demonstration, and the model will automatically improve the policy until it reaches the optimal solution!

In contrast, Expert Distillation (ED) only maintains the performance of the sub-optimal demonstration.

In-context RL only emerges when the Transformer's context is long enough to span multiple episodes.

AD requires a long enough history to effectively improve the policy and identify the task.

Through experiments, the researchers came to the following conclusions:

- Transformers can perform RL in context

- The in-context RL algorithm learned with AD is more data-efficient than the gradient-based source RL algorithm

- AD can improve on a sub-optimal policy

- In-context reinforcement learning emerges from long-context imitation learning

The above is the detailed content of Another revolution in reinforcement learning! DeepMind proposes 'algorithm distillation': an explorable pre-trained reinforcement learning Transformer. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

0.What does this article do? We propose DepthFM: a versatile and fast state-of-the-art generative monocular depth estimation model. In addition to traditional depth estimation tasks, DepthFM also demonstrates state-of-the-art capabilities in downstream tasks such as depth inpainting. DepthFM is efficient and can synthesize depth maps within a few inference steps. Let’s read about this work together ~ 1. Paper information title: DepthFM: FastMonocularDepthEstimationwithFlowMatching Author: MingGui, JohannesS.Fischer, UlrichPrestel, PingchuanMa, Dmytr

Abandon the encoder-decoder architecture and use the diffusion model for edge detection, which is more effective. The National University of Defense Technology proposed DiffusionEdge

Feb 07, 2024 pm 10:12 PM

Abandon the encoder-decoder architecture and use the diffusion model for edge detection, which is more effective. The National University of Defense Technology proposed DiffusionEdge

Feb 07, 2024 pm 10:12 PM

Current deep edge detection networks usually adopt an encoder-decoder architecture, which contains up and down sampling modules to better extract multi-level features. However, this structure limits the network to output accurate and detailed edge detection results. In response to this problem, a paper on AAAI2024 provides a new solution. Thesis title: DiffusionEdge:DiffusionProbabilisticModelforCrispEdgeDetection Authors: Ye Yunfan (National University of Defense Technology), Xu Kai (National University of Defense Technology), Huang Yuxing (National University of Defense Technology), Yi Renjiao (National University of Defense Technology), Cai Zhiping (National University of Defense Technology) Paper link: https ://ar

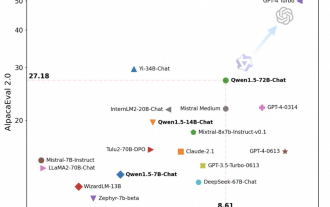

Tongyi Qianwen is open source again, Qwen1.5 brings six volume models, and its performance exceeds GPT3.5

Feb 07, 2024 pm 10:15 PM

Tongyi Qianwen is open source again, Qwen1.5 brings six volume models, and its performance exceeds GPT3.5

Feb 07, 2024 pm 10:15 PM

In time for the Spring Festival, version 1.5 of Tongyi Qianwen Model (Qwen) is online. This morning, the news of the new version attracted the attention of the AI community. The new version of the large model includes six model sizes: 0.5B, 1.8B, 4B, 7B, 14B and 72B. Among them, the performance of the strongest version surpasses GPT3.5 and Mistral-Medium. This version includes Base model and Chat model, and provides multi-language support. Alibaba’s Tongyi Qianwen team stated that the relevant technology has also been launched on the Tongyi Qianwen official website and Tongyi Qianwen App. In addition, today's release of Qwen 1.5 also has the following highlights: supports 32K context length; opens the checkpoint of the Base+Chat model;

Large models can also be sliced, and Microsoft SliceGPT greatly increases the computational efficiency of LLAMA-2

Jan 31, 2024 am 11:39 AM

Large models can also be sliced, and Microsoft SliceGPT greatly increases the computational efficiency of LLAMA-2

Jan 31, 2024 am 11:39 AM

Large language models (LLMs) typically have billions of parameters and are trained on trillions of tokens. However, such models are very expensive to train and deploy. In order to reduce computational requirements, various model compression techniques are often used. These model compression techniques can generally be divided into four categories: distillation, tensor decomposition (including low-rank factorization), pruning, and quantization. Pruning methods have been around for some time, but many require recovery fine-tuning (RFT) after pruning to maintain performance, making the entire process costly and difficult to scale. Researchers from ETH Zurich and Microsoft have proposed a solution to this problem called SliceGPT. The core idea of this method is to reduce the embedding of the network by deleting rows and columns in the weight matrix.

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Boston Dynamics Atlas officially enters the era of electric robots! Yesterday, the hydraulic Atlas just "tearfully" withdrew from the stage of history. Today, Boston Dynamics announced that the electric Atlas is on the job. It seems that in the field of commercial humanoid robots, Boston Dynamics is determined to compete with Tesla. After the new video was released, it had already been viewed by more than one million people in just ten hours. The old people leave and new roles appear. This is a historical necessity. There is no doubt that this year is the explosive year of humanoid robots. Netizens commented: The advancement of robots has made this year's opening ceremony look like a human, and the degree of freedom is far greater than that of humans. But is this really not a horror movie? At the beginning of the video, Atlas is lying calmly on the ground, seemingly on his back. What follows is jaw-dropping

Kuaishou version of Sora 'Ke Ling' is open for testing: generates over 120s video, understands physics better, and can accurately model complex movements

Jun 11, 2024 am 09:51 AM

Kuaishou version of Sora 'Ke Ling' is open for testing: generates over 120s video, understands physics better, and can accurately model complex movements

Jun 11, 2024 am 09:51 AM

What? Is Zootopia brought into reality by domestic AI? Exposed together with the video is a new large-scale domestic video generation model called "Keling". Sora uses a similar technical route and combines a number of self-developed technological innovations to produce videos that not only have large and reasonable movements, but also simulate the characteristics of the physical world and have strong conceptual combination capabilities and imagination. According to the data, Keling supports the generation of ultra-long videos of up to 2 minutes at 30fps, with resolutions up to 1080p, and supports multiple aspect ratios. Another important point is that Keling is not a demo or video result demonstration released by the laboratory, but a product-level application launched by Kuaishou, a leading player in the short video field. Moreover, the main focus is to be pragmatic, not to write blank checks, and to go online as soon as it is released. The large model of Ke Ling is already available in Kuaiying.

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

I cry to death. The world is madly building big models. The data on the Internet is not enough. It is not enough at all. The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these data voracious eaters. This problem is particularly prominent in multi-modal tasks. At a time when nothing could be done, a start-up team from the Department of Renmin University of China used its own new model to become the first in China to make "model-generated data feed itself" a reality. Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself. What is a model? Awaker 1.0, a large multi-modal model that just appeared on the Zhongguancun Forum. Who is the team? Sophon engine. Founded by Gao Yizhao, a doctoral student at Renmin University’s Hillhouse School of Artificial Intelligence.

LLaVA-1.6, which catches up with Gemini Pro and improves reasoning and OCR capabilities, is too powerful

Feb 01, 2024 pm 04:51 PM

LLaVA-1.6, which catches up with Gemini Pro and improves reasoning and OCR capabilities, is too powerful

Feb 01, 2024 pm 04:51 PM

In April last year, researchers from the University of Wisconsin-Madison, Microsoft Research, and Columbia University jointly released LLaVA (Large Language and Vision Assistant). Although LLaVA is only trained with a small multi-modal instruction data set, it shows very similar inference results to GPT-4 on some samples. Then in October, they launched LLaVA-1.5, which refreshed the SOTA in 11 benchmarks with simple modifications to the original LLaVA. The results of this upgrade are very exciting, bringing new breakthroughs to the field of multi-modal AI assistants. The research team announced the launch of LLaVA-1.6 version, targeting reasoning, OCR and