Compared with generative models, why does robotics research still use the same old methods from a few years ago?

Robotics has made remarkable progress, and these advances suggest that robots will be able to do much more in the future. But there is also something troubling: compared with generative models, progress in robotics still lags behind, and the emergence of models such as GPT-3 has made this gap even more prominent.

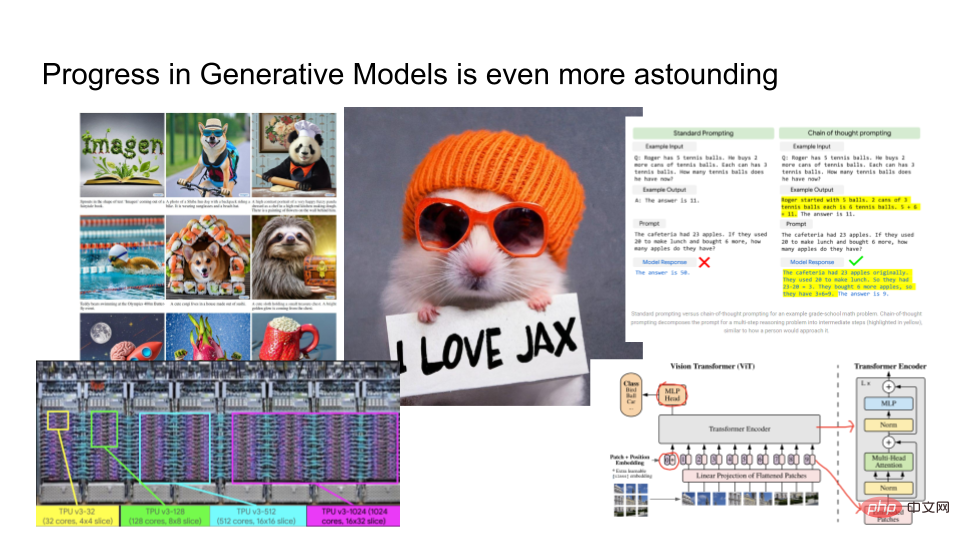

Generative models produce shockingly good results. The left side of the picture above shows output from Imagen, released by Google. You give it a piece of text, such as "A hamster wearing an orange hat and holding a piece of paper saying I love JAX," and Imagen renders a plausible image for it. Google also trained a large language model, PaLM, which can explain why jokes are funny, among other things. These models are trained on advanced hardware such as TPUv4, and in computer vision researchers are developing sophisticated architectures such as Vision Transformers.

Generative models are developing this rapidly. How does robotics compare, and what connects the two?

In this article, Eric Jang, Vice President of AI at the Norwegian robotics company Halodi Robotics, discusses "How do we make robots more like generative models?" The following is the main content of the article.

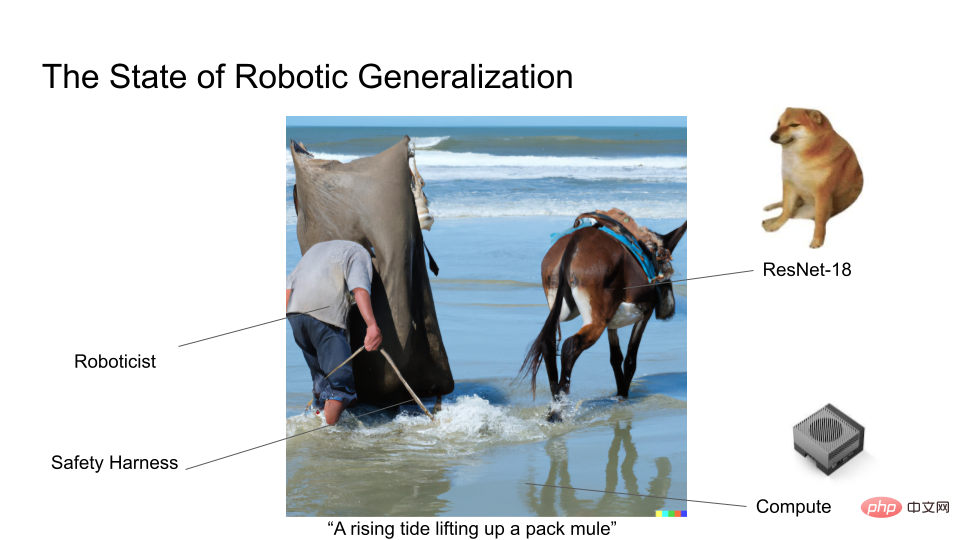

As a robotics researcher, I find the progress in generative models a bit enviable. In robotics, most researchers are probably still using ResNet18, a 7-year-old deep learning architecture. We certainly don't train models on huge datasets the way generative modeling does, so very few robotics research efforts make headlines.

We know about Moravec's Paradox: compared with cognitive tasks, dexterous manipulation is hard for robots. Yet intuitively, getting a robot to pick up and transport objects doesn't seem as impressive as turning words into images or explaining jokes.

First, let us define generative models. A generative model is more than something that renders images or generates reams of text. It is also a framework we can use to understand all of probabilistic machine learning. There are two core questions in generative modeling:

1. How many bits are there in the categories of data you want to model?

2. How well can you build the model?

AlexNet achieved a breakthrough in 2012. It predicts 1000 categories, and log2(1000 classes) is about 10 class bits. You can think of AlexNet as an image-conditioned generative model containing 10 bits of information. If you raise the difficulty of the modeling task to MS-COCO captioning, the model contains approximately 100 bits of information. If you are doing image generation, for example text-to-image with DALL-E or Imagen, the model contains approximately 1000 bits of information.

Usually, the more categories you model, the more computing power is needed to compute the conditional probabilities they contain. This is why models become huge as the number of categories increases. As we train larger and larger models, it becomes possible to exploit features in the data so that richer structure can be learned. This is why generative models and self-supervised learning have become popular ways to do deep learning on large amounts of input without extensive human labeling.
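The 10-bit figure for AlexNet can be checked with a one-line calculation. The sketch below is illustrative only and assumes every class is equally likely; the helper name `class_bits` is hypothetical, and the roughly 100-bit and 1000-bit figures for captioning and image generation are the article's own estimates rather than something derived here.

```python
import math


def class_bits(num_outcomes: int) -> float:
    """Bits needed to pick one outcome among equally likely alternatives."""
    return math.log2(num_outcomes)


# 1000-way classification, as in the 2012 ImageNet setting described above.
imagenet_bits = class_bits(1000)
print(f"1000 classes -> {imagenet_bits:.2f} bits")
```

Rounding the result gives the "about 10 bits" quoted in the text.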

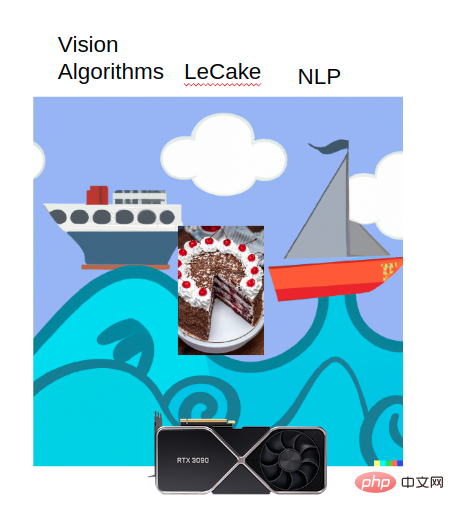

Rich Sutton pointed out in his essay "The Bitter Lesson" that most progress in artificial intelligence seems to have come from this computing boom, while little has come from other directions. Vision algorithms, NLP, and Yann LeCun's LeCake are all benefiting from this computing boom.

What does this trend tell us? If you have an over-parameterized model that can handle more data and capture all the features in the network, coupled with strong computing power and a good training objective, deep learning is almost always feasible.

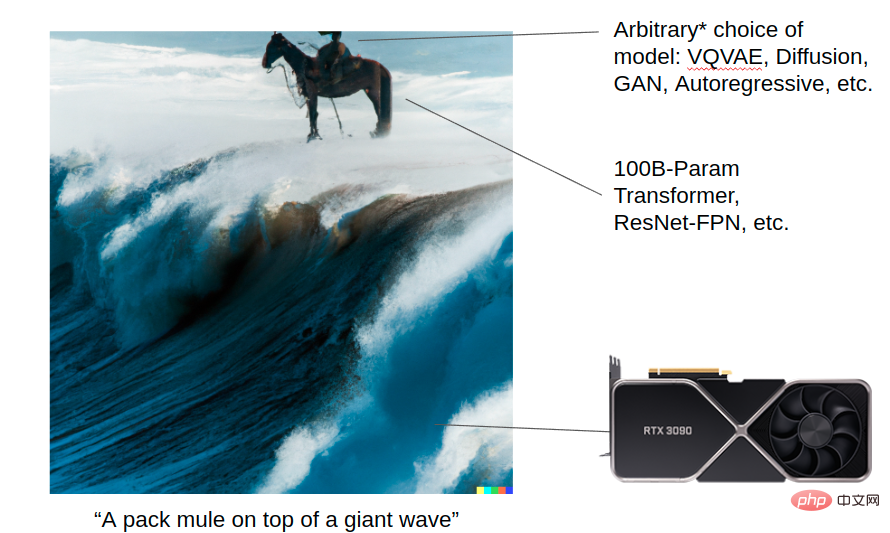

Ask DALL-E 2 to generate an image: a mule riding a huge wave. This picture shows how generative models achieve extraordinary results with the help of the computing boom. You have powerful architectures (Transformer, ResNet, etc.), and you can choose among VQ-VAE, diffusion, GAN, autoregressive and other algorithms for modeling. The details of each algorithm matter today, but they may matter less in the future once computers become powerful enough. In the long run, model scale and good architecture are the foundation for all these advances.

In contrast, the figure below shows the current status of generalization research in the field of robotics. Currently, many robotics researchers are still training small models and have not yet used Vision Transformer!

Everyone who does robotics research hopes that robots can be used more widely in the real world and play a greater role. Researchers in generative modeling face relatively few such obstacles, whereas robotics researchers routinely run into difficulties such as robot deployment and noisy data, which generative modeling researchers never encounter.

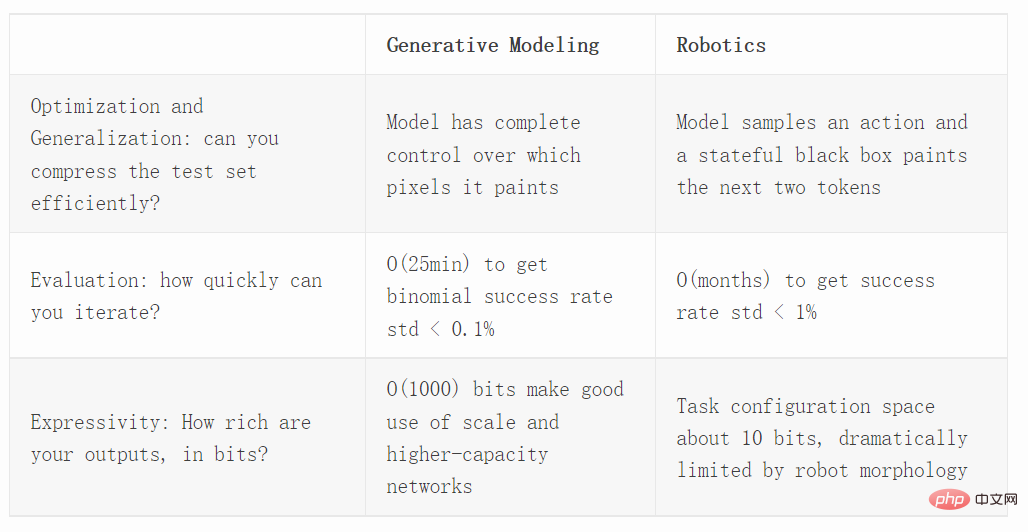

Next we compare generative models and robotics along three dimensions: optimization, evaluation, and expressivity.

Optimization

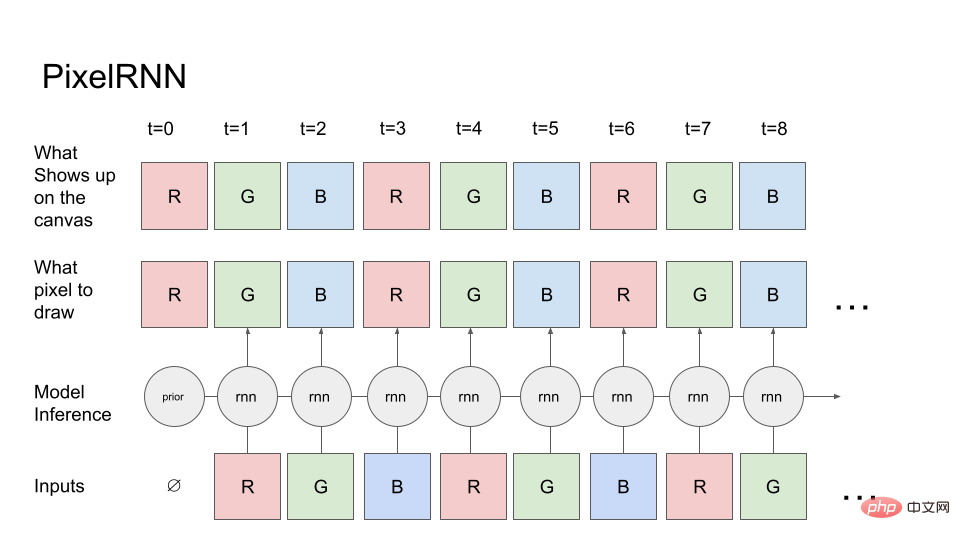

First let us look at a simple generative model: PixelRNN.

Starting from the red channel of the first pixel (whose prior probability is known), the model tells the canvas (top row) which pixel value it wants to draw. The canvas draws exactly as instructed: it copies the value onto the canvas, and the canvas is then read back into the model to predict the next channel, the green channel. The R and G values on the canvas are then fed back into the RNN, and so on, finally generating the sequence RGBRGBRGB...

In actual image generation tasks, diffusion models or transformers can be used. But for simplicity, we use only a forward-executing RNN.
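The draw-then-read-back loop described above can be sketched in a few lines. This is a toy illustration, not PixelRNN itself: `toy_model` is a hypothetical stand-in for the RNN that simply favors values close to the last one drawn, and the canvas is a flat list of channel values in R, G, B order.

```python
import random


def toy_model(canvas):
    """Hypothetical stand-in for the RNN: weights over the 256 channel values,
    conditioned on everything drawn so far (here, only the last value)."""
    prev = canvas[-1] if canvas else 128
    return [1.0 / (1 + abs(value - prev)) for value in range(256)]


def sample_image(num_pixels, rng):
    """Autoregressively sample a flat RGBRGB... sequence."""
    canvas = []
    for _ in range(num_pixels * 3):  # three channels per pixel
        weights = toy_model(canvas)  # the model reads the canvas back
        value = rng.choices(range(256), weights=weights, k=1)[0]
        canvas.append(value)  # the canvas copies the value exactly as instructed
    return canvas


image = sample_image(num_pixels=4, rng=random.Random(0))
print(image)
```

The property that matters for the comparison that follows is that nothing sits between the model's output and the canvas: what the model samples is exactly what gets drawn.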

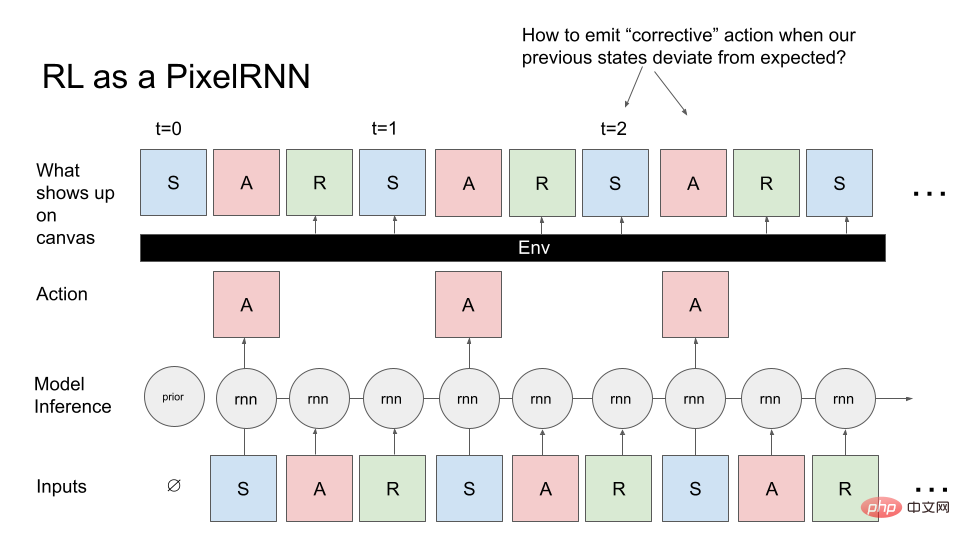

Now let's cast the general control problem as a PixelRNN. Instead of generating images, we want to generate MDPs (Markov Decision Processes): sequences of states, actions, and rewards. We want to generate an MDP that corresponds to an agent (such as a robot) performing some task. Here too we start from a prior: the model samples the initial state of the reinforcement learning (RL) environment. This is the first input to the model. The RNN samples the first pixel (A), and the canvas draws A exactly as instructed. However, unlike in image generation, where the canvas always returns the previous RNN output, here the next two pixels (R, S) are determined by the environment: it takes the action and all previous states and computes R and S somehow.

We can think of the RL environment as a painter object that carries out the RNN's actions: instead of drawing exactly what you want on the canvas, it draws the pixels using an arbitrarily complex function.

Compared with the PixelRNN that drew the image, this task is clearly more challenging, because when you try to sample the image you want, a black box stands in the way and makes it hard to draw the content.

A typical problem arises during drawing: if the environment draws an unexpected state, how do you issue corrective instructions so that you return to the image you are trying to draw? Additionally, unlike image generation, we have to generate the MDP image sequentially and cannot go back and edit it, which also poses optimization challenges.

If we want to understand how RL methods like PPO generalize, we should benchmark them outside of control environments: apply them to image generation and compare them with modern generative models. In their 2006 work, Hinton and Nair modeled MNIST digit synthesis with a system of springs. DeepMind replicated part of this image synthesis work using RL methods.
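The same loop, with the environment acting as the painter, might look like the sketch below. Everything here is hypothetical and chosen only to make the structure visible: `toy_policy` stands in for the RNN, and `black_box_env` is an arbitrary noisy function that decides the reward and next state on its own.

```python
import random


def toy_policy(canvas, rng):
    """Hypothetical stand-in for the RNN: choose an action given the history."""
    return rng.choice([-1, 1])


def black_box_env(state, action, rng):
    """Hypothetical painter: computes (reward, next_state) however it likes."""
    next_state = state + action + rng.choice([-1, 0, 1])  # drift the model did not ask for
    reward = -abs(next_state)  # staying near 0 is rewarded
    return reward, next_state


def sample_trajectory(num_steps, rng):
    state = rng.randint(-3, 3)  # initial state sampled from a prior
    canvas = [("S", state)]
    for _ in range(num_steps):
        action = toy_policy(canvas, rng)
        canvas.append(("A", action))  # drawn exactly as the model instructed
        reward, state = black_box_env(state, action, rng)
        canvas.append(("R", reward))  # drawn by the environment
        canvas.append(("S", state))   # drawn by the environment
    return canvas


trajectory = sample_trajectory(num_steps=5, rng=random.Random(0))
print(trajectory)
```

Only one entry in three on this canvas is under the model's control, and earlier entries cannot be edited once the environment has written them.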

Image generation is a great benchmark to study optimization and control because it really emphasizes the need to generalize across thousands of different scenarios.

Recent works such as Decision Transformer, Trajectory Transformer and Multi-Game Decision Transformer have shown that upside-down RL techniques generalize well. So how do upside-down RL techniques compare with online RL (PPO) or offline RL algorithms (CQL)? This is also easy to evaluate: we can estimate the density (the likelihood model of the expert's full observations) and check, by measuring test likelihood, whether a given choice of RL algorithm generalizes to a large number of images.

Evaluation

If we want to estimate the success rate of a robot on certain tasks, we can use the binomial distribution.

The variance of the estimated success rate is p(1-p)/N, where p is the sample mean (the estimated success rate) and N is the number of trials. In the worst case, p=50% (maximum variance), roughly 3000 samples are needed to get the standard deviation below 1%!
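A short calculation makes the sample-size requirement concrete. The sketch below uses only the formula p(1-p)/N from the text; the function names are hypothetical. Under this formula the 1% threshold is crossed at about 2,500 trials when p=50%, so the figure of 3000 quoted above leaves some margin.

```python
import math


def success_rate_std(p, num_trials):
    """Standard deviation of a success rate estimated from num_trials episodes."""
    return math.sqrt(p * (1 - p) / num_trials)


def trials_needed(p, target_std):
    """Smallest number of trials for which the standard deviation is at most target_std."""
    return math.ceil(p * (1 - p) / target_std ** 2)


for n in (10, 50, 1000, 3000):
    print(f"N={n:5d}  std={success_rate_std(0.5, n):.4f}")

print("trials for 1% std at p=0.5:", trials_needed(0.5, 0.01))
```

With only 10 trials the standard deviation is close to 16 percentage points, which is why small evaluation runs cannot resolve modest differences between policies.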

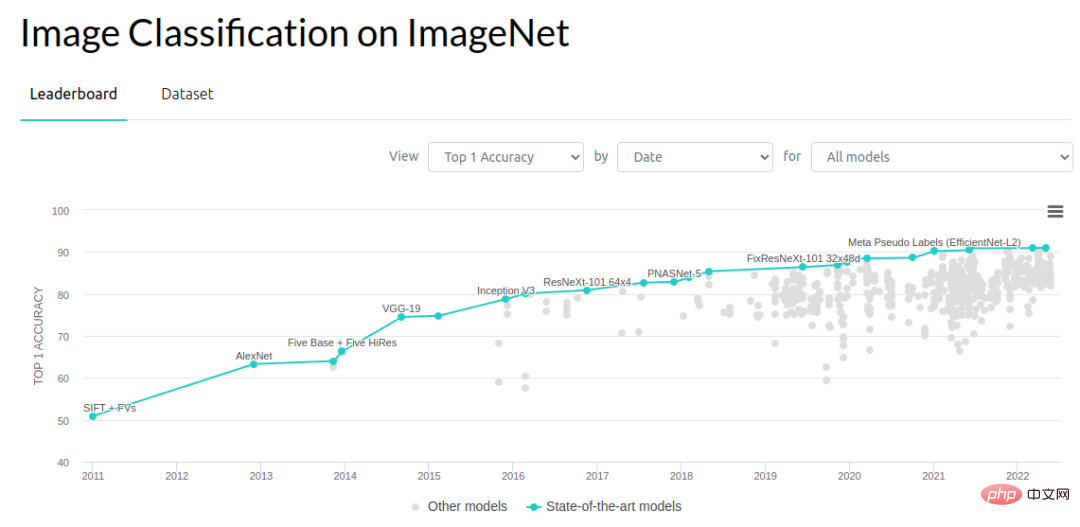

From a computer vision perspective, improvements in the range of 0.1-1% are an important driver of progress. The ImageNet object recognition problem has seen a lot of progress since 2012. The error rate fell by 3% from 2012 to 2014 and then by about 1% per year, with many people working on how to push it further. Benchmark improvement may have hit a bottleneck this year (2022), but in the seven years from 2012 to 2018 researchers made a great deal of progress.

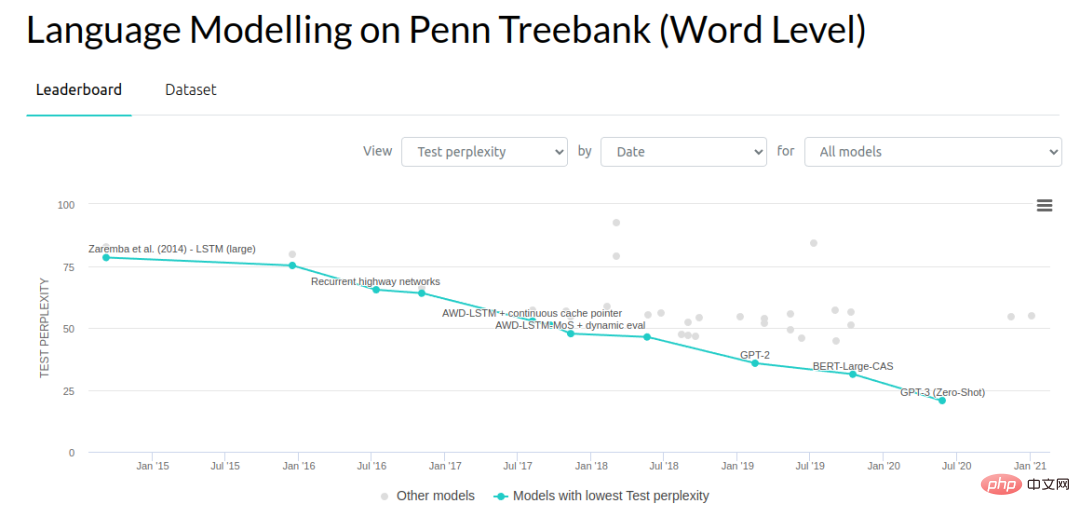

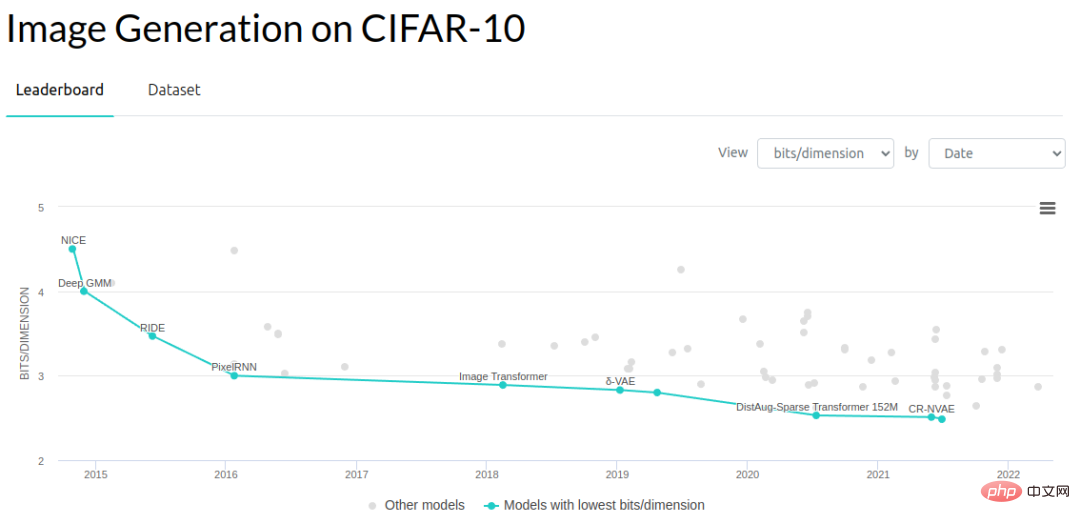

In other areas of generative modeling, researchers have been reducing the perplexity of language models and the number of bits per dimension (bits-per-dimension) of generative models of images.

The following is a rough comparison of evaluation speed across common benchmarks. The 2012 ImageNet object recognition test set has 150,000 images. Assuming an inference speed of 10ms per image and evaluating images one after another, it takes approximately 25 minutes to evaluate the whole test set (in practice evaluation is much faster because images can be batched). But here we assume a single robot performs the evaluation, so images must be processed sequentially.

Because there are so many images, we get a standard error estimate of about 0.1%. We don't actually need a standard error of 0.1% to make progress in this field; 1% may be enough.

In terms of evaluation complexity, end-to-end performance is also an important piece. Let's look at how to evaluate the end-to-end performance of a neural network on simulated tasks. Habitat Sim is one of the fastest simulators available and is designed to minimize the overhead between neural network inference and environment stepping. The simulator can run 10,000 steps per second, but since a forward pass of the neural network takes about 10ms, that bottleneck results in an evaluation time of 2 seconds per episode (assuming a typical navigation episode of 200 steps). This is much faster than running a real robot, but much slower than evaluating a single computer vision sample.
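Both ImageNet numbers can be reproduced from the figures given above. This is a back-of-the-envelope sketch: the constants come from the text, and the worst-case accuracy of 50% is an assumption used only to bound the standard error.

```python
import math

NUM_TEST_IMAGES = 150_000   # 2012 ImageNet test set size, from the text
INFERENCE_SECONDS = 0.010   # 10ms per image, evaluated one at a time

total_minutes = NUM_TEST_IMAGES * INFERENCE_SECONDS / 60
print(f"sequential evaluation time: {total_minutes:.0f} minutes")

# Worst-case standard error of an accuracy estimate (assumes p = 0.5).
worst_case_std = math.sqrt(0.5 * 0.5 / NUM_TEST_IMAGES)
print(f"worst-case standard error: {worst_case_std:.4%}")
```

The worst-case standard error comes out slightly above 0.1%, the same order of magnitude as the figure in the text.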

If one were to evaluate an end-to-end robotic system with a level of diversity similar to what we did with ImageNet, a typical evaluation would take 1 week to process hundreds of thousands of evaluation scenarios. This is not entirely a reasonable comparison, since each episode actually has around 200 inference passes, but we cannot treat the images within a single episode as independent validation sets. Without any other episode metric, we only know whether the task was successful, so all inference within the episode only contributes to a single sample of the binomial estimate. We have to estimate success rates based on tens of thousands of episodes rather than pictures. Of course, we could try to use other policy evaluation methods, but these algorithms are not reliable enough to work out of the box.
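The simulator estimate can be worked through the same way. In the sketch below the per-episode figures come from the text, while the count of 150,000 episodes is an assumption chosen to match the ImageNet test set size; the function names are hypothetical.

```python
STEPS_PER_EPISODE = 200         # typical navigation episode, from the text
INFERENCE_SECONDS = 0.010       # one neural network forward pass
SIM_STEPS_PER_SECOND = 10_000   # simulator stepping rate, from the text
SECONDS_PER_DAY = 86_400


def episode_seconds(steps, inference_s, sim_steps_per_s):
    """Wall-clock time of one episode when inference and stepping alternate."""
    return steps * (inference_s + 1.0 / sim_steps_per_s)


def evaluation_days(num_episodes, seconds_per_episode):
    """Days needed to run the episodes one after another."""
    return num_episodes * seconds_per_episode / SECONDS_PER_DAY


per_episode = episode_seconds(STEPS_PER_EPISODE, INFERENCE_SECONDS, SIM_STEPS_PER_SECOND)
days = evaluation_days(150_000, per_episode)  # assumed ImageNet-scale episode count
print(f"{per_episode:.2f} s per episode, {days:.1f} days in total")
```

Inference dominates the per-episode time; the simulator itself contributes only about 1% of it. Counting inference and stepping alone, the total lands at a few days of serial compute, the same order as the week quoted above.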

In the next stage, we evaluate the real robot in the field. In the real world each episode takes about 30 seconds to evaluate, and if a team of 10 operators performs evaluations, each of whom can complete 300 episodes per day, then about 3000 evaluations can be performed per day.

If it takes a full day to evaluate a model, this puts a huge limit on productivity, because you can only try one idea per day. So we can no longer work on small ideas that improve performance incrementally by 0.1%, nor on very extreme ideas. We have to find a way to make a big leap in performance. That sounds good, but it is difficult to do in practice.

When considering an iterative process of robot learning, it's easy for the number of evaluation trials to far exceed your training data! Months of continuous evaluation produce tens of thousands of episodes, which is already larger than most robotic deep learning demonstration datasets.
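The real-robot throughput can be put next to the sample sizes discussed earlier. The operator and episode counts below come from the text; the two evaluation sizes (2,500 and 150,000 episodes) are assumptions picked for illustration, and the function names are hypothetical.

```python
import math


def episodes_per_day(num_operators, episodes_per_operator):
    """Total real-robot episodes a team can run in one day."""
    return num_operators * episodes_per_operator


def days_needed(num_episodes, daily_capacity):
    """Whole days required to run num_episodes at the given daily capacity."""
    return math.ceil(num_episodes / daily_capacity)


capacity = episodes_per_day(num_operators=10, episodes_per_operator=300)
print("episodes per day:", capacity)

# Assumed evaluation sizes: one success-rate estimate with about 1% standard
# deviation, and an ImageNet-scale evaluation.
for num_episodes in (2_500, 150_000):
    print(f"{num_episodes} episodes: {days_needed(num_episodes, capacity)} day(s)")
```

A single reasonably precise success-rate estimate already uses up the whole team for a day, which matches the one-idea-per-day limit described above.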

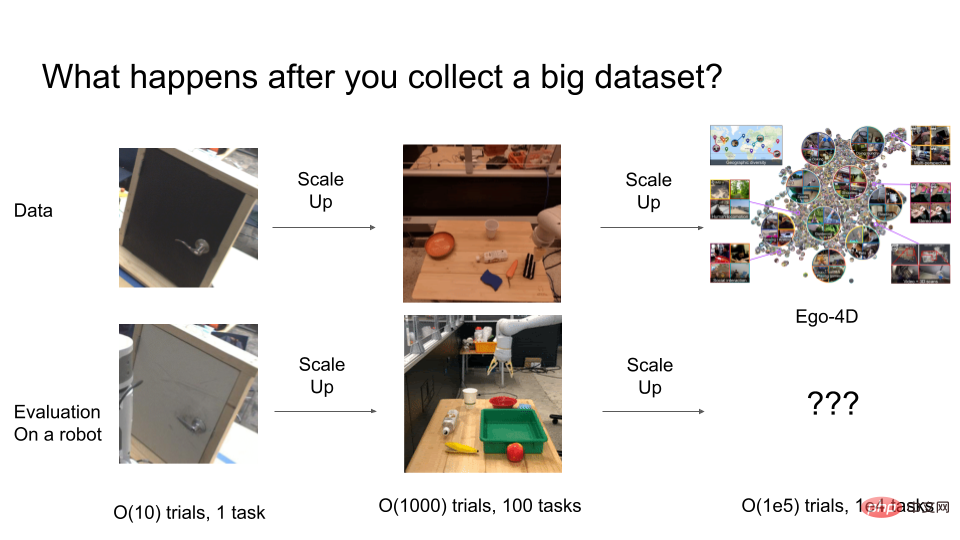

A few years ago, researchers were still solving problems like getting robotic arms to open doors, and these policies did not generalize very well. Researchers typically ran evaluations of around 10 episodes. But 10-50 trials is not enough to guarantee statistical robustness. To get reliable results, more than 1000 trials may be needed for the final evaluation.

But what happens when you extend the experiment further? If we eventually need to train an extremely general robotic system with O(100,000) behaviors, how many trials will we need to evaluate such a general system? This is where evaluation becomes extremely expensive.

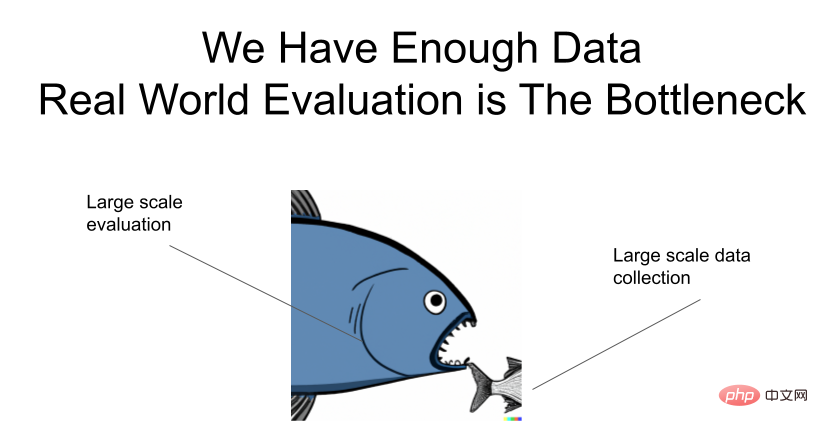

I emphasize again: data is sufficient, but evaluation is the bottleneck!

How to speed up the evaluation?

Here are some ideas on how to speed up the evaluation of universal robotic systems.

One approach is to study the generalization problem separately from the robot. In fact, the deep learning community already does this. Most computer vision and generative modeling researchers do not test their ideas directly on actual robots; they expect that once their models achieve strong generalization, the models will transfer quickly to robots. ResNets, developed in computer vision, greatly simplified many visuomotor modeling choices in robotics. Imagine if a researcher had to test their idea on a real robot every time they wanted to try a different neural network architecture! Another success story is CLIPort, which decouples the powerful multimodal generalization capabilities of image-text models from the underlying geometric reasoning used for grasp planning.

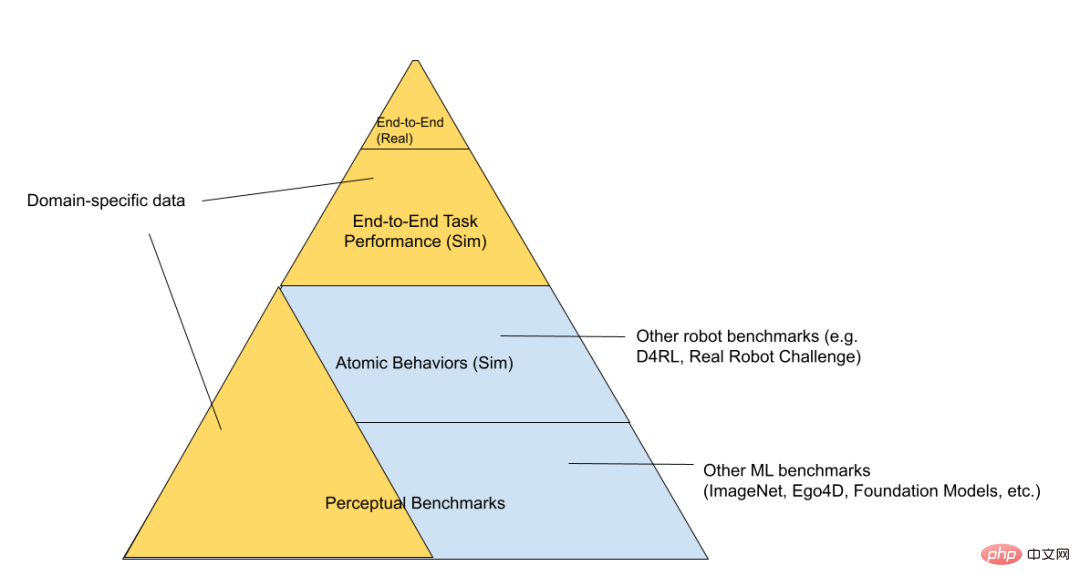

We can further divide the RL technology stack into three layers: "simulated toy environment", "simulated robot" and "real robot" (arranged in order of increasing difficulty of evaluation).

At the bottom of the pyramid are general benchmarks, such as those used in Kaggle competitions. One level up is a set of "toy control problems", which study the problem in a "bare metal" way: only the simulator and the neural network are running, and none of the code tied to real-world robots, such as battery management, exists. Going further up the pyramid leads to more specific domains that are more relevant to the problem you are trying to solve. For example, a "simulated robot" and a "real robot" may be used for the same task and reuse the same underlying robot code. Simulated toy environments can be used to study general algorithms, but they overlap less with the final robotics domain. At the top of the "evaluation pyramid" are the real robotic tasks we are trying to solve. Iterating directly at this level is very slow, so we all want to spend as little time here as possible. We hope that base models trained and evaluated at the lower levels will help us understand which ideas work without having to do every evaluation at the top level.

Similarly, the field already operates in this decoupled way. Most people interested in contributing to robotics will not necessarily operate robots. They may train visual representations and architectures that eventually prove useful to robots. Of course, the disadvantage of decoupling is that improvements on perception baselines do not always correspond to improvements in robot capabilities. For example, while mAP, semantic segmentation or video classification accuracy, and even lossless compression benchmarks (which in theory should eventually contribute) keep improving, we don't know how improvements in representation objectives actually map to improvements in downstream tasks. So ultimately you have to test on the end-to-end system to understand where the real bottlenecks are.

Google once published a cool paper, "Challenging Common Assumptions in Unsupervised Learning of Disentangled Representations". It showed that many fully unsupervised representation learning methods do not bring significant performance improvements in downstream tasks unless models are evaluated and selected using the final downstream criteria we care about.

Paper address: https://arxiv.org/pdf/1811.12359.pdf

Another way to reduce evaluation costs is to make sure data collection and evaluation are the same process. We can collect evaluation data and expert operation data at the same time. With HG-DAgger, interventions during an episode yield useful training data, and the average number of interventions per episode tells us roughly whether the policy is good enough. We can also track scalar metrics instead of binomial metrics, because each episode yields more information through such metrics than through a single success/failure.

Using RL algorithms for autonomous data collection is another way to combine evaluation and data collection, but this method requires us to score episodes manually or use a carefully designed reward function. All of these approaches require deploying large numbers of robots in the real world, which still gets bogged down in constant real-world iteration.

Another way to make evaluation faster is to improve sim-to-real transfer. We can simulate many robots in parallel, so that constraint disappears. Mohi Khansari, Daniel Ho, Yuqing Du and others developed a technique called "Task Consistency Loss", which regularizes the representations from sim and real to be invariant, so that the policy behaves similarly in sim and in real. When we transfer policies evaluated in sim to real, we want higher performance metrics in sim to correspond to higher performance metrics in real. The smaller the sim2real gap, the more trustworthy the metrics from simulation experiments.

Expressivity

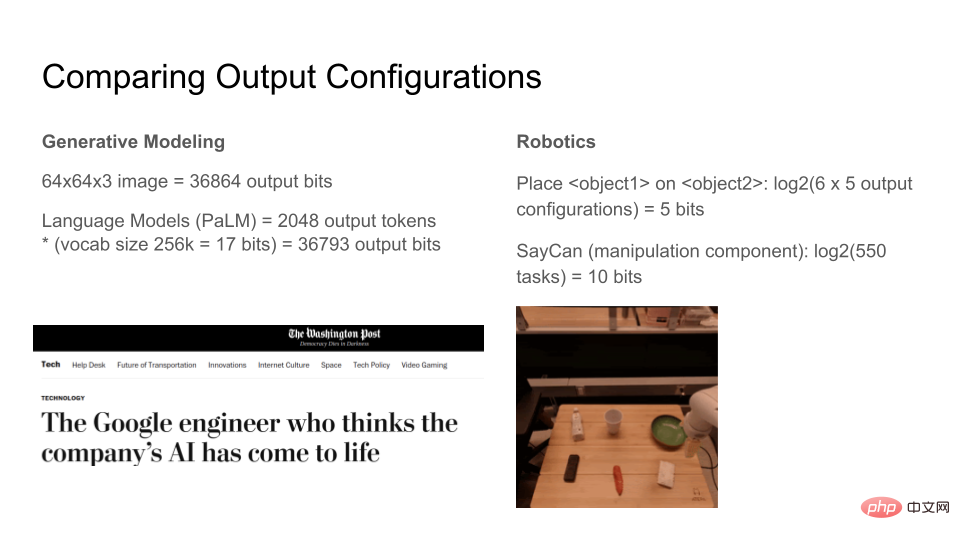

Let's see how many bits a modern generative model can output. A 64x64x3 RGB image with 8 bits per channel is 98,304 bits. A language model can generate any number of tokens, but if we fix the output window to 2048 tokens at 17 bits per token, the total is 34,816 bits. Therefore, both image and text generation models can synthesize tens of kilobits. As models become more expressive, people's perception of these models undergoes a qualitative leap. Some are even beginning to think that language models are partially conscious because they are so expressive!

In comparison, how expressive are current robots? Consider a simplified real-world environment: there are 6 items on a table, and the robot's task is to move one item on top of another or to transport certain items, for a total of 100 tasks. log2(100) is about 7 bits, which means "given the state of the world, the robot can move atoms to one of N states, where N can be described with 7 bits." Google's SayCan algorithm can perform around 550 operations with a single neural network, which is quite impressive by current robotics deep learning standards, yet that is only about 10 bits in total.

This comparison is not entirely fair, because the definition of information differs between the two. It is offered only as a rough intuition for what matters when one measures the relative complexity of one set of tasks against another.
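The robot-side bit counts follow from the same logarithm used for classification earlier. The sketch below is illustrative: it assumes each task or operation is equally likely, and the helper name `task_bits` is hypothetical.

```python
import math


def task_bits(num_tasks: int) -> float:
    """Bits needed to select one task among equally likely alternatives."""
    return math.log2(num_tasks)


for label, count in (("tabletop tasks", 100), ("SayCan operations", 550)):
    bits = task_bits(count)
    print(f"{label}: {count} -> {bits:.2f} bits (rounded up: {math.ceil(bits)})")
```

Rounding up gives the 7 and 10 bits quoted in the text.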

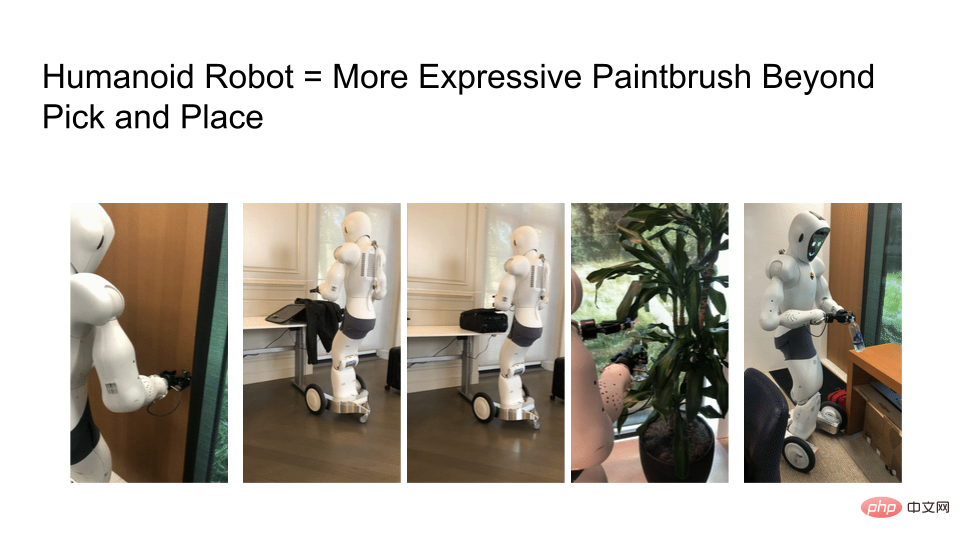

One of the challenges we run into is that robot capabilities are still limited. If you look at the Ego4D dataset, many tasks require two hands, but most robots today are still wheeled-base, single-arm mobile manipulators. They can't go just anywhere, and obviously all we have is a single "robot arm", which rules out a lot of interesting tasks.

We need to study more expressive robots, but the expressiveness of robot learning algorithms is limited by hardware. Below is a picture of the robot opening doors, packing suitcases, zipping up zippers, watering plants and flipping water bottle caps. As robotic hardware gets closer to real humans, the number of things you can do in a human-centered world grows exponentially.

As robots become more expressive, we not only need Internet-scale training data, but also Internet-scale evaluation processes. If you look at the progress of large language models (LLMs), there are now a lot of papers looking at tuning and what existing models can and cannot do.

For example, the BIG-bench benchmark compiles a series of tasks and asks what we can get out of these models. OpenAI lets Internet users evaluate its DALL-E 2 and GPT-3 models. Its engineering and product teams can learn from these user-driven AI experiments, because the details of LLMs are difficult for any single researcher to master.

Finally, a question for readers: what is the robotics equivalent of the GPT-3 or DALL-E 2 API? Through such an equivalent, could researchers in the Internet community probe robot research and understand what it can actually do?

Finally use a table to summarize the comparison between optimization, evaluation and expressivity:
