The technical reasons behind the success of ChatGPT and its inspiration for the life sciences field

As early as the 1950s, scholars proposed the concept of artificial intelligence (AI), with the goal of giving computers human intelligence, or at least part of it. Despite decades of work, the field saw no decisive breakthrough until deep learning emerged around 2012. Deep learning mainly addresses the bottleneck of model representation capacity. The modeling problems we face, such as image understanding, language translation, speech recognition, and molecule-protein binding conformation prediction, are all highly complex nonlinear problems. Before deep learning, models had weak representation capacity and could not represent these problems accurately. By stacking layers, deep learning can in theory build models of arbitrary depth, which broke through this bottleneck and led to breakthrough progress in speech recognition, computer vision, and natural language understanding.

The emergence of deep learning marked a new stage for artificial intelligence. We call the wave driven by deep learning that began around 2012 the new generation of artificial intelligence. (Deep learning was in fact applied to speech recognition even earlier, but because many subsequent advances came from computer vision, we take the appearance of AlexNet in 2012 as the starting point.) This period can be regarded as the first stage of the new generation: deep learning models driven by annotated data. These models greatly improved representation capacity, which significantly advanced AI technology and brought product and commercial success in computer vision and speech recognition. The main limitation of this stage is its heavy dependence on labeled data. As the number of model parameters grows, a large amount of training data is needed to constrain them. Annotated data is expensive to obtain at scale, growth becomes difficult beyond roughly the 100 million level, and the effective model size the data can support is limited accordingly. From 2012 to 2015, computer vision was the most active field, and a variety of deep network models appeared, including ResNet. In 2017 an important foundational work, the Transformer, appeared. In 2018, in natural language processing (NLP), a field that had long lacked major breakthroughs, a work called BERT stood out by achieving the best results on more than a dozen different NLP tasks. Because these tasks differ greatly from one another, BERT attracted the attention of the entire field as soon as it was published.

BERT adopts an idea called self-supervised pre-training, which trains the model without labeled data, using only the constraints inherent in the text corpus itself (for example, only certain words can plausibly fill a given position in a given sentence). High-quality text that already exists on the Internet can therefore be used for training without manual annotation, which sharply increases the amount of available training data. Combined with large models, this made BERT far more effective than earlier models and gave it good generality across tasks, making it one of the milestones of NLP. In fact, shortly before BERT appeared in 2018, another work called GPT (that is, GPT1.0) had already used self-supervised pre-training for text generation: the preceding text is the input, and the model predicts the text that follows, so high-quality corpora can be used for training without annotation. Both BERT and GPT were built on the Transformer, which has gradually developed into a general-purpose model in AI. The results of GPT1.0 were not remarkable. Shortly after BERT, OpenAI released GPT2.0 with a much larger model and far more training data. As a general model (that is, evaluated directly without training on downstream tasks), it outperformed the existing models on most tasks. However, because BERT had advantages over GPT2.0 in feature representation and was easier to train, BERT remained the most closely watched work at this stage. Then, in 2020, GPT3.0 arrived and stunned everyone with 175 billion parameters. More importantly, GPT3.0 works as a general-purpose language model: given only a simple description of the content you want, it can generate executable code, web pages or icons, complete an article or news story, or write poetry and music according to the prompt, all without retraining. GPT3.0 drew widespread attention from industry, and many developers built interesting applications on it. It became the best and most popular text generation model.
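To make the idea of self-supervised pre-training concrete, here is a minimal sketch of how training examples can be derived from raw text with no manual labels. The function names and the `[MASK]` placeholder are illustrative assumptions; real systems operate on subword tokens and far larger corpora, and this is not the actual BERT or GPT data pipeline.

```python
def next_token_pairs(tokens):
    """GPT-style objective: each prefix is the input, the following token is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_token_example(tokens, mask_index, mask_token="[MASK]"):
    """BERT-style objective: hide one token; the hidden token becomes the target."""
    masked = list(tokens)
    target = masked[mask_index]
    masked[mask_index] = mask_token
    return masked, target


# Whitespace splitting stands in for a real tokenizer (an assumption for brevity).
sentence = "the model learns language from raw text".split()

gpt_pairs = next_token_pairs(sentence)
bert_input, bert_target = masked_token_example(sentence, mask_index=2)

print(gpt_pairs[0])             # first (prefix, next token) training pair
print(bert_input, bert_target)  # masked sentence and the word to recover
```

In both cases the "label" is simply a piece of the text itself, which is why any high-quality corpus can serve as training data without annotation.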

With the emergence of self-supervised pre-training, the new generation of artificial intelligence can be considered to have entered its second stage. Self-supervised pre-training increased the amount of available training data by several orders of magnitude, and with more training data, model size also grew by several orders of magnitude (effective models reached hundreds of billions of parameters). In terms of results, these models no longer depend on retraining with data from the downstream task domain. The field thus entered the era of general large models based on self-supervised pre-training.

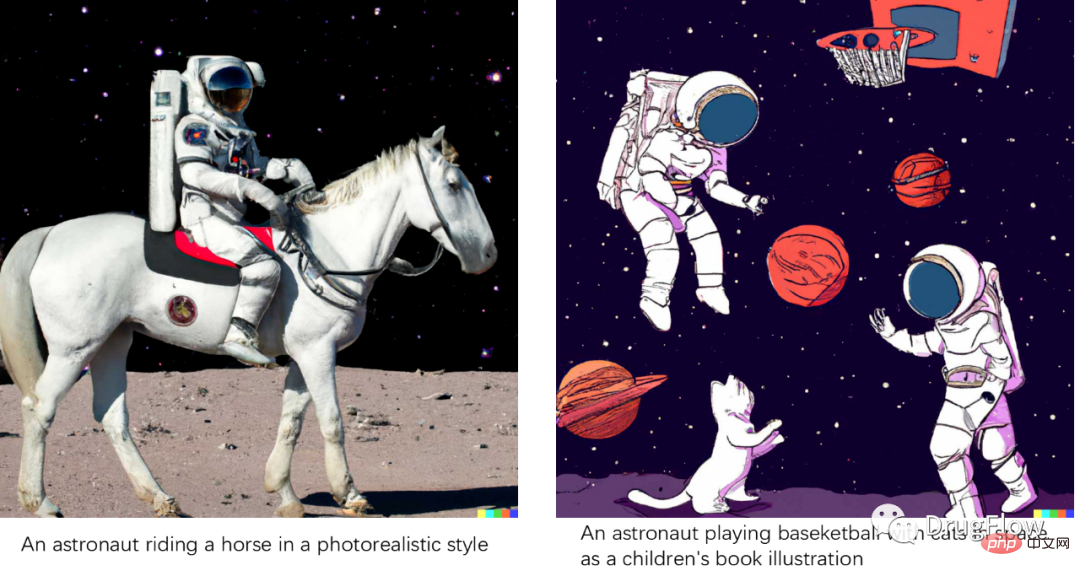

After that, GPT3.0 and other work in the field set off an arms race in model size, but few truly breakthrough technologies emerged. It became clear that simply increasing model size does not fundamentally solve the problem. Just as everyone was looking forward to follow-up work such as GPT4.0, GPT went two full years without an update. During this period, the most active work in AI focused on two areas: multimodal unified models and content generation. Multimodal unified models aim to represent data of different modalities, such as text, images, and speech, in a single model; examples include the early cross-modal representation model CLIP and a series of later multimodal unified representation models. In content generation, at the technical level, foundational models such as the Diffusion Model emerged. The development of the Diffusion Model and its variants made AI Generated Content (AIGC) a very hot field, and it expanded from image generation to natural language processing and the life sciences. At the application level, text-to-image generation made substantial progress. The most representative work is DALLE2, which can output realistic-looking images from an input text description. Even when the description goes beyond reality, it can still generate images that plausibly match the text, as shown in the figure below.
The success of DALLE2 and similar work owes, on the one hand, to the large amount of paired text-image data (on the order of hundreds of millions of pairs), which makes it possible to model the correspondence between text and image semantics. On the other hand, it owes to the diffusion model, which overcomes shortcomings of models such as GAN and VAE, including difficult training and insufficient detail in the generated results. The generated images are so striking that many people believe AI can already create content.

Figure. Images generated by DALLE2

At the end of November 2022, OpenAI released ChatGPT. People quickly found that this chatbot was unusual and often gave surprising answers. There had been many conversational robots before, such as Apple's Siri and Microsoft's Xiaoice and Cortana, but the experience of these general conversational systems was far from ideal: people teased them for a while and then put them aside. The command-execution question-answering robots used in products such as smart speakers are built on rule-driven dialogue management systems. They contain a large number of hand-written rules, so on the one hand they cannot be extended to general domains and can only give simple, formulaic answers, and on the other hand they cannot handle the context of multi-turn dialogue. Technically, ChatGPT is completely different from these earlier mainstream dialogue systems. The entire system is based on a large deep generative model: a given input is processed by the deep model, which directly outputs an abstractive, summarized answer. In terms of product experience, ChatGPT also far surpasses past chat systems. As a general chatbot it can answer questions in almost any field, its accuracy has reached a level at which people are willing to keep using it, and it maintains a very good experience across multi-turn dialogue.

Of course, ChatGPT is not perfect. As a deep learning model, it cannot be 100% accurate. For questions that require exact answers (such as mathematical calculations, logical reasoning, or names), it makes noticeable errors. Some improvements have followed. For example, some work provides reference web links for the information given. In Toolformer, recent work from Meta (formerly Facebook), the generative model learns to hand specific tasks, such as calculation, to dedicated APIs instead of handling them with the general model, which is expected to overcome the problem that the model cannot be 100% accurate. If this path continues, the deep generative model could become the core framework of AGI, integrated with other skill APIs through plug-ins, which is an exciting prospect.
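The idea of leaving exact calculation to a dedicated API rather than to the general model can be sketched as follows. This is only an illustration under stated assumptions: the `[Calculator(...)]` marker format, the `calculator` helper, and `resolve_tool_calls` are hypothetical names chosen here, and the sketch does not reproduce how Toolformer is trained or how it formats its calls.

```python
import ast
import operator
import re

# Arithmetic operators supported by the hypothetical calculator tool.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression):
    """Evaluate a basic arithmetic expression exactly, without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")

    return walk(ast.parse(expression, mode="eval").body)


# Hypothetical marker a generative model might emit: [Calculator(<expression>)]
CALL_PATTERN = re.compile(r"\[Calculator\(([^)]*)\)\]")


def resolve_tool_calls(generated_text):
    """Replace every calculator marker with the exact result returned by the tool."""
    return CALL_PATTERN.sub(lambda m: str(calculator(m.group(1))), generated_text)


# The model writes the sentence; the tool supplies the number.
draft = "The order costs [Calculator(17 * 23)] dollars in total."
final = resolve_tool_calls(draft)
print(final)
```

The design point is the division of labor: the generative model decides where a calculation is needed, and a deterministic component produces the value, so the numeric part of the answer no longer depends on the model's statistical accuracy.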

Commercially, ChatGPT has on the one hand sparked speculation about the challenge it poses to search engines such as Google. On the other hand, it has revealed opportunities for a variety of vertical products related to natural language understanding. There is no doubt that ChatGPT is creating a new business opportunity in natural language understanding, one that may rival search and recommendation.

Why is ChatGPT so effective? One core reason is that it is built on the large generative model GPT3.5, which is probably the best text generation model in natural language understanding today (compared with GPT3.0, GPT3.5 uses more data and a larger model and achieves better results).

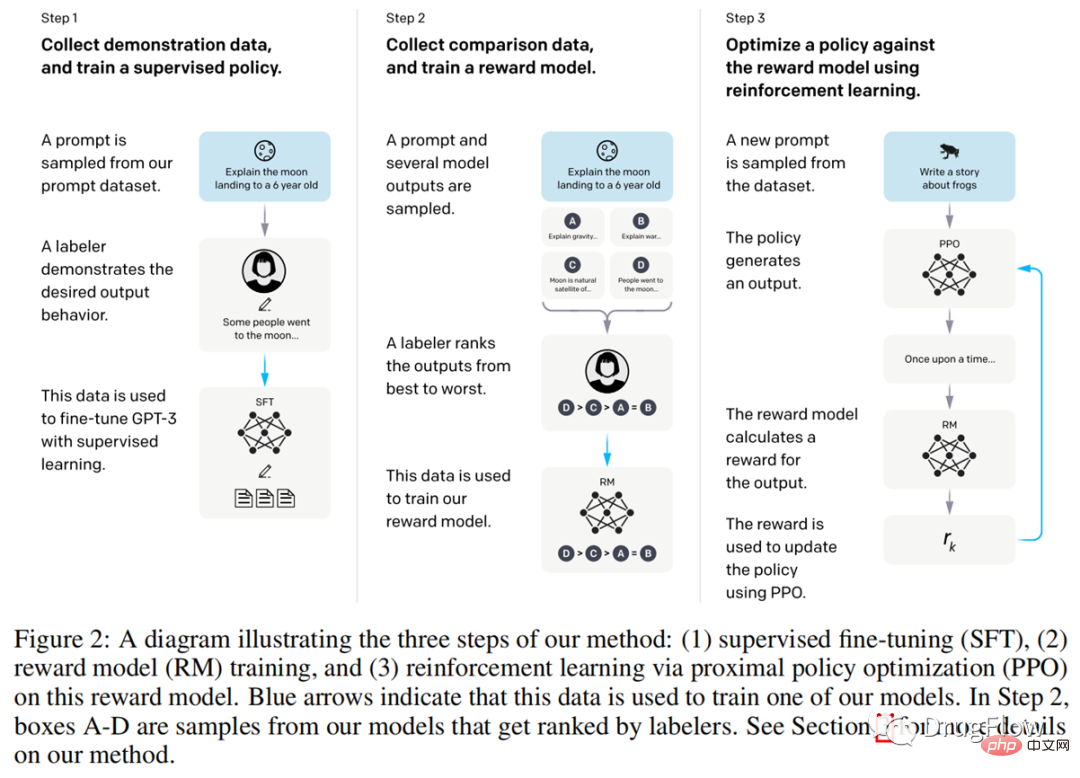

The second core reason is reinforcement learning based on human feedback, that is, Reinforcement Learning from Human Feedback (RLHF). OpenAI has not published a paper on ChatGPT or released its code, so it is generally believed that ChatGPT is closest to the technique disclosed in an earlier paper, InstructGPT (https://arxiv.org/pdf/2203.02155.pdf). As shown in the figure, according to the InstructGPT description, the first step is to collect user preference data for different answers to the same question. The second step is to use this data to retrain the GPT model; this step is fine-tuning with supervised information. The third step is to train a scoring function from users' preferences among different answers; it assigns a score to each ChatGPT answer, and the score reflects how much users prefer that answer. The fourth step is to use this scoring function as the reward in reinforcement learning and train the model so that the final answers from ChatGPT lean towards those users prefer. Through this process, ChatGPT, built on GPT3.5, produces friendlier answers to user input.
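The scoring-function step described above can be sketched with a pairwise ranking loss of the form -log(sigmoid(r_preferred - r_rejected)), the kind of comparison loss described in the InstructGPT paper. Everything else here is an assumption made for illustration: the toy linear model, the two hand-made features per answer, and the training settings. A real reward model is a large neural network scoring full text, and the later reinforcement learning step is not shown.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights, features):
    """Toy linear scoring function standing in for a neural reward model."""
    return sum(w * f for w, f in zip(weights, features))


def pairwise_loss(weights, preferred, rejected):
    """-log(sigmoid(r_preferred - r_rejected)): small when the preferred answer scores higher."""
    return -math.log(sigmoid(reward(weights, preferred) - reward(weights, rejected)))


def train_reward_model(comparisons, n_features, lr=0.1, epochs=200):
    """Plain gradient descent on the pairwise loss over human comparisons."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            diff = reward(weights, preferred) - reward(weights, rejected)
            # Gradient of the loss w.r.t. weights is -(1 - sigmoid(diff)) * (preferred - rejected).
            scale = 1.0 - sigmoid(diff)
            for i in range(n_features):
                weights[i] += lr * scale * (preferred[i] - rejected[i])
    return weights


# Hypothetical features per answer: [helpfulness, rudeness].
# Each pair is (answer the annotator preferred, answer the annotator rejected).
comparisons = [
    ([0.9, 0.1], [0.2, 0.8]),
    ([0.7, 0.0], [0.6, 0.9]),
    ([0.8, 0.2], [0.1, 0.3]),
]

weights = train_reward_model(comparisons, n_features=2)
print(weights)
```

Once trained, such a scoring function can rate any new answer, which is what allows it to act as the reward signal in the reinforcement learning step.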

The first stage of ChatGPT, training the GPT generative model, uses a very large amount of training data, on the order of tens of terabytes, and a single training run costs tens of millions of dollars. The second stage, feedback through reinforcement learning, needs only a small amount of high-quality data, on the order of tens of thousands of examples. ChatGPT thus combines large models built by self-supervised pre-training with reinforcement learning from human feedback, and it has made very significant progress. This new paradigm may become the core driving technology of the third stage of artificial intelligence: start from a large self-supervised pre-trained model, then apply reinforcement learning with feedback from a small amount of high-quality data, forming a closed feedback loop between model and data to achieve further breakthroughs.

Regarding ChatGPT, our views are as follows:

(1) ChatGPT is indeed one of the greatest works of this era. It shows us the striking results of combining large generative models built by self-supervised pre-training with a reinforcement learning feedback strategy based on a small amount of high-quality data, and in a sense it has changed our perception of AI.

(2) ChatGPT-related technologies have great commercial value. Many products, including search engines, now face the prospect of being rebuilt or disrupted, which will undoubtedly bring many new business opportunities, and the entire NLP field will benefit.

(3) The learning paradigm that combines large generative models based on self-supervised pre-training with a reinforcement learning feedback strategy based on a small amount of high-quality data is expected to drive progress in many fields. Beyond NLP, it is expected to set off a new AI boom in fields such as life sciences, robotics, and autonomous driving.

(4) ChatGPT does not prove that artificial intelligence has a human mind. Some of the creativity and intelligence ChatGPT displays arise because natural language corpora contain semantics and logic; a generative model trained on such corpora learns these correspondences in a statistical sense and so appears intelligent, but it does not truly have a human mind. ChatGPT is great, but it is not rigorous to say that its intelligence equals that of a child of a certain age. Fundamentally, AI does not yet have the human abilities to learn new knowledge, reason logically, imagine, or respond through movement. Excessive hype about ChatGPT's intelligence and capabilities will let the bad drive out the good and harm the entire industry.

(5) In this field, China still lags technically. In the past two years we have not seen a text generation model that truly replicates the results of GPT3.0, and without GPT3.0 and GPT3.5 there would be no ChatGPT. GPT3, GPT3.5, and ChatGPT are not open source, and even the API is blocked in China, which is a practical obstacle to replication. To put it more pessimistically, most teams that try to replicate ChatGPT's results will not succeed.

(6) ChatGPT is not an algorithmic breakthrough made by one or two researchers, but the result of a very complex algorithm engineering system guided by advanced ideas. It requires a matching team and organization (comparable to OpenAI and DeepMind). A purely research-oriented team may not succeed, and neither will a team that is heavily engineering-oriented but does not understand deep learning well enough. Such a team needs, first, sufficient resources to support expensive deep learning training and talent recruitment. Second, it needs expert leaders who have actually led large-model engineering teams in industry, because ChatGPT involves innovation in the engineering system as well as in algorithms. Third, and perhaps most importantly, it needs an organization that is united and collaborative, has unified leadership, does not chase paper publication (a loose organization favors algorithmic innovation but not engineering-heavy algorithm development), and is staffed with enough excellent engineering and algorithm talent.

(7) We should not only aim to build a ChatGPT, but also keep pursuing the technological innovation behind it: vigorously developing large generative models based on self-supervised pre-training and reinforcement learning feedback strategies based on a small amount of high-quality data. These are the core technologies of the next generation of ChatGPT and also the technologies that will advance artificial intelligence as a whole. The biggest concerns are that speculation and trend-chasing will scatter effort and waste a great deal of resources, or that excessive publicity around ChatGPT will damage the industry.

(8) ChatGPT still has room for improvement, and it is not the only technology worth attention and anticipation. The most common misconception about AI is to overestimate its short-term performance and underestimate its long-term performance. This is a great era in which AI has become a core driving force, but AI will not become omnipotent overnight; it requires our long-term effort.

Here we briefly summarize the key technical evolution in the new wave of artificial intelligence driven by deep learning since 2012:

(1) In the first stage, the key advance was supervised deep learning driven by labeled data, which greatly improved model representation capacity and thereby drove significant progress in AI technology. The most active fields in this stage were computer vision and speech recognition. The main limitation is that labeled data is relatively expensive, which limits the amount of data available and, in turn, the effective model size the data can support.

(2) In the second stage, the key advance was self-supervised pre-training of general large models driven by big data. Self-supervised pre-training increased the available training data by several orders of magnitude, which supported a corresponding increase in model size and produced general models that do not rely on retraining with data from the downstream task domain. The field with the greatest progress and most activity in this stage was natural language understanding. The main limitations are that training requires massive data and the models are very large, so they are expensive to train and use, and retraining a model for a vertical scenario is very inconvenient.

(3) The third stage cannot yet be defined conclusively, but a trend is visible. A key technical question for the future is whether, starting from a large model, methods such as reinforcement learning and prompting can significantly change the model's output using only a small amount of high-quality data. If this technology takes off, fields where data acquisition is expensive, such as autonomous driving, robotics, and life sciences, will benefit significantly. In the past, fixing a problem in an AI model meant collecting a large amount of data and retraining it. In robotics, which requires offline interaction in the physical world, if a pre-trained large model's decisions could be changed simply by telling the robot which action choices in real scenes were right and which were wrong, then technical iteration in autonomous driving and robotics would become far more efficient. In life sciences, if a small amount of experimental feedback could significantly change a model's predictions, the revolution in integrating life sciences with computing would arrive sooner. In this respect, ChatGPT is a very important milestone, and we believe much more work will follow.

Let us turn our attention back to the field of life sciences that we are more concerned about.

Since the advances brought by ChatGPT improve most NLP-related fields, technologies and products for information query, retrieval, and extraction in the life sciences will benefit first. For example, could there be a conversational vertical search engine for the life sciences in the future? Experts could ask it any question (about diseases, targets, proteins, and so on). On the one hand, it could give an overall judgment of trends (perhaps not very precise, but roughly correct, helping us quickly understand a field). On the other hand, it could surface relevant and valuable material on a given topic. This would undoubtedly improve experts' information processing efficiency significantly. As another example, could we build an AI doctor that patients can consult about disease knowledge and treatment options? Given current technical limits, AI cannot give precise answers, let alone replace doctors, but it could provide plenty of reference information and suggestions for next steps, and the experience would certainly be much better than today's search engines.

Many important tasks in the life sciences remain unsolved, such as small molecule-protein binding conformation and affinity prediction, protein-protein interaction prediction, small molecule representation and property prediction, protein property prediction, small molecule generation, protein design, and retrosynthetic route design. None of these problems has been fully solved. Breakthroughs in these tasks would bring huge changes to drug discovery and to the life sciences as a whole.

AIGC based on large models and RLHF based on expert or experimental feedback will both benefit from the momentum created by ChatGPT and will lead to a new round of technical progress. AIGC (AI Generated Content) technology has already made good progress in small molecule generation and protein design over the past year. We predict that in the near future the following tasks will benefit significantly from the development of AIGC and take a technical step forward:

(1) Small molecule generation and optimization, that is, how to generate small ligand molecules from protein pocket structure information rather than from known active ligands, while accounting for constraints such as activity, druggability, and synthesizability. This technology will benefit significantly from the development of AIGC;

(2) Conformation prediction can be regarded as a generation problem in a sense, and small molecule and protein binding conformation prediction tasks will also benefit from the development of AIGC-related technologies;

(3) Sequence design fields such as proteins, peptides, and AAV will also definitely benefit from the development of AIGC technology.

The AIGC-related tasks above, along with almost all tasks that require feedback from experimental verification, including but not limited to activity prediction, property prediction, and synthetic route design, will have the opportunity to benefit from RLHF technology.

Of course, there are many challenges. Limited by the amount of available data, the generative models currently used in the life sciences are still relatively shallow. They mainly use shallow deep learning models such as GNNs (GNNs are limited by the over-smoothing of message passing, so only about 3 layers can be used in practice). Although the generated results show good potential, they are not yet as striking as ChatGPT. Reinforcement learning based on expert or experimental feedback is limited by the speed at which experimental data can be produced and by the insufficient expressive power of the generative models, so it will also take time to deliver striking results. However, judging from the trend set by ChatGPT, if we can train a large generative model that is deep enough and has strong enough representation capacity, and then use reinforcement learning with a small amount of high-quality experimental data or expert feedback to further improve it, we can expect a revolution in the field of AIDD.
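The smoothing effect that limits GNN depth can be illustrated with a deliberately simplified sketch. The assumptions are significant: each "layer" here is plain neighborhood averaging with no learned weights or nonlinearity, the graph is a hypothetical five-node chain, and each node carries a single number. Real GNNs are more complex, but the tendency of repeated message passing to make node features indistinguishable is the same in spirit.

```python
def mean_aggregate(features, neighbors):
    """One simplified message-passing layer: average each node with its neighbors."""
    updated = []
    for node, value in enumerate(features):
        group = [value] + [features[n] for n in neighbors[node]]
        updated.append(sum(group) / len(group))
    return updated


def spread(features):
    """Gap between the largest and smallest node feature."""
    return max(features) - min(features)


# Hypothetical chain graph 0-1-2-3-4, for example a tiny linear molecule.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}

# Only node 0 starts with a distinctive feature value.
features = [1.0, 0.0, 0.0, 0.0, 0.0]

spreads = [spread(features)]
for _ in range(10):
    features = mean_aggregate(features, neighbors)
    spreads.append(spread(features))

# The gap between nodes shrinks layer after layer.
print([round(s, 3) for s in spreads])
```

As the spread shrinks, nodes carry less and less information that distinguishes them from one another, which is one intuition for why stacking many such layers does not help.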

In short, ChatGPT is not only a technical advance in natural language understanding; it will also set off a new round of business trends in information services and content generation. At the same time, the deep generative technology based on massive data and the reinforcement learning from human feedback behind it are longer-term drivers of progress and will lead to rapid development in the life sciences and other fields. We are about to see another wave of AI technical progress and industrial adoption.
