The 'secret' of robot implementation: continuous learning, knowledge transfer and autonomous participation

This article is reproduced from Lei Feng.com. If you need to reprint, please go to the official website of Lei Feng.com to apply for authorization.

On May 23, 2022, ICRA 2022 (the IEEE International Conference on Robotics and Automation), the top annual international conference in robotics, opened as scheduled in Philadelphia, USA.

This is the 39th year of ICRA. ICRA is the flagship conference of the IEEE Robotics and Automation Society and the primary international forum for robotics researchers to present and discuss their work.

At this year’s ICRA, three of Amazon’s chief robotics experts, Sidd Srinivasa, Tye Brady and Philipp Michel, briefly discussed the challenges of building robotic systems for human-machine interaction in the real world.

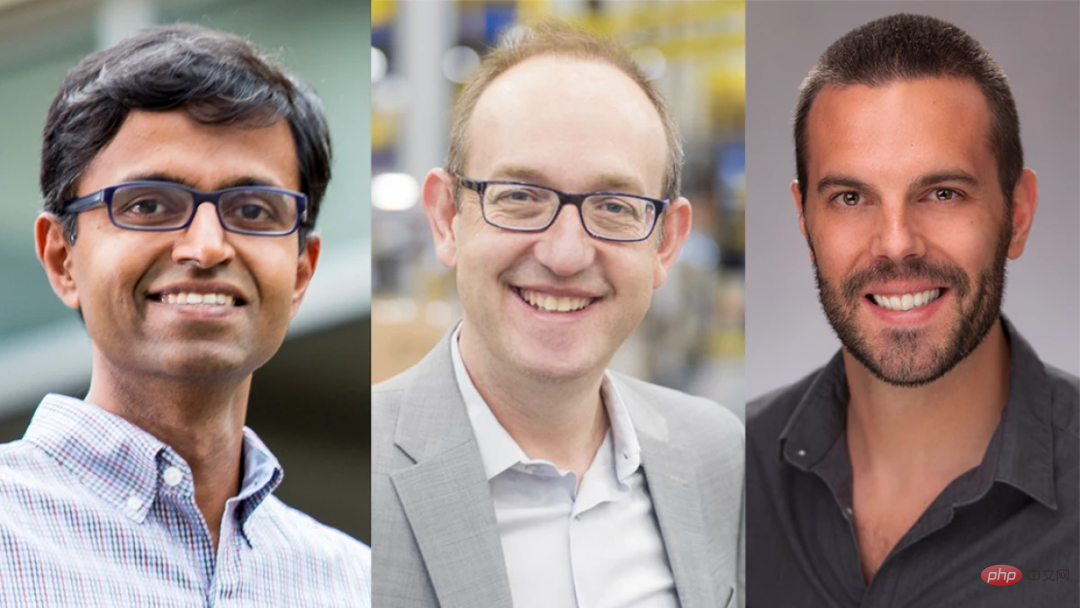

Note: From left to right are Sidd Srinivasa, director of artificial intelligence for Amazon Robotics; Tye Brady, chief technologist of Amazon Robotics (Global); and Philipp Michel, senior manager of applied science at Amazon Scout.

Sidd Srinivasa is a world-renowned robotics expert and an IEEE Fellow. He is currently the Boeing Endowed Professor at the University of Washington and leads the Amazon Robotics AI project, where he is responsible for the algorithms of the autonomous robots that assist employees in Amazon's logistics centers. That work includes research into robots that can pick and pack products and cart-style robots that can autonomously lift, unload, and transport goods.

Tye Brady is the chief technologist of Amazon Robotics (Global) and holds a master's degree in aerospace engineering from MIT. Philipp Michel, like Sidd Srinivasa, is a doctoral alumnus of the CMU Robotics Institute; he is a senior manager on Amazon's Scout robot project.

They shared their views on the challenges of deploying robots in the real world. AI Technology Review has compiled the conversation without changing its original meaning, as follows:

Q: Your research in robotics addresses different problems. What do these problems have in common?

Sidd Srinivasa: A major difficulty in robotics research is that we live in an open world. We don't even know what inputs we are about to face. In our fulfillment centers, we have to handle more than 20 million items, and that number grows by hundreds every day. Most of the time, our robots don't know what the items they are picking up are, but they need to pick them up carefully and package them quickly without damaging them.

Philipp Michel: For Scout, the difficulty is the objects it encounters on the sidewalk and the environment it delivers in. We have delivery devices deployed in four U.S. states. Weather conditions, lighting conditions... it was clear from the beginning that we had to deal with a large number of variables for the robot to adapt to complex environments.

Tye Brady: In developing fulfillment robots, we have a significant advantage in that we operate in a semi-structured environment. We can set our own traffic rules for the robots, and understanding the environment really helps our scientists and engineers gain a deep understanding of the objects we want to move, manipulate, classify, and identify to fulfill orders. In other words, we get to pursue the technology in a real-world setting.

Philipp Michel: Another thing we have in common is that we rely heavily on learning from data to solve problems. Scout receives real-world data as it performs tasks and then iteratively develops machine learning solutions for perception, localization, and navigation.

Sidd Srinivasa: I completely agree about learning to solve problems from data. I think machine learning and adaptive control are key to super-linear scaling. If we deploy thousands of robots, we can't have thousands of scientists and engineers working on them. We need to rely on real-world data to achieve super-linear growth.

In addition, I think the open world will force us to think about how to do "continuous learning". Our machine learning models are usually trained on a particular input data distribution, but because this is an open world, we run into the problem of "covariate shift": the data we see in deployment does not match the distribution the model was trained on, which often makes machine learning models overconfident for no good reason.

Therefore, a lot of the work we do is to create "watchdogs", supervisory components that identify when the input data distribution deviates from the distribution the model was trained on. Then we perform "importance sampling" so that we can pick out the data that has changed and retrain the machine learning model.
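The watchdog and importance-sampling idea can be sketched in a few lines. The example below is only an illustration under strong assumptions, not Amazon's implementation: inputs are reduced to a single number, both distributions are modeled as Gaussians, and the names `DriftWatchdog` and `pick_retraining_samples` are hypothetical. The watchdog flags a batch whose mean is implausible under the training distribution, and the importance weights are the density ratios of new data to training data.

```python
import math
import random
from statistics import mean, stdev


def gaussian_pdf(x, mu, sigma):
    """Density of a 1-D normal distribution at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


class DriftWatchdog:
    """Hypothetical watchdog: remembers a Gaussian fit of the training inputs."""

    def __init__(self, train, z_threshold=4.0):
        self.mu = mean(train)
        self.sigma = stdev(train)
        self.z_threshold = z_threshold

    def batch_shifted(self, batch):
        """True when the batch mean is implausible under the training fit."""
        standard_error = self.sigma / math.sqrt(len(batch))
        return abs(mean(batch) - self.mu) / standard_error > self.z_threshold

    def importance_weights(self, batch):
        """Normalized density ratios p_new(x) / p_train(x), one per sample."""
        mu_new, sigma_new = mean(batch), stdev(batch)
        ratios = [
            gaussian_pdf(x, mu_new, sigma_new) / gaussian_pdf(x, self.mu, self.sigma)
            for x in batch
        ]
        total = sum(ratios)
        return [r / total for r in ratios]


def pick_retraining_samples(watchdog, batch, k, rng):
    """Draw k samples for retraining, favoring those unlike the training data."""
    if not watchdog.batch_shifted(batch):
        return []  # nothing has drifted, so no retraining data is needed
    weights = watchdog.importance_weights(batch)
    return rng.choices(batch, weights=weights, k=k)


rng = random.Random(0)
train = [rng.gauss(0.0, 1.0) for _ in range(1000)]    # training distribution
same = [rng.gauss(0.0, 1.0) for _ in range(200)]      # same distribution
shifted = [rng.gauss(2.0, 1.0) for _ in range(200)]   # covariate shift
watchdog = DriftWatchdog(train)
retrain = pick_retraining_samples(watchdog, shifted, k=10, rng=rng)
```

In a real system the inputs would be images or high-dimensional features, so the Gaussian fit would be replaced by a learned density or a domain classifier; the control flow (detect drift, weight samples, retrain) is the part this sketch is meant to show.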

Philipp Michel: This is one of the reasons why we want to train the robot in different places: we learn early on what real-world data the robot may encounter, which in turn forces us to develop solutions that can handle new data.

Sidd Srinivasa: That is indeed a good idea. One of the advantages of having multiple robots is the system's ability to recognize what has changed, retrain, and then share this knowledge with the other robots.

Think of a sorting robot: in some corner of the world, a robot encounters a new packaging type. At first it struggles, because it has never seen anything like this before and cannot recognize it. Then a solution emerges: the robot can transmit the new packaging type to all the other robots in the world. That way, when this packaging type appears elsewhere, the other robots will know what to do with it. It is equivalent to having a "backup". When new data appears at one site, the other sites learn about it, because the system is able to retrain itself and share the information.

Philipp Michel: Our robots do something similar. If they encounter obstacles they have not seen before, we try to adjust the model to recognize and deal with these obstacles, and then deploy the new model to all robots.
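The "one robot learns, every robot benefits" loop can be pictured as follows. This is a toy sketch, not a description of Amazon's infrastructure: the `Robot` and `Fleet` classes are hypothetical, and "retraining" is reduced to adding a label to a shared set before the new model version is pushed to every robot.

```python
from dataclasses import dataclass, field


@dataclass
class Robot:
    name: str
    model_version: int = 0
    known_types: set = field(default_factory=set)

    def can_handle(self, package_type):
        return package_type in self.known_types


@dataclass
class Fleet:
    robots: list
    model_version: int = 0
    known_types: set = field(default_factory=set)

    def report_unknown(self, reporter, package_type):
        """One robot reports a new type; the fleet retrains and redeploys."""
        if reporter.can_handle(package_type):
            return  # already known, nothing to share
        self.known_types.add(package_type)  # stand-in for real retraining
        self.model_version += 1
        for robot in self.robots:           # deploy the new model to everyone
            robot.known_types = set(self.known_types)
            robot.model_version = self.model_version


robots = [Robot("site-a"), Robot("site-b"), Robot("site-c")]
fleet = Fleet(robots)
fleet.report_unknown(robots[0], "padded_mailer")  # hypothetical package type
```

After the single report from `site-a`, all three robots carry model version 1 and can handle the new type, even though only one of them ever saw it.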

One of the things that keeps me up at night is the idea that our robots will encounter new objects on the sidewalk that they won't encounter again for the next three years, such as the gargoyles people put on their lawns for Halloween, or an umbrella placed on a picnic table so that it no longer looks like a "picnic table". In a case like that, every machine learning algorithm fails to recognize that it is a picnic table.

So part of our research is about how to balance generic things, which we do not need to classify, against specific categories of things that we must recognize. If it is an open manhole cover, the robot must be good at identifying it, otherwise it will fall in. But if it is just a random box, we probably don't need to know exactly what kind of box it is, only that it is an object we want to go around.
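One way to picture this balance is a decision rule that names only the few hazards that must be recognized and treats everything else as a generic obstacle. The sketch below is an assumption-laden illustration: the hazard list, the confidence threshold, and the action names are all hypothetical. The threshold for a critical hazard is deliberately low, so the robot errs on the side of caution.

```python
# Hypothetical hazard classes and thresholds, chosen only for illustration.
CRITICAL_HAZARDS = {"open_manhole": 0.2}


def plan_action(label, confidence, blocks_path):
    """Map one detection to an action for a sidewalk robot."""
    threshold = CRITICAL_HAZARDS.get(label)
    if threshold is not None and confidence >= threshold:
        return "stop_and_reroute"  # must-recognize hazard, act even if unsure
    if blocks_path:
        return "go_around"         # the label does not matter for a generic obstacle
    return "continue"
```

A box and an unrecognized gargoyle both end up as `go_around`; only the manhole needs a category of its own.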

Sidd Srinivasa: Another challenge is that when you change your model, there may be unintended consequences. The changed model may not affect the robot's perception, but it may change the way the robot "brakes", causing the ball bearings to wear out after two months. In end-to-end systems, a lot of interesting future research is about "understanding the impact of changes in parts of the system on the performance of the entire system."

Philipp Michel: We spent a lot of time thinking about whether we should separate the different parts of the robot stack. Integrating them can bring many benefits, but it also has limits. One extreme is learning directly from camera input to motor torques, which is very challenging in any real-world robotics application. At the other extreme is the traditional robotics stack, which is neatly divided into parts such as localization, perception, planning, and control.

We also spent a lot of time thinking about how the stack should evolve over time and what performance gains come from bringing these pieces closer together. At the same time, we want a system that remains as interpretable as possible. We try to integrate learned components across the entire stack as much as we can while preserving interpretability and our safety features.

Sidd Srinivasa: That is a great point, and I completely agree with Philipp. Using one model to rule them all may not be the right approach. But often we end up building machine learning models that share a backbone with multiple application heads. What an object is, and what it means to segment an object, may be similar for picking, stacking, or packing, but each of those tasks requires a specialized head riding on the shared backbone.

Philipp Michel: Some of the factors we consider are battery, range, temperature, space, and computing constraints. So we need to be efficient with our models, optimize them, and take advantage of the shared backbone as much as possible, with different heads for different tasks, as Sidd mentioned.
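The shared-backbone pattern can be shown without any deep learning framework. In this toy sketch, which assumes nothing about Amazon's actual models, the "backbone" is a plain function that turns an input into features and each "head" is a small function for one task. The class name `SharedBackboneModel` and the three heads are hypothetical. The point is that the expensive backbone runs once per input, however many tasks consume its features.

```python
class SharedBackboneModel:
    """One feature extractor shared by several task-specific heads."""

    def __init__(self, backbone, heads):
        self.backbone = backbone
        self.heads = heads
        self.backbone_calls = 0  # counts how often the costly part runs

    def predict(self, x, tasks):
        self.backbone_calls += 1
        features = self.backbone(x)  # computed once, reused by every head
        return {task: self.heads[task](features) for task in tasks}


def toy_backbone(pixels):
    """Stand-in for a learned feature extractor: size and peak of the input."""
    return {"size": len(pixels), "peak": max(pixels)}


heads = {
    "picking": lambda f: f["peak"] > 0.5,                  # is there something to grasp?
    "stacking": lambda f: f["size"] >= 4,                  # is it big enough to stack on?
    "packing": lambda f: "large" if f["size"] >= 4 else "small",
}

model = SharedBackboneModel(toy_backbone, heads)
result = model.predict([0.1, 0.9, 0.3, 0.2], ["picking", "stacking", "packing"])
```

Under tight battery and compute budgets, this is the appeal of the design: adding a task adds a small head, not another full model.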

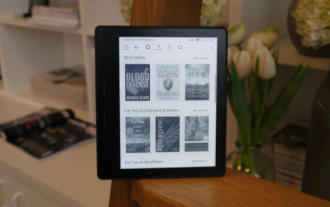

Caption: Amazon Scout is an autonomous delivery robot that can move at walking speed on public sidewalks and is currently undergoing field testing in four states in the United States.

Q: When I asked about the commonalities between your projects, one thing that came to mind is that your robots all work in the same environment as humans. Why does this complicate the issue?

Sidd Srinivasa: Robots are moving closer to human life, and we must respect all the complex interactions that take place in the human world. Beyond walking, driving, and performing tasks, there are also complex social interactions. What matters for a robot is, first, to be aware of them and, second, to take part in them.

This is really hard. When you are driving, it is sometimes hard to tell what other people are thinking and to decide how to act based on that. Just reasoning about the problem is hard, and closing the loop is even harder.

If a robot is playing chess or a similar game against a human, it is much easier to predict what the opponent is going to do, because the rules are already well laid out. If you assume your opponent plays optimally, you will do well even if they turn out to be suboptimal. This is guaranteed in some two-player games.
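The guarantee for two-player games can be made concrete with a small zero-sum example. The sketch below is an illustration with a made-up payoff matrix: the row player assumes the worst-case (optimal) opponent and picks the row whose minimum payoff is largest. Whatever column the opponent actually plays, the row player receives at least that guaranteed value.

```python
def security_strategy(payoffs):
    """Row that maximizes the worst-case payoff, plus that guaranteed payoff."""
    worst_cases = [min(row) for row in payoffs]
    best_row = max(range(len(payoffs)), key=lambda i: worst_cases[i])
    return best_row, worst_cases[best_row]


# Hypothetical payoffs to the row player; the column player receives the negative.
payoffs = [
    [3, -1, 2],
    [1, 1, 4],
    [0, -2, 5],
]

row, guaranteed = security_strategy(payoffs)
outcomes = payoffs[row]  # what the row player gets for each opponent choice
```

Here the safe choice is the second row, with a guaranteed payoff of 1; a suboptimal opponent who picks the third column hands over 4 instead. No comparable floor exists when the other party's goals and rules are unknown.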

But real-world interaction is not like a rule-bound game. When we play cooperative games that are meant to be win-win, we find that it is actually difficult to predict accurately what the other party will do, even when the collaborators have good intentions.

Philipp Michel: And behavior in the human world varies greatly. Some pets completely ignore the robot, and some walk right up to it. The same goes for pedestrians: some pay the robot no attention, and others walk straight toward it. Children, in particular, are extremely curious and highly interactive. We need to be able to handle all of these situations safely, and that variability is exciting.
