Large-scale model deployment in practice at ByteDance

1. Background

At ByteDance, applications based on deep learning are flourishing everywhere. Besides model quality, engineers also have to care about the consistency and performance of the online service. In the early days, this usually required a division of labor and close cooperation between algorithm experts and engineering experts, a way of working that carries relatively high costs for tasks such as diff troubleshooting and verification.

With the popularity of frameworks such as PyTorch and TensorFlow, model training and online inference have been unified. Developers only need to focus on the algorithm logic and call the framework's Python API to complete training and validation. The model can then be conveniently serialized and exported, and inference is carried out by a unified high-performance C++ engine. This improves the developer experience from training to deployment.

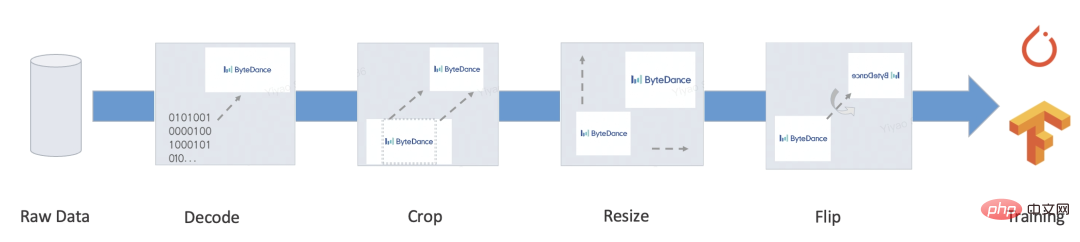

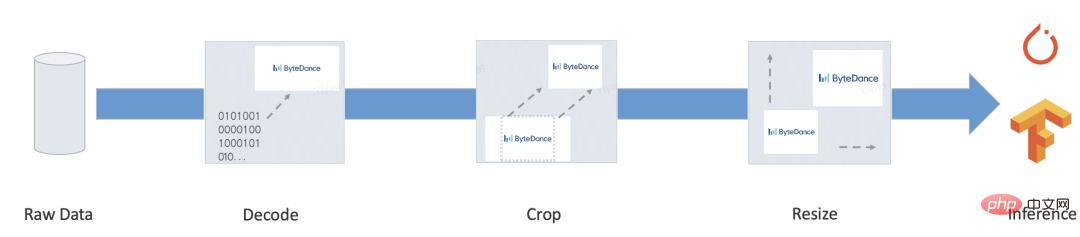

However, a complete service usually still contains a lot of business logic, such as pre-processing and post-processing. This logic typically converts various inputs into Tensors, feeds them to the model, and then converts the model's output Tensors into the target format. Some typical scenarios are as follows:

- BERT

- ResNet

Our goal is to provide an automated, unified training and inference solution for the end-to-end process above, eliminating problems such as hand-written inference pipelines and diff alignment, and enabling unified deployment at scale.

2. Core problem

Frameworks such as PyTorch and TensorFlow have largely solved the problem of unifying model training and inference, so the model computation itself no longer has a training/inference consistency problem (operator performance optimization is beyond the scope of this discussion).

The core problem to solve is this: pre-processing and post-processing need a high-performance solution that unifies training and inference.

For this kind of logic, TensorFlow 2.x provides tf.function (not yet complete) and PyTorch provides TorchScript; both, without exception, support a subset of native Python syntax. Powerful as they are, some problems cannot be ignored:

- Performance: most of these solutions are implemented on top of a virtual machine. A virtual machine is flexible and highly controllable, but the ones in deep learning frameworks are usually not fast enough. For context, these frameworks were originally designed for Tensor computation, where each operator is expensive and the cost of VM dispatch and scheduling is negligible by comparison. Once the same machinery is used for general-purpose programming logic, that overhead can no longer be ignored, and code of any significant size becomes a performance bottleneck. According to our tests, the TorchScript interpreter reaches only about 1/5 of Python's performance, and tf.function performs even worse.

- Incomplete functionality: in real scenarios we still find many important features that tf.function/TorchScript do not support. For example, custom resources cannot be packaged and only built-in types can be serialized; strings can only be processed as bytes, so Unicode text such as Chinese produces diffs; containers must be homogeneous and do not support custom types; and so on.
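The point about byte-only strings can be illustrated in plain Python. The sketch below does not use tf.function or TorchScript; it only shows, with a made-up sample string, how counting or slicing by bytes diverges from Python's code-point semantics, which is the kind of mismatch that surfaces as a diff between training and serving.

```python
# Illustrative only: the sample text is an arbitrary assumption.
text = "深度学习 NLP"
as_bytes = text.encode("utf-8")

char_len = len(text)      # number of Unicode code points
byte_len = len(as_bytes)  # number of UTF-8 bytes (each CJK character here takes 3)

# "Take the first two characters" means different things at each level.
first_two_chars = text[:2]
first_two_bytes = as_bytes[:2]  # cuts a 3-byte character in half


def is_valid_utf8(data: bytes) -> bool:
    """Return True if the byte string decodes cleanly as UTF-8."""
    try:
        data.decode("utf-8")
        return True
    except UnicodeDecodeError:
        return False
```

A truncation rule such as "keep the first N units" therefore keeps different content, or even produces invalid text, depending on whether the runtime counts bytes or characters.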

Furthermore, there are many non-deep-learning tasks. Natural language processing, for example, still has many non-deep-learning applications or subtasks, such as sequence labeling, language model decoding, and hand-crafted feature construction for tree models. These usually have more flexible feature paradigms, but they lack a complete end-to-end solution that unifies training and inference, so a lot of development and correctness verification work is still required.

To solve the problems above, we developed a compilation-based preprocessing solution: MATXScript!

3. MATXScript

In deep learning algorithm development, developers usually use Python for rapid iteration and experimentation, and C++ to develop high-performance online services. Correctness verification and service development then become a heavy burden!

MATXScript (https://github.com/bytedance/matxscript) is an AOT compiler for a subset of Python. It automatically translates Python into C++ and provides one-click packaging and publishing. With MATXScript, developers can iterate on models quickly while deploying high-performance services at a lower cost.

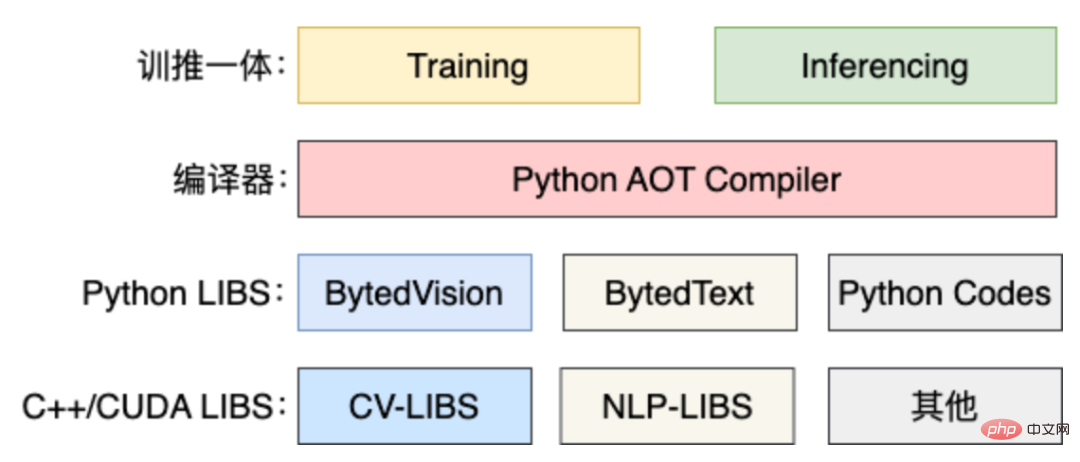

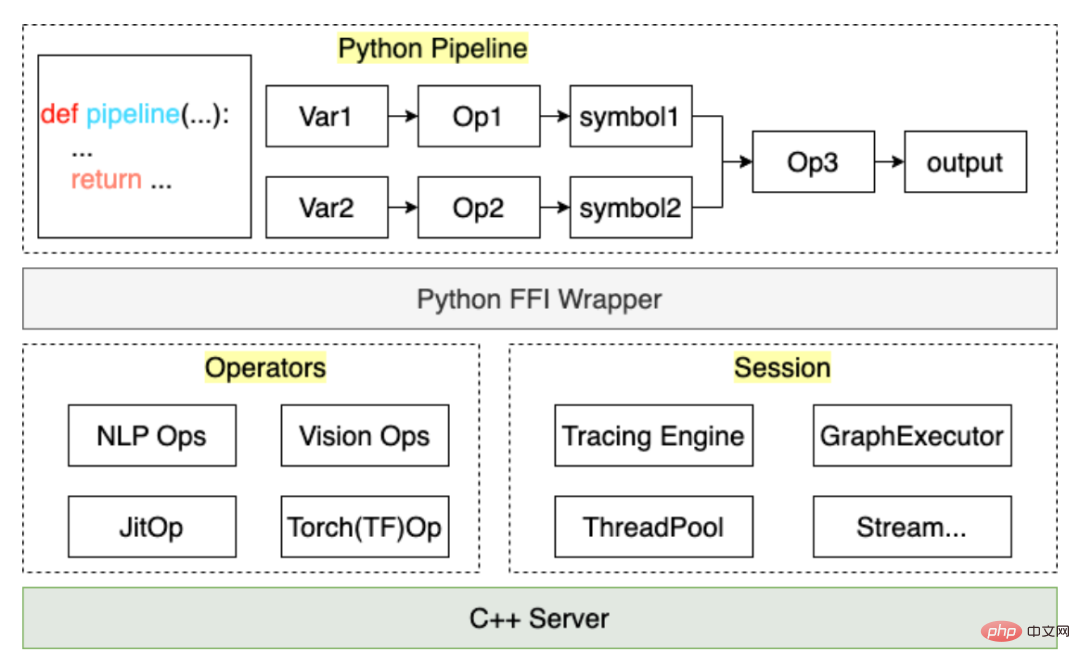

The core architecture is as follows:

- The lowest layer is a pure C++/CUDA basic library, developed by high-performance operator experts.

- On top of the basic library, a Python library is wrapped according to agreed conventions and can be used during training.

- When inference is required, MATXScript translates the Python code into equivalent C++ code, compiles it into a dynamic link library, adds the model and other dependent resources, and packages and publishes everything together.

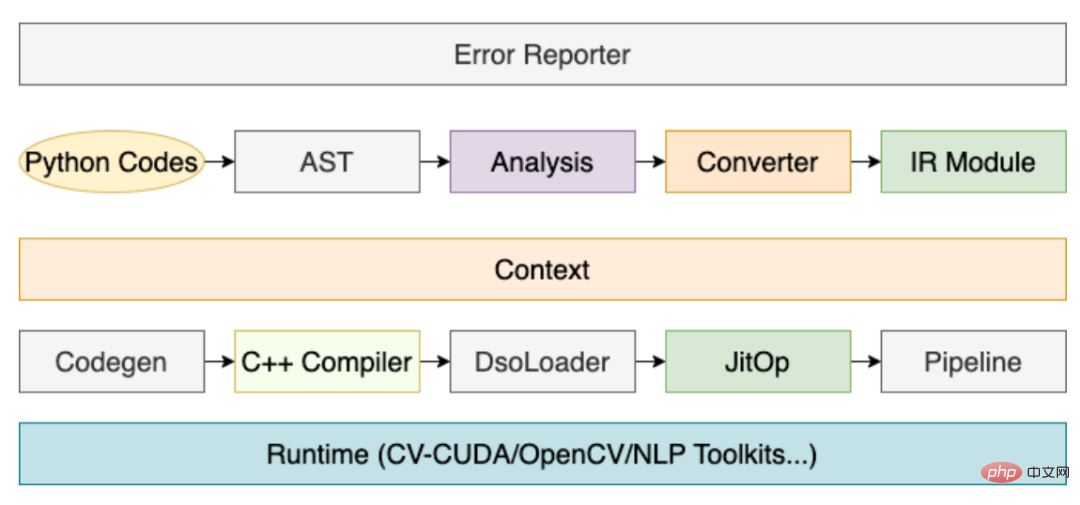

The compiler plays a critical role here; its core process is as follows:

Through the above process, the preprocessing code written by the user is compiled into a JitOp in the Pipeline. To link the pre- and post-processing with the model, we also developed a tracing system (its interface design follows PyTorch). The architecture is as follows:

With MATXScript, the same code can be used for training and inference, which greatly reduces the cost of model deployment. At the same time, architecture and algorithm work are decoupled: algorithm engineers can work entirely in Python, while architecture engineers focus on compiler development and runtime optimization. At ByteDance, this solution has been validated by large-scale deployment!

4. A quick example

Here we use the simplest English text preprocessing as an example to show how to use MATXScript.

Goal: convert a piece of English text into indexes

- Write a basic dictionary lookup logic
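A minimal sketch of what such lookup logic could look like follows, written as ordinary type-annotated Python. The class name, vocabulary, and `[UNK]` id are illustrative assumptions, not the article's listing. The project's examples appear to compile a class like this by wrapping it with `matx.script`; treat that call as an assumption and check the linked repository for the exact usage.

```python
from typing import Dict, List


class Text2Ids:
    """Maps words to integer ids; unknown words map to the [UNK] id."""

    def __init__(self) -> None:
        # Toy vocabulary, chosen only for illustration.
        self.table: Dict[str, int] = {"hello": 0, "world": 1, "[UNK]": 2}
        self.unk_id: int = 2

    def lookup(self, word: str) -> int:
        # Fall back to the unknown-word id when the word is not in the table.
        return self.table.get(word, self.unk_id)

    def __call__(self, words: List[str]) -> List[int]:
        return [self.lookup(w) for w in words]


text2ids = Text2Ids()
ids = text2ids(["hello", "world", "unknown"])
```

Keeping the class fully annotated and restricted to built-in containers is a deliberate choice here, since an ahead-of-time compiler for a Python subset generally needs static type information.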

- Write Pipeline
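The pipeline step composes individual operations into one `process` function. The sketch below is plain Python under stated assumptions: `WorkFlow`, `make_lookup`, and the toy vocabulary are hypothetical names, and the lookup is an ordinary closure standing in for the compiled op that MATXScript would provide.

```python
from typing import Callable, Dict, List


def make_lookup(table: Dict[str, int], unk_id: int) -> Callable[[List[str]], List[int]]:
    """Build a word-to-id function; a stand-in for a compiled lookup op."""
    def lookup(words: List[str]) -> List[int]:
        return [table.get(w, unk_id) for w in words]
    return lookup


class WorkFlow:
    """Chains the preprocessing steps: normalize, split, look up ids."""

    def __init__(self) -> None:
        vocab = {"hello": 0, "world": 1, "[UNK]": 2}  # toy vocabulary
        self.text2ids = make_lookup(vocab, unk_id=2)

    def process(self, text: str) -> List[int]:
        words = text.lower().split()
        return self.text2ids(words)


handler = WorkFlow()
result = handler.process("Hello world unknown")
```

Because `process` is an ordinary method, it can be called directly during training and debugging before any export step is involved.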

- Trace Export to disk
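To make the idea of tracing concrete, here is a toy illustration in plain Python. It is not MATXScript's tracer or its on-disk format: the `Recorder` class, the JSON layout, and the op names are all assumptions made for this sketch. It shows the general technique only: run the pipeline once on a sample input, record which registered ops were called and in what order, save that record, and later replay it without the original Python function.

```python
import json
import os
import tempfile
from typing import Any, Callable, Dict, List


class Recorder:
    """Records the sequence of named ops invoked during one example run."""

    def __init__(self) -> None:
        self.ops: Dict[str, Callable[[Any], Any]] = {}
        self.trace: List[str] = []

    def op(self, name: str, fn: Callable[[Any], Any]) -> Callable[[Any], Any]:
        self.ops[name] = fn

        def wrapped(x: Any) -> Any:
            self.trace.append(name)  # note the call, then run the real op
            return fn(x)

        return wrapped

    def save(self, path: str) -> None:
        with open(path, "w", encoding="utf-8") as f:
            json.dump({"ops": self.trace}, f)

    def replay(self, path: str, x: Any) -> Any:
        # Re-run the recorded op sequence on a new input.
        with open(path, encoding="utf-8") as f:
            names = json.load(f)["ops"]
        for name in names:
            x = self.ops[name](x)
        return x


rec = Recorder()
split = rec.op("split", lambda s: s.split())
count = rec.op("count", lambda ws: len(ws))


def process(text: str) -> int:
    return count(split(text))


eager = process("hello world")  # one example run records the op sequence
path = os.path.join(tempfile.mkdtemp(), "trace.json")
rec.save(path)
replayed = rec.replay(path, "a b c")
```

A real tracer records a graph with multiple inputs and outputs rather than a linear chain, and packages compiled code and resources alongside it; the linear list here is the smallest form that still shows record, save, and replay.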

- Load in C++

For the complete code, see: https://github.com/bytedance/matxscript/tree/main/examples/text2ids

Summary: the above is a very simple piece of preprocessing logic implemented in pure Python, which can be loaded and run by generic C++ code. Next, we combine it with a model to show an actual end-to-end multimodal case!

5. Multimodal case

Here we take image-text multimodality (BERT + ResNet) as an example. The model is written in PyTorch, and we show the actual work involved in training and deployment.

- Configure the environment

a. Configure gcc/cuda and other infrastructure (usually the operations team has already done this)

b. Install MATXScript and the basic libraries built on it (text, vision, etc.)

- Write the model code

a. Omitted here; refer to the papers or other open-source implementations

- Write the preprocessing code

a. text

b. vision

- Connect to DataLoader

a. TextPipeline can be used as a normal Python class and plugged directly into a Dataset

b. VisionPipeline involves GPU preprocessing and is better suited to batch processing, so you need to construct a separate DataLoader yourself (a teaser: ByteDance's internal multi-threaded DataLoader will be open-sourced later)

- Add the model code and start training
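For the DataLoader step, the sketch below shows how a text pipeline could be plugged into a map-style dataset. It is plain Python with no PyTorch import; `TextDataset` and `toy_pipeline` are hypothetical names, and the toy pipeline stands in for the article's TextPipeline. In PyTorch, a class of this shape would typically subclass `torch.utils.data.Dataset`, whose map-style protocol relies on `__len__` and `__getitem__`.

```python
from typing import Callable, List, Sequence, Tuple


class TextDataset:
    """Map-style dataset that applies a text pipeline to each sample on access."""

    def __init__(
        self,
        samples: Sequence[Tuple[str, int]],
        pipeline: Callable[[str], List[int]],
    ) -> None:
        self.samples = samples
        self.pipeline = pipeline

    def __len__(self) -> int:
        return len(self.samples)

    def __getitem__(self, index: int) -> Tuple[List[int], int]:
        text, label = self.samples[index]
        return self.pipeline(text), label


def toy_pipeline(text: str) -> List[int]:
    """Stand-in for a real text pipeline: whitespace split plus vocabulary lookup."""
    vocab = {"hello": 0, "world": 1}
    return [vocab.get(w, 2) for w in text.split()]


dataset = TextDataset([("hello world", 1), ("goodbye world", 0)], toy_pipeline)
first = dataset[0]
```

Running the pipeline inside `__getitem__` means the same preprocessing code is exercised on every training sample, which is what makes the later exported version comparable to what the model saw in training.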

- Export the end-to-end inference model

Summary: after the above steps, the end-to-end training and release work is complete. The entire process is written in pure Python and can be fully controlled by the algorithm engineers themselves. If the model computation itself has performance issues, those can also be handled behind the scenes through automatic graph rewriting and optimization.

Note: For complete code examples, see https://github.com/bytedance/matxscript/tree/main/examples/e2e_multi_modal

6. Unified Server

In the previous chapter, we obtained a model package released by an algorithm engineer. This chapter discusses how to load and run it with a unified service.

A complete Server includes: the IDL protocol, batching strategy, process/thread scheduling and placement, model inference...

Here we only discuss model inference; the rest can be developed according to convention. We use a main function to illustrate how a model is loaded and run:

The above code is the simplest case of loading a multimodal model in C++. Server developers only need some simple abstraction and conventions to turn the above code into a unified C++ model serving framework.

7. More information

We are the ByteDance AML machine learning systems team. We are committed to providing the company with a unified, high-performance framework for integrated training and inference, and we also serve partner companies through the Volcano Engine Machine Learning Platform. The platform is expected to provide MATX-related support from 2023, including preset image environments, public samples for common scenarios, and technical support during enterprise onboarding and use, enabling low-cost acceleration and integration of training and inference scenarios. You are welcome to learn more about our products at https://www.volcengine.com/product/ml-platform.
