While everyone eagerly awaits the release of GPT-4, OpenAI has said nothing about it and is still refining its predecessor. Yesterday the San Francisco-based company released a new model called ChatGPT, a derivative of GPT-3 that interacts in a conversational manner.

The dialogue format allows ChatGPT to answer follow-up questions, admit mistakes, challenge incorrect premises, and reject inappropriate requests. This sets it apart from language models that make things up. Meta's language model Galactica, for example, was taken offline within days of its launch because it fabricated content irresponsibly. ChatGPT, by contrast, admits when it does not know something. It also declines questions it was not trained to answer: for example, it will not attempt to answer questions about events that occurred after 2021, nor does it answer questions about specific individuals.

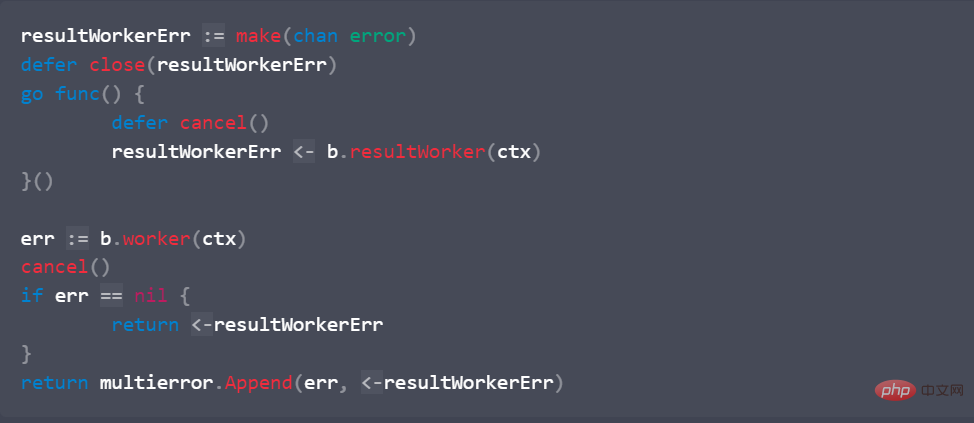

How smart is this model? Debugging code used to be mental work that only programmers could do. Now ChatGPT can take over this drudgery.

User: This code does not run as I expected. How should I fix it?

ChatGPT: It's difficult to say what is wrong with the code without more context. Can you provide more information about it, such as what the code is supposed to do and whether this is the whole code or only a part of it?

User: This is only part of the code. I think there is something wrong with the channel.

ChatGPT: It's difficult to say without more information about what the code is supposed to do and what happens when it is executed. From the information you've provided, one potential issue is that the resultWorkerErr channel is never closed, which means the code could hang if the resultWorkerErr channel is never written to. This can happen if b.resultWorker never returns an error or if it is canceled before it has a chance to return an error.

To solve this problem, you can close the resultWorkerErr channel after writing to it. For example, you could add the following line of code after the line that sends the error to the channel: `close(resultWorkerErr)`

This will ensure that the resultWorkerErr channel is always closed, even if b.resultWorker never returns an error. Again, without more information about the problem you're seeing, it's hard to tell whether this is the issue you're experiencing. Can you provide more details about what happens when you run the code?

The exchange above shows ChatGPT working through a bug, asking question after question until it gets to the bottom of the problem.

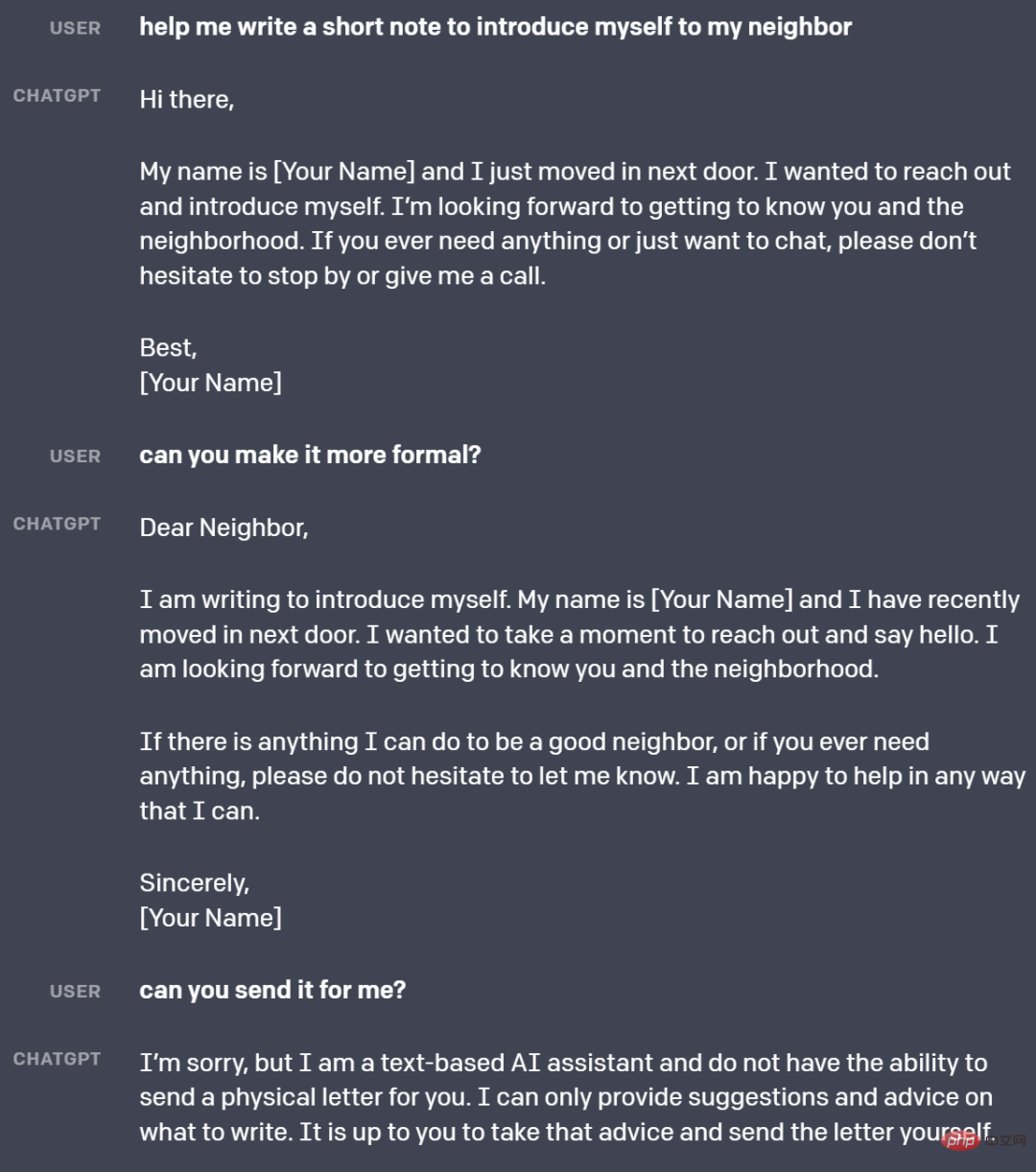

In the example below, ChatGPT responds to a series of follow-up instructions. The user asks ChatGPT to write a short note introducing themselves to their neighbors, and ChatGPT produces one. The user finds it not formal enough, so the good-tempered ChatGPT writes a somewhat more formal version. The user then asks ChatGPT to send the note for them. ChatGPT replies: "Sorry, I am a text-based AI assistant and do not have the ability to send a physical letter for you. I can only provide suggestions on what to write. You can take those suggestions and send the letter yourself."

Does this make you want to try it yourself? As soon as the model was released, many people got hooked and could not tear themselves away. Even Musk said: "Many people are stuck in a damn crazy ChatGPT loop."

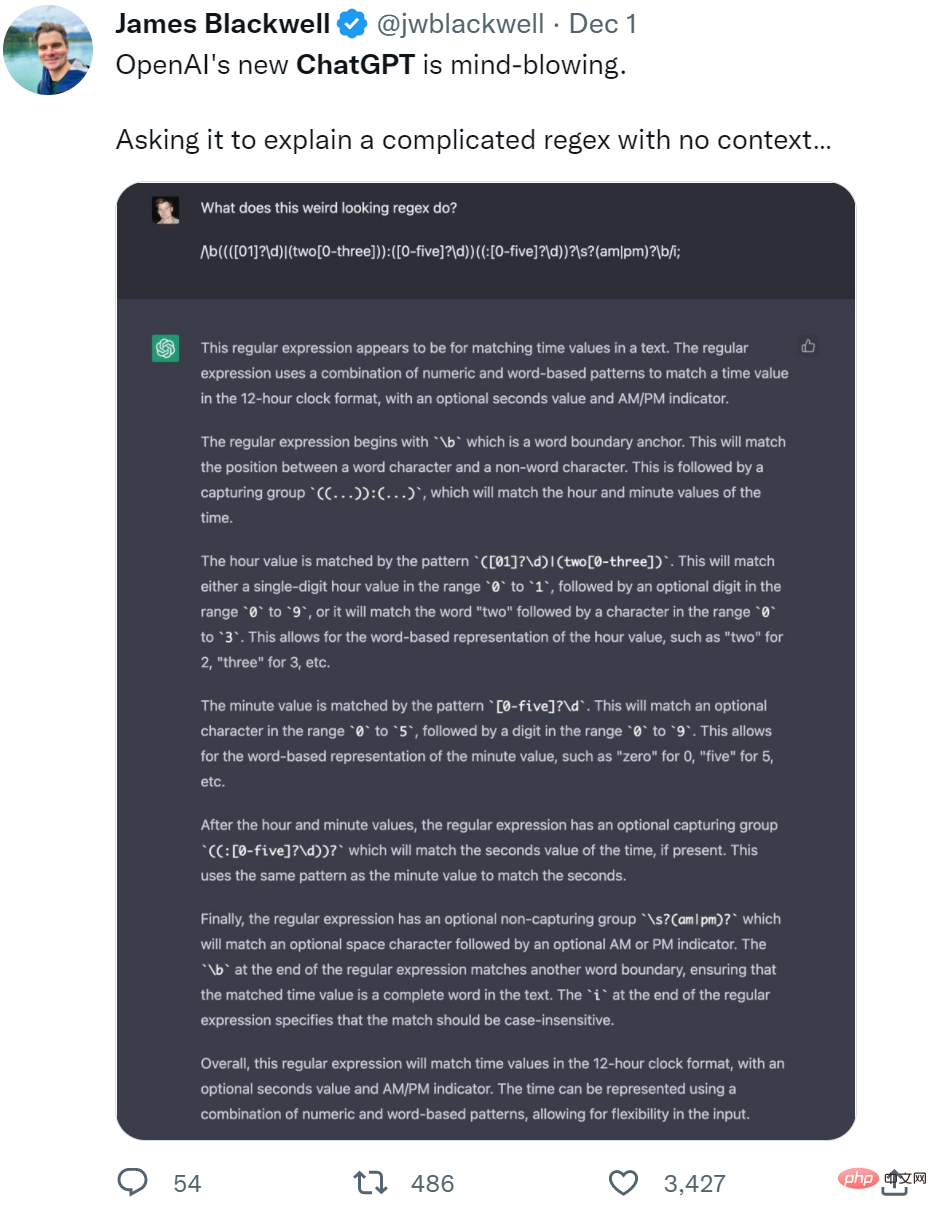

For a while, everyone seemed to have found a new pastime, and one person after another started chatting with ChatGPT. One user reported "asking it to interpret a complex regular expression without context..." That is a genuinely hard problem, but it did not stump ChatGPT. Judging from the length of its answer, the explanation was thorough.

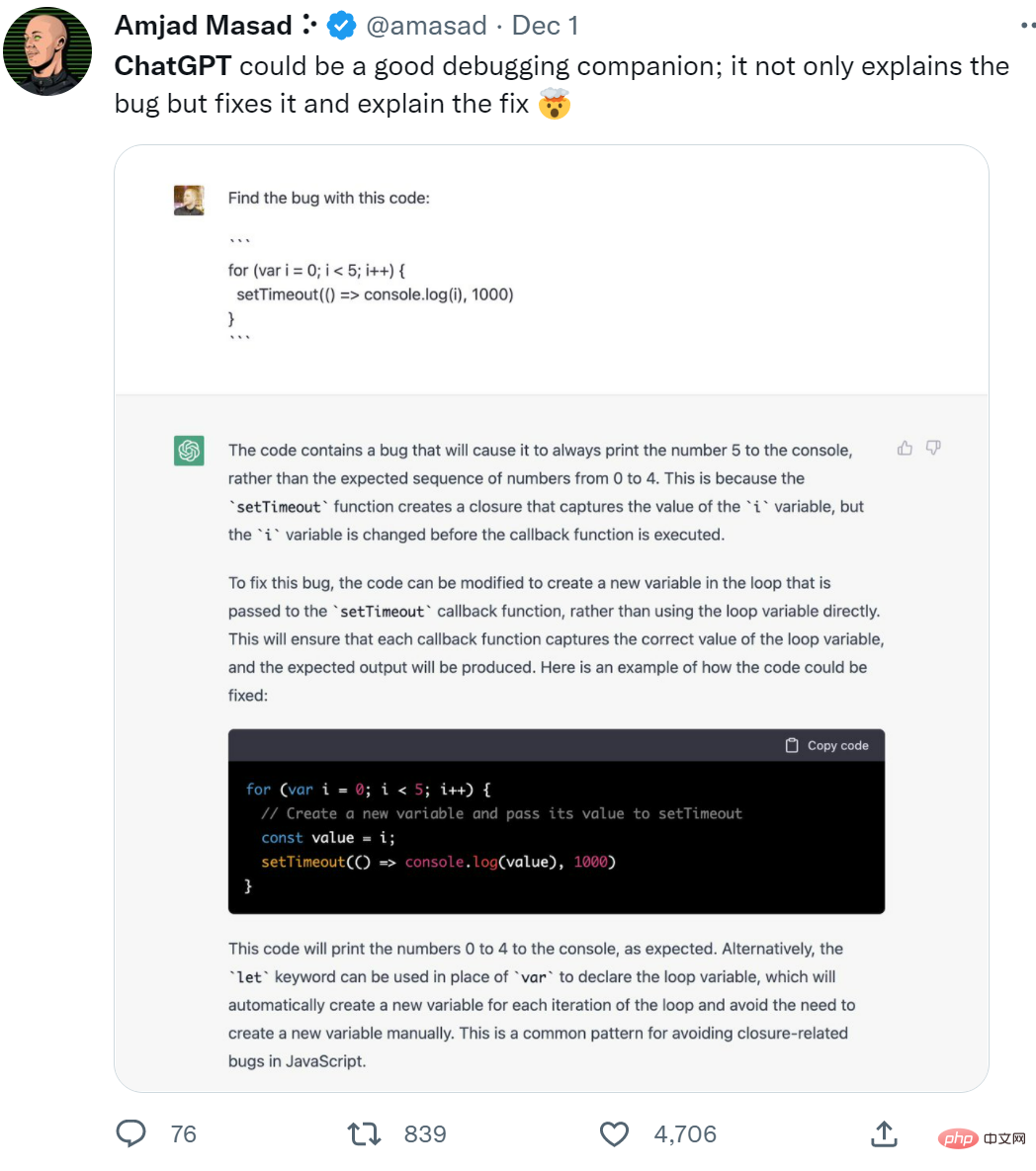

"ChatGPT is a good debugging partner. It not only explains bugs, but also fixes them and explains the fix," one netizen said.

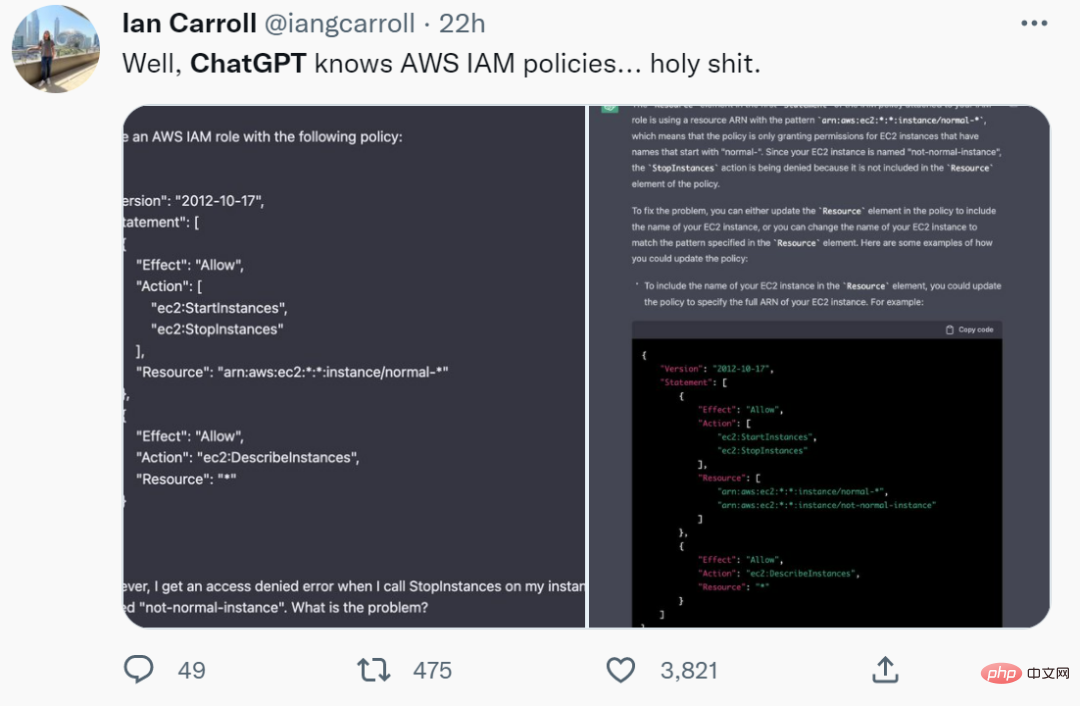

Some netizens said in disbelief: "Oh my god, ChatGPT actually knows the AWS IAM policy."

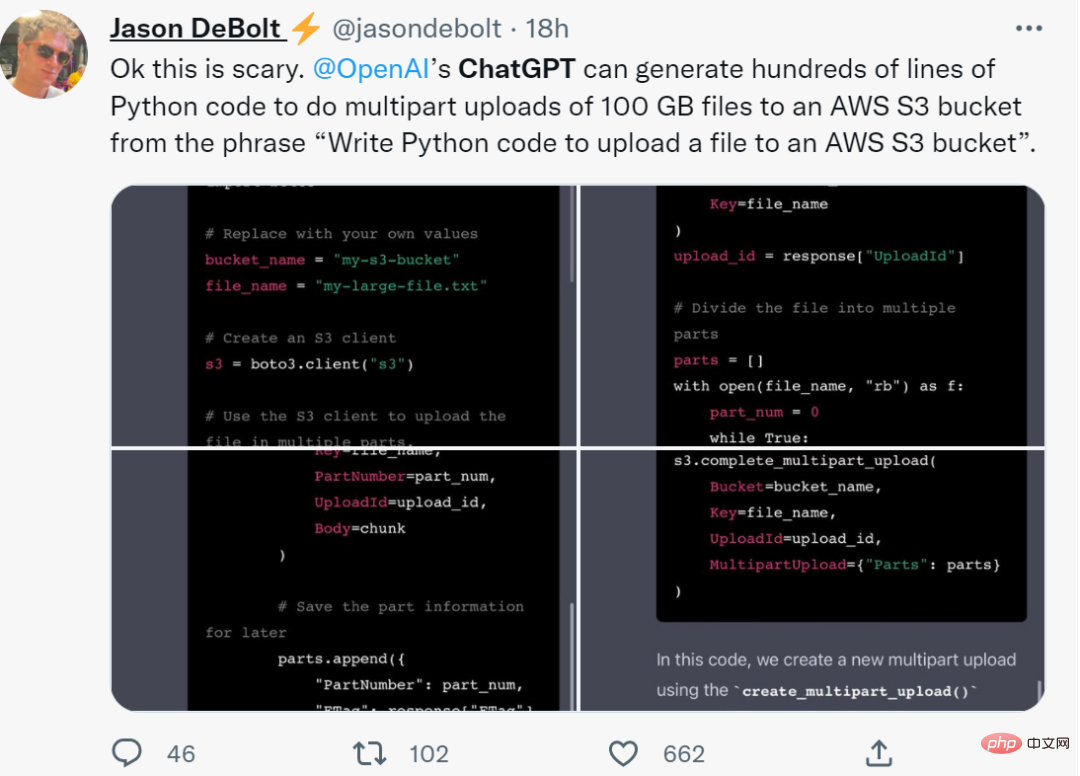

Another netizen said that ChatGPT can generate hundreds of lines of Python code to upload a 100 GB file to an AWS S3 bucket. All it takes is this one sentence: "Write Python code to upload a file to an AWS S3 bucket."
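For a sense of what such an upload involves, here is a minimal sketch written for this article, not ChatGPT's actual output. It assumes the `boto3` library is installed and AWS credentials are configured; the function names `choose_part_size` and `upload_file_to_s3`, the 64 MiB preferred part size, and the concurrency setting are illustrative choices. S3 multipart uploads are limited to 10,000 parts of at least 5 MiB each (except the last), so a 100 GB file needs a part size chosen with that limit in mind.

```python
import math
import os

MIB = 1024 * 1024
S3_MAX_PARTS = 10_000        # S3 multipart uploads allow at most 10,000 parts
S3_MIN_PART_SIZE = 5 * MIB   # every part except the last must be at least 5 MiB


def choose_part_size(file_size: int, preferred: int = 64 * MIB) -> int:
    """Return a part size in bytes that keeps the upload within the part limit."""
    needed = math.ceil(file_size / S3_MAX_PARTS)
    # Round up to a whole MiB so the value is easy to read in logs.
    needed = math.ceil(needed / MIB) * MIB
    return max(preferred, needed, S3_MIN_PART_SIZE)


def upload_file_to_s3(path: str, bucket: str, key: str) -> None:
    """Upload a local file to S3 using boto3's managed multipart transfer."""
    # Imported lazily so choose_part_size can be used without boto3 installed.
    import boto3
    from boto3.s3.transfer import TransferConfig

    part_size = choose_part_size(os.path.getsize(path))
    config = TransferConfig(
        multipart_threshold=part_size,  # switch to multipart above this size
        multipart_chunksize=part_size,  # size of each uploaded part
        max_concurrency=8,              # illustrative number of parallel threads
    )
    s3 = boto3.client("s3")  # credentials come from the usual AWS config chain
    s3.upload_file(path, bucket, key, Config=config)
```

Calling `upload_file_to_s3("backup.tar", "my-bucket", "backups/backup.tar")` (hypothetical file, bucket, and key names) would upload the file in parallel parts.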

The trial website buckled under all the enthusiasm and displayed this message: "We are experiencing exceptionally strong demand. Please wait while we scale up our systems."

Trial address: chat.openai.com

ChatGPT uses the same method as InstructGPT, training the model with reinforcement learning from human feedback (RLHF), but with a slightly different data collection setup. An initial model was trained using supervised fine-tuning: human AI trainers provided conversations in which they played both sides, the user and the AI assistant. The trainers also had access to model-written suggestions to help them compose their responses.

To create a reward model for reinforcement learning, OpenAI needed to collect comparison data consisting of two or more model responses ranked by quality. It took conversations between AI trainers and the chatbot, randomly selected a model-written message, sampled several alternative responses, and asked the AI trainers to rank them. Using the resulting reward model, the model was fine-tuned with the proximal policy optimization (PPO) algorithm, and the whole process was repeated for several iterations.
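How ranked responses can become a training signal for a reward model is sketched below. The article only says that responses are ranked by quality; the pairwise loss used here, the mean of -log(sigmoid(r_preferred - r_rejected)) over all pairs, follows the approach described for InstructGPT and is an assumption as far as ChatGPT is concerned. The function names and the toy reward values are hypothetical, and a real reward model would be a neural network trained by gradient descent on this loss.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps input order, so the first item of each pair
    # is always the one the trainer ranked higher.
    return list(combinations(ranked_responses, 2))


def pairwise_ranking_loss(rewards, ranked_responses):
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over all pairs."""
    pairs = ranking_to_pairs(ranked_responses)
    total = 0.0
    for preferred, rejected in pairs:
        x = rewards[rejected] - rewards[preferred]
        # -log(sigmoid(-x)) equals softplus(x); this form avoids overflow.
        total += max(x, 0.0) + math.log1p(math.exp(-abs(x)))
    return total / len(pairs)


# Toy example: a trainer ranked three responses from best to worst.
ranked = ["response_a", "response_b", "response_c"]
agreeing = {"response_a": 2.0, "response_b": 1.0, "response_c": 0.0}
disagreeing = {"response_a": 0.0, "response_b": 1.0, "response_c": 2.0}

loss_agreeing = pairwise_ranking_loss(agreeing, ranked)
loss_disagreeing = pairwise_ranking_loss(disagreeing, ranked)
```

Reward scores that agree with the human ranking yield a lower loss than scores that contradict it, which is what pushes the reward model toward the trainers' preferences.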

ChatGPT is fine-tuned from a model in the GPT-3.5 series that was trained in early 2022. Both ChatGPT and GPT-3.5 were trained on Azure AI supercomputing infrastructure.

ChatGPT still has limitations. It sometimes writes answers that seem reasonable but are incorrect or nonsensical, and solving this problem is very challenging.

Compared with GPT-3 and Codex, ChatGPT, as the latest model released by OpenAI, has made many improvements in safety and usability. It builds on lessons learned from previous models, and the use of reinforcement learning from human feedback (RLHF) significantly reduces the model's harmful and untruthful outputs.
