Two of Li Feifei's former students co-advise: a robot that understands "multi-modal prompts" improves zero-shot performance by 2.9 times

The next big opportunity in artificial intelligence may be to give AI models a "body" so that they can learn by interacting with the real world.

Compared with natural language processing, computer vision, and other tasks performed in fixed settings, open-world robotics is clearly far more difficult.

For example, prompt-based learning lets a single language model perform almost any natural language processing task, such as writing code, summarizing text, or answering questions, simply by changing the prompt.

Robotics, however, has many more kinds of task specification, such as imitating a single demonstration, following language instructions, or reaching a visual goal. These are usually treated as different tasks and handled by separately trained models.

Recently, researchers from NVIDIA, Stanford University, Macalester College, the California Institute of Technology, Tsinghua University, and the University of Texas at Austin jointly proposed VIMA, a general-purpose Transformer-based robot agent. VIMA uses multi-modal prompts to generalize strongly and can handle a wide range of robot manipulation tasks.

Paper link: https://arxiv.org/abs/2210.03094

Project link: https://vimalabs.github.io/

Code link: https://github.com/vimalabs/VIMA

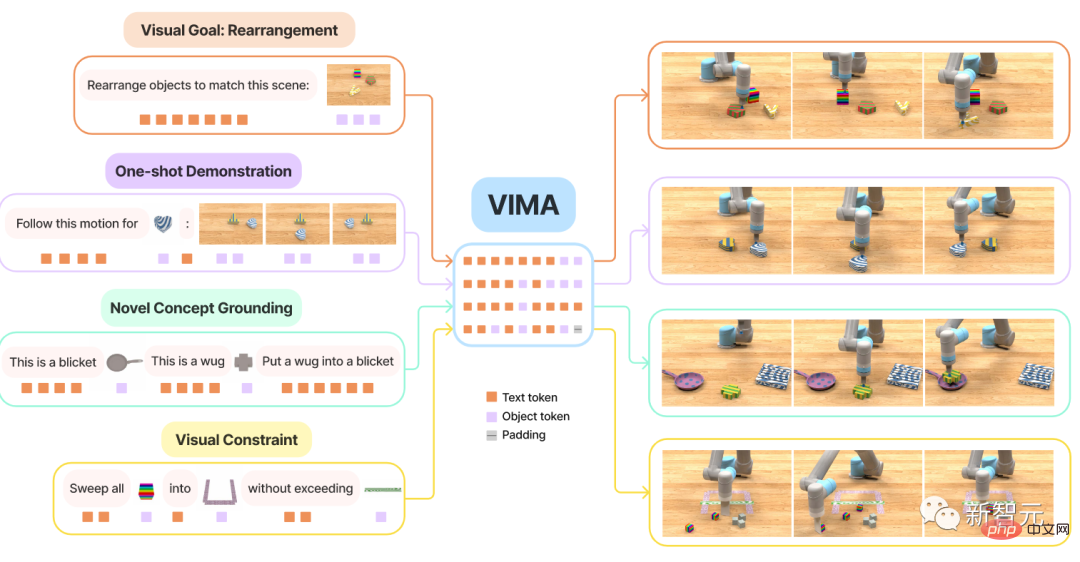

The input prompt interleaves text and visual tokens.

To train and evaluate VIMA, the researchers propose a new simulation benchmark containing thousands of procedurally generated tabletop tasks with multi-modal prompts and more than 600,000 expert trajectories for imitation learning, with four levels for evaluating the model's generalization.

With the same model size and the same amount of training data, VIMA's task success rate under the hardest zero-shot generalization setting is 2.9 times that of the current state-of-the-art method.

With 10 times less training data, VIMA still performs 2.7 times better than other methods. All code, pre-trained models, datasets, and simulation benchmarks are fully open source.

The first author of the paper is Yunfan Jiang, a second-year master's student at Stanford University who is currently an intern at NVIDIA Research. He graduated from the University of Edinburgh in 2020. His main research area is embodied AI, in which agents learn through interaction with their environment; specifically, he studies how to use large-scale foundation models to build open-ended embodied agents.

Both advisors are former students of Li Feifei.

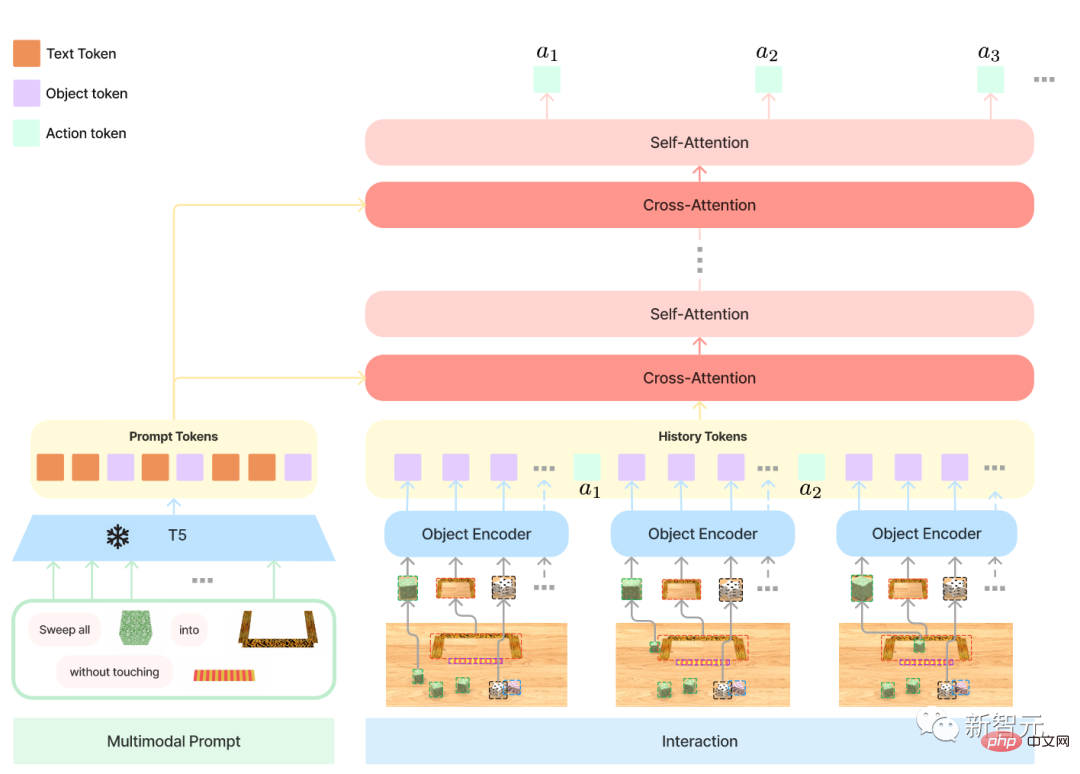

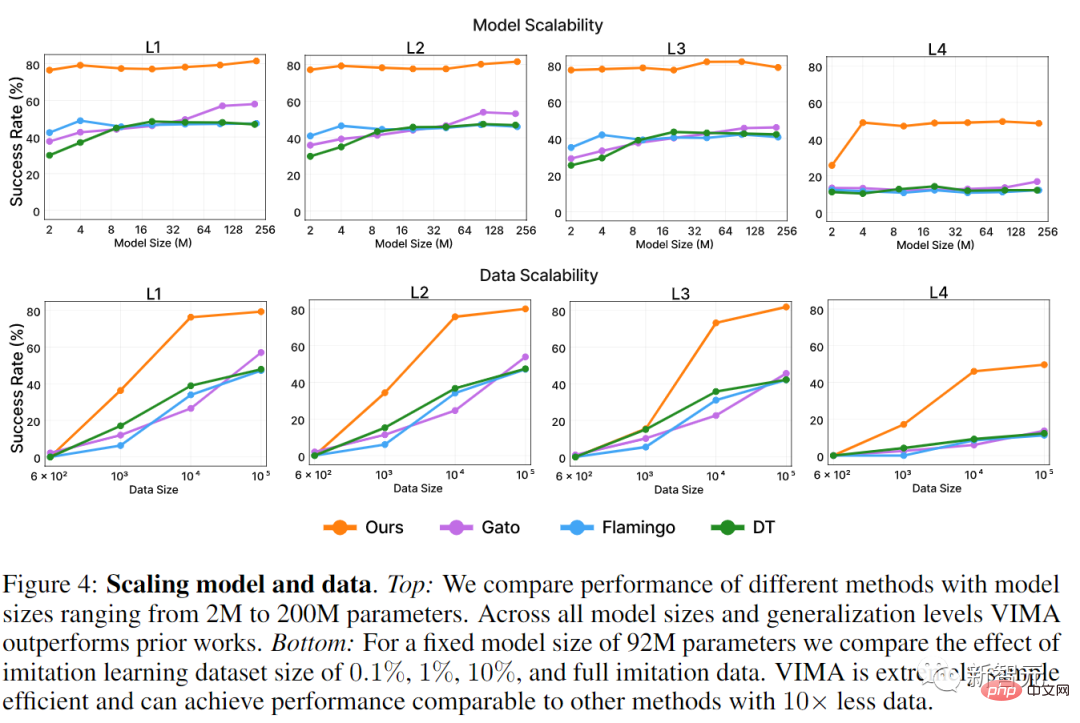

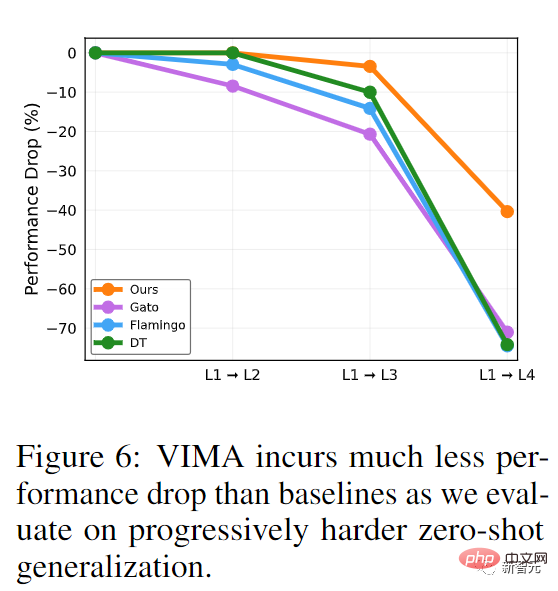

Zhu Yuke received his bachelor's degree from Zhejiang University, earning a dual degree from Zhejiang University and Simon Fraser University in Canada. He completed his master's and doctoral studies at Stanford University under Li Feifei and received his PhD in August 2019. He is currently an assistant professor in the Department of Computer Science at UT Austin, director of the Robot Perception and Learning Lab, and a senior research scientist at NVIDIA Research.

Fan Linxi received his PhD from Stanford University under Li Feifei and is currently a research scientist at NVIDIA AI. His main research direction is the development of generally capable autonomous agents, with work spanning foundation models, policy learning, robotics, multi-modal learning, and large-scale systems.

## Robots and multi-modal prompts

Transformers have achieved strong multi-task performance in NLP: a single model can handle question answering, machine translation, and text summarization at the same time. The interface for switching between tasks is the input text prompt, which passes the specific task requirements to the general-purpose model.

Can this prompt interface also work for a general-purpose robot agent? For a household robot, ideally you would only need to type "Get me" followed by a picture of a cup, and the robot would fetch the cup shown in the picture. When the robot needs to learn a new skill, it would be best if it could learn from a video demonstration. If the robot needs to interact with unfamiliar objects, a single illustration would be enough to explain them. And to ensure safe deployment, users could further specify visual constraints, such as "Do not enter the room".

To realize these capabilities, the VIMA work has three main components:

1. A formulation of multi-modal prompts that turns robot manipulation into a sequence modeling problem;
2. A new robot agent model capable of multi-task manipulation;
3. A large-scale benchmark with diverse tasks for systematically evaluating the agent's scalability and generality.

First, the flexibility of multi-modal prompts lets developers specify a large number of tasks and build a single model that supports them all. The paper considers six types of tasks:

1. Simple object manipulation: the prompt takes the form "put [object] into [container]", where the object and container can be given as images.
2. Visual goal reaching: manipulate objects to reach a goal configuration, such as a rearrangement.
3. Novel concept grounding: the prompt contains unfamiliar words such as "dax" or "blicket", which are explained by images in the prompt and then used directly in the instructions. This tests how quickly the agent grounds new concepts.
4. One-shot video imitation: watch a video demonstration and learn to reproduce it with specific objects.
5. Visual constraint satisfaction: the robot must manipulate objects carefully to avoid violating safety constraints.
6. Visual reasoning: some tasks require the agent to reason, such as "put all objects with the same texture as [the pictured object] into a container", and others require visual memory.

These six types are not mutually exclusive. For example, a task may introduce a previously unseen verb (novel concept) through a demonstration video (imitation).

As the saying goes, you cannot cook a meal without rice: to train the model, the researchers also prepared supporting data in the form of VIMA-BENCH, a benchmark for multi-modal robot learning. For simulation environments, existing benchmarks generally target specific task specifications, and there is currently no benchmark that offers both a rich suite of multi-modal tasks and a comprehensive testbed for probing agent capabilities in a targeted way.
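All six task types share one interface: a prompt that interleaves words and images. The sketch below shows one way such a prompt could be represented and built from a text template with image slots. It assumes a prompt is a flat list of text strings and image references; `ImageRef`, `instantiate`, `render`, and the slot names `dragged_obj`/`base_obj` are hypothetical illustrations, not VIMA's actual code or API.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class ImageRef:
    """Hypothetical stand-in for an image in the prompt (e.g. an object crop)."""
    name: str


# A prompt is a flat sequence that interleaves text and images.
Segment = Union[str, ImageRef]


def instantiate(template: str, **slots: ImageRef) -> List[Segment]:
    """Replace each {slot} in a text template with the matching image,
    producing an interleaved text/image prompt."""
    segments: List[Segment] = []
    rest = template
    while "{" in rest:
        before, _, after = rest.partition("{")
        key, _, rest = after.partition("}")
        if before:
            segments.append(before)
        segments.append(slots[key])  # raises KeyError if a slot is left unfilled
    if rest:
        segments.append(rest)
    return segments


def render(prompt: List[Segment]) -> str:
    """Human-readable view of a prompt: images are shown as <name>."""
    return "".join(s if isinstance(s, str) else f"<{s.name}>" for s in prompt)


# Usage: a "simple object manipulation" prompt with two image slots.
prompt = instantiate(
    "Put the {dragged_obj} into the {base_obj}.",
    dragged_obj=ImageRef("red_block.png"),
    base_obj=ImageRef("green_bowl.png"),
)
print(render(prompt))  # Put the <red_block.png> into the <green_bowl.png>.
```

Keeping images as separate list elements, rather than flattening them into the text, is what lets a model later tokenize each modality with its own encoder.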
To fill this gap, the researchers built VIMA-BENCH by extending the Ravens robot simulator to support an extensible collection of objects and textures, which are used to compose multi-modal prompts and procedurally generate a large number of tasks. Specifically, VIMA-BENCH provides 17 meta-tasks with multi-modal prompt templates, which can be instantiated into 1,000 individual tasks. Each meta-task belongs to one or more of the six task specification types above. VIMA-BENCH can generate large amounts of imitation learning data through scripted oracle agents.

Observations and actions: the simulator's observation space consists of RGB images rendered from a frontal view and a top-down view. Ground-truth object segmentations and bounding boxes are also provided for training object-centric models. VIMA-BENCH inherits the high-level action space of prior work, which consists of primitive motor skills such as "pick and place" and "wipe", each parameterized by end-effector poses.

The simulator also includes a scripted oracle that uses privileged simulator state, such as the exact positions of all objects, together with the ground-truth interpretation of the multi-modal instructions, to produce expert demonstrations.

Finally, the researchers used these pre-programmed oracles to generate a large offline dataset of expert trajectories for imitation learning. The dataset contains 50,000 trajectories for each meta-task, for a total of 650,000 successful trajectories. A subset of object models and textures is held out for evaluation, and 4 of the 17 meta-tasks are reserved for zero-shot generalization testing.

Every VIMA-BENCH task has only a success or failure outcome; there is no reward signal for intermediate states. At test time, the researchers run the agent's policy in the physics simulator to compute the success rate, and the average success rate across all evaluated meta-tasks is the final reported metric.
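The dataset size and the reported metric can both be checked with a little arithmetic. This is a sketch under two stated assumptions: that only the 13 non-held-out meta-tasks contribute training trajectories (which is what makes 50,000 per meta-task add up to 650,000), and that "average success rate across all evaluated meta-tasks" weights each meta-task equally. The function names and episode outcomes are hypothetical, not data from the paper.

```python
from statistics import mean
from typing import Dict, List

# Figures quoted in the text.
TOTAL_META_TASKS = 17
HELD_OUT_META_TASKS = 4  # reserved for zero-shot testing
TRAJECTORIES_PER_META_TASK = 50_000

# Assumption: only the non-held-out meta-tasks contribute training trajectories.
TRAINING_TRAJECTORIES = (TOTAL_META_TASKS - HELD_OUT_META_TASKS) * TRAJECTORIES_PER_META_TASK


def success_rate(outcomes: List[bool]) -> float:
    """Share of episodes that met the task's binary success criterion."""
    return sum(outcomes) / len(outcomes)


def reported_metric(results: Dict[str, List[bool]]) -> float:
    """Mean of per-meta-task success rates, weighting every meta-task equally."""
    return mean(success_rate(o) for o in results.values())


# Usage with made-up episode outcomes (not results from the paper).
example = {
    "meta_task_a": [True, False, False, False],  # success rate 0.25
    "meta_task_b": [True, True],                 # success rate 1.0
}
print(TRAINING_TRAJECTORIES)     # 650000
print(reported_metric(example))  # 0.625
```

The weighting choice matters: pooling all six episodes in the example would give 3/6 = 0.5 rather than 0.625, so a meta-task with few test episodes would count for less.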
The evaluation protocol has four levels that systematically probe the agent's generalization ability. Each level deviates further from the training distribution, so each level is strictly harder than the one before:

1. Placement generalization: all prompts are seen verbatim during training, but at test time the placement of objects on the tabletop is randomized.
2. Combinatorial generalization: all materials (adjectives) and 3D objects (nouns) are seen during training, but new combinations of them appear at test time.
3. Novel object generalization: test prompts and the simulated workspace include new adjectives and objects.
4. Novel task generalization: new meta-tasks with new prompt templates appear at test time.

## VIMA model

A multi-modal prompt can contain three kinds of content:

1. Text, which is tokenized and embedded with the pre-trained T5 model;
2. Images of the full tabletop scene: Mask R-CNN first detects all individual objects, each object is represented by its bounding box and a cropped image, and these are encoded with a bounding-box encoder and a ViT, respectively;
3. Images of single objects, which are likewise converted into tokens with a ViT.

The resulting token sequence is then fed into the pre-trained T5 encoder.

In the robot controller, the decoder takes the trajectory history as input and attends to the encoded prompt sequence through multiple cross-attention layers. This design strengthens the connection to the prompt, retains the original prompt tokens and processes them more deeply, and is more computationally efficient.

The experiments were designed to answer three questions:

1. How does VIMA compare with previous state-of-the-art Transformer-based agents on a variety of tasks with multi-modal prompts?
2. How does VIMA scale with model capacity and data volume?
3. Do different visual tokenizers, prompt conditioning methods, and prompt encodings affect the final decisions?
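The cross-attention conditioning described above can be sketched in a few lines. This is a deliberately simplified illustration, not VIMA's implementation: it assumes a single attention head, no learned query/key/value projections (the prompt tokens serve directly as keys and values), no self-attention among history tokens, and toy 2-d vectors. What it does show is the pattern the text describes: history tokens query the encoded prompt at every layer, and the prompt tokens are reused unchanged rather than re-processed. All names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], prompt: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention: each trajectory-history token
    (query) attends over the encoded prompt tokens (used as keys and values)."""
    d = len(prompt[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in prompt]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, prompt)) for j in range(d)])
    return out


def condition_on_prompt(history: List[Vector], prompt: List[Vector], num_layers: int = 2) -> List[Vector]:
    """Stack cross-attention layers with residual connections. The prompt tokens
    are reused unchanged by every layer; only the history stream is updated."""
    for _ in range(num_layers):
        attended = cross_attention(history, prompt)
        history = [[h + a for h, a in zip(hv, av)] for hv, av in zip(history, attended)]
    return history


# Usage: two toy 2-d prompt tokens and one history token.
prompt_tokens = [[1.0, 0.0], [0.0, 1.0]]
print(condition_on_prompt([[0.0, 0.0]], prompt_tokens, num_layers=1))  # [[0.5, 0.5]]
```

A history token that is equally similar to both prompt tokens receives their average; one aligned with a particular prompt token draws mostly from that token.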
The baselines compared are Gato, Flamingo, and Decision Transformer (DT).

Model scaling: the researchers trained all methods at sizes from 2M to 200M parameters, always keeping the encoder at T5-base size. VIMA outperforms the other methods at every level of the zero-shot generalization evaluation. Although Gato and Flamingo improve with larger model sizes, VIMA remains better than all of them.

Data scaling: the researchers trained each method on 0.1%, 1%, 10%, and 100% of the imitation learning dataset. With only 1% of the data, VIMA matches the L1 and L2 generalization performance of other methods trained on 10 times as much data. On L4, with only 1% of the training data, VIMA already outperforms other models trained on the full dataset.

Progressive generalization: on the harder generalization levels, without any fine-tuning, VIMA shows the smallest performance regression, particularly from L1 to L2 and from L1 to L3, whereas the other models degrade by more than 20%. This suggests that VIMA has learned a more generalizable policy and more robust representations.

Reference: https://arxiv.org/abs/2210.03094
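The data-scaling splits and the progressive-generalization comparison reduce to simple arithmetic. This sketch assumes the splits are fractions of the 650,000-trajectory dataset described earlier, and reads "degraded by more than 20%" as a relative drop from the L1 score; the article does not say whether the drop is relative or absolute, so that reading is an assumption. The success rates in the usage line are made up for illustration, not numbers from the paper.

```python
from typing import Dict

FULL_DATASET = 650_000  # expert trajectories, from the text
FRACTIONS = {"0.1%": 0.001, "1%": 0.01, "10%": 0.1, "100%": 1.0}


def subset_sizes(full: int = FULL_DATASET) -> Dict[str, int]:
    """Number of trajectories in each data-scaling split."""
    return {label: round(full * frac) for label, frac in FRACTIONS.items()}


def relative_drop(score_l1: float, score_lk: float) -> float:
    """Relative regression from L1 to a harder level Lk, as a fraction of L1."""
    return (score_l1 - score_lk) / score_l1


print(subset_sizes())  # {'0.1%': 650, '1%': 6500, '10%': 65000, '100%': 650000}
# Made-up success rates (percent), not numbers from the paper:
print(relative_drop(80.0, 60.0))  # 0.25
```

Under this reading, "VIMA with 1% of the data matches baselines trained on 10 times the data" compares roughly 6,500 trajectories against roughly 65,000.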

