New breakthrough in HCP laboratory of Sun Yat-sen University: using causal paradigm to upgrade multi-modal large models

Sun Yat-sen University's Human-Computer Intelligence Fusion Laboratory (HCP) has produced a strong body of work on AIGC and multi-modal large models. More than ten of its papers were accepted at the recent AAAI 2023 and CVPR 2023, placing it in the first echelon of global research institutions.

One of these works, "Masked Images Are Counterfactual Samples for Robust Fine-tuning", uses causal modeling to significantly improve the controllability and generalization of multi-modal large models during fine-tuning.

Link: https://arxiv.org/abs/2303.03052

Fine-tuning large pre-trained models on downstream tasks is currently a popular deep learning paradigm. In particular, the recent outstanding performance of ChatGPT, a large pre-trained language model, has made this paradigm widely recognized. After pre-training on massive data, these models can adapt to the changing data distributions of real environments and therefore show strong robustness in general scenarios.

However, when a large pre-trained model is fine-tuned on downstream data to adapt it to a specific application, that data lacks diversity in the vast majority of cases. Fine-tuning on such data often reduces the model's robustness, which makes applications built on the pre-trained model hard to deploy. The problem is especially prominent for vision models: because images are far more diverse than language, downstream fine-tuning degrades the robustness of vision-related pre-trained models particularly sharply.

Previous methods usually preserve the robustness of the fine-tuned model implicitly at the parameter level, for example through model ensembling. However, these works did not analyze the underlying reason why fine-tuning degrades out-of-distribution performance, nor did they directly address the loss of robustness after fine-tuning large models.

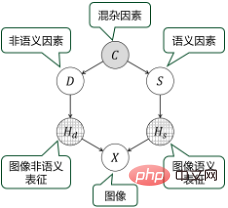

This work builds on a cross-modal large model, analyzes the underlying causes of robustness loss in pre-trained large models from the perspective of causality, and accordingly proposes a fine-tuning method that significantly improves model robustness. The method lets the model stay robust while adapting to downstream tasks, which better meets the needs of practical applications.

Take CLIP (Contrastive Language–Image Pre-training), the cross-modal pre-trained large model released by OpenAI in 2021, as an example. CLIP is trained by contrastive joint learning over images and text, and it is the basis for generative models such as Stable Diffusion. It is trained on massive multi-source data containing about 400 million image-text pairs and, to a certain extent, learns causal relationships that are robust to distribution shift.
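To make "contrastive joint learning" concrete, here is a minimal sketch of a CLIP-style symmetric contrastive loss in plain Python. It is an illustration under stated assumptions, not CLIP's actual implementation: the function names are hypothetical, the embeddings are toy vectors rather than encoder outputs, and the temperature is fixed at 0.07 for simplicity.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy(logits, target):
    """Cross-entropy of one row of logits against the index of the correct class."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: image i should match text i and vice versa.

    Assumption: image_embs[i] and text_embs[i] come from the same pair.
    """
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] is the scaled cosine similarity of image i and text j.
    logits = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(
        cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2

# Toy batch: correctly paired embeddings versus deliberately swapped ones.
matched = clip_style_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_style_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

In this toy batch the correctly paired embeddings give a much lower loss than the swapped ones, which is the signal that pulls matching image and text representations together during pre-training.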

However, fine-tuning CLIP on downstream data with uniform features can easily destroy the causal knowledge the model has learned, because the non-semantic and semantic representations of the training images are highly entangled. For example, when transferring CLIP to a "farm" downstream scenario, many of the training images show "cows" on grass. Fine-tuning may then teach the model to rely on the representation of grass, which is not part of the "cow" semantics, to predict the semantics of the image. This correlation does not always hold: "cows" may also appear on a road. As a result, the fine-tuned model is less robust, and its outputs in deployment may become extremely unstable and hard to control.
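The failure mode described above can be shown with a deliberately tiny toy example. Everything here is hypothetical: the samples are hand-written tuples rather than images, the "car" class is added only so the task has two labels, and the two predictors are hard-coded rules standing in for what a fine-tuned model might learn.

```python
# Each sample is (object, background, label).
# In the training data, background and label are perfectly correlated.
train = [
    ("cow", "grass", "cow"),
    ("cow", "grass", "cow"),
    ("car", "road", "car"),
    ("car", "road", "car"),
]
# In the shifted test data, the correlation is broken (a cow on the road).
shifted = [
    ("cow", "road", "cow"),
    ("car", "grass", "car"),
]

def background_shortcut(obj, background):
    """Predicts from the non-semantic factor only (the spurious shortcut)."""
    return "cow" if background == "grass" else "car"

def semantic_rule(obj, background):
    """Predicts from the semantic factor only (the object itself)."""
    return obj

def accuracy(predict, data):
    """Fraction of samples whose prediction equals the label."""
    return sum(predict(obj, bg) == label for obj, bg, label in data) / len(data)

shortcut_train = accuracy(background_shortcut, train)      # 1.0
shortcut_shifted = accuracy(background_shortcut, shifted)  # 0.0
semantic_shifted = accuracy(semantic_rule, shifted)        # 1.0
```

Both rules fit the training data perfectly, so training accuracy alone cannot tell them apart. Only the shifted data exposes the shortcut.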

Drawing on the team's years of experience in building and training large models, this work re-examines the robustness loss caused by fine-tuning pre-trained models from the perspective of causality. Following its causal modeling and analysis, the work proposes a fine-tuning method that constructs counterfactual samples from image masks and improves model robustness through masked image learning.

Specifically, to break spurious correlations in downstream training images, this work proposes a method based on class activation maps (CAM) that masks and replaces the content of specific image regions. Manipulating either the non-semantic or the semantic representation of an image in this way generates counterfactual samples. Through distillation, the fine-tuned model learns to imitate the pre-trained model's representations of these counterfactual samples, which better decouples the influence of semantic and non-semantic factors and improves adaptation to distribution shifts in the downstream domain.
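The two ingredients named above, CAM-guided masking and feature distillation, can be sketched in plain Python as follows. This is a simplified illustration, not the paper's implementation: the article does not specify the threshold, the source of the replacement content, or the exact distillation loss, so the `threshold` parameter, the `fill` image, and the mean-squared-error loss are all assumptions, as are the function names.

```python
def mask_by_cam(image, cam, fill, threshold=0.5, mask_object=False):
    """Build a counterfactual sample by replacing CAM-selected pixels.

    image, cam, fill: 2D lists of equal shape (toy single-channel images).
    mask_object=False replaces low-activation pixels (non-semantic content).
    mask_object=True replaces high-activation pixels (semantic content).
    The threshold and the fill image are illustrative assumptions.
    """
    result = []
    for img_row, cam_row, fill_row in zip(image, cam, fill):
        row = []
        for pixel, activation, fill_pixel in zip(img_row, cam_row, fill_row):
            is_object = activation >= threshold
            replace = is_object if mask_object else not is_object
            row.append(fill_pixel if replace else pixel)
        result.append(row)
    return result

def distillation_loss(student_features, teacher_features):
    """Mean squared error between fine-tuned and pre-trained features (assumed form)."""
    diffs = [(s - t) ** 2 for s, t in zip(student_features, teacher_features)]
    return sum(diffs) / len(diffs)

# Toy 3x3 image: the object (value 9) sits in the top-left, background is 1.
image = [[9, 9, 1], [9, 9, 1], [1, 1, 1]]
cam = [[0.9, 0.8, 0.1], [0.7, 0.9, 0.2], [0.1, 0.0, 0.3]]
fill = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]  # stand-in replacement content

background_replaced = mask_by_cam(image, cam, fill)
object_replaced = mask_by_cam(image, cam, fill, mask_object=True)

# Features would come from the two encoders; these are hand-written stand-ins.
loss = distillation_loss([1.0, 2.0], [1.0, 4.0])
```

In a real training loop, the masked image would be fed to both the frozen pre-trained encoder and the encoder being fine-tuned, and a loss of this kind would be added to the ordinary downstream task loss.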

Experiments show that this method significantly improves the performance of the pre-trained model on downstream tasks and, at the same time, has a significant robustness advantage over existing fine-tuning methods for large models.

The significance of this work is that it opens up, to a certain extent, the "black box" that pre-trained large models inherit from the deep learning paradigm, and helps address the "interpretability" and "controllability" problems of large models, bringing us closer to the tangible productivity gains that pre-trained large models promise.

The HCP team at Sun Yat-sen University has studied the large-model technology paradigm for many years, since the advent of the Transformer. It is committed to improving the training efficiency of large models and to introducing causal models to solve their "controllability" problem. Over the years, the team has independently researched and developed multiple large pre-trained models for vision, language, speech, and cross-modal tasks. The "Wukong" cross-modal large model, developed jointly with Huawei's Noah's Ark Laboratory (link: https://arxiv.org/abs/2202.06767), is a typical example.

Team Introduction

The Sun Yat-sen University Human-Computer-Object Intelligence Fusion Laboratory (HCP Lab) conducts systematic research in multi-modal recognition and intelligent computing, robotics and embedded systems, the metaverse and digital humans, and controllable content generation. It works closely with application scenarios to build product prototypes, has produced a large number of original technologies, and has incubated entrepreneurial teams. The laboratory was founded in 2010 by Professor Lin Liang, an IAPR Fellow. It has won the first prize of the Science and Technology Award of the China Image and Graphics Society, the Wu Wenjun Natural Science Award, a provincial first prize in natural science, and other honors, and it has trained national-level young talents such as Liang Xiaodan and Wang Keze.
