TensorFlow's next version makes a pledge! The official team lays out 'four pillars', commits to 100% backward compatibility, and targets a 2023 release

TensorFlow or PyTorch: which do you choose?

Many "alchemists" (deep learning practitioners) have been tormented by TF: static graphs, dependency problems, and interfaces that change for no apparent reason. Even after Google released TF 2.0, these problems were not solved. Those who finally gave up and switched to PyTorch found the sky had cleared.

"Life is short, I use PyTorch"

Then Google itself began promoting JAX as its new-generation computing framework. It looked as if Google had given up on TF, leaving TensorFlow only half a step from the grave.

Just before TF's seventh birthday, the TensorFlow team published a blog post announcing that TensorFlow will continue to be developed, that a new version will be released in 2023 to clean up its messy and inconsistent interfaces, and that the team promises 100% backward compatibility!

TensorFlow looks to the future

About seven years ago, on November 9, 2015, TensorFlow was officially open-sourced.

Since then, thousands of open-source contributors, Google Developer Experts, community organizers, researchers, and educators around the world have invested in TensorFlow's development.

Today, seven years later, TensorFlow is the most commonly used machine learning platform, used by millions of developers.

TF is the third-ranked software repository on GitHub (after Vue and React) and the most downloaded machine learning package on PyPI.

TF also brings machine learning to the mobile ecosystem: TFLite runs on 4 billion devices.

TensorFlow also brings machine learning to the browser: TensorFlow.js is downloaded 170,000 times per week.

TensorFlow powers nearly all production machine learning across Google's product portfolio, including Search, Gmail, YouTube, Maps, Play, Ads, Photos, and more.

Beyond Google, at other Alphabet subsidiaries, TensorFlow and Keras provide the machine intelligence behind Waymo's self-driving cars.

In the broader industry, TensorFlow powers machine learning systems at thousands of companies, including most of the world's largest machine learning users: Apple, ByteDance, Netflix, Tencent, Twitter, and others.

In research, Google Scholar indexes more than 3,000 new scientific publications mentioning TensorFlow or Keras every month.

Today, TF's user base and developer ecosystem are larger than ever, and still growing!

TensorFlow's growth is not only an achievement worth celebrating but also an opportunity to deliver even more value to the machine learning community.

The team's goal is to provide the best machine learning platform on the planet and to help turn machine learning from a niche craft into an industry as mature as web development.

To achieve this goal, the development team is willing to listen to user needs, anticipate new industry trends, iterate the software's interfaces, and strive to make large-scale innovation increasingly easier.

Machine learning is evolving rapidly, and so is TensorFlow.

The development team has begun working on the next iteration of TensorFlow, which is meant to support the next decade of machine learning development. Onward to the future!

The four pillars of TensorFlow

Fast and scalable: XLA compilation, distributed computing, performance optimization

TF will focus on XLA compilation, building on XLA's performance wins on TPU, to make training and inference workflows for most models faster on GPU and CPU. The team hopes XLA will become the industry-standard deep learning compiler; it is now open source as part of the OpenXLA initiative.
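Much of a compiler like XLA's speedup comes from operator fusion: several elementwise operations are compiled into one kernel, so intermediate results never have to be written out to memory. The pure-Python sketch below only illustrates that idea conceptually; it is not XLA or TensorFlow code, and the function names are hypothetical. In TensorFlow itself, XLA compilation of a function is typically requested with `tf.function(jit_compile=True)`.

```python
from typing import List


def unfused(x: List[float], w: float, b: float) -> List[float]:
    """Three separate 'kernels', each materializing an intermediate buffer."""
    scaled = [xi * w for xi in x]            # kernel 1: multiply
    shifted = [si + b for si in scaled]      # kernel 2: add
    return [max(si, 0.0) for si in shifted]  # kernel 3: ReLU


def fused(x: List[float], w: float, b: float) -> List[float]:
    """One 'kernel': multiply, add, and ReLU applied per element in a single pass."""
    return [max(xi * w + b, 0.0) for xi in x]


if __name__ == "__main__":
    data = [-2.0, -0.5, 0.0, 1.5, 3.0]
    print(unfused(data, 2.0, 1.0))  # [0.0, 0.0, 1.0, 4.0, 7.0]
    print(fused(data, 2.0, 1.0))    # same values, without the two intermediate lists
```

Both versions compute the same result; the fused one simply avoids allocating and re-reading the intermediate buffers, which on real accelerators is where much of the memory-bandwidth saving comes from.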

At the same time, the team has begun work on DTensor, a new API for large-scale model parallelism that may unlock the future of very large model training and deployment. When developing large models, users should feel as if they are training on a single machine, even when they are using many clients at once.

DTensor will be unified with the tf.distribute API to support flexible model and data parallelism.

The team will also invest further in algorithmic performance optimizations such as mixed-precision and reduced-precision computation, which can deliver considerable speedups on GPUs and TPUs.
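Data parallelism is one reason a multi-device run can "feel like a single machine". The pure-Python sketch below illustrates only that data-parallel principle under simplifying assumptions (a one-parameter linear model, mean-squared-error loss, equal-size shards, and simulated replicas); it does not use the DTensor or tf.distribute APIs, and every name in it is hypothetical. It shows that averaging per-shard gradients, as a synchronous all-reduce would, reproduces the full-batch gradient.

```python
from typing import List, Sequence


def mse_grad(w: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """d/dw of mean((w*x - y)^2) over one batch: (2/N) * sum((w*x - y) * x)."""
    n = len(xs)
    return 2.0 / n * sum((w * x - y) * x for x, y in zip(xs, ys))


def shard(values: Sequence[float], num_replicas: int) -> List[List[float]]:
    """Split a batch into equal, contiguous shards, one per simulated replica."""
    size = len(values) // num_replicas
    return [list(values[i * size:(i + 1) * size]) for i in range(num_replicas)]


def data_parallel_grad(w: float, xs: Sequence[float], ys: Sequence[float],
                       num_replicas: int) -> float:
    """Each replica computes a local gradient; a mean all-reduce combines them."""
    assert len(xs) % num_replicas == 0, "this sketch assumes equal-size shards"
    local_grads = [
        mse_grad(w, xs_shard, ys_shard)
        for xs_shard, ys_shard in zip(shard(xs, num_replicas), shard(ys, num_replicas))
    ]
    return sum(local_grads) / num_replicas


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    ys = [3.0 * x for x in xs]
    print(mse_grad(0.5, xs, ys))               # full-batch gradient on one "machine"
    print(data_parallel_grad(0.5, xs, ys, 4))  # same value from 4 simulated replicas
```

The equality holds because each shard's gradient is already a mean over equally many examples; with unequal shards, a real system would need to weight the reduction by shard size.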
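Reduced precision is fast but loses range and resolution. The standard-library sketch below (not TensorFlow code; the helper names are hypothetical) uses Python's `struct` `'e'` format, which packs IEEE 754 half precision, to show two problems mixed-precision training has to manage: tiny gradients underflow to zero in float16, which loss scaling mitigates, and small updates to a weight near 1.0 are rounded away, which is why full-precision master weights are commonly kept. In Keras, mixed precision is typically enabled with `tf.keras.mixed_precision.set_global_policy("mixed_float16")`.

```python
import struct


def to_float16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value.

    Uses the struct 'e' (binary16) format; raises OverflowError above ~65504.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def scaled_gradient_survives(grad: float, loss_scale: float) -> bool:
    """Simulate loss scaling: scale up, store in float16, unscale in full precision."""
    stored = to_float16(grad * loss_scale)
    return stored / loss_scale != 0.0


if __name__ == "__main__":
    tiny_grad = 1e-8
    print(to_float16(tiny_grad))                        # 0.0: underflows in float16
    print(scaled_gradient_survives(tiny_grad, 1024.0))  # True: scaling keeps it representable
    print(to_float16(1.0 + 1e-4))                       # 1.0: the small update is lost
```

The loss scale of 1024 here is an arbitrary illustrative value; real training code usually adjusts the scale dynamically so that scaled values neither underflow nor overflow.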

Applied Machine Learning

New tools for computer vision and natural language processing.

The team is investing in the applied machine learning ecosystem, in particular through the KerasCV and KerasNLP packages, which provide modular, composable components for applied CV and NLP use cases, including a large number of state-of-the-art pretrained models.

For developers, the team will also add more code samples, guides, and documentation for popular and emerging applied machine learning use cases. The ultimate goal is to steadily lower the barrier to entry for machine learning and make it a tool in the hands of every developer.

Easier to deploy

Exporting models to mobile devices (Android or iOS), edge devices (microcontrollers), server backends, or JavaScript will become simpler.

In the future, exporting models to TFLite and TF.js and optimizing their inference performance will be as simple as calling mod.export().

At the same time, the team is developing a public TF2 C API for native server-side inference that can be integrated directly into C programs.

Whether you serve models developed with JAX or TensorFlow through TensorFlow Serving, or deploy to mobile and the web with TensorFlow Lite and TensorFlow.js, deployment will become easier.

Simpler

As the field of machine learning has expanded over the past few years, TensorFlow also has a growing number of interfaces, and they are not always presented in a consistent or easy-to-understand way.

The team is actively consolidating and simplifying these APIs, for example by adopting the NumPy API standard for numerics.

Debugging also matters: a good framework is defined not only by its API design but also by its debugging experience.

The team's goal is to minimize the time-to-solution for developing any applied machine learning system through better debugging capabilities.

Commitment: 100% backward compatibility

The team wants TensorFlow to be a cornerstone of the machine learning industry, so API stability is its most important feature.

Engineers who rely on TensorFlow in their products, and builders of TensorFlow ecosystem packages, should be able to upgrade to the latest TensorFlow version and immediately benefit from new features and performance improvements without worrying that their existing codebase might break.

Therefore, the development team promises full backward compatibility from TensorFlow 2 to the next version.

TensorFlow 2 code will run as-is, with no code translation or manual changes.

The team plans to release a preview of the new TensorFlow capabilities in Q2 2023 and a production version later that year.

The above is the detailed content of The new version of TensorFlow has another flag! The official team clarified the 'four pillars': committed to 100% backward compatibility and released in 2023. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to install tensorflow in conda

Dec 05, 2023 am 11:26 AM

How to install tensorflow in conda

Dec 05, 2023 am 11:26 AM

Installation steps: 1. Download and install Miniconda, select the appropriate Miniconda version according to the operating system, and install according to the official guide; 2. Use the "conda create -n tensorflow_env python=3.7" command to create a new Conda environment; 3. Activate Conda environment; 4. Use the "conda install tensorflow" command to install the latest version of TensorFlow; 5. Verify the installation.

Create a deep learning classifier for cat and dog pictures using TensorFlow and Keras

May 16, 2023 am 09:34 AM

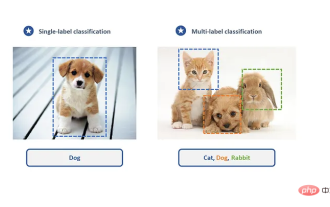

Create a deep learning classifier for cat and dog pictures using TensorFlow and Keras

May 16, 2023 am 09:34 AM

In this article, we will use TensorFlow and Keras to create an image classifier that can distinguish between images of cats and dogs. To do this, we will use the cats_vs_dogs dataset from the TensorFlow dataset. The dataset consists of 25,000 labeled images of cats and dogs, of which 80% are used for training, 10% for validation, and 10% for testing. Loading data We start by loading the dataset using TensorFlowDatasets. Split the data set into training set, validation set and test set, accounting for 80%, 10% and 10% of the data respectively, and define a function to display some sample images in the data set. importtenso

pip installation tensorflow tutorial

Dec 07, 2023 pm 03:50 PM

pip installation tensorflow tutorial

Dec 07, 2023 pm 03:50 PM

Installation steps: 1. Make sure that Python and pip have been installed; 2. Open the command prompt or terminal window and enter the "pip install tensorflow" command to install TensorFlow; 3. If you want to install the CPU version of TensorFlow, you can use "pip install tensorflow- cpu" command; 4. After the installation is complete, you can use TensorFlow in Python.

TensorFlow.js can handle machine learning on the browser!

Apr 13, 2023 pm 03:46 PM

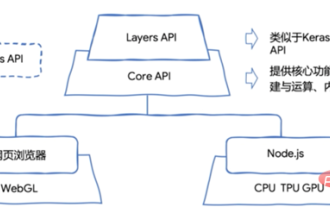

TensorFlow.js can handle machine learning on the browser!

Apr 13, 2023 pm 03:46 PM

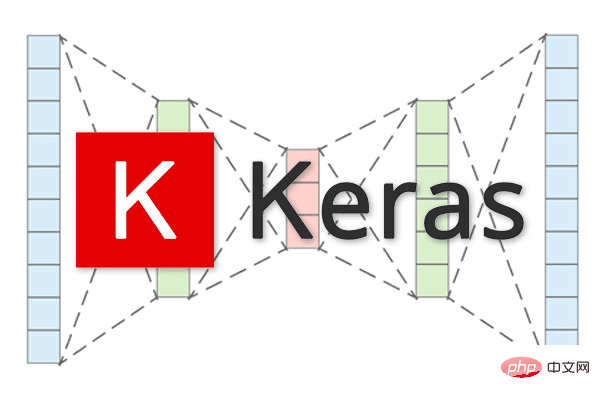

Today, with the rapid development of machine learning, various machine learning platforms emerge in endlessly. In order to meet the needs of different business scenarios, machine learning models can be deployed to Android, iOS, and Web browsers respectively, so that the models can be deduced on the terminal side, thus Realize the potential of your model. TensorFlow.js is the JavaScript version of TensorFlow, supports GPU hardware acceleration, and can run in Node.js or browser environments. It not only supports developing, training and deploying models from scratch based entirely on JavaScript, but can also be used to run existing Python versions of TensorFlow models, or based on existing

Create machine learning models and neural network applications using PHP and TensorFlow.

May 11, 2023 am 08:22 AM

Create machine learning models and neural network applications using PHP and TensorFlow.

May 11, 2023 am 08:22 AM

With the increasing development of artificial intelligence and machine learning, more and more developers are exploring the use of different technologies to build machine learning algorithms and applications. As a general-purpose language, PHP is gradually being used in the field of artificial intelligence. This article will introduce how to use PHP and TensorFlow to create machine learning models and neural network applications, helping developers better master this technology. Introduction to PHP and TensorFlow PHP is a scripting language suitable for web development and can be used for server-side scripts and also

Zebra launches new upgraded version of Thinking Machine: an interactive learning experience with artificial intelligence as the core

Aug 15, 2023 pm 05:09 PM

Zebra launches new upgraded version of Thinking Machine: an interactive learning experience with artificial intelligence as the core

Aug 15, 2023 pm 05:09 PM

Zebra officially released the Zebra Thinking Machine G2 on August 2, which is a comprehensive enlightenment learning machine with "Thinking Machine + Expansion Question Cards" as the core combination. It uses AI and big data to assist in the development of professional content to help children grow in an all-round way. According to reports, Zebra has launched a new generation upgraded flagship product that integrates AI technology and professional digital content. This is the latest product after smart toy teaching aids such as pinyin recognition machines, reading pens, and picture book machines. Zebra also announced the latest sales data for its full range of children's smart toy and teaching aids products, which currently exceed 4.3 million units. Tang Qiao, head of Zebra's toy and teaching aids business, pointed out that in addition to hardware, the competition barrier in the thinking machine category mainly lies in professional content R&D. The unique highlight of Zebra Thinking Machine is the use of AI big data to assist content development

TensorFlow, PyTorch, and JAX: Which deep learning framework is better for you?

Apr 09, 2023 pm 10:01 PM

TensorFlow, PyTorch, and JAX: Which deep learning framework is better for you?

Apr 09, 2023 pm 10:01 PM

Translator | Reviewed by Zhu Xianzhong | Ink Deep learning affects our lives in various forms every day. Whether it’s Siri, Alexa, real-time translation apps on your phone based on user voice commands, or computer vision technology that powers smart tractors, warehouse robots, and self-driving cars, every month seems to bring new advancements. Almost all of these deep learning applications are written in these three frameworks: TensorFlow, PyTorch, or JAX. So, which deep learning frameworks should you use? In this article, we will perform a high-level comparison of TensorFlow, PyTorch, and JAX. Our goal is to educate you on the types of applications that play to their strengths,

PHP and TensorFlow integration to achieve deep learning and artificial intelligence processing

Jun 25, 2023 pm 07:30 PM

PHP and TensorFlow integration to achieve deep learning and artificial intelligence processing

Jun 25, 2023 pm 07:30 PM

In today's era, deep learning and artificial intelligence have become an integral part of many industries. In the process of implementing these technologies, the role of PHP has received more and more attention. This article will introduce how to integrate PHP and TensorFlow to achieve deep learning and artificial intelligence processing. 1. What is TensorFlow? TensorFlow is an artificial intelligence system open sourced by Google. It can help developers create and train deep neural network models.