What can AIGC, driven by ChatGPT, do for vertical industries?

The AIGC craze that began last year has set off an explosion of interest in AIGC and its applications, and many people now feel that the era of strong artificial intelligence is not far away. Behind the boom, however, very few scenarios have actually been put into practice. The more successful applications so far are concentrated in personal consumption, while most industrial applications of AIGC are still at the exploratory stage.

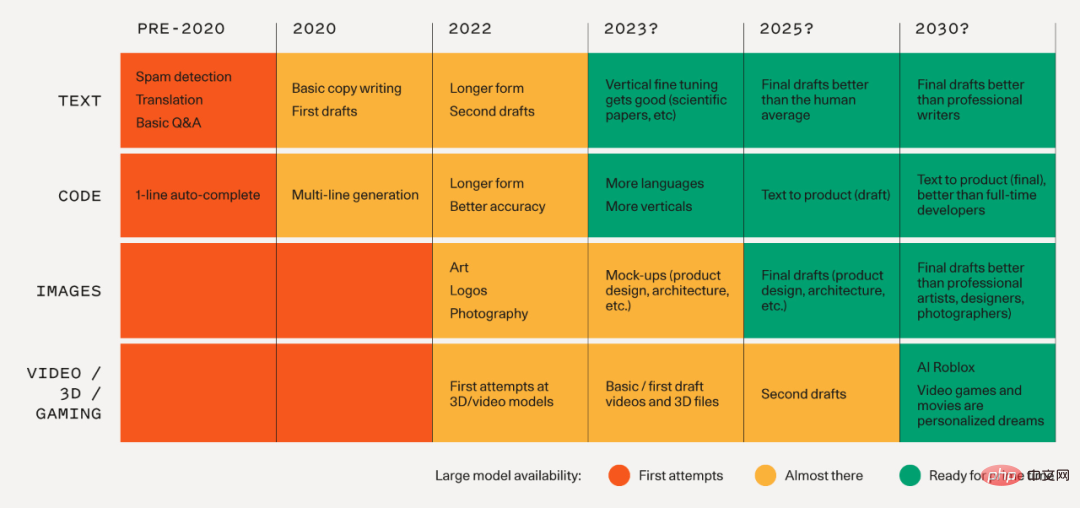

In September 2022, Sequoia Capital made the following prediction for AIGC: text generation would enter its golden period in 2023, and image generation would reach its golden era around 2025; 3D and video generation might be at the draft stage in 2023 and could enter their golden age around 2030. AI generation of text and images is indeed ahead of the curve, while 3D model, video and game generation are still in development.

Sequoia Capital's maturity forecast for AIGC-related products

If we consider industrial applications of AIGC in huge physical verticals such as manufacturing and construction, the "C" (content) in AIGC cannot stay confined to images and text; it needs to enter the three-dimensional realm, which carries richer information. Below, we trace the development path from AIGC technology to products to business applications and value realization, and use industrial examples to explore how AIGC can close the loop and deliver value in industry.

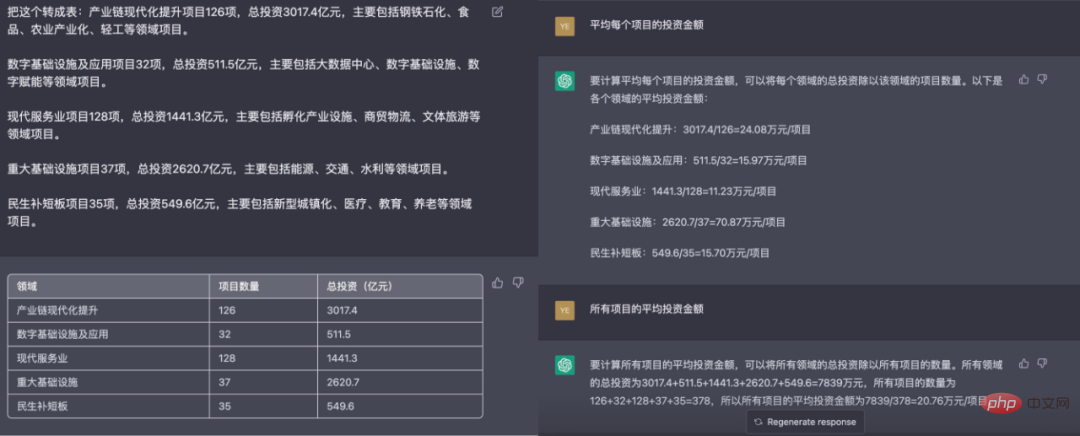

1. AIGC technology: from text to pictures

As the growing number of tests on ChatGPT shows, ChatGPT can not only parse semantics and structure content but also, building on natural language processing (NLP), perform data analysis.

ChatGPT structured content and data analysis - provided by Jiage Data

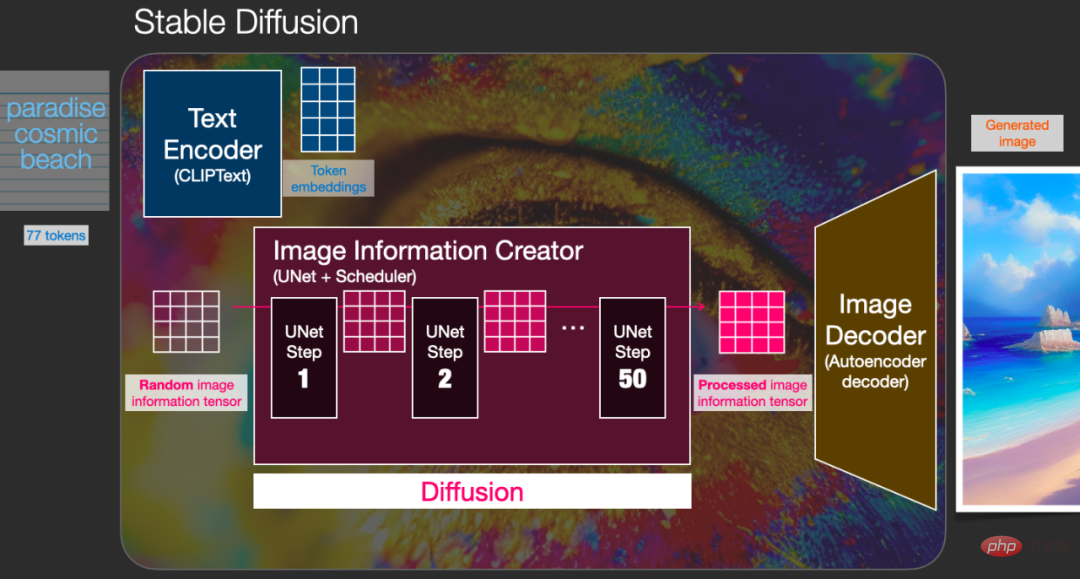

In fact, a number of AI drawing frameworks and platforms, led by Stable Diffusion, caused a sensation earlier last year. Although images seem to carry more complex information than text, image generation matured earlier than GPT-led text generation. It is worth taking the mainstream open-source framework Stable Diffusion as an example to review how these image AIGC frameworks work.

Images generated by Stable Diffusion can rival the work of human painters

Stable Diffusion has three main components, each with its own neural network.

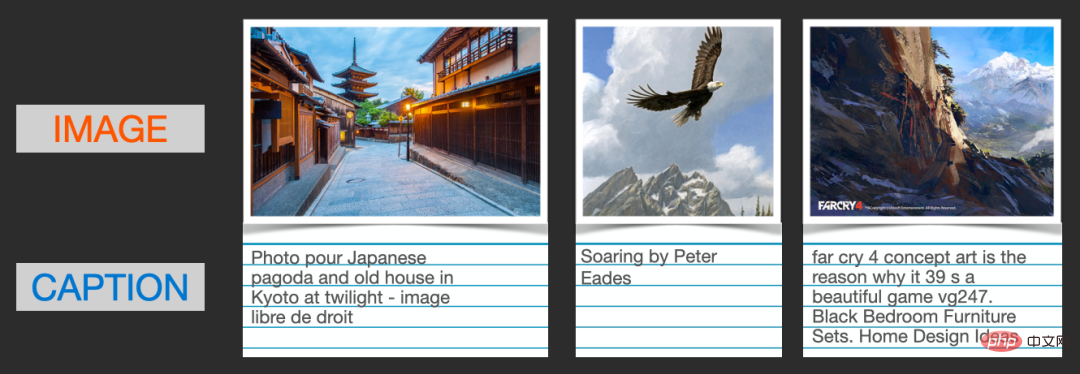

1. CLIP as the text encoder: the input text is converted into semantic information in the form of a 77×768 matrix (77 tokens, each represented by a 768-dimensional vector). CLIP is trained for natural language understanding and computer vision analysis at the same time, and it can judge how well an image corresponds to a text prompt, for example progressively matching the image of a building with the word "architecture". This ability was trained on roughly 400 million images with text descriptions collected from around the world.

Training set of CLIP

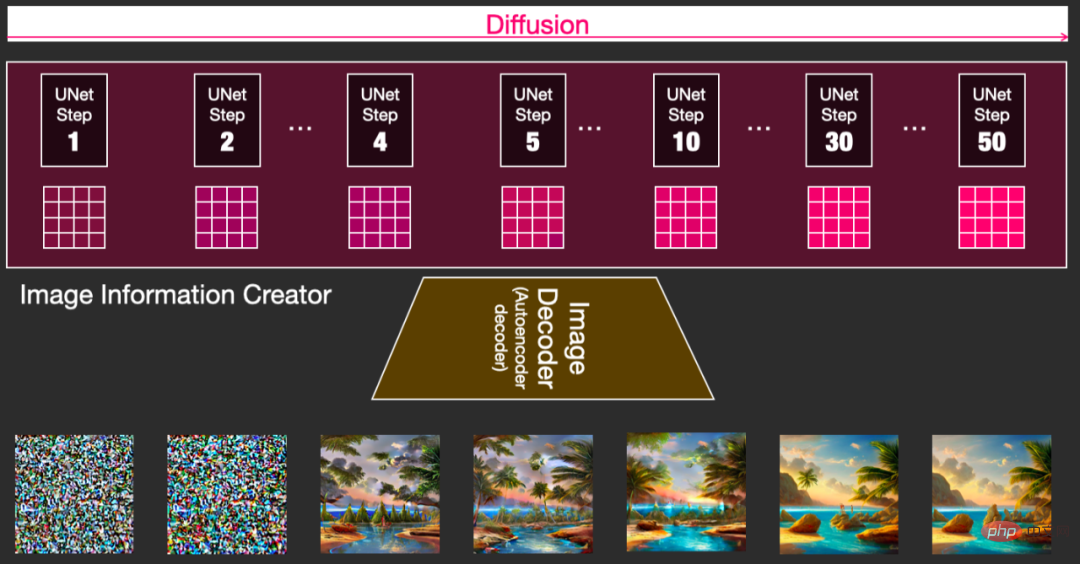
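CLIP's image-text matching can be illustrated with a minimal sketch. An image encoder and a text encoder map their inputs into a shared embedding space, the match between an image and a prompt is scored by cosine similarity, and a softmax over candidate prompts turns the scores into probabilities. The embeddings below are made-up toy values (real CLIP embeddings come from trained encoders and have hundreds of dimensions), and the scale of 100 applied before the softmax is an assumption standing in for the temperature CLIP learns during training.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_prompts(image_emb, prompt_embs, logit_scale=100.0):
    """Softmax probabilities that the image matches each candidate prompt.

    logit_scale is an assumed temperature; CLIP learns this value in training.
    """
    logits = [logit_scale * cosine_similarity(image_emb, p) for p in prompt_embs]
    top = max(logits)  # subtract the max before exp() for numerical stability
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 4-dimensional embeddings, not real CLIP outputs.
image_of_building = [0.9, 0.1, 0.3, 0.0]
prompts = {
    "architecture": [0.8, 0.2, 0.4, 0.1],
    "a cat": [0.0, 0.9, 0.1, 0.4],
    "the ocean": [0.1, 0.0, 0.2, 0.9],
}
probs = match_prompts(image_of_building, list(prompts.values()))
best = max(zip(prompts, probs), key=lambda kv: kv[1])[0]
print(best)  # architecture
```

In Stable Diffusion only the text side of CLIP is used at generation time; the matching ability shown here is what training instilled in that text encoder.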

2. UNet and scheduler: this is the core of the well-known diffusion model (from the Latent Diffusion Model, LDM, proposed by the CompVis and Runway teams in December 2021). It predicts the noise in a sample so that the noise can be removed step by step in reverse, thereby generating an image in the information (latent) space. As the figure shows, diffusion resembles dye spreading through water: a picture gradually turns into random noise. Researchers add random noise to pictures and have the AI learn to reverse the whole process; the result is a model that can generate images from noise maps in the information space.

Diffusion model reverse denoising process
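The noising-and-denoising idea in item 2 can be sketched with the closed-form forward process of the DDPM formulation that latent diffusion builds on: x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The sketch assumes a linear β schedule from 1e-4 to 0.02 over 1000 steps (the values of the original DDPM paper; Stable Diffusion's own scheduler settings may differ) and replaces the trained UNet with an "oracle" that returns the true noise, purely to show that a perfect noise prediction recovers the clean signal. In Stable Diffusion the UNet only approximates ε, it works on latents rather than pixels, and the scheduler removes noise gradually over many steps instead of in one jump.

```python
import math
import random

# Linear beta schedule over T steps (assumed DDPM-paper values).
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] is the product of (1 - beta_s) for all s <= t:
# the fraction of the original signal's variance still present at step t.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)


def add_noise(x0, t, noise):
    """Forward process in closed form: x_t = sqrt(a)*x0 + sqrt(1-a)*noise."""
    a = alpha_bar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]


def estimate_x0(xt, t, predicted_noise):
    """Invert the forward formula using a noise prediction (the UNet's job)."""
    a = alpha_bar[t]
    return [(x - math.sqrt(1.0 - a) * n) / math.sqrt(a)
            for x, n in zip(xt, predicted_noise)]


rng = random.Random(0)
x0 = [0.5, -0.2, 0.8, 0.1]                 # a toy "image" with four values
noise = [rng.gauss(0.0, 1.0) for _ in x0]  # Gaussian noise epsilon
xt = add_noise(x0, 500, noise)             # heavily noised sample at step 500
oracle_prediction = noise                  # stand-in for a perfect UNet
recovered = estimate_x0(xt, 500, oracle_prediction)
print([round(v, 6) for v in recovered])
```

The whole difficulty of diffusion training lies in making the UNet's noise prediction approach this oracle for unseen inputs.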

A familiar analogy: if some dye is dropped at random into clean water, over time it spreads into a gorgeous shape like the ones shown below. Is there a way to work backward from a specific state at a specific moment to the initial amount of dye, the order in which drops were added, and the initial state of the water? Without borrowing methods from AI, this is obviously almost impossible.

Different dyes are dropped into water and spread into different shapes

3. Decoder from the information space to the real picture space: it converts the matrix in the information space into an RGB image visible to the naked eye. Think of how we communicate: the sound signals we hear are converted into signals the brain can understand and stored there, a process called encoding. Expressing those signals in some language can be called decoding. The expression can be in any language, and each language corresponds to a different decoder. Decoding is only a way of expressing; underneath, it still rests on the description and understanding of something held in the mind.
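To give a sense of scale for the "information space", the sketch below assumes the configuration of Stable Diffusion v1, whose VAE downsamples each spatial side by a factor of 8 into 4 latent channels, so a 512×512 RGB image corresponds to a 4×64×64 latent. The function names are hypothetical; the arithmetic only shows how much smaller the space is in which the UNet denoises and from which the decoder reconstructs pixels.

```python
def latent_shape(height: int, width: int, factor: int = 8, channels: int = 4):
    """Shape (C, H, W) of the latent that the decoder turns back into pixels.

    factor=8 and channels=4 match Stable Diffusion v1's VAE; other versions
    may differ (an assumption).
    """
    if height % factor or width % factor:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return channels, height // factor, width // factor


def compression_ratio(height: int, width: int) -> float:
    """Number of RGB pixel values per latent value."""
    c, h, w = latent_shape(height, width)
    return (3 * height * width) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

The decoder's job is to expand each of those latent values back into roughly 48 pixel values, filling in plausible fine detail along the way.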

Stable Diffusion: the entire process from input to output

It is precisely by chaining these key technical steps that Stable Diffusion has created an all-purpose AI drawing robot: it understands semantics and converts them into an information flow in the information space, creates content there through simulated denoising, and restores it through the decoder into an image visible to the naked eye. Before AI, this process would have seemed like science fiction.

2. AIGC technology: from pictures to 3D models

Image generation has achieved breakthrough results, and optimizing those results further and applying them to more fields could create greater value. We have already seen exploration in some specialized fields. For example, by understanding the scene, adding different data sets and adjusting parameters, developers can control image generation more precisely, instead of relying only on endless trial and error with text prompts to get better results.

2.1 Design intent image generation

In early 2019, the "This XX does not exist" series generated with GANs drew wide attention overseas, and in China, too, companies have launched results in specialized fields. In August 2022, the Xiaoku Technology team launched an experimental mobile "AI Creative Library": given a single sentence, the conversational robot understands its meaning within a minute and generates multiple intention images whose detail approaches that of an architectural concept plan. In addition, given an existing picture and modified keywords in its description, the "AI Creative Library" can generate a series of derivative pictures to help designers find inspiration in their daily work.

Xiaoku Technology's "This building does not exist": architectural images generated by a GAN model, and the iteration process

Left: generated by Xiaoku's "AI Creative Library" from the prompt "Louis Kahn style, a small museum surrounded by mountains and rivers"; right: generated by the "AI Creative Library" from the Louis Kahn-style image on the left, with the style switched to Le Corbusier

To make the "AI Creative Library" more effective, the team explored some new approaches. Existing algorithms and models are trained mostly on general Internet material, so their reserve of professional-level architectural images, descriptions and styles is clearly insufficient. The team therefore used special identifiers for construction-related terms to build a fine-tuning prior data set, then fused it with the training data to enhance the model. The resulting model, strengthened for the construction domain, forms an AI creative library dedicated to the construction industry. For short architectural description prompts, the rate of high-quality outputs on the test set rose by 13.6% compared with the original model.

Google DreamBooth fine-tuning algorithm schematic
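The fine-tuning described above follows the pattern of Google's DreamBooth: a rare identifier token is bound to the new concept, and a class-specific prior-preservation term keeps the model from forgetting what the general class looks like. A minimal sketch of that combined objective, in the noise-prediction form common in open-source implementations, is below. The example prompts, the identifier "sks" and the weight of 1.0 are illustrative assumptions, not Xiaoku's actual settings, and plain lists stand in for tensors.

```python
def mse(pred, target):
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def dreambooth_loss(noise_inst, pred_inst, noise_prior, pred_prior, prior_weight=1.0):
    """Instance denoising loss plus weighted class-prior preservation loss.

    noise_inst / pred_inst: true vs. predicted noise for the fine-tuning images,
        conditioned on a prompt containing the rare identifier
        (e.g. "a photo of sks building", a hypothetical prompt).
    noise_prior / pred_prior: the same for images that the frozen original model
        generated from the plain class prompt (e.g. "a photo of a building").
    """
    instance_loss = mse(pred_inst, noise_inst)
    prior_loss = mse(pred_prior, noise_prior)
    return instance_loss + prior_weight * prior_loss


loss = dreambooth_loss(
    noise_inst=[0.1, -0.3], pred_inst=[0.2, -0.1],
    noise_prior=[0.5, 0.0], pred_prior=[0.5, 0.2],
)
print(round(loss, 4))  # 0.045
```

Setting the prior weight to zero reduces this to plain fine-tuning, which is exactly when a model tends to "forget" the general class and, for instance, start drawing the architect instead of the architecture.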

For example, given a picture of a museum and the term "Zaha Hadid" (the late, world-renowned architect), the model understands that the museum's architectural style or features should move closer to Zaha Hadid's works. It should not add a figure or portrait of Zaha Hadid to the museum, or create a cartoon portrait of her, which is often one of the results a general-purpose model returns.

After fine-tuning on architectural data, Xiaoku's "AI Creative Library" fully understands the implied meaning of the special term "Zaha Hadid"

2.2 3D model generation

Although two-dimensional pictures are impressive, in industrial applications they currently serve only as an "intention library". To become deliverables that accurately express a design, they need to move toward 3D and higher information dimensions.

In 2020, when AIGC was far less mature than it is now, the team mentioned above was already exploring how to use AI to generate 3D models, and disclosed its progress while teaching at the DigitalFUTURES workshop at Tongji University. In its algorithm, which generated images from graphics and then models from those images, the model quality at the time was not ideal; what was valuable was the linkage of graphics, image and model.

2020 Tongji University DigitalFUTURES workshop, results of the Xiaoku teaching team: hand-drawn graphics generate images, which then generate models

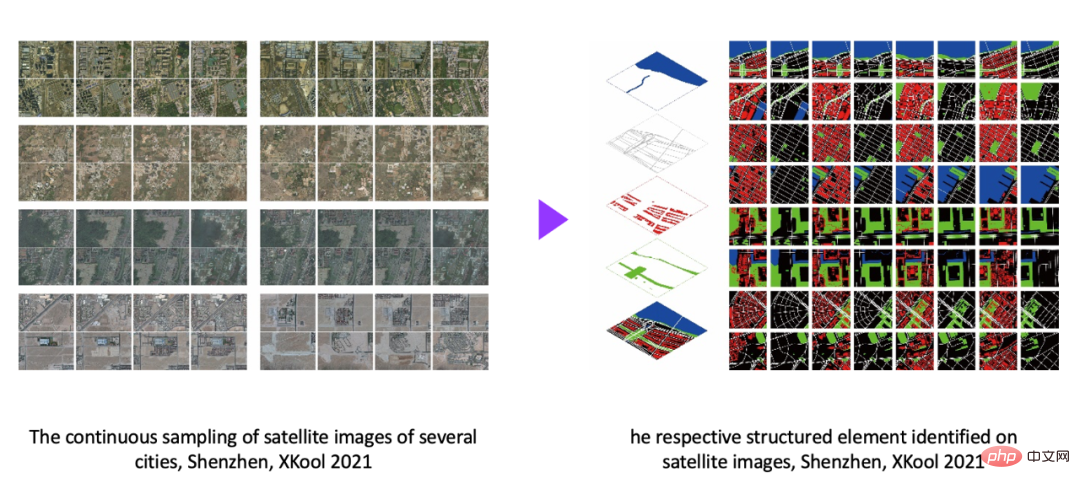

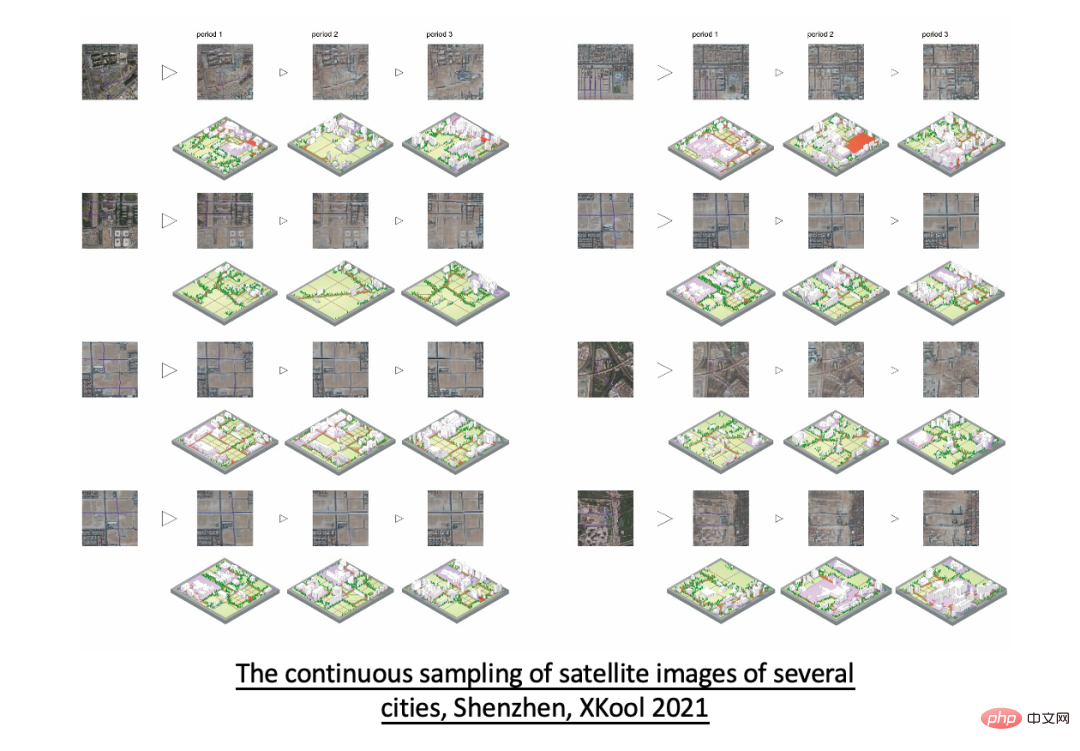

In its second year of teaching at the DigitalFUTURES workshop at Tongji University, the team released an algorithm that uses GANs to learn the relationship between images and real three-dimensional models, and converts an image into a three-dimensional model. By learning features from different layers of elements in the image, the algorithm can roughly restore the extruded three-dimensional shape of the main objects in the image and predict the original height of the objects behind the different projections. The method still has clear flaws. First, it works only on the kind of image scenes it was trained on, and the learned relationship between pictures and three-dimensional shapes is hard to carry over to other scenes. Second, the restored shape only roughly predicts height; other details must be regenerated by separate algorithms, leaving a large error relative to the real three-dimensional model. It is therefore suitable only for early-stage project research and assessment, and its application scenarios are limited.

Training diagram of hierarchical feature extraction for urban three-dimensional models

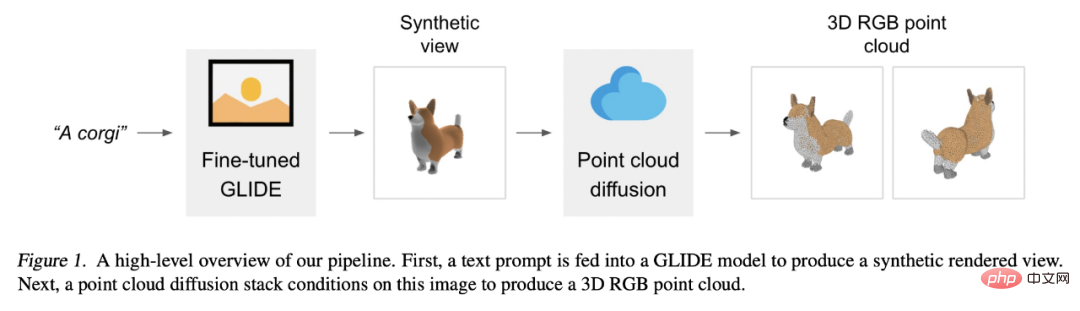
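The "extruded three-dimensional shape" described above is essentially an extrusion: a 2D footprint recognized in the image is lifted into a prism of the predicted height. The sketch below assumes that footprints arrive as ordered polygon vertex lists and heights as plain numbers; both are hypothetical stand-ins for the network's outputs, and `Block`, `polygon_area` and `extrude` are illustrative names. It builds the 2.5D block's vertices and computes its volume with the shoelace formula, which also shows why this representation captures only rough massing and none of the details that, per the text, must be regenerated separately.

```python
from dataclasses import dataclass


@dataclass
class Block:
    vertices: list[tuple[float, float, float]]
    volume: float


def polygon_area(footprint: list[tuple[float, float]]) -> float:
    """Shoelace formula for a simple polygon whose vertices are given in order."""
    n = len(footprint)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = footprint[i]
        x2, y2 = footprint[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def extrude(footprint: list[tuple[float, float]], height: float) -> Block:
    """Lift a 2D footprint into a prism: bottom ring at z=0, top ring at z=height."""
    bottom = [(x, y, 0.0) for x, y in footprint]
    top = [(x, y, height) for x, y in footprint]
    return Block(vertices=bottom + top, volume=polygon_area(footprint) * height)


# Hypothetical recognized L-shaped footprint (metres) and predicted height.
l_shaped = [(0, 0), (20, 0), (20, 10), (10, 10), (10, 30), (0, 30)]
block = extrude(l_shaped, 45.0)
print(len(block.vertices), block.volume)  # 12 18000.0
```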

Thanks to the explosion of AIGC algorithms and the growing maturity of 3D generation algorithms, vertical AI companies have begun absorbing more advanced technologies and ideas to improve their models, and new directions are being tried along the 3D-AIGC route. For example, OpenAI launched the Point-E framework, which uses an algorithm to turn a two-dimensional image into a point cloud and then predicts a three-dimensional object from that point cloud.

Schematic of the full Point-E pipeline

However, the quality of the generated models is still limited, and their shortcomings show up mainly in three areas:

1. Three-dimensional shapes are hard to restore: two-dimensional image data appeared earlier than three-dimensional model data and is far more plentiful, so much more of it is available for training. The scarcity of three-dimensional training material limits generalization, making it difficult to restore the original three-dimensional shape;

2. Materials are largely missing: assigning and selecting materials is one of the most important parts of a three-dimensional model, but methods for estimating materials directly from a picture are still immature. The same material looks different under different shapes, environments and light sources, and when all these variables are compressed into one picture, reconstructing the material is almost impossible;

3. The accuracy of the generated model is not up to standard: models derived from point clouds usually depend on point density to reconstruct the object's surface mesh. If there are too few points, the object is badly distorted, or the model cannot be rebuilt at all.
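The density problem in point 3 can be made concrete with a small experiment: sample N points uniformly on a unit sphere and measure the mean distance from each point to its nearest neighbour. Any surface-reconstruction step has to bridge gaps of roughly that size, so sparser clouds force it to guess more of the surface. The sphere and the point counts are arbitrary choices for illustration, not Point-E settings; the spacing shrinks roughly in proportion to 1/√N.

```python
import math
import random


def sample_sphere(n: int, rng: random.Random):
    """Uniform points on the unit sphere via normalized Gaussian vectors."""
    points = []
    for _ in range(n):
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        points.append([c / norm for c in v])
    return points


def mean_nearest_neighbour_distance(points) -> float:
    """Brute-force O(N^2) average distance from each point to its closest neighbour."""
    total = 0.0
    for i, p in enumerate(points):
        best = math.inf
        for j, q in enumerate(points):
            if i != j:
                best = min(best, math.dist(p, q))
        total += best
    return total / len(points)


rng = random.Random(42)
spacings = {}
for n in (50, 200, 800):
    spacings[n] = mean_nearest_neighbour_distance(sample_sphere(n, rng))
    print(n, round(spacings[n], 3))
```

Quadrupling the number of points roughly halves the gap a mesher must bridge, which is why a sparse cloud for a building facade yields blobs rather than walls and edges.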

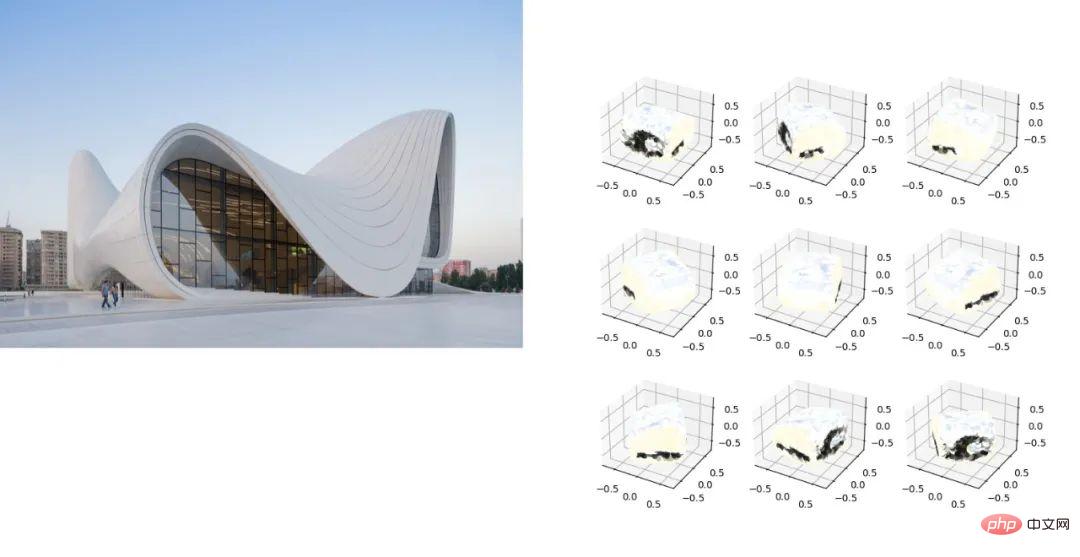

The Xiaoku team tested the Point-E model: a point cloud was generated from the building picture on the left, and the 3D model on the right was extracted from it. Unfortunately, all we got was a meaningless point cloud; Point-E still cannot understand a picture of a building

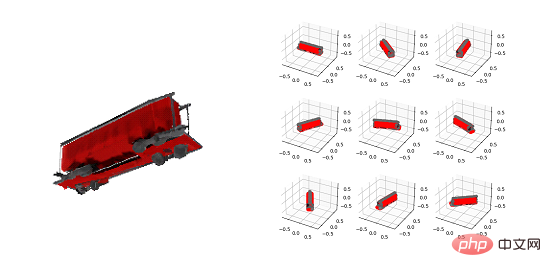

Of course, we understand the current technical bottlenecks. If you lower the bar, choosing a simple shape made in 3D modeling software, taking a 2D screenshot and reconstructing it with Point-E, you will find, surprisingly, that the result is better than in the test above, though still only at the level of a "preliminary draft". This is closely related to the training set: rendering 2D views from every angle in 3D modeling software is one of the easiest ways to obtain training data for this kind of model.

The Xiaoku team tested the Point-E model by selecting a simple model in modeling software, taking screenshots of it at arbitrary angles and reconstructing it in 3D, which often produces fairly good results
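Rendering a 3D model from many viewpoints to build image-to-3D training pairs, as mentioned above, starts with choosing camera positions spread evenly around the object. The sketch below uses a Fibonacci (golden-angle) spiral on a sphere, a common way to get near-uniform directions; the radius, the number of views and the function name are arbitrary assumptions, and the actual rendering call is left to whatever modelling software is used.

```python
import math


def fibonacci_viewpoints(n_views: int, radius: float = 10.0):
    """Camera positions spread almost uniformly on a sphere around the origin."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    views = []
    for i in range(n_views):
        z = 1.0 - 2.0 * (i + 0.5) / n_views  # heights evenly spaced in (-1, 1)
        r = math.sqrt(1.0 - z * z)           # radius of the horizontal circle at z
        theta = golden_angle * i             # rotate by the golden angle each step
        views.append((radius * r * math.cos(theta),
                      radius * r * math.sin(theta),
                      radius * z))
    return views


cams = fibonacci_viewpoints(24)
# Each camera would look at the origin; one 2D screenshot per position,
# paired with the source 3D model, gives one training example.
print(len(cams))  # 24
```

For buildings one might keep only the upper hemisphere, since views from below the ground plane are rarely useful; that choice is a further assumption.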

In short, the technical route of text -> picture -> point cloud -> three-dimensional object is certainly impressive, but before it can be applied in industry, AI scientists still have a lot of work to do.

However, is this the only technical route to achieve the generation of 3D models?

3. New ideas for AIGC application in vertical fields

In the development of large general-purpose models, vendors led by OpenAI, including giants such as Nvidia and Google, have launched their own general 3D-AIGC frameworks, but unfortunately these are still at an early stage. For vertical physical industries, there is clearly still a long way to go before practical application.

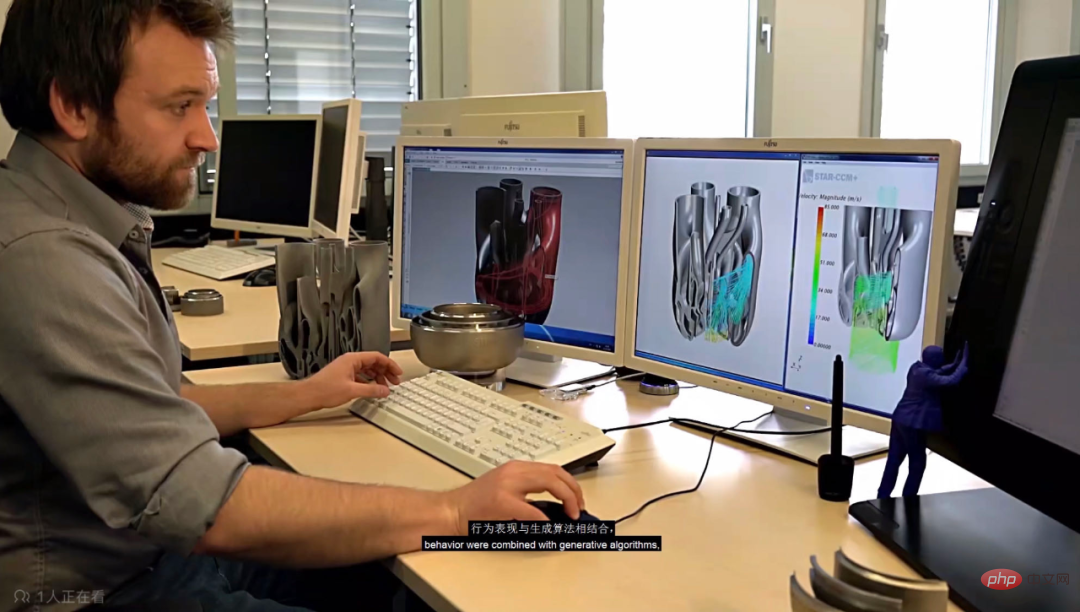

From a global perspective, beyond large general-purpose models, some vertical industries are also exploring how to apply AIGC to 3D model generation. For example, during engine design and manufacturing, Siemens runs simulations on generated models and optimizes them further, then produces the result through 3D printing, delivering the 3D model as a physical part and closing the business loop.

Siemens implements engine design and simulation through generative algorithms

The realization of such results depends on the continuous iteration of the underlying business content and its data standards under industrial logic.

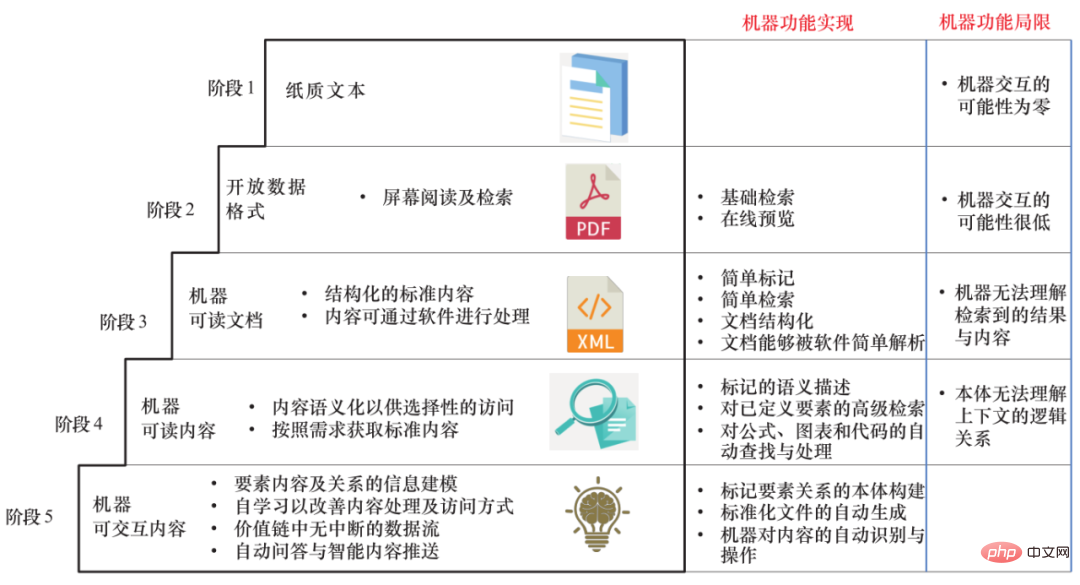

According to ISO/IEC, content is digitally standardized under SMART (Standards Machine Applicable, Readable and Transferable). At L1, content is paper text, and no machine interaction is possible. At L2, it is in an open digital format with very low machine interactivity. At L3, it is a machine-readable document, but the machine cannot understand the retrieved results or the content. At L4, the content itself is machine-readable and semantic interaction is possible, but the machine cannot understand the logical relationships of the context. At L5, the machine can interact with the content and provides intelligent capabilities such as automatic identification and automatic generation.
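Because the five SMART levels form an ordered scale, statements such as "L4 and above" become simple comparisons once the levels are encoded as an ordered type. The sketch below uses a Python `IntEnum`; the member descriptions paraphrase the paragraph above, while the member names, the helper functions and their boolean capability checks are hypothetical simplifications, not part of the ISO/IEC definition. The CAD and BIM placements follow the construction discussion later in this article.

```python
from enum import IntEnum


class SmartLevel(IntEnum):
    """SMART content levels as described in the text (ordered L1 < ... < L5)."""
    L1_PAPER = 1                     # paper text; no machine interaction
    L2_OPEN_DIGITAL = 2              # open digital format; very low interactivity
    L3_MACHINE_READABLE_DOC = 3      # machine-readable document; content not understood
    L4_MACHINE_READABLE_CONTENT = 4  # semantic interaction; no contextual logic
    L5_INTELLIGENT = 5               # automatic identification and generation


def supports_semantic_interaction(level: SmartLevel) -> bool:
    """Hypothetical check: semantic interaction starts at L4."""
    return level >= SmartLevel.L4_MACHINE_READABLE_CONTENT


def supports_auto_generation(level: SmartLevel) -> bool:
    """Hypothetical check: automatic generation is an L5 capability."""
    return level >= SmartLevel.L5_INTELLIGENT


# Placement of construction-industry formats as stated in this article.
formats = {
    "CAD": SmartLevel.L3_MACHINE_READABLE_DOC,
    "BIM": SmartLevel.L4_MACHINE_READABLE_CONTENT,
}
print(supports_auto_generation(formats["BIM"]))  # False
```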

In industry, L3-level content is widely used today and L4-level digital content is under development, while L5-level intelligence is the core foundation of Industry 4.0 and smart manufacturing. Generating machine-readable content at L4 and above, and especially L5-level intelligent content, is therefore the future direction of AIGC.

Source: ISO/IEC SMART digital standard; Liu Xize, Wang Yiyi, Du Xiaoyan, Li Jia, Che Di, "Research on the Current Situation and Trends of Standard Digital Development," Chinese Engineering Science, Vol. 23, No. 6, 2021

Exploration of AIGC's industrial applications has begun overseas. Domestic exploration is still relatively scarce, but we have found some companies working deeply in vertical fields; for example, the Xiaoku Technology team mentioned above is deeply involved in the construction industry. We will take the construction industry as an example to discuss how AIGC can be implemented in vertical industries.

China's real economy is in a window period of transformation, and the state has set the important task of "integrating artificial intelligence with the real economy". Major industries are eager to put AI technology into real use and help their industries make the leap to digitalization and intelligence, rather than settle for DEMO products that stay at the concept stage or serve as after-dinner conversation.

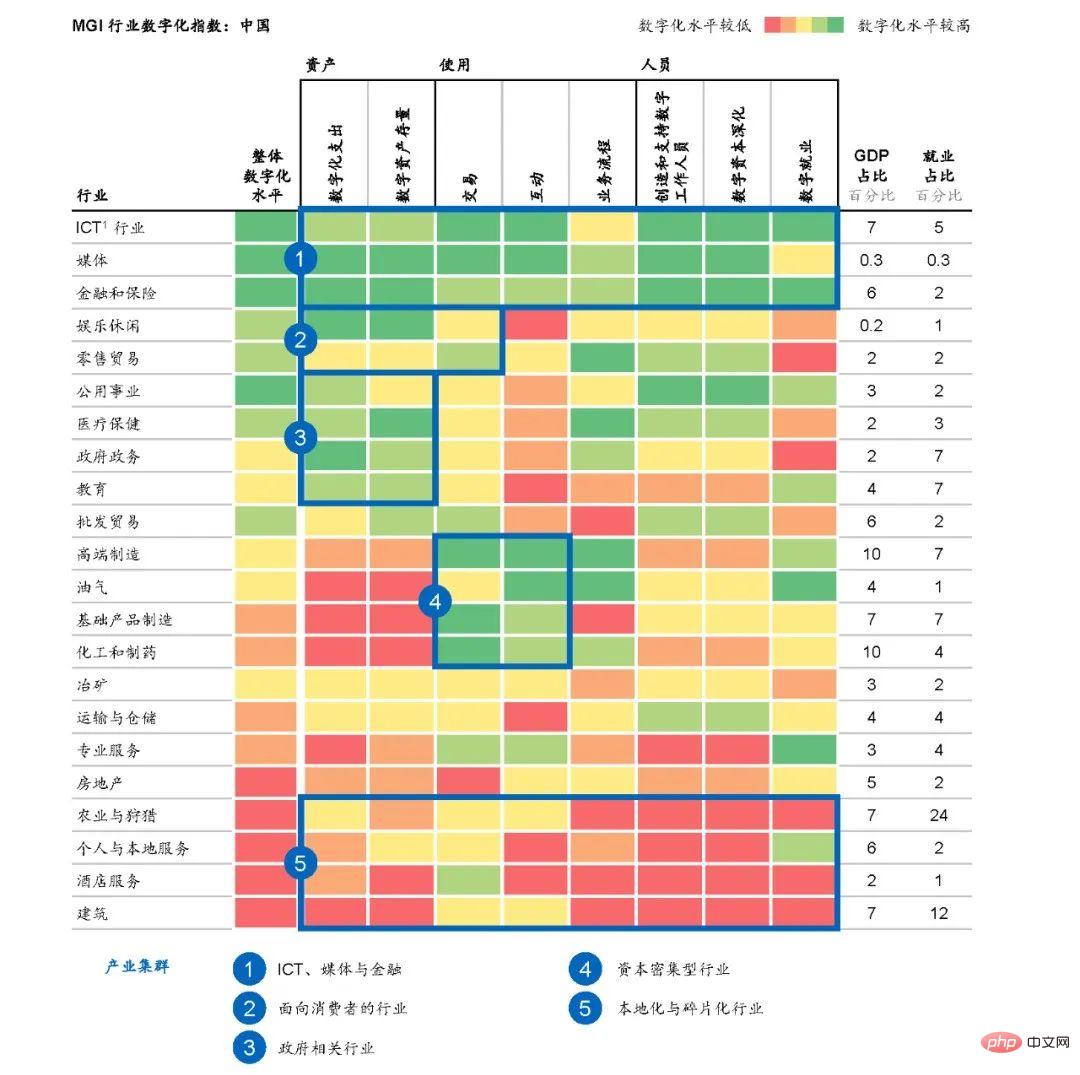

The construction industry is a national pillar industry with annual output value of nearly 30 trillion yuan, yet its level of digitalization ranks last among all industries in the country. The state has proposed intelligent construction policies in the hope of taking "Made in China" to a new level. Intelligent construction builds on new building industrialization (industrialized/prefabricated, digital, intelligent) and on the deep integration of new-generation information technology with advanced construction technology. It runs through every stage of construction activity, including design, production, construction, operation and maintenance, and supervision; it is characterized by self-perception, self-decision-making, self-execution, self-adaptation and self-learning; and it is an advanced construction method intended to optimize quality, efficiency and core competitiveness across the entire life cycle of the construction industry.

Total output value and growth of China's construction industry from 2011 to 2021 (sources: National Bureau of Statistics; Forward-looking Industry Research Academy)

Information source: Gartner; Kable; OECD; Central Bureau of Statistics; Bloomberg; McKinsey Global Institute analysis

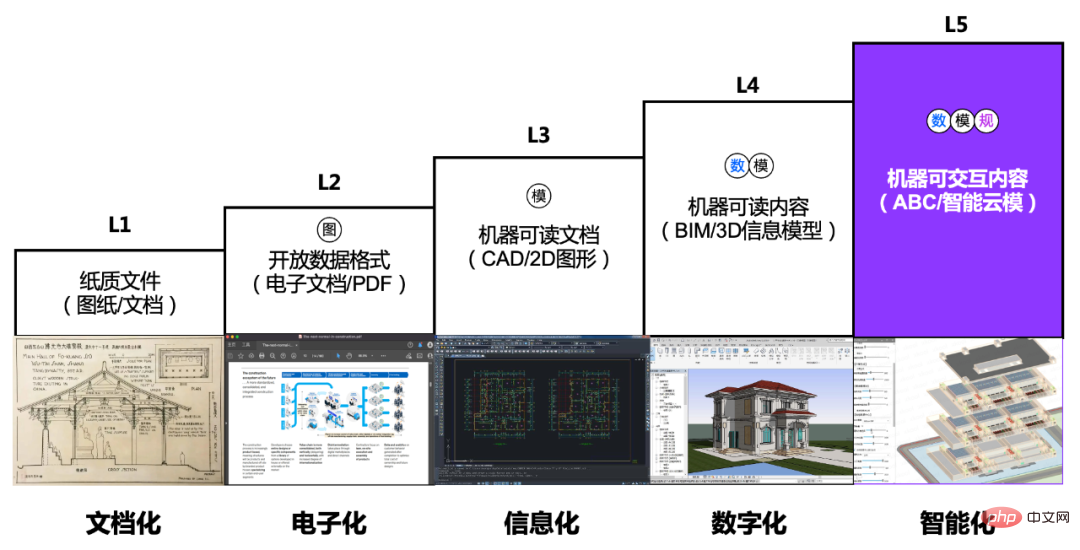

In the construction industry, the underlying data standard is moving from the CAD era of L3 machine-readable documents into the BIM era of L4 machine-readable content. The construction industry requires 3D models whose content objects carry complete, accurate information in every dimension of three-dimensional space, including the model, data and other dimensions. If they also carry a rules dimension, they can acquire intelligent capabilities such as self-perception, self-learning and self-iteration. At present, L3-level CAD and L4-level BIM application software are monopolized by overseas vendors, so our room for development and our potential must be concentrated on L5-level software, which can cover lower dimensions from a higher one.

The content format of digital standard SMART in the field of architecture

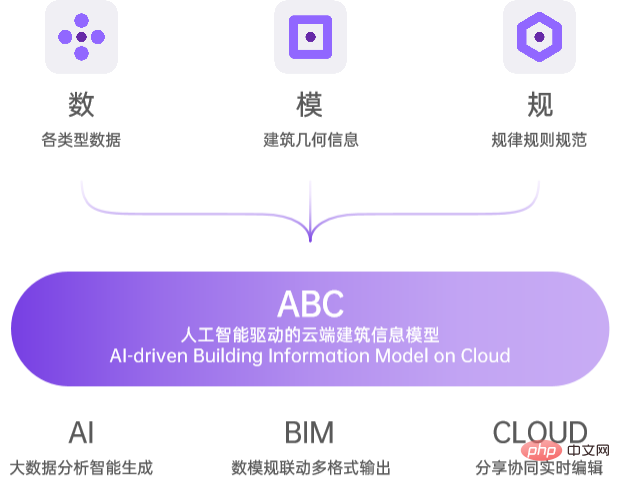

Based on its insight into the digital transformation of the construction industry, the Xiaoku team realized that the data foundation of the entire industry needed to be redefined. Since its founding in 2016, it has been committed to researching the underlying technology of L5-level 3D-model AIGC and applying it in the construction industry. Using an AI system that embeds business-flow logic, it generates "digital-model-scale" linked content, covering building information, multi-dimensional data, 3D models and rules/norms/laws, to generate architectural design plans intelligently.

The team calls this underlying data the AI-driven Building Information Model on Cloud (ABC for short), a cloud building information model generated by artificial intelligence. It breaks intelligent generation down into four practical steps: AI recognizes existing content for training or structured data reconstruction; it evaluates and simulates the data; it optimizes the preliminary results; and finally it generates a series of AI business results.

ABC intelligent cloud model, an L5-level intelligent building format

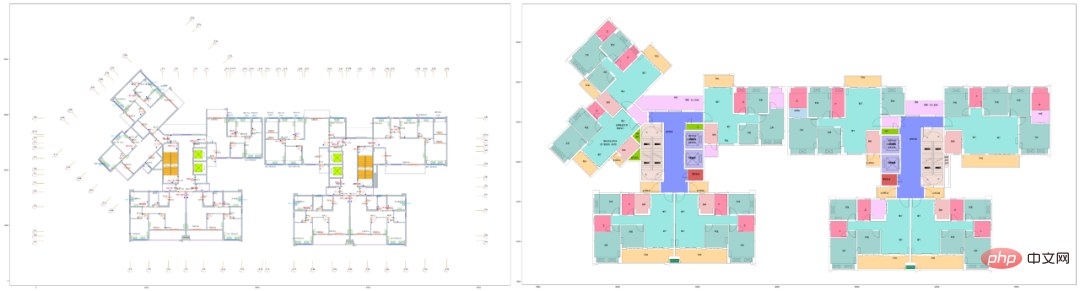

In AI recognition, by cleaning and training on tens of millions of CAD drawings from different business types, the team has achieved 100% cloud restoration of L3-level semantic CAD drawings and 99.8%* accurate semantic analysis and supplementation, reaching an internationally advanced level in this field. This achievement has been applied extensively in many of the company's products and solutions. For example, its "intelligent drawing review" for construction drawings reviews code clauses with an accuracy of about 96%.

Xiaoku Construction Drawing Components and Space Identification

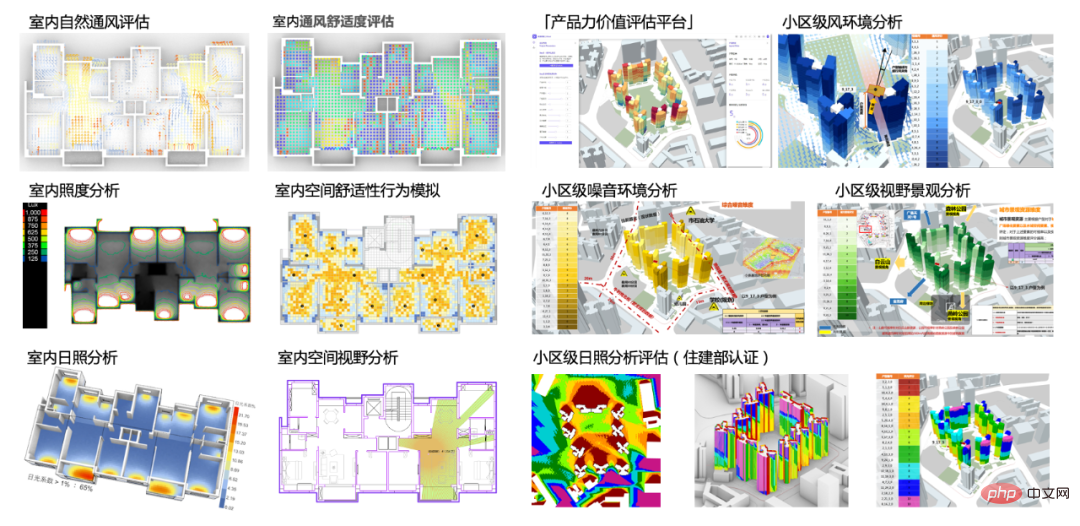

In AI analysis, building on effective project recognition, the team can run physical-environment simulation and analysis, simulate and predict human behavior data, and analyze and simulate project-related big data for common civil building types such as residences and shopping malls. At the application level, this helps customers analyze project plans quantitatively. For example, by evaluating a real estate company's full range of residential products, it can derive value-evaluation coefficients that help the company improve product quality. Xiaoku Technology was also selected as the first AI judge of the China Housing Association's House Design Competition, and the capability has been applied to the development and operation of more than ten shopping mall buildings in Hong Kong and mainland China.

In AI optimization, the team sees "optimization" as further iteration on top of the earlier "recognition" and "analysis", that is, regenerating better results from existing content. This technology is already used in the company's products and solutions. For example, with the "Smart Sunshine Optimization" function in the 2022 version of Design Cloud, Xiaoku can automatically fine-tune schemes that fail the sunlight requirements so that they pass sunlight verification without major changes to the original layout. The capability is also used in developing architectural designs, for example to optimize curtain walls. In a museum curtain wall project in Sichuan, carried out with the Sichuan Provincial Commercial Design Institute, Xiaoku's algorithm rationalized more than 30,000 original irregular triangular curtain wall panels into 12 types of standard modules, about 90% fewer than the 116 types achievable at the current world level. Because fewer SKUs and molds are needed, the cost of building the curtain wall drops substantially.

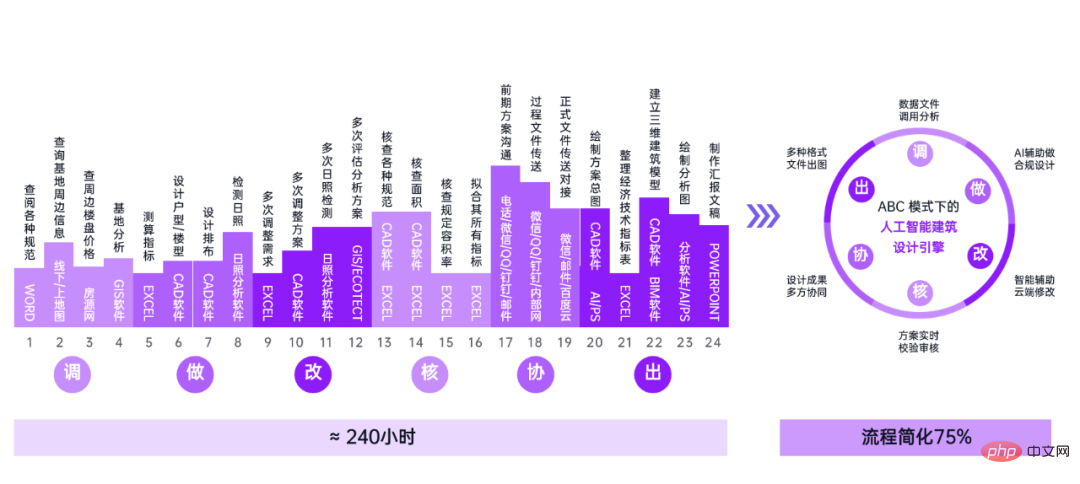

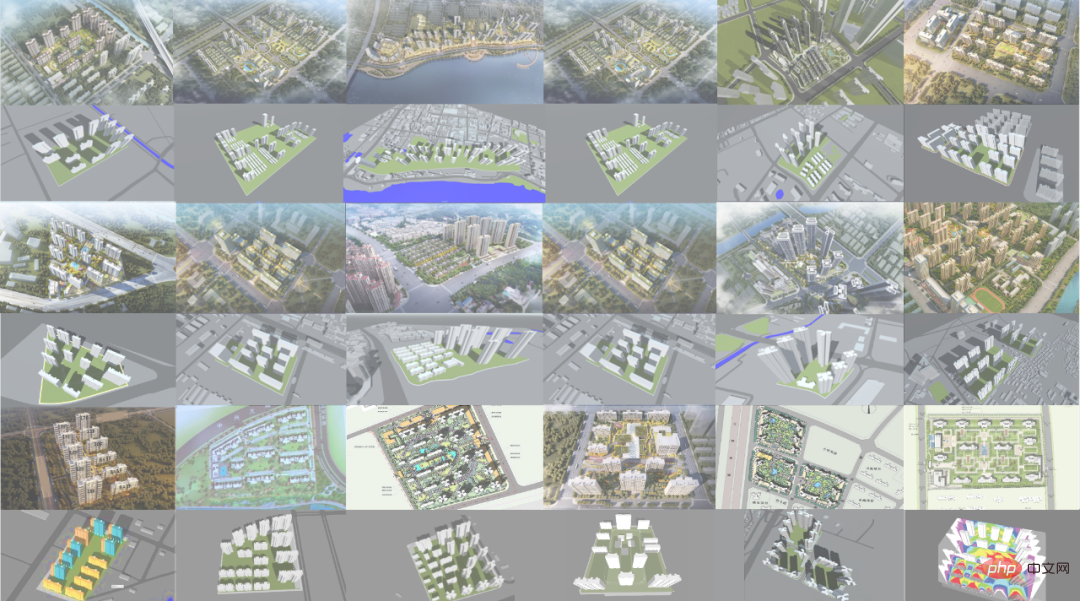
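The curtain-wall result is a case of panel rationalization: grouping thousands of slightly different panels into a few fabricable types. The article does not describe Xiaoku's algorithm, so the sketch below shows only the simplest version of the idea under stated assumptions: two triangular panels count as the same type when their sorted edge lengths match after snapping to a fabrication tolerance (the 50 mm tolerance and the panel coordinates are hypothetical). A real optimizer would also adjust the geometry so that more panels fall into shared types. The last lines check the article's arithmetic for going from 116 to 12 types.

```python
import math


def edge_lengths(tri):
    """Sorted side lengths of a triangle given as three (x, y, z) points."""
    a, b, c = tri
    return sorted((math.dist(a, b), math.dist(b, c), math.dist(c, a)))


def panel_types(panels, tolerance=0.05):
    """Group panels whose sorted edge lengths agree after snapping to `tolerance` (metres)."""
    types = {}
    for idx, tri in enumerate(panels):
        key = tuple(round(length / tolerance) for length in edge_lengths(tri))
        types.setdefault(key, []).append(idx)
    return types


panels = [
    ((0, 0, 0), (1.50, 0, 0), (0, 1.20, 0)),
    ((5, 5, 0), (6.51, 5, 0), (5, 6.19, 0)),  # within tolerance of the first panel
    ((0, 0, 0), (2.00, 0, 0), (0, 1.20, 0)),  # clearly a different shape
]
print(len(panel_types(panels)))  # 2

reduction = 1 - 12 / 116
print(f"{reduction:.1%}")  # 89.7%
```

Simple grid snapping can split two nearly identical panels that straddle a rounding boundary; clustering by distance between edge-length vectors avoids this, at the cost of more computation.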

AI generation is the core of intelligent design. In construction, choosing an economical, practical and attractive design and delivering safe, efficient, high-quality results requires coordination across many disciplines and roles. The work must be broken down step by step from the macro scale to the meso scale to the micro scale; it must gradually cover disciplines such as architecture, structure, electromechanical, plumbing and landscape; and it must cover building types such as residences, apartments, industrial, office and commercial. Professional results in a vertical field therefore cannot come from a single model algorithm and a single data set. They require multiple technologies, including multiple models, multiple modalities and multiple data sets, to be integrated organically with business logic, delivered through product design tailored to specific scenarios, and iterated continuously on user feedback.

Starting from business logic, the Xiaoku team mapped the 24 business process steps of traditional architectural design, extracted and restructured the core content into 6 business modules, and, with the AI system and cloud architecture at the core, built a new AIGC business process for architectural design: adjust (information retrieval and AI recognition), do (full AI generation and human-computer collaborative generation), change (manual editing and AI optimization), verify (data verification and AI review), collaborate (multi-person cloud collaboration and business management), and export (automatic output in more formats, such as 3D models, 2D drawings, images, PPT and Excel).

Left: the original 24-step business process of architectural design; right: Xiaoku's restructuring into 6 AI-supported business process modules

Building on a deep understanding of the business and the restructured business logic, the team integrated the 6 business modules into product design. Deeply combined with AI recognition, AI generation, big data, cloud collaboration and other technologies, the product meets construction needs at different depths, such as architectural planning, unit design and component generation, from analysis to design to review to collaboration and output, and is gradually covering the full breadth and depth of demand in the residential business.

The 6 major modules of the "Xiaoku Design Cloud - Architectural Planning" product

4. The value of AIGC in the industry

In most industries, AIGC applications are still in their infancy, and the continued development of AI technology as a whole will drive further innovative applications. Taking current practice in the construction industry as an example, AIGC can already help improve efficiency-critical business scenarios that create value users can perceive, such as investment research, design, evaluation, management and construction.

4.1 Optimal-solution gains and efficiency improvement

In the investment research stage, the "two concentrations" policy introduced in 2021 (centralized land supply and centralized land auctions) releases large amounts of land within a single month. Developers must complete investment evaluations for each plot in a short time, and the key is finding the optimal architectural planning scheme for each plot to maximize product value and return on investment. Producing a residential planning concept scheme used to take at least 3-5 days, which could not keep up with business needs, creating demand for extremely fast pre-investment planning schemes.

With the AIGC architectural planning tool launched by the Xiaoku team, a preliminary scheme takes only about 30% of the original time. More importantly, AI can generate and optimize schemes that people have not thought of or would find hard to refine exhaustively by hand, achieving better performance or economics. For example, in a Jiangxi project for China Jinmao, the AI-generated scheme took only 20% of the time of the original method and increased the project's total value by 56 million yuan compared with the original plan. Over nine months of land auctions in 2021, the team completed nearly a thousand projects and nearly ten thousand schemes, helping customers acquire dozens of plots.

"Xiaoku Design Cloud": AI-generated scheme for an actual residential land acquisition

4.2 Cost reduction, energy saving and emission reduction

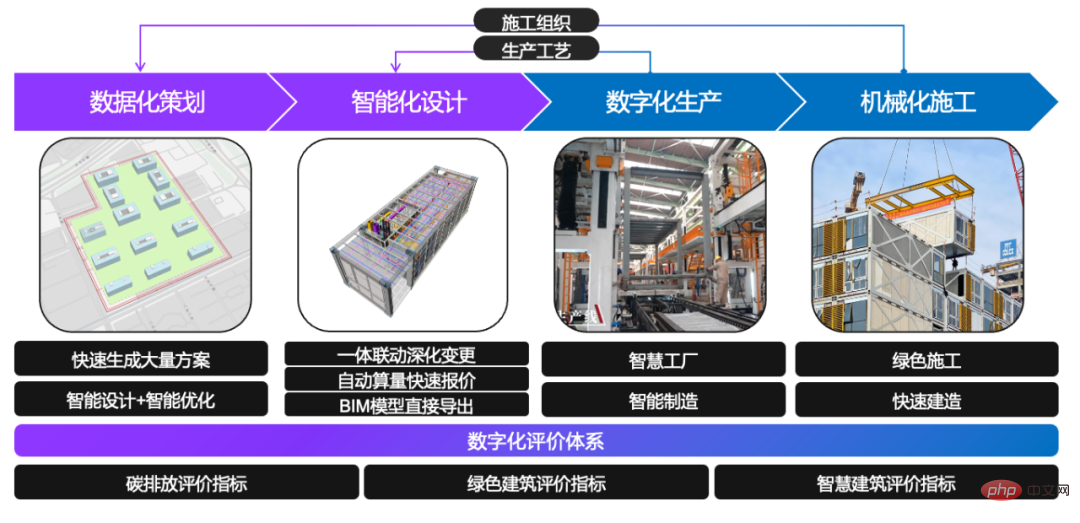

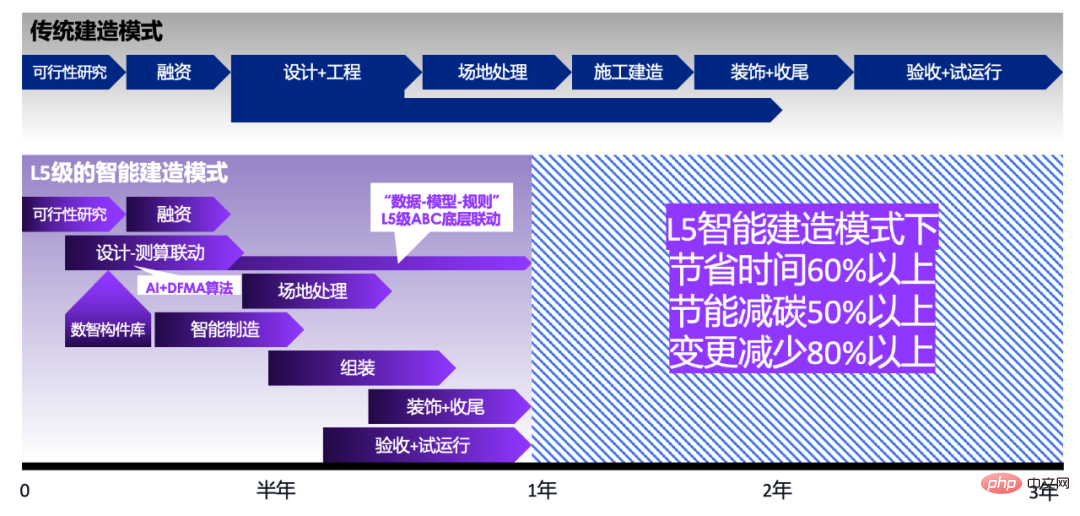

In actual building construction, the Xiaoku team combined AI with DFMA (Design for Manufacture and Assembly) and partnered with China Construction Science and Technology, a subsidiary of the construction giant China Construction Group. Together they deeply integrated box-type prefabricated buildings with AI design generation and L5-level ABC "digital-model-scale" linkage. This achieves real-time linkage of investment, scheme and cost before construction begins, reduces design and cost changes by 80%, cuts the overall number of prefabricated component SKUs and molds, and delivers more than 50% energy saving and emission reduction. Besides better performance and economics, the "native data" can connect with factory production lines and smart construction sites to become "twin data". In a hotel project in Shenzhen, the work from design to construction was completed in 4 months, shortening the total construction period by at least 14 months and saving more than 60% of the time.

"Xiaoku Assembly Cloud" and China Construction Science and Technology: a hotel in Shenzhen with end-to-end intelligent design and intelligent construction

Comparison between the L5 intelligent construction mode and the traditional mode

As these cases show, L5-level AIGC can start at the source where data is generated and, through targeted scenarios at each link of the industry chain, help the whole chain achieve higher life-cycle quality, efficiency and core competitiveness. AIGC's move from text and pictures to higher-dimensional 3D and L5 content is the general trend. It is not only what the construction industry expects from artificial intelligence, but also the shared expectation of all vertical industries.

Note: *Provided the drawing layers contain no obvious errors, Xiaoku's AI recognition currently achieves 99.8% accuracy on standard components (doors, windows, walls, stairs, elevators, air conditioners, fire hydrants, parking spaces, etc.). The test set consists of thousands of architectural CAD drawings from the internal standard libraries of several leading developers.


DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

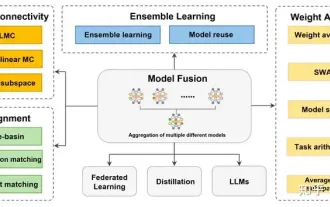

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion

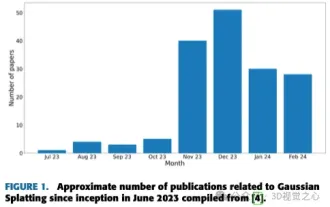

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

Written above & The author’s personal understanding is that image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have attracted attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering. (Swipe your thumb up

Redis: the key technology for building a real-time ranking system

Nov 07, 2023 pm 03:58 PM

Redis: the key technology for building a real-time ranking system

Nov 07, 2023 pm 03:58 PM

Redis is an open source, high-performance key-value database system. It is widely used in real-time ranking systems due to its fast read and write speed, support for multiple data types, rich data structures and other characteristics. The real-time ranking system refers to a system that sorts data according to certain conditions, such as points rankings in games, sales rankings in e-commerce, etc. This article will introduce the key technologies used by Redis in building a real-time ranking system, as well as specific code examples. The content includes the following parts: Redis data type sorting calculation

Combination of Golang and front-end technology: explore how Golang plays a role in the front-end field

Mar 19, 2024 pm 06:15 PM

Combination of Golang and front-end technology: explore how Golang plays a role in the front-end field

Mar 19, 2024 pm 06:15 PM

Combination of Golang and front-end technology: To explore how Golang plays a role in the front-end field, specific code examples are needed. With the rapid development of the Internet and mobile applications, front-end technology has become increasingly important. In this field, Golang, as a powerful back-end programming language, can also play an important role. This article will explore how Golang is combined with front-end technology and demonstrate its potential in the front-end field through specific code examples. The role of Golang in the front-end field is as an efficient, concise and easy-to-learn